Compression

Files can often be compressedthat is, represented using fewer bytes than the standard representation. This technique is useful for saving disk space and for reducing network traffic. The total time required to compress a file, send the compressed file, and uncompress it at the other end is often less than the time required to send the uncompressed file. This explains why software made available for download over the Internet is usually compressed.

In this section, we discuss two algorithms for compressing data. The discussion is in terms of text, but these algorithms can be adapted to work for any kind of data.

Huffman Encoding

In an ASCII text file, each character is represented by the same number of bits. Such a fixed-length encoding is somewhat wasteful, because some characters are more common than others. If a character appears frequently, it should have a shorter representation. Huffman encoding produces such an efficient representation.

Consider the String "beekeepers_&_bees". Eight different characters appear in this String, so we could have a 3-bit code for each character (Figure 17-14). (ASCII works like this, using 7 bits to encode 128 different characters.) In a Huffman encoding, common characters such as 'e' have shorter codes than rare characters such as '&'.

|

Character |

Fixed-Length Code |

Huffman Code |

|---|---|---|

|

b |

000 |

110 |

|

e |

001 |

0 |

|

k |

010 |

11110 |

|

p |

011 |

11111 |

|

r |

100 |

1011 |

|

s |

101 |

100 |

|

_ |

110 |

1110 |

|

& |

111 |

1010 |

Representing the String "beekeepers_&_bees" using the fixed-length encoding requires 51 bits:

000 001 001 010 001 001 011 001 100 101 110 111 110 000 001 001 101

Using the Huffman encoding, this takes only 45 bits:

110 0 0 11110 0 0 11111 0 1011 100 1110 1010 1110 110 0 0 100

The savings can be considerably greater on a long String, such as the entire text of a novel.

We included spaces above to make it clear where one code begins and another ends. In practice, there is only a sequence of bits. It is easy to detect the end of a code in a fixed-length encoding, because each code uses the same number of bits. We might worry that, in a Huffman encoding, we might incorrectly conclude that we've read the code 100 when we've really only read the first three characters of the code 10011. To avoid this danger, Huffman encodings are designed so that no code is a prefix of another code. For example, the code for 'b' is 110, and no other code starts with 110.

A Huffman encoding can be automatically generated from a table of character frequencies. These should be drawn from a string typical of those being compressed. For example, when compressing English text, character frequencies on the front page of the New York Times will do nicely. To keep our example small, however, we will use the frequencies in the message being compressed (Figure 17-15).

|

Character |

Count |

|---|---|

|

b |

2 |

|

e |

7 |

|

k |

1 |

|

p |

1 |

|

r |

1 |

|

s |

2 |

|

_ |

2 |

|

& |

1 |

To generate a Huffman encoding, we first construct a binary tree. When we are done, leaves in this tree corresponds to characters. Deeper leaves have longer codes.

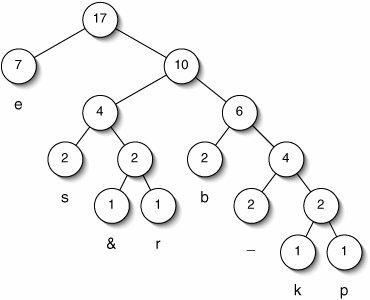

One node is created for each character, holding both that character and its count (Figure 17-16). This is a forest of trees, each containing one node. On each pass through the main loop, we choose the two lowest-count roots and merge them. (It doesn't matter how we handle ties.) The count for the new parent is the sum of its children's counts.

Figure 17-16. Building a Huffman tree. Initially, each node is in a separate tree (top). On each pass through the main loop, we combine the two roots with the lowest counts.

(This item is displayed on page 480 in the print version)

This continues until there is only one tree (Figure 17-17). The code for each character is determined by the path from the root to the corresponding leaf. Each right descent is represented by a 1, each left descent by a 0. For example, the path to 'b' is right-right-left, so its code is 110.

Figure 17-17. Final Huffman tree. The code for each character is determined by the path from the root to the corresponding leaf.

(This item is displayed on page 480 in the print version)

To translate these ideas into Java, we first need a class HuffmanNode (Figure 17-18). This is almost identical to a BinaryNode, but it has an additional field count. It also implements Comparable, so that we can find the lowest-count root. Inheritance pays off handsomely here.

Figure 17-18. The HuffmanNode class extends BinaryNode.

1 /** Node in a Huffman tree. */

2 public class HuffmanNode extends BinaryNode

3 implements Comparable {

4

5 /** Frequency of this letter or set of letters. */

6 private int count;

7

8 /** Create a node with no children. */

9 public HuffmanNode(char letter, int count) {

10 super(letter);

11 this.count = count;

12 }

13

14 /**

15 * Create a node with two children. Its count is the sum of

16 * its children's counts.

17 */

18 public HuffmanNode(HuffmanNode left, HuffmanNode right) {

19 super('?', left, right);

20 this.count = left.count + right.count;

21 }

22

23 /** The comparison is based on the counts of the nodes. */

24 public int compareTo(HuffmanNode that) {

25 return count - that.count;

26 }

27

28 }

|

An instance of the Huffman class contains a tree of such nodes and a direct addressing table mapping characters to their codes (Figure 17-19). The codes are given as Strings of ones and zeroes for readability. In practice, the program would really read and write bits from a file; this is left as Project 17.20.

Figure 17-19. The constructor for the Huffman class first generates a tree, then uses the tree to generate a table of codes.

1 /** Huffman encoder/decoder. */

2 public class Huffman {

3

4 /** The root of the Huffman tree. */

5 private HuffmanNode root;

6

7 /** Direct addressing table mapping characters to Strings. */ 8 private String[] table; 9 10 /** The frequency distribution is in the code for this method. */ 11 public Huffman() { 12 char[] letters = "bekprs_&".toCharArray(); 13 int[] counts = {2, 7, 1, 1, 1, 2, 2, 1}; 14 root = generateTree(letters, counts); 15 table = new String[128]; 16 generateCodes(root, ""); 17 } 18 19 } |

In generating the tree, we need to keep track of a set of roots. In each pass through the main loop, we need to grab the two roots with the smallest counts. This is a job for a priority queue (Figure 17-20).

Figure 17-20. A priority queue is used in building a Huffman tree.

1 /** Generate the Huffman tree. */

2 protected HuffmanNode generateTree(char[] letters, int[] counts) {

3 Heap q = new Heap();

4 for (int i = 0; i < letters.length; i++) {

5 q.add (new HuffmanNode(letters[i], counts[i]));

6 }

7 while (true) {

8 HuffmanNode a = q.remove();

9 if (q.isEmpty()) {

10 return a;

11 }

12 HuffmanNode b = q.remove();

13 q.add (new HuffmanNode(a, b));

14 }

15 }

|

This tree will be useful in decoding, but to encode a String we'll need a way to quickly get from a character to its code. This is accomplished using a direct-addressing table, which is populated by the elegant recursive method generateCodes() (Figure 17-21).

Once we have this table, encoding a String is just a matter of concatenating the codes for the characters making up the String (Figure 17-22).

Now, for example, if we

encode("beekeepers_&_bees")

Figure 17-21. As generateCodes() recurs more deeply into the tree, code gets longer.

1 /** Generate the table of codes. */

2 protected void generateCodes(BinaryNode root,

3 String code) {

4 if (root.isLeaf()) {

5 table[root.getItem()] = code;

6 } else {

7 generateCodes(root.getLeft(), code + "0");

8 generateCodes(root.getRight(), code + "1");

9 }

10 }

|

Figure 17-22. The code for each character can be looked up in the table.

1 /**

2 * Return the bits of the encoded version of message, as a

3 * human-readable String.

4 */

5 public String encode(String message) {

6 StringBuilder result = new StringBuilder();

7 for (char c : message.toCharArray()) {

8 result.append(table[c] + " ");

9 }

10 return result.toString();

11 }

|

the result is:

"110 0 0 11110 0 0 11111 0 1011 100 1110 1010 1110 110 0 0 100"

To decode a message, we start at the root of the tree and use the incoming bits to steer left and right (Figure 17-23). When we hit a leaf, we add a character to the result and go back to the root.

Figure 17-23. The Huffman tree is used in decoding a String.

| 1 /** Return the original version of a String encoded by encode(). */ 2 public String decode(String encodedMessage) { 3 StringBuilder result = new StringBuilder(); 4 BinaryNode node = root; 5 for (char c : encodedMessage) { 6 if (c == '0') { 7 node = node.getLeft(); 8 } else if (c == '1') { 9 node = node.getRight(); 10 } 11 if (node.isLeaf()) { 12 result.append(node.getItem()); 13 node = root; 14 } 15 } 16 return result.toString(); 17 } |

Now, for example,

decode(encode("beekeepers_&_bees"))

returns "beekeepers_&_bees".

LempelZiv Encoding

It is possible to prove that Huffman encoding is the best possible character-by-character encoding. We can do even better by creating codes for frequently occurring substrings. For example, when compressing Java programs, it could be useful to have special codes for substrings such as "public" and "for (int i = 0; i <". LempelZiv encoding uses this idea.

The set of codes used in LempelZiv encoding is not created in advance, but generated during encoding or decoding. Each code is an int corresponding to a String of one or more characters. Originally, there is a code for each single character.

As an example, consider encoding the String "beekeepers_&_bees" (Figure 17-24). We begin by reading the character 'b' and outputting the code for 'b'. On each subsequent step, we output a code and create a new code. As we work through the text, we eventually encounter substrings for which we have already created codes.

|

Output Code |

New Code |

|---|---|

|

b |

|

|

e |

be |

|

e |

ee |

|

k |

ek |

|

ee |

ke |

|

p |

eep |

|

e |

pe |

|

r |

er |

|

s |

rs |

|

_ |

s_ |

|

& |

_& |

|

_ |

&_ |

|

be |

_b |

|

e |

bee |

|

s |

We output the code corresponding to the longest prefix of the remaining text for which there is a code. For example, when the remaining text is "eepers_&_bees", there is a code for "ee" but not for "eep", so the code for "ee" is output.

When a new code is created, it represents a String constructed from the Strings represented by the last two codes emitted. Specifically, it is the concatenation of the older string and the first character of the newer string. For example, when the last two codes emitted represented "k" and "ee", the new code represents "ke".

To implement this algorithm, we need two data structures to keep track of the codes. The first structure is a direct-addressing table mapping ints to Strings. The second structure is a digital search tree (Section 14.3) with codes stored at the nodes.

These data structures are declared in Figure 17-25. The constructor initializes them by creating one child of root for each of the 128 ASCII characters.

Our encode() method (Figure 17-26) reads from a Scanner and writes ints to an ObjectOutputStream. In the main loop on lines 820, we work down the digital search tree, using characters from the input to choose a child. When we can't go any further, we've found the longest prefix, so we emit a code and create a new one (lines 1115).

Figure 17-25. Data structures and constructor for the LempelZiv class.

| 1 import java.io.*; 2 import java.util.Scanner; 3 4 /** Lempel-Ziv compression of text. */ 5 public class LempelZiv { 6 7 /** Root of the digital search tree. */ 8 private DigitalNode root; 9 10 /** Direct-addressing table mapping codes to Strings. */ 11 private ArrayList strings; 12 13 /** Initialize the codes with ASCII values. */ 14 public LempelZiv() { 15 root = new DigitalNode(null); 16 strings = new ArrayList(); 17 for (char i = 0; i < 128; i++) { 18 root.setChild(i, new DigitalNode((int)i)); 19 strings.add("" + i); 20 } 21 } 22 23 } |

Figure 17-26. LempelZiv encoding.

1 /** Read text from in, write ints to out. */

2 public void encode(Scanner in, ObjectOutputStream out)

3 throws IOException {

4 DigitalNode parent = null;

5 DigitalNode node = root;

6 while (in.hasNextLine()) {

7 String line = in.nextLine() + "

";

8 for (int i = 0; i < line.length();) {

9 DigitalNode child = node.getChild(line.charAt(i));

10 if (child == null) {

11 int code = node.getItem();

12 out.writeInt(code);

13 addNewCode(parent, code);

14 parent = node;

15 node = root;

16 } else {

17 node = child;

18 i++;

19 }

20 }

21 }

22 out.writeInt(node.getItem());

23 out.close();

24 }

|

The protected method addNewCode() adds a node to the tree and an entry to the direct-addressing table (Figure 17-27).

Figure 17-27. Adding a new code to both data structures.

1 /**

2 * Add a new code. It represents the concatenation of the String

3 * for the code at parent and the first character of the String for

4 * code.

5 */

6 protected void addNewCode(DigitalNode parent, int code) {

7 if (parent != null) {

8 char firstChar = strings.get(code).charAt(0);

9 parent.setChild(firstChar,

10 new DigitalNode(strings.size()));

11 strings.add(strings.get(parent.getItem()) + firstChar);

12 }

13 }

|

Now, for example, to compress the file words into the file words.lz, we could do this:

Scanner in = new Scanner(new File("words"));

ObjectOutputStream out

= new ObjectOutputStream(new FileOutputStream("words.lz"));

new LempelZiv().encode(in, out);

Surprisingly, we don't need to keep the data structures around to decode a string. They can be generated on the fly during the decoding process, just as during the encoding process (Figure 17-28).

Figure 17-28. LempelZiv decoding.

1 /** Read ints from in, write text to out. */

2 public void decode(ObjectInputStream in, PrintWriter out)

3 throws IOException {

4 DigitalNode parent = null;

5 while (in.available() > 0) {

6 int code = in.readInt();

7 DigitalNode node = root;

8 String s = strings.get(code);

9 for (char c : s.toCharArray()) {

10 out.print(c);

11 node = node.getChild(c);

12 }

13 addNewCode(parent, code);

14 parent = node;

15 }

16 out.close();

17 }

|

To uncompress the previously compressed file, we could do this:

ObjectInputStream in

= new ObjectInputStream(new FileInputStream("words.lz"));

PrintWriter out = new PrintWriter("words");

new LempelZiv().decode(in, out);

Since each int takes up four bytes, LempelZiv encoding provides no compression until the encoder starts emitting codes representing strings longer than four characters. On long files, however, it can provide significant compression.

Java provides several classes that perform a variation on LempelZiv encoding, including java.util.zip.ZipInputStream, java.util.zip.GZIPInputStream, and java.util.jar.JarInputStream. We won't discuss these in detail.

Exercises

| 17.5 |

Can any compression algorithm guarantee that every String becomes shorter when compressed? Explain. (Hint: Recall the pigeonhole principle.) |

| 17.6 |

Discuss how these algorithms might be adapted to work on files containing binary data rather than text. |

| 17.7 |

Why can't line 8 of Figure 17-23 be a simple else? |

| 17.8 |

If we use our LempelZiv encoding algorithm on a sufficiently large file, the code table will become too large. It might become so large that an int is not sufficient to specify an index, or it might simply become too large to fit in memory. In practice, a limit is set on the table size. Discuss how the algorithm might reasonably proceed when the table is full. |

Part I: Object-Oriented Programming

Encapsulation

Polymorphism

Inheritance

- Inheritance

- Extending a Class

- The Object Class

- Packages and Access Levels

- Summary

- Vocabulary

- Problems

- Projects

Part II: Linear Structures

Stacks and Queues

- Stacks and Queues

- The Stack Interface

- The Call Stack

- Exceptions

- The Queue Interface

- Summary

- Vocabulary

- Problems

- Projects

Array-Based Structures

- Array-Based Structures

- Shrinking and Stretching Arrays

- Implementing Stacks and Queues

- The List Interface

- Iterators

- The Java Collections Framework: A First Look

- Summary

- Vocabulary

- Problems

- Projects

Linked Structures

- Linked Structures

- List Nodes

- Stacks and Queues

- The LinkedList Class

- The Java Collections Framework Revisited

- Summary

- Vocabulary

- Problems

- Projects

Part III: Algorithms

Analysis of Algorithms

- Analysis of Algorithms

- Timing

- Asymptotic Notation

- Counting Steps

- Best, Worst, and Average Case

- Amortized Analysis

- Summary

- Vocabulary

- Problems

- Projects

Searching and Sorting

- Searching and Sorting

- Linear Search

- Binary Search

- Insertion Sort

- The Comparable Interface

- Sorting Linked Lists

- Summary

- Vocabulary

- Problems

- Projects

Recursion

- Recursion

- Thinking Recursively

- Analyzing Recursive Algorithms

- Merge Sort

- Quicksort

- Avoiding Recursion

- Summary

- Vocabulary

- Problems

- Projects

Part IV: Trees and Sets

Trees

Sets

- Sets

- The Set Interface

- Ordered Lists

- Binary Search Trees

- Hash Tables

- The Java Collections Framework Again

- Summary

- Vocabulary

- Problems

- Projects

Part V: Advanced Topics

Advanced Linear Structures

- Advanced Linear Structures

- Bit Vectors

- Sparse Arrays

- Contiguous Representation of Multidimensional Arrays

- Advanced Searching and Sorting

- Summary

- Vocabulary

- Problems

- Projects

Strings

Advanced Trees

- Advanced Trees

- Heaps

- Disjoint Set Clusters

- Digital Search Trees

- Red-Black Trees

- Summary

- Vocabulary

- Problems

- Projects

Graphs

- Graphs

- Terminology

- Representation

- Graph Traversal

- Topological Sorting

- Shortest Paths

- Minimum Spanning Trees

- Summary

- Vocabulary

- Problems

- Projects

Memory Management

Out to the Disk

- Out to the Disk

- Interacting with Files

- Compression

- External Sorting

- B-Trees

- Summary

- Vocabulary

- Problems

- Projects

Part VI: Appendices

A. Review of Java

- A. Review of Java

- A.1. The First Program

- A.2. Variables and Types

- A.3. Loops

- A.4. Interacting with the User

- A.5. Branching

- A.6. Methods and Breaking Out

- A.7. Constants

- A.8. Operators

- A.9. Debugging

- A.10. Coding Conventions

B. Unified Modeling Language

C. Summation Formulae

- C. Summation Formulae

- C.1. Sum Notation

- C.2. Sum of Constants

- C.3. Sum of First n Integers

- C.4. Sums of Halves and Doubles

- C.5. Upper Limit on Sum of a Function

- C.6. Constant Factors

D. Further Reading

Index

EAN: 2147483647

Pages: 216

- Chapter VII Objective and Perceived Complexity and Their Impacts on Internet Communication

- Chapter VIII Personalization Systems and Their Deployment as Web Site Interface Design Decisions

- Chapter IX Extrinsic Plus Intrinsic Human Factors Influencing the Web Usage

- Chapter X Converting Browsers to Buyers: Key Considerations in Designing Business-to-Consumer Web Sites

- Chapter XI User Satisfaction with Web Portals: An Empirical Study