Multiobjective Decision-Making and AHP

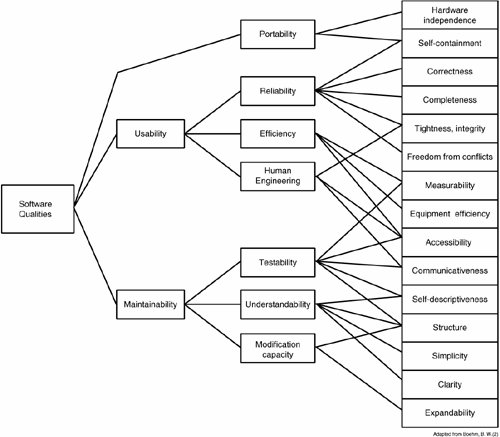

Software development involves numerous situations with multiple factors, criteria/objectives, and metrics. Depending on size, complexity, and level of analysis, there could be dozens of quality characteristics. Our definition of trustworthy software contains five major customer requirements: reliability, safety, security, maintainability, and customer responsiveness. Each of these comprises several quality characteristics at various levels of analysis. Add cost and schedule requirements, and the problem becomes highly intricate from both decision and design perspectives. Figure 8.1 illustrates the point: just two customer-demanded quality characteristics, maintainability and usability, result in 15 characteristics from the developer's perspective, and quality metrics based on these characteristics would add dozens more. The Walter and McCall Model identifies (at a minimum) a three-level hierarchy of quality characteristics:[3]

Figure 8.1. Hierarchical Structure of Software Product Quality Characteristics

Figure 8.1 shows only the simplest hierarchy of software quality characteristics; large, complex software commonly has subcriteria and sub-subcriteria as well. With so many variables, intuitive decision-making is not enough to make the best design choices. Littlewood and Strigini articulate the challenges of software complexity:[4]

Managing complexity remains a critical design challenge, especially in a large software development process. This can be addressed by a two-pronged strategy:

The Gartner Group offers a line of Decision Engine Products,[5] as well as a best practice for technology selection called Refined Hierarchical Analysis. Both are based on AHP and on Expert Choice (EC), a computerized implementation of AHP and its extensions. This chapter uses a relatively simple decision situation (Case Study 8.1) to describe the application of AHP as a practical decision theory and then illustrates the use of Expert Choice.

Terminology

The terminology used in decision-making can be confusing, as the variety of terms in use shows. We read and speak of factors, characteristics, attributes, criteria, objectives, requirements, pros, cons, metrics, musts, and wants. The formal decision-making literature includes three different descriptions of what some might consider the same endeavor:

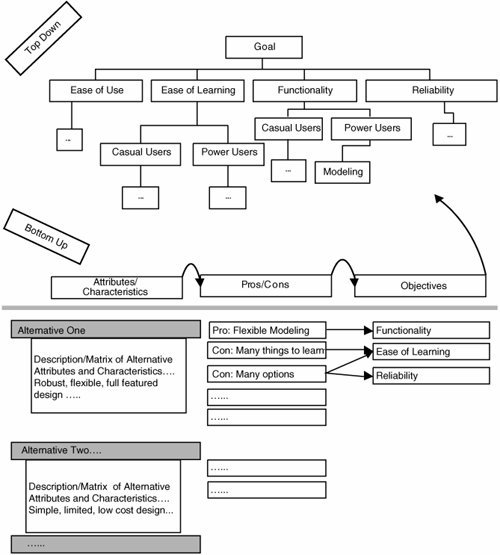

Although some subtle differences exist, they are largely historical and academic and serve to confuse rather than enlighten. We have found that most or all of the confusion disappears when you focus on objectives. Doing so leads to decisions that best align an organization's actions with what it is trying to achieve. In contrast, focusing on competing vendor attributes, characteristics, or other factors that may or may not be relevant to the organization's objectives increases confusion and can lead to actions that are poorly aligned with those objectives.

Focusing on objectives also makes it clear when you are erroneously "double counting" (considering an attribute as it relates to more than one objective) and when you should double count. For example, a car's size might be included just once in a hierarchy of attributes. But in making a decision, you should focus on what you want from a car and ask, "Why do I care about the car's size?" A large car might be more comfortable, carry more passengers, and have a larger cargo capacity, but it might be less fuel-efficient than a small car. So rather than including the attribute size just once in an attribute hierarchy, you should include the multiple objectives related to size (comfort, safety, passenger capacity, cargo capacity, and perhaps ease of parking) in an objectives hierarchy.

Structuring an Objectives Hierarchy

We recommend using both top-down and bottom-up approaches to identify the objectives in an objectives hierarchy, as illustrated in Figure 8.2. The top-down approach elicits objectives directly. For example, the objectives in choosing among alternative designs for a software product might include ease of use, ease of learning, functionality, and reliability. The subobjectives for ease of learning might differ for casual users and power users, and the process can continue down to further levels of subobjectives.

Figure 8.2. Structuring an Objectives Hierarchy

The bottom-up approach elicits objectives indirectly, by articulating the pros and cons of each alternative, some of which emerge from the alternatives' characteristics or attributes. For example, the description and matrix of attributes for Alternative One in Figure 8.2 show that this alternative provides very flexible modeling capabilities (a pro). However, it would require the user to learn many things (a con), and a large number of path combinations would need to be tested (a con). Each pro and con points to one or more objectives or subobjectives to be included in the hierarchy. A pro for one alternative may be a con for another. At this point, it doesn't matter whether something is a pro or a con: when judgments are made later in the AHP process to derive priorities, the alternative for which it is a pro will receive a greater relative preference than the alternative for which it is a con.

You don't need to identify all the pros and cons for each alternative under consideration; the focus at this point is on identifying objectives for the objectives hierarchy. However, it may be worth the time to identify and document all of them so that a clear audit trail exists. If a decision is at all political (and the more important the decision, the more likely it is to be political as well as technical), any conclusion is likely to be challenged by someone who feels that another alternative is better. If these "reasons" are already documented as pros and cons and represented by objectives in the decision hierarchy, it's easy to see how the person's concern is addressed in the evaluation. If the person identifies concerns that were overlooked in constructing the objectives hierarchy, and others agree that they are appropriate, the objectives and/or judgments should be added or modified and another iteration of the evaluation performed.
Either the results will convince everyone that the original selection was best, or a possibly costly mistake will be avoided.

To best model complex decisions, Expert Choice provides two guidelines:[6]

AHP software such as Expert Choice can help with structuring. It provides functionality for recording the pros and cons of each alternative, converting them to objectives, and viewing and manipulating affinity diagrams to cluster objectives according to the two guidelines using drag-and-drop capabilities.

Decision Hierarchy

A decision hierarchy contains the objectives hierarchy and the alternatives under consideration, as shown in Figure 8.2. We call the lowest-level objectives (or subobjectives) of the objectives hierarchy covering objectives, because they cover the alternatives. This terminology is concise and unambiguous and can be extended as necessary; for example, in some cases the objectives hierarchy can include other factors, such as scenarios or "players." You can also readily map this terminology to others, such as that of the Walter and McCall three-level model discussed earlier. The first-level quality characteristics, seen from the users' perspective, which that model calls factors, we call objectives. The second-level quality characteristics, seen from the developers' perspective, which it calls criteria, we call subobjectives. The third-level deployment of criteria, which it calls metrics, we call sub-subobjectives; because they form the lowest level of this model, we also call them covering objectives. Because we will evaluate the alternatives with respect to these covering objectives, we may in fact be employing metrics in the process.
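To make the idea of covering objectives concrete, here is a minimal Python sketch, assuming that local weights (each objective's priority relative to its siblings) are already known and that every alternative has already been rated against every covering objective. A covering objective's global weight is the product of the local weights on its path from the goal, and an alternative's overall score is the weighted sum of its ratings across the covering objectives. The `Objective` class, the `covering_objectives` and `synthesize` helpers, "Alternative Two", and all the numbers are hypothetical illustrations, not Expert Choice's implementation; the objective names come from the software-design example in the structuring discussion.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Objective:
    """A node in an objectives hierarchy (hypothetical structure)."""
    name: str
    weight: float = 1.0  # local priority relative to siblings (assumed given)
    children: list[Objective] = field(default_factory=list)


def covering_objectives(node: Objective, parent_weight: float = 1.0):
    """Yield (name, global_weight) for every lowest-level (covering) objective."""
    global_weight = parent_weight * node.weight
    if not node.children:
        yield node.name, global_weight
        return
    for child in node.children:
        yield from covering_objectives(child, global_weight)


def synthesize(goal: Objective, ratings: dict[str, dict[str, float]]) -> dict[str, float]:
    """Weighted sum of each alternative's ratings over the covering objectives."""
    totals: dict[str, float] = {}
    for name, weight in covering_objectives(goal):
        for alternative, rating in ratings[name].items():
            totals[alternative] = totals.get(alternative, 0.0) + weight * rating
    return totals


# Hypothetical local weights; in AHP they would come from pairwise judgments.
goal = Objective("Choose a design", children=[
    Objective("Ease of use", 0.30),
    Objective("Ease of learning", 0.20, children=[
        Objective("Learning (casual users)", 0.60),
        Objective("Learning (power users)", 0.40),
    ]),
    Objective("Functionality", 0.25),
    Objective("Reliability", 0.25),
])

# Hypothetical ratings of each alternative under each covering objective
# (each row sums to 1, as AHP priorities do).
ratings = {
    "Ease of use": {"Alternative One": 0.30, "Alternative Two": 0.70},
    "Learning (casual users)": {"Alternative One": 0.20, "Alternative Two": 0.80},
    "Learning (power users)": {"Alternative One": 0.75, "Alternative Two": 0.25},
    "Functionality": {"Alternative One": 0.80, "Alternative Two": 0.20},
    "Reliability": {"Alternative One": 0.40, "Alternative Two": 0.60},
}

scores = synthesize(goal, ratings)
print({alt: round(score, 3) for alt, score in scores.items()})
# {'Alternative One': 0.474, 'Alternative Two': 0.526}
```

Because the global weights of the covering objectives sum to 1 and each row of ratings sums to 1, the overall scores also sum to 1, which keeps them directly comparable. Note that only the covering objectives receive ratings; the higher levels contribute only through the weights they pass down.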

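The structuring discussion notes that priorities are derived later in the AHP process from judgments. As a rough illustration of that step, the sketch below uses the eigenvector method commonly associated with AHP: judgments are entered as a reciprocal pairwise-comparison matrix (on Saaty's 1-to-9 scale, `a[i][j]` says how much more important item i is than item j, and `a[j][i] = 1 / a[i][j]`), the normalized principal eigenvector gives the priorities, and a consistency ratio flags contradictory judgments. Power iteration stands in for a full eigensolver to keep the example dependency-free. The function names and the judgment values are assumptions for illustration, not Expert Choice's behavior.

```python
from __future__ import annotations

# Saaty's random consistency index, keyed by matrix size n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45}


def ahp_priorities(a: list[list[float]], iterations: int = 1000, tol: float = 1e-12):
    """Return (priorities, lambda_max) for a reciprocal comparison matrix."""
    n = len(a)
    # Reject matrices whose entries are not reciprocals of each other.
    for i in range(n):
        for j in range(n):
            if abs(a[i][j] * a[j][i] - 1.0) > 1e-9:
                raise ValueError(f"a[{i}][{j}] and a[{j}][{i}] are not reciprocal")
    # Power iteration: repeatedly multiply by the matrix and renormalize to sum 1.
    w = [1.0 / n] * n
    for _ in range(iterations):
        aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(aw)
        new_w = [x / total for x in aw]
        converged = max(abs(x - y) for x, y in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max


def consistency_ratio(lambda_max: float, n: int) -> float:
    """CR = CI / RI, where CI = (lambda_max - n) / (n - 1); 0 for n <= 2."""
    if n <= 2:
        return 0.0
    return ((lambda_max - n) / (n - 1)) / RANDOM_INDEX[n]


# Hypothetical judgments: ease of use is judged twice as important as each of
# ease of learning, functionality, and reliability, which are judged equal.
judgments = [
    [1.0, 2.0, 2.0, 2.0],
    [0.5, 1.0, 1.0, 1.0],
    [0.5, 1.0, 1.0, 1.0],
    [0.5, 1.0, 1.0, 1.0],
]
weights, lam = ahp_priorities(judgments)
print([round(x, 3) for x in weights])  # [0.4, 0.2, 0.2, 0.2]
print(consistency_ratio(lam, len(judgments)))
```

These judgments are perfectly consistent, so the consistency ratio is zero up to floating-point noise. A circular set of judgments (A preferred to B, B preferred to C, yet C preferred to A) would produce a large ratio; a common rule of thumb in AHP treats values above about 0.1 as a signal to revisit the judgments.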

