Loading Data in Data Objects in SAP BW

Figures 9-6 and 9-7 show the data paths for transaction data but not for reference data. This is because when reference data is loaded in SAP BW, master data, texts, and hierarchies are stored in individual tables, with texts and hierarchies linked to the master data records through primary/foreign key relationships. Once saved, reference data requires no further data manipulation; the tables are simply lookup tables. With transaction data, however, you can save the data in several data objects, and the options sometimes get confusing, especially in SAP BW 2.0A, as shown in Figure 9-7.
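The lookup-table relationship described above can be illustrated with a minimal sketch. It uses an in-memory SQLite database rather than SAP BW, and the table names, column names, and sample values are all hypothetical; SAP BW generates its own table names for master data and texts.

```python
import sqlite3

# Hypothetical tables: SAP BW generates its own names for these objects.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer_master (
        customer_id TEXT PRIMARY KEY,
        region      TEXT
    );
    CREATE TABLE customer_text (
        customer_id TEXT REFERENCES customer_master(customer_id),
        language    TEXT,
        description TEXT,
        PRIMARY KEY (customer_id, language)
    );
    INSERT INTO customer_master VALUES ('C100', 'WEST');
    INSERT INTO customer_text   VALUES ('C100', 'EN', 'Acme Corp');
""")

def lookup_customer(conn, customer_id, language="EN"):
    """Return (customer_id, region, description) or None if the key is unknown.

    The text row is found through the foreign key back to the master record;
    a missing text yields None for the description rather than dropping the row.
    """
    return conn.execute(
        "SELECT m.customer_id, m.region, t.description "
        "FROM customer_master m "
        "LEFT JOIN customer_text t "
        "  ON t.customer_id = m.customer_id AND t.language = ? "
        "WHERE m.customer_id = ?",
        (language, customer_id),
    ).fetchone()
```

Because the text table is only ever read through the master data key, nothing needs to be recomputed after the load, which is the sense in which these tables are plain lookup tables.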

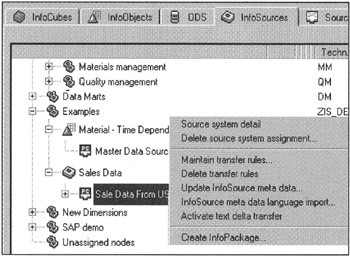

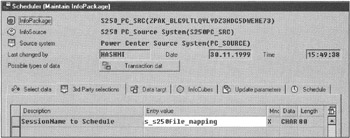

To load data in SAP BW, you define an InfoPackage. An InfoPackage is defined at the level of an individual InfoSource. While in the BW Administrator Workbench, select an InfoSource, right-click, and select Create InfoPackage, as shown in Figure 9-9. You are asked for the InfoPackage name; in this example, it is Load Customer. To schedule this InfoPackage to load data in SAP BW, right-click the InfoPackage and select Schedule.

Figure 9-9: Creating an InfoPackage to Load Data in SAP BW 1.2B.

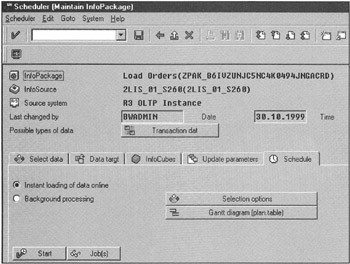

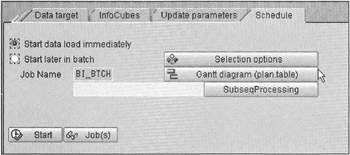

An InfoPackage is like a job stream. It links incoming data requests to SAP BW internal data objects by scheduling several activities that you define, such as data selection criteria, the sequence of data objects to populate, and additional processes to run before or after the data load. Figure 9-10 shows the InfoPackage Scheduler window. Depending on the SAP BW version and the data source type, you may find different sets of folder tabs and options under each section. For example, Figure 9-10 represents the InfoPackage Scheduling option for an InfoSource when the data source is an SAP R/3 OLTP instance. The same window looks somewhat different in SAP BW 2.0A. The content is largely the same, with one exception: the SAP BW 2.0 InfoPackage Schedule option, as shown in Figure 9-11, also allows you to set up several pre- and post-data load activities. This simplifies typical data warehouse construction, where you need to validate data or perform operational tasks before and after building InfoCubes. For example, you may want to delete existing working tables, back up existing tables, and drop indexes before building the InfoCubes, and then notify operations staff or other parties whether the job was successful. It is up to individual database operations teams to decide how to put together such job streams.

Figure 9-10: InfoPackage Scheduling for SAP R/3 Transactional InfoSources Based on SAP BW Version 1.2B.

Figure 9-11: InfoPackage Scheduling for SAP R/3 Transactional InfoSources Based on SAP BW Version 2.0A.
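The idea of a job stream with pre- and post-load activities can be sketched in a few lines. This is only an illustration of the ordering, not SAP BW code; the function `run_job_stream` and the step names are hypothetical placeholders for whatever tasks an operations team chooses.

```python
from typing import Callable, List

Step = Callable[[], None]

def run_job_stream(pre_steps: List[Step], load: Step, post_steps: List[Step]) -> List[str]:
    """Run the pre-load steps, the load, and the post-load steps in order.

    Returns a log of the steps that completed. An exception raised by any
    step propagates to the caller, so later steps are not executed.
    """
    log = []
    for step in pre_steps:
        step()
        log.append(f"pre:{step.__name__}")
    load()
    log.append(f"load:{load.__name__}")
    for step in post_steps:
        step()
        log.append(f"post:{step.__name__}")
    return log

# Hypothetical placeholder steps; real steps would call database or BW tools.
def drop_indexes() -> None:
    pass

def build_infocube() -> None:
    pass

def notify_operations() -> None:
    pass
```

Calling `run_job_stream([drop_indexes], build_infocube, [notify_operations])` runs the three steps in that order. Stopping at the first failure is one possible design choice; a team might instead prefer to always run the notification step.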

You can either run this job immediately or define a background job to run at a given time. To submit the job, click the Start button. You receive a message when the job has been scheduled successfully. You can then monitor the job activities and verify that the job has completed.

| Note | You need to define a data load scheme only once; you can then reuse it for subsequent data loads. Once you have verified that a data load scheme works as defined, you can submit such data load jobs to run periodically, for example, daily at 1:00 A.M., to load new order changes and populate the Sales Analysis InfoCube. Such job scheduling is done right from within the InfoPackage Scheduler window, as shown in Figure 9-10. |

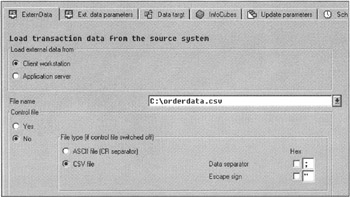

When the InfoSource is based on an SAP R/3 instance, SAP BW knows how to connect and get data. However, when InfoSources are based on flat files or third-party data extraction and load products, you need to provide the location of the data sources in the InfoPackage Scheduler, as shown in Figures 9-12 and 9-13. The actual mechanics of working with flat files and third-party tools are discussed in Chapter 11, "Analyzing SAP BW Data," and Chapter 14.

Figure 9-12: InfoPackage Scheduling Options for Flat File InfoSources.

Figure 9-13: InfoPackage Scheduling for Third-Party Data Loading Tools that Use SAP BW Staging BAPIs.

Before you schedule an InfoPackage job, you should know the target data objects in SAP BW and the sequence of data processing needed before the data is stored. The data flow, as shown in Figures 9-6 and 9-7, is defined at this stage.

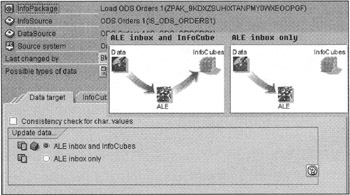

While in the InfoPackage Scheduling window, click the Data target tabstrip. Two options are available: ALE inbox only and ALE inbox and InfoCube, as shown in Figure 9-14. For a visual aid, click the question mark icon on the bottom-right of the window. This shows the path of data for updates.

Figure 9-14: Defining Data Load Flow in SAP BW When the Data Transfer Method is ALE IDOC.

| Note | The data load method is defined when you define the transfer rules. Here you are simply defining the data execution flow. |

With either option, you use the ALE IDOC mechanism to move data from the SAP data source into IDOC data tables in SAP BW, from which the InfoCubes are then built. Saving data in IDOCs is similar to saving data in ODS, except that the data is stored using IDOC structures. Here you can use the ALE transaction to edit, simulate, or process the data for downstream data loads.

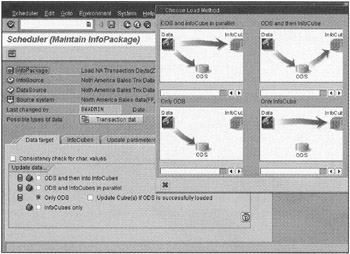

Though the ALE method works fine, ALE record size limitations (1,000 bytes per record) and resource-intensive IDOC processing mean that you should define the transfer method for an InfoSource as tRFC with ODS. Once this mode is set at the transfer rules level, you have several options to define data load paths during job scheduling, as shown in Figure 9-15.

Figure 9-15: Defining Data Load Flow in SAP BW When the Data Transfer Method is tRFC with ODS. In SAP BW Version 2.0A, ODS Means PSA.
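To make the 1,000-byte ALE record limit concrete, the following sketch adds up the field lengths of a record layout and checks the total against that limit. It assumes, for illustration only, that the record width is the sum of fixed field lengths in bytes; the field names and lengths are hypothetical, and the helper functions are not part of SAP BW.

```python
ALE_MAX_RECORD_BYTES = 1000  # limit cited in the text for ALE records

def record_width(field_lengths: dict) -> int:
    """Total record width, assuming fixed-length fields measured in bytes."""
    return sum(field_lengths.values())

def fits_in_ale_record(field_lengths: dict, limit: int = ALE_MAX_RECORD_BYTES) -> bool:
    """True if one record of this layout fits within the ALE record limit."""
    return record_width(field_lengths) <= limit

# Hypothetical layouts for illustration.
narrow_layout = {"order_id": 10, "material": 18, "quantity": 15, "amount": 17}
wide_layout = dict(narrow_layout, long_text=1024)
```

Here `narrow_layout` totals 60 bytes and fits, while `wide_layout` totals 1,084 bytes and does not, which is the kind of structure for which the ALE method becomes a constraint.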

| Note | The ODS options shown in Figure 9-15 are available only when the transfer method for the InfoSource is set to tRFC with ODS, as shown in Figure 9-8 and discussed earlier in this chapter. |

The scheme shown in Figure 9-15 works in SAP BW 1.2B. It works in SAP BW 2.0A as well, but there ODS means PSA. True ODS integration with the InfoPackage scheduling process is planned for the SAP BW Administrator Workbench in SAP BW 2.0B. That will allow you to select all possible data paths shown in Figure 9-7.

Initial Data Loads

Before an InfoPackage job is scheduled, you should have a rough estimate of incoming data, especially during the initial data loads. Moreover, you should know the target data objects in SAP BW and the sequence of data processing and storage, as shown in Figures 9-6 and 9-7. You need to identify these update modes at this time.

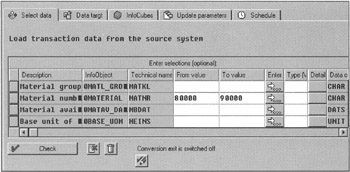

When you have large volumes, break one long-running data load job into several small jobs. The InfoPackage scheduler provides a data selection screen to set this up. Click the Select Data tab and enter the range of records you want to fetch from the data source, as shown in Figure 9-16. Here, for example, all order transaction data for materials with IDs in the range 8000 to 9000 has been selected.

Figure 9-16: Loading a Large Amount of Data in SAP BW by Splitting One Huge Task into Multiple Data Load Sessions. Each Session is Limited to a Given Range of Material Values.
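Planning the individual load sessions amounts to cutting one value range into consecutive sub-ranges. The sketch below shows one way to compute them, assuming purely numeric material IDs and an inclusive range; the function name `split_range` and the chunk size are illustrative choices, and each resulting pair would be entered as the selection range for one data load session.

```python
from typing import List, Tuple

def split_range(low: int, high: int, chunk_size: int) -> List[Tuple[int, int]]:
    """Split the inclusive range [low, high] into consecutive inclusive
    sub-ranges that each cover at most chunk_size values."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if low > high:
        raise ValueError("low must not exceed high")
    ranges = []
    start = low
    while start <= high:
        end = min(start + chunk_size - 1, high)
        ranges.append((start, end))
        start = end + 1
    return ranges
```

For the example range, `split_range(8000, 9000, 250)` yields five sessions: 8000 to 8249, 8250 to 8499, 8500 to 8749, 8750 to 8999, and a final session for 9000 alone. The sub-ranges do not overlap, so no record is loaded twice.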

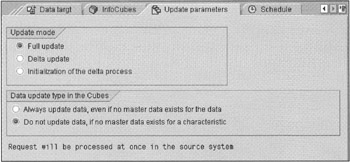

Initial data loads can take from several hours to several days. By scheduling the load in small segments over several days, you avoid interrupting daily SAP R/3 OLTP operations. However, you need to plan full data loads in conjunction with statistical updates on the OLTP side. For example, if you are loading initial order transaction data from an SAP R/3 instance, select the Full Load option (see Figure 9-17) when scheduling the job, and make sure that you have executed the statistical run for that data set on SAP R/3.

Figure 9-17: Loading Incremental Changes in SAP BW.

Loading master data is similar to loading transaction data. You do not have many options other than data selection criteria at scheduling time. Remember, you cannot use tRFC or store data in ODS for master data elements.

From a database perspective, make sure that your database administrator is aware of the large incoming initial data volumes. Make sure that the database tables have sufficient space available and that the table extents for master and transaction data are large enough to speed up data loads.

Delta Data Loads

Once the initial data has been loaded in SAP BW, you can pull only the new data from SAP R/3. Before you can do so, you need to activate updates for the LIS structures by using transaction OMO1 or OMO2. Then select the delta update option available in the Update parameters tabstrip of the InfoPackage Scheduler, as shown in Figure 9-17.

When delta update requests reach SAP R/3, data is pulled from the currently active Delta Change table and not from the source LIS structure, as shown in Figure 9-3 and described earlier in this chapter.

Most LIS-based InfoSources can automatically capture new data for SAP BW. For other application modules and reference data, however, you have to either write specific delta update programs to capture new data for SAP BW or do full update loads. For low volumes or small SAP R/3 operations, full loads are always preferred over writing change capture programs. This subject is discussed in Chapter 13, "Enhancing Business Content and Developing Data Extractors."
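The difference between a full load and a delta load can be shown with a toy model. This is a deliberately simplified sketch and not how SAP R/3 implements its Delta Change tables: the class `DeltaSource` and its methods are hypothetical, and it assumes that a full load also resets the pending changes so that the next delta starts from that point.

```python
class DeltaSource:
    """Toy source system that records every posting twice: once in the
    full data set and once in a list of changes not yet extracted."""

    def __init__(self):
        self._all_records = []
        self._pending_changes = []

    def post(self, record):
        """Simulate a new transaction being posted in the source system."""
        self._all_records.append(record)
        self._pending_changes.append(record)

    def full_load(self):
        """Return every record and reset the pending changes (assumption:
        the full load serves as the starting point for later deltas)."""
        self._pending_changes = []
        return list(self._all_records)

    def delta_load(self):
        """Return only the records posted since the last extraction."""
        changes = self._pending_changes
        self._pending_changes = []
        return changes
```

After an initial `full_load()`, each `delta_load()` returns only what was posted in the meantime, and a second call with no new postings returns an empty list. A source without such change capture has only the equivalent of `full_load()`, which is why a full update is the fallback for modules that lack delta support.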
