SQL Server 2000

Now that the technologies for the operating system have been laid out, it is time to focus on what SQL Server 2000 offers for providing availability. Each of the topics introduced in this section has its own chapter later in this book.

Failover Clustering

When you install SQL Server 2000 for use on a server cluster, this configuration is known as SQL Server 2000 failover clustering. This installation is also considered a virtual server, and is an instance of SQL Server 2000. The virtual server can either be a named instance or a default instance, but just like a stand-alone configuration, there can only be one default SQL Server 2000 instance per server cluster. A SQL Server 2000 failover cluster supports the core SQL Server functionality; Analysis Services is not cluster-aware. Chapter 10, Designing High Availability Solutions with Microsoft SQL Server, addresses how to make Analysis Services available. To administrators and end users alike (with any exceptions noted here or in Chapter 6), a failover cluster should act, look, and feel like a stand-alone SQL Server. Each SQL Server 2000 failover cluster installation gets its own group and set of resources in the server cluster.

| More Info | Chapter 6 details the planning, configuration, and administration of a SQL Server 2000 failover cluster. |

SQL Server 2000 Failover Clustering versus Previous SQL Server Clustering Implementations

If you are familiar with the Microsoft SQL Server 6.5 or Microsoft SQL Server 7.0 implementation of clustering, you will realize that SQL Server 2000 greatly enhances clustering functionality. In fact, with SQL Server 2000, clustering support was redesigned from the ground up. This is one of the reasons that the feature in SQL Server 2000 is called failover clustering, whereas in SQL Server 7.0, it was just called clustering.

One of the first differences with SQL Server 2000 failover clustering is the support for more than a two-node server cluster. Microsoft Windows NT 4.0 Enterprise Edition, the platform for which SQL Server 7.0 clustering was originally designed, only supported two-node clustering. SQL Server 2000 takes advantage of more nodes when they are available. With the introduction of Windows Server 2003, up to eight nodes can be supported by the operating system. As noted in Table 3-2, the 32-bit version of SQL Server is still limited to a maximum of four nodes per virtual server.

| SQL Server Version | Maximum Number of Nodes |
|---|---|
| SQL Server 6.5 and 7.0 | 2 |
| SQL Server 2000 (32-bit) | 4 |
| SQL Server 2000 (64-bit) | 8 |

Instance support is the primary difference between SQL Server 2000 and earlier versions. An instance, simply put, is a separate installation of SQL Server that can run side-by-side with another SQL Server installation on the same machine. The instance can either be a default instance or a named instance. Think of a default instance as the way SQL Server has historically been architected. A named instance is just that: one that is given a name to make it distinct. SQL Server 2000 supports up to 16 instances (clustered or not), which can include 1 default and 15 named instances, or 16 named instances. Instances allow multiple SQL Server virtual servers to be installed on a cluster with ease. With SQL Server 6.5 and SQL Server 7.0 clustering, you were limited to at most two installations of SQL Server on any given server cluster.
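The instance limits described above can be expressed as a small planning check. This is only an illustrative sketch: the function name and the convention of using None for the default instance are assumptions made for this example, not part of SQL Server Setup.

```python
from typing import List, Optional

MAX_INSTANCES = 16  # limit stated in the text (clustered or not)


def validate_instances(names: List[Optional[str]]) -> List[str]:
    """Return a list of problems with a planned set of instances.

    Each entry is an instance name; None stands for the default instance
    (a convention assumed for this sketch).
    """
    problems = []
    if len(names) > MAX_INSTANCES:
        problems.append(f"{len(names)} instances planned; the limit is {MAX_INSTANCES}")
    if names.count(None) > 1:
        problems.append("only one default instance is allowed")
    return problems


# One default plus 15 named instances is within the limits.
plan = [None] + [f"INST{i}" for i in range(1, 16)]
print(validate_instances(plan))
```

A plan with two default instances, or with 17 instances of any kind, would return one problem each.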

SQL Server 6.5 and SQL Server 7.0 clustering relied on the concept of active/passive and active/active clustering. When you only have two installations of SQL Server, these concepts are easy to grasp. Active/passive clustering occurs when you have only two nodes, and one controls the active resources. Active/active clustering occurs when both nodes in the cluster have active resources (such as two SQL Servers). When instances were introduced with SQL Server 2000, these definitions proved problematic. Because the operating system and SQL Server itself can now support more than two nodes, the terms really do not fit. The new equivalent terms are single-instance cluster for active/passive and multiple-instance cluster for active/active. If you only have up to two nodes and two SQL virtual servers, active/passive and active/active might still apply, but it is better to use the new terminology. Single-instance clusters are also sometimes referred to as single active instance clusters, and multiple-instance clusters are sometimes referred to as multi-instance clusters or multiple active instance clusters.

Other enhancements with SQL Server 2000 failover clustering include the following:

- Installing and uninstalling a SQL Server 2000 failover cluster are both now done using the SQL Server 2000 Setup program, and not through the combination of setting up your database server as a stand-alone server, then running a wizard. Clustering is part of the installation process. This also means that SQL Server 2000 failover clustering is a permanent option, and the only way to remove it is to uninstall the clustered instance of SQL Server.

- SQL Server 2000 includes extensive support for recovering from a server node failure in the cluster, including a one-node cluster. If a node fails, it can be removed, reinstalled, and rejoined to the cluster while all other nodes continue to function properly. It is then a simple operation with SQL Server 2000 Setup to add the new server back into the virtual server definition. SQL Server 6.5 and SQL Server 7.0 clustering did not have this capability, and in some cases, a severe problem could result in a complete cluster rebuild. Although this can still happen with SQL Server 2000 in extreme cases, it can be avoided for most problems.

- All nodes now have local copies of the SQL Server tools (including performance counters) as well as the executables, so in the event of a failover, you can administer the server from a remote system or the clustered node itself. SQL Server 6.5 and SQL Server 7.0 required that the binaries be installed on the shared cluster disk subsystem. This was a problem, because only the cluster node that performed the installation had the tools registered.

- Each SQL Server 2000 virtual server has its own full-text resource. In previous versions of SQL Server clustering, full-text functionality was not supported. The underlying Microsoft Search service is shared among everything accessing it on the node, but if your database requires Full-Text Search, it is now an option if you decide to use failover clustering.

- SQL Server 2000 failover cluster configurations can be updated by rerunning the setup program, which simplifies the configuration process.

- Database administrators can now use all of the SQL Server tools, including SQL Server Service Manager or SQL Server Enterprise Manager, to fully administer the database, including starting and stopping SQL Server. Previously some functionality, such as stopping and starting SQL Server, required using Cluster Administrator. That meant database administrators (DBAs) had to be SQL Server experts as well as server cluster experts, which could cause problems if something was done in the wrong place.

- Service packs are applied directly to the SQL Server 2000 virtual server. With SQL Server 7.0, you had to uncluster the server prior to applying a service pack.

- SQL Server 2000 is now a fully cluster-aware application. This allows SQL Server 2000 to interact properly with the Cluster service, and it provides some benefits such as preventing the creation of databases on invalid logical drives.

How SQL Server Failover Clustering Works

Each node of the cluster is capable of owning a resource, whether it is SQL Server, a disk, and so on. The node currently owning the disk resource (whether it is a SQL Server disk, or the quorum disk), which hopefully is the designated primary owner, reserves the resource every three seconds. The point of the reservation process is to protect against physical access by another node; if a node loses the reservation, another node can now take ownership.

For example, if the node owning the instance fails due to a problem (network, disk, or something else) at second 19, the competing node detects it at the next reservation cycle. If the reservation cycle fails for the node that owned the disk resource three more times, the new node takes ownership, in this case at around second 34.

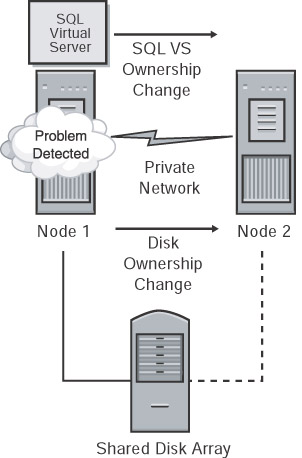

From a SQL Server perspective, the node hosting the SQL Server resource does the LooksAlive check. Remember that a successful LooksAlive check does not mean that SQL Server is up and running. The IsAlive check is then run, and it issues the aforementioned SELECT @@SERVERNAME. Again, this does not guarantee that the database you need can service a request, only that SQL Server itself can. Should the IsAlive check fail (which means the query fails), it is retried five times. In the event all five retries fail, the SQL Server resource fails. Depending on the failover threshold configuration (discussed in Chapter 6) of the SQL Server resource, the server cluster either attempts to restart the resource on its current node or fails it over to the next preferred node that is still running (see Figure 3-3). The execution of the IsAlive check tolerates a few errors, such as licensing issues or having a paused instance of SQL Server, but ultimately fails if its threshold is exceeded.

Figure 3-3: A failure detected on the node currently owning the SQL Server virtual server.

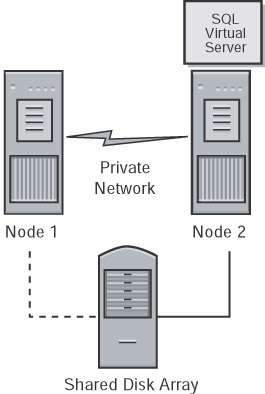
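The IsAlive retry behavior described above can be sketched in a few lines. This is a simplified model, not the Cluster service's implementation: the check is passed in as a plain callable, and the restart-versus-failover rule in next_action is a hypothetical stand-in for the failover threshold configuration covered in Chapter 6.

```python
from typing import Callable

IS_ALIVE_RETRIES = 5  # retry count taken from the text


def resource_has_failed(is_alive: Callable[[], bool],
                        retries: int = IS_ALIVE_RETRIES) -> bool:
    """Return True when the first IsAlive check and every retry fail."""
    if is_alive():
        return False
    for _ in range(retries):
        if is_alive():
            return False
    return True


def next_action(failures_in_period: int, restart_threshold: int) -> str:
    """Pick a reaction to a failed resource (hypothetical threshold rule)."""
    if failures_in_period <= restart_threshold:
        return "restart on current node"
    return "fail over to next preferred node"


def flaky_check(failures: int) -> Callable[[], bool]:
    """Build a simulated check that fails a fixed number of times, then succeeds."""
    remaining = [failures]

    def check() -> bool:
        if remaining[0] > 0:
            remaining[0] -= 1
            return False
        return True

    return check


# Six consecutive failures (the first check plus five retries) fail the resource.
print(resource_has_failed(flaky_check(6)))
print(next_action(failures_in_period=1, restart_threshold=3))
```

In a real cluster the check would be the SELECT @@SERVERNAME query issued against the instance; here it is simulated so the retry logic can be seen in isolation.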

During the failover process from one node to another, the server cluster starts the SQL Server service for that instance on the new node and goes through the recovery process to start the databases. The switch of the SQL Server virtual server itself from one node to another is fairly quick. After the service is started and the master database is online, the SQL Server resource is considered to be running (see Figure 3-4). On average, this part of the process takes no more than a minute or two. However, keep in mind that the user databases go through the normal recovery process, which means that any completed transactions in the transaction log are rolled forward, and any incomplete transactions are rolled back. The length of the recovery process depends on how much activity must be rolled forward or rolled back at startup, so the entire failover process takes longer than it takes to start the processes and bring up the master database on the other node. This behavior is the same as for a stand-alone instance of SQL Server that is stopped and started.

Figure 3-4: The new node after failover.
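The roll forward and roll back behavior of recovery can be illustrated with a toy model. This is a sketch under strong simplifying assumptions and does not reflect SQL Server's actual log format or recovery algorithm: the LogRecord type is invented for the example, and the sketch starts from a checkpoint image and reapplies only committed work, which in this toy model gives the same end state as rolling forward completed transactions and rolling back incomplete ones.

```python
from typing import Dict, List, NamedTuple


class LogRecord(NamedTuple):
    """Invented, minimal log record for this illustration."""
    txn: int
    op: str        # "set" or "commit"
    key: str = ""
    value: int = 0


def recover(checkpoint: Dict[str, int], log: List[LogRecord]) -> Dict[str, int]:
    """Rebuild state so that only committed transactions are reflected."""
    committed = {record.txn for record in log if record.op == "commit"}
    state = dict(checkpoint)
    for record in log:
        # Changes from transactions without a commit record are discarded.
        if record.op == "set" and record.txn in committed:
            state[record.key] = record.value
    return state


log = [
    LogRecord(1, "set", "a", 10),
    LogRecord(2, "set", "b", 20),   # transaction 2 never commits
    LogRecord(1, "commit"),
]
print(recover({"a": 1, "b": 2}, log))
```

The more records the loop has to process, the longer recovery takes, which is why the total failover time depends on the amount of activity in the log.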

Clients and SQL Server 2000 Failover Clustering

As noted earlier, end users and applications access the SQL virtual server with either its name or IP address, not the name or IP address of the node hosting the resources. Users and applications do not need to worry about which node owns the resources. That is one of the best benefits of failover clustering: things are largely transparent to the end user. One noticeable result, depending on how the application is designed, is that during the failover process, any active connections are broken because the SQL Server is stopped and started. Therefore, whatever the user was working on when the server fails over might not be completed unless the transaction completes before the server goes down or the transaction and subsequent reconnect are handled within the application. This possibility must be taken into account in any decision to involve a cluster on the back end.
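Handling the broken connection inside the application usually comes down to reconnecting and resubmitting the whole transaction. The following is a minimal sketch of that pattern, not tied to any particular data access library: ConnectionLost and run_with_reconnect are hypothetical names, and the work callable is assumed to open a fresh connection to the virtual server name and run one complete transaction.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ConnectionLost(Exception):
    """Hypothetical error raised when the connection to the virtual server drops."""


def run_with_reconnect(work: Callable[[], T], attempts: int = 5,
                       delay_seconds: float = 0.0) -> T:
    """Run work(), retrying from the start when the connection is lost."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return work()
        except ConnectionLost:
            if attempt == attempts:
                raise  # give up and let the caller report the outage
            time.sleep(delay_seconds)  # give the failover time to complete
    raise AssertionError("unreachable")


calls = {"count": 0}


def submit_order() -> str:
    """Simulated transaction that fails twice, as if a failover were in progress."""
    calls["count"] += 1
    if calls["count"] <= 2:
        raise ConnectionLost("virtual server is failing over")
    return "committed"


result = run_with_reconnect(submit_order)
print(result, calls["count"])
```

Retrying is only safe when the unit of work is a single transaction that either commits fully or not at all; in production code the delay between attempts would be sized to the expected failover time.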

SQL Server 2000 failover clustering is always transactionally current: when the database is started on another node, it starts at the exact state it was in prior to failover. This is one of the benefits: no committed transactions are lost from a database, so the database is always current. Only transactions that were incomplete or issued after the failure and before SQL Server is restarted are lost, and that can be handled in the application. In fact, because the whole SQL Server instance fails over to another node, all databases and their objects (including logons, stored procedures, and so on) survive the switch intact.

Failover Clustering and Wasted Resources

This topic is dealt with in more depth in Chapter 6, but sometimes there is the notion (especially in a single-instance cluster or a multiple-node Windows Datacenter cluster with only one SQL virtual server) that unused nodes are being wasted. However, these nodes serve as an insurance policy in the event of a failure. If you start utilizing the wasted node for something else, what will happen in a failover? Will SQL Server have enough resources to allocate to it?

Because only one SQL Server can own a given disk resource (for more information, see Chapter 4, Chapter 5, and Chapter 6), you cannot share certain resources among clustered SQL instances. For example, if you have a drive D with 100 GB of space and need only 30 GB of it for one SQL virtual server, you cannot use any of the remaining disk space on drive D for another SQL virtual server.

Finally, there is no load balancing with failover clustering: because of the shared-nothing mechanics of the server cluster, two SQL Servers cannot share the same underlying database. So you cannot, for example, use one SQL Server instance to do the reporting and another to do the inserting on the same database.

Log Shipping

Log shipping is not a new technology for SQL Server 2000; DBAs have used it for some time. Log shipping is the process of taking a full database backup from a specific point in time and restoring it in a special way on another server such that transaction log backups can be applied to it. Transaction log backups are then made on the active server, also known as the primary, and then copied and applied to the waiting server. This server is known as the secondary or warm standby. The secondary server stays in this mode until it needs to be brought online. If the switch needs to be made to the warm standby, this process is called a role change, not a failover. (Role change is described in detail in Chapter 7, Log Shipping.)

The first Microsoft implementation of log shipping was released in the Microsoft BackOffice Resource Kit 4.5 (published by Microsoft Press, ISBN 0-7356-0583-1), and it was script-based. SQL Server 2000 took the log shipping functionality a step further and integrated it into the platform, but it is available only in SQL Server 2000 Enterprise Edition and SQL Server 2000 Developer Edition (which can only be used for development purposes). Microsoft recommends the use of the built-in functionality, as it also provides a Log Shipping Monitor to display the status of the process. Log shipping can be custom scripted for other editions of SQL Server or to accommodate other requirements, such as compression or encryption of the files being sent across the wire. Functionality like the Log Shipping Monitor would also need custom coding. Log shipping does not require any specialized hardware or solutions other than the proper version of SQL Server.

Log Shipping Transactions

Log shipping is always transactionally consistent, although there is usually some sort of latency involved. It is not considered real time, in which each transaction is sent as soon as it occurs. With log shipping, you are only as current as:

- The last transaction completed on the primary

- The last transaction log backed up on the primary

- The last transaction log copied from the primary

- The last transaction log applied to the secondary

The secondary is only as current as the last transaction log that is applied to it, so if the delta is five minutes off of the primary, your secondary should always remain five minutes behind if all is functioning properly. Given that, analyzing these points is fairly straightforward. If a transaction is completed, it is written to the transaction log. If the SQL Server experiences a failure before that transaction log is backed up, and the server cannot recover and read the transaction log, that transaction is lost, and you will only be as current as the secondary. If a transaction log is backed up but not copied over to the secondary, and either the copy process fails or the hard disk that contains that transaction log is lost, again, you are only as current on the secondary as what is available to it. If a transaction log is copied from the primary, and is still accessible to the secondary, it can be applied, so your secondary will be as current as the last transaction in that transaction log.
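The four points above translate into simple timestamp arithmetic. The sketch below is illustrative only: ShippingState and both functions are names made up for this example, the timestamps are invented, and the loss calculation assumes the primary and any log backups not yet copied from it are unrecoverable.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ShippingState:
    """Invented container for the four points that bound how current you are."""
    last_commit: datetime     # last transaction completed on the primary
    last_backed_up: datetime  # end of the last log backup taken on the primary
    last_copied: datetime     # end of the last log backup copied to the secondary
    last_applied: datetime    # end of the last log backup applied to the secondary


def current_lag(state: ShippingState) -> timedelta:
    """How far the secondary is behind the primary right now."""
    return state.last_commit - state.last_applied


def loss_if_primary_lost(state: ShippingState) -> timedelta:
    """Work lost if only logs already copied to the secondary can be applied."""
    return state.last_commit - state.last_copied


state = ShippingState(
    last_commit=datetime(2003, 1, 1, 12, 0),
    last_backed_up=datetime(2003, 1, 1, 11, 58),
    last_copied=datetime(2003, 1, 1, 11, 55),
    last_applied=datetime(2003, 1, 1, 11, 50),
)
print(current_lag(state))           # secondary is 10 minutes behind
print(loss_if_primary_lost(state))  # 5 minutes of work would be lost
```

Shortening the backup, copy, and apply intervals shrinks both numbers, at the cost of more frequent activity on both servers.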

One benefit of log shipping is that anything that is recorded as a transaction for the database being log shipped is applied to the secondary. On the flip side, when the log shipped database is configured, only the objects found in that database exist at the secondary. Anything outside of the immediate database (logons, stored procedures used in another database, and so on) would have to be transferred using another process external to the core log shipping process.

Another use of log shipping, but not its main or intended use, is that the secondary database can potentially be used for read-only reporting-type access. The problem, however, is that to apply the transaction log, SQL Server needs exclusive access to the database, so no users can be accessing the database. If even one connection is left open, you could be putting your availability solution at risk. If the transaction logs are applied frequently, there might not be enough time between log applications to allow read-only access.

If full-text searching is required by the database that is being log shipped, you need to consider what to do in the event of a role change. Here is the crux of the issue: you cannot generate a full-text index on the secondary while the database is loading transaction logs. Even if you are backing up the full-text index and performing maintenance on it on the primary, it will be out of sync if applied to a database that is at a different point in time. Unless you can get to the full-text index and ensure that it is at exactly the same point as the log shipped secondary, you cannot bolt it on to the secondary. In that case, you will likely have to regenerate the full-text index after the database is brought online.

Clients and Log Shipping

Another consideration regarding log shipping is its effect on clients and applications. Applications need to take various things into account, including connecting to another server, as it will have another name. Application issues relating to log shipping are detailed in Chapter 7. Because the role change is not an automatic process, you need to have a tested plan in place to perform the switch in servers. There will definitely be some sort of availability outage during the switch. Network Load Balancing might be able to help abstract the name change of the server, but it certainly does not prevent downtime. The switch could take anywhere from seconds if all available transaction logs are applied, to much longer if they are not.

| More Info | Chapter 7 details the planning, configuration, and administration of log shipping. |

Replication

Replication is another technology that is not new to SQL Server. Replication has been part of SQL Server since SQL Server 6.0. There are three forms of replication, as follows:

- Merge Replication: Designed to allow sites to work autonomously and allow updates of data to occur in multiple places, and then have those changes rolled up to a central repository at specified intervals. Merge replication is a good choice for disconnected environments that need to update data, but is not appropriate for most availability scenarios.

- Snapshot Replication: Just that, a snapshot of where the data is at a particular moment. Because a snapshot is a complete copy of the data and there is usually quite a bit of latency between publications, it is not appropriate for availability.

- Transactional Replication: Allows data to be replicated to a subscriber as soon as it is modified at the publisher. Transactional replication can be bidirectional, meaning subscribers can update publishers. Because of the low latency involved with transactional replication, it is appropriate in certain cases to use for SQL Server high availability. Any further discussions in this chapter and book refer to transactional replication in the availability context.

Each form of replication shares a similar architecture. Each has a publisher, a distributor, and a subscriber. The publisher is the originator of the data or publication, which then sends it to the distributor. The distributor sends the data to be published to the appropriate subscribers. The subscriber is the end user of the data from a SQL Server perspective. For more information about each form of replication, consult the SQL Server 2000 help files, SQL Server Books Online.

Transactional replication was not designed with availability in mind, much like using Network Load Balancing with SQL Server was not the original intention of Network Load Balancing. Replication allows the customization of articles and publications sent. So, if you need to have only a certain portion of data available, transactional replication allows you to send a smaller portion of your data elsewhere. No other SQL Server-based technology allows this customization.

Replication Issues

There are some issues to consider when using replication as your method of high availability. One of the main issues is that certain objects associated with each database, or created in it, do not necessarily get re-created as part of the article or publication. It is important to understand what objects replication brings over and what it does not. Another issue is that because replication was not designed for availability, there is really no out-of-the-box solution for switching from one server to the replicated warm standby. You must also devise a process to keep the proper logons, stored procedures, and so on synchronized with the standby.

Transactional replication is always transactionally consistent. With replication (any form), you are only as good as the following:

- The last transaction completed on the publisher

- The last transaction or publication sent to the distributor

- The last transaction or publication applied to the subscriber

The scenarios are similar to the one detailed earlier for log shipping in terms of what the delta is between the publisher and the subscriber.

Obviously, one of the main uses of replication is that you are making data available elsewhere, whether it is reporting or another use. Therefore, you do not have to worry about accessing the secondary while it is being updated.

Full-text indexing will have to be configured against the replicated database. If you might use the replicated database as a primary at some point, do not wait to start generating the full-text index. In addition, if only a subset of the data is replicated, and that database now needs to assume full functionality, you might not be able to use that database with the application. Different types of replication have limits on how much data is transferred in an article or publication.

Clients and Replication

Replication suffers from the same dilemmas as log shipping when it comes to clients: if a client or application expects to be connected to one server, how does it handle being changed to another server? The switch process also needs to be planned; it is not automatic. Replication does not detect the failure, other than, perhaps, showing up in the Replication Monitor as everything being out of sync. There will be downtime in the switch and the length of the downtime varies depending on the circumstances.

| More Info | Chapter 8, Replication, details the planning, configuration, and administration of replication for high availability. |

Backup and Restore

Backup and restore really needs no introduction. You do not have to be a DBA to understand that databases and all files related to the solution (including the operating system) need to be properly backed up, no matter what other availability methods are employed, such as failover clustering. You still need a proper backup and restore plan. The other technologies do not supplant the need to do the basics!

No matter your current situation, there is a chance that backup and restore might be the only option available. A well-planned backup and restore process can greatly increase availability. For most DBAs, this simply means that being able to restore the system quickly is a good idea in addition to whatever else you are doing to increase uptime. However, in the case of a very large database, you might need to consider a hardware-assisted solution to assist with quick restoration of the data.

It is imperative to ensure that backups are made frequently and tested, both to verify that the backups are good and so that you know how long a restore takes. If you have an overall availability goal as well as service level agreements (SLAs) that set specific availability requirements, you need to know whether a restore will fail to meet the time specified in the SLA, and then possibly adjust your backup and restore strategy accordingly.
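Comparing tested restore timings against an SLA is simple arithmetic once the timings exist. The sketch below assumes you have recorded restore durations from actual test restores; the function name, the sample numbers, and the optional safety margin are illustrative assumptions, not values from any SLA.

```python
from typing import List


def restore_meets_sla(measured_restore_minutes: List[float],
                      sla_minutes: float,
                      safety_margin: float = 0.0) -> bool:
    """Check the slowest tested restore, padded by a margin, against the SLA."""
    if not measured_restore_minutes:
        raise ValueError("no tested restores: the restore time is unknown")
    worst_case = max(measured_restore_minutes)
    return worst_case * (1 + safety_margin) <= sla_minutes


timings = [42.0, 55.0, 48.0]  # minutes, from hypothetical restore tests
print(restore_meets_sla(timings, sla_minutes=60))
print(restore_meets_sla(timings, sla_minutes=60, safety_margin=0.2))
```

Using the worst measured time rather than the average is a deliberately conservative choice; an untested backup gives you no number to compare at all, which is why the function refuses an empty list.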

| More Info | Chapter 9, Database Environment Basics for Recovery, and Chapter 10, Implementing Backup and Restore, go into depth about the planning, configuration, and administration of the backup and restore process for high availability. |
