Capacity Planning

Numerous factors go into deciding how your disks will be configured. The first part of that decision process must be capacity planning, the art of determining exactly how much space you need. For new systems, this can range from very simple to very complex, depending on how much information you have up front. For extending existing systems, upgrading, or migrating to a new hardware or software platform, capacity planning should be easier because there should be documented history on the prior growth of that system, database, and application.

Two kinds of disk space usage must be known: raw space, and the physical number of disk drives needed for storage and to achieve the desired level of performance. Remember that figuring out how much raw space, which will then dictate how many drives you need, is based on your application s requirements of how it will be using SQL Server. The information and equations are based on these basic tenets of disk capacity planning.

| Note | The disk space presented by the disk controller at the LUN level and the disk space that is available to the application are not the same. All capacity planning must be based on the actual usable capacity as seen by Windows at the file system level, not what the storage controller thinks is being presented. |

| Important | Remember that any physical implementation is vendor- specific because each storage vendor might use similar ” but different ”architectures and structures. |

Raw Disk Space Needed

Conceptually, the amount of raw disk space is represented by the following equation:

Minimum Disk Space Needed = Size of Data (per database, including system databases) + Size of All Indexes (per database, including system databases)

There is a flaw in this equation, however. Calculating your disk space based on the minimum amount needed would be a mistake. You need to take into account the growth of the database over the time that the system will be in service. That would transform the equation into this:

Minimum Disk Space Needed = Size of Data (per database, including system databases) + Size of All Indexes (per database, including system databases, full-text indexes, and so on) + Planned Growth + Microsoft Distributed Transaction Coordinator (MS DTC) Logging Space + Amount of Operating Reserve + Amount Reserved for Hardware Optimization

The revised equation is much more realistic. The amount of operating reserve is the total drive space needed to handle an emergency situation.

For example, if you need to add a new column to a table, you need enough transaction log space for the entire table (possibly two times the table) because all changes are logged row by row and the table is affected in its entirety within one transaction. The amount reserved for hardware optimization is based on the disk drive performance of the inner tracks of the physical drive, which might be slower than the outer tracks (see the section Understanding Your Hardware later in this chapter for information on media banding and physical drive characteristics).

In this case, the amount can range from 10 to 40 percent depending on whom you ask, but the performance characteristics might be different on more modern disk drives (up to 25 percent faster on the outer tracks than the inner tracks). You can combine this reserve space with the operating system reserve in most cases. With MS DTC usage, if you have high transactional volume, the logging of MS DTC might become a bottleneck.

| Note | How do you actually determine the size of each database? You need to know how the applications will be using the databases ”not only the user databases created specifically for the application, but system databases msdb and tempdb as well. |

Application Database Usage

Each application using SQL Server has its own signature that is its distinct usage of SQL Server. Assess each application that will be utilizing one or more databases. What kind of work is it doing? Is it mainly reads? Mainly writes? A mixture? Only reads? Only writes? If the workload is a combination of reads and writes, what is the ratio of reads to writes ? (Some hardware solutions can assist you in this matter to report on real read versus write statistics, as well as storage caching statistics.) If this is an existing database system, how has the usage changed over time? If this is a new system, what is the projected usage of the system? Do you anticipate changes in usage patterns? You might need to ask more questions. For a packaged application that you are not developing, ensure that your vendor can reasonably answer the questions so you can plan your hardware appropriately. If possible, get the input of other customers who have used that software as well.

For example, an accounting package might have heavy inserts , for 70 percent on average, and 30 percent reads. Because the software is doing some aggregations at the SQL Server level and bringing back intermediate result sets, it also uses tempdb heavily. The company that manufactures the accounting package recommends a minimum of 60 GB as a starting size for the database, but you can best determine how the software would be used in your environment.

These questions are vital to starting the disk configuration process because, like the guiding principles that will carry through the entire high availability process, how the database is to be used directly influences every decision made about disk configuration.

Application Schema

Knowing the schema and how data is used also helps you determine the capacity needed. Important things to track are whether it is a custom in-house application or a third-party application; usage of data that is inserted, updated, and deleted; the most frequently used tables (for reads and writes); and how much these tables grow. A database administrator (DBA) or someone else, if appropriate, should track these items on a regular basis. Tracking these items over time for new and existing systems not only provides an accurate picture of your database usage, but also helps in areas such as performance tuning (figuring out what indexes to use, avoiding hotspotting at a physical level when designing a disk subsystem). If you have been using a database for years and now need to migrate it to a new version of the database software (for example, from Microsoft SQL Server 7.0 to Microsoft SQL Server 2000), you can accurately know how to configure your disk subsystem and SQL Server file sizes only if you know the answers to the above questions.

One consideration when thinking about schemas is the use or nonuse of Unicode. Unicode data is stored as nchar, nvarchar, and ntext data types in SQL Server as opposed to char, varchar and textr. This means that Unicode data types take up twice as much storage as non-Unicode data types, effectively halving how data is actually stored at a physical level (or doubling your space requirement, depending on how you look at it). This must be taken into account when calculating how much each row will total in terms of bytes ”if a row that is non-Unicode is 8000 bytes, for a Unicode row it will be 16,000 bytes.

Another consideration is fixed-length versus variable-length text columns . To be able to predict row length, and ultimately table growth over time, you should use fixed-length fields such as a char instead of a varchar. A varchar also consumes more disk space. If you are writing an application that must support international customers in different languages, you need to account for the space required for each language. Therefore, if you are writing an application that must store information in English, French, Spanish, Dutch, Japanese, and German, you need to account for up to six different versions of each column. As with many other things related to system and application designs, there are trade-offs when it comes to schema design.

For example, you have an application that accesses a table named CustomerInfo. Table 4-1 shows the table structure.

| Column Name | Type/Length | Column Size (in Bytes) |

|---|---|---|

| Customer_id | Int | 8 |

| Customer_lname | char(50) | 50 |

| Customer_fname | char(50) | 50 |

| Cust_addr1 | char(75) | 75 |

| Cust_addr2 | char(75) | 75 |

| Cust_city | char(30) | 30 |

| Cust_state | Int | 8 |

| Cust_province | char(40) | 40 |

| Cust_postalcode | char(15) | 15 |

| Cust_country | Int | 8 |

| Total | 359 |

According to Table 4-1, each row inserted into the CustomerInfo table consumes 359 bytes. Assume that this is an e-commerce application, and the database is expected to grow to 500,000 customers in two years. That equates to 179,500 kilobytes, or just fewer than 180 MB for this table alone. Each entry might also include updates to child tables (such as customer profiles or customer orders). With each insert, you need to take into account all parts of the transaction. If your table has 10, 20, 50, 100, or 500 tables in it, suddenly 180 MB is only a small part of the puzzle. If you have not gathered already, things get complex very quickly when it comes to disk architecture.

| Important | Remember to take into account columns that might also be able to contain a value of NULL, such as VARCHAR. Most column types require the same space at a physical level even if you use NULL and technically do not store data. This means that if you do not count the nullable column into your row size calculations in determining how many rows fit per page, you might cause page splits when actual data is inserted into the row. |

Indexes

Indexes are integral to a schema and help queries return data faster. Take performance out of the equation for a moment: each index takes up physical space. The total sum of all indexes on a table might actually exceed or equal the size of the data. In many cases, it is smaller than the total amount of data in the table.

There are two types of indexes: clustered and nonclustered. A clustered index is one in which all the data pages are written in order, according to the values in the indexed columns. You are allowed one clustered index per table. Clustered indexes are great, but over time, due to inserts, updates, and deletions, they can become fragmented and negatively impact performance.

Indexes can help or hurt performance. UPDATE statements with corresponding WHERE clauses and the right index can speed things up immensely. However, if you are doing an update with no WHERE clause and you have several indexes, each index containing the column being updated needs to be updated.

In a similar vein, if you are inserting a row into a table, each index that contains the row has to be updated, in addition to the data actually being inserted into the table. That is a lot of disk input/output (I/O). Then there is the issue of inserting bulk data and indexes ”indexes hinder any kind of bulk operation. Think about it logically ”when you do one insert, you are not only updating the data page, but all indexes. Multiply that by the number of rows you are inserting, and that is a heavy I/O impact no matter how fast or optimized your disk subsystem is at the hardware level. For performing bulk inserts, indexes should be dropped and re-created after the inserting of the data in most cases.

Queries might or might not need an index. For example, if you have a lookup table with two columns, one an integer, and one a fixed length of five characters , putting indexes on the table, which might only have 100 rows, could be overkill. Not only would it take up more physical space than is necessary, but also a table scan on such a small amount of data and rows is perfectly acceptable in most cases.

| More Info | You also need to consider the effect of automatic statistics and their impact on indexes. See the sections Index Statistics and Index Cost in Chapter 15 of Inside Microsoft SQL Server 2000 by Kalen Delaney (Microsoft Press, 2000, 0-7356-0998-5) for more information. SQL Server Books Online (part of SQL Server documentation) also explores indexes in much more detail than what is offered here. |

Deletes, Inserts, Selects, and Updates

The code you write to delete, insert, select, and update your data is the last part of the application troika, as it contains the instructions that tell SQL Server what to do with the data. Each delete, insert, and update is a transaction. Optimizing to reduce disk I/O is not an easy task because what is written is directly related to how well designed the schema is and the indexing scheme used. The key is to return or update only the data that you need, keeping the resulting transaction as atomic as possible. Atomic transactions and queries are ones that are kept extremely short. (It is possible to write a poor transaction that is seemingly atomic, so do not be misled.) Atomicity has an impact on disk I/O, and in terms of technologies like log shipping and transactional replication, the smaller the transaction that SQL Server has to handle, the better off you are in terms of availability.

For example, a Web-based customer relationship management (CRM) program used by your sales force allows you to access your company s entire customer list and page through the entries one by one. The screen only displays 25, and at a maximum, displays up to 200. Assume the schema listed earlier for the CustomerInfo table is used. The application developer implements this functionality by issuing a SELECT * FROM CustomerInfo query with no WHERE clause; as you might have deduced , the combination of a SELECT * that pulls back all columns and no WHERE clause to narrow the result set is not recommended. The application then takes the results and displays only the top 200 customers. Here is the problem with this query: when you reach 500,000 customers, every time this functionality is called, it returns 180 MB of data to the client. If you have 500 salespeople, 100 of whom are always accessing the system at one time, that is 18,000 MB or 18 GB of data that can potentially be queried at the same time. Not only will you be thrashing your disks, but network performance will also suffer. A better optimization would be to issue the same query with a TOP 200 added so that you are only returning the maximum result set handled by the application. Another way to handle the situation is to make the rowcount a parameter allowing each user to customize how many rows he or she would like returned, but that would not guarantee predictability in the application.

To make matters worse , if the user wanted to do different sorts, the application developer would not utilize the 180 MB result set that was already queried: he or she issues another SELECT * FROM CustomerInfo query, this time with a sorting clause (such as ORDER BY Customer_lname ASC). Each round trip, you are thrashing disks and consuming network bandwidth when you want to do a sort! If there were any sort of action associated with the rows returned, you would now have multiple users going through the list in different directions, potentially causing deadlocks and compounding the problem. Unless the data that will be pulled back changes, the application should be able to handle the sort of the data for display without another round trip. If you need to pull back different data, using a WHERE clause combined with the aforementioned TOP also helps disk I/O, especially if you have the proper indexing scheme, as you can go directly to the data you are looking for.

Transact -SQL statements that use the locking hints in SQL Server, such as HOLDLOCK, NOLOCK, and so on can either help or hinder SQL Server performance and availability. You should not use locking hints because the query processor of SQL Server in most cases chooses the right locking scheme behind the scenes as its default behavior. For example, a user of the same CRM application updates a row into the CustomerInfo table. The developer, with the best of intentions, wants to ensure that no one else can update the record at the same time, so naively he or she adds the XLOCK hint to the UPDATE statement. Adding XLOCK forces the transaction to have an exclusive lock on the CustomerInfo table. This means that no other SELECT, INSERT, UPDATE, or DELETE statements can access the table, backing up requests for SQL Server, backing up disk resources, and causing a perceived unavailability problem in the application because the users cannot do their work. Thus one seemingly small decision can affect not only many people, but also various resources on the server.

| More Info | For more information on locks, see Chapters 14 and 15 of Inside Microsoft SQL Server 2000 , by Kalen Delaney (Microsoft Press, 2000, 0-7356-0998-5). |

Understanding Physical Disk Performance

If you are using a database now, or are testing one that is going into production, you need to capture statistics about what is going on at the physical and logical layers from a performance perspective under no load, light load, medium load, and heavy load scenarios. You might be set with raw disk capacity in terms of storage space, but do you already have a performance bottleneck that might only get worse on a new system configured in the same way?

For Microsoft Windows 2000 Server and Microsoft Windows Server 2003, disk performance counters are permanently enabled. If, for some reason, they are not currently configured in Performance Monitor (they should appear as the LogicalDisk and PhysicalDisk categories), execute DISKPERF “Y to enable the disk performance counters at the next system restart. Obviously, this causes a potential availability problem if a restart is required immediately. For more information about the different options with DISKPERF, execute DISKPERF /? at a command prompt. Having the disk performance counters turned on adds approximately 10 percent of overhead to your system.

Once the disk performance counters are enabled, you can begin capturing information to profile your disk usage. There are two types of disk statistics to consider:

-

Logical A logical disk performance counter relates to what the operating system and application uses, which is usually represented by a drive letter, such as D.

-

Physical A physical disk performance counter relates to what is going on at the hardware layer.

The most important statistics are the physical counters, although the logical ones could help in some environments. It really depends on how your disk subsystem is configured and what is using each physical and logical disk. More on logical and physical disks is covered in the section Pre-Windows Disk Configuration later in this chapter.

The performance counters described in Table 4-2 should provide you with a wealth of information about your disk subsystem.

| Category | Counter | Purpose | How to Interpret |

|---|---|---|---|

| Physical Disk | % Disk Time ” track for each physical disk used | Percentage of the elapsed time that the selected physical disk drive has spent servicing read and write requests. | This number should be less than 100 percent. However, if you are seeing sustained high utilization of the physical disk, it might either be close to being overutilized, or should be tracked according to its mean time between failures (MTBF). For further follow-up or information, % Disk Read Time and % Disk Write Time can be tracked. |

| Physical Disk | Avg. Disk Queue Length ”track for each physical disk used | The average number of requests (both reads and writes) that were waiting for access to the disk during the sample interval. | Realistically, you want the number to be 0. However, 1 is acceptable, 2 could indicate a problem, and above 2 is definitely a problem. However, keep in mind that this is relative ”there might not be a problem if the queuing is not sustained, but was captured in one interval (say, one reading of 3), or if it is sustained. Remember that the number should not be divided by the number of spindles that make up a LUN. Queuing relates to the single worker thread handling the physical volume and therefore still represents a bottleneck. Follow-up or additional information can be captured with Avg. Disk Read Queue Length, Avg. Disk Write Queue Length, and Current Disk Queue Length for more isolation. |

| Physical Disk | Avg. Disk sec/Read | The average time (in seconds) it takes a read of data to occur from the disk. | This can be used to help detect latency problems on disk arrays, especially when combined with the total number of updates and the aggregate number of indexes. |

| Physical Disk | Avg. Disk sec/Write | The average time (in seconds) it takes a write of data to occur from the disk. | This can be used to help detect latency problems on disk arrays, especially when combined with the total number of updates and the aggregate number of indexes. |

| Physical Disk | Disk Bytes/sec ”track for each physical disk used | The total amount of data (in bytes) at a sample point that both reads and writes are transferred to and from the disk. | This number should fit the throughput that you need. See Pre-Windows Disk Configuration for more details. For more information or follow-up, Disk Read Bytes/sec and Disk Write Bytes/sec can further isolate performance. The average, and not actual value, can also be tracked in addition to or instead of this counter (Avg. Disk Bytes/Read, Avg. Disk Bytes/Transfer, Avg. Disk Bytes/Write). |

| Physical Disk | Disk Transfers/sec ”track for each physical disk used | The sum of all read and write operations to the physical disk. | For more information or follow-up, Disk Reads/sec and Disk Writes/sec further isolate performance. |

| Physical Disk | Avg. Disk sec/Transfer | The time (in seconds) on average it takes to service a read or write to the disk. | Sustained numbers mean that the disks might not be optimally configured. For follow-up or more isolation, use Avg. Disk sec/Read and Avg. Disk sec/Write. |

| Physical Disk | Split IO/sec | The rate (in seconds) at which I/Os were split due to a large request or something of that nature. | This might indicate that the physical disk is fragmented and is currently not optimized. |

| LogicalDisk | % Free Space ” track for each logical disk used | The amount of free space available for use. | This should be an acceptable number for your organization. Note that with NTFS, there is something called the master file table (MFT). There is at least one entry in the MFT for each file on an NTFS volume, including the MFT itself. Utilities that defragment NTFS volumes generally cannot handle MFT entries, and because MFT fragmentation can affect performance, NTFS reserves space for MFT to keep it as contiguous as possible. With Windows 2000 and Windows Server 2003, use Disk Defragmenter to analyze the NTFS drive and view the report that details MFT usage. Remember to take MFT into your free space account. Also keep in mind that database disks rarely get extremely fragmented because file creation does not happen as often (unless you are expanding frequently or using the SQL disks for other purposes). |

| Logical Disk | Free Megabytes | The amount of free space on the logical drive, measured in megabytes. | As with % Free Space, this should be at an acceptable level. |

Some of the counters in Table 4-2, such as Avg. Disk sec/Transfer, have equivalents in the LogicalDisk category. It is a good idea to further isolate and gather performance statistics to track numbers for each logical disk used by SQL Server to help explain some of the numbers that occur on the PhysicalDisk counters.

Using SQL Server to Assist with Disk Capacity Planning

SQL Server can also help you understand what is happening with your disk usage. SQL Server has a function named fn_virtualfilestats that provides I/O information about all of the files (data, log, index) that make up an individual database. fn_virtualfilestats requires the number of the database you are looking for statistics about, which can be gathered by the following query:

SELECT * FROM master..sysdatabases

Or you could use the following:

sp_helpdb

To see the actual names of the files used in your database, execute the following query:

SELECT * FROM databasename..sysfiles

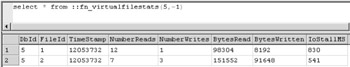

Finally, to get the statistics for all files for a specified database, execute a query using fn_virtualfilestats. It takes two parameters: the database ID, which is the first number, and the file number. If you want to return all files, use -1 for the second parameter. This example retrieves the results for all files used with pubs, as shown in Figure 4-1.

Figure 4-1: The results of a query designed to show the I/O statistics for all files comprising the pubs database.

If you take the data returned from the IOStallMS column and divide by the sum of NumberReads and NumberWrites (IOStallMS/[NumberReads+NumberWrites]), the result determines if you have a log bottleneck.

EAN: 2147483647

Pages: 137