Handling Exceptions

With the packages now migrated, the real work begins. As you can see in Figure 11-10, when you open the converted package, there are a number of errors that will need to be addressed. The specific errors in this case are that the target table that you’re trying to load doesn’t exist. You can fix this error in one of two ways. You can either run the Create Table Execute SQL Task manually, or change the DelayValidation property to True for the Data Flow Task. This validation error is because the table that the later step refers to doesn’t exist until the Execute SQL Task has been run.

Figure 11-10: Errors to be addressed

When using the wizard to convert your DTS packages, you may experience quite a few exceptions. The remaining sections in this chapter speak to some of those exceptions.

Dynamic Properties Task

The first problem to solve with both of the example downloaded DTS packages is what to do with the Dynamic Properties Task that didn’t convert over. As you know, there is no such thing as a Dynamic Properties Task in SSIS, so the wizard converts the task to a Script Task with stub code. The following stub code does nothing at all, other than to offer you a scope document on how to finish the conversion of your package:

Public Class ScriptMain ' DTS Execution Engine will call this method when your task is executed. ' You can access DTS object model through Dts identifier. Connections, Variables, ' Events and Logging features are available as static members of Dts class. ' Before returning, set Dts.TaskResult to indicate success or failure. ' ' Use F1 key to open help system and Ctrl-Alt-J to open Object Browser. Public Sub Main() ' ' Add your code here ' Source Type = 2 ' Global variable = strServerName ' Destination = 'Connections';'Local Temp DB';'OLEDBProperties';'Data Source';'Properties';'Value' ' *************************************************** ' Source Type = 2 ' Global variable = strFileName ' Destination = 'Connections';'Text File (Source)';'Properties';'DataSource' ' *************************************************** ' Dts.TaskResult = Dts.Results.Success End Sub End Class

In this code, you can see that the Source Type is equal to 2, which means that the following variable is mapping to some property in the package. In this case, the strServerName variable maps to the Data Source property in the Local Temp DB Connection Manager. Additionally, the strFileName property maps to the DataSource property in the Text File (Source) Connection Manager.

This stub code is essentially useless to you since it serves no purpose other than to show you what to change. At this point, you can delete the Script Task after you see the scope of the change.

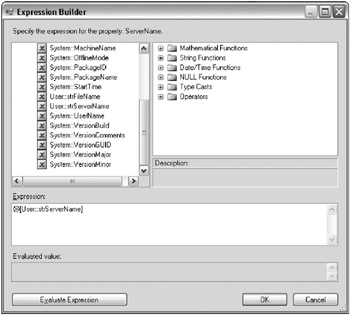

The first step is to select the Local Temp DB Connection Manager in the Connection Managers pane of the package. In the Properties window, click the ellipsis button next to the Expressions option to open the Property Expressions Editor. Select ServerName from the property drop-down list box. For the Expression column, click the ellipsis button to open the Expression Builder. In the builder, drag the User::strServerName variable into the Expression box, as shown in Figure 11-11.

Figure 11-11: The User::strServerName variable dragged into the Expression box

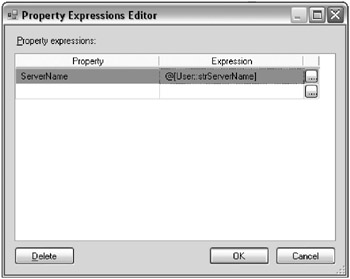

When you click OK, you’ll be taken back to the Property Expressions Editor screen, where the screen will now be filled out as shown in Figure 11-12. You will now want to repeat the same steps for the Text File (Source) Connection Manager. For that connection manager, you’ll want to set the ConnectionString property to the strFileName variable, just as you did for the other Connection Manager.

Figure 11-12: Property Expressions Editor screen filled out

The package is now dynamic again and the Script Task that was once the Dynamic Properties Task can now be removed. The Simple Load.dts package should now be fully migrated and is production-ready.

To tidy up the package, the last thing you may want to do is remove the duplicate SQL Server Connection Manager, which is called Microsoft OLE DB Provider for SQL Server 2. DTS often keeps orphaned connections that are no longer being used. Those all migrate as orphaned connection managers. Removing those should cause no harm to your package.

Complex Transform Data Tasks

Complex Transform Data Tasks are not handled properly by the migration wizard. By complex, we mean a Transform Data Task that contains any business logic inside of it. For example, say that you were to encapsulate business logic in an ActiveX Script inside the Transform Data Task, as shown in Figure 11-13. In this example (Simple Load (UCASE).dts), the transformation between the StateAbbr columns has an ActiveX Script Task on it to uppercase the data as it flows in. This simple transformation causes the task to not migrate properly to a Data Pump Task.

Figure 11-13: Encapsulated business logic in an ActiveX Script inside the Transform Data Task

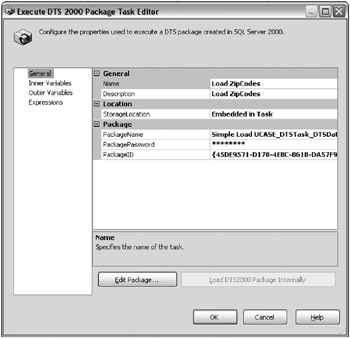

The task will instead migrate into an Execute DTS 2000 Package Task. Inside this task, the part of the package that could not be migrated will be embedded into the task, as shown in Figure 11-14. This now creates an interesting usability issue with the SSIS package. When the package is running, it will jump out of the package and load and run the DTS package. Although this is functional, it may not be feasible to go to production with pieces of the DTS package still in the SSIS package. It makes it much harder to support, since you’ll have pieces in two different architectures.

Figure 11-14: Part of the package that could not be migrated over

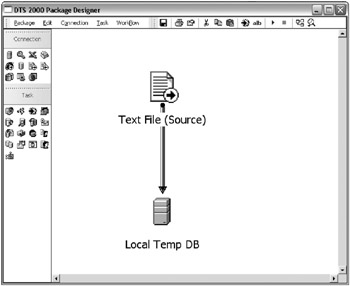

The segment of the DTS package that could not be migrated can be viewed inside the task by clicking Edit Package. An example of this view is shown in Figure 11-15. To edit or view the package, you must have the DTS Designer Components installed. You can locate this component by searching for the SQL Server 2005 Feature Pack with your favorite search engine.

Figure 11-15: Viewing the segment of the DTS package that could not be migrated

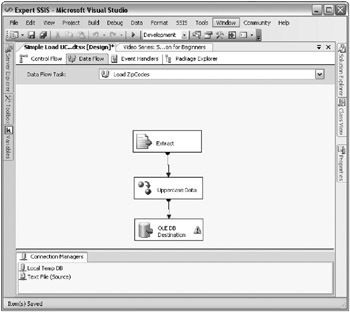

The only way to truly migrate this task is to start this one component from scratch. First, document the business logic functionality being performed inside the Transform Data Task. Then, delete the Execute DTS 2000 Package Task and replace it with a Data Flow Task. In the Data Flow Task, you create a Flat File Source that connects to a Character Map transform. The Character Map transform will uppercase the data in place. Finally, the Character Map transform connects to the SQL Server destination, which loads the ZipCodeLookup table. The final data flow resembles Figure 11-16.

Figure 11-16: Final data flow

After this, you’re ready to execute the package. There may be issues with the Flat File Connection Manager, but those will be discussed in the next section. The warning you see on the OLE DB Destination in Figure 11-16 is a truncation warning, where the metadata is incorrect in the Flat File Connection Manager.

If the ActiveX Script business logic is not important, you can use a free tool called DTStoSSIS-Prepare to remove the logic from a command line. You can also remove it inside the DTS designer, but the DTStoSSIS-Prepare tool (which can be downloaded from www.sqlbi.eu/) can be automated for you. DTS Xchange is another tool, which will perform a much more complex migration easily (www.pragmaticworks.com).

Flat File Connection Managers

Quite often, you will see issues when you migrate a flat file connection in DTS to a Flat File Connection Manager. If your DTS package uses a flat file, you will want to be sure that, prior to deploying your package to production, you open the converted connection manager and configure the metadata in the Advanced page. By default, the columns will all be set up as 50-character string data types, and will need to be set up to the appropriate data type.

Sometimes, when you first open the connection manager, you’ll see an error as shown in Figure 11-17, where the columns need to be defined. In this situation, you must only go to the Columns page for the columns to be detected automatically. Then, go to the Advanced page to set up the metadata for each column.

Figure 11-17: Error appearing when you first open the Connection Manager

ActiveX Script Task

ActiveX Script Tasks are more likely than not going to migrate over to SSIS. They will, of course, migrate to an ActiveX Script Task in SSIS, but the task will probably have bad code in it for SSIS. This is mainly because most DTS programmers used this task to set variables or to interface with the DTS object model. This object model has changed completely now, and anywhere you interfaced with the DTS object model, it will no longer be able to run.

For setting variables, you can quickly do this with the expression language. Simply select the variable and change the EvaluateAsExpression option to True. Then, write an expression to set the variable to your desired value at runtime. This works for simple business logic on variables. You can also use the Script Task to set your variables if the business logic is too complex for an expression. To do this, you’d use the Dts.Variables object inside the Script Task.

Another common usage for the ActiveX Script Task is to interface with the file system. In these cases, you can quickly and easily replace the functionality with the File System Task. A common use for this task was to archive a file or move a file. All of this is now done through a GUI File System Task, and will be much easier to read and maintain for future developers looking at your SSIS package.

Previously, the most common example for using the ActiveX Script Task in DTS was to loop through a directory of files, dynamically load the file into a destination, and change the connections based on the file name. That is now replaced easily with the ForEach Loop and the For Each File enumerator. Again, this is out-of-the-box and requires no coding; making it much easier for your successors to read your work.

Just because you can migrate your DTS ActiveX Script Tasks to SSIS, it doesn’t mean you want to. So, many of the reasons for which you would use this task have now been ported to simple out-of-the-box tasks in SSIS. If you choose to keep the script, be sure to migrate the logic to SSIS Script Tasks and not ActiveX Script Tasks, since the task has been deprecated and will most likely not survive the next release of SQL Server.

EAN: 2147483647

Pages: 111