Explicit Memory Management

A computer's memory is divided into two sections: the call stack and the heap. The call stack, discussed in Section 4.2, is where variables (and arguments) are stored. The rest of memory is the heap. (This has nothing to do with the use of the word "heap" in Section 14.1.)

For each variable, a certain number of bytes are allocated in the call frame where the variable lives. If the variable is of a primitive type, the required number of bytes is known in advance, so the variable's value can be stored directly in the call frame. For reference types, which are often polymorphic, it is not clear how many bytes must be allocated. For example, a variable of type Object might hold a simple object like a Die or something much more elaborate like a HashTable. Such objects live in the heap, which is effectively a gigantic array. Within the call frame, a reference to the heap object is stored. A reference is actually a number, called the address of the object, indicating where in the heap the object lives.

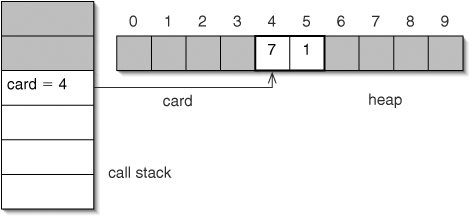

An object may take up several positions, or cells, in the heap. For example, an instance of class Card has two fields, so it takes up two cells in the heap. (This is an oversimplification, but it will do for our purposes.) When we initialize a variable

Card card = new Card(7, Card.HEARTS);

we get the situation shown in Figure 16-1.

Figure 16-1. The variable card, on the call stack, is a reference to an object in the heap. The address of this object is 4. The arrow is redundant; the actual pointer is the number 4 on the call stack. Shaded cells are not in use.

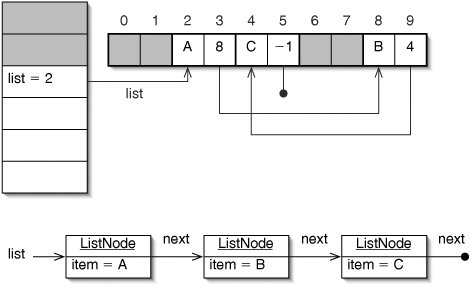

An object can, of course, have fields of reference types. In other words, the heap can contain pointers. A linked list might be represented as in Figure 16-2. Notice that the list can be scattered around memory. Indeed, as we shall see in Section 16.2, the Java system might even move things around in memory without our knowledge! As long as following the pointers gets us to the correct objects, this is not a problem.

Figure 16-2. A linked list. In the heap (top), the first node is at address 2, the second at address 8, and the last at address 4. Within each node, the second cell is a reference to (that is, gives the address of) the next one. The order in which the elements appear in the list (bottom) is not necessarily the order in which they appear in memory.


A null pointer is represented by a pointer to some invalid address. In Figure 16-2, this is the number 1. This is why we use ==, rather than equals(), to check if an object is null. The == operator directly compares the contents of two locations in memory. In contrast, equals() follows the pointers in those two locations and compares the objects to which they refer.
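The difference between == and equals() can be seen in a short sketch. The class and method names here (ReferenceComparison, sameObject) are made up for illustration, and String merely stands in for any class that defines equals().

```java
/** Illustrates == (compares references) versus equals() (compares objects). */
class ReferenceComparison {

    /** Returns true if a and b refer to the very same object. */
    static boolean sameObject(Object a, Object b) {
        return a == b;
    }

    public static void main(String[] args) {
        String a = new String("seven of hearts");
        String b = new String("seven of hearts");
        String c = null;
        System.out.println(sameObject(a, b)); // false: two distinct objects
        System.out.println(a.equals(b));      // true: same contents
        System.out.println(c == null);        // true: no pointer is followed
        // c.equals(null) would throw a NullPointerException, because
        // there is no object at the other end of c.
    }
}
```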

In languages with explicit memory management, like C and C++, it is possible to determine the address of an object. In Java, we can't see the addresses. This is what people mean when they say that "Java doesn't have pointers." We do have references in Java, so we can build linked structures, but we don't have direct access to the addresses themselves.

Aficionados of various languages will argue at great length about whether or not addresses should be visible. We won't attempt to settle this argument here, but we will illustrate some of the consequences of each approach.

We can't access real addresses in Java, but we can build a simulated memory in which the addresses are visible (Figure 16-3).

Figure 16-3. A simulated memory.

    /**
     * A simulated memory for illustrating memory management. Each
     * object takes up two cells.
     */
    public class Memory {

        /** Number of cells in memory. */
        public static final int MEMORY_SIZE = 1000;

        /** The null reference. */
        public static final int NULL = -1;

        /** Data are stored in these locations. */
        private int[] heap;

        /** Create the heap. */
        public Memory() {
            heap = new int[MEMORY_SIZE];
        }

        /** Return the contents of address. */
        public int get(int address) {
            return heap[address];
        }

        /** Set the contents of address to value. */
        public void set(int address, int value) {
            heap[address] = value;
        }

    }

Figure 16-4 shows Java statements and the corresponding statements for the simulated memory.

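Figure 16-4. Java statements and the corresponding statements for the simulated memory. The rank is stored in the first cell of the object and the suit in the second.

| Java | Simulated Memory |
|---|---|
| Card card = new Card(7, 1); | int card = 4; set(card + 0, 7); set(card + 1, 1); |
| int r = card.rank; | int r = get(card + 0); |
| card.suit = 2; | set(card + 1, 2); |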

The reader with an eye for detail will wonder why the address 4 was chosen. This choice is arbitrary, but it raises a very serious concern: how do we make sure we don't choose a section of the heap that overlaps with one being used for some other object? Memory management is the job of keeping track of which addresses are in use.
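To see why overlapping addresses are dangerous, consider the following minimal sketch. It uses a bare ten-cell array in place of the Memory class, and the names OverlapDemo, storeCard, and rankAfterOverlap are invented for this illustration.

```java
/** Sketch: two "objects" placed at overlapping addresses corrupt each other. */
class OverlapDemo {

    /** A tiny stand-in for the simulated heap (size chosen arbitrarily). */
    static int[] heap = new int[10];

    /** Store a two-cell card (rank, then suit) at the given address. */
    static void storeCard(int address, int rank, int suit) {
        heap[address] = rank;
        heap[address + 1] = suit;
    }

    /** Returns the rank of the first card after a second card is placed carelessly. */
    static int rankAfterOverlap() {
        int first = 4;
        storeCard(first, 7, 1);    // occupies cells 4 and 5
        int second = 3;            // hand-picked address: cells 3 and 4
        storeCard(second, 12, 2);  // overwrites cell 4, the first card's rank
        return heap[first];
    }

    public static void main(String[] args) {
        System.out.println(rankAfterOverlap()); // prints 2, not 7
    }
}
```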

The Free List

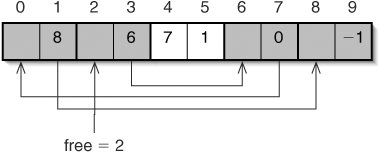

Let us assume, for simplicity, that each object takes up exactly two cells in the heap. We can string all of the unused cell pairs together in a sort of linked list (Figure 16-5). This list of available memory is called the free list.

Figure 16-5. The free list contains the objects beginning at addresses 2, 6, 0, and 8. As shown here, the free list does not necessarily start at address 0.

When we first create our memory, we have to string all of the cell pairs together to create the free list (Figure 16-6).

Figure 16-6. When the memory is first created, all memory must be put on the free list.

    /** Address of the beginning of the free list. */
    private int free;

    /** Create the heap. */
    public Memory() {
        heap = new int[MEMORY_SIZE];
        for (int i = 0; i < heap.length; i += 2) {
            set(i + 1, i + 2);
        }
        set(heap.length - 1, NULL);
        free = 0;
    }
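The effect of this initialization can be checked by following the links. The sketch below is a standalone approximation, not part of the Memory class: it works on a bare int array with only ten cells, and the names FreeListWalk, buildHeap, and addressesOnFreeList are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch: build a small heap and list the addresses on its free list. */
class FreeListWalk {

    static final int NULL = -1;

    /** Build a heap of the given (even) size with every cell pair on the free list. */
    static int[] buildHeap(int size) {
        int[] heap = new int[size];
        for (int i = 0; i < size; i += 2) {
            heap[i + 1] = i + 2;   // second cell of each pair points to the next pair
        }
        heap[size - 1] = NULL;     // the last pair ends the list
        return heap;
    }

    /** Follow the links starting at free and collect the addresses visited. */
    static List<Integer> addressesOnFreeList(int[] heap, int free) {
        List<Integer> result = new ArrayList<>();
        for (int a = free; a != NULL; a = heap[a + 1]) {
            result.add(a);
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(addressesOnFreeList(buildHeap(10), 0)); // [0, 2, 4, 6, 8]
    }
}
```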

When we need a new object, we just take the first one off the front of the free list (Figure 16-7).

Figure 16-7. The allocate() method returns the address of an available object.

    /**
     * Return the address of an available object, which is removed from
     * the free list.
     */
    public int allocate() {
        int result = free;
        free = get(free + 1);
        return result;
    }

Thus, instead of

int card = 4;

we would say:

int card = allocate();

This allocation is similar to what happens in Java when we use new. A more significant difference comes when we're done with an object. In Java, if there are no references to an object, it is automatically returned to the free list. In a language with explicit memory management, we have to tell the system that we're no longer using the memory allocated for that object. In our simulated memory, this is accomplished with the method free() (Figure 16-8).

Figure 16-8. In explicit memory management, when we're done with an object, we have to manually put it back on the free list, using this method.

    /** Put the object at address back on the free list. */
    public void free(int address) {
        set(address + 1, free);
        free = address;
    }
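Because both allocate() and free() work at the front of the free list, the most recently freed object is the next one handed out. The following sketch shows this on an eight-cell heap. It is a self-contained approximation of the methods above using static fields; the class name AllocateFreeDemo and the method demo() are hypothetical.

```java
/** Sketch: a freed object is the next one returned by allocate(). */
class AllocateFreeDemo {

    static final int NULL = -1;
    static int[] heap = new int[8];
    static int free;

    /** Put every cell pair on the free list. */
    static void init() {
        for (int i = 0; i < heap.length; i += 2) {
            heap[i + 1] = i + 2;
        }
        heap[heap.length - 1] = NULL;
        free = 0;
    }

    /** Take the first object off the free list. */
    static int allocate() {
        int result = free;
        free = heap[free + 1];
        return result;
    }

    /** Put the object at address back on the front of the free list. */
    static void free(int address) {
        heap[address + 1] = free;
        free = address;
    }

    /** Returns the three addresses handed out by the sequence below. */
    static int[] demo() {
        init();
        int a = allocate();  // 0
        int b = allocate();  // 2
        free(a);             // address 0 goes back on the front
        int c = allocate();  // 0 again
        return new int[] {a, b, c};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " " + r[1] + " " + r[2]); // 0 2 0
    }
}
```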

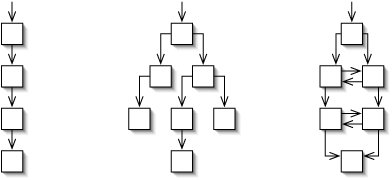

If we are done with a linked structure (Figure 16-9), we must be sure to free each of the objects in that structure.

Figure 16-9. Freeing linked structures requires some care. Objects can be linked in lists (left), trees (middle), and directed graphs (right). Each square here represents an object, which may occupy many cells in the heap and therefore contain several pointers.

Freeing a list is fairly easy, but we must take care to store the address of the next object before we free the current one (Figure 16-10). Once we free some memory, any other part of our program (or even another program) may claim it and change its contents.

Freeing a tree is more difficult. We have to have some way of distinguishing cells that contain pointers from those that don't. In practice, the compiler builds this information into the instruction part of the program. In our simulated memory, we assume that any value less than NULL is not a pointer.

Figure 16-10. Freeing a linked list.

    /** Free the linked list starting at address. */
    public void freeList(int address) {
        while (address != NULL) {
            int next = get(address + 1);
            free(address);
            address = next;
        }
    }
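As a rough check that freeList() returns every node, we can build a three-node list in a small heap and count the objects on the free list before and after. This is a sketch under simplifying assumptions: an eight-cell heap held in static fields, with helper names (FreeListDemo, countFree, demo) invented for the example.

```java
/** Sketch: free a three-node linked list and count the free objects. */
class FreeListDemo {

    static final int NULL = -1;
    static int[] heap = new int[8];
    static int free;

    static void init() {
        for (int i = 0; i < heap.length; i += 2) {
            heap[i + 1] = i + 2;
        }
        heap[heap.length - 1] = NULL;
        free = 0;
    }

    static int allocate() {
        int result = free;
        free = heap[free + 1];
        return result;
    }

    static void free(int address) {
        heap[address + 1] = free;
        free = address;
    }

    /** Free the linked list starting at address. */
    static void freeList(int address) {
        while (address != NULL) {
            int next = heap[address + 1];  // save the link before freeing
            free(address);
            address = next;
        }
    }

    /** Number of objects currently on the free list. */
    static int countFree() {
        int n = 0;
        for (int a = free; a != NULL; a = heap[a + 1]) {
            n++;
        }
        return n;
    }

    /** Returns the free-object count before and after freeing the list. */
    static int[] demo() {
        init();
        int list = NULL;
        for (int item = 1; item <= 3; item++) {
            int node = allocate();
            heap[node] = item;      // first cell: the item
            heap[node + 1] = list;  // second cell: the next node
            list = node;
        }
        int before = countFree();
        freeList(list);
        return new int[] {before, countFree()};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " free before, " + r[1] + " free after"); // 1 free before, 4 free after
    }
}
```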

To free a tree, we just traverse it, freeing objects as we go (Figure 16-11). Once we free the memory for an object, we can no longer look inside it to find its descendants. It therefore is important to do this traversal postorder.

Figure 16-11. A tree can be freed with a postorder traversal.

    1 /** Free the tree rooted at address. */
    2 public void freeTree(int address) {
    3   if (address > NULL) {
    4     freeTree(get(address));
    5     freeTree(get(address + 1));
    6     free(address);
    7   }
    8 }

Notice that freeTree() would also work to free a linked list. If we know that we have a linked list, though, freeList() is more efficient, because it does not involve recursive method invocations.
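The postorder traversal can be tried out on a tiny tree. In this sketch each node consists of just two pointers (left child, right child), which is an assumption made to keep the example small, as are the eight-cell heap and the names FreeTreeDemo, newNode, countFree, and demo.

```java
/** Sketch: free a three-node binary tree with a postorder traversal. */
class FreeTreeDemo {

    static final int NULL = -1;
    static int[] heap = new int[8];
    static int free;

    static void init() {
        for (int i = 0; i < heap.length; i += 2) {
            heap[i + 1] = i + 2;
        }
        heap[heap.length - 1] = NULL;
        free = 0;
    }

    static int allocate() {
        int result = free;
        free = heap[free + 1];
        return result;
    }

    static void free(int address) {
        heap[address + 1] = free;
        free = address;
    }

    /** Free the tree rooted at address: children first, then the node itself. */
    static void freeTree(int address) {
        if (address > NULL) {
            freeTree(heap[address]);
            freeTree(heap[address + 1]);
            free(address);
        }
    }

    /** Allocate a node whose two cells point to its left and right children. */
    static int newNode(int left, int right) {
        int node = allocate();
        heap[node] = left;
        heap[node + 1] = right;
        return node;
    }

    static int countFree() {
        int n = 0;
        for (int a = free; a != NULL; a = heap[a + 1]) {
            n++;
        }
        return n;
    }

    /** Returns the free-object count before and after freeing the tree. */
    static int[] demo() {
        init();
        int root = newNode(newNode(NULL, NULL), newNode(NULL, NULL));
        int before = countFree();
        freeTree(root);
        return new int[] {before, countFree()};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " free before, " + r[1] + " free after"); // 1 free before, 4 free after
    }
}
```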

We will not give a method for freeing a graph just yet, but note that such a method would also work for a tree or a linked list. Again, there would be a price in efficiency for handling a more general case. The automatic memory management techniques described in Section 16.2 address this most general case, so the programmer needn't think about the details of freeing linked structures.

Advocates of explicit memory management emphasize the efficiency cost of always using general-purpose techniques when sometimes a simpler method like freeList() would do. Advocates of automatic memory management point out the insidious bugs that can arise from failure to properly free memory when we're done with it.

Suppose a method allocates memory for a temporary object, but fails to return it to the free list afterward. Every time the method is run, a little bit less memory is available. This kind of bug is called a memory leak. A memory leak is difficult to find, because it does not cause a problem immediately. The program runs perfectly for a while, until the computer runs out of memory and the program crashes.
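A leak of this kind is easy to reproduce in a simulated memory. The sketch below assumes an eight-cell heap and adds an out-of-memory check to allocate() that the version in the text does not have; LeakDemo, leakyMethod, and callsUntilFailure are names invented for the illustration.

```java
/** Sketch: a method that never frees its temporary object eventually exhausts memory. */
class LeakDemo {

    static final int NULL = -1;
    static int[] heap = new int[8];  // room for only four objects
    static int free;

    static void init() {
        for (int i = 0; i < heap.length; i += 2) {
            heap[i + 1] = i + 2;
        }
        heap[heap.length - 1] = NULL;
        free = 0;
    }

    /** Like allocate() in the text, plus a check (added here) for an empty free list. */
    static int allocate() {
        if (free == NULL) {
            throw new IllegalStateException("out of memory");
        }
        int result = free;
        free = heap[free + 1];
        return result;
    }

    /** Uses a temporary object but forgets to return it to the free list. */
    static void leakyMethod() {
        int temp = allocate();
        heap[temp] = 42;
        // Missing: a call that puts temp back on the free list.
    }

    /** Returns how many calls succeed before memory runs out. */
    static int callsUntilFailure() {
        init();
        int calls = 0;
        try {
            while (true) {
                leakyMethod();
                calls++;
            }
        } catch (IllegalStateException e) {
            return calls;
        }
    }

    public static void main(String[] args) {
        System.out.println(callsUntilFailure()); // 4
    }
}
```

With a realistic heap the failure would come much later, which is exactly what makes such a bug hard to find.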

A complementary bug is freeing some memory that we're not really done using. A pointer into memory that we don't really control is called a dangling pointer. A dangling pointer can be caused either by freeing memory and then trying to access it, or by failing to initialize a pointer in the first place. Debugging a program with a dangling pointer is particularly nasty, because the program may behave differently on each run. When we free memory, its contents will probably stay the same for a while, but once someone else grabs that memory, its contents will change in unpredictable ways. Even more bizarre behavior can result from writing to the memory on the other end of a dangling pointer, which could alter the data, or even the instructions, of another program.
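The following sketch reproduces a dangling pointer in a small simulated memory. It is an illustration under assumed names (DanglingDemo, demo) with an eight-cell heap; the point is that the freed cells keep their old contents only until they are allocated again.

```java
/** Sketch: reading through a pointer after the object has been freed. */
class DanglingDemo {

    static final int NULL = -1;
    static int[] heap = new int[8];
    static int free;

    static void init() {
        for (int i = 0; i < heap.length; i += 2) {
            heap[i + 1] = i + 2;
        }
        heap[heap.length - 1] = NULL;
        free = 0;
    }

    static int allocate() {
        int result = free;
        free = heap[free + 1];
        return result;
    }

    static void free(int address) {
        heap[address + 1] = free;
        free = address;
    }

    /** Returns the value seen through the dangling pointer, before and after reuse. */
    static int[] demo() {
        init();
        int card = allocate();
        heap[card] = 7;            // the rank
        free(card);                // we claim to be done with the card...
        int stale = heap[card];    // ...but read it anyway: still 7, by luck
        int other = allocate();    // another part of the program gets the same cells
        heap[other] = 99;
        int changed = heap[card];  // the dangling pointer now sees 99
        return new int[] {stale, changed};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " then " + r[1]); // 7 then 99
    }
}
```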

Barring bugs in the Java compiler, both memory leaks and dangling pointers are impossible in Java. Memory is returned to the free list exactly when we're done with it. We can't even get a dangling pointer by failing to initialize a field of a reference type, because Java automatically initializes such fields to null. If we try to follow such a reference, we get a NullPointerException.
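A minimal sketch of this behavior follows. The Node class is a hypothetical two-line stand-in, not the ListNode class used elsewhere in the book.

```java
/** Sketch: an uninitialized reference field is null, and following it throws. */
class NullFieldDemo {

    /** A bare-bones node; next is never assigned, so it defaults to null. */
    static class Node {
        Node next;
    }

    /** Returns true if following the uninitialized field throws NullPointerException. */
    static boolean followingNullThrows() {
        Node node = new Node();
        try {
            node.next.next = node;  // node.next is null, so this cannot succeed
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(followingNullThrows()); // true
    }
}
```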

Returning to the other side of the debate, advocates of explicit memory management point out that certain things can be done only with direct access to addresses. For example, consider the method swap() (Figure 16-12).

Figure 16-12. This method works in the simulated memory, in which we have access to addresses, but can't be written for normal Java variables.

    /** Swap the data at addresses x and y. */
    public void swap(int x, int y) {
        int temp = get(x);
        set(x, get(y));  // copy the data stored at y, not the address y itself
        set(y, temp);
    }

We can do this in the simulated memory, but in plain Java, there is no way to write a method swap() so that after

int x = 1; int y = 2; swap(x, y);

x will be 2 and y will be 1.
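The reason is that Java passes copies of the values of x and y to the method. The attempt below (SwapDemo is a name chosen for this sketch) compiles and runs, but only the method's local copies are exchanged.

```java
/** Sketch: an attempted swap() on ordinary Java variables has no visible effect. */
class SwapDemo {

    /** Exchanges the parameters, which are only copies of the caller's variables. */
    static void swap(int x, int y) {
        int temp = x;
        x = y;
        y = temp;
    }

    /** Returns the caller's x and y after the attempted swap. */
    static int[] demo() {
        int x = 1;
        int y = 2;
        swap(x, y);
        return new int[] {x, y};
    }

    public static void main(String[] args) {
        int[] r = demo();
        System.out.println(r[0] + " " + r[1]); // 1 2
    }
}
```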

The debate between explicit and automatic memory management is analogous to the debate between manual and automatic transmissions. A manual transmission offers more efficiency and precise control, but requires more attention and responsibility from the driver. An automatic transmission sacrifices a bit of efficiency to let the driver concentrate on other tasks, such as steering. In programming, the tradeoff is between efficiency on the one hand and correctness and rapid development on the other hand.

Using a Node Pool

We can gain some of the efficiency of explicit memory management without switching to another language such as C or C++. In a program that spends a lot of time allocating and freeing memory, we can allocate a bunch of objects (say, list nodes) in advance. These objects make up a node pool. We manage the node pool much like the free list described above (Figure 16-13).

Figure 16-13. A node pool. All of the nodes are created in the constructor.

    /** A pool of ListNodes. */
    public class NodePool<E> {

        /** Number of nodes in the pool. */
        public static final int POOL_SIZE = 1000;

        /** First in the linked list of free nodes. */
        private ListNode<E> front;

        /** Create the pool. */
        public NodePool() {
            for (int i = 0; i < POOL_SIZE; i++) {
                front = new ListNode<E>(null, front);
            }
        }

        /** Get a node from the pool, set its fields, and return it. */
        public ListNode<E> allocate(E item, ListNode<E> next) {
            ListNode<E> result = front;
            front = front.getNext();
            result.setItem(item);
            result.setNext(next);
            return result;
        }

        /** Return a node to the pool. */
        public void free(ListNode<E> node) {
            node.setNext(front);
            front = node;
        }

    }

The node pool improves the efficiency of our program for two reasons. First, we don't have to use Java's general-purpose automatic memory manager to keep track of our nodes. Second, since all of the nodes are allocated at the same time, they probably live near each other in the heap, which improves cache performance.
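The sketch below shows the recycling at work: a node returned to the pool is the very object handed out by the next allocation, so no new object is created. It is self-contained and therefore uses its own minimal Node and Pool classes, which are simplified stand-ins for ListNode and NodePool rather than the classes from the figure.

```java
/** Sketch: a pool hands a freed node back out instead of creating a new one. */
class PoolReuseDemo {

    /** Hypothetical minimal node with public fields, for brevity. */
    static class Node<E> {
        E item;
        Node<E> next;
    }

    /** Simplified pool: all nodes are created up front and linked together. */
    static class Pool<E> {
        private Node<E> front;

        Pool(int size) {
            for (int i = 0; i < size; i++) {
                Node<E> node = new Node<>();
                node.next = front;
                front = node;
            }
        }

        /** Get a node from the pool, set its fields, and return it. */
        Node<E> allocate(E item, Node<E> next) {
            Node<E> result = front;
            front = front.next;
            result.item = item;
            result.next = next;
            return result;
        }

        /** Return a node to the pool. */
        void free(Node<E> node) {
            node.next = front;
            front = node;
        }
    }

    /** Returns true if the node freed is the same object allocated next. */
    static boolean freedNodeIsReused() {
        Pool<String> pool = new Pool<>(3);
        Node<String> a = pool.allocate("a", null);
        pool.free(a);
        Node<String> b = pool.allocate("b", null);
        return a == b;
    }

    public static void main(String[] args) {
        System.out.println(freedNodeIsReused()); // true
    }
}
```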

How much speed do we gain from using a node pool? The answer can vary considerably, depending on the particular Java system being used. We can perform an empirical test by doing some memory-intensive operations both with and without the node pool. The methods in Figure 16-14 grow and shrink a linked stack of 1,000 nodes, repeating the experiment 10,000 times.

Figure 16-14. Testing the value of a node pool.

    /** Number of times to run the experiment. */
    public static final int RUNS = 10000;

    /** Compare memory-intensive operations with and without pool. */
    protected void test() {
        long before;
        long after;
        ListNode<E> list;
        System.out.print("With node pool: ");
        list = null;
        before = System.currentTimeMillis();
        for (int run = 0; run < RUNS; run++) {
            for (int i = 0; i < POOL_SIZE; i++) {
                list = allocate(null, list);
            }
            for (int i = 0; i < POOL_SIZE; i++) {
                ListNode<E> node = list;
                list = list.getNext();
                free(node);
            }
        }
        after = System.currentTimeMillis();
        System.out.println((after - before) + " milliseconds");
        System.out.print("Without node pool: ");
        list = null;
        before = System.currentTimeMillis();
        for (int run = 0; run < RUNS; run++) {
            for (int i = 0; i < POOL_SIZE; i++) {
                list = new ListNode<E>(null, list);
            }
            for (int i = 0; i < POOL_SIZE; i++) {
                list = list.getNext();
            }
        }
        after = System.currentTimeMillis();
        System.out.println((after - before) + " milliseconds");
    }

    /** Create a pool and test it. */
    public static void main(String[] args) {
        NodePool<Object> pool = new NodePool<Object>();
        pool.test();
    }

Results will vary from one machine to another. On the author's system, a typical run produces this result:

    With node pool: 200 milliseconds
    Without node pool: 960 milliseconds

The use of the node pool appears to speed up this program by a factor of between 4 and 5. This result should be taken with a grain of salt, because this particular program does almost nothing but allocate and free memory.

Like any other optimization, a node pool should be used sparingly. A program should first be made to run correctly. Once this is done, if there is reason to suspect that a lot of time is being spent on memory management, a node pool can offer considerable speedup.

Exercises

16.1 Do arrays live on the call stack or in the heap? Explain.

16.2 What programs have you used that were too slow, and therefore might have benefited from explicit memory management? What programs were either buggy or took too long to develop, and therefore might have benefited from automatic memory management?

16.3 What's wrong with the version of freeList() in Figure 16-15?

Figure 16-15. Broken version of freeList() for Exercise 16.3.

16.4 In line 3 of Figure 16-11, what would happen if the > were replaced with >=?

16.5 Does the use of a node pool make it possible to produce a memory leak or a dangling pointer? Explain.

16.6 What happens if we invoke allocate() on a NodePool in which all of the nodes are in use?

16.7 Many programs take some time to initialize data structures before they can accept commands from the user. Does the use of a node pool speed this up or slow it down? Explain.
