Load Balancing

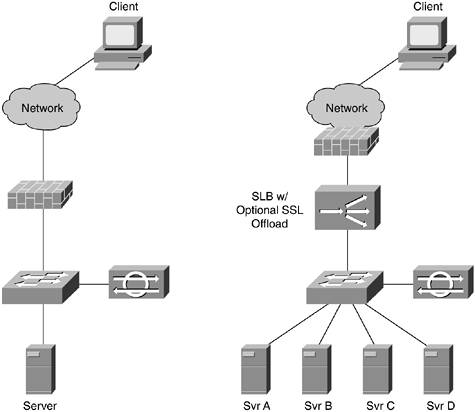

Load balancing, in the context of this section, refers to distributing load across several servers or network security devices in order to increase the throughput they can deliver. Generally, a set of servers sits behind a load-balancing device, as shown in Figure 11-1.

Figure 11-1. Basic Load-Balancing Design

Requests are sent to the virtual IP address of the load balancer, which then translates each request to the address of the physical server with the greatest current capacity.
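The selection step just described can be sketched in a few lines. This is a minimal illustration, not how any particular load balancer works: the `Server` class, its `capacity` and `active` fields, the addresses, and the "most spare connections" metric are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """A physical server behind the virtual IP (hypothetical model)."""
    address: str
    capacity: int      # assumed maximum concurrent connections
    active: int = 0    # connections currently assigned


def pick_server(pool):
    """Return the server with the most spare capacity, or None if all are full."""
    candidates = [s for s in pool if s.active < s.capacity]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.capacity - s.active)


def dispatch(pool):
    """Assign one request that arrived at the virtual IP to a physical server."""
    server = pick_server(pool)
    if server is not None:
        server.active += 1
    return server


pool = [
    Server("10.1.1.11", capacity=100, active=90),
    Server("10.1.1.12", capacity=100, active=40),
    Server("10.1.1.13", capacity=100, active=75),
]
chosen = dispatch(pool)
print(chosen.address)  # 10.1.1.12 has the most spare capacity
```

Real devices typically weigh several signals (response time, health checks, connection counts); the single metric here only shows the shape of the decision.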

It is important to differentiate between high availability (HA) and load balancing. Load balancing generally includes HA, provided the load after a single server failure doesn't affect the response time of the remaining servers. HA, though, can be done without load balancing, particularly with network devices. Hot Standby Router Protocol (HSRP) in its most basic configuration is an HA solution, not a load-balancing one.
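The condition under which load balancing also delivers HA can be checked with simple arithmetic. The sketch below assumes each server's capacity can be expressed as a single requests-per-second figure and that the worst case is losing the largest server; the function name and the numbers are hypothetical.

```python
def survives_single_failure(server_capacities, peak_load):
    """True if the pool still carries peak_load after losing its largest server."""
    if len(server_capacities) < 2:
        return False  # a single server offers no failover at all
    remaining = sum(server_capacities) - max(server_capacities)
    return remaining >= peak_load


pool = [400, 400, 400]  # requests per second per server (hypothetical)
print(survives_single_failure(pool, peak_load=700))   # True: 800 req/s remain
print(survives_single_failure(pool, peak_load=1000))  # False: 800 req/s remain
```

In the second case the pool balances load but does not provide HA: a single failure leaves the remaining servers oversubscribed.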

In terms of when to deploy, HA can be deployed out of a desire to provide continual service to clients in the event of a failure. Load balancing is generally deployed only when the capacity of one box is not sufficient to deal with the expected client load. You usually don't deploy load balancing because you want to; you deploy it because you have to.

Security Considerations

Load-balancing devices have few unique security considerations beyond those of routers or switches. Instead of making a forwarding decision based on destination address, they make a forwarding decision based on device load. Like any network device, their configuration should be hardened, and management traffic should be secured.

One area in need of special attention is content synchronization between the servers. Depending on the content that is transferred, you will likely want assurance that the source content can be distributed to each of the servers without interception or modification. This can include content moved from one server to another.

Server Load Balancing

Server load balancing (SLB) is the traditional load-balancing application. Very common in large e-commerce applications, server load balancing allows two or more devices to distribute the load delivered to a single IP address from the outside. In the past, low-tech solutions such as DNS round robin were used for this function. Today, many organizations use dedicated load-balancing hardware to determine which physical server is best able to serve the client request at the time the request is submitted.

Security Considerations

In addition to the security considerations mentioned earlier, SLB devices often take on some characteristics of a basic firewall and sometimes those of a content-filtering device. SLB devices can restrict incoming requests for the servers to a particular port and can limit the requests within that port to certain application functions. In HTTP, for instance, you can differentiate between a GET and a POST or perhaps do even more granular filtering. SYN flood protection is usually available in SLB devices, too.
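The port and method filtering described above can be pictured as a small policy function. This is only a sketch of the idea: the `Request` type, the allowed ports, and the rule that POST is accepted only under `/checkout` are hypothetical choices for illustration, not the configuration model of any real SLB product.

```python
from dataclasses import dataclass

ALLOWED_PORTS = {80, 443}             # assumed: web traffic only
ALWAYS_ALLOWED_METHODS = {"GET", "HEAD"}
POST_PATH_PREFIXES = ("/checkout",)   # hypothetical: POST accepted only here


@dataclass(frozen=True)
class Request:
    dst_port: int
    method: str
    path: str


def permit(req):
    """Return True if the request passes the port and HTTP method policy."""
    if req.dst_port not in ALLOWED_PORTS:
        return False
    method = req.method.upper()
    if method in ALWAYS_ALLOWED_METHODS:
        return True
    if method == "POST":
        # More granular filtering: POST is limited to specific paths.
        return req.path.startswith(POST_PATH_PREFIXES)
    return False


print(permit(Request(80, "GET", "/index.html")))     # True
print(permit(Request(80, "POST", "/admin/upload")))  # False
print(permit(Request(23, "GET", "/")))               # False
```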

Although some networks use SLB technology instead of a dedicated firewall (particularly for web servers), it is better to have SLB act as another layer of your security. There are two main reasons for this. First, this allows your main firewall to still act as a centralized audit point. If you had some servers protected only by the SLB device, you would need to get separate audit logs from those devices. The second reason is that an SLB device is not designed first and foremost to do security. Although it offers some unique security capabilities, they should not be relied on in isolation. Defense-in-depth still applies.

SSL Offload

Secure Sockets Layer (SSL) offload is often paired with SLB devices. In this design (Figure 11-2), SSL traffic is terminated and decrypted at the SLB device and then sent over a single SSL tunnel to the server behind the SLB. This allows a hardware device to focus on SSL decryption and leaves the servers to handle requests at the application layer.

Figure 11-2. SSL Offload

SSL offload offers some security benefit by allowing NIDS to receive cleartext copies of traffic after it has been decrypted and before it is sent over the SSL tunnel to the server. A NIDS would otherwise see only ciphertext and thus be unable to see any attacks. Care should be taken to ensure that only the NIDS is able to see the cleartext traffic, possibly by sending cleartext traffic to a dedicated interface containing only the NIDS. Otherwise, a compromise of one of the load-balanced servers could allow an attacker to sniff the cleartext traffic destined for other servers that the attacker would ordinarily be unable to see.

The importance of proper host, application, and network system security cannot be overstated. Because all traffic is decrypted and reencrypted at the SSL offload device, a compromise of that device could have catastrophic consequences for the data in its care. By using SSL offload, you are effectively introducing a deliberate security vulnerability into your network in the name of increased performance. This isn't necessarily a bad decision, but it is one that should be undertaken with extreme care.

Security Device Placement

The security design when adding SLB devices doesn't significantly change as compared to the design without SLB. Figure 11-3 shows the same basic design with and without SLB devices.

Figure 11-3. Security Before and After SLB Deployment

NOTE

In all these designs, redundant SLB devices can be used to ensure HA of the load-balancing process. Single LB devices are used in most designs in this chapter for simplicity.

In the diagram, the firewall is placed before the SLB device, and the NIDS is placed close to the servers (the same location in both designs). Note that if all the traffic to the servers is SSL, the NIDS will do no good without the SSL offload. You should instead use host IDS (HIDS). In most networks, though, SSL and cleartext traffic terminates on the same server, making NIDS useful for the cleartext traffic.

Security Device Load Balancing

Because network security functions inspect deeper into the packet than many other network devices, they can be a performance bottleneck. Often this issue doesn't manifest itself because most organizations deploy security technology only at their Internet edge, which is usually performance constrained by the upstream bandwidth to their Internet service provider (ISP).

Whether at the Internet edge or the internal network, it is possible in many cases to exceed the throughput capacity of a security device. In these cases, one of the options you have is to load balance the device to increase throughput.

When to Use

The easy answer regarding when to use security device load balancing is "after you've exhausted every other option." Although load-balancing technology is sometimes viewed as sexy among IT folks, it should always be viewed as a last resort rather than a deliberate design goal (like HA might be). The following are the options to consider before opting for security device load balancing.

Buy a Faster Box

Although seemingly straightforward, this option is often ignored by organizations that have become comfortable with a particular offering. With advances in hardware inspection for firewalling and NIDS, boxes are available today that far exceed the performance capabilities of a general-purpose PC and operating system (OS). Load-balancing devices are often expensive, and if you need HA, several devices often must be purchased (particularly for the sandwich deployment option described in the next section). Although the cost is significant in upfront capital, it is even more significant in ongoing operations. Troubleshooting networks that use security device load balancing can be very painful.

Modify the Network Design

Another option is to modify your network design to have multiple choke points (each with a lower throughput requirement). For example, if all your traffic from the Internet flows through a single firewall (VPN, e-commerce, mail, FTP, and outbound traffic), you can consider a second firewall dedicated to the VPN access control, as shown in Chapter 10, "IPsec VPN Design Considerations."

Distribute the Security Functions

Instead of redesigning the network, you can redistribute the security functions among more devices. In the single-firewall example just discussed, perhaps you can perform basic filtering on your WAN router as discussed in Chapter 6. This should stop a portion of the traffic from even reaching the firewall for inspection. In extreme cases, you can move from stateful firewalls to stateless access control lists (ACLs), many of which can be run at line rate (the full capacity of the link) in modern hardware. To compensate for this loss of state tracking, you must pay even more attention to IDS and host security controls.

NOTE

Make sure you read the fine print in any vendor's performance claims and watch out for what are commonly called "marketing numbers." Oftentimes, such testing is done in the most performance-advantageous configuration possible. Unlike more mature technologies that have adopted loose testing guidelines, the security industry is still the Wild West in terms of performance claims. Take firewall performance measurement, for example. To maximize performance, a vendor might test under the following configuration:

- No Network Address Translation (NAT)

- One ACL entry (permit all)

- No application inspection

- No logging

- 1500-byte packets

- UDP rather than TCP flows

When you get the same device into your network and add a typical corporate firewall configuration, the performance will be lower, sometimes much lower. When designing your network, think of the performance numbers advertised by the vendor as the number it was just able to squeak through the box before the chips overheated and the box was set afire and fell out of the rack. The good news is most vendors have more reasonable numbers if you ask for them. Don't always count on security trade rags or independent testers either. Oftentimes, vendors influence the test bed to show their products in the most advantageous light. This is particularly bad in vendor-sponsored performance testing by independent consultants. In these cases, the vendor paying the bill decides the exact test procedures to run and even selects the vendors against which to provide a comparison.
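One item in the list above, packet size, is easy to quantify. The sketch below estimates how many packets per second a device must process to fill a link, assuming Ethernet framing (38 bytes of per-packet overhead on the wire) and a 1 Gbps link; the link speed and the function name are assumptions for illustration.

```python
# Per-packet Ethernet overhead in bytes:
# 14 header + 4 frame check sequence + 8 preamble + 12 interframe gap.
ETHERNET_OVERHEAD = 38


def packets_per_second(link_bps, ip_packet_bytes):
    """Packets per second needed to fill the link at a given IP packet size."""
    wire_bits = (ip_packet_bytes + ETHERNET_OVERHEAD) * 8
    return link_bps // wire_bits


GIGABIT = 1_000_000_000
large = packets_per_second(GIGABIT, 1500)  # full-size packets
small = packets_per_second(GIGABIT, 46)    # minimum-size (64-byte) frames
print(large)  # 81274
print(small)  # 1488095
```

Filling the same link with minimum-size packets takes roughly 18 times as many per-packet decisions, which is why a throughput figure measured only with 1500-byte packets says little about performance under a real traffic mix.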

Deployment Options

There are two primary deployment options for security device load balancing (LB) when using LB-specific hardware: "sandwich" and "stick." LB designs that work by communicating among the devices directly, such as a round-robin configuration, are not shown here.

Sandwich

In the sandwich model, the security devices are put in between load-balancing devices, as shown in Figure 11-4.

Figure 11-4. Sandwich Security Device Load Balancing

This design is appropriate for security devices that require traffic to pass through the device (such as a firewall, proxy, or VPN gateway). To ensure HA of the load balancers and the security devices, you need a minimum of four load balancers (two on each side) plus the associated Layer 2 (L2) switching infrastructure. Figure 11-5 compares a traditional HA firewall to an HA/LB firewall.

Figure 11-5. HA Firewall versus HA/LB Firewall

Neither design shows any L2 switching, which might be necessary. Even with this simple representation, however, the HA/LB solution is anything but simple. The number of devices involved is enormous. For a four-firewall LB design, as you can see in Figure 11-5, you need as many LB devices as you have firewalls.

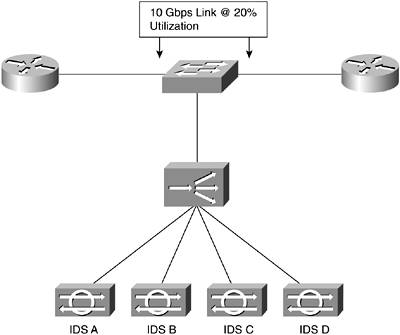

Stick

The stick model is most appropriate for security technology that doesn't directly interrupt the flow of traffic. Although you can use it in environments where traffic is inline, it halves the throughput of the LB device because it must see each flow twice. NIDS is the most common deployment choice. The design is very similar to the SLB design and operates in the same way. Say, for example, you have a NIDS solution that can comfortably inspect 500 Mbps with your traffic mix. In your network, however, you have a 10 Gbps link that is at 20 percent utilization. The LB design to handle this traffic load is shown in Figure 11-6.

Figure 11-6. Stick LB NIDS Design
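The sizing behind this example is a short calculation: a 10 Gbps link at 20 percent utilization carries 2 Gbps, and at 500 Mbps per sensor that works out to four sensors. The helper below is a sketch with a hypothetical name; it uses integer arithmetic and rounds up, and it assumes no headroom for growth or traffic bursts.

```python
def sensors_needed(link_mbps, utilization_pct, sensor_mbps):
    """Smallest number of sensors whose combined capacity covers the offered load."""
    # offered load (Mbps) = link_mbps * utilization_pct / 100
    numerator = link_mbps * utilization_pct
    denominator = 100 * sensor_mbps
    return -(-numerator // denominator)  # ceiling division on integers


print(sensors_needed(link_mbps=10_000, utilization_pct=20, sensor_mbps=500))  # 4
```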

It should be rare that you employ such a design, primarily because by deploying your NIDS as close to your critical networks as possible, you can lessen the performance requirements. NIDS in a load-balanced design, as shown in Figure 11-6, has a reduced ability to stop attacks because TCP resets sometimes can't be sent back through the load-balancing device. In addition, management becomes tricky because correlating a single attack might require carefully examining the alarm data from each of the load-balanced sensors.

NOTE

LB NIDS desensitizes the NIDS environment to some attacks. For example, depending on the LB algorithm chosen, you can miss port scans. Assume that you have set up flow-based LB to your servers. With flow-based LB, flows are sent to specific servers based on a specific source IP and L4 port and destination IP and L4 port. Also assume that you have four NIDS in an LB environment protecting (among others) eight hosts the attacker has targeted. The attacker does a port scan on port 80 to the hosts one host at a time. It is possible that each NIDS will see only two TCP port 80 requests. Most port-scanning signatures are not tuned to fire on two consecutive port requests from the same source IP. (Your alarm console would constantly be lighting up.) But they might be tuned to fire on eight consecutive port 80 requests from the same source IP. Keep this in mind when tuning NIDS operating in an LB environment.
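The scenario in this note can be simulated with a toy model. Everything here is an assumption made for illustration: the flow hash (a simple sum of the 4-tuple fields), the fixed attacker source port, the addresses, and the threshold of eight probes. Real load balancers use different hashing, so the exact split will vary.

```python
from collections import Counter
from ipaddress import IPv4Address

SENSORS = 4
SCAN_THRESHOLD = 8  # hypothetical signature: eight port 80 probes from one source


def sensor_for_flow(src_ip, src_port, dst_ip, dst_port, sensors=SENSORS):
    """Toy flow hash: add up the 4-tuple fields and take the remainder."""
    key = int(IPv4Address(src_ip)) + src_port + int(IPv4Address(dst_ip)) + dst_port
    return key % sensors


attacker = "192.0.2.10"
targets = [f"10.0.0.{n}" for n in range(1, 9)]  # the eight targeted hosts

# Count how many of the attacker's probes each sensor observes.
per_sensor = Counter(
    sensor_for_flow(attacker, 40000, target, 80) for target in targets
)
alarms = [sensor for sensor, seen in per_sensor.items() if seen >= SCAN_THRESHOLD]

print(sorted(per_sensor.values()))  # [2, 2, 2, 2]
print(alarms)                       # []
```

Each sensor sees two probes and none reaches the threshold, even though the eight probes together would have triggered the signature on a single sensor.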
