Understanding ParallelKnoppix

ParallelKnoppix is a live CD designed to make setting up ad-hoc clusters quick and easy. It's ideal for getting started with parallel computing, because it only takes a few machines. It is built around MPI (Message Passing Interface), a message-passing standard that lets applications specifically designed for parallel computation run on several different processors or computers.

| Note | ParallelKnoppix was developed by Michael Creel at the Universitat Autonoma de Barcelona. Its homepage (http://pareto.uab.es/mcreel/ParallelKnoppix/) has links to ISO images you can download, and much more useful information. |

Download an ISO image and burn it to a CD before continuing.

Setting Up ParallelKnoppix

ParallelKnoppix has pretty basic requirements:

- A master node, with a CD-ROM drive, keyboard, mouse, network card, and a hard disk partition you can write to. This machine can be a stock Windows installation using FAT32, or a Linux installation using ext3, ReiserFS, or any other filesystem. It just needs to be writable from Knoppix.

- From 1 to 200 slave nodes, with kernel-supported network cards and the capability to PXE boot. (PXE booting enables a computer to boot off the network instead of a hard drive or CD-ROM. You can find out more at http://en.wikipedia.org/wiki/Pxe.) Ideally, these should all take the same Knoppix boot-time arguments, because by default they are booted homogeneously. You also need to know which kernel driver supports their network cards.

- A reasonably fast network.

- A single copy of the ParallelKnoppix CD, which enables you to create a cluster of 2 to 201 nodes (the master plus up to 200 slaves).

One thing to note right up front: ParallelKnoppix clusters are, by design, very insecure. You really don't want to run one on an open network such as a campus computer lab. In addition, they depend on being the only DHCP/netboot server on the network, so pull the uplink to the outside network before you begin (just remember to plug it back in after you're finished with your crunching!). An astute reader might notice that this leaves no way to get your programs and data into the cluster; you'll deal with that shortly.

After you have all your equipment together, you're about 10 minutes from having your very own computer cluster. First, boot the master node with the ParallelKnoppix CD, just like you would if you were running Knoppix on the machine. Leave all the slaves off — they need to boot up after the master is configured. When the master node is up, you'll be at a normal KDE desktop, with a copy of the ParallelKnoppix home page on the screen. From the KDE menu, select ParallelKnoppix → Setup ParallelKnoppix. A series of windows appear to guide you through configuring your cluster.

This is where you'll need a list of required network drivers (I just accept the default list, and haven't run into any problems). Given how fast this process is, it's probably easier to just run through once to find any kinks, and then resolve them in the second pass. It's worth noting that your network will be configured as 192.168.0.0/255.255.255.0: If this conflicts with your existing network setup, you won't be able to use the network, which means you won't be able to set up a cluster. Unfortunately, there's no way to work around this, short of remastering the ParallelKnoppix distribution CD.

After the network is configured, you need to decide which existing partition on the master node to use. The setup program creates a directory named parallel_knoppix_working in the root of the partition unless the directory already exists. If it does exist, ParallelKnoppix just leaves it in place, creating a great place to stash things you want to keep across sessions. This directory is NFS-exported with relatively open permissions.

| Caution | Did I mention that this setup is insecure by design and that you want to be on your own private network segment? It really is, and you really do. |

Further into the setup, a dialog box pops up informing you that "Now would be a good time to boot the slave nodes." Go ahead and do so. If you have machines in your cluster that require their own boot arguments, make sure they have keyboards and a monitor. Boot all your homogeneous machines, and then boot these special machines one by one. If you don't have monitors on all your nodes, be sure to wait a few minutes for the boot process to complete. After they're all booted, click OK in the dialog box. The setup program then SSHes into each slave machine as root to mount the NFS share. If you find that one of your machines isn't quite booted yet, you'll have to start the setup process over or try to limp along without that machine.

After all your slave nodes are booted, you return to the KDE desktop on the master node. From here, you can run one or two of the included demos to get a feel for things and then settle into a semi-permanent cluster.

Exploring ParallelKnoppix Demo Applications

ParallelKnoppix ships with a few good demo programs on the CD. These programs are a fine way to test a new cluster to make sure it's working. They're also a good learning experience: You can use them as bases for your own clustering applications.

Using Octave for Kernel Regression

GNU Octave is a high-level language and environment for numerical computation, commonly used to solve systems of equations. One distinctive feature of ParallelKnoppix is its MPI-enabled version of Octave, which makes it a great environment for numerical analysis when your processing lends itself to parallelization. As a general rule of thumb, if your processing consists of multiple large, mostly independent steps, you can parallelize it. It might take some creativity, but it can (probably) be done.

Take a look at a kernel regression implemented in GNU Octave. If you're not a mathematician, this probably isn't the kernel you're thinking of: it has nothing to do with the Linux kernel. In statistics, a kernel regression fits a smooth curve through noisy data by taking weighted local averages; in other words, it's a fancy way of saying "curve fitting." If this is new to you, don't worry: It's still a good example of running an MPI program under ParallelKnoppix, even if the problem itself is mainly of interest to math nerds.

First, get things into shared space. This is easy: On the KDE desktop is a link named parallel_knoppix_working that points at the working space. The example programs are also on the desktop, in the ParallelKnoppix directory. (That directory's icon isn't a folder, but a white square.) Open ParallelKnoppix, go into the Examples directory, and copy the Octave folder into the parallel_knoppix_working folder. Then open a shell window and cd into the copied Octave/kernel_regression/ directory. From there, run octave to start the Octave interpreter. When you reach the Octave prompt, type kernel_example1 and press Enter.

After a little while, you get a graph of the data along with its fit. Okay, it isn't the most visually stunning example, but it is a good test to ensure that your cluster is functional.

Calculating Pi

In the same vein, the pi sample program is instructive if you have an MPI application you'd like to compile on ParallelKnoppix: it is a simple demonstration of building an MPI program for use on a ParallelKnoppix cluster.

The pi example uses a neat technique for estimating pi: instead of evaluating a formula for pi directly, it simulates the "dartboard" technique, which is elegant in its own right. Imagine you have a square dartboard. Now draw a circle centered on one of the corners, with a radius equal to the side length of the square. The part of the circle inside the square is a quarter-circle that covers the majority (about 79 percent) of the square's surface. Next, you throw darts at random at this board, keeping track of how many land inside the quarter-circle and how many land outside. Comparing the areas shows that there's a $(\pi r^2/4)/r^2 = \pi/4$ probability of a dart landing inside the quarter-circle. Just multiply the fraction of darts landing inside the quarter-circle by four, and you have an estimate of π! In a moment, you'll see just how accurate the estimate is.

As with the Octave example, begin by copying the pi directory into the shared working space. The pi folder is found in ParallelKnoppix/Examples/C/pi/; just copy that directory into the working folder. Switch to a console window, cd into the newly copied directory, and run mpiCC -o pi pi_send.c dboard.c. This command should create a pi binary.

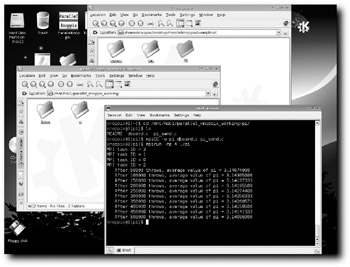

Run the binary with mpirun -np 4 ./pi, a command that handles all the MPI-related voodoo that gets nodes to start running the application. All of these steps are shown in Figure 7-1.

Figure 7-1: A sample run of pi.

In this example, the working partition was /dev/hdc1, which Knoppix mounts at /mnt/hdc1, so that path appears in the prompt. Replace it with the appropriate partition on your setup.

Getting Data into a ParallelKnoppix Cluster

You have source code and real datasets to move in and crunch, which is why you're interested in clustering, right? With the master node booted off a CD, and without a network, you face a problem: getting your data into the cluster. There are three basic methods, each with its own benefits and drawbacks.

First, Knoppix has excellent removable storage support: You can just schlep your material in on a USB or IEEE1394 drive. Plug the drive in, it is detected and mounted, and you just need to manually move things off the disk before you start (don't forget to move your results back onto the disk!). The removable storage method is a very simple, low-overhead way to get things into and out of your cluster. If your data is large, or you're stuck with USB 1.x devices, however, it might be too slow.

The second technique is the most obvious: Slap the data on a drive in the master node before you get going. This allows really fast access and makes it less likely that you'll forget to save your results after you finish. Unfortunately, it also requires opening up the master node, or at least planning ahead so that your data is already on one of its disks. If you are using a computer lab in the middle of the night, you might not have time for all that planning. But if you are in a lab, you thoughtfully removed the uplink, right? That leaves a free port for plugging into the cluster network, which leads to the third option.

The last technique is a nice compromise between the first two. Because ParallelKnoppix supports at most 201 nodes but practically requires its own /24 netblock, you have more than 50 free IP addresses to play with. Just bring in a fileserver laptop or desktop containing your data, and use CIFS/SMB to access that data from the master node. This enables you to do all your staging up front without worrying about the speed of the USB ports on your master node. As long as the network is reasonably fast, you can copy your working set to the master node in a matter of minutes, crunch your data, and then offload your results in a few minutes more. If you need Internet access, adding a second network card to the fileserver enables you to set it up as a firewall of sorts. Not only does this protect your cluster from the outside network, it also protects other machines on that network from your master node. (I use this technique, although I typically use my laptop's wireless card as the upstream link.)
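The arithmetic behind "more than 50" is straightforward. A /24 netblock holds $2^{32-24} = 256$ addresses, two of which (the network and broadcast addresses) can't be assigned to hosts, and a full cluster uses 201 of the rest (one master plus 200 slaves):

$$
2^{32-24} - 2 = 254 \text{ usable addresses}, \qquad 254 - 201 = 53 \text{ left over}
$$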

Creating a Semi-Permanent Cluster for Using ParallelKnoppix

The next challenge you face with ParallelKnoppix is settling in. If you're doing any long-term projects, you will invariably want to use the cluster for more than just one or two runs. Instead of compiling your application every time, it would be nice to just fire things up, load in your data, and run.

One option is the new UnionFS support, which enables you to put new data onto the Knoppix CD, assuming that it's a CD-RW and that your master node has an appropriate CD-RW drive. This is a good first attempt, but it has one drawback: your additions are tied to that particular CD image. It would be better if your applications worked with any new version of the ParallelKnoppix CD, so that you could upgrade your cluster just by burning a new CD image. That requires some storage that won't change out from under you.

There are two obvious candidates: the portable storage that holds your data, and the hard disk partition you're using for your working directory. If your working directory won't disappear on you, it's a good first choice. In addition, because that path is the only shared space on the cluster, it's the best place to put any libraries, shared data, and so on that your software requires. Even if you need to use external storage to guarantee persistence, you will probably be putting your "constant stuff" into your working directory anyway.

After your ParallelKnoppix habits have settled in a bit, so that you use the same partition each time and know which applications you need, you can start firming up your installation to match. Software can be downloaded and installed in the usual ways. For instance, the following example builds a new MPICH installation from Argonne National Laboratory (ANL). You don't actually need the new MPICH, but it includes a really neat demo program, and the process is typical of building and installing third-party software. Here's what to do:

- Go to the MPICH home page at http://www-unix.mcs.anl.gov/mpi/mpich/.

- Go to the download page and download the source code (mpich.tar.gz) to a location on your hard disk.

- Untar the file (tar xzf mpich.tar.gz), cd into the directory it creates, and go through a standard build process, but with the working directory as the installation prefix. First, to set things up (replace /mnt/hdc4 with the mount point of your own working partition):

  ./configure --prefix=/mnt/hdc4/parallel_knoppix_working/

  Then, to build it:

  make

  Finally, to install the new MPICH:

  make install

After all that, you can go on to build a very cool parallel-processing demo. Figure 7-2 shows the payoff: building and running pmandel, a parallel-processing Mandelbrot set viewer. There's hardly a more appropriate problem for a cluster. Because a fractal is computed pixel by pixel, you can just break the field of view into a bunch of independent rectangular regions. Each of those regions is sent to a cluster node, which computes it and sends the resulting values back to the master, which draws them in. If your cluster is slow enough, you can watch the output window split into white rectangles, which are then filled in one by one. To see just how much your cluster helps, try mpirun -np 1 ./pmandel to run a single process instead of the usual four. It's a stunning presentation of the power of parallel processing, perfect for demonstrations (when you're applying for funds, for example).

Figure 7-2: Beautiful results from a pmandel run

Now you should be able to use your cluster however you want. Even if you're not using ParallelKnoppix, the experience gained here gives you a good idea of how to proceed with another clustering setup.
