Networking and Firewalls


For quite some time, it was common for companies to think that once they deployed a firewall, they were secure. However, firewalls are just one component in an enterprise security strategy. They are generally good at what they do (filtering traffic), but they cannot do everything. The nature of perimeter security has also changed; few companies can still get by with permitting only outbound traffic. Many enterprises now deal with much more complex environments that include business partner connections, Virtual Private Networks (VPNs), and complicated e-commerce infrastructures. This complexity has driven huge increases in firewall functionality. Most firewalls now support multiple network interfaces and can control traffic between them, support VPNs, and enable secure use of complicated application protocols such as H.323 for videoconferencing. The risk, however, is that as more and more functionality is added to the firewall, holes might arise in these features, compromising integrity and security. Another risk is that these features will exact a performance penalty, reducing the firewall's capacity for its core task of traffic filtering.

So the message is this: Limit your firewall to its core function of traffic filtering wherever possible, and shift the other functions to other systems, where you can better manage both their security risk and their load.

Firewall systems have certainly evolved over the years. Originally, firewalls were hand-built systems with two network interfaces that forwarded traffic between them. However, this was an area for experts only, requiring significant programming skills and system administration talent. Recognizing a need in this area, Marcus Ranum (working for TIS at the time) wrote the first somewhat commercial firewall in the early 1990s. It was called the Firewall Toolkit, or fwtk for short. It was an application proxy design (definitions are given for firewall types in the following section) that intermediated network connections from users to servers. The goal was to simplify development and deployment of firewalls and minimize the amount of custom firewall building that would otherwise be necessary. The now familiar Gauntlet firewall product evolved from the original fwtk, and TIS was later acquired by Network Associates, Inc. Other vendors got into the firewall market, including Check Point, Secure Computing, Symantec, and of course, Cisco.

RBC Capital Markets estimated in a 2002 study that in 2000 the firewall market globally represented US$736 million, with an annual growth rate of 16 percent over the following five years. This growth suggests that not everyone has deployed a firewall yet, that more companies are deploying them internally, and that there is ongoing replacement activity.

Next, let's look at the types of firewalls and compare their functionalities.

Firewall Interfaces: Inside, Outside, and DMZ

In its most basic form, a firewall has just two network interfaces: inside and outside. These labels refer to the level of trust in the attached network, where the outside interface is connected to the untrusted network (often the Internet) and the inside interface is connected to the trusted network. In an internal deployment, the interface referred to as outside may be connected to the company backbone, which is probably not as untrusted as the Internet but just the same is trusted somewhat less than the inside. Recall the previous example of a firewall deployed to protect a payroll department.

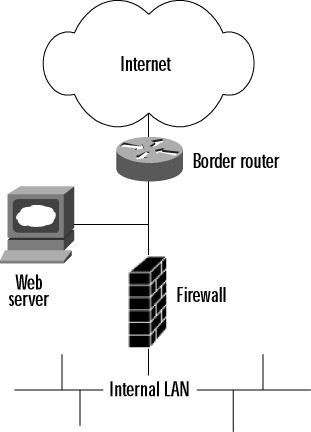

As a company's Internet business needs become more complex, the limitations of having only two interfaces become apparent. For example, where would you put a Web server for your customers? If you place it on the outside of the firewall, as in Figure 2.3, the Web server is fully exposed to attacks, with only a screening router for minimal protection. You must rely on the security of the host system in this instance.

Figure 2.3: A Web Server Located Outside the Firewall

The other possibility in the two-interface firewall scenario is to put the Web server inside the firewall, on an internal segment (see Figure 2.4). The firewall would be configured to allow Web traffic on port 80, and maybe 443 for Secure Sockets Layer (SSL), through to the IP address of the Web server. This prevents any direct probing of your internal network by an attacker, but what if he or she is able to compromise your Web server through port 80 and gain remote superuser access? Then he or she is free to launch attacks from the Web server to anywhere else in your internal network, with no restrictions.

Figure 2.4: A Web Server Located Inside the Firewall

The answer to these problems is to have support for multiple interfaces on your firewall, as most commercial systems now do. This solution allows for establishment of intermediate zones of trust that are neither inside nor outside. These are referred to as DMZs (from the military term demilitarized zone). A DMZ network is protected by the firewall to the same extent as the internal network but is separated so that access from the DMZ to the internal network is filtered as well. Figure 2.5 shows this layout.

Figure 2.5: A DMZ Network

Another design that is sometimes deployed uses two firewalls: an outer one and an inner one, with the DMZ lying between them (see Figure 2.6). Sometimes firewalls from two different vendors are used in this design, with the belief that a security hole in one firewall would be blocked by the other firewall. However, evidence shows that nearly all firewall breaches come from misconfiguration, not from errors in the firewall code itself. Thus, such a design only increases expense and management overhead, without providing much additional security, if any.

Figure 2.6: A Two-Firewall Architecture

Some sites have even implemented multiple DMZs, each with a different business purpose and corresponding level of trust. For example, one DMZ segment could contain only servers for public access, whereas another could host servers just for business partners or customers. This approach enables a more granular level of control and simplifies administration.

In a more complex e-commerce environment, the Web server might require access to customer data from a backend database server on the internal LAN. In this case, the firewall would be configured to allow Hypertext Transfer Protocol (HTTP) connections from the outside to the Web server and then specific connections to the appropriate IP addresses and ports as needed from the Web server to the inside database server.
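The rule set described above can be sketched as a small default-deny filter. This is only an illustration, not how any particular firewall product is configured: the addresses (192.0.2.10 for the DMZ Web server, 192.168.0.50 for the inside database server), the database port 1433, and the names `Rule`, `RULES`, and `is_allowed` are all assumptions made for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    src: str   # permitted source network, CIDR notation
    dst: str   # permitted destination network, CIDR notation
    port: int  # permitted destination TCP port


# Hypothetical addresses: 192.0.2.10 is the DMZ Web server,
# 192.168.0.50 is the inside database server (port 1433 is assumed).
RULES = [
    Rule("0.0.0.0/0", "192.0.2.10/32", 80),         # outside -> Web server, HTTP
    Rule("192.0.2.10/32", "192.168.0.50/32", 1433),  # Web server -> database only
]


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Permit a connection only if some rule matches; deny everything else."""
    s, d = ip_address(src), ip_address(dst)
    return any(
        s in ip_network(r.src) and d in ip_network(r.dst) and port == r.port
        for r in RULES
    )
```

With these rules, an outside host can reach the Web server on port 80 but cannot reach the database directly, and a compromised Web server can open connections only to the one database address and port that were explicitly allowed.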

Firewall Policies

As part of your security assessment process, you should have a clear idea of the various business reasons for the different communications allowed through your firewall. Each protocol carries with it certain risks, some far more than others. These risks must be balanced with business benefits. For example, one person needing X Window System access (a notoriously difficult protocol to secure properly) through the firewall for a university class she is taking is unlikely to justify the risk. On the other hand, a drop-box FTP server for sharing files with customers might well be justified by the business need. It often happens that the firewall rule base grows organically over time and reaches a point where the administrator no longer fully understands the reasons for everything in there. For that reason, it is essential that the firewall policy be well documented, with the business justification for each rule clearly articulated in this documentation. Changes to the firewall policy should be made sparingly and cautiously, only with management approval, and through standard system maintenance and change control processes.

Address Translation

RFC 1918, "Address Allocation for Private Internets," specifies certain non-registered IP address ranges that are to be used only on private networks and are not to be routed across the Internet. The RFC uses the term ambiguous to refer to these private addresses, meaning that they are not globally unique. The reserved ranges are:

- 10.0.0.0 - 10.255.255.255 (10/8 prefix)
- 172.16.0.0 - 172.31.255.255 (172.16/12 prefix)
- 192.168.0.0 - 192.168.255.255 (192.168/16 prefix)
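As a quick illustration, the three reserved ranges can be checked with Python's standard `ipaddress` module. The names `RFC1918_NETWORKS` and `is_rfc1918` are chosen for this sketch only.

```python
from ipaddress import ip_address, ip_network

# The three private ranges reserved by RFC 1918.
RFC1918_NETWORKS = [
    ip_network("10.0.0.0/8"),
    ip_network("172.16.0.0/12"),
    ip_network("192.168.0.0/16"),
]


def is_rfc1918(addr: str) -> bool:
    """Return True if addr falls inside one of the RFC 1918 private ranges."""
    ip = ip_address(addr)
    return any(ip in net for net in RFC1918_NETWORKS)
```

For example, `is_rfc1918("172.31.255.255")` is true because that address is the last one in the 172.16/12 range, whereas `is_rfc1918("172.32.0.1")` is false.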

The primary motivation for setting aside these private address ranges was the fear in 1996 that the 32-bit address space of IP version 4 was becoming rapidly depleted due to inefficient allocation. Organizations that had at most a few thousand hosts, most of which did not have to be accessible from the Internet, had been allocated huge blocks of IP addresses that had gone mostly unused. By renumbering their private networks with these reserved address ranges, companies could potentially return their allocated public blocks for use elsewhere, thus extending the useful life of IPv4.

The sharp reader, however, will ask: If these addresses are not routable on the Internet, how does a host on a private network access the Web? The source IP of such a connection would be a private address, and the user's connection attempt would just be dropped before it got very far. This is where Network Address Translation (NAT), defined in RFC 1631, comes into play. Most organizations connected to the Internet use NAT to hide their internal addresses from the global Internet. This serves as a basic security measure that can make it a bit more difficult for an external attacker to map out the internal network. NAT is typically performed on the Internet firewall and takes one of two forms, static or dynamic. When NAT is performed, the firewall rewrites the source and/or the destination addresses in the IP header, replacing them with translated addresses. This process is configurable.

In the context of address translation, inside refers to the internal, private network. Outside is the greater network to which the private network connects (typically the Internet). Within the inside address space, addresses are referred to as inside local (typically RFC 1918 ranges) and are translated to inside global addresses that are visible on the outside. Global addresses are registered and assigned in blocks by an Internet Service Provider (ISP). The same distinction applies to outside addresses translated for use on the inside: local addresses are part of the private address pool, and global addresses are registered. Outside local addresses, as the name might imply, are the reverse of inside global addresses; they are addresses of outside hosts that are translated for access internally. Outside global addresses are owned by and assigned to hosts on the external network.

To keep these terms straight, just keep in mind the direction in which the traffic is going—in other words, from where it is initiated. This direction determines which translation will be applied.

Static Translation

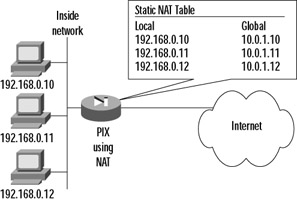

In static NAT, a permanent one-to-one mapping is established between inside local and inside global addresses. This method is useful when you have a small number of inside hosts that require access to the Internet and have adequate globally unique addresses to translate to. When a NAT router or firewall receives a packet from an inside host, it looks to see if there is a matching source address entry in its static NAT table. If there is, it replaces the local source address with a global source address and forwards the packet. Replies from the outside destination host are simply translated in reverse and routed onto the inside network. Static translation is also useful for outside communication initiated to an inside host. In this situation, the destination (not the source) address is translated. Figure 2.7 shows an example of static NAT. Each inside local address (192.168.0.10, 192.168.0.11, and 192.168.0.12) has a matching inside global address (10.0.1.10, 10.0.1.11, and 10.0.1.12, respectively). The global addresses shown are for example purposes only; in practice they would be registered addresses.

Figure 2.7: Static Address Translation
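The static lookup described above can be sketched in a few lines, using the example addresses from Figure 2.7. Packets are reduced to a (source, destination) pair, and the function names are invented for the illustration. Forwarding a packet unchanged when no table entry matches is an assumption of this sketch; the text does not say what a real device does in that case.

```python
# Permanent one-to-one mapping: inside local -> inside global (from Figure 2.7).
STATIC_NAT = {
    "192.168.0.10": "10.0.1.10",
    "192.168.0.11": "10.0.1.11",
    "192.168.0.12": "10.0.1.12",
}
# Reverse table used for replies and for connections initiated from outside.
REVERSE_NAT = {glob: local for local, glob in STATIC_NAT.items()}


def translate_outbound(src: str, dst: str) -> tuple:
    """Inside -> outside: rewrite the source address if a static entry exists."""
    return STATIC_NAT.get(src, src), dst


def translate_inbound(src: str, dst: str) -> tuple:
    """Outside -> inside: rewrite the destination address back to the local one."""
    return src, REVERSE_NAT.get(dst, dst)
```

Because the mapping never changes, an outside host can always reach 192.168.0.11 by addressing 10.0.1.11, which is what makes static translation suitable for inbound connections.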

Dynamic Translation

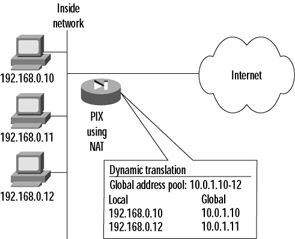

When dynamic NAT is set up, a pool of inside global addresses is defined for use in outbound translation. When the NAT router or firewall receives a packet from an inside host and dynamic NAT is configured, it selects the next available address from the global address pool that was set up and replaces the source address in the IP header. Dynamic NAT differs from static NAT because address mappings can change for each new conversation that is set up between two given endpoints. Figure 2.8 shows how dynamic translation might work. The global address pool (for example purposes only) is 10.0.1.10 through 10.0.1.12, using a 24-bit subnet mask (255.255.255.0). The local address 192.168.0.10 is mapped directly to the first address in the global pool (10.0.1.10). The next system needing access (local address 192.168.0.12 in this example) is mapped to the next available global address of 10.0.1.11. The local host 192.168.0.11 never initiated a connection to the Internet, and therefore a dynamic translation entry was never created for it.

Figure 2.8: Dynamic Address Translation
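A minimal sketch of the pool allocation in Figure 2.8 follows. The class name `DynamicNat` is invented for the example, and details that real devices handle, such as expiring idle translations and returning addresses to the pool, are deliberately left out.

```python
class DynamicNat:
    """Hands out inside global addresses from a pool on first use."""

    def __init__(self, pool):
        self.free = list(pool)  # unassigned global addresses, in pool order
        self.table = {}         # inside local -> inside global

    def translate(self, local: str) -> str:
        """Return the global address for a local host, allocating if needed."""
        if local not in self.table:
            if not self.free:
                raise RuntimeError("global address pool exhausted")
            self.table[local] = self.free.pop(0)  # next available address
        return self.table[local]


nat = DynamicNat(["10.0.1.10", "10.0.1.11", "10.0.1.12"])
first = nat.translate("192.168.0.10")   # first host to connect
second = nat.translate("192.168.0.12")  # second host to connect
```

Here `first` receives 10.0.1.10 and `second` receives 10.0.1.11, matching the figure, while 192.168.0.11 has no entry in `nat.table` because it never initiated a connection.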

Port Address Translation

What happens when there are more internal hosts initiating sessions than there are global addresses in the pool? This situation is handled by overloading, a configurable parameter in NAT that is also referred to as Port Address Translation, or PAT. With overloading, multiple inside hosts can be assigned the same global source address. The NAT/PAT box must have a way to keep track of which local address to send replies back to. This is done by using unique source port numbers as the tracking mechanism, which may involve rewriting the source port in the packet header. You should recall that TCP/UDP uses 16 bits to encode port numbers, which allows for 65,536 different services or sources to be identified. When performing translation, PAT tries to use the original source port number if it is not already used. If it is, the next available port number from the appropriate group is used. Once the available port numbers are exhausted, the process starts again using the next available IP address from the pool.
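The port-tracking idea can be sketched as follows. This is a simplification under stated assumptions: the class name `Pat` is invented, a single configurable port range stands in for the port groups mentioned above, and "next available" is taken to mean the lowest free port in that range. A 16-bit port field gives 2^16 = 65,536 possible values, which is why the default range ends at 65535.

```python
from itertools import chain


class Pat:
    """Maps (local address, local port) pairs onto shared global addresses."""

    def __init__(self, pool, ports=range(1024, 65536)):
        self.pool = list(pool)  # global addresses, tried in order
        self.ports = ports      # candidate source ports (assumed single group)
        self.out = {}           # (local_ip, local_port) -> (global_ip, global_port)
        self.back = {}          # (global_ip, global_port) -> (local_ip, local_port)

    def translate(self, local_ip: str, local_port: int) -> tuple:
        key = (local_ip, local_port)
        if key in self.out:
            return self.out[key]
        for global_ip in self.pool:
            # Try the original source port first, then the rest of the range.
            for port in chain([local_port], self.ports):
                if (global_ip, port) not in self.back:
                    self.out[key] = (global_ip, port)
                    self.back[(global_ip, port)] = key
                    return global_ip, port
        raise RuntimeError("no free address/port pair left")

    def reverse(self, global_ip: str, global_port: int):
        """Find which inside host a reply belongs to (None if unknown)."""
        return self.back.get((global_ip, global_port))
```

If two inside hosts both use source port 5000, the first keeps port 5000 on the shared global address and the second is given a different port on that same address; the reverse table is what lets replies find their way back to the right host.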

Virtual Private Networking

The concept of VPN developed as a solution to the high cost of dedicated lines between sites that had to exchange sensitive information. As the name indicates, it is not quite private networking, but "virtually private." This privacy of communication over a public network such as the Internet is typically achieved using encryption technology and usually addresses the issues of confidentiality, integrity, and authentication.

In the past, organizations that had to enable data communication between multiple sites used a variety of pricey WAN technologies such as point-to-point leased lines, Frame Relay, X.25, and Integrated Services Digital Network (ISDN). These were especially expensive for companies that had international locations. However, whether circuit-switched or packet-switched, these technologies carried a decent inherent measure of security. A hacker would typically need to get access to the underlying telecom infrastructure to be able to snoop on communications. This was, and still is, a nontrivial task, since carriers have typically done a good job on physical security. Even so, organizations such as banks that had extreme requirements for WAN security would deploy link encryption devices to scramble all data traveling across these connections.

Another benefit of dedicated links is that they provide a solid baseline of bandwidth that you can count on. Applications that had critical network throughput requirements would drive the specification of the size of WAN pipe that was needed to support them. VPNs experienced slow initial adoption due to the lack of throughput and reliability guarantees on the Internet as well as the complexity of configuration and management.

Now that the Internet has proven its reliability for critical tasks and many of the management hurdles have been overcome, VPN adopters are focusing their attention on issues of interoperability and security. The interoperability question has mostly been answered as VPN vendors are implementing industry-standard protocols such as IPsec for their products. The IPsec standards provide for confidentiality, integrity, and optionally, authentication.

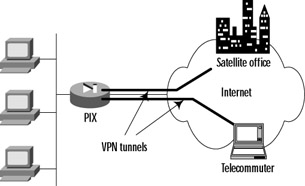

Because of these improvements, organizations are now able to deploy VPNs in a rather straightforward manner, enabling secure access to the enterprise network for remote offices and/or telecommuters. Figure 2.9 shows the two main reasons for setting up VPNs. The first is to provide site-to-site connectivity to remote offices. The second is to serve telecommuters, adding flexibility by enabling enterprise access not only via dial-up to any ISP but also through a broadband connection from a home or hotel, for example. VPNs are used for many other reasons nowadays, including setting up connectivity to customers, vendors, and partners.

Figure 2.9: VPN Deployment

Many organizations have gone to the trouble of setting up VPN links for their remote users but have not taken the extra step of validating or improving the security of the computers that these workers are using to access the VPN. The most secure VPN tunnel offers no protection if the user's PC has been compromised by a Trojan horse program that allows a hacker to ride through the VPN tunnel right alongside legitimate, authorized traffic.

The solution is to deploy cost-effective firewall and intrusion detection software or hardware for each client that will be accessing the VPN, as well as continuous monitoring of the datastream coming out of the tunnel. Combined with real-time antivirus scanning and regular security scans, this solution helps ensure that the VPN does not become an avenue for attack into the enterprise.
