Chapter 8. Abuse Cases


Software development is all about making software do something. People who build software tend to describe software requirements in terms of what a system will do when everything goes right: when users are cooperative and helpful, when environments are pristine and friendly, and when code is defect free. The focus is on functionality (in a more perfect world). As a result, when software vendors sell products, they talk about what their products do to make customers' lives easier: improving business processes or doing something else positive.

Following the trend of describing the positive, most systems for designing software also tend to describe features and functions. UML, use cases, and other modeling and design tools allow software people to formalize what the software will do. This typically results in a description of a system's normative behavior, predicated on assumptions of correct usage. In less fancy language, a completely functional view of a system is usually built on the assumption that the system won't be intentionally abused. But what if it is? By now you should know that if your software is going to be used, it's going to be abused. You can take that to the bank.

Consider a payroll system that allows a human resources department to control salaries and benefits. A use case might say, "The system allows users in the HR management group to view and modify salaries of all employees." It might even go so far as to say, "The system will only allow a basic user to view his or her own salary." These are direct statements of what the system will do.

Savvy software practitioners are beginning to think beyond features, touching on emergent properties of software systems such as reliability, security, and performance. This is mostly because more experienced software consumers are beginning to say, "We want the software to be secure" or "We want the software to be reliable."
In some cases, these kinds of wants are being formally and legally applied in service-level agreements (SLAs) and acceptance criteria regarding various system properties.[2] (See the box Holding Software Vendors Accountable for an explanation of SLAs and software security.)

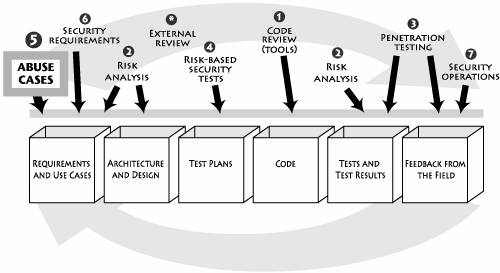

The problem is that security, reliability, and other software -ilities are complicated. In order to create secure and reliable software, abnormal behavior must somehow be anticipated. Software types don't normally describe non-normative behavior in use cases, nor do they describe it with UML; but we really need some way to talk about and prepare for abnormal behavior, especially if security is our goal.

To make this concrete, think about a potential attacker in the HR example. An attacker is likely to try to gain extra privileges in the payroll system and remove evidence of any fraudulent transaction. Similarly, an attacker might try to delay all the paychecks by a day or two and embezzle the interest that accrues during the delay. The idea is to get out your black hat and think like a bad guy.

Surprise! You've already been thinking like a bad guy as you worked through previous touchpoints. This chapter is really about making the idea explicit. When you were doing source code analysis with a tool, the tool pumped out a bunch of possible problems and suggestions about what might go wrong, and you got to decide which ones were worth pursuing. (You didn't even have to know about possible attacks, because the tool took care of that part for you.) Risk analysis is a bigger challenge because you start with a blank page. Not only do you have to invent the system from whole cloth, but you also need to anticipate things that will go wrong. The same goes for testing (especially adversarial testing and penetration testing). The core of each of these touchpoints is, in some sense, coming up with a hypothesis of what might go wrong. That's what abuse cases are all about.
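The payroll example can be made concrete. The following is a minimal sketch in Python, assuming a simple role-based model; the role names, the `User` type, and the functions `can_view_salary`, `can_modify_salary`, and `check_abuse_cases` are hypothetical illustrations, not part of any real payroll system. The two use cases become authorization rules, and each abuse case is phrased as something an attacker wants and checked against those rules.

```python
from dataclasses import dataclass

# Hypothetical role names; the use cases only speak of
# "users in the HR management group" and "a basic user".
HR_MANAGER = "hr_manager"
BASIC_USER = "basic_user"


@dataclass(frozen=True)
class User:
    user_id: str
    role: str


def can_view_salary(user: User, employee_id: str) -> bool:
    # Use case: HR managers may view all salaries;
    # a basic user may view only his or her own salary.
    if user.role == HR_MANAGER:
        return True
    return user.role == BASIC_USER and user.user_id == employee_id


def can_modify_salary(user: User, employee_id: str) -> bool:
    # Use case: only HR managers may modify salaries, for any employee,
    # so employee_id does not affect the decision here.
    return user.role == HR_MANAGER


def check_abuse_cases() -> list[str]:
    """Return the names of abuse cases that the rules above fail to block."""
    alice = User("alice", BASIC_USER)
    mallory = User("mallory", "contractor")  # a role the system never defined
    abuse_cases = [
        ("basic user reads a coworker's salary", can_view_salary(alice, "bob")),
        ("basic user gives herself a raise", can_modify_salary(alice, "alice")),
        ("unknown role reads any salary", can_view_salary(mallory, "bob")),
    ]
    # An abuse case "succeeds" if the system allows the action.
    return [name for name, allowed in abuse_cases if allowed]


if __name__ == "__main__":
    print(check_abuse_cases())
```

Calling `check_abuse_cases()` returns an empty list when every listed abuse is blocked. Writing abuse cases from the attacker's point of view ("gives herself a raise") tends to surface checks that a feature-oriented use case never mentions, such as what happens for a role the system does not recognize.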

Abuse cases (sometimes also called misuse cases) are a tool that can help you begin to think about your software the same way that attackers do. By thinking beyond the normative features and functions and also contemplating negative or unexpected events, software security professionals come to better understand how to create secure and reliable software. By systematically asking, "What can go wrong here?" or better yet, "What might some bad person cause to go wrong here?" software practitioners are more likely to uncover exceptional cases and frequently overlooked security requirements.

Think about what motivates an attacker. Start here... Pretend you're the bad guy. Get in character. Now ask yourself: "What do I want?" Some ideas: I want to steal all the money. I want to learn the secret ways of the C-level execs. I want to be root of my domain. I want to reveal the glory that is the Linux Liberation Front. I want to create general havoc. I want to impress my pierced girlfriend. I want to spy on my spouse. Be creative when you do this! Bad guys want lots of different things. Bring out your inner villain. Now ask yourself: "How can I accomplish my evil goal given this pathetic pile of software before me? How can I make it cry and beg for mercy? How can I make it bend to my iron will?" There you have it. Abuse cases.

Because thinking like an attacker is something best done with years of experience, this exercise is a good opportunity to involve your network security guys (see Chapter 9). However, there are alternatives to years of experience. One excellent thought experiment (suggested by Dan Geer) runs as follows. I'll call it "engineer gone bad." Imagine taking your most trusted engineer/operator, humiliating her in public, throwing her onto the street, and daring her to do anything about it to you or to your customers. If the humiliated street bum can do nothing more than bang her head against the nearest wall, you've won.
This idea may, in some cases, be even more effective than simply thinking like a bad guy: it turns a good guy into a bad guy.

The idea of abuse cases has a short history in the academic literature. McDermott and Fox published an early paper on abuse cases at ACSAC in 1999 [McDermott and Fox 1999]. Later, Sindre and Opdahl wrote a paper that explained how to extend use case diagrams with misuse cases [Sindre and Opdahl 2000]. Their basic idea is to represent the actions that a system should prevent in tandem with those that it should support, so that security analysis of requirements is easier. Alexander advocates using misuse and use cases together to conduct threat and hazard analysis during requirements analysis [Alexander 2003]. Others have since put more flesh on the idea of abuse cases, but, frankly, abuse cases are not as commonly used as they should be.
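The idea of keeping prevented actions next to supported ones can be approximated in code. The sketch below is a hypothetical data model in Python, not Sindre and Opdahl's diagram notation: each `UseCase` lists the `MisuseCase` entries that threaten it, and the hypothetical helper `unmitigated` reports misuse cases that no security requirement addresses yet. The misuse cases are the payroll attacks described earlier in this chapter; the mitigation text is an invented example.

```python
from dataclasses import dataclass, field


@dataclass
class MisuseCase:
    # An action the system should prevent.
    name: str
    # Hypothetical: names of security requirements that address this misuse.
    mitigated_by: list[str] = field(default_factory=list)


@dataclass
class UseCase:
    # An action the system should support, recorded alongside
    # the misuse cases that threaten it.
    name: str
    threatened_by: list[MisuseCase] = field(default_factory=list)


def unmitigated(use_cases: list[UseCase]) -> list[tuple[str, str]]:
    """Return (use case, misuse case) pairs with no mitigating requirement yet."""
    return [
        (uc.name, mc.name)
        for uc in use_cases
        for mc in uc.threatened_by
        if not mc.mitigated_by
    ]


model = [
    UseCase(
        "HR manager modifies an employee's salary",
        threatened_by=[
            MisuseCase(
                "Attacker escalates privileges to HR manager",
                # Invented example requirement, for illustration only.
                mitigated_by=["Require re-authentication for role changes"],
            ),
            MisuseCase("Attacker deletes audit record of a fraudulent change"),
        ],
    ),
    UseCase(
        "Payroll issues paychecks on schedule",
        threatened_by=[MisuseCase("Insider delays paychecks to skim accrued interest")],
    ),
]

for use_case, misuse_case in unmitigated(model):
    print(f"{use_case!r} has no requirement mitigating {misuse_case!r}")
```

Keeping both kinds of case in one structure means a requirements review can ask a mechanical question (which threats have no answer yet?) rather than relying on someone remembering to look.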
