PIXEL SHADERS

A pixel shader takes color, texture coordinate(s), and selected texture(s) as input and produces a single RGBA color value as its output. You can ignore any texture states that are set. You can create your own texture coordinates out of thin air. You can even ignore all of the inputs provided and set the pixel color directly if you like. In other words, you have near-total control over the final pixel color. The one render state that will still change your pixel color is fog: the fog blend is performed after the pixel shader has run.

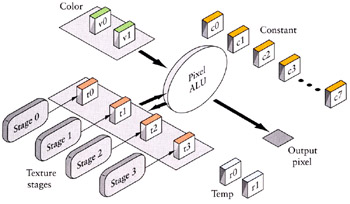

Inside a pixel shader, you can look up texture coordinates, modify them, blend them, etc. A pixel shader has two color registers as input, some constant registers, and texture coordinates and textures set prior to the execution of the shader through the render states (Figure 4.8).

Figure 4.8: Pixel shaders take color inputs and texture coordinates to generate a single output color value.

Using pixel shaders, you are free to interpret the data however you like. Since you are pretty much limited to sampling textures and blending them with colors, pixel shaders are generally shorter than vertex shaders. The variety of instructions is nevertheless large, since many of them are subtle variations of one another.

In addition to the version and constant declaration instructions, which are similar to the vertex shader instructions, pixel shader instructions have texture addressing instructions and arithmetic instructions.

Arithmetic instructions include the common mathematical instructions that you'd expect. These instructions are used to perform operations on the color or texture address information.

The texture instructions operate on a texture or texture coordinates that have been bound to a texture stage. You assign a texture to one of the texture stages that the device currently supports using the SetTexture() function, and you control how the texture is sampled through a call to SetTextureStageState(). The texture instructions in the shader then sample the texture bound to a stage. The simplest pixel shader we can write that samples the texture assigned to stage 0 would look like this.

    // a pixel shader to use the texture of stage 0
    ps.1.0
    // sample the texture bound to stage 0
    // using the texture coordinates from stage 0
    // and place the resulting color in t0
    tex t0
    // now copy the color to the output register
    mov r0, t0

There is a large variety of texture addressing and sampling operations, which gives you many options for sampling, blending, and otherwise operating on multiple textures.

Conversely, if you didn't want to sample a texture but were just interested in coloring the pixel using the iterated colors from the vertex shader output, you could ignore any active textures and just use the color input registers. Assuming that we were using either the FFP or our vertex shader to set both the diffuse and specular colors, a pixel shader to add the diffuse and specular colors would look like this.

    // a pixel shader to just blend diffuse and specular
    ps.1.0
    // since the add instruction can only access
    // one color register at a time, we need to
    // move one value into a temporary register and
    // perform the add with that temp register
    mov r0, v1
    add r0, v0, r0

As you can see, pixel shaders are straightforward to use, though understanding the intricacies of the individual instructions is sometimes a challenge.
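To make the arithmetic of the diffuse-plus-specular shader concrete, here is a minimal CPU-side sketch in C++ of what those two instructions compute. It is an illustration only, not how the hardware is implemented: the `Color` type and the `addDiffuseSpecular` name are hypothetical, and the sketch assumes that the color inputs lie in [0, 1] and that the final value in r0 is clamped to [0, 1] before it becomes the pixel color.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// RGBA color as four floats (hypothetical helper type for this sketch).
using Color = std::array<float, 4>;

// Emulates the two shader instructions on the CPU:
//     mov r0, v1
//     add r0, v0, r0
// Assumptions: v0 and v1 hold components in [0, 1], and the result in r0
// is clamped to [0, 1] when it is written out as the pixel color.
Color addDiffuseSpecular(const Color& v0, const Color& v1) {
    Color r0 = v1;  // mov r0, v1
    for (std::size_t i = 0; i < r0.size(); ++i) {
        assert(v0[i] >= 0.0f && v0[i] <= 1.0f);
        assert(v1[i] >= 0.0f && v1[i] <= 1.0f);
        r0[i] = v0[i] + r0[i];                           // add r0, v0, r0
        r0[i] = std::min(std::max(r0[i], 0.0f), 1.0f);   // assumed output clamp
    }
    return r0;
}
```

With a diffuse color of (0.25, 0.5, 0.75, 1.0) and a specular color of (0.5, 0.25, 0.5, 0.0), the blue channel sums to 1.25 and is clamped to 1.0 under the assumption above, which is why bright specular highlights wash out to white.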

Unfortunately, since pixel shaders are so representative of the hardware, a good deal of behavior differs between shader versions. For example, almost all the texture operations that were available in pixel shader 1.0 through 1.3 were replaced with fewer but more generic texture operations in pixel shader 1.4 and 2.0. Unlike vertex shaders (for which there was a good implementation in the software driver), there was no software implementation of pixel shaders. Since pixel shaders essentially expose the hardware to the shader writer, the features of the language directly mirror the features of the hardware. This is getting better with pixel shaders 2.0, whose instructions are starting to show more uniformity.

DirectX 8 Pixel Shader Math Precision

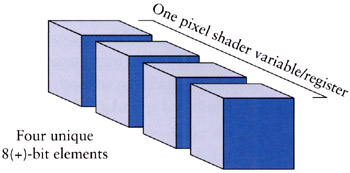

In pixel shader versions 2.0 or better (i.e., DirectX 9 compliant), the registers were changed to full precision registers. In DirectX 8, however, that minimum wasn't in place. Registers in pixel shaders before version 2.0 are not full 32-bit floating point values (Figure 4.9). In fact, they are severely limited in their range. The minimum precision is 8 bits, which usually translates to an implementation of a fixed point number with a sign bit and 7-8 bits for the fraction.

You can expect that you'll rarely run into precision problems unless you're trying to do something like performing multiple lookup operations into large texture spaces or performing many rendering passes. Only on older cards (those manufactured in or before 2001) or inexpensive cards will you find the 8-bit minimum. The complexity of pixel shaders, and hence the ability to do lengthy operations, will only grow over time, and as manufacturers figure out how to reduce the size of the silicon and increase its complexity, they'll be able to squeeze more precision into the pixel shaders. DirectX 9 compliant cards should have 16 or 32 bits of precision.
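The effect of limited register precision can be sketched on the CPU. The C++ below is an illustration under stated assumptions, not a model of any particular card: it assumes a fixed point format in which every intermediate result is rounded to the nearest multiple of 2^-fractionBits, and the `quantize` and `repeatedScale` names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Round x to the nearest multiple of 2^-fractionBits, i.e. snap it to the
// grid of values an assumed fixed point register could hold.
float quantize(float x, int fractionBits) {
    assert(fractionBits > 0 && fractionBits < 24);
    const float scale = static_cast<float>(1 << fractionBits);
    return std::round(x * scale) / scale;
}

// Multiply x by `factor` `steps` times, re-quantizing after every operation
// to mimic a low-precision register holding each intermediate result.
float repeatedScale(float x, float factor, int steps, int fractionBits) {
    assert(steps >= 0);
    float value = quantize(x, fractionBits);
    for (int i = 0; i < steps; ++i) {
        value = quantize(value * factor, fractionBits);
    }
    return value;
}
```

With 8 fraction bits, the smallest nonzero step is 1/256, so scaling 1/256 by 0.4 rounds to zero, while the same operation with 16 fraction bits keeps a nonzero result. This is the kind of loss that accumulates across many dependent operations or rendering passes.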

Figure 4.9: Pixel shader registers prior to version 2.0 consist of a four-element vector whose elements have (at least) 8 bits of precision; 2.0 pixel shader registers hold 32-bit floats.

As the number of bits in the pixel shader registers increases, so will the overall range. You'll need to examine the D3DCAPS8.MaxPixelShaderValue or D3DCAPS9.PixelShader1xMaxValue capability value in order to see the range that pixel registers are clamped to. In DirectX 6 and DirectX 7, this value was 0, indicating a range of [0,1]. In later versions of DirectX, the value gives the magnitude of a symmetric range; thus in DirectX 8 or 9, you might see a value of 1, which would indicate a range of [−1,1], or 8, which would indicate a range of [−8,8]. Note that this value typically depends not only on the hardware, but sometimes on the driver version as well!
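The mapping from the capability value to a clamping range can be written down as a small C++ sketch. The function name `clampToRegisterRange` is hypothetical, and `maxValue` simply stands in for whatever you read from D3DCAPS8.MaxPixelShaderValue or D3DCAPS9.PixelShader1xMaxValue; the sketch follows the interpretation described above (0 means [0,1], any other value means a symmetric range).

```cpp
#include <algorithm>
#include <cassert>

// Clamp x to the register range implied by the capability value.
// maxValue == 0  -> range [0, 1]
// maxValue == m  -> range [-m, m]   (for example 1 or 8)
float clampToRegisterRange(float x, float maxValue) {
    assert(maxValue >= 0.0f);
    if (maxValue == 0.0f) {
        return std::min(std::max(x, 0.0f), 1.0f);
    }
    return std::min(std::max(x, -maxValue), maxValue);
}
```

For example, an intermediate value of 2.5 survives on hardware reporting 8 but is cut to 1 on hardware reporting 1, so a shader that relies on the wider range should check the capability first.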

No, No, It's Not a Texture, It's just Data

One of the largest problems people have with using texture operations is getting over the fact that just because something uses a texture operation, it doesn't have to operate on texture data. In the early days of 3D graphics, you could compute lighting effects using hardware acceleration only at vertices. Thus if you had a wall consisting of one large quadrangle and you wanted to illuminate it, you had to make sure that the light fell on at least one vertex in order to get some lighting effect. If the light was near the center, it made no difference, since the light was calculated only at the vertices and then linearly interpolated from there; the center of the surface came out only as bright as the vertices. The brute force method of correcting this (which is what some tools like RenderMan do) is to tessellate a surface until the individual triangles are smaller than a pixel, in effect turning a program into a pixel accurate renderer at the expense of generating a huge number of triangles.
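The wall example can be reduced to one dimension to show the problem numerically. The following C++ sketch is illustrative only: the wall is a line segment, the falloff formula 1 / (1 + d²) is an assumed stand-in for a real lighting model, and the names `lightAt`, `vertexLit`, and `pixelLit` are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Assumed falloff: intensity at wall position x from a point light that
// sits at lightX along the wall and lightHeight in front of it.
float lightAt(float x, float lightX, float lightHeight) {
    assert(lightHeight > 0.0f);
    const float dx = x - lightX;
    const float distSq = dx * dx + lightHeight * lightHeight;
    return 1.0f / (1.0f + distSq);
}

// Per-vertex lighting: evaluate the light only at the two end vertices
// (x0 and x1) and linearly interpolate between them.
float vertexLit(float x, float x0, float x1, float lightX, float lightHeight) {
    assert(x1 > x0);
    const float i0 = lightAt(x0, lightX, lightHeight);
    const float i1 = lightAt(x1, lightX, lightHeight);
    const float t = (x - x0) / (x1 - x0);
    return i0 + t * (i1 - i0);
}

// Per-pixel lighting: evaluate the light at the shaded point itself.
float pixelLit(float x, float lightX, float lightHeight) {
    return lightAt(x, lightX, lightHeight);
}
```

For a wall spanning x = -4 to x = 4 with the light one unit in front of its center, the interpolated value at the center equals the dim value computed at the vertices (1/18 under this falloff), while the per-pixel evaluation gives 0.5.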

It turns out that there already is a hardware accelerated method of manipulating pixels: the texture rendering section of the API. For years, people have been using textures to create pseudolighting effects and even to simulate perturbations in the surface lighting due to a bumpy surface. It took a fair amount of effort to get multiple textures supported in graphics hardware, and when support finally arrived at a fairly consistent level in consumer-level graphics cards, multitexturing effects took off. Not content with waiting for the API folks to get their act together, graphics programmers and researchers thought of different ways to layer multiple textures to get the effects they wanted. It's this tradition that pixel shaders are built upon. In order to get really creative with pixel shaders, you have to forget the idea that a texture is an image. A texture is just a 1D, 2D, or 3D matrix of data. We can use it to hold an image, a bump map, or a lookup table of any sort of data. We just need to associate each vertex with some coordinate data, and those coordinates will pop out in the pixel shader, ready for us to pluck values out of our matrix of data.
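As a sketch of the "texture as lookup table" idea, the C++ below stores a function in a 1D array and samples it with a normalized coordinate, the way a shader would sample a 1D texture. Everything here is an assumption made for illustration: the names `buildPowerTable` and `sampleLinear` are hypothetical, the stored function (a power curve) is just one possible table, and the filtering is a simplified linear interpolation that ignores the half-texel offsets real hardware uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Build a 1D "texture" whose texels hold f(u) = u^exponent for u in [0, 1].
std::vector<float> buildPowerTable(std::size_t size, float exponent) {
    assert(size >= 2);
    std::vector<float> table(size);
    for (std::size_t i = 0; i < size; ++i) {
        const float u = static_cast<float>(i) / static_cast<float>(size - 1);
        table[i] = std::pow(u, exponent);
    }
    return table;
}

// Sample the table with a coordinate u, clamped to [0, 1], blending the two
// nearest entries (a simplified form of linear texture filtering).
float sampleLinear(const std::vector<float>& table, float u) {
    assert(table.size() >= 2);
    u = std::min(std::max(u, 0.0f), 1.0f);
    const float pos = u * static_cast<float>(table.size() - 1);
    const std::size_t i0 = static_cast<std::size_t>(pos);
    const std::size_t i1 = std::min(i0 + 1, table.size() - 1);
    const float frac = pos - static_cast<float>(i0);
    return table[i0] + frac * (table[i1] - table[i0]);
}
```

The coordinate passed to `sampleLinear` plays the role of an interpolated texture coordinate: whatever quantity you write into it at the vertices (a dot product, a distance, an angle) becomes the index into the table, and the "color" that comes back is the precomputed function value.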
