Getting Serious

Okay, we have covered everything you need to know to get DirectX Audio making sound. As you start to work with it, though, you will find that there are some big-picture issues that need to be sorted out for optimal performance.

- Minimize latency: By default, the time between when you start playing a sound and when you hear it can be too long for sudden sound effects. Clearly, that needs fixing.
- Manage dynamic 3D resources: What do you do when you have 100 objects flying in space and ten hardware AudioPaths to share among them all?
- Keep music and sound effects separate: Avoid clashes over volume, tempo, groove level, and more.

Minimize Latency

Latency is the number one concern for sound effects. For music, it has not been as critical, since the responsiveness of a musical score can be measured in beats and sometimes measures. Although the intention was to provide as low latency as possible for both music and sound effects, DX8 shipped with an average latency of 85 milliseconds. That's fine for ambient sounds, but it simply doesn't work for sounds that are played in response to sudden actions from the user. Obvious examples of these "twitch" sound effects are gunfire, car horns, and other sounds that are triggered by user actions and so cannot be scheduled ahead of time in any way. These need to respond at the frame rate, which is usually between 30 and 60 times a second, or roughly every 33 to 16 milliseconds, respectively.

Fortunately, DX9 introduces dramatically improved latency. With this release, the latency has dropped as low as 5ms, depending on the sound card and driver. Suddenly, even the hair-trigger sound effects work very well.

But there are still a few things you need to do to get optimal performance. Although the latency is capable of dropping insanely low, by default it is still kept pretty high: between 55 and 85ms, depending on the sound card. Why? To guarantee 100 percent compatibility for all applications on all sound cards on all systems, Microsoft decided that the very worst-case setting must be used to reliably produce glitch-free sound. We're talking about a five-year-old Pentium I system with a buggy sound card running DX8 applications.

Fortunately, if you know your application is running on a half-decent machine, you can override these settings and drive the latency way, way down. There are two commands for doing this. Because the audio is streamed from the synthesizer through the effects chains, the streaming mechanism needs to wake up at a regular interval to process another batch of sound. You can control both how close to the output time the write cursor sits and how frequently the mechanism wakes up. The lower these numbers, the lower the overall latency. But there's a cost: If the write cursor is too close to the output time, you can get glitches in the sound when the mechanism simply doesn't wake up in time. If the write period is too short, the CPU overhead goes up. Indeed, these commands have existed in DirectMusic from the very start, but they could not reliably deliver significant results because it was very easy to drive the latency down too far and get horrible glitching. But DX9 comes with a rewrite of the audio sink system that borders on sheer genius. (I mean it; I am extremely impressed with what the development team has done.) With it, we can dial the numbers down as low as we want and still not glitch. Keep in mind, however, that some esoteric, badly behaved driver out there could prove the exception.

The two commands set the write period and write latency and are implemented via IKsControl, a general-purpose interface for talking to kernel modules and low-level drivers. Each command is identified by a GUID:

- GUID_DMUS_PROP_WritePeriod: This sets how frequently (in milliseconds) the rendering process wakes up. The default is 10 milliseconds. The only cost of lowering it is increased CPU overhead, and dropping to 5ms is still very reasonable. On average, the write period contributes half its length to the latency, so a 5ms write period adds 2.5ms on average and 5ms in the worst case.
- GUID_DMUS_PROP_WriteLatency: This sets the latency, in milliseconds, to add to the sound card driver's latency. For example, if the sound card has a latency of 5ms and this is set to 5ms, the real write latency ends up being 10ms.

So, on average, the total latency ends up being WritePeriod/2 + WriteLatency + DriverLatency. Here's the code to use this. This example sets both the write period and the write latency to 5ms.

```cpp
IDirectMusicAudioPath *pPath = NULL;
if (SUCCEEDED(m_pPerformance->GetDefaultAudioPath(&pPath)))
{
    IKsControl *pControl = NULL;
    pPath->GetObjectInPath(0, DMUS_PATH_PORT, 0,  // Talk to the synth
        GUID_All_Objects, 0,                      // Any type of synth
        IID_IKsControl,                           // IKsControl interface
        (void **)&pControl);
    if (pControl)
    {
        KSPROPERTY ksp;
        DWORD dwData;
        ULONG cb;
        dwData = 5;                               // Set the write period to 5ms.
        ksp.Set   = GUID_DMUS_PROP_WritePeriod;   // The command
        ksp.Id    = 0;
        ksp.Flags = KSPROPERTY_TYPE_SET;
        pControl->KsProperty(&ksp, sizeof(ksp),
                             &dwData, sizeof(dwData), &cb);
        dwData = 5;                               // Now set the latency to 5ms.
        ksp.Set   = GUID_DMUS_PROP_WriteLatency;
        ksp.Id    = 0;
        ksp.Flags = KSPROPERTY_TYPE_SET;
        pControl->KsProperty(&ksp, sizeof(ksp),
                             &dwData, sizeof(dwData), &cb);
        pControl->Release();
    }
    pPath->Release();
}
```

Before you put these calls into your code, remember that your application needs to be running on DX9. If it isn't, latency requests this low will definitely cause glitches in the sound. Since you can ship your application with a DX9 installer, this should be a moot point. But if your app needs to be distributed in a lightweight way (e.g., via the web), then you might not include the installer. If so, you need to first verify which version you are running on.

Unfortunately, there is no simple way to find out which version of DirectX is installed. There was some religious reason for not exposing such an obvious API call, but I can't remember for the life of me what it was. Fortunately, the SDK ships with a sample function, GetDXVersion, that sniffs around making calls into the various DirectX APIs, looking for specific features that indicate the version. Mingle includes the GetDXVersion code, so it can check the version first and run properly on DX8 as well as DX9. Beware: this code won't compile under DX8, so I've included a separate project file, MingleDX8.dsp, that you should compile with if you still have the DX8 SDK.


| Note | The low latency takes advantage of hardware acceleration in a big way. As long as all of the buffers are allocated from hardware, the worst-case driver latency stays low. Once the hardware buffers are used up and software emulation kicks in, additional latency is typically added. However, today's cards usually have at least 64 3D buffers available, which, as we discuss, is typically more than you'll want. Nevertheless, the best low-latency strategy is to make sure that the number of buffers allocated never exceeds the hardware capabilities. |

Manage Dynamic 3D Resources

So we get to the wedding scene, and there are well over 100 pigs flying overhead, each emitting a reliable stream of oinks, snorts, and squeals over the continuous din of wing fluttering. Oops, we only have 32 3D hardware buffers to work with. How do we manage the voices? How do we ensure that the pig buzzing the camera is always heard, even at the expense of silencing faraway pigs? And that trio of pigs with guitars: we want to make sure we hear them regardless of how near or far they may be.

DirectSound does have a dynamic voice management system whereby each playing sound can be assigned a priority and voices can swap out as defined by any combination of priority, time playing, and distance from the listener. It's not perfect, though. It only terminates sounds. So, if a pig that is flying away from the listener is terminated in order to make way for the hungry sow knocking over the dessert table, it remains silent once it flies back toward the listener because there's no mechanism to restart its sounds.

For better or worse, the AudioPath mechanism doesn't even try to handle this. You must roll your own. In some ways, this is a win because you can completely define the algorithm to suit your needs, and you have the extra flexibility of being able to restart with Segments, call into script routines, or do whatever is most appropriate for your application. It does mean quite a bit of work. With that in mind, the major thrust of the Mingle application deals with this exact issue. Mingle includes an AudioPath management library that you can rip out, revise, and replace in your app as you see fit. How does it work? Let's start with an overview of the design here and then investigate it in depth later, when we look at the Mingle code.

There are two sets of items that we need to track:

- Things that emit sound: Each "thing" represents an independently movable object in the world that makes a noise and so needs to play through its own AudioPath instance. Each thing is assigned a priority, which is the criterion for deciding which things can be heard when there are not enough AudioPaths to go around. There is no limit to how large the set of things can be. There is no restriction on how priority should be calculated, though distance from the listener tends to be a good basis.
- 3D AudioPaths: This set is limited to the largest number of AudioPaths that can run at one time. Each AudioPath is assigned to one thing, which plays through it.

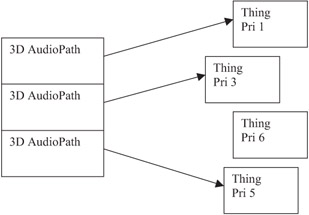

Since we have a finite set of AudioPaths, we need to match these up with the things that have the highest priorities. But priorities can change over time, especially as things move, so we need to scan through the things every frame and make sure that the highest priority things are still the ones being heard. Figure 13-1 shows the relationship of AudioPaths to things.

Figure 13-1: Three AudioPaths manage sounds for four things, sorted by priority.

Once a frame, we do the following:

- Scan through the list of things and recalculate their positions and priorities. However, do not apply the positions to any AudioPaths yet.
- Look for things whose new priorities outrank things currently assigned to AudioPaths. Stop the outranked things from playing and give their AudioPaths to the new, higher priority things.
- Get the new 3D positions from the things assigned to AudioPaths and set the AudioPath positions.
- Start the things that were just assigned AudioPaths playing.

Keep Music and Sound Effects Separate

If you think about it, sound effects and music serve very different purposes and follow very different paradigms in entertainment, be it a movie, game, or even a web site. Sound effects focus on recreating the reality of the scene (albeit with plenty of artistic license). Music, on the other hand, clearly has nothing to do with reality and is added on top of the story to manipulate our perception of it. This is all fine and good, but if you have the same system generating both the music and the sound effects, you can easily fall into situations where they work at cross-purposes. For example, adjusting the tempo or intensity of the music should not unintentionally alter the same for sound effects. In a system as sophisticated as DirectX Audio, this conflict can happen quite frequently and result in serious head scratching. Typical problems include:

- Groove level interference: Groove level is a great way to set intensity for both music and sound effects, but you might want separate intensity controls for music and sound effects. You can accomplish this by putting the groove levels on separate group IDs. But there has to be an easier way…
- Invalidation interference: When a primary or controlling Segment starts, it can automatically cause an invalidation of all playing Segments. This is necessary for music because some Segments may be relying on the control information and need to regenerate with the new parameters. But sound effects couldn't care less if the underlying music chords changed. Worse, if a wave is invalidated, it simply stops playing. You can avoid this problem by playing controlling or primary Segments with the DMUS_SEGF_INVALIDATE_PRI or DMUS_SEGF_AFTERPREPARETIME flags set. But there has to be an easier way…
- Volume interference: Games often offer separate music and sound effects volume controls to the user. The easiest way to control overall volume is the global volume control on the Performance (GUID_PerfMasterVolume), but it affects everything, so it can't be used here. One solution is to call SetVolume() on every single AudioPath. Again, there has to be an easier way…

Okay, I get the hint. Indeed, there is an easier way. It's really quite simple. Create two separate Performance objects, one for music and one for sound effects. Suddenly, all the interference issues go out the window. The only downside is that you end up with a little extra work if the two worlds interconnect with each other, but even scripting supports the concept of more than one Performance, so that can be made to work if there is a need.

There are other bonuses as well:

- Each Performance has its own synth and effects architecture. This means that they can run at different sample rates. The downside is that any sounds or instruments shared by both are downloaded twice, once to each synth.
- You can author some sound effects in music time, which has better authoring support in some areas of DirectMusic Producer. Establish a tempo and write everything at that rate. This is how the sound effects for Mingle were authored. Of course, Segments authored in clock time continue to work well.

Mingle takes this approach. It creates two CAudio objects, each with its own Performance. The sound effects Performance runs at the default tempo of 120BPM without ever changing. 120BPM is convenient because each beat lasts exactly half a second, so in 2/4 time one measure equals one second. The music runs at a sample rate of 48 kHz, while the sound effects run at 32 kHz. There are separate volume controls for each. For efficiency, the two CAudio instances share the same Loader.


Pages: 170