Mingle

Okay, let's have some programming fun. Imagine a cocktail party with a decent complement of, uh, interesting people wandering around: the snob with the running commentary, the hungry boor noshing on every hors d'oeuvre in sight, the very irritating mosquito person, and the guy who just can't stop laughing. Gentle music plays in the background, barely above the crowd noise. To experience all this and more without inhaling any secondhand smoke, run Mingle by selecting it from the Start menu (if you installed the CD). Otherwise, double-click on its icon in the Unit II\Bin directory or compile and run it from the 13_SoundEffects source directory. See Figure 13-2.

Figure 13-2: Mingle.

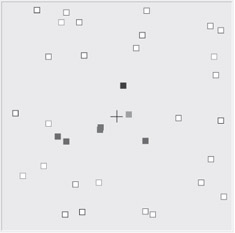

Taking up most of the Mingle window is a large square box with a plus sign in the middle. The box is a large room, and you are the plus marker in the middle. When Mingle first starts, there is general party ambience, and some music starts playing quietly. Click in the box. Oops, you bumped into a partygoer, and he is letting you know how he feels about that. Try again, but be more careful. Ouch!

| Note | Regarding the munchkins, my two little boys walked into my office when I was recording. |

This demonstrates low-latency sound effects and 3D positioning. Click around the box and hear how the sounds are positioned in space.

| Note | If you are running under DX8, you will probably hear significant buzzing or breakup in the sound. Mingle is really only meant to work under DX9. Either install DX9 or rebuild Mingle with the low-latency code disabled (more on that in a bit). |

Now let's have some more serious fun. There are four boxes down the left side, each representing a different person.

Click on the Talk button. This creates an instance of the person and plops it in the party. You should immediately hear the person gabbing and wandering around the room. Click the Talk button several more times and additional instances of the person enter the room. The little number box on the bottom right shows how many instances of the particular person are yacking it up at the party. Click on the Shut Up! button to remove a partygoer. Click on other participants, and note that they are displayed with different colors.

Below the four boxes is an edit field, labeled 3D Paths, that shows how many 3D AudioPaths are allocated.

This displays how many AudioPaths are currently allocated for use by all of the sound effects. This includes one AudioPath allocated for the sounds that happen when you click the mouse. Mingle dynamically reroutes the 3D AudioPaths to the people who are closest to the center of the box (where the listener is). Continue to click on the Talk buttons and create more people than there are AudioPaths. Notice how the colored boxes turn white as they get farther from the center. This indicates that a person is no longer being played through an AudioPath.

To change the number of available AudioPaths, just edit the number in the 3D Paths box. See what happens when you drop it down to just two or three. Change the number of people by clicking on the Talk and Shut Up! buttons. How many AudioPaths does it take before you can no longer track them individually by ear? How many before it's not noticeable when the dynamic swapping occurs? Keep experimenting, and try not to let the mosquito person irritate you too much.

Across the bottom is a set of three sliders. These control three of the listener parameters: Distance Factor, Doppler Factor, and Rolloff Factor.

Drop the number of sounds to a reasonable number, so it's easier to track an individual sound, and then experiment with these sliders. If you can handle it, Mosquito is particularly good for testing because it emits a steady drone. Drag Distance Factor to the right. This increases the distances that the people are moving. Notice that the Doppler shift becomes more pronounced. That's because the velocities are much higher, since the distance traveled is greater. Drag Doppler to the right. This increases the Doppler effect. Drag Rolloff to the right. Notice how people get much quieter as they leave the center area.

Finally, take a look at the two volume sliders.

These control the volumes for sound effects and music. Drag them up or down to change the balance. Ah, much better. Drag them both all the way down, and you'll no longer want to mangle Mingle.

That completes the tour. Mingle demonstrates several useful things:

- Separate sound effects and music environments
- Volume control for sound effects and music
- Dynamic 3D resource management
- Low latency sound effects
- Background ambience
- Avoiding truncated waves
- Manipulating the listener properties
- Scripted control of sound effects
- Creating music with the composition engine

Now let's see how they work.

Separate Sound Effects and Music Environments

Although Mingle doesn't have the same UI as Jones, it does borrow and continue to build on the CAudio class library for managing DirectX Audio. In the previous projects, we created one instance of CAudio, which managed the Performance, Loader, and all Segments, scripts, and AudioPaths. For Mingle, we use two instances of CAudio, which keeps the worlds of music and sound effects very separate. However, we'd like to share the Loader. Also, we'd like to set a different sample rate and default AudioPath for each, since music and sound effects have different requirements. So, CAudio::Init() takes additional parameters for default AudioPath type and sample rate.

```cpp
HRESULT CAudio::Init(
    IDirectMusicLoader8 *pLoader,  // Optionally provided Loader.
    DWORD dwSampleRate,            // Sample rate for the Performance.
    DWORD dwDefaultPath)           // Optional default AudioPath.
{
    // If a Loader was provided by the caller, use it.
    HRESULT hr = S_OK;
    if (pLoader)
    {
        m_pLoader = pLoader;
        pLoader->AddRef();
    }
    // If not, call COM to create a new one.
    else
    {
        hr = CoCreateInstance(
            CLSID_DirectMusicLoader,
            NULL,
            CLSCTX_INPROC,
            IID_IDirectMusicLoader8,
            (void**)&m_pLoader);
    }
    // Then, create the Performance.
    if (SUCCEEDED(hr))
    {
        hr = CoCreateInstance(
            CLSID_DirectMusicPerformance,
            NULL,
            CLSCTX_INPROC,
            IID_IDirectMusicPerformance8,
            (void**)&m_pPerformance);
    }
    if (SUCCEEDED(hr))
    {
        // Once the Performance is created, initialize it.
        // Optionally, create a default AudioPath, as defined by
        // dwDefaultPath, and give it 128 pchannels.
        // Set the sample rate to the value passed in dwSampleRate.
        // Also, get back the IDirectSound interface and store
        // that in m_pDirectSound. This may come in handy later.
        DMUS_AUDIOPARAMS Params;
        Params.dwValidData = DMUS_AUDIOPARAMS_VOICES | DMUS_AUDIOPARAMS_SAMPLERATE;
        Params.dwSize = sizeof(Params);
        Params.fInitNow = true;
        Params.dwVoices = 100;
        Params.dwSampleRate = dwSampleRate;
        hr = m_pPerformance->InitAudio(NULL, &m_pDirectSound, NULL,
            dwDefaultPath,   // Default AudioPath type.
            128, DMUS_AUDIOF_ALL, &Params);
    }
    return hr;
}
```

CMingleApp has two instances of CAudio, m_Effects and m_Music. It sets these up in its initialization. If both succeed, it opens the dialog window (which is the application) and closes down after that is finished.

```cpp
BOOL CMingleApp::InitInstance()
{
    // Initialize COM.
    CoInitialize(NULL);
    // Create the sound effects CAudio. Give it a sample rate
    // of 32K and have it create a default path with a stereo
    // Buffer. We'll use that for the background ambience.
    if (SUCCEEDED(m_Effects.Init(
        NULL,                           // Create the Loader.
        32000,                          // 32K sample rate.
        DMUS_APATH_DYNAMIC_STEREO)))    // Default AudioPath is stereo.
    {
        if (SUCCEEDED(m_Music.Init(
            m_Effects.GetLoader(),      // Use the Loader from FX.
            48000,                      // Higher sample rate for music.
            DMUS_APATH_SHARED_STEREOPLUSREVERB)))  // Standard music path.
        {
            // Succeeded initializing both CAudio objects, so run the window.
            CMingleDlg dlg;
            m_pMainWnd = &dlg;
            dlg.DoModal();
            // Done, time to close down music.
            m_Music.Close();
        }
        // Close down effects.
        m_Effects.Close();
    }
    // Done with COM.
    CoUninitialize();
    return FALSE;
}
```

Notice that the calls to CoInitialize() and CoUninitialize() were yanked from CAudio and placed in CMingleApp::InitInstance(). As convenient as it was to put that code in CAudio, it was inappropriate: COM should be initialized once by the application, not once per CAudio instance.

Volume Control for Sound Effects and Music

Once we have two Performances for sound effects and music, it is very easy to apply global volume control independently to each. Just calculate the volume in hundredths of a decibel and pass it to the Performance's SetGlobalParam() method with GUID_PerfMasterVolume.

We add a method to CAudio to set the volume.

```cpp
void CAudio::SetVolume(long lVolume)
{
    m_pPerformance->SetGlobalParam(
        GUID_PerfMasterVolume,   // Command GUID for master volume.
        &lVolume,                // Volume parameter.
        sizeof(lVolume));        // Size of the parameter.
}
```

Then, it's trivial to add the sliders to the Mingle UI and connect them to this method on the two instances of CAudio.
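Mingle's slider-handling code isn't shown here. As a hedged sketch of one way to produce the value passed to SetVolume(), the function below treats a slider position as a linear amplitude gain and converts it to hundredths of a decibel with 2000 * log10(gain). The name SliderToVolume and the -10000 "silence" floor are assumptions for illustration, not Mingle's actual code.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical value used to represent silence, in hundredths of a dB.
const long kVolumeFloor = -10000;

// Map a slider position in [0, maxPos] to hundredths of a decibel,
// treating the slider as a linear amplitude gain (an assumption of this sketch).
long SliderToVolume(int pos, int maxPos)
{
    assert(maxPos > 0 && "slider range must be positive");
    if (pos <= 0)
        return kVolumeFloor;          // Slider at the bottom: silence.
    if (pos >= maxPos)
        return 0;                     // Full scale: 0 dB, no attenuation.
    double gain = static_cast<double>(pos) / maxPos;
    // 20 * log10(gain) gives decibels; times 100 gives hundredths of a dB.
    long volume = std::lround(2000.0 * std::log10(gain));
    return volume < kVolumeFloor ? kVolumeFloor : volume;
}
```

With a 0 to 100 slider, position 50 (half amplitude) maps to -602, roughly -6 dB, and position 10 maps to -2000, or -20 dB. A logarithmic mapping like this tends to feel more even to the ear than passing the raw slider value through.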

Dynamic 3D Resource Management

This is the big one. We walked through the overall design earlier, so now we can focus on the implementation in code. We add new fields and functionality to the CAudioPath and CAudio classes and introduce two new classes, CThing and CThingManager, which are used to manage the sound-generating objects in Mingle.

CAudioPath

First, we need to add some fields to CAudioPath so it can track the specific object (or "thing") that it is rendering in 3D. These include a pointer to the object, the object's priority, and whether a change of object is pending. We also add a method for directly setting the 3D position, fields that cache the last position and velocity, and an IDirectSound3DBuffer interface pointer that provides a direct connection to the 3D controls.

```cpp
// Set the 3D position of the AudioPath. Optionally, the velocity.
bool Set3DPosition(D3DVECTOR *pvPosition, D3DVECTOR *pvVelocity, DWORD dwApply);
// Get and set the 3D object attached to this AudioPath.
void * Get3DObject() { return m_p3DObject; };
void Set3DObject(void *p3DObject) { m_p3DObject = p3DObject; };
// Get and set the priority of the 3D object.
float GetPriority() { return m_flPriority; };
void SetPriority(float flPriority) { m_flPriority = flPriority; };
// Variables added for 3D object management.
void *                 m_p3DObject;       // 3D tracking of an object in space.
float                  m_flPriority;      // Priority of 3D object in space.
D3DVECTOR              m_vLastPosition;   // Store the last position set.
D3DVECTOR              m_vLastVelocity;   // And the last velocity.
IDirectSound3DBuffer * m_p3DBuffer;       // Pointer to 3D Buffer interface.
```

m_p3DObject is the object that the AudioPath renders, and m_flPriority is the object's priority. The two D3D vectors are used to cache the current position and velocity, so calls to set these can be a little more efficient. Likewise, the m_p3DBuffer field provides a quick way to access the 3D interface without calling GetObjectInPath() every time.

| Note | The object stays intentionally unspecific to CAudioPath. It is just a void pointer, so it could point to anything. (In Mingle, it points to a CThing.) Why? We'd like to keep CAudioPath from having to know a specific object design, which would tie it in much closer to the application design and make this code a little less transportable. |

CAudioPath::CAudioPath

The constructor has grown significantly to initialize all these new fields. In particular, it calls GetObjectInPath() to obtain a shortcut pointer to the 3D Buffer interface at the end of the AudioPath. This pointer is used to change the coordinates directly every time the AudioPath moves.

```cpp
CAudioPath::CAudioPath(IDirectMusicAudioPath *pAudioPath, WCHAR *pzwName)
{
    m_pAudioPath = pAudioPath;
    pAudioPath->AddRef();
    wcstombs(m_szName, pzwName, sizeof(m_szName));
    // wcstombs() doesn't terminate the string if it has to truncate it.
    m_szName[sizeof(m_szName) - 1] = 0;
    m_lVolume = 0;
    // 3D AudioPath fields follow...
    m_vLastPosition.x = 0;
    m_vLastPosition.y = 0;
    m_vLastPosition.z = 0;
    m_vLastVelocity.x = 0;
    m_vLastVelocity.y = 0;
    m_vLastVelocity.z = 0;
    m_flPriority = FLT_MIN;
    m_p3DObject = NULL;
    m_p3DBuffer = NULL;
    // Try to get a 3D Buffer interface, if it exists.
    pAudioPath->GetObjectInPath(
        0, DMUS_PATH_BUFFER,         // The DirectSound Buffer.
        0, GUID_All_Objects, 0,      // Any Buffer (should only be one).
        IID_IDirectSound3DBuffer,
        (void **)&m_p3DBuffer);
}
```

CAudioPath::Set3DPosition()

Set3DPosition() is intended primarily for 3D AudioPaths in the 3D pool. It is called on a regular basis (typically once per frame) to update the 3D position of the AudioPath.

Set3DPosition() sports two optimizations. First, it caches the previous position and velocity of the 3D object in the m_vLastPosition and m_vLastVelocity fields and compares the new values against them. If neither the position nor the velocity has changed, Set3DPosition() returns without doing anything. Second, it keeps a pointer directly to the IDirectSound3DBuffer interface, so it doesn't have to call GetObjectInPath() every time. When the position or velocity does change, Set3DPosition() calls the appropriate IDirectSound3DBuffer methods and then updates m_vLastPosition and m_vLastVelocity.

Set3DPosition() returns true for success and false for failure.

```cpp
bool CAudioPath::Set3DPosition(
    D3DVECTOR *pvPosition,
    D3DVECTOR *pvVelocity,
    DWORD dwApply)
{
    // First, verify that this has changed since last time. If not, just
    // return success.
    if (!memcmp(&m_vLastPosition, pvPosition, sizeof(D3DVECTOR)))
    {
        // Position hasn't changed. What about velocity?
        if (pvVelocity)
        {
            if (!memcmp(&m_vLastVelocity, pvVelocity, sizeof(D3DVECTOR)))
            {
                // No change to velocity. No need to do anything.
                return true;
            }
        }
        else return true;
    }
    // We'll be using the IDirectSound3DBuffer interface that
    // we obtained in the constructor.
    if (m_p3DBuffer)
    {
        // Okay, we have the 3D Buffer. Control it.
        m_p3DBuffer->SetPosition(pvPosition->x, pvPosition->y, pvPosition->z, dwApply);
        m_vLastPosition = *pvPosition;
        // Velocity is optional.
        if (pvVelocity)
        {
            m_p3DBuffer->SetVelocity(pvVelocity->x, pvVelocity->y, pvVelocity->z, dwApply);
            m_vLastVelocity = *pvVelocity;
        }
        return true;
    }
    return false;
}
```
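To make the caching behavior concrete, here is a self-contained sketch that mirrors the logic of Set3DPosition() without any DirectX dependency. Vec3 stands in for D3DVECTOR, and FakeBuffer3D is a hypothetical stand-in for IDirectSound3DBuffer that only counts calls. One deliberate difference: it compares components with == instead of memcmp(), so +0.0 and -0.0 count as the same coordinate (memcmp() would treat them as different and trigger one harmless extra update).

```cpp
#include <cassert>

// Stand-in for D3DVECTOR so the sketch compiles without DirectX headers.
struct Vec3 { float x, y, z; };

static bool SameVector(const Vec3 &a, const Vec3 &b)
{
    return a.x == b.x && a.y == b.y && a.z == b.z;
}

// Hypothetical stand-in for IDirectSound3DBuffer that just counts calls.
struct FakeBuffer3D
{
    int positionCalls = 0;
    int velocityCalls = 0;
    void SetPosition(const Vec3 &) { ++positionCalls; }
    void SetVelocity(const Vec3 &) { ++velocityCalls; }
};

// Mirrors the change-detection logic of CAudioPath::Set3DPosition().
class CachedPath
{
public:
    explicit CachedPath(FakeBuffer3D *buffer) : m_buffer(buffer) {}

    bool Set3DPosition(const Vec3 *position, const Vec3 *velocity)
    {
        assert(position != nullptr);
        bool positionSame = SameVector(m_lastPosition, *position);
        bool velocitySame = !velocity || SameVector(m_lastVelocity, *velocity);
        if (positionSame && velocitySame)
            return true;                 // Nothing changed: skip the buffer.
        if (!m_buffer)
            return false;                // No 3D buffer to control.
        m_buffer->SetPosition(*position);
        m_lastPosition = *position;
        if (velocity)                    // Velocity is optional.
        {
            m_buffer->SetVelocity(*velocity);
            m_lastVelocity = *velocity;
        }
        return true;
    }

private:
    FakeBuffer3D *m_buffer;
    Vec3 m_lastPosition{0.0f, 0.0f, 0.0f};
    Vec3 m_lastVelocity{0.0f, 0.0f, 0.0f};
};
```

Calling it twice with the same position and velocity touches the buffer only once; changing only the velocity re-sends both the position and the velocity, just as the original does.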

CAudio

CAudio needs to maintain a pool of AudioPaths. CAudio already has a general-purpose list of AudioPaths, which we explored in full in Chapter 11. However, the 3D pool needs to be separate since its usage is significantly different. It carries a set of identical 3D AudioPaths, intended specifically for swapping back and forth, as we render. So, we create a second list.

```cpp
CAudioPathList m_3DAudioPathList;   // Pool of 3D AudioPaths.
DWORD          m_dw3DPoolSize;      // Size of 3D pool.
```

We provide a routine for setting the size of the pool, a routine for allocating a 3D AudioPath, a routine for releasing one when done with it, and a routine for finding the AudioPath with the lowest priority.

```cpp
// Methods for managing a pool of 3D AudioPaths.
DWORD Set3DPoolSize(DWORD dwPoolSize);    // Set size of pool.
CAudioPath *Alloc3DPath();                // Allocate a 3D AudioPath.
void Release3DPath(CAudioPath *pPath);    // Return a 3D AudioPath.
CAudioPath *GetLowestPriorityPath();      // Get lowest priority 3D AudioPath.
```

Let's look at each one.

CAudio::Set3DPoolSize()

CAudio::Set3DPoolSize() sets the maximum size that the 3D pool is allowed to grow to. Notice that it doesn't actually allocate any AudioPaths, because they should still be created only when needed. Optionally, the caller can pass POOLSIZE_USE_ALL_HARDWARE instead of a pool size. This sets the pool size to the number of hardware 3D buffers that are currently free, which lets the application automatically use the optimal number of 3D buffers. Set3DPoolSize() accomplishes this by calling DirectSound's GetCaps() method and using the value returned in DSCaps.dwFreeHw3DAllBuffers.

```cpp
DWORD CAudio::Set3DPoolSize(DWORD dwPoolSize)
{
    // If the constant POOLSIZE_USE_ALL_HARDWARE was passed,
    // call DirectSound's GetCaps method and get the total
    // number of currently free 3D Buffers. Then, set that as
    // the maximum.
    if (dwPoolSize == POOLSIZE_USE_ALL_HARDWARE)
    {
        if (m_pDirectSound)
        {
            DSCAPS DSCaps;
            DSCaps.dwSize = sizeof(DSCAPS);
            // Only trust the caps if the call succeeded.
            if (SUCCEEDED(m_pDirectSound->GetCaps(&DSCaps)))
            {
                m_dw3DPoolSize = DSCaps.dwFreeHw3DAllBuffers;
            }
        }
    }
    // Otherwise, use the passed value.
    else
    {
        m_dw3DPoolSize = dwPoolSize;
    }
    // Return the pool size so the caller can know how many AudioPaths
    // it may use in the case of POOLSIZE_USE_ALL_HARDWARE.
    return m_dw3DPoolSize;
}
```

CAudio::Alloc3DPath()

When the application does need a 3D AudioPath, it calls CAudio::Alloc3DPath(). Alloc3DPath() first scans the list of 3D AudioPaths already in the pool. It cannot take any path that is currently in use, which it detects by checking whether the AudioPath's Get3DObject() method returns a non-NULL object. If there are no free AudioPaths in the pool and the pool hasn't yet reached its size limit, Alloc3DPath() creates a new AudioPath; otherwise, it returns NULL.

```cpp
CAudioPath *CAudio::Alloc3DPath()
{
    DWORD dwCount = 0;
    CAudioPath *pPath = NULL;
    for (pPath = m_3DAudioPathList.GetHead(); pPath; pPath = pPath->GetNext())
    {
        dwCount++;
        // Get3DObject() returns whatever object this path is currently
        // rendering. If NULL, the path is inactive, so take it.
        if (!pPath->Get3DObject())
        {
            // Start the path running again and return it.
            pPath->GetAudioPath()->Activate(true);
            return pPath;
        }
    }
    // Okay, no luck. Have we reached the pool size limit?
    if (dwCount < m_dw3DPoolSize)
    {
        // No, so create a new AudioPath.
        IDirectMusicAudioPath *pIPath = NULL;
        m_pPerformance->CreateStandardAudioPath(
            DMUS_APATH_DYNAMIC_3D,   // Standard 3D AudioPath.
            16,                      // 16 pchannels should be enough.
            true,                    // Activate immediately.
            &pIPath);
        if (pIPath)
        {
            // Create a CAudioPath object to manage it.
            pPath = new CAudioPath(pIPath, L"Dynamic 3D");
            if (pPath)
            {
                // And stick it in the pool.
                m_3DAudioPathList.AddHead(pPath);
            }
            pIPath->Release();
        }
    }
    return pPath;
}
```

CAudio::Release3DPath()

Conversely, CAudio::Release3DPath() takes an AudioPath that is currently being used to render something and stops it, freeing it up to be used again by a different object (or thing).

```cpp
void CAudio::Release3DPath(CAudioPath *pPath)
{
    // Stop everything that is currently playing on this AudioPath.
    m_pPerformance->StopEx(pPath->GetAudioPath(), 0, 0);
    // Clear its object pointer.
    pPath->Set3DObject(NULL);
    // If we had more than we should (pool size was reduced), remove and delete.
    if (m_3DAudioPathList.GetCount() > m_dw3DPoolSize)
    {
        m_3DAudioPathList.Remove(pPath);
        // The CAudioPath destructor will take care of releasing
        // the IDirectMusicAudioPath.
        delete pPath;
    }
    // Otherwise, just deactivate so it won't eat resources.
    else
    {
        pPath->GetAudioPath()->Activate(false);
    }
}
```
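The pool policy in Alloc3DPath() and Release3DPath() (reuse a free path first, grow only while under the limit, and shrink lazily on release) can be modeled without DirectMusic. In this sketch, PoolPath and PathPool are hypothetical names; the object pointer plays the role of Get3DObject()/Set3DObject(), and the active flag stands in for IDirectMusicAudioPath::Activate().

```cpp
#include <cassert>
#include <cstddef>
#include <list>

// Hypothetical stand-in for CAudioPath: only the fields the pool cares about.
struct PoolPath
{
    void *object = nullptr;   // What this path renders; nullptr means free.
    bool active = true;       // Mirrors IDirectMusicAudioPath::Activate().
};

// Sketch of the 3D pool policy described for CAudio.
class PathPool
{
public:
    void SetPoolSize(std::size_t size) { m_limit = size; }

    PoolPath *Alloc()
    {
        for (PoolPath &path : m_paths)
        {
            if (!path.object)          // Free path: reactivate and hand it out.
            {
                path.active = true;
                return &path;
            }
        }
        if (m_paths.size() < m_limit)  // Room to grow: create a new path.
        {
            m_paths.emplace_front();
            return &m_paths.front();
        }
        return nullptr;                // Pool exhausted.
    }

    void Release(PoolPath *path)
    {
        path->object = nullptr;
        if (m_paths.size() > m_limit)  // Pool was shrunk: delete this path.
        {
            m_paths.remove_if([path](const PoolPath &p) { return &p == path; });
        }
        else
        {
            path->active = false;      // Keep it, but stop it using resources.
        }
    }

    std::size_t Size() const { return m_paths.size(); }

private:
    std::list<PoolPath> m_paths;       // std::list keeps element addresses stable.
    std::size_t m_limit = 0;
};
```

As in Mingle, the caller is responsible for setting the object pointer right after Alloc(); until it does, the same free path would be handed out again. Lowering the pool size doesn't destroy anything immediately; surplus paths are deleted one at a time as they are released.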

CAudio::GetLowestPriorityPath()

GetLowestPriorityPath() scans through the list of AudioPaths and finds the one with the lowest priority. This is typically done to find the AudioPath that would be the best candidate for swapping with an object that has come into view and might be a higher priority. In Mingle, GetLowestPriorityPath() is called by CThingManager when it is reprioritizing AudioPaths.

| Note | Keep in mind that the highest priority possible is the lowest number, or zero, not the other way around. |

```cpp
CAudioPath * CAudio::GetLowestPriorityPath()
{
    float flPriority = 0.0;   // Start with highest possible priority.
    CAudioPath *pBest = NULL; // Haven't found anything yet.
    CAudioPath *pPath = m_3DAudioPathList.GetHead();
    for (; pPath; pPath = pPath->GetNext())
    {
        // Does this have a priority that is lower than best so far?
        if (pPath->GetPriority() > flPriority)
        {
            // Yes, so stick with it from now on.
            flPriority = pPath->GetPriority();
            pBest = pPath;
        }
    }
    return pBest;
}
```
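Because the "lowest priority" path is the one with the largest number, the scan is really a search for a maximum. The sketch below expresses that with std::max_element, using a hypothetical PriorityPath record in place of CAudioPath. Note one behavioral difference: the original loop starts from 0.0 and uses >, so it never returns a path whose priority is exactly zero (a thing standing right on the listener) and returns NULL if every path is at zero; this version returns the largest element of any non-empty pool.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical minimal path record; only the priority matters here.
struct PriorityPath
{
    float priority;   // Squared distance from the listener: bigger = less important.
};

// Same idea as CAudio::GetLowestPriorityPath(): the "lowest priority" path is
// the one with the LARGEST priority number. Returns nullptr for an empty pool.
const PriorityPath *LowestPriority(const std::vector<PriorityPath> &paths)
{
    auto it = std::max_element(paths.begin(), paths.end(),
        [](const PriorityPath &a, const PriorityPath &b)
        { return a.priority < b.priority; });
    return it == paths.end() ? nullptr : &*it;
}
```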

CThing

CThing manages a sound-emitting object in Mingle. It stores its current position and velocity and updates these every frame. It also maintains a pointer to the 3D AudioPath that it renders through and uses that pointer to directly reposition the 3D coordinates of the AudioPath.

For dynamic routing, CThing also stores a priority number. The priority is simply the distance from the listener: the shorter the distance, the higher the priority (and the lower the number). For mathematical simplicity, the priority is calculated as the sum of the squares of the x and y coordinates, which is the squared distance from the listener at the center of the room. This avoids a square root and still ranks things in the same order.

The reassignment algorithm works in two stages. First, it marks the things that need to be reassigned and forces them to stop playing. Once all AudioPath reassignments have been made, it runs through the list and starts the new things running. So, there is state information that needs to be placed in CThing. The variables m_fAssigned and m_fWasRunning are used to track the state of CThing.

CThing uses script routines to start playback. There are five different types of things, as defined by the THING constants, and these help determine which script variables to set and which script routines to call.

```cpp
// All five types of thing:
#define THING_PERSON1 1   // Wandering snob
#define THING_PERSON2 2   // Wandering food moocher
#define THING_PERSON3 3   // Wandering mosquito person
#define THING_PERSON4 4   // Wandering laughing fool
#define THING_SHOUT   5   // Sudden shouts on mouse clicks

class CThing : public CMyNode
{
public:
    CThing(CAudio *pAudio, DWORD dwType, CScript *pScript);
    ~CThing();
    CThing *GetNext() { return (CThing *) CMyNode::GetNext(); };
    bool Start();
    bool Move();
    bool Stop();
    void CalcNewPosition(DWORD dwMils);
    D3DVECTOR *GetPosition() { return &m_vPosition; };
    void SetPosition(D3DVECTOR *pVector) { m_vPosition = *pVector; };
    void SetAudioPath(CAudioPath *pPath) { m_pPath = pPath; };
    CAudioPath *GetAudioPath() { return m_pPath; };
    void SetType(DWORD dwType) { m_dwType = dwType; };
    DWORD GetType() { return m_dwType; };
    float GetPriority() { return m_flPriority; };
    void MarkAssigned(CAudioPath *pPath);
    bool IsAssigned() { return m_fAssigned; };
    void ClearAssigned() { m_fAssigned = false; };
    bool WasRunning() { return m_fWasRunning; };
    bool NeedsPath() { return !m_pPath; };
    void StopRunning() { m_pPath = NULL; m_fWasRunning = true; };
private:
    CAudio *     m_pAudio;       // Keep pointer to CAudio for convenience.
    DWORD        m_dwType;       // Which THING_ type this is.
    bool         m_fAssigned;    // Was just assigned an AudioPath.
    bool         m_fWasRunning;  // Was unassigned at some point in past.
    float        m_flPriority;   // Distance from listener squared.
    D3DVECTOR    m_vPosition;    // Current position.
    D3DVECTOR    m_vVelocity;    // Direction it is currently going in.
    CAudioPath * m_pPath;        // AudioPath that manages the playback of this.
    CScript *    m_pScript;      // Script to invoke for starting sound.
};
```

CThing::Start()

CThing::Start() is called when a thing needs to start making sound. This could occur when the thing is first invoked, or it could occur when it has regained access to an AudioPath. First, Start() makes sure that it indeed has an AudioPath. Once it does, it uses its 3D position to set the 3D position on the AudioPath. It then sets the AudioPath as the default AudioPath in the Performance. Next, Start() calls a script routine to start playback. Although it is possible to hand the AudioPath directly to the script and let it use it explicitly for playback, it's a little easier to just set it as the default, and then there's less work for the script to do.

| Note | There's a more cynical reason for not passing the AudioPath as a parameter. When an AudioPath is stored as a variable in the script, it seems to cause an extra reference on the script, so the script never completely goes away. This is a bug in DX9 that hopefully will get fixed in the future. |

```cpp
bool CThing::Start()
{
    if (m_pAudio)
    {
        if (!m_pPath)
        {
            m_pPath = m_pAudio->Alloc3DPath();
        }
        if (m_pPath)
        {
            m_pPath->Set3DObject(this);
            m_pPath->Set3DPosition(&m_vPosition, &m_vVelocity, DS3D_IMMEDIATE);
            if (m_pScript)
            {
                // Just set the AudioPath as the default path. Then,
                // anything that gets played by
                // the script will automatically play on this path.
                m_pAudio->GetPerformance()->SetDefaultAudioPath(
                    m_pPath->GetAudioPath());
                // Tell the script which of the four people
                // (or the shouts) it should play.
                m_pScript->GetScript()->SetVariableNumber(
                    L"PersonType", m_dwType, NULL);
                // Then, call the StartTalking Routine, which
                // will start something playing on the AudioPath.
                m_pScript->GetScript()->CallRoutine(
                    L"StartTalking", NULL);
                // No longer not running.
                m_fWasRunning = false;
            }
            return true;
        }
    }
    return false;
}
```

CThing::Stop()

CThing::Stop() is called when a thing should stop making sound. Since it's going to be quiet, there's no need to hang on to an AudioPath. So, Stop() calls CAudio::Release3DPath(), which kills the sound and marks the AudioPath as free for the next taker.

```cpp
bool CThing::Stop()
{
    if (m_pAudio && m_pPath)
    {
        // Release3DPath() stops all audio on the path.
        m_pAudio->Release3DPath(m_pPath);
        // Don't point to the path any more, because it has moved on.
        m_pPath = NULL;
        return true;
    }
    return false;
}
```

CThing::CalcNewPosition()

CThing::CalcNewPosition() is called every frame to update the position of the thing. To do that, it needs to know how much time has elapsed, because velocity is the rate of change of position over time. Since CThing doesn't have its own internal clock, it receives a time-elapsed parameter, dwMils, which indicates how many milliseconds have elapsed since the last call. It can then calculate a new position from the velocity and the elapsed time. CalcNewPosition() also checks whether the thing has bumped into the edge of the box, in which case it reverses the velocity, causing the thing to bounce back. When done setting the position and velocity, CalcNewPosition() uses the new position to generate a fresh priority.

```cpp
void CThing::CalcNewPosition(DWORD dwMils)
{
    if (m_dwType < THING_SHOUT)
    {
        // Velocity is in meters per second, so calculate the distance
        // traveled in dwMils milliseconds.
        m_vPosition.x += ((m_vVelocity.x * dwMils) / 1000);
        if ((m_vPosition.x <= (float) -10.0) && (m_vVelocity.x < 0))
        {
            m_vVelocity.x = -m_vVelocity.x;
        }
        else if ((m_vPosition.x >= (float) 10.0) && (m_vVelocity.x > 0))
        {
            m_vVelocity.x = -m_vVelocity.x;
        }
        m_vPosition.y += ((m_vVelocity.y * dwMils) / 1000);
        if ((m_vPosition.y <= (float) -10.0) && (m_vVelocity.y < 0))
        {
            m_vVelocity.y = -m_vVelocity.y;
        }
        else if ((m_vPosition.y >= (float) 10.0) && (m_vVelocity.y > 0))
        {
            m_vVelocity.y = -m_vVelocity.y;
        }
        // We actually track the square of the distance,
        // since that's all we need for priority.
        m_flPriority = m_vPosition.x * m_vPosition.x +
                       m_vPosition.y * m_vPosition.y;
    }
    if (m_pPath)
    {
        m_pPath->SetPriority(m_flPriority);
    }
}
```
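The motion and bounce rule can be checked in isolation. This sketch reproduces the per-axis update from CalcNewPosition() for a room spanning -10 to 10 meters and returns the squared distance that Mingle uses as the priority. Vec3, StepAxis(), and Step() are hypothetical names for illustration.

```cpp
#include <cassert>

// Stand-in for D3DVECTOR.
struct Vec3 { float x, y, z; };

// Advance one axis by velocity * elapsed time and bounce off the walls at
// +/- limit, the same rule CThing::CalcNewPosition() applies to x and y.
static void StepAxis(float &pos, float &vel, unsigned long mils, float limit)
{
    pos += (vel * mils) / 1000.0f;        // Velocity is in meters per second.
    if ((pos <= -limit && vel < 0) || (pos >= limit && vel > 0))
        vel = -vel;                       // Hit a wall: reverse direction.
}

// Moves the point and returns the new priority: the squared distance from
// the listener at the origin (smaller = more important).
float Step(Vec3 &pos, Vec3 &vel, unsigned long mils)
{
    const float kHalfRoom = 10.0f;        // The room spans -10..10 meters.
    StepAxis(pos.x, vel.x, mils, kHalfRoom);
    StepAxis(pos.y, vel.y, mils, kHalfRoom);
    return pos.x * pos.x + pos.y * pos.y;
}
```

For example, a thing at x = 9.5 moving at 2 m/s travels 1 meter in 500 ms, lands at 10.5, and has its x velocity reversed, so the next step carries it back inside the room. As in the original, the position may overshoot the wall slightly for one frame before the bounce takes effect.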

CThing::Move()

Move() is called every frame. It simply transfers the thing's own 3D position to the 3D AudioPath. For efficiency, it uses the DS3D_DEFERRED flag, indicating that the new 3D position should be batched up with all the other requests. Since CThingManager moves all of the things at one time, it can make a single call to the listener (IDirectSound3DListener::CommitDeferredSettings()) to commit all the changes once it has moved all of the things.

```cpp
bool CThing::Move()
{
    // Are we currently active for sound?
    if (m_pPath && m_pAudio)
    {
        m_pPath->Set3DPosition(&m_vPosition, &m_vVelocity, DS3D_DEFERRED);
        return true;
    }
    return false;
}
```

CThingManager

CThingManager maintains the set of CThings that are milling around the room. In addition to managing the list of CThings, it has routines to create CThings, position them, and keep the closest CThings mapped to active AudioPaths.

```cpp
class CThingManager : public CMyList
{
public:
    CThingManager() { m_pAudio = NULL; m_pScript = NULL; };
    void Init(CAudio *pAudio, CScript *pScript, DWORD dwLimit);
    CThing * GetHead() { return (CThing *) CMyList::GetHead(); };
    CThing *RemoveHead() { return (CThing *) CMyList::RemoveHead(); };
    void Clear();
    CThing * CreateThing(DWORD dwType);
    CThing * GetTypeThing(DWORD dwType);   // Access first CThing of requested type.
    DWORD GetTypeCount(DWORD dwType);      // How many CThings of requested type?
    void CalcNewPositions(DWORD dwMils);   // Calculate new positions for all.
    void MoveThings();                     // Move CThings.
    void ReassignAudioPaths();             // Maintain optimal AudioPath pairings.
    void SetAudioPathLimit(DWORD dwLimit);
    void EnforceAudioPathLimit();
private:
    void StartAssignedThings();
    CAudio *  m_pAudio;                    // Keep pointer to CAudio for convenience.
    CScript * m_pScript;                   // Script
    DWORD     m_dwAudioPathLimit;          // Max number of AudioPaths.
};
```

CThingManager::CreateThing()

CreateThing() allocates a CThing object of the requested type, installs the current CAudio and CScript in it, and adds it to the list. CreateThing() doesn't actually start the CThing playing, nor does it connect the CThing to an AudioPath.

```cpp
CThing *CThingManager::CreateThing(DWORD dwType)
{
    CThing *pThing = new CThing(m_pAudio, dwType, m_pScript);
    if (pThing)
    {
        AddHead(pThing);
    }
    return pThing;
}
```

CThingManager::ReassignAudioPaths()

ReassignAudioPaths() is the heart of the dynamic resource system. It makes sure that the highest priority things are matched up with AudioPaths. To do so, it scans through the list of things, looking for each thing that has no AudioPath but has attained a higher priority than the lowest priority thing currently assigned to an AudioPath. When it finds such a match, it steals the AudioPath from the lower priority thing and reassigns the AudioPath to the higher priority thing. At this point, it stops the old thing from making any noise by killing all sounds on the AudioPath, but it doesn't immediately start the new sound playing. Instead, it sets a flag on the new thing, indicating that it has just been assigned an AudioPath, and waits until the next time around to start it. This is done to give the currently playing sound a little time to clear out before the new one starts.

| Note | Under some circumstances, the approach used here of delaying the sound's start until the next frame is unacceptably slow. In those cases, there is an alternate approach. Deactivate the AudioPath by calling IDirectMusicAudioPath::Activate(false), and then reactivate it by passing true to the same call. This causes the AudioPath to be flushed of all sound. When this is done, you can start the new sound immediately. However, there's a greater chance of getting a click in the audio because the old sound was stopped so suddenly. |

```cpp
void CThingManager::ReassignAudioPaths()
{
    // First, start the things that were set up by the
    // previous call to ReassignAudioPaths().
    StartAssignedThings();
    // Now, do a new round of swapping.
    // Start by getting the lowest priority AudioPath.
    // Use it to do the first swap.
    CAudioPath *pLowestPath = m_pAudio->GetLowestPriorityPath();
    CThing *pThing = GetHead();
    for (; pThing && pLowestPath; pThing = pThing->GetNext())
    {
        if (pThing->NeedsPath() &&
            (pThing->GetPriority() < pLowestPath->GetPriority()))
        {
            CThing *pOtherThing = (CThing *) pLowestPath->Get3DObject();
            if (pOtherThing)
            {
                pOtherThing->StopRunning();
            }
            // Stop the playback of all Segments on the path
            // in case anything is still making sound.
            m_pAudio->GetPerformance()->StopEx(pLowestPath->GetAudioPath(), 0, 0);
            // Now, set the path to point to this thing.
            pLowestPath->Set3DObject(pThing);
            // Give it the new priority.
            pLowestPath->SetPriority(pThing->GetPriority());
            // Mark this thing to start playing on the path next time around.
            // This gives a slight delay to allow any sounds in
            // the old path to clear out.
            pThing->MarkAssigned(pLowestPath);
            // Okay, done. Now, get the new lowest priority path
            // and use that to continue swapping...
            pLowestPath = m_pAudio->GetLowestPriorityPath();
        }
    }
}
```

CThingManager::StartAssignedThings()

After calling ReassignAudioPaths(), there may be one or more things that have been assigned a new AudioPath but have not started making any noise. This is intentional; it gives the AudioPath a chance to drain whatever sound was in it before the newly assigned thing starts making noise. So, StartAssignedThings() is called at the start of the next pass, and its job is simply to make each newly assigned thing start making noise.

```cpp
void CThingManager::StartAssignedThings()
{
    // Scan through all of the CThings...
    CThing *pThing = GetHead();
    for (; pThing; pThing = pThing->GetNext())
    {
        // If this thing was assigned a new AudioPath, then start it.
        if (pThing->IsAssigned())
        {
            // Get the newly assigned AudioPath.
            CAudioPath *pPath = pThing->GetAudioPath();
            if (pPath)
            {
                // Was this previously making sound?
                // It could have been an AudioPath that was assigned
                // but was not played yet, in which case we do nothing.
                if (pThing->WasRunning())
                {
                    pThing->Start();
                }
            }
            // Clear flag so we don't start it again.
            pThing->ClearAssigned();
        }
    }
}
```
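The two-pass swap in ReassignAudioPaths() and StartAssignedThings() can be simulated with plain data. In this simplified, hypothetical model, each Slot stands in for a pooled 3D AudioPath and each Thing for a CThing. Two assumptions differ from Mingle: empty slots start at FLT_MAX so they are filled first (Mingle hands out free paths through Alloc3DPath() instead), and StartAssigned() starts any thing that still holds its slot rather than consulting WasRunning().

```cpp
#include <cassert>
#include <cfloat>
#include <vector>

struct Thing
{
    float priority = 0.0f;  // Squared distance; smaller = more important.
    int slot = -1;          // Index of the owned slot, or -1 if none.
    bool assigned = false;  // Won a slot this pass; start it next pass.
    bool playing = false;
};

struct Slot
{
    int owner = -1;             // Index of the owning Thing, or -1.
    float priority = FLT_MAX;   // Owner's priority; empty slots look "worst".
};

// Start things that were assigned a slot on the previous pass.
void StartAssigned(std::vector<Thing> &things)
{
    for (Thing &t : things)
    {
        if (t.assigned)
        {
            if (t.slot >= 0) t.playing = true;  // Slot may have been stolen.
            t.assigned = false;
        }
    }
}

// Index of the slot with the largest priority number (lowest priority).
int LowestSlot(const std::vector<Slot> &slots)
{
    int best = -1;
    for (int i = 0; i < (int)slots.size(); ++i)
        if (best < 0 || slots[i].priority > slots[best].priority)
            best = i;
    return best;
}

// One pass: start last pass's winners, then steal slots for closer things.
void Reassign(std::vector<Thing> &things, std::vector<Slot> &slots)
{
    StartAssigned(things);
    int low = LowestSlot(slots);
    for (int i = 0; i < (int)things.size() && low >= 0; ++i)
    {
        Thing &t = things[i];
        if (t.slot < 0 && t.priority < slots[low].priority)
        {
            Slot &s = slots[low];
            if (s.owner >= 0)
            {
                things[s.owner].slot = -1;      // Old owner goes quiet now.
                things[s.owner].playing = false;
            }
            s.owner = i;
            s.priority = t.priority;
            t.slot = low;
            t.assigned = true;                  // Deferred start.
            low = LowestSlot(slots);
        }
    }
}
```

The key property is visible in the model: a thing that wins a slot is not marked as playing until the following pass, which is the one-frame delay Mingle uses to let the previous sound drain. A caller is expected to keep each slot's priority in sync with its owner's, as CThing::CalcNewPosition() does through SetPriority().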

CThingManager::EnforceAudioPathLimit()

EnforceAudioPathLimit() keeps the number of AudioPaths in use in line with the limit set in SetAudioPathLimit(). If the limit went down, it kills off low-priority AudioPaths until the count is back under the limit. If the limit went up, it makes sure that any things waiting to play are given AudioPaths and turned on.

```cpp
void CThingManager::EnforceAudioPathLimit()
{
    // First, count how many AudioPaths are currently assigned
    // to things. This lets us know how many are currently in use.
    DWORD dwCount = 0;
    CThing *pThing = GetHead();
    for (; pThing; pThing = pThing->GetNext())
    {
        if (pThing->GetAudioPath())
        {
            dwCount++;
        }
    }
    // Do we have more AudioPaths in use than the limit? If so,
    // we need to remove some of them.
    if (dwCount > m_dwAudioPathLimit)
    {
        // Make sure we don't have any things or AudioPaths
        // in a halfway state.
        StartAssignedThings();
        dwCount -= m_dwAudioPathLimit;
        // dwCount now holds the number of AudioPaths that
        // we can no longer use.
        while (dwCount)
        {
            // Always knock off the lowest priority AudioPaths.
            CAudioPath *pLowestPath = m_pAudio->GetLowestPriorityPath();
            if (pLowestPath)
            {
                // Find the thing associated with the AudioPath.
                pThing = (CThing *) pLowestPath->Get3DObject();
                if (pThing)
                {
                    // Disconnect the thing.
                    pThing->SetAudioPath(NULL);
                }
                // Release the AudioPath. This stops all playback
                // on the AudioPath. Then, because the number of
                // AudioPaths is above the limit, it removes the AudioPath
                // from the pool.
                m_pAudio->Release3DPath(pLowestPath);
            }
            dwCount--;
        }
    }
    // On the other hand, if we have fewer AudioPaths in use than
    // we are allowed, see if we can turn some more on.
    else if (dwCount < m_dwAudioPathLimit)
    {
        // Do we have some turned off that could be turned on?
        if (GetCount() > dwCount)
        {
            // If the total is under the limit,
            // just allocate enough for all things.
            if (GetCount() < m_dwAudioPathLimit)
            {
                dwCount = GetCount() - dwCount;
            }
            // Otherwise, allocate enough to meet the limit.
            else
            {
                dwCount = m_dwAudioPathLimit - dwCount;
            }
            // Now, dwCount holds the number of AudioPaths to turn on.
            while (dwCount)
            {
                // Get the highest priority thing that is currently off.
                pThing = GetHead();
                float flPriority = FLT_MAX;
                CThing *pHighest = NULL;
                for (; pThing; pThing = pThing->GetNext())
                {
                    // No AudioPath and higher priority?
                    if (!pThing->GetAudioPath() &&
                        (pThing->GetPriority() < flPriority))
                    {
                        flPriority = pThing->GetPriority();
                        pHighest = pThing;
                    }
                }
                if (pHighest)
                {
                    // We found a high priority thing.
                    // Start it playing. That will cause it to
                    // allocate an AudioPath from the pool.
                    pHighest->Start();
                }
                dwCount--;
            }
        }
    }
}
```
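The arithmetic at the heart of EnforceAudioPathLimit() is easy to lose among the loops. The hypothetical helper below, PathAdjustment(), pulls it out as a pure function. A negative result is the number of AudioPaths to release, and a positive result is the number of waiting things to start. It mirrors the branches above but is only an illustration, not part of Mingle.

```cpp
// Hypothetical helper mirroring EnforceAudioPathLimit()'s counting.
//   inUse - AudioPaths currently assigned to things
//   total - number of things at the party (GetCount())
//   limit - maximum number of 3D AudioPaths
// Returns < 0: paths to release; > 0: things to start; 0: nothing to do.
long PathAdjustment(unsigned long inUse, unsigned long total,
                    unsigned long limit) {
    if (inUse > limit) {
        // Too many: knock off the lowest priority paths.
        return -static_cast<long>(inUse - limit);
    }
    if (inUse < limit && total > inUse) {
        // Room to spare: start every waiting thing, but never pass the limit.
        unsigned long target = (total < limit) ? total : limit;
        return static_cast<long>(target - inUse);
    }
    return 0;
}
```

For example, with a limit of 8, ten paths in use means releasing two. Three paths in use among twelve things means starting five more.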

CThingManager::CalcNewPositions()

CalcNewPositions() scans through the list of things and has each calculate its position. This should be called prior to MoveThings(), which sets the new 3D positions on the AudioPaths, and ReassignAudioPaths(), which uses the new positions to ensure that the closest things are assigned to AudioPaths. CalcNewPositions() receives the time elapsed since the last call as its only parameter. This is used to calculate the velocity for each thing.

```cpp
void CThingManager::CalcNewPositions(DWORD dwMils)
{
    CThing *pThing = GetHead();
    for (; pThing; pThing = pThing->GetNext())
    {
        pThing->CalcNewPosition(dwMils);
    }
}
```
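CThing::CalcNewPosition() isn't listed here, so the following is only a sketch. It assumes each thing derives its velocity from how far it moved during dwMils. Vec3 and VelocityFromPositions() are hypothetical names. The result is in distance units per second, the unit DirectSound uses for 3D velocities (and therefore for Doppler).

```cpp
// Hypothetical 3D vector type (DirectSound itself uses D3DVECTOR).
struct Vec3 { float x, y, z; };

// Velocity in distance units per second, given the old and new
// positions and the elapsed time in milliseconds (like dwMils).
Vec3 VelocityFromPositions(const Vec3 &oldPos, const Vec3 &newPos,
                           unsigned long dwMils) {
    if (dwMils == 0) {
        return Vec3{0.0f, 0.0f, 0.0f};   // no elapsed time, no velocity
    }
    const float seconds = dwMils / 1000.0f;
    return Vec3{(newPos.x - oldPos.x) / seconds,
                (newPos.y - oldPos.y) / seconds,
                (newPos.z - oldPos.z) / seconds};
}
```

For instance, a thing that moves 0.3 units along x during Mingle's 30 ms timer period is traveling at about 10 units per second.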

CThingManager::SetAudioPathLimit()

SetAudioPathLimit() is called whenever the maximum number of 3D AudioPaths changes. In Mingle, this occurs when the user changes the number of 3D AudioPaths with the edit box. First, SetAudioPathLimit() sets the internal parameter, m_dwAudioPathLimit, to the new value. Then, it tells CAudio to do the same. Finally, it calls EnforceAudioPathLimit(), which does the hard work of culling or adding and activating 3D AudioPaths.

```cpp
void CThingManager::SetAudioPathLimit(DWORD dwLimit)
{
    m_dwAudioPathLimit = dwLimit;
    m_pAudio->Set3DPoolSize(dwLimit);
    EnforceAudioPathLimit();
}
```

CThingManager::MoveThings()

MoveThings() scans through the list of things and has each one assign its new position to the AudioPath. Once all things have been moved, MoveThings() calls the Listener's CommitDeferredSettings() method to cause the new 3D positions to take hold.

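```cpp
void CThingManager::MoveThings()
{
    CThing *pThing = GetHead();
    for (; pThing; pThing = pThing->GetNext())
    {
        pThing->Move();
    }
    // Get3DListener() returns an AddRef()'d interface (or NULL),
    // so check it and release it when done.
    IDirectSound3DListener8 *pListener = m_pAudio->Get3DListener();
    if (pListener)
    {
        pListener->CommitDeferredSettings();
        pListener->Release();
    }
}
```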
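CommitDeferredSettings() only matters if the 3D positions were set with the DS3D_DEFERRED flag. That is presumably what CThing::Move() does, although its code isn't shown. The toy class below, ToyDeferred3D, is a hypothetical stand-in and not DirectSound. It models the contract: deferred changes are queued and take effect together at commit time, so the listener never hears a half-updated scene.

```cpp
#include <map>
#include <string>

// Toy model (not DirectSound) of deferred 3D settings: a deferred change
// is queued and only takes effect when CommitDeferredSettings() is called.
class ToyDeferred3D {
public:
    void SetX(const std::string &path, float x, bool deferred) {
        if (deferred) {
            pending_[path] = x;   // like passing DS3D_DEFERRED
        } else {
            active_[path] = x;    // like passing DS3D_IMMEDIATE
        }
    }
    void CommitDeferredSettings() {
        for (const auto &change : pending_) {
            active_[change.first] = change.second;
        }
        pending_.clear();
    }
    // Position currently heard for a path (0 if never set).
    float ActiveX(const std::string &path) const {
        auto it = active_.find(path);
        return it == active_.end() ? 0.0f : it->second;
    }
private:
    std::map<std::string, float> pending_;
    std::map<std::string, float> active_;
};
```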

Mingle Implementation

The Mingle implementation of CThingManager is quite simple. You can look at the source code in MingleDlg.cpp. Initialization occurs in CMingleDlg::OnInitDialog(). In it, we load a script, which has routines that will be called by things when they start and stop playback. m_pEffects points to the CAudio instance that manages sound effects.


```cpp
m_pScript = m_pEffects->LoadScript(L"..\\Media\\Mingle.spt");
```

Next, set the maximum number of 3D AudioPaths to eight. This is intentionally a bit low so that it's easier to experiment with and hear the results.

```cpp
// Set the max number of 3D AudioPaths to 8.
m_dwMax3DPaths = m_pEffects->Set3DPoolSize(8);
// Initialize the thing manager with the CAudio for effects,
// the script for playing sounds, and the 3D AudioPath limit.
m_ThingManager.Init(m_pEffects,m_pScript,m_dwMax3DPaths);
```

That's all the initialization needed.

When one of the Talk buttons is clicked, a CThing object is created and told to start making noise. The code is simple:

```cpp
// Create a new CThing of the requested type.
CThing *pThing = m_ThingManager.CreateThing(dwType);
if (pThing)
{
    // Successful, so start making sound.
    pThing->Start();
}
```

Conversely, when one of the Shut Up! buttons is clicked, a CThing object must be removed. This involves stopping the thing from playing, removing it, deleting it, and then checking to see if a lower priority thing is waiting in the wings to play.

```cpp
// Find an instance of the requested type of thing.
CThing *pThing = m_ThingManager.GetTypeThing(dwType);
if (pThing)
{
    // Stop it. This will stop all sound on the
    // AudioPath and release it.
    pThing->Stop();
    // Kill the thing!
    m_ThingManager.Remove(pThing);
    delete pThing;
    // There might be another thing that now has the
    // priority to be heard, so check and see.
    m_ThingManager.EnforceAudioPathLimit();
    UpdateCounts();
}
```

The dynamic scheduling occurs at regular intervals. Because Mingle is a windowed app, it uses the Windows timer. In a non-windowed application (like a game), this would more likely happen once per frame. The timer code executes a series of operations:

- Calculate new positions for all CThings. This step assigns new x, y, and z coordinates as well as new priorities.
- Draw the things.
- Use the priorities to make sure that the highest priority things are being heard.
- Update the 3D positions of the AudioPaths that have things assigned to them.

```cpp
void CMingleDlg::OnTimer(UINT nIDEvent)
{
    // First, update the positions of all things.
    // Indicate that this timer wakes up every 30 milliseconds.
    m_ThingManager.CalcNewPositions(30);
    // Draw them in their new positions.
    CClientDC dc(this);
    DrawThings(&dc);
    // Now, make sure that all the highest priority
    // things are being heard.
    m_ThingManager.ReassignAudioPaths();
    // Finally, update their 3D positions.
    m_ThingManager.MoveThings();
    CDialog::OnTimer(nIDEvent);
}
```

If the 3D AudioPath limit changes at any point during playback, one call to SetAudioPathLimit() straightens things out.

```cpp
m_ThingManager.SetAudioPathLimit(m_dwMax3DPaths);
```
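In a game, the same sequence would typically run once per frame, with the measured frame time replacing Mingle's fixed 30 ms. The sketch below assumes a hypothetical RecordingManager that exposes the same three CThingManager methods and simply logs the calls. UpdateAudioFrame() is likewise illustrative and not part of Mingle.

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for CThingManager that records the call order.
struct RecordingManager {
    std::vector<std::string> calls;
    unsigned long lastMils = 0;
    void CalcNewPositions(unsigned long dwMils) {
        lastMils = dwMils;
        calls.push_back("CalcNewPositions");
    }
    void ReassignAudioPaths() { calls.push_back("ReassignAudioPaths"); }
    void MoveThings() { calls.push_back("MoveThings"); }
};

// One frame of a game loop: pass the measured elapsed time instead of
// a fixed timer period, then run the steps in the same order as OnTimer().
template <typename Manager>
void UpdateAudioFrame(Manager &mgr, unsigned long frameStartMs,
                      unsigned long &prevFrameMs) {
    unsigned long elapsed = frameStartMs - prevFrameMs;
    prevFrameMs = frameStartMs;
    mgr.CalcNewPositions(elapsed);
    // (Mingle draws the things at this point.)
    mgr.ReassignAudioPaths();
    mgr.MoveThings();
}
```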

That concludes dynamic 3D resource management!

Low-Latency Sound Effects

We already talked in some depth about how to drop the latency earlier in this chapter, and the code sample for doing so was taken from Mingle. Mingle demonstrates low-latency sound effects with the various complaints heard when you click on the party with your mouse. First, create a CThing that is dedicated just to making these noises whenever the mouse is clicked.

```cpp
// Create the thing that makes a sound every time the mouse clicks.
m_pMouseThing = m_ThingManager.CreateThing(THING_SHOUT);
if (m_pMouseThing)
{
    // Allocate an AudioPath up front and hang on to it because
    // we always want it ready to run the moment the mouse click occurs.
    CAudioPath *pPath = m_pEffects->Alloc3DPath();
    // Guard against an empty pool before wiring up the path.
    if (pPath)
    {
        m_pMouseThing->SetAudioPath(pPath);
        pPath->Set3DObject((void *)m_pMouseThing);
    }
}
```

When there is a mouse click, translate the mouse coordinates into 3D coordinates and move m_pMouseThing to that position. Then, immediately have it play a sound.

```cpp
// Set the position of the AudioPath to the new position
// of the mouse.
m_pMouseThing->SetPosition(&Vector);
// Start a sound playing immediately.
m_pMouseThing->Start();
```
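The text doesn't show how the click becomes 3D coordinates. Here is a minimal sketch under these assumptions:

- The plus marker (the Listener) sits at the origin, in the center of a square box boxSize pixels wide.
- That box represents a room roomSize units across.
- The Listener keeps DirectSound's default orientation, facing +z.

MouseToRoom() and RoomPoint are hypothetical names. Mingle's actual scale may differ.

```cpp
// Hypothetical 3D point in room units.
struct RoomPoint { double x, y, z; };

// Maps a click inside the square room box (client pixels) to room
// coordinates with the Listener at the center. Screen y grows downward,
// so the top of the box maps to +z (in front of a default Listener).
RoomPoint MouseToRoom(int mouseX, int mouseY,
                      int boxLeft, int boxTop, int boxSize,
                      double roomSize) {
    double half = boxSize / 2.0;
    double dx = (mouseX - boxLeft) - half;   // pixels right of center
    double dy = (mouseY - boxTop) - half;    // pixels below center
    return RoomPoint{dx * roomSize / boxSize,
                     0.0,                    // everyone stands on the floor
                     -dy * roomSize / boxSize};
}
```

With a 200-pixel box at (10, 10) standing for a 20-unit room, a click on the box's top-left corner lands at x = -10, z = 10, which is ahead of and to the left of the Listener.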

Background Ambience

It's not enough to have a handful of individuals wandering around us mumbling, whining, munching, or laughing. To fill in the gaps and make the party feel a lot larger, we'd like to play some background ambience. The ambience track is a short Segment with a set of stereo wave recordings set up to play as random variations. The Segment is actually played by the script's StartParty routine. Mingle itself therefore needs no special code for background ambience beyond creating a stereo dynamic AudioPath as the initial default AudioPath, which is where StartParty plays the Segment. The Segment is set to loop forever, so nothing more needs to be done.

Avoiding Truncated Waves

When a wave is played on an AudioPath that is pitch shifted down, the duration of the wave can be prematurely truncated. This occurs because the wave in the Wave Track has a specific duration assigned to it. The intention is honorable; you can specify just a portion of a wave to play by identifying the start time and duration. To work well with the scheduling of other sounds in music or clock time, the duration is also defined in music or clock time. Therein lies a problem. If you really want the wave to play through in its entirety, there's no way of specifying the duration in samples. This normally isn't a problem, but if the wave gets pitch shifted down during playback (which happens, for example, with Doppler shift), the wave sample duration becomes longer than the duration imposed on it and it stops prematurely.

The simplest solution is to write some code that forces the wave durations to be long enough to cover the most dramatic pitch bend you would throw at them. This is actually easy to do, and it is what Mingle does. Through DirectMusic's Tool mechanism, you can write a filter that captures any event type on playback and alters it as needed. So, we write a Tool that intercepts the DMUS_WAVE_PMSG PMsg and multiplies its rtDuration field by a reasonable number (say, 10). We place the Tool in the Performance, so it intercepts everything played on all Segments.
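Why is 10 enough? Shifting pitch by c cents scales playback speed by 2^(c/1200), so the wave's playing time scales by 2^(-c/1200). One octave down (-1200 cents) doubles it. The two helpers below (hypothetical names) compute that stretch and, conversely, the deepest downward shift a given multiplier still covers.

```cpp
#include <cmath>

// Shifting pitch by `cents` (negative = down) scales playback speed by
// 2^(cents/1200), so the playing time scales by 2^(-cents/1200).
double DurationStretch(double cents) {
    return std::pow(2.0, -cents / 1200.0);
}

// Deepest downward shift, in (negative) cents, that a duration
// multiplier still covers: solve 2^(-c/1200) == multiplier for c.
double DeepestCoveredShift(double multiplier) {
    return -1200.0 * std::log2(multiplier);
}
```

For a multiplier of 10, the covered shift works out to about -3986 cents, or roughly 3.3 octaves down. That is far beyond what Doppler shift normally produces.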

Although Tools can be written as COM objects that are created via CoCreateInstance() and authored into Segments or AudioPaths, that level of sophistication isn't necessary here. Instead, we declare a class, CWaveTool, that supports the IDirectMusicTool interface, which is actually very simple to write to. The heart of IDirectMusicTool is one method, ProcessPMsg(), which takes a pmsg and works its magic on it. In the case of CWaveTool, that magic is simply increasing the duration field in the wave pmsg. IDirectMusicTool has a handful of other methods that set up which pmsg types the Tool handles and when each pmsg should be delivered to it: immediately, at the time stamp on the pmsg, or a little ahead of that.

Here are all the IDirectMusicTool methods, as used by CWaveTool:

```cpp
STDMETHODIMP CWaveTool::Init(IDirectMusicGraph* pGraph)
{
    // Don't need to do anything at initialization.
    return S_OK;
}

STDMETHODIMP CWaveTool::GetMsgDeliveryType(DWORD* pdwDeliveryType)
{
    // This tool should process immediately and not wait for the time stamp.
    *pdwDeliveryType = DMUS_PMSGF_TOOL_IMMEDIATE;
    return S_OK;
}

STDMETHODIMP CWaveTool::GetMediaTypeArraySize(DWORD* pdwNumElements)
{
    // We have exactly one media type to process: Waves.
    *pdwNumElements = 1;
    return S_OK;
}

STDMETHODIMP CWaveTool::GetMediaTypes(DWORD** padwMediaTypes, DWORD dwNumElements)
{
    // Return the one type we handle.
    **padwMediaTypes = DMUS_PMSGT_WAVE;
    return S_OK;
}

STDMETHODIMP CWaveTool::ProcessPMsg(IDirectMusicPerformance* pPerf, DMUS_PMSG* pPMSG)
{
    // Just checking to be safe...
    if (NULL == pPMSG->pGraph)
    {
        return DMUS_S_FREE;
    }
    // Point to the next Tool after this one.
    // Otherwise, it will just loop back here forever
    // and lock up.
    if (FAILED(pPMSG->pGraph->StampPMsg(pPMSG)))
    {
        return DMUS_S_FREE;
    }
    // This should always be a wave since we only allow
    // that media type, but check anyway to be certain.
    if (pPMSG->dwType == DMUS_PMSGT_WAVE)
    {
        // Okay, now the miracle code.
        // Multiply the duration by ten to reach beyond the
        // wildest pitch bend.
        DMUS_WAVE_PMSG *pWave = (DMUS_WAVE_PMSG *) pPMSG;
        pWave->rtDuration *= 10;
    }
    return DMUS_S_REQUEUE;
}

STDMETHODIMP CWaveTool::Flush(IDirectMusicPerformance* pPerf, DMUS_PMSG* pPMSG, REFERENCE_TIME rtTime)
{
    // Nothing to do here.
    return S_OK;
}
```

Once CWaveTool compiles cleanly, we still need to place it in the Performance. Doing so is straightforward. We only need to create one CWaveTool and insert it directly into the Performance at initialization time, as the following code does. The code uses an AudioPath to access the Performance Tool Graph. Using an AudioPath is actually simpler than calling the GetGraph() method on the Performance, because GetGraph() returns NULL if there is no Graph, and DirectMusic doesn't create one unless it is needed, for efficiency reasons. GetObjectInPath() goes the extra step of creating the Graph if it does not already exist.

```cpp
// Use the default AudioPath to access the tool graph.
IDirectMusicAudioPath *pPath;
if (SUCCEEDED(m_pPerformance->GetDefaultAudioPath(&pPath)))
{
    IDirectMusicGraph *pGraph = NULL;
    pPath->GetObjectInPath(0,
        DMUS_PATH_PERFORMANCE_GRAPH, // The Performance Tool Graph.
        0,
        GUID_All_Objects, 0,         // Only one object type.
        IID_IDirectMusicGraph,       // The Graph interface.
        (void **)&pGraph);
    if (pGraph)
    {
        // Create a CWaveTool.
        CWaveTool *pWaveTool = new CWaveTool;
        if (pWaveTool)
        {
            // Insert it in the Graph. It will process all wave pmsgs that
            // are played on this Performance.
            pGraph->InsertTool(static_cast<IDirectMusicTool*>(pWaveTool), NULL, 0, 0);
        }
        pGraph->Release();
    }
    pPath->Release();
}
```

That's it. The Tool stays in the Performance until the Performance is shut down and released. At that point, the references on the Tool drop to zero, and its destructor frees the memory.
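Whether the Tool really is freed when the Performance lets go depends on COM reference counting. InsertTool() AddRef()s the Tool, and the count only reaches zero once every holder has released it. The toy classes below are hypothetical and not DirectMusic. They assume the common COM convention that a newly constructed object starts with one reference for its creator. CWaveTool's constructor isn't shown, so check which convention it follows. Under this convention, the creator must call Release() once the Graph holds the Tool.

```cpp
// Toy reference-counted object (not COM itself) following the usual
// COM convention: a new object starts with one reference for its creator.
class ToyTool {
public:
    static int liveCount;          // how many ToyTools currently exist
    ToyTool() { ++liveCount; }
    unsigned long AddRef() { return ++refs_; }
    unsigned long Release() {
        unsigned long remaining = --refs_;
        if (remaining == 0) {
            delete this;           // last reference gone: free the memory
        }
        return remaining;
    }
private:
    ~ToyTool() { --liveCount; }    // only Release() may destroy it
    unsigned long refs_ = 1;
};
int ToyTool::liveCount = 0;

// Toy "graph" that AddRef()s the tool it holds and releases it when
// the graph itself goes away, like a Performance shutting down.
class ToyGraph {
public:
    ToyGraph() = default;
    ToyGraph(const ToyGraph &) = delete;
    ToyGraph &operator=(const ToyGraph &) = delete;
    void InsertTool(ToyTool *tool) {
        tool->AddRef();
        tool_ = tool;
    }
    ~ToyGraph() {
        if (tool_) tool_->Release();
    }
private:
    ToyTool *tool_ = nullptr;
};
```

Without the creator's Release() call, the object would still be alive after the graph is destroyed; in other words, it would leak.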

| Note | This technique has only one downside: If you actually do want to set a duration shorter than the wave's length, it undoes your work. In that scenario, the best thing to do is be more specific about where you apply it. You can control which pchannels the Tool modifies, or you can place it only in the Segments or AudioPaths where you want it to work, rather than in the entire Performance. |
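The pchannel option from the note can be made concrete with a toy model (not DirectMusic itself) of a wave-stretching Tool that filters both by media type and by pchannel. An empty pchannel set stands for "all pchannels". This mirrors the real InsertTool(), which places a Tool on all pchannels when its pchannel array is NULL. ToyMsg, MsgType, and ToyWaveTool are hypothetical.

```cpp
#include <cstdint>
#include <set>

// Toy pmsg types and message (hypothetical; not DirectMusic's structs).
enum class MsgType { Note, Wave };
struct ToyMsg {
    MsgType type;
    std::uint32_t pchannel;
    std::int64_t duration;
};

// Toy wave-stretching Tool that only touches waves on chosen pchannels.
// An empty set means "all pchannels", like a NULL array in InsertTool().
struct ToyWaveTool {
    std::set<std::uint32_t> pchannels;
    std::int64_t multiplier = 10;

    bool Handles(const ToyMsg &msg) const {
        return msg.type == MsgType::Wave &&
               (pchannels.empty() || pchannels.count(msg.pchannel) > 0);
    }
    void Process(ToyMsg &msg) const {
        if (Handles(msg)) {
            msg.duration *= multiplier;   // stretch only what we handle
        }
    }
};
```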

Manipulating the Listener Properties

Working with the Listener is critical for any 3D sound effects work, so CAudio has a method, Get3DListener(), that retrieves the DirectSound IDirectSound3DListener8 interface. This is used for setting the position of the Listener as well as adjusting global 3D sound parameters.

| Note | Get3DListener returns an AddRef()'d instance of the Listener, so it's important to remember to Release() it when done. |

```cpp
IDirectSound3DListener8 *CAudio::Get3DListener()
{
    IDirectSound3DListener8 *pListener = NULL;
    IDirectMusicAudioPath *pPath;
    // Any AudioPath will do because the listener hangs off the primary buffer.
    if (m_pPerformance && SUCCEEDED(m_pPerformance->GetDefaultAudioPath(&pPath)))
    {
        pPath->GetObjectInPath(0,
            DMUS_PATH_PRIMARY_BUFFER, 0,   // Access via the primary Buffer.
            GUID_All_Objects, 0,
            IID_IDirectSound3DListener8,   // Query for listener interface.
            (void **)&pListener);
        pPath->Release();
    }
    return pListener;
}
```

To make the Listener easier to use, the Mingle dialog code keeps a copy of the Listener interface and a DS3DLISTENER data structure that can be filled with all of the Listener properties and set at once.

```cpp
DS3DLISTENER m_ListenerData;           // Store current Listener params.
IDirectSound3DListener8 *m_pListener;  // Use to update the Listener.
```

In the initialization of the Mingle window, the code gets access to the Listener and then reads the initial parameters into m_ListenerData. From then on, the window code can tweak any parameter and then bulk-update the Listener with the changes.

```cpp
m_pListener = m_pEffects->Get3DListener();
m_ListenerData.dwSize = sizeof(m_ListenerData);
if (m_pListener)
{
    m_pListener->GetAllParameters(&m_ListenerData);
}
```

Later, when one or more Listener parameters need updating, only the changed parameters are altered, and then the whole structure is submitted to the Listener. For example, the following code updates the Rolloff Factor in response to dragging a slider.

```cpp
m_ListenerData.flRolloffFactor = (float) m_ctRollOff.GetPos() / 10;
if (m_pListener)
{
    m_pListener->SetAllParameters(&m_ListenerData, DS3D_IMMEDIATE);
}
```
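The Rolloff Factor has a documented valid range, DS3D_MINROLLOFFFACTOR (0.0) to DS3D_MAXROLLOFFFACTOR (10.0) in dsound.h. It's therefore worth clamping slider input before it reaches SetAllParameters(). This sketch assumes the slider reports tenths, as in the code above. SliderToRolloff() and the local constants are hypothetical stand-ins so the example compiles without the DirectX headers.

```cpp
// Mirrors DS3D_MINROLLOFFFACTOR and DS3D_MAXROLLOFFFACTOR from dsound.h
// so this sketch compiles without the DirectX headers.
const float kMinRolloffFactor = 0.0f;
const float kMaxRolloffFactor = 10.0f;

// Converts a slider position in tenths to a Rolloff Factor, clamped to
// the range DirectSound documents as valid.
float SliderToRolloff(int sliderPos) {
    float rolloff = static_cast<float>(sliderPos) / 10.0f;
    if (rolloff < kMinRolloffFactor) rolloff = kMinRolloffFactor;
    if (rolloff > kMaxRolloffFactor) rolloff = kMaxRolloffFactor;
    return rolloff;
}
```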

Scripted Control of Sound Effects

Mingle uses scripting as much as possible. As we've already seen, all sound effects are played via scripts. There are only three routines in the script file mingle.spt:


- StartParty: This is called at the beginning of Mingle. It is intended for any initialization that might be needed. It assigns names for the four people, and it starts the background ambience.
- StartTalking: This is called whenever anything starts making sound. Before calling it, the application sets a variable, PersonType, to indicate which thing type should make the sound.
- EndParty: This is called when the party is over and it's time to shut down. This isn't strictly necessary, but it's a little cleaner to turn off all the sounds before shutting down.

Here's the script:

```
'Mingle Demo
' 2002 NewBlue Inc.

' Variable set by the application prior to calling StartTalking
dim PersonType    ' Which object is making the sounds

' Variables retrieved by the application to find out the names
' of the people talking
dim Person1Name
dim Person2Name
dim Person3Name
dim Person4Name

sub StartTalking
    if (PersonType = 1) then
        Snob.play IsSecondary
    elseif (PersonType = 2) then
        Munch.play IsSecondary
    elseif (PersonType = 3) then
        Mosquito.play IsSecondary
    elseif (PersonType = 4) then
        HaHa.play IsSecondary
    elseif (PersonType = 5) then
        Shout.play IsSecondary
    end if
end sub

sub StartParty
    Crowd.play IsSecondary
    PersonType = 1
    Person1Name = "Snob"
    Person2Name = "HorsDeOoovers"
    Person3Name = "Mosquito Person"
    Person4Name = "Laughing Fool"
end sub

sub EndParty
    Crowd.Stop
    HaHa.Stop
    Mosquito.Stop
    Munch.Stop
    Shout.Stop
    Snob.Stop
end sub
```

Here's how the script is called at startup. Right after loading the script, call StartParty to initialize the Person name variables and let the script do anything else it needs to. Then, read the names. Since these are strings, the only way we can read them is via variants. Fortunately, some convenience routines take the pain out of working with variants. You must call VariantInit() to initialize the variant. Then, use the variant to retrieve the string from the script. Since the memory for a variant string is allocated dynamically, it must be freed, so call VariantClear() to clean it up appropriately.

```cpp
// Call the initialization routine. This should set the Person
// name variables as well as start any background ambience.
if (SUCCEEDED(m_pScript->GetScript()->CallRoutine(L"StartParty",NULL)))
{
    // We will use a variant to retrieve the name of each Person.
    VARIANT Variant;
    char szName[40];
    VariantInit(&Variant);
    if (SUCCEEDED(m_pScript->GetScript()->GetVariableVariant(
        L"Person1Name",&Variant,NULL)))
    {
        if (Variant.vt == VT_BSTR)
        {
            // Convert the name to a narrow string. wcstombs() doesn't
            // terminate a full buffer, so leave room and terminate it here
            // (an empty string if the conversion fails).
            size_t n = wcstombs(szName, Variant.bstrVal, sizeof(szName) - 1);
            szName[(n == (size_t)-1) ? 0 : n] = '\0';
            // Use it to set the box title.
            SetDlgItemText(IDC_PERSON1_NAME,szName);
        }
        // Clear the variant to release the string memory.
        VariantClear(&Variant);
    }
    // Do the same for the other three names...
}
```

We've already seen how StartTalking is called when we looked at dynamic AudioPaths. EndParty is called when the app window is being closed. If the script needs to do anything on shutdown, it can do it there.

```cpp
if (m_pScript)
{
    m_pScript->GetScript()->CallRoutine(L"EndParty",NULL);
}
```
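Back at startup, the "Do the same for the other three names..." step can be written as a loop. The sketch below assumes the variables are named Person1Name through Person4Name, as in the script. It shows two hypothetical helpers such a loop could use. PersonVariableName() builds each variable name. CopyWideName() converts the BSTR text into a narrow buffer that is always null-terminated, since wcstombs() leaves the buffer unterminated when the text fills it.

```cpp
#include <cstddef>
#include <cstdlib>
#include <string>

// Builds the script variable name for person 1..4, e.g. L"Person3Name".
std::wstring PersonVariableName(int index) {
    return L"Person" + std::to_wstring(index) + L"Name";
}

// Converts wide text (such as a BSTR) into a narrow buffer of `cap` bytes.
// The result is always null-terminated, truncating if necessary;
// on a conversion error the buffer is left empty and false is returned.
bool CopyWideName(char *dst, std::size_t cap, const wchar_t *src) {
    if (cap == 0) {
        return false;
    }
    std::size_t n = std::wcstombs(dst, src, cap - 1);
    if (n == static_cast<std::size_t>(-1)) {
        dst[0] = '\0';
        return false;
    }
    dst[n] = '\0';   // wcstombs() doesn't terminate when the buffer fills
    return true;
}
```

A loop from 1 to 4 could then fetch each name with GetVariableVariant() and copy it into the matching dialog item. The dialog control IDs would still need a per-index mapping.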

Creating Music with the Composition Engine

Although this chapter is all about sound effects, it's important to demonstrate how music and sound effects work together, so I needed to put some music in this but not spend much time on it. Enter DirectMusic's Style and composition technologies.

The Composition Engine can write a piece of music using harmonic (chord progression) guidelines authored in the form of a ChordMap. For the overall shape of the music, the Composition Engine can use a template Segment authored in Producer or actually write a template on the fly using its own algorithm, which is defined by a handful of predefined shapes. The shapes describe different ideas for how the music should progress over time. Examples include rising (increasing in intensity) and falling (decreasing in intensity). A particularly useful shape is Song, which builds a structure with subsections for verse, chorus, etc., that it swaps back and forth. For playback, the chords and phrasing created by the Composition Engine are interpreted by a Style.

As it happens, DX9 ships with almost 200 different Styles and about two dozen ChordMaps. Not only are they a great set of examples of how to write style-based content, but they can also serve as a jumping-off point for writing your own Styles. Or, for really low-budget apps like Mingle, you can always use them off the shelf. They are also handy for dropping into an application to try out different musical genres, and they make great placeholders while the real music is being authored.

It is really easy to use the Composition Engine to throw together a piece of music to play in Mingle. We add a ComposeSegment() method to CAudio. It creates a CSegment by composing the music on the fly, as an alternative to LoadSegment(), which reads an authored Segment from disk.

```cpp
CSegment *CAudio::ComposeSegment(
    WCHAR *pwzStyleName,     // File name of the Style.
    WCHAR *pwzChordMapName,  // File name of the ChordMap.
    WORD wNumMeasures,       // How many measures long?
    WORD wShape,             // Which shape?
    WORD wActivity,          // How busy are the chord changes?
    BOOL fIntro, BOOL fEnd)  // Do we want beginning and/or ending?
{
    CSegment *pSegment = NULL;
    // Create a Composer object to build the Segment.
    if (!m_pComposer)
    {
        CoCreateInstance(CLSID_DirectMusicComposer, NULL, CLSCTX_INPROC,
            IID_IDirectMusicComposer, (void**)&m_pComposer);
    }
    if (m_pComposer)
    {
        // First, load the Style.
        IDirectMusicStyle *pStyle = NULL;
        IDirectMusicChordMap *pChordMap = NULL;
        if (SUCCEEDED(m_pLoader->LoadObjectFromFile(
            CLSID_DirectMusicStyle, IID_IDirectMusicStyle,
            pwzStyleName, (void **) &pStyle)))
        {
            // We have the Style, so load the ChordMap.
            if (SUCCEEDED(m_pLoader->LoadObjectFromFile(
                CLSID_DirectMusicChordMap, IID_IDirectMusicChordMap,
                pwzChordMapName, (void **) &pChordMap)))
            {
                // Hooray, we have what we need. Call the Composition
                // Engine and have it write a Segment.
                IDirectMusicSegment *pComposed = NULL;
                if (SUCCEEDED(m_pComposer->ComposeSegmentFromShape(
                    pStyle, wNumMeasures, wShape, wActivity,
                    fIntro, fEnd, pChordMap, &pComposed)))
                {
                    // The Composer hands back an IDirectMusicSegment,
                    // so ask it for the IDirectMusicSegment8 interface.
                    IDirectMusicSegment8 *pISegment = NULL;
                    if (SUCCEEDED(pComposed->QueryInterface(
                        IID_IDirectMusicSegment8, (void **) &pISegment)))
                    {
                        // Create a CSegment object to manage playback.
                        pSegment = new CSegment;
                        if (pSegment)
                        {
                            // Initialize
                            pSegment->Init(pISegment,m_pPerformance,SEGMENT_FILE);
                            m_SegmentList.AddTail(pSegment);
                        }
                        pISegment->Release();
                    }
                    pComposed->Release();
                }
                pChordMap->Release();
            }
            pStyle->Release();
        }
    }
    return pSegment;
}
```

Using this is simple. When Mingle first opens up, call ComposeSegment() and have it write something long. Set it to repeat forever. Play it and forget about it.

```cpp
CSegment *pSegment = m_pMusic->ComposeSegment(
    L"Layback.sty",      // Layback style.
    L"PDPTDIA.cdm",      // Pop diatonic ChordMap.
    100,                 // 100 measures.
    DMUS_SHAPET_SONG,    // Song shape.
    2,                   // Relatively high chord activity.
    TRUE, TRUE);         // Need an intro and an ending.
if (pSegment)
{
    // Play it over and over again.
    pSegment->GetSegment()->SetRepeats(DMUS_SEG_REPEAT_INFINITE);
    m_pMusic->PlaySegment(pSegment,NULL,NULL);
}
```

You might have noticed that this is the only sound-producing code in Mingle that is not managed by the script. Unfortunately, scripting does not support ComposeSegmentFromShape(), so we have to call it directly from the program. However, the script could manage the selection of Style and ChordMap, so that the music choice could be changed iteratively without recompiling the program.

DirectX Audio has the potential to create truly awesome sound environments. Many of the techniques that work well for music, like scripting, variations, and even groove level, lend themselves to creating audio environments that are equally rich. The beauty is that the vast majority of the work is done on the authoring side, with a minimum of programming.

The key is in understanding the technologies and mapping out a good strategy. Hopefully this chapter points you in a good direction. You are welcome to use the sample code in any way you'd like.
