Evolution of the Agile Methodologies

| The people who wrote the agile manifesto think and work in different ways, all at the Ri end of the scale (see the Shu-Ha-Ri scale of skills development, page 17). When writing the manifesto, they were looking to find what was common in their approaches and still preserve their right to differ. The agile manifesto successfully locates commonality across widely varying, strong-minded people and does so in such a way that other Ri-level people can find their own home and be effective. That has been and continues to be good. What it entails is that there is no agreed-upon Shu form of agile development for beginners to follow. Indeed, as the following online conversation between John Rusk and Ilja Preuß[15] points out, not specifying mandatory Shu-level practices is core to the agile approach. Any specific set of Shu practices would be fragile over time and situations.

John Rusk: As Alistair mentioned, the Manifesto is the non-branded resource. But, personally, because it emphasizes values rather than practices, I did not find it to be an ideal starting point for understanding Agile. (Beginners want details, even if they can't handle them!) The Manifesto made much more sense to me after I'd learnt about several of the branded processes.

Ilja Preuß: I think there is a dilemma here: to me, not following some cookbook is at the heart of Agility.

And there's the dilemma: People just starting out need a Shu-level starter kit. The agile experts don't want to be trapped by simplistic formulae. It's Russ Rufer's quip again: "One Shu doesn't fit all."

Looking at the evolution of the agile methodologies, we find a growing aversion to simple formulae and increasing attention to situationally specific strategies (not accidentally one of the six principles in the Declaration of Interdependence). Because most of the branded agile methodologies are formulaic by nature, we find a growing use of unnamed methodologies, or even non-methodology methodologies (a contradiction in terms, I know, but it reflects people's desire to break out of fixed formulae).

Let's take a look at the methodology evolution since 2001, changes in testing, the modern agile toolbox, and a long-overdue recognition of the specialty formerly known as user-interface design. The evolution of the Crystal family of methodologies gets its own chapter.

XP Second Edition

The first edition of Kent Beck's Extreme Programming Explained: Embrace Change (Beck 2000) changed the industry. His second edition, produced just five years later (Beck 2004), rocked the XP community a second time, but for a different reason: he reversed the strong position of the first edition, making the practices optional, independent, and variable. In the first edition, he wrote:

The book contains a Shu-level description of XP. Kent was saying, "Here is a useful bundle of practices. Do these things and good things will happen." Beginners need a Shu-level starter kit, and the first edition served this purpose.

Over time, it became clear that project teams needed different combinations of XP and things similar to but not exactly like XP. Also, it turned out that many of the practices stand nicely on their own. (Important note: Some practices do not stand on their own but depend on other practices; aggressive refactoring, for example, relies on comprehensive and automated unit tests.) Note the difference in writing in the second edition:

The book contains two big shifts. First, he rejects the idea that XP is something that you can be in compliance with. It doesn't make sense to ask, "Are you in compliance with Kent's attempt to reconcile humanity in his own practice of software development and to share that reconciliation?" He correctly notes in the book that people who are playing in the extreme way will recognize each other. It will be evident to the people in the club who is in the club.

Secondly and more significantly, the second edition is written at the Ri level. It contains a number of important things to consider, with the advice that every situation is different, so the practitioner will have to decide how much of each idea, in what order, to apply to each situation. As predicted by the Shu-Ha-Ri model and the dilemma captured by Ilja Preuß, this causes discomfort in people looking for a Shu-level description.

Beginners can still benefit from the first edition of the book by using it as their starter kit; as they grow in sophistication, they can buy the second book and look for ways to make ad hoc adjustments.

Scrum

Scrum provides a Shu-level description for a very small set of practices and a Ri-level avoidance of what to do in specific situations. Scrum tells people to think for themselves. Scrum can be summarized (but not executed) very simply:[16]

Scrum avoids saying how the team should develop their software but is adamant that the people act as mature adults in owning their work and taking care of problems. In this sense, it is an essential distillation of the agile principles. The importance of the first three elements of Scrum listed previously should be clear from the theory of software development described all through this book. The fourth point deserves some further attention. Scrum calls for a staff role, the Scrum Master, whose purpose in life is to remove obstacles. Scrum is the only methodology I know that trains its practitioners in the idea that success comes from removing obstacles and giving people what they need to get their work done. This idea was illustrated in the section on the Cone of Silence strategy. There, I described how a project went from hopelessly behind schedule to ahead of schedule from the single act of moving the lead developer upstairs and around a few corners from the rest of the team. It was illustrated again in another conversation about another project. A manager came up to me once to tell me how well Scrum was working for his team.

It seems the four-month estimation was based on his expectation of a certain level of interruptions. Without interruptions, he got done much faster (see the project critical success factor, "Focus", on p. 253).

Remember, success is not generated by methodology magic nearly so much as by giving the people what they need to get their work done.

Pragmatic and Anonymous

Andy Hunt and Dave Thomas, coauthors of the agile manifesto and authors of The Pragmatic Programmer (Hunt 2000), have been arguing that it is not process but professional and "pragmatic" thinking that is at the heart of success. They are defending situationally specific, anonymous methodologies, properly referred to as ad hoc.[17]

In the five years since the writing of the manifesto, situationally specific methodologies have become more the norm than the exception. A growing number of teams look at the rules of XP, Scrum, Crystal, FDD, and DSDM and decide that each one in some fashion doesn't fit their situation. They blend them in their own way. The two questions that those teams get asked are: Is their blend agile, and is their blend effective? A bit of thought reveals that the first question is not really meaningful, that it is only the second question that matters. In many cases, the answer is, "more effective than what they were doing before." The alert reader is likely to ask at this point, "What is the difference between an anonymous, ad hoc methodology and Crystal, since Crystal says to tune the methodology to the project and the team?" This question leads to the question: What is the point of following a named methodology at all? A named methodology is important because it is the methodology author's publicly proclaimed, considered effort to identify a set of practices or conventions that work well together and that, when done together, increase the likelihood of success. Thus, in XP first edition, Kent Beck did not say, "Pair programming is a good thing; do pair programming and your project will have a good outcome." He said, "Pair programming is part of a useful package; this package, when taken together, will produce a good outcome." Part of the distress people experienced with the second edition was that he took away his stamp of approval from any particular packaging of the practices, leaving the construction of a particular mix up to the team (in this sense, XP second edition becomes an ad hoc methodology). What got lost was any assurance about the effects of adding and dropping individual practices. Crystal is my considered effort to construct a package of conventions that raises the odds of success and at the same time the odds of being practiced over time. 
Even though Crystal calls for tailoring, I put bounds on the tailoring, identifying the places where I feel the likelihood of success drops off suddenly. When I look at people working with conventions similar to Crystal's, the first thing I usually notice is that they don't reflect on their work habits very much. Without reflection, they lose the opportunity to improve. (They usually don't deliver the system very often, and they usually don't have very good tests, but those can both be addressed if they reflect seriously.) In other words, there is a difference between a generic ad hoc methodology and a tailoring of Crystal. I feel there is enough significance to naming the key issues to address, and the drop-offs, to make the Crystal package worth retaining as a named package. I include details of what I have learned about the Crystal methodologies in Chapter 6.1. If you want to know if your tailoring is likely to be effective, read the list of practices in "Sweet Spots and the Drop-Off" (p. 290) and in the table shown in "How Agile Are You?" (p. 304) and compare where your practices are with respect to the sweet spots and the drop-offs. The previous line of thinking suggests that other authors should come up with their own recommendations for specific sets of conventions or practices that improve some aspect of a team's work. One such set that I find interesting but that has not yet been published was described by Lowell Lindstrom of Oobeya Group. Lowell runs an end-of-iteration reflection workshop that is more thorough than mine, which I intend to start using. His contains three specific parts, covering product, progress, and process. He creates a flipchart for each and asks the team to reflect on each in turn:

Lindstrom's reflection package is a good addition to any methodology. Combined with Scrum, it makes a good starter kit for teams looking to get out of the Shu stage and into the Ha stage.

Predictable, Plan-Driven, and Other Centerings

The term "plan-driven" was coined by Barry Boehm to contrast with the term "agile." To understand the term and its implications, we need to look at how these sorts of terms center a team's attention. "Agility" is only a declaration of priorities for the development team. It may not be what the organization needs. The organization may prefer predictability, accountability, defect-reduction, or an atmosphere of fun.[18] Each of those priorities drives a different behavior in the development team, and none of them is a wrong priority in itself.

Further, no methodology is in itself agile (or any of the other priorities). The implementation is what is or is not agile. Thus, a group may declare that they wish to be agile, or wish to be predictable, or defect-free, or laid back. Only time will tell if they actually are. Thus, any methodology is at best "would-be agile," "would-be predictable," and so on.[19]

There is, therefore, no opposite to agile development, any more than there is an opposite to jumping animals (imagine being a biologist deciding to specialize in "non-jumping animals"). Saying "non-agile development" only means having a top priority other than agility. But what would the priority be for all the project styles that the agile manifesto was written to counteract? No one has been able to get all those people in a room to decide where their center of attention has been. Actually, agile is almost the wrong word. It describes the mental centering needed for these projects, but it doesn't have a near-opposite comparison term. Adaptive does. I don't wish to suggest that agile should be renamed, and I am careful to give a different meaning to adaptive methodologies compared to agile methodologies: For me, and for some of the people at the writing of the agile manifesto, agile meant being responsive to shifting system requirements and technology, while adaptive meant being responsive to shifting cultural rules. We were discussing primarily the former at the manifesto gathering. Thus, XP first edition was agile and not very adaptive, RUP was adaptive and not very agile, and Crystal and XP second edition are both agile and adaptive. In the last five years, being adaptive has been seen as so valuable that most people now consider being adaptive as part of being agile. Using the word adaptive for a moment allows us to look at its near-opposite comparison term: predictable. In my view, the purpose of that other way of working was to provide predictability in software development.[20]

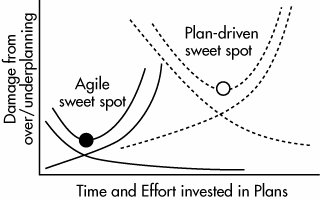

We can sensibly compare processes aimed at increasing predictability with those aimed at increasing adaptiveness to changing requirements, and we can see what we might want from each. However, although some of us might have preferred the word 'predictable,' Barry Boehm chose 'plan-driven' for those processes.

Earlier ("Agile only works with the best developers") I described some of the work that Barry Boehm and Rich Turner have been doing with partially agile and partially plan-driven projects (Boehm 2002, Boehm 2003). Figure 5.1-8 shows another.

Figure 5.1-8. Different projects call for different amounts of planning (after Boehm 2002, 2003).

Figure 5.1-8 shows that for each project, there is a certain type and amount of damage that accrues from not doing enough planning (having a space station explode is a good example). There is also a certain type and amount of damage that accrues from doing too much planning (losing market share during the browser wars of the 1990s is a good example).

Figure 5.1-8 shows that the agile approach has a sweet spot in situations in which overplanning causes high damage and underplanning doesn't. The plan-driven approach has a sweet spot when the reverse is true. The figure hints that there are situations in the middle that call for a blending of priorities. This matches the recommendations in this book and the Declaration of Interdependence.

In the time since the writing of the agile manifesto, people have been working to understand the blending of the two. Watch over time as more plan-driven organizations find ways to blend the agile ideas into their process to get some of its benefits.

Theory of Constraints

Agile developers have been examining Goldratt's "theory of constraints." Three threads from Goldratt's writing deserve mention here.

The Importance of the Bottleneck Station

In the book The Goal (Goldratt 1992), Goldratt describes a manufacturing situation in which the performance of a factory is limited by one station.
He shows that improving efficiency at the other stations is a waste of money while that one is still the bottleneck. He ends with the point that as the team improves that one station, sooner or later it stops being the bottleneck, and at that moment, improving it any further is a waste of money.

I have found it a fruitful line of inquiry to consider that one can "spend" efficiency at the other, nonbottleneck stations to improve overall system output. This surprising corollary is described in more detail on pages 190-192 of this book and in (Cockburn 2005e).

Few managers bother to understand where their bottleneck really is in the first place, so they are taking advantage of neither the main result nor the corollary.

The Theory of Constraints (TOC)

The TOC (Goldratt 1992) is very general. It says:

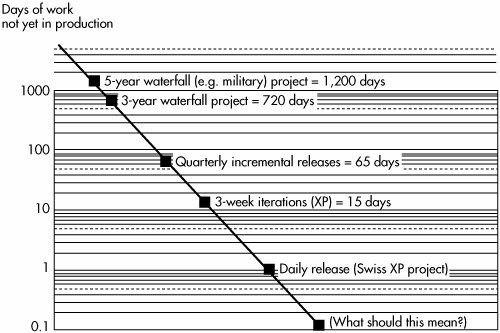

It is not so much the simple statement of the TOC that is interesting, but all the special solutions being catalogued for different situations. A web search for "theory of constraints" and its conferences will turn up more useful references than I can provide here. David Anderson presented a case study of applying the theory of constraints to software development in "From Worst to Best in 9 Months" (Anderson 2005).

Critical Chain Project Management

Goldratt's "critical chain" (Goldratt 1997) is more controversial. "Controversial" in this case means that I think it is hazardous, even though it has backing in the TOC community. Here is why I worry about it.

The critical chain idea says to start by making a task-dependency network that includes names of people who will become constraints themselves. For example, in most software projects, the team lead usually gets assigned the most difficult jobs but also has to sit in the most meetings and does the most training. A normal PERT chart does not show this added load, so it is easy to create a schedule in which this person needs to be in multiple places at once. Putting the team lead's name on the tasks and putting that person's tasks into a linear sequence produces a truer schedule (and an unacceptably long one, usually!). One can quickly see that the schedule improves as the load gets removed from the team lead, even when the tasks are assigned to people less qualified. So far, this is a simple application of TOC.

Second (and here is the controversial part), ask the people on the team to estimate the length of each task, and then cut each estimate into two halves. Leave one half in place as the new estimate of the task duration, cut the other half in half, and put that quarter into a single slippage buffer at the end of the project. Discard the remaining quarter as "time saved" from the people's original estimate.
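
The arithmetic of that second step can be sketched in a few lines. This is only an illustration of the halving rule as described above, not Goldratt's own formulation: the `Task` type, the task names, and the hour figures are all hypothetical, and the tasks are assumed to sit on one linear chain.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str              # hypothetical task label
    estimate_hours: float  # the team's original estimate


def critical_chain_plan(tasks):
    """Apply the halving rule to a linear chain of tasks (illustrative only)."""
    original = sum(t.estimate_hours for t in tasks)
    # One half of each estimate stays in place as the new task duration.
    durations = {t.name: t.estimate_hours / 2 for t in tasks}
    # Half of the removed half (a quarter of the original) goes into a
    # single slippage buffer at the end of the project.
    buffer_hours = original / 4
    # The remaining quarter is discarded as "time saved".
    discarded_hours = original / 4
    planned_hours = sum(durations.values()) + buffer_hours
    return {
        "original_hours": original,
        "durations": durations,
        "buffer_hours": buffer_hours,
        "discarded_hours": discarded_hours,
        "planned_hours": planned_hours,
    }


# Hypothetical estimates, in hours.
plan = critical_chain_plan([
    Task("design", 40),
    Task("code", 24),
    Task("test", 16),
])
print(plan["durations"])      # halved task durations
print(plan["buffer_hours"])   # single end-of-project buffer
print(plan["planned_hours"])  # halved tasks plus buffer
```

With these made-up numbers the original 80 hours become 40 hours of task time plus a 20-hour buffer, so the planned schedule is three quarters of what the team first estimated.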
The thinking is that if people are given the shortened length of time to do their work, they will sometimes meet that target and sometimes not, in a statistical fashion. The buffer at the end serves to catch the times that they do not. Because not every task will need its full duration, the end buffer can be half of the original.

The hope is that people tend to pad their estimates to show when they can be "safely" done. Having padded the estimate, they have no incentive to get the work done early, so they will take up more time than they strictly need to get the task done. By cutting the estimate in half, they have an incentive to go as fast as they can. By keeping a quarter of the time estimate in the slippage buffer, the project has protection against a normal number of things going wrong.

Where I find this thinking hazardous is that it touches upon a common human weakness: it is very easy to abuse from the management side. Indeed, in the only two projects that I found to interview about their use of this technique, they both fell into the trap.

The trap is that managers are used to treating the schedule as a promise not to be exceeded. But in critical chain planning, the team members are not supposed to be penalized for exceeding the cut-in-half schedule; after all, it got cut in half from what they had estimated, on the basis that about half the tasks will exceed the schedule. Critical chain relies on having enlightened managers who understand this and don't penalize the workers for exceeding the halved time estimates.

My suspicion is that if the people know that their estimates will get cut in half, and they will get penalized for taking longer than that half, then they will double the estimate before turning it in, removing any benefit that the buffer planning method might have offered. If they don't double their estimates, there is a good chance that they will, in fact, get penalized for taking longer than the cut-in-half estimate.
That is exactly what I found in the first critical chain interview. The project manager on this fixed-time, fixed-price contract had done the PERT chart, cut the estimates in half, created the slippage buffers as required, and managed the work on the critical path very closely. He was very proud of their result: they never had to dip into their slippage buffer at all!

I hope you can see the problem here. He had gotten his developers to cut their estimates in half and then had ridden them through weekends and overtime so that they would meet all of their cut-in-half estimates without touching the slippage buffer. He was proud of not dipping into the buffers, even though critical chain theory says that he should have dipped into those buffers about half the time. Missing the point of the method, he and his developers had all suffered.

The company executives were delighted at delivering a fixed-price contract in 3/4 of the time estimated. After all, they turned an unexpected extra 25% profit on that contract. The sad part was that they gave no bonuses for the overtime put in by the project manager and the team. This is what I mean by critical chain touching upon common human weaknesses. I suspect the developers will double their estimates for future projects.

If you are going to use the critical chain technique, be sure to track work time in hours, not days or weeks. Tracking in days and weeks hides overtime work. Tracking in hours keeps the time accounting correct. If the manager in the previous story had scheduled and tracked in hours, he would have had to use those buffers as critical chain theory says he should.

This doesn't mean that critical chain doesn't work, only that it is easy to misuse. I suspect it only works properly in a well-behaved, high-trust environment and will cease to work as soon as it starts to be misused and people game the system.
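
To see why the unit of tracking matters, here is a small sketch comparing the two views of the same task. Everything in it is assumed for illustration: the eight-hour nominal day, the function names, and the sample figures are not taken from the projects described above.

```python
HOURS_PER_DAY = 8  # assumed nominal working day; not from the text


def buffer_draw_in_days(planned_days, elapsed_days):
    """Buffer consumed when the task is tracked in whole working days."""
    return max(0, elapsed_days - planned_days)


def buffer_draw_in_hours(planned_days, hours_worked):
    """Buffer consumed when the same task is tracked in hours worked."""
    planned_hours = planned_days * HOURS_PER_DAY
    return max(0, hours_worked - planned_hours)


# Hypothetical task: a halved estimate of 5 days, finished in 5 working
# days, but with 12 hours put in on each of those days.
days_view = buffer_draw_in_days(planned_days=5, elapsed_days=5)
hours_view = buffer_draw_in_hours(planned_days=5, hours_worked=5 * 12)

print(days_view)   # buffer draw seen when tracking in days
print(hours_view)  # buffer draw seen when tracking in hours
```

Tracked in days, this task appears to finish on time and leaves the buffer untouched. Tracked in hours, the same task draws 20 hours from the buffer, which makes the overtime visible.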
Lean Development

Agile developers have been studying the lessons from lean manufacturing to understand strategies in sequencing and stacking decisions. This was discussed in "Reconstructing Software Engineering," page 56.

As a reminder, inventory in manufacturing matches unvalidated decisions in product design. When the customer requests a feature, we don't actually know that it is a useful feature. When the architect or senior designer creates a design decision, we don't actually know that it is a correct or useful design decision. When the programmer writes some code, we don't know that it is correct or even useful code.

The decisions stack up between the user and the business analyst, the business analyst and designer-programmer, the designer-programmer and the tester, and the tester and the deployment person (see Figure 1.1-2). In each case, the more decisions that are stacked up, the greater the uncertainty in delivery of the system.

Kent Beck drew a graph (Figure 5.1-9) that shows the way that decisions stack up as the delivery interval gets longer. He drew it on a log scale to capture the time scales involved. To understand it, imagine a project in which a request shows up in the morning and gets designed, programmed, and tested within 24 hours. Let this be the unit measure of decision delay.

Figure 5.1-9. Decision "inventory" shown on software projects of different types (thanks to Kent Beck).

The problem, as his drawing captures, is that the sponsors of a five-year waterfall project are paying for thousands of times more decisions and not getting validation or recovering their costs on those decisions for five years. The appropriateness (which is to say, the quality) of those decisions decays over time as business and technology change. This means that the value of the investment decays. The sponsors are holding the inventory costs, losing return on investment (ROI).
As the delay shrinks from request to delivery, ROI increases because inventory costs drop, the decisions are validated (or invalidated!) sooner, and the organization can start getting business value from the decisions sooner.[22] Kent's graph shows how quickly the queue size increases as the delivery cycle lengthens.
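
The growth of decision inventory with delivery interval can be made concrete with a rough sketch. It rests on an assumption that is mine, not Kent Beck's: decisions arrive at a constant rate, so the number of unvalidated decisions in flight is roughly the arrival rate times the delay (the relationship usually known as Little's law). The interval list and the rate of ten decisions per day are hypothetical.

```python
# Delivery intervals in days; the one-day project is the unit measure.
INTERVALS = {
    "one day": 1,
    "one week": 7,
    "one month": 30,
    "one year": 365,
    "five years": 5 * 365,
}


def decision_inventory(decisions_per_day, delay_days):
    """Unvalidated decisions in flight, assuming a constant arrival rate."""
    return decisions_per_day * delay_days


def relative_inventory(delay_days, unit_days=1):
    """Inventory relative to the one-day unit project."""
    return delay_days / unit_days


for label, days in INTERVALS.items():
    # 10 decisions per day is a made-up rate used only for illustration.
    print(label, decision_inventory(10, days), relative_inventory(days))
```

Under this assumption, a five-year delivery interval carries 1,825 times the unvalidated decisions of the one-day project, which is why shrinking the interval shrinks the inventory in direct proportion.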

One of the lessons from lean manufacturing is to shrink the queue size between work stations. The target is to have single-piece or continuous flow. It is no accident that the first principle in the Declaration of Interdependence is

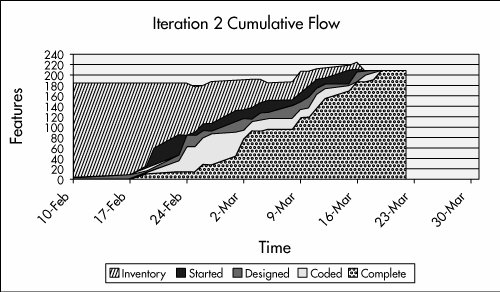

Figure 5.1-9 also shows the relationship between the queue size and delay. In software development, we can't easily see the size of the decision queue. However, the size of the queue is proportional to the delay, and we can measure the delay.

Figure 5.1-10 shows how queue size and its analog, delay, can be used in managing a software project. It plots the work completed by each specialist. In this sort of graph, a cumulative flow graph, the queue size corresponds to vertical size in any shaded area, and the delay to the horizontal size in the same shaded area. If you don't know how to measure the number of items in your queue, measure the length of time that decisions stay in your queues.

Figure 5.1-10. Cumulative flow graph for a software project used to track pipeline delay and queue size (Anderson 2004). The original caption was "CFD showing lead time fall as a result of reduced WIP" [WIP = work in progress].

David Anderson describes these graphs in his book and experience reports (Anderson 2003, Anderson 2004). He and others have built software development tracking tools that show the number of features in requirements analysis, in design, and in test so that the team can see what their queue size and delays are. David writes that as of 2006, "managing with cumulative flow diagrams is the default management technique in Microsoft MSF methodology shipped with Visual Studio Team System and the cumulative flow diagram is a standard out of the box report with Visual Studio Team System."

Queue sizes are what most people associate with lean manufacturing, but there are two more key lessons to extract. Actually, there are certainly more lessons to learn. Read The Machine that Changed the World (Womack 1991) and Toyota Production System (Ohno 1988) to start your own investigation. Here are the two I have selected:

Cross-training. Staff at lean manufacturing lines are cross-trained at adjacent stations.
That way, when inventory grows at a handoff point, both upstream and downstream workers can pitch in to handle that local bump in queue size. Agile development teams also try to cross-train their developers and testers, or at least sit them together.

One of the stranger obstacles to using agile software development techniques is the personnel department. In more than one organization that I have visited, personnel regulations prevented a business analyst from doing any coding or testing. This meant that the business analysts were not allowed to prototype their user interfaces using Macromedia's Dreamweaver product, because Dreamweaver generates code from the prototyped UI, nor were they allowed to specify their business rules using FIT (Ward Cunningham's Framework for Integrated Test), because that counted as writing test cases.

It is clear to the employees in these organizations that the rules slow down the company. However, even with the best of will, they don't have the political power to change the rules (thus illustrating Jim Highsmith's corollary to the agile catch-phrase "People trump process." Jim follows that with "Politics trump people.").

"We're all us, including customers and suppliers." The agile manifesto points to "customer collaboration." Lean organizations extend the "us" group to include the supplier chain and the end purchaser.

One (unfortunately, only one) agile project team told me that when they hired an external contract supplier to write subsystem software for them, they wrote the acceptance tests as part of the requirements package (incrementally, of course). It saved the main project team energy. They had extremely low defect rates, with an average time to repair a field defect of less than one day. They suspected that the subcontractor's testing procedures wouldn't be up to their standards.
By taking on themselves the burden of creating the tests they needed, they kept the subcontractor from wasting their time with buggy code, whose defects they would first have had to detect and then argue over with the subcontractor. This alone made it worth their time to write the tests themselves. Of course, it also simplified the life of the subcontractors, because they didn't have to write the acceptance tests. Their story is a good start, but it only hints at what can happen when the entire supply chain is "us." |
