A Simple Retained-Mode Render Engine

Using OpenGL directly in small or specialized applications (such as the menus in Chapter 14, “Overlays and Menus Using JOGL”) is relatively easy. Often the geometry data and OpenGL render calls are all contained in the same methods, and possibly only a few display lists are created and used. But programming OpenGL in this direct-access, inlined fashion becomes messy when you try to scale up the application in size or functionality. Most full-blown 3D games are much bigger than these easy-to-manage applications, so a better way of organizing and manipulating the scene must be developed.

A major improvement is to create an OpenGL application that uses a technique known as retained-mode rendering.

OpenGL is considered an immediate mode API, meaning that the developer uses commands to tell the OpenGL renderer how to proceed, and then OpenGL does it immediately. (This is somewhat of a misnomer because, in fact, the commands may not execute immediately. OpenGL may queue up the commands. However, the effect is the same as immediate execution in terms of graphical output.) This is a functional approach to graphics, and just like most computing systems, functional control is what is really at the core.

What we want to do is use a more object-oriented approach in which we create 3D objects that exist independently of the specific OpenGL code. That way we can also manipulate them independently. If we can make an OpenGL renderer that understands these objects, then functionality and scalability are much easier to grow. These independent 3D objects are called retained because we retain the data that describes them separately from the render code. Using this data as the basis for a render engine is known as retained-mode rendering. Operating in retained mode is also a stepping-stone to full-blown geometry hierarchies and scene graphs, which we will examine later in the chapter.

Geometry Containers

The first significant object type we need to build for a retained-mode renderer is a geometry container. In a simple retained-mode renderer, geometry containers and the OpenGL code that can render them are all that we need.

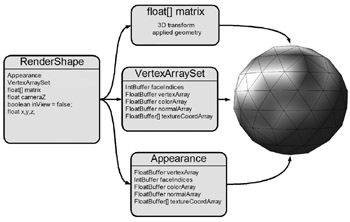

OpenGL supports many different formats and types of geometric data, and this is one of the things that makes OpenGL so versatile. Some of these types don’t lend themselves well to a geometry container, and some legacy types are less useful than the latest ones. To show a simple working example of retained mode, we will stick to only one category of geometric types, the vertex array, and the two ways OpenGL can render it, which we will call immediate and cached (similar to how we use it in the JOGL chapter sample). The difference here is that we will formalize the related geometric data into one object that we will call a VertexArraySet. We will also put all related render-state parameters, such as material and associated textures, into another object called an Appearance. A container for both will be called a RenderShape. These three elements form the core of our retained-mode data (see Figure 15.1).

Figure 15.1: Retained mode structures. RenderShape contains references to a float[] matrix, a VertexArraySet object, and an Appearance object.

It is important to understand why all this related data gets divided up this way. All the data could simply be in one class because it all describes one 3D object and will all be combined to render a single 3D object. So why split it up like this?

The major reason is reuse. By reuse, we mean sharing the VertexArraySets and Appearances across many different RenderShapes in a scene. In practice, even in the simplest scenes, Appearance data alone can (and should) often be shared across many of the RenderShapes in that scene. The big gains from sharing data in a retained-mode renderer are both memory savings and improved rendering performance. Render performance improves when there is less new data to move around and fewer state changes in the render pipeline. By reusing as much data as possible, and by setting up the rendering operations to take advantage of that reuse, we can increase the achievable performance for a given scene. Designing the renderer in this way is a careful balance of object-oriented and performance-oriented design.
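The following is a minimal sketch of the three containers. It assumes plain fields and a column-major `float[16]` matrix. The field names `positions` and `diffuseRgba` and the helper `SharedDataDemo.translation()` are hypothetical. Only `hasAlpha` and `myMatrix` echo names used later in this chapter. The sketch shows reuse: several RenderShapes hold references to one VertexArraySet and one Appearance instead of copies. Each shape keeps only its own matrix.

```java
import java.util.ArrayList;
import java.util.List;

/** Geometry only: a flat array of x, y, z vertex positions. */
class VertexArraySet {
    final float[] positions;
    VertexArraySet(float[] positions) { this.positions = positions; }
}

/** Render state only: material color and whether blending is needed. */
class Appearance {
    final float[] diffuseRgba;
    final boolean hasAlpha;
    Appearance(float[] diffuseRgba, boolean hasAlpha) {
        this.diffuseRgba = diffuseRgba;
        this.hasAlpha = hasAlpha;
    }
}

/** One drawable object: its own transform plus references to shared data. */
class RenderShape {
    float[] myMatrix;              // column-major 4x4, one per shape
    final VertexArraySet geometry; // may be shared by many shapes
    final Appearance appearance;   // may be shared by many shapes
    RenderShape(float[] myMatrix, VertexArraySet geometry, Appearance appearance) {
        this.myMatrix = myMatrix;
        this.geometry = geometry;
        this.appearance = appearance;
    }
}

public class SharedDataDemo {
    /** Column-major translation matrix (translation stored in elements 12..14). */
    static float[] translation(float x, float y, float z) {
        return new float[] {1, 0, 0, 0,  0, 1, 0, 0,  0, 0, 1, 0,  x, y, z, 1};
    }

    public static void main(String[] args) {
        // One geometry and one material, each created exactly once
        VertexArraySet crate = new VertexArraySet(new float[] {0, 0, 0,  1, 0, 0,  0, 1, 0});
        Appearance wood = new Appearance(new float[] {0.6f, 0.4f, 0.2f, 1f}, false);

        // Three crates at different positions reuse the same two objects
        List<RenderShape> shapes = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            shapes.add(new RenderShape(translation(2f * i, 0f, 0f), crate, wood));
        }
        System.out.println(shapes.get(0).appearance == shapes.get(2).appearance); // true
    }
}
```

Because the renderer sees the same Appearance object repeatedly, it can later detect identical state and skip redundant state changes.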

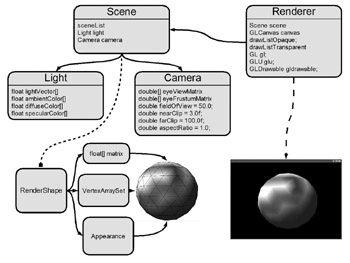

The renderer will also need a scene container that holds all the 3D objects to render in a particular frame, or must be passed one. The renderer will also need a few other pieces of global information, such as the view transformation (the camera position and direction) and the lighting configuration. In the procedural reality of OpenGL, lighting is actually a per-object local effect. However, it can be treated as a global effect in the retained-mode system if it is meant to be the same for all objects or groups of objects. This is a very limiting lighting model, but it will serve as a solid beginning. The right way to handle lighting differs from game to game and can be one of the more complex areas of 3D engine design. The model here is intended to be the simplest possible while still allowing basic control for dynamically lit objects.

To add this new global rendering information to our system we will create several new classes with the relationships shown in Figure 15.2. A new Scene class will have a reference to a Camera class containing basic view parameters, a Light class containing light position or direction, and a container for RenderShapes. The renderer will have a reference to a Scene object and other OpenGL required classes to display the render on the screen.
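The relationships in Figure 15.2 can be sketched as a few small classes. The `getCamera()` accessor and `eyeViewMatrix` field match names used later in this chapter. Everything else here is a hypothetical placeholder, including the field choices and the stub RenderShape. The OpenGL context the real renderer needs is omitted. The light position follows OpenGL’s convention, where w = 1 means a positional light and w = 0 a directional one.

```java
import java.util.ArrayList;
import java.util.List;

/** Basic view parameters; eyeViewMatrix is the column-major view transform. */
class Camera {
    float[] eyeViewMatrix = {1, 0, 0, 0,  0, 1, 0, 0,  0, 0, 1, 0,  0, 0, 0, 1};
    float fovYDegrees = 45f;
    float near = 0.1f, far = 1000f;
}

/** Light position as OpenGL expects it: w = 1 positional, w = 0 directional. */
class Light {
    float[] position = {0f, 10f, 0f, 1f};
}

/** Placeholder for the RenderShape described earlier. */
class RenderShape {
    final String name;
    RenderShape(String name) { this.name = name; }
}

/** Global per-frame data: one camera, one light, and the shapes to draw. */
class Scene {
    private final Camera camera = new Camera();
    private final Light light = new Light();
    private final List<RenderShape> sceneList = new ArrayList<>();

    Camera getCamera() { return camera; }
    Light getLight() { return light; }
    List<RenderShape> getSceneList() { return sceneList; }
}

/** The renderer holds a Scene reference (its GL resources are omitted here). */
class Renderer {
    private Scene scene;
    void setScene(Scene scene) { this.scene = scene; }
    Scene getScene() { return scene; }
}

public class SceneStructureDemo {
    public static void main(String[] args) {
        Scene scene = new Scene();
        scene.getSceneList().add(new RenderShape("hover tank"));
        Renderer renderer = new Renderer();
        renderer.setScene(scene);
        System.out.println(renderer.getScene().getSceneList().size()); // 1
    }
}
```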

Figure 15.2: Simple render engine structure.

Now that we have a rough overview, let’s go into more detail on some of the issues of rendering the RenderShapes. With this design and current OpenGL 1.4 capabilities, the vertex arrays in a VertexArraySet can be set up to use vertex buffer objects (VBOs) or display lists for the fastest performance. But if VBOs or display lists were created automatically by the renderer for every VertexArraySet, we would have a problem with dynamic geometry data.

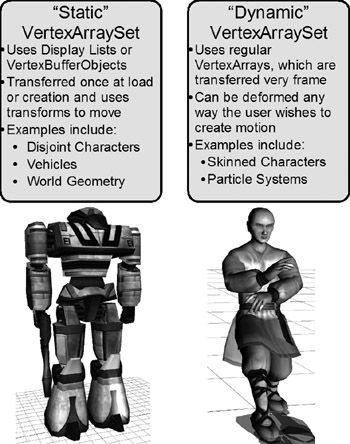

Static and Dynamic Geometry

Some geometry in a scene is static. That is, the geometry never changes after it is first created. Usually these are elements such as buildings, terrain, trees, and everything we consider world geometry. 3D objects that move around, such as cars or characters, are usually considered dynamic. However, from the perspective of OpenGL vertex arrays, dynamic geometric data is data that actually changes the values in the vertex arrays, not just the transformation applied to them. We can easily move objects around by applying transforms in the render pipeline without ever changing the vertex arrays’ values. That transformation is applied only at render time and never affects the original vertex array data. Therefore, objects that are static in this sense can include moving vehicles and some types of characters. All of them can be put into vertex buffer objects and referenced only by their VBO IDs for rendering. We call these objects uniformly transformed, and they can use VBOs for their internal representation, because this type of transformation is performed by OpenGL without affecting the vertex array data.

If all vertex arrays were created as VBOs, OpenGL would not correctly render certain 3D objects such as skin, animated characters, particle systems, and other deformable surfaces that are changed by altering the vertex array data. These kinds of geometries must be transferred to OpenGL every time they change, which is usually once every frame. These objects are called nonuniformly deformable because whatever transformations or custom effects are made to the vertex array data are not the same for each vertex, and they need to use regular vertex arrays for their internal representation (see Figure 15.3).

Figure 15.3: Example static and dynamic VertexArraySets.

Static geometry-based objects and characters are made up of several separate vertex arrays grouped together. To create the look of bending joints, separate objects overlap slightly at the joints where local transformations control the final global position and orientation, creating the look of a continuous geometry. These separate transformations don’t actually deform the individual geometries; they only uniformly transform them, similar to how a real toy action figure is constructed.

Dynamic vertex arrays must be used (along with a more complex transformation system) to create the effect of a continuous deformable mesh, more like real skin, cloth, water, or particle systems.

OpenGL doesn’t formally make the distinction between static and dynamic. It is all about which data representation is used and how a given rendering system uses it to render.
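The following sketch shows the distinction, with hypothetical names (`TaggedVertexArray`, `deform`, `needsResendEveryFrame`) and no actual OpenGL calls. It assumes a simple ripple as the per-frame effect. A static array is written once into a direct `FloatBuffer`, the kind of buffer OpenGL reads vertex data from. After that it would be uploaded once and then moved only by matrices. A dynamic array has its values rewritten every frame, and each vertex moves by a different amount. That is what makes the deformation nonuniform.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.FloatBuffer;

/** A vertex array tagged as static or dynamic (hypothetical helper class). */
class TaggedVertexArray {
    final boolean dynamic;       // true = vertex values change every frame
    final float[] restPositions; // x, y, z triples as modeled
    final FloatBuffer positions; // direct, native-order buffer for OpenGL

    TaggedVertexArray(float[] restPositions, boolean dynamic) {
        this.dynamic = dynamic;
        this.restPositions = restPositions;
        this.positions = ByteBuffer.allocateDirect(restPositions.length * 4)
                .order(ByteOrder.nativeOrder())
                .asFloatBuffer();
        positions.put(restPositions).flip();
    }

    /** Static data can go into a VBO once; dynamic data must be re-sent each frame. */
    boolean needsResendEveryFrame() { return dynamic; }

    /** Nonuniform per-frame deformation: each vertex's y offset depends on its x. */
    void deform(float time) {
        if (!dynamic) return; // static arrays are never rewritten; move them with a matrix
        for (int i = 0; i < restPositions.length; i += 3) {
            float x = restPositions[i];
            positions.put(i, x);
            positions.put(i + 1, restPositions[i + 1] + (float) Math.sin(x + time));
            positions.put(i + 2, restPositions[i + 2]);
        }
    }
}

public class StaticDynamicDemo {
    public static void main(String[] args) {
        float[] quad = {0, 0, 0,  1, 0, 0,  1, 0, 1,  0, 0, 1};
        TaggedVertexArray building = new TaggedVertexArray(quad, false);
        TaggedVertexArray water = new TaggedVertexArray(quad, true);
        building.deform(0.5f);
        water.deform(0.5f);
        // The building's second vertex is untouched; the water's has risen
        System.out.println(building.positions.get(4) + " " + water.positions.get(4));
    }
}
```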

Rendering

With all the vertex data and render state data neatly contained in a RenderShape and global camera and light in our Scene, we can begin to implement the Renderer.

Render Pipeline

The renderer has to perform a series of operations in each frame, based on all the retained-mode data. This series is called the render pipeline. There are almost as many variations of render pipelines as there are game engines, but a few key operations are fairly constant. For our engine, our steps will look as follows:

1. Clear the frame buffer and Z-buffer.
2. Set the Modelview and Projection transforms from the Camera object’s data.
3. Set the Light states from the Light object’s data.
4. For each RenderShape in the Scene list:
   a. Apply the RenderShape’s matrix. With OpenGL, we use glPushMatrix() followed by glMultMatrixf(shape.myMatrix) to achieve this.
   b. Set the appropriate states for the current RenderShape’s Appearance.
   c. Set the current vertex array ByteBuffer references, or bind the VBO IDs, for the RenderShape’s VertexArraySets.
   d. Execute the appropriate OpenGL draw function.
   e. Reset the matrix state. Matching step 4a, in OpenGL we use glPopMatrix().
5. One frame is complete! Return from display(), where JOGL will perform a buffer swap to display the final render on screen. For continuous rendering, as in a game, repeat this procedure from step 1.

This is a brief overview of the process. Note that it describes only the 3D render engine stage of a game engine, not a complete game engine. User input, sounds, and object updates all need to happen before this render stage is executed.
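The following Java sketch follows the same steps. To keep it runnable without a GL context, it calls a hypothetical `PipelineGL` interface rather than JOGL. The comments name the OpenGL calls each method stands for. A recording implementation logs the call order. Note that the light is set after the view transform: OpenGL transforms a light position by the modelview matrix current at the time of the call, so this order places the light in world space.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the OpenGL calls the pipeline makes (not JOGL's API). */
interface PipelineGL {
    void clearBuffers();                      // glClear(color | depth)
    void setCamera(float[] projection, float[] view);
    void setLight(float[] position);          // glLightfv(..., GL_POSITION, ...)
    void pushMatrix();                        // glPushMatrix()
    void multMatrix(float[] m);               // glMultMatrixf(m)
    void applyAppearance(String appearance);  // material, texture, etc.
    void bindVertexArrays(String geometry);   // client arrays or VBO binds
    void draw();                              // e.g. glDrawArrays / glDrawElements
    void popMatrix();                         // glPopMatrix()
}

/** Minimal shape record for this sketch. */
class DrawableShape {
    final float[] myMatrix;
    final String appearance, geometry;
    DrawableShape(float[] myMatrix, String appearance, String geometry) {
        this.myMatrix = myMatrix;
        this.appearance = appearance;
        this.geometry = geometry;
    }
}

/** Logs each call by name so the ordering can be checked without OpenGL. */
class RecordingGL implements PipelineGL {
    final List<String> log = new ArrayList<>();
    public void clearBuffers() { log.add("clear"); }
    public void setCamera(float[] p, float[] v) { log.add("camera"); }
    public void setLight(float[] pos) { log.add("light"); }
    public void pushMatrix() { log.add("push"); }
    public void multMatrix(float[] m) { log.add("mult"); }
    public void applyAppearance(String a) { log.add("appearance:" + a); }
    public void bindVertexArrays(String g) { log.add("bind:" + g); }
    public void draw() { log.add("draw"); }
    public void popMatrix() { log.add("pop"); }
}

public class RenderPipelineSketch {
    static void renderFrame(PipelineGL gl, float[] projection, float[] view,
                            float[] lightPos, List<DrawableShape> shapes) {
        gl.clearBuffers();                    // 1. clear frame buffer and Z-buffer
        gl.setCamera(projection, view);       // 2. transforms from the Camera
        gl.setLight(lightPos);                // 3. after the view, so it sits in world space
        for (DrawableShape s : shapes) {      // 4. every RenderShape in the list
            gl.pushMatrix();                  // 4a. save the view matrix...
            gl.multMatrix(s.myMatrix);        //     ...then apply the shape's transform
            gl.applyAppearance(s.appearance); // 4b. render state
            gl.bindVertexArrays(s.geometry);  // 4c. vertex data
            gl.draw();                        // 4d. draw call
            gl.popMatrix();                   // 4e. restore the plain view matrix
        }
        // 5. return; JOGL swaps buffers after display() returns
    }

    public static void main(String[] args) {
        RecordingGL gl = new RecordingGL();
        List<DrawableShape> shapes = new ArrayList<>();
        shapes.add(new DrawableShape(new float[16], "wood", "crate"));
        renderFrame(gl, new float[16], new float[16], new float[4], shapes);
        System.out.println(gl.log);
        // [clear, camera, light, push, mult, appearance:wood, bind:crate, draw, pop]
    }
}
```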

Render Order

Unfortunately, depending on what 3D objects are in the scene and how complex the final scene is, this basic pipeline may not be enough to get a correct view-dependent render. By view dependent we mean render results that depend on how the view or camera is positioned and oriented. It turns out that simply rendering objects in whatever order they appear in the scene list will probably not work for most scenes. One reason is that transparent objects will probably not render correctly. Object transparency is not automatic in modern 3D hardware; it takes significant effort to implement correctly in a 3D engine. There are a few more things that can be done to get a correct scene render, and some of them can also improve render performance.

Opaque and Transparent Graphics

For transparent objects to actually look transparent in the final frame, they must be rendered with the proper OpenGL blending function after all the opaque objects have been rendered. In addition, within that transparent render pass the transparent objects must be rendered from farthest to nearest from the camera’s perspective, a technique known as depth sorting or Z-sorting. To be absolutely correct, the 3D objects must be rendered far to near on a face-by-face basis, and any intersecting faces would have to be split and sorted. Whew! That could be a lot of work to set up.

Depth-Sorted Transparency

Totally correct scene transparency is often incredibly expensive to compute, especially for dynamic transparent geometry such as particle systems, so real-time 3D engines do the best they can by usually supporting only depth-sorted transparent objects (not individual triangles) and rendering them after all the opaque objects.

To properly support depth-sorted transparency, our renderer needs two distinct lists of RenderShapes to draw each frame: the opaque list and the transparent list. That is simple enough to add, but it has two consequences. First, the renderer should probably no longer hold a reference to the Scene object for accessing the scene list. Second, some additional process must step through the Scene’s RenderShapes list and sort it into the renderer’s opaque and transparent object lists, or bins. Sorting is probably an overglorified word for what needs to be done at this point. We call it sorting because this step will turn into a more complex sort as the engine becomes more complex. If our container classes are java.util.ArrayLists, the following code shows that this can be quite simple:

```java
// Assumes: import java.util.ArrayList;
// Splits a flat scene list into an opaque bin and a transparent bin.
static public void generateRenderBins(
        ArrayList<RenderShape> drawListOpaque,
        ArrayList<RenderShape> drawListTransparent,
        ArrayList<?> list) {
    for (int i = 0; i < list.size(); i++) {
        Object obj = list.get(i);
        // Only RenderShapes are drawn; other entries are skipped
        if (obj instanceof RenderShape) {
            RenderShape renderShape = (RenderShape) obj;
            // Shapes whose Appearance uses alpha go to the transparent bin
            if (renderShape.appearance.hasAlpha)
                drawListTransparent.add(renderShape);
            else
                drawListOpaque.add(renderShape);
        }
    }
}
```

This functionality could live in the Renderer class, in the Scene class, or even in some new class. Which location makes sense depends on how the Scene class holds the RenderShapes. The container can be a simple ArrayList, as in this example, or a much more complex structure more closely related to the actual game design. In fact, making the renderer process only an opaque draw list followed by a separate transparent draw list has a benefit. It keeps the renderer as dumb (meaning simple) as possible, yet still useful for many different Scene container implementations. Also, this simple renderer will not sort the transparent list; it simply steps through whatever is there and renders it in list order. If the list of transparent objects needs sorting, it should be sorted before it is given to the renderer. That may seem problematic, but it actually makes the renderer more generally useful. Some games, or portions of games, will not need any transparency sorting. Others may need greater control over how the sorting is done than a default renderer implementation would offer.

For example, in one particular game the camera may not move freely but may instead be constrained in some way. In that case, the transparent objects may need to be sorted only infrequently, at game-specified times. If the renderer sorted automatically, its sorting method would need to be overridden by the game application code anyway, or controlled in some other game-specific way. Why not just have the game handle the sorting directly, because it knows best when and how to do it?

This doesn’t mean we will skip the sorting in our example engine. Our engine will do a general Z-depth sort on all transparent objects, but the sorting will happen outside of the Renderer class and without its knowledge.
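The following is a minimal sketch of that dumb two-pass renderer, assuming a hypothetical `BinDrawTarget` interface and plain strings standing in for RenderShapes. It draws the opaque bin, switches blending on, and then draws the transparent bin in exactly the order it receives. It never sorts. The comment on `setBlending` lists the usual OpenGL state for such a pass. Keeping the depth test on while disabling depth writes is a common choice, not the only one.

```java
import java.util.Arrays;
import java.util.List;

/**
 * Hypothetical minimal draw interface. In JOGL, setBlending(true) would typically
 * correspond to glEnable(GL_BLEND), glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA)
 * and glDepthMask(false); setBlending(false) would undo those changes.
 */
interface BinDrawTarget {
    void setBlending(boolean enabled);
    void draw(String shapeName);
}

public class TwoPassRenderer {
    /** Draws both bins exactly as given; any depth sorting is the caller's job. */
    static void render(BinDrawTarget gl, List<String> opaque, List<String> transparent) {
        gl.setBlending(false);                           // pass 1: opaque, depth writes on
        for (String shape : opaque) gl.draw(shape);
        gl.setBlending(true);                            // pass 2: blended, depth-tested only
        for (String shape : transparent) gl.draw(shape); // list order, no sorting here
        gl.setBlending(false);                           // restore default state for next frame
    }

    public static void main(String[] args) {
        BinDrawTarget printer = new BinDrawTarget() {
            public void setBlending(boolean enabled) { System.out.println("blending " + enabled); }
            public void draw(String shapeName) { System.out.println("draw " + shapeName); }
        };
        render(printer, Arrays.asList("terrain", "tank"), Arrays.asList("far glass", "near glass"));
    }
}
```

Because sorting is left to the caller, a game with a constrained camera can re-sort the transparent bin only when it chooses to.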

Correctly rendering transparent objects is at least a three-step process. Two of the steps are splitting the objects into opaque and transparent lists and ordering the transparent list from far to near. The remaining step is finding the right depth values by which to sort the transparent objects. This is not as simple as it may seem at first, because the proper depth value is relative to the camera, not some inherent object value that can simply be retrieved. The depth value changes whenever the camera transform changes, even if the object never moves, because the sort depth depends on the camera’s location and orientation. In other words, we need the camera-space Z-depth of the object to use in the sort.

If the camera position is maintained as a transformation matrix, a quick transform of the object’s position will do. Depending on how a renderer’s camera data is stored, this may need to be the inverted camera matrix to transform the object position correctly. Also, some objects may not have an associated matrix. Others may have a matrix that does not represent the position we want to use for Z-sorting. This happens especially when objects were modeled already offset from the origin rather than positioned with a translation matrix. Therefore, an additional position representing the object’s intended center point may need to be stored:
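The Z-depth method in the chapter relies on a helper, `VecMatUtils.transformZ()`, whose source is not shown. The following is a minimal guess at what such a helper computes. It assumes OpenGL’s column-major layout, where element (row r, column c) is stored at index `c * 4 + r`. Only the third row of the view matrix affects the result’s Z, so just four multiplies are needed. The class `CameraSpaceDepth` and its methods are hypothetical. `viewForCameraAt()` builds the inverted camera placement mentioned above for an unrotated camera.

```java
/** Hypothetical helper: camera-space depth of a point (not the chapter's VecMatUtils). */
public final class CameraSpaceDepth {
    private CameraSpaceDepth() {}

    /**
     * Returns only the Z component of view * (x, y, z, 1) for a column-major 4x4
     * view matrix, where element (row r, column c) is stored at view[c * 4 + r].
     */
    static float transformZ(float[] view, float x, float y, float z) {
        return view[2] * x + view[6] * y + view[10] * z + view[14];
    }

    /** View matrix for an unrotated camera at (ex, ey, ez): the inverse of its placement. */
    static float[] viewForCameraAt(float ex, float ey, float ez) {
        return new float[] {1, 0, 0, 0,  0, 1, 0, 0,  0, 0, 1, 0,  -ex, -ey, -ez, 1};
    }

    public static void main(String[] args) {
        float[] view = viewForCameraAt(0f, 0f, 10f);
        // An OpenGL camera looks down -Z, so points in front of it get negative Z;
        // the more negative the value, the farther away the point.
        System.out.println(transformZ(view, 0f, 0f, 0f));  // -10.0
        System.out.println(transformZ(view, 0f, 0f, -5f)); // -15.0
    }
}
```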

```java
// Assumes: import java.util.ArrayList;
// VecMatUtils.transformZ() is a helper that returns only the
// camera-space Z coordinate of a transformed point.
static public void computeDrawListTransparentZDepth(
        Scene scene, ArrayList<RenderShape> drawListTransparent) {
    for (int i = 0; i < drawListTransparent.size(); i++) {
        float x, y, z;
        RenderShape renderShape = drawListTransparent.get(i);
        // Check if the object has a matrix or not,
        // so we can get the position to use for Z-sorting
        if (renderShape.myMatrix != null) {
            // Get the object position from the translation column
            // of the column-major matrix (elements 12, 13, 14)
            float[] mat = renderShape.myMatrix;
            x = mat[12];
            y = mat[13];
            z = mat[14];
        } else {
            // Get the object position as stored
            x = renderShape.x;
            y = renderShape.y;
            z = renderShape.z;
        }
        // Transform the object position into camera space,
        // keeping only the resulting Z coordinate for later sorting
        renderShape.cameraZ = VecMatUtils.transformZ(
            scene.getCamera().eyeViewMatrix, x, y, z);
    }
}
```

The steps go as follows:

1. Create a list of transparent-only objects. In our case, we generate it from the total list of objects in the Scene list.
2. Compute the Z-depth for each object from the camera’s current frame of reference.
3. Sort the list from farthest to nearest based on each object’s camera Z-depth values.

Incidentally, any popular sort (quicksort, merge sort, and so on) will work here. However, a sort that takes advantage of an already nearly sorted list, such as insertion sort, is nice because most games have high frame-to-frame coherency. That is, from frame to frame the scene doesn’t change much. This coherency enables all kinds of interesting optimizations, but they complicate the renderer design and are certainly not guaranteed to always be beneficial. We will look at some of these ideas later in this chapter.
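The following sketch shows such an insertion sort. It assumes OpenGL’s eye-space convention, in which the camera looks down -Z. Under that convention, the farther an object is, the more negative its `cameraZ`, so far-to-near order is ascending `cameraZ`. If depths were stored as positive distances instead, the comparison would flip. `DepthShape` and `FarToNearSort` are hypothetical names. When last frame’s order is still nearly right, the inner loop rarely runs, so the cost stays close to linear.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical minimal transparent shape: a name and its camera-space Z. */
class DepthShape {
    final String name;
    float cameraZ; // negative in front of an OpenGL camera; more negative = farther
    DepthShape(String name, float cameraZ) {
        this.name = name;
        this.cameraZ = cameraZ;
    }
}

public class FarToNearSort {
    /** Stable insertion sort into far-to-near order (ascending cameraZ). */
    static void sortFarToNear(List<DepthShape> list) {
        for (int i = 1; i < list.size(); i++) {
            DepthShape current = list.get(i);
            int j = i - 1;
            // Shift nearer shapes (larger cameraZ) one slot toward the end
            while (j >= 0 && list.get(j).cameraZ > current.cameraZ) {
                list.set(j + 1, list.get(j));
                j--;
            }
            list.set(j + 1, current);
        }
    }

    public static void main(String[] args) {
        List<DepthShape> transparent = new ArrayList<>();
        transparent.add(new DepthShape("near window", -2f));
        transparent.add(new DepthShape("far window", -40f));
        transparent.add(new DepthShape("smoke", -15f));
        sortFarToNear(transparent);
        for (DepthShape s : transparent) System.out.println(s.name);
        // far window, smoke, near window
    }
}
```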

Geometry and Matrix Hierarchies

At this point we have a pretty good 3D object renderer. It can handle multiple objects, with different kinds of render states, including textures, lighting, and Z-depth-sorted transparency. Moving objects is a snap with matrix transforms, and even dynamic geometry is optimized. However, manipulating groups of objects together can be quite cumbersome because everything is in list form.

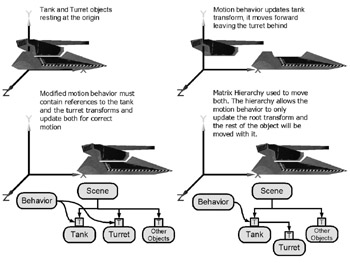

A classic example of the problem of moving groups of objects together is a simple vehicle. Probably the simplest case is a tank with a turret. Our example will be a fictional hover tank.

The problem is obvious once we start trying to make the tank drivable. The tank and turret are two different shapes and, therefore, would be two different RenderShapes in our renderer. When the driving motion code executes and updates the tank transform to make it move in a particular direction, it leaves behind the turret object floating in midair. The reason this happens is that the turret does not know it should move with the tank, because it exists as a separate object.

One possible solution to this problem is to modify the tank-driving-motion code to know about the tank body and the turret and to update the turret’s transform to move forward when it does the same for the tank body. For a simple tank and turret example, this is not that bad of a solution. But what if the design calls for more articulated parts, such as another weapon or maybe a hatch on the tank body? The tank motion code would need to be written to know about all the separate parts of a tank to move all those objects as well. This can get to be tedious and error prone as object groupings become more and more complex.

What we need is a geometry hierarchy. A geometry hierarchy replaces the list-based scene structure with a tree of grouped objects. The tree fixes our moving-object problem because it also contains local transformation matrices between grouped objects. The final transformation of any leaf RenderShape is the concatenation of all the tree’s transformation matrices from the root down to that RenderShape.
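Written out with OpenGL’s column-vector convention, this gives the following result. Consider a RenderShape that sits below transform nodes with local matrices M_1 (nearest the root) through M_k (nearest the shape). Its global transform, and the hover tank case in particular, are:

```latex
M_{\text{global}} = M_1 \, M_2 \cdots M_k,
\qquad
\mathbf{p}_{\text{world}} = M_{\text{global}} \, \mathbf{p}_{\text{local}}

M_{\text{turret,global}} = M_{\text{tank}} \, M_{\text{turret,local}}
```

Because the turret’s global matrix is recomputed from the tank’s matrix, the driving code only has to update the tank’s transform, and the turret follows.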

Instead of representing the whole tank as a list of independent RenderShapes, it can be a simple hierarchy of transformed objects, where the final global object transformation is inherited from all the transforms above it in the hierarchy (see Figure 15.4).

Figure 15.4: Transformation hierarchy.

Matrix hierarchies are not an automatic creation, just as correct transparency isn’t automatic. That is, simply creating the hierarchy data structures does not also create the correct final render; it will take more work than that. The transform state of the render pipeline needs to be modified to follow the matrix hierarchy for each final object that must be rendered.

One possible implementation of dealing with the hierarchy is to make the render engine itself contain a reference to the hierarchical structure, and many 3D engines work this way. Unfortunately, this complicates the render engine again by forcing it to know how to process tree structures but, more important, ultimately it limits the render engine’s general usefulness by forcing it to be based on a specific tree implementation or interface.

To avoid this, we will keep the hierarchical structure abstracted from the final render engine’s data. The render engine will operate as it always has, simply rendering out a list of singly transformed objects. However, we will build a new structure, held by the Scene object, for containing the objects in a transform hierarchy. When the Scene executes its usual process of generating the opaque and transparent render bins for the renderer, it will also perform an extra step: flattening the transform hierarchy, also known as converting from local to global coordinates. It will compute the final per-object transform based on the hierarchy’s transformations and the objects’ hierarchical relationships.

To do this, the Scene class will perform a recursive depth-first traversal of the entire hierarchy. As it descends, it multiplies each local matrix into the accumulated transform until it reaches an actual RenderShape. It then sets that RenderShape’s myMatrix to the current accumulation. This accumulated transform is called the global transform, and it is what the renderer will use for that particular object. It is possible to do this directly in OpenGL using its push and pop matrix calls. But then the final transform would not be computed by the engine, and it would not be readily available for depth-sorting transparencies or for collision processing. In addition, many OpenGL implementations limit the modelview matrix stack to a depth of 32 (the minimum the specification requires), and a complex scene hierarchy may be deeper than that.

To set up this transform hierarchy we must make a few new classes. We will use a Java object as our base, Group to act as the branching node that holds other nodes using a java.util.ArrayList, and TransformGroup to be a special type of group that contains the local matrix at that point in the hierarchy.

```java
// Assumes: import java.util.ArrayList;
// Matrix4f is the chapter's own 4x4 matrix class.
public class Group {
    ArrayList<Object> children = new ArrayList<Object>();

    /** @return the list of child nodes */
    public ArrayList<Object> getChildren() {
        return children;
    }
}

public class TransformGroup extends Group {
    Matrix4f matrix = new Matrix4f();

    /** @return the local transform at this point in the hierarchy */
    public Matrix4f getMatrix() {
        return matrix;
    }

    /** @param matrix4f the new local transform */
    public void setMatrix(Matrix4f matrix4f) {
        matrix = matrix4f;
    }
}
```

Because our Group class uses an ArrayList as its container mechanism, we can add any Java object to it, including other Groups, TransformGroups, and the actual RenderShapes the renderer will render. The modified generateRenderBins(), which is now recursive and incorporates the flattening operation, looks like this:

```java
// Assumes: import java.util.ArrayList;
// Matrix4f is the chapter's own matrix class: mult(a, b) is assumed to
// store a * b in this matrix, and toFloatArray() to return a float[16].
static public void generateRenderBins(
        ArrayList<RenderShape> drawListOpaque,
        ArrayList<RenderShape> drawListTransparent,
        Object node, Matrix4f matIn) {
    if (node instanceof RenderShape) {
        // Object is a RenderShape: assign the current matrix
        // accumulation and place it in the appropriate bin
        RenderShape renderShape = (RenderShape) node;
        renderShape.myMatrix = matIn.toFloatArray();
        if (renderShape.appearance.hasAlpha)
            drawListTransparent.add(renderShape);
        else
            drawListOpaque.add(renderShape);
    } else if (node instanceof TransformGroup) {
        // Object is a TransformGroup: accumulate its local matrix
        // into a new global matrix, then recurse into the children
        TransformGroup transformGroup = (TransformGroup) node;
        Matrix4f matG = new Matrix4f();
        matG.mult(matIn, transformGroup.getMatrix());
        for (int i = 0; i < transformGroup.getChildren().size(); i++) {
            generateRenderBins(drawListOpaque, drawListTransparent,
                               transformGroup.getChildren().get(i), matG);
        }
    } else if (node instanceof Group) {
        // Object is a plain Group: recurse with the matrix unchanged
        Group group = (Group) node;
        for (int i = 0; i < group.getChildren().size(); i++) {
            generateRenderBins(drawListOpaque, drawListTransparent,
                               group.getChildren().get(i), matIn);
        }
    }
}
```

When this method is called, it is passed the root of the hierarchy and an identity Matrix4f. Production systems apply several optimizations. For example, the TransformGroup class can hold two matrices, one local and one global. The traversal then no longer needs to keep creating new Matrix4f objects for the current global matrix. Also, passing in an initial identity matrix is a bit wasteful, but it keeps the recursion as simple as possible. Allowing a null matrix to stand for the identity is another good improvement.
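Matrix4f’s `mult()` and `toFloatArray()` belong to the chapter’s own matrix class, so the recursion above can’t be run on its own. The following self-contained sketch follows the same flattening logic under different assumptions. It uses plain column-major `float[16]` matrices and simplified, hypothetical stand-ins for Group, TransformGroup, and RenderShape. It then flattens a two-level hover-tank hierarchy. Moving only the tank’s TransformGroup carries the turret along.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified stand-ins for the chapter's node classes (hypothetical). */
class Group {
    final List<Object> children = new ArrayList<>();
}

class TransformGroup extends Group {
    final float[] matrix; // local transform, column-major 4x4
    TransformGroup(float[] matrix) { this.matrix = matrix; }
}

class RenderShape {
    final String name;
    final boolean hasAlpha;
    float[] myMatrix; // global transform, filled in by the flattening pass
    RenderShape(String name, boolean hasAlpha) {
        this.name = name;
        this.hasAlpha = hasAlpha;
    }
}

public class FlattenHierarchy {
    /** Returns a * b for column-major 4x4 matrices (element (r, c) at index c*4 + r). */
    static float[] mul(float[] a, float[] b) {
        float[] c = new float[16];
        for (int col = 0; col < 4; col++) {
            for (int row = 0; row < 4; row++) {
                float sum = 0f;
                for (int k = 0; k < 4; k++) {
                    sum += a[k * 4 + row] * b[col * 4 + k];
                }
                c[col * 4 + row] = sum;
            }
        }
        return c;
    }

    static float[] translation(float x, float y, float z) {
        return new float[] {1, 0, 0, 0,  0, 1, 0, 0,  0, 0, 1, 0,  x, y, z, 1};
    }

    /** Depth-first flattening: parent accumulation times local matrix. */
    static void generateRenderBins(List<RenderShape> opaque, List<RenderShape> transparent,
                                   Object node, float[] matIn) {
        if (node instanceof RenderShape) {
            RenderShape shape = (RenderShape) node;
            shape.myMatrix = matIn.clone(); // copy so shapes never share one array
            if (shape.hasAlpha) transparent.add(shape); else opaque.add(shape);
        } else if (node instanceof Group) {
            // A TransformGroup contributes its local matrix; a plain Group passes matIn on
            float[] acc = (node instanceof TransformGroup)
                    ? mul(matIn, ((TransformGroup) node).matrix)
                    : matIn;
            for (Object child : ((Group) node).children) {
                generateRenderBins(opaque, transparent, child, acc);
            }
        }
    }

    public static void main(String[] args) {
        Group root = new Group();
        TransformGroup tank = new TransformGroup(translation(10f, 0f, 0f));       // driven tank
        TransformGroup turretMount = new TransformGroup(translation(0f, 2f, 0f)); // turret on top
        RenderShape hull = new RenderShape("hull", false);
        RenderShape turret = new RenderShape("turret", false);
        root.children.add(tank);
        tank.children.add(hull);
        tank.children.add(turretMount);
        turretMount.children.add(turret);

        List<RenderShape> opaque = new ArrayList<>();
        List<RenderShape> transparent = new ArrayList<>();
        generateRenderBins(opaque, transparent, root, translation(0f, 0f, 0f));
        // The turret's global translation is the tank's plus its own local offset
        System.out.println(turret.myMatrix[12] + ", " + turret.myMatrix[13] + ", " + turret.myMatrix[14]);
        // prints 10.0, 2.0, 0.0
    }
}
```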

Putting this all together in a custom Animator class, the final render cycle might look like this:

```java
// Matrix4f is the chapter's own matrix class; a freshly constructed
// Matrix4f is assumed to be the identity.
Matrix4f identity = new Matrix4f();
while (!renderStop) {
    // The bins are rebuilt every frame, so empty last frame's contents first
    renderer.drawListOpaque.clear();
    renderer.drawListTransparent.clear();
    // Flatten the hierarchy into the bins; getSceneList() is assumed
    // here to return the root node of the scene hierarchy
    generateRenderBins(renderer.drawListOpaque,
                       renderer.drawListTransparent,
                       renderer.getScene().getSceneList(),
                       identity);
    computeDrawListTransparentZDepth(renderer.getScene(),
                                     renderer.drawListTransparent);
    renderer.sortDrawListTransparentByDepth();
    renderer.render();
}
```

What we have created here is a versatile and useful system for general object rendering. It doesn’t hide the details, but it also isn’t overly complex. However, it’s still not quite complete for many types of applications. For example, suppose we try to render a really large and complex scene, such as a city environment. We will get very poor performance, because we would be processing every object in the scene even though most of the scene would not actually be in view of the camera. It turns out that the best solution to this particular problem varies widely with the type of scene. We will look at several approaches for dealing with it, but first we will take a slight detour and reexamine this rendering engine from a more top-down perspective.

Geometry and matrix hierarchies are a great way to handle groups of objects cleanly and efficiently. However, in 3D graphics there is an immediate successor to the simple geometry hierarchy, called the scene graph. It adds even more functionality, albeit at the cost of more complexity.
