Security Architecture and Design

Overview

The security architecture of an information system is fundamental to enforcing an organization’s information security policy. The security architecture comprises security mechanisms and services that are part of the system architecture and designed to meet the system security requirements. Therefore, it is important for security professionals to understand the underlying computer architectures, protection mechanisms, distributed environment security issues, and formal models that provide the framework for the security policy. In addition, professionals should have knowledge of the assurance evaluation, certification and accreditation guidelines, and standards. We address the following topics in this chapter:

- Computer organization

- Hardware components

- Software/firmware components

- Open systems

- Distributed systems

- Protection mechanisms

- Evaluation criteria

- Certification and accreditation

- Formal security models

- Confidentiality models

- Integrity models

- Information flow models

Computer Architecture

The term computer architecture refers to the organization of the fundamental elements composing the computer. From another perspective, it refers to the view a programmer has of the computing system when viewed through its instruction set. The main hardware components of a digital computer are the Central Processing Unit (CPU), memory, and input/output devices. The basic CPU of a general-purpose digital computer consists of an Arithmetic Logic Unit (ALU), control logic, one or more accumulators, multiple general-purpose registers, an instruction register, a program counter, and some on-chip local memory. The ALU performs arithmetic and logical operations on the binary words of the computer.

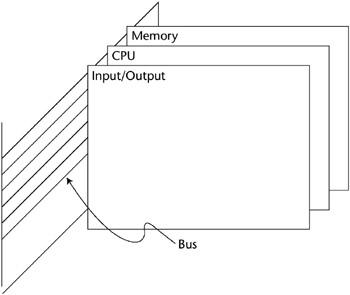

A group of conductors called a bus interconnects these computer elements. The bus runs in a common plane, and the different computer elements are connected to it. A bus can be organized into subunits, such as the address bus, data bus, and control bus. A diagram of the organization of a bus is shown in Figure 5-1.

Figure 5-1: A computer bus.

Memory

Several types of memory are used in digital computer systems. The principal types of memory and their definitions are as follows:

- Cache memory. A relatively small amount (when compared to primary memory) of very high-speed random-access memory (RAM) that holds the instructions and data from primary memory that have a high probability of being accessed during the currently executing portion of a program. Cache logic attempts to predict which instructions and data in main memory will be used by a currently executing program. It then moves these items to the higher-speed cache in anticipation of the CPU requiring these programs and data. Properly designed caches can significantly reduce the apparent main memory access time and thus increase the speed of program execution.

- Random Access Memory (RAM). Memory whose locations can be directly addressed and in which the data that is stored can be altered. RAM is volatile; that is, the data is lost if power to the system is turned off. Dynamic RAM (DRAM) stores the information by using capacitors that are subject to parasitic currents that cause the charges to decay over time. Therefore, the data on each RAM bit must be periodically refreshed. Refreshing is accomplished by reading and rewriting each bit every few milliseconds. In contrast, static RAM (SRAM) uses latch circuits to store the bits and does not need to be refreshed. Both types of RAM, however, are volatile.

- RDRAM Memory (Rambus DRAM). Based on Rambus Signaling Level (RSL) technology introduced in 1992, RSL RDRAM devices provide systems with 16 MB to 2 GB of memory capacity at speeds of up to 1066 MHz. The RDRAM channel achieves high speeds through the use of separate control and address buses, a highly efficient protocol, low-voltage signaling, and precise clocking to minimize skew between clock and data lines. As of this writing, RSL technology is approaching 1200 MHz speeds.

- Programmable Logic Device (PLD). An integrated circuit with connections or internal logic gates that can be changed through a programming process. Examples of a PLD are a Read Only Memory (ROM), a Programmable Array Logic (PAL) device, the Complex Programmable Logic Device (CPLD), and the Field Programmable Gate Array (FPGA). Programming of these devices is accomplished by blowing fuse connections on the chip, using an antifuse that makes a connection when a high voltage is applied to the junction, through mask programming when a chip is fabricated, and by using SRAM latches to turn a Metal Oxide Semiconductor (MOS) transistor on or off. This last technology is volatile because the power to the chip must be maintained for the chip to operate.

- Read-Only Memory (ROM). Nonvolatile storage whose locations can be directly addressed. In a basic ROM implementation, data cannot be altered dynamically. Nonvolatile storage retains its information even when it loses power. Some ROMs are implemented with one-way fusible links, and their contents cannot be altered. Other types of ROMs - such as Erasable, Programmable Read-Only Memories (EPROMs), Electrically Alterable Read Only Memories (EAROMs), Electrically Erasable Programmable Read Only Memories (EEPROMs), Flash memories, and their derivatives - can be altered by various means, but only at a relatively slow rate when compared to normal computer system reads and writes. ROMs are used to hold programs and data that should normally not be changed or that are changed infrequently. Programs stored on these types of devices are referred to as firmware.

- Real or primary memory. The memory directly addressable by the CPU and used for the storage of instructions, and data associated with the program that is being executed. This memory is usually high-speed RAM.

- Secondary memory. A slower memory (such as magnetic disks) that provides nonvolatile storage.

- Sequential memory. Memory from which information must be obtained by sequentially searching from the beginning rather than directly accessing the location. A good example of a sequential memory access is reading information from a magnetic tape.

- Virtual memory. This type of memory uses secondary memory in conjunction with primary memory to present the CPU with an apparent address space that is larger than the real memory. Essentially, the secondary memory space appears to augment and increase the size of primary RAM. This apparent increase in primary memory space is accomplished through swapping or paging operations between primary and secondary memory. In swapping, the entire memory area in secondary memory that is required for running a particular program is moved to primary memory. When the program execution is completed, the associated memory space is returned to secondary memory. Paging entails moving a defined secondary memory area associated with a program, called a page or page frame, into primary memory for execution. Multiple page frames are rotated into and out of primary memory until the program execution is complete.

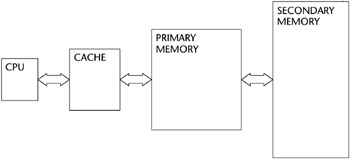

A typical memory hierarchy is shown in Figure 5-2.

Figure 5-2: A computer memory hierarchy.
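
The paging operation described under virtual memory can be sketched in a few lines of Python. This is a minimal illustration rather than a model of any real memory-management unit: the 4 KB page size, the `page_table` dictionary, the `PageFault` exception, and the `translate` function are all assumptions introduced here.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (hypothetical)

# Hypothetical page table: virtual page number -> physical frame number.
# None means the page currently resides only in secondary memory.
page_table = {0: 5, 1: None, 2: 9}


class PageFault(Exception):
    """Raised when the requested page is not in primary memory."""


def translate(virtual_address):
    """Split a virtual address into page and offset, then map it to a physical address."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page)
    if frame is None:
        # A real operating system would now bring the page in from
        # secondary memory and retry the access.
        raise PageFault(f"page {page} is not resident")
    return frame * PAGE_SIZE + offset


physical = translate(8200)  # virtual page 2, offset 8, mapped to frame 9
```

An access to a page that is marked `None` raises `PageFault`, which stands in for the point at which the operating system would move the page from secondary to primary memory.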

There are several ways for a CPU to address memory. These options provide flexibility and efficiency when programming different types of applications, such as searching through a table or processing a list of data items. The following are some of the commonly used addressing modes:

- Register addressing. Addressing the registers within a CPU, or other special purpose registers that are designated in the primary memory.

- Direct addressing. Addressing a portion of primary memory by specifying the actual address of the memory location. The memory addresses are usually limited to the memory page that is being executed or to page zero.

- Absolute addressing. Addressing all the primary memory space.

- Indexed addressing. Developing a memory address by adding the address defined in the program’s instruction to the contents of an index register. The computed effective address is used to access the desired memory location. Thus, if an index register is incremented or decremented, a range of memory locations can be accessed.

- Implied addressing. Used when operations that are internal to the processor must be performed, such as clearing a carry bit that was set as a result of an arithmetic operation. Because the operation is being performed on an internal register that is specified within the instruction itself, there is no need to provide an address.

- Indirect addressing. Addressing where the address location that is specified in the program instruction contains the address of the final desired location.

An associated concept is memory protection:

- Memory protection. Preventing one program from accessing and modifying the memory space contents that belong to another program. Memory protection is implemented by the operating system or by hardware mechanisms.
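
The difference between several of these addressing modes is easier to see on a toy machine. The following Python sketch is illustrative only: the 16-word `memory`, the register names `A` and `X`, and the helper functions are hypothetical and do not correspond to any particular processor.

```python
# Toy machine state (all values are hypothetical).
memory = [0] * 16
memory[3] = 42                 # operand reached by direct addressing
memory[5] = 7                  # holds the address of the final operand
memory[7] = 99                 # final operand for the indirect access
memory[10:13] = [11, 22, 33]   # small table for indexed addressing
registers = {"A": 1, "X": 2}   # accumulator and index register


def register_operand(name):
    """Register addressing: the operand is in a CPU register."""
    return registers[name]


def direct_operand(address):
    """Direct addressing: the instruction carries the operand's address."""
    return memory[address]


def indexed_operand(base_address):
    """Indexed addressing: effective address = instruction address + index register."""
    effective_address = base_address + registers["X"]
    return memory[effective_address]


def indirect_operand(pointer_address):
    """Indirect addressing: the addressed location holds the final address."""
    return memory[memory[pointer_address]]
```

With the index register `X` set to 2, `indexed_operand(10)` reads location 12; incrementing or decrementing `X` would step through the rest of the table, which is why indexed addressing suits list and table processing.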

Instruction Execution Cycle

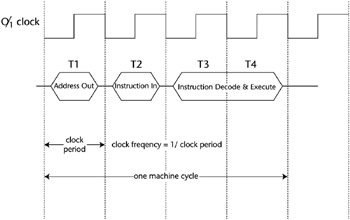

A basic machine cycle consists of two phases: fetch and execute. In the fetch phase, the CPU presents the address of the instruction to memory, and it retrieves the instruction located at that address. Then, during the execute phase, the instruction is decoded and executed. This cycle is controlled by and synchronized with the CPU clock signals. Because of the need to refresh dynamic RAM, multiple clock signals known as multi-phase clock signals are needed. Static RAM does not require refreshing and uses single-phase clock signals. In addition, some instructions may require more than one machine cycle to execute, depending on their complexity. A typical machine cycle showing a single-phase clock is shown in Figure 5-3. Note that in this example, four clock periods are required to execute a single instruction.

Figure 5-3: A typical machine cycle.
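
The two-phase cycle can be sketched as a loop. The Python below is a simplified illustration, assuming a hypothetical three-instruction machine (`LOAD`, `ADD`, `HALT`) whose instructions are stored as `(opcode, operand)` tuples; it ignores clocking entirely.

```python
def run(program):
    """Run a toy program and return the final accumulator value."""
    accumulator = 0
    program_counter = 0
    while True:
        # Fetch phase: present the address and retrieve the instruction.
        instruction_register = program[program_counter]
        program_counter += 1

        # Execute phase: decode the instruction and carry it out.
        opcode, operand = instruction_register
        if opcode == "LOAD":
            accumulator = operand
        elif opcode == "ADD":
            accumulator += operand
        elif opcode == "HALT":
            return accumulator
        else:
            raise ValueError(f"unknown opcode: {opcode}")


result = run([("LOAD", 2), ("ADD", 3), ("HALT", 0)])
```

Here `program_counter` and `instruction_register` play the roles of the CPU registers with the same names described earlier in the chapter.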

A computer can be in a number of different states during its operation. When a computer is executing instructions, this situation is sometimes called the run or operating state. When application programs are being executed, the machine is in the application or problem state because it is (hopefully) calculating the solution to a problem. For security purposes, user applications in this state are permitted to access only a subset of the total instruction set that is built into the computer. This subset is known as the nonprivileged instructions. Privileged instructions are restricted to the operating system itself or to certain users, such as the system administrator or another individual who is authorized to use them. A computer is in a supervisory state when it is executing these privileged instructions. The computer can also be in a wait state; for example, when it accesses memory that is slow relative to the instruction cycle time, it must extend the cycle.

After examining a basic machine cycle, it is obvious that there are opportunities for enhancing the speed of retrieving and executing instructions. Some of these methods include overlapping the fetch and execute cycles, exploiting opportunities for parallelism, anticipating instructions that will be executed later, fetching and decoding instructions in advance, and so on. Modern computer design incorporates these methods, and their key approaches are provided in the following definitions:

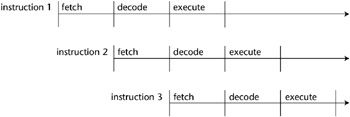

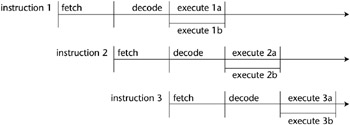

- Pipelining. Increases the performance of a computer by overlapping the steps of different instructions. For example, if the instruction cycle is divided into three parts - fetch, decode, and execute - instructions can be overlapped (as shown in Figure 5-4) to increase the execution speed of the instructions.

Figure 5-4: Instruction pipelining.

- Complex Instruction Set Computer (CISC). A computer that uses instructions that perform many operations per instruction. This concept is based on the fact that in earlier technologies, the instruction fetch was the longest part of the cycle. Therefore, by packing the instructions with several operations, the number of fetches could be reduced.

- Reduced Instruction Set Computer (RISC). A computer that uses instructions that are simpler and require fewer clock cycles to execute. This approach was a result of the increase in the speed of memories and other processor components, which enabled the fetch part of the instruction cycle to be no longer than any other portion of the cycle. In fact, performance was limited by the decoding and execution times of the instruction cycle.

- Scalar Processor. A processor that executes one instruction at a time.

- Superscalar Processor. A processor that enables the concurrent execution of multiple instructions in the same pipeline stage as well as in different pipeline stages.

- Very-Long Instruction Word (VLIW) Processor. A processor in which a single instruction specifies more than one concurrent operation. For example, the instruction might specify and concurrently execute two operations in one instruction. VLIW processing is illustrated in Figure 5-5.

Figure 5-5: Very-Long Instruction Word (VLIW) processing.

- Multi-programming. Executes two or more programs simultaneously on a single processor (CPU) by alternating execution among the programs.

- Multi-tasking. Executes two or more subprograms or tasks at the same time on a single processor (CPU) by alternating execution among the tasks.

- Multiprocessing. Executes two or more programs at the same time on multiple processors. In symmetric multiprocessing, the processors share the same operating system, memory, and data paths, while in massively parallel multiprocessing, large numbers of processors are used. In this architecture, each processor has its own memory and operating system, but communicates and cooperates with all the other processors.
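
The benefit of the pipelining entry above can be estimated with simple arithmetic. The sketch below assumes an idealized pipeline in which every stage takes exactly one clock cycle and no stalls occur; the function names are hypothetical.

```python
def sequential_cycles(instructions, stages):
    """Cycles needed when each instruction finishes before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions, stages):
    """Cycles needed when a new instruction enters the pipeline every cycle."""
    if instructions == 0:
        return 0
    # The first instruction needs `stages` cycles to complete;
    # each later instruction completes one cycle after its predecessor.
    return stages + instructions - 1


# Four instructions on a three-stage (fetch, decode, execute) machine.
without_pipeline = sequential_cycles(4, 3)
with_pipeline = pipelined_cycles(4, 3)
```

Under these assumptions, four instructions take 12 cycles without overlap and 6 cycles with it. Real pipelines fall short of this ideal whenever one instruction must wait for the result of another.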

Input Output Structures

A processor communicates with outside devices through interface devices called input/output (I/O) interface adapters. In many cases, these adapters are complex devices that provide data buffering and timing and interrupt controls. Adapters have addresses on the computer bus and are selected by the computer instructions. If an adapter is given an address in the memory space and thus takes up a specific memory address, this design is known as memory-mapped I/O. The advantage of this approach is that a CPU sees no difference in instructions between the I/O adapter and any other memory location. Therefore, all the computer instructions that are associated with memory can be used for the I/O device. On the other hand, in isolated I/O a special signal on the bus indicates that an I/O operation is being executed. This signal distinguishes an address for an I/O device from an address to memory. The signal is generated as a result of the execution of a few selected I/O instructions in the computer’s instruction set. The advantage of an isolated I/O is that its addresses do not use up any addresses that could be used for memory. The disadvantage is that the I/O data accesses and manipulations are limited to a small number of specific I/O instructions in the processor’s instruction set. Both memory-mapped and isolated I/Os are termed programmed I/Os.
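
The contrast between the two designs can be illustrated with a toy bus model. In this Python sketch, the classes `Device`, `MemoryMappedBus`, and `IsolatedBus`, together with their method names, are hypothetical; the `out` method merely stands in for a dedicated I/O instruction.

```python
class Device:
    """Hypothetical output device that records the values written to it."""

    def __init__(self):
        self.received = []

    def write(self, value):
        self.received.append(value)


class MemoryMappedBus:
    """The adapter occupies one address in the ordinary memory space."""

    def __init__(self, size, device, device_address):
        self.memory = [0] * size
        self.device = device
        self.device_address = device_address

    def store(self, address, value):
        # The same store instruction serves both memory and the device.
        if address == self.device_address:
            self.device.write(value)
        else:
            self.memory[address] = value


class IsolatedBus:
    """Memory and I/O have separate address spaces."""

    def __init__(self, size, ports):
        self.memory = [0] * size
        self.ports = ports  # port number -> device

    def store(self, address, value):
        # Ordinary memory instruction: the I/O signal is not asserted.
        self.memory[address] = value

    def out(self, port, value):
        # Dedicated I/O instruction: the I/O signal selects the port space.
        self.ports[port].write(value)
```

In the memory-mapped model, the address given to the adapter is no longer available for memory. In the isolated model, address 15 and port 15 can coexist, but the device is reachable only through `out`.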

In a programmed I/O, data transfers are a function of the speed of the instruction’s execution, which manipulates the data that goes through a CPU. A faster alternative is direct memory access (DMA). With DMA, data is transferred directly to and from memory without going through a CPU. DMA controllers accomplish this direct transfer in the time interval between the instruction executions. The data transfer rate in DMA is limited primarily by the memory cycle time. The path of the data transfer between memory and a peripheral device is sometimes referred to as a channel.

Another alternative to moving data into and out of a computer is through the use of interrupts. In interrupt processing, an external signal interrupts the normal program flow and requests service. The service may consist of reading data or responding to an emergency situation. Adapters provide the interface for handling the interrupts and the means for establishing priorities among multiple interrupt requests. When a CPU receives an interrupt request, it will save the current state of the information related to the program that is currently running, and it will then jump to another program that services the interrupt. When the interrupt service is completed, the CPU restores the state of the original program and continues processing. Multiple interrupts can be handled concurrently by nesting the interrupt service routines. Interrupts can be turned off or masked if a CPU is executing high-priority code and does not want to be delayed in its processing. A security issue associated with interrupts is interrupt redirection. With this type of attack, an interrupt is redirected to a location in memory to begin execution of malicious code.
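
The save, service, and restore sequence, including nesting and masking, can be sketched as follows. The `ToyCPU` class, its fields, and the two handlers are hypothetical and greatly simplified; in particular, this sketch merely logs a masked request, whereas real hardware typically holds it pending.

```python
class ToyCPU:
    def __init__(self):
        self.state = {"pc": 100, "acc": 7}  # state of the running program (hypothetical)
        self.saved_states = []              # a stack permits nested interrupts
        self.masked = False
        self.log = []

    def interrupt(self, name, handler):
        if self.masked:
            self.log.append(f"{name} deferred (masked)")
            return False
        self.saved_states.append(dict(self.state))  # save the current state
        handler(self)                               # jump to the service routine
        self.state = self.saved_states.pop()        # restore the state and resume
        self.log.append(f"{name} serviced")
        return True


def timer_handler(cpu):
    cpu.state["acc"] = 0  # a service routine may overwrite registers freely


def disk_handler(cpu):
    cpu.state["pc"] = 500
    cpu.interrupt("timer", timer_handler)  # a second interrupt arrives mid-service


cpu = ToyCPU()
cpu.interrupt("disk", disk_handler)    # the nested timer interrupt completes first
cpu.masked = True
cpu.interrupt("timer", timer_handler)  # not serviced while interrupts are masked
```

Because each service routine runs against a saved copy of the state, the original program resumes with its program counter and accumulator unchanged. Interrupt redirection attacks target the step marked "jump to the service routine" by substituting a malicious handler.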

Software

The CPU of a computer is designed to support the execution of a set of instructions associated with that computer. This set consists of a variety of instructions such as “add with carry,” “rotate bits left,” “move data,” and “jump to location X.” Each instruction is represented as a binary code that the instruction decoder of the CPU is designed to recognize and execute. These instructions are referred to as machine language instructions. The code of each machine language instruction is associated with an English-like mnemonic to make it easier for people to work with the codes. This set of mnemonics for the computer’s basic instruction set is called its assembly language, which is specific to that particular model computer. Thus, there is a one-to-one correspondence of each assembly language instruction to each machine language instruction. For example, in a simple 8-bit instruction word computer, the binary code for the “add with carry” machine language instruction may be 10011101, and the corresponding assembly language mnemonic could be ADC. A programmer who is writing this code at the machine language level would write the code, using mnemonics for each instruction. Then the mnemonic code would be passed through another program, called an assembler, that would perform the one-to-one translation of the assembly language code to the machine language code. The code generated by the assembler running on the computer is called the object code, and the original assembly code is called the source code. The assembler software can be resident on the computer being programmed and thus is called a resident assembler. If the assembler is being run on another kind of computer, the assembler is called a cross-assembler. Cross-assemblers can run on various types and models of computers. A disassembler reverses the function of an assembler by translating machine language into assembly language.
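
The one-to-one translation performed by an assembler, and its reversal by a disassembler, can be sketched with a lookup table. In the Python below, only the `ADC` code 10011101 comes from the example above; the `ROL` and `JMP` codes and the function names are invented for illustration.

```python
# ADC = 10011101 is taken from the text; the other opcodes are hypothetical.
OPCODES = {"ADC": 0b10011101, "ROL": 0b00101010, "JMP": 0b01001100}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def assemble(source):
    """Translate source code (one mnemonic per line) into object code."""
    lines = [line.strip().upper() for line in source.splitlines()]
    return bytes(OPCODES[line] for line in lines if line)


def disassemble(object_code):
    """Translate object code back into assembly language mnemonics."""
    return "\n".join(MNEMONICS[byte] for byte in object_code)


object_code = assemble("ADC\nJMP")
round_trip = disassemble(object_code)
```

Because the mapping is one-to-one, disassembling the object code recovers the original mnemonics exactly. This sketch omits operands, labels, and macros, which a real assembler must also handle.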

If a group of assembly language statements is used to perform a specific function, they can be defined to the assembler with a name called a macro. Then, instead of writing the list of statements, the macro can be called, causing the assembler to insert the appropriate statements.

Because it is desirable to write software in higher-level, English-like statements, high-level or high-order languages are employed. In these languages, one statement usually requires several machine language instructions for its implementation. Therefore, in contrast to assembly language, there is a one-to-many relationship of high-level language instructions to machine language instructions. Pascal, FORTRAN, BASIC, and Java are examples of high-level languages. High-level languages are converted to the appropriate machine language instructions through either an interpreter or compiler programs. An interpreter operates on each high-level language source statement individually and performs the indicated operation by executing the corresponding predefined sequence of machine language instructions. Thus, the instructions are executed immediately. BASIC is a traditional example of an interpreted language; Java source is first compiled to bytecode, which the Java Virtual Machine then interprets. In contrast, a compiler translates the entire software program into its corresponding machine language instructions. These instructions are then loaded in the computer’s memory and are executed as a program package. FORTRAN is an example of a compiled language. From a security standpoint, a compiled program is less desirable than an interpreted one because malicious code can be resident somewhere in the compiled code, and it is difficult to detect in a very large program.

Programming languages have been grouped into five generations, labeled 1GL (first-generation language), 2GL, and so forth. The generations are as follows:

- 1GL - A computer’s machine language

- 2GL - An assembly language

- 3GL - FORTRAN, BASIC, PL/1, and C languages

- 4GL - NATURAL, FOCUS, and database query languages

- 5GL - Prolog, LISP, and other artificial intelligence languages that process symbols or implement predicate logic

The program (or set of programs) that controls the resources and operations of the computer is called an operating system (OS). Operating systems perform process management, memory management, system file management, and I/O management. Windows XP, Windows 2000, Linux, and Unix are some examples of these operating systems.

An operating system is loaded into the computer’s RAM by a small loading, bootstrap, or boot program. This operation is sometimes referred to as the Initial Program Load (IPL) on large systems. The software in a computer system is usually designed in layers, with the operating system serving as the core major layer and the applications running at a higher level and using the OS resources. The operating system itself is designed in layers, with less complex functions forming the lower layers and higher layers performing more complex operations built on the lower-level primitive elements. Security services are better run in the lower layers, because they run faster and require less overhead. Also, access to the lower, critical layers can be restricted according to the privileges assigned to an individual or process.

An OS provides all the functions necessary for the computer system to execute programs in a multitasking mode. It also communicates with I/O systems through a controller. A controller is a device that serves as an interface to the peripheral and runs specialized software to manage communications with another device. For example, a disk controller is used to manage the information exchange and operation of a disk drive.

Open and Closed Systems

Open systems are vendor-independent systems that have published specifications and interfaces in order to permit operations with the products of other suppliers. One advantage of an open system is that it is subject to review and evaluation by independent parties. Usually, this scrutiny will reveal any errors or vulnerabilities in that product.

A closed system uses vendor-dependent proprietary hardware or software that is usually not compatible with other systems or components. Closed systems are not subject to independent examination and may have vulnerabilities that are not known or recognized.

Distributed Architecture

The migration of computing from the centralized model to the client-server model has created a new set of issues for information system security professionals. In addition, this situation has been compounded by the proliferation of desktop PCs and workstations. A PC on a user’s desktop may contain documents that are sensitive to the business of an organization and that can be compromised. In most operations, a user also functions as the systems administrator, programmer, and operator of the desktop platform. The major concerns in this scenario are as follows:

- Desktop systems can contain sensitive information that may be at risk of being exposed.

- Users may generally lack security awareness.

- A desktop PC or workstation can provide an avenue of access into the critical information systems of an organization.

- Modems that are attached to desktop machines can make the corporate network vulnerable to dial-in attacks.

- Downloading data from the Internet increases the risk of infecting corporate systems with malicious code or an unintentional modification of the databases.

- A desktop system and its associated disks may not be protected from physical intrusion or theft.

- Proper backup may be lacking.

Security mechanisms can be put into place to counter the security vulnerabilities that can exist in a distributed environment. Such mechanisms are as follows:

- E-mail and download/upload policies

- Robust access control, which includes biometrics to restrict access to desktop systems

- Graphical user interface (GUI) mechanisms to restrict access to critical information

- File encryption

- Separation of the processes that run in privileged and nonprivileged processor states

- Protection domains

- Protection of sensitive disks by locking them in nonmovable containers and by physically securing the desktop system or laptop

- Distinct labeling of disks and materials according to their classification or an organization’s sensitivity

- A centralized backup of desktop system files

- Regular security awareness training sessions

- Control of software installed on desktop systems

- Encryption and hash totals for use in sending and storing information

- Logging of transactions and transmissions

- Application of other appropriate physical, logical, and administrative access controls

- Database management systems restricting access to sensitive information

- Protection against environmental damage to computers and media

- Use of formal methods for software development and application, which includes libraries, change control, and configuration management

- Inclusion of desktop systems in disaster recovery and business continuity plans

Protection Mechanisms

In a computational system, multiple processes may be running concurrently. Each process has the capability to access certain memory locations and to execute a subset of the computer’s instruction set. The execution and memory space assigned to each process is called a protection domain. This domain can be extended to virtual memory, which increases the apparent size of real memory by using disk storage. The purpose of establishing a protection domain is to protect programs from all unauthorized modification or executional interference.

Security professionals should also know that a Trusted Computing Base (TCB) is the total combination of protection mechanisms within a computer system, which includes the hardware, software, and firmware that are trusted to enforce a security policy. The TCB components are responsible for enforcing the security policy of a computing system, and therefore these components must be protected from malicious and untrusted processes. The TCB must also provide for memory protection and ensure that the processes from one domain do not access memory locations of another domain.

The security perimeter is the boundary that separates the TCB from the remainder of the system. A trusted path must also exist so that a user can access the TCB without being compromised by other processes or users. A trusted computer system is one that employs the necessary hardware and software assurance measures to enable its use in processing multiple levels of classified or sensitive information. This system meets the specified requirements for reliability and security.

Resources can also be protected through the principle of abstraction. Abstraction involves viewing system components at a high level and ignoring or segregating their specific details. This approach enhances the capability to understand complex systems and to focus on critical, high-level issues. In object-oriented programming, for example, methods (programs) and data are encapsulated in an object that can be viewed as an abstraction. This concept is called information hiding because the object’s functioning details are hidden. Communication with this object takes place through messages to which the object responds as defined by its internal method.

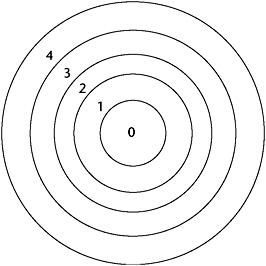

Rings

One scheme that supports multiple protection domains is the use of protection rings. These rings are organized with the most privileged domain located in the center of the ring and the least-privileged domain in the outermost ring. This approach is shown in Figure 5-6.

Figure 5-6: Protection rings.

The operating system security kernel is usually located at Ring 0 and has access rights to all domains in that system. A security kernel is defined as the hardware, firmware, and software elements of a trusted computing base that implement the reference monitor concept. A reference monitor is a system component that enforces access controls on an object. Therefore, the reference monitor concept is an abstract machine that mediates all access of subjects to objects. The security kernel must:

- Mediate all accesses

- Be protected from modification

- Be verified as correct
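
The reference monitor concept can be illustrated with a single function through which every access request must pass. This Python sketch is a toy, not a security kernel: the `ACCESS_RIGHTS` table, the subject and object names, and the `mediate` function are all hypothetical.

```python
# Hypothetical access rights: (subject, object) -> permitted operations.
ACCESS_RIGHTS = {
    ("alice", "payroll.db"): {"read"},
    ("backup_service", "payroll.db"): {"read", "write"},
}

audit_log = []


def mediate(subject, obj, operation):
    """Decide one access request and record the decision."""
    allowed = operation in ACCESS_RIGHTS.get((subject, obj), set())
    audit_log.append((subject, obj, operation, allowed))
    return allowed


can_read = mediate("alice", "payroll.db", "read")
can_write = mediate("alice", "payroll.db", "write")
```

The sketch addresses only the first requirement, mediating all accesses. Protecting the table and the function from modification, and verifying them as correct, are what the hardware, firmware, and software of a real security kernel must supply.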

In the ring concept, access rights decrease as the ring number increases. Thus, the most trusted processes reside in the center rings. System components are placed in the appropriate ring according to the principle of least privilege. Therefore, the processes have only the minimum privileges necessary to perform their functions.
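
A simplified version of the ring rule can be written as a single comparison. The sketch below assumes that a subject may access objects in its own ring or in any higher-numbered (less privileged) ring; the component names and ring assignments are hypothetical.

```python
# Hypothetical assignment of components to rings (0 is the most privileged).
RING_OF = {
    "security_kernel": 0,
    "os_services": 1,
    "device_drivers": 2,
    "user_app": 3,
}


def may_access(subject, target):
    """Allow access only to the same ring or to a less privileged one."""
    return RING_OF[subject] <= RING_OF[target]


kernel_reads_app = may_access("security_kernel", "user_app")
app_reads_kernel = may_access("user_app", "security_kernel")
```

Under this rule, the component in Ring 0 can reach every other ring, while a user application in the outermost ring can reach only its own. Real ring implementations also define controlled gates through which outer rings can request services from inner rings, which this sketch omits.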

The ring protection mechanism was implemented in MIT’s MULTICS time-shared operating system, which was enhanced for secure applications by the Honeywell Corporation. MULTICS was initially targeted for use on specific hardware platforms because some of its functions could be implemented through the hardware’s customization. It was designed to support 64 rings, but in practice only eight rings were defined.

There are also other related kernel-based approaches to protection:

- Using a separate hardware device that validates all references in a system.

- Implementing a virtual machine monitor, which establishes a number of isolated virtual machines that are running on the actual computer. The virtual machines mimic the architecture of a real machine in addition to establishing a multilevel security environment. Each virtual machine can run at a different security level.

- Using a software security kernel that operates in its own hardware protection domain.

Logical Security Guard

A logical security guard is an information security service that can be used to augment an existing multilevel security system by serving as a trusted intermediary between untrusted entities. For example, a user at a low sensitivity level may want to query a database at a higher sensitivity level. The logical security guard mechanism is trusted to handle the communication between the two entities and ensure that no information is allowed to flow from the higher sensitivity level to the lower sensitivity level.
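
One way to picture the guard is as a filter that sits between the requester and the database. The Python sketch below is an interpretation under stated assumptions, not a description of any real guard product: it assumes two levels, `low` and `high`, and a hypothetical `guard_query` function that releases only records labeled at or below the requester's level.

```python
LEVELS = {"low": 0, "high": 1}  # assumed two-level ordering


def guard_query(requester_level, records):
    """Release only the records whose level does not exceed the requester's."""
    limit = LEVELS[requester_level]
    return [data for level, data in records if LEVELS[level] <= limit]


# Hypothetical database contents: (sensitivity level, data).
database = [("low", "cafeteria menu"), ("high", "merger plan")]

released_to_low = guard_query("low", database)
released_to_high = guard_query("high", database)
```

The low-level requester receives only the low-level record, so nothing flows from the higher sensitivity level to the lower one.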

Enterprise Architecture Issues

An enterprise can be defined as a systematic and focused activity of some scope. An enterprise architecture is a structural description of an enterprise that includes its purpose, how that purpose is achieved, and the enterprise’s corresponding modes of operation. The enterprise architecture reflects the requirements of its associated applications and the needs of its users.

A number of reference models have been developed to describe enterprise architectures. The U.S. Office of Management and Budget (OMB), through its Federal Enterprise Architecture Program Management Office, published the following five federated enterprise architecture reference models:

- The Data and Information Reference Model - Addresses the different types of data and relationships associated with business line and program operations

- The Performance Reference Model - Presents general performance measures and outputs to use as metrics for evaluating business objectives and goals

- The Business Reference Model - Describes federal government business operations independent of the agencies that conduct these operations

- The Service Component Reference Model - Identifies and classifies IT service components that support federal agencies and promotes reuse of these components in the various agencies

- The Technical Reference Model - Describes standards and technology for supporting delivery of service components

Another enterprise architecture framework that is widely recognized is the “Zachman Framework for Defining and Capturing an Architecture,” developed by John Zachman. This framework comprises six perspectives for viewing an enterprise architecture and six associated models. These perspectives and their related models are summarized as follows:

- Strategic Planner (perspective) - How the entity operates (model)

- System User (perspective) - What the entity uses to operate (model)

- System Designer (perspective) - Where the entity operates (model)

- System Developer (perspective) - Who operates the entity (model)

- Subcontractor (perspective) - When entity operations occur (model)

- System Itself (perspective) - Why the entity operates (model)

Another enterprise-related activity is enterprise access management (EAM), which provides Web-based enterprise systems services. An example of these services is single sign-on (SSO), discussed in Chapter 3. One approach to enterprise SSO is for Web applications residing on different servers in the same domain to use nonpersistent, encrypted cookies on the client interface. A cookie is provided to each application the user wishes to access.

Security Labels

A security label is assigned to a resource to denote a type of classification or designation. This label can then indicate special security handling, or it can be used for access control. Once labels are assigned, they usually cannot be altered and are an effective access control mechanism. Because labels must be compared and evaluated in accordance with the security policy, they incur additional processing overhead when used.

Security Modes

An information system operates in different security modes that are determined by the information system’s classification level and the clearance of the users. A major distinction in its operation is between the system-high mode and the multilevel security mode. In the system-high mode of operation, a system operates at the highest level of information classification, where all users must have clearances for the highest level. However, not all users may have a need to know for all the data. The multilevel mode of operation supports users who have different clearances and data at multiple classification levels. Additional modes of operation are defined as follows:

- Dedicated. All users have a clearance or an authorization and a need to know for all information that is processed by an information system; a system might handle multiple classification levels.

- Compartmented. All users have a clearance for the highest level of information classification, but they do not necessarily have the authorization and a need to know for all the data handled by the computer system.

- Controlled. This is a type of multilevel security where a limited amount of trust is placed in the system’s hardware/software base along with the corresponding restrictions on the classification of the information levels that can be processed.

- Limited access. This is a type of system access in which the minimum user clearance is uncleared (the user holds no clearance) and the maximum data classification is unclassified but sensitive.

Additional Security Considerations

Vulnerabilities in the system security architecture can lead to violations of the system’s security policy. Typical vulnerabilities that are architecturally related include the following:

- Covert channel. An unintended communication path between two or more subjects sharing a common resource, which supports the transfer of information in such a manner that it violates the system’s security policy. The transfer usually takes place through common storage areas or through access to a common path that can use a timing channel for the unintended communication.

- Lack of parameter checking. The failure to check the size of input streams specified by parameters. Buffer overflow attacks exploit this vulnerability in certain operating systems and programs.

- Maintenance hook. A hardware or software mechanism that was installed to permit system maintenance and to bypass the system’s security protections. This vulnerability is sometimes referred to as a trap door or back door.

- Time of Check to Time of Use (TOC/TOU) attack. An attack that exploits the difference in the time that security controls were applied and the time the authorized service was used.
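The parameter-checking item above can be illustrated with a short Python sketch. Python is memory-safe, so the explicit length test here only models the check that a lower-level routine would have to perform before copying; the function name `copy_into_buffer` and the fixed-size `bytearray` are assumptions made for this illustration.

```python
def copy_into_buffer(buffer: bytearray, data: bytes) -> int:
    """Copy data into a fixed-size buffer, rejecting oversized input.

    The length check is the 'parameter checking' step: without it, a
    lower-level language would write past the end of the buffer.
    """
    if len(data) > len(buffer):
        raise ValueError(
            f"input of {len(data)} bytes exceeds buffer capacity of {len(buffer)}"
        )
    # Slice assignment of equal length leaves the buffer size unchanged.
    buffer[:len(data)] = data
    return len(data)


buf = bytearray(8)
copied = copy_into_buffer(buf, b"abc")
```

An input longer than the buffer raises `ValueError` instead of being copied, which is the behavior a buffer overflow attack relies on being absent.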

Recovery Procedures

Whenever a hardware or software component of a trusted system fails, it is important that the failure does not compromise the security policy requirements of that system. In addition, the recovery procedures should not provide an opportunity for violation of the system’s security policy. If a system restart is required, the system must restart in a secure state. Startup should occur in the maintenance mode, which permits access only by privileged users from privileged terminals. This mode supports the restoring of the system state and the security state.

A system is called fault-tolerant if the computer or network continues to function when one of its components fails. For fault tolerance to operate, the system must be capable of detecting that a fault has occurred, and the system must then have the capability to correct the fault or operate around it. In a fail-safe system, program execution is terminated and the system is protected from being compromised when a hardware or software failure occurs and is detected. In a system that is fail-soft or resilient, selected, noncritical processing is terminated when a hardware or software failure occurs and is detected. The computer or network then continues to function in a degraded mode. The term failover refers to switching to a duplicate “hot” backup component in real time when a hardware or software failure occurs, which enables the system to continue processing.
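As a rough illustration of failover, the following Python sketch tries a primary component and switches to a backup when a fault is detected. The function `call_with_failover` and the use of `RuntimeError` to signal a detected fault are assumptions made for this example; real failover is normally implemented in hardware, clustering software, or the network layer.

```python
from typing import Callable, Optional, Sequence


def call_with_failover(components: Sequence[Callable[[], str]]) -> str:
    """Try each component in order, switching to the next when a fault is detected."""
    last_error: Optional[Exception] = None
    for component in components:
        try:
            return component()
        except RuntimeError as err:  # fault detected; fail over to the next component
            last_error = err
    # No component left: stop processing rather than continue in an unknown state.
    raise RuntimeError("all components failed") from last_error


def primary() -> str:
    raise RuntimeError("disk fault")  # simulated hardware failure


def backup() -> str:
    return "served by backup"


result = call_with_failover([primary, backup])
```

When every component fails, the function raises instead of returning a partial result, which loosely corresponds to the fail-safe behavior described above.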

A cold start occurs in a system when there is a TCB or media failure and the recovery procedures cannot return the system to a known, reliable, secure state. In this case, the TCB and portions of the software and data may be inconsistent and require external intervention. At that time, the maintenance mode of the system usually has to be employed.

Assurance

Assurance is simply defined as the degree of confidence in the satisfaction of security needs. The following sections summarize guidelines and standards that have been developed to evaluate and accept the assurance aspects of a system.

Evaluation Criteria

In 1985, the Trusted Computer System Evaluation Criteria (TCSEC) was developed by the National Computer Security Center (NCSC) to provide guidelines for evaluating vendors’ products for the specified security criteria. TCSEC provides the following:

- A basis for establishing security requirements in the acquisition specifications

- A standard of the security services that should be provided by vendors for the different classes of security requirements

- A means to measure the trustworthiness of an information system

The TCSEC document, called the Orange Book because of its cover color, is part of a series of guidelines with covers of different colors called the Rainbow Series. In the Orange Book, the basic control objectives are security policy, assurance, and accountability. TCSEC addresses confidentiality but does not cover integrity. Also, functionality (security controls applied) and assurance (confidence that security controls are functioning as expected) are not separated in TCSEC as they are in other evaluation criteria developed later. The Orange Book defines the major hierarchical classes of security with the letters D through A as follows:

- D - Minimal protection

- C - Discretionary protection (C1 and C2)

- B - Mandatory protection (B1, B2, and B3)

- A - Verified protection; formal methods (A1)

The DoD Trusted Network Interpretation (TNI) is analogous to the Orange Book. It addresses confidentiality and integrity in trusted computer/communications network systems and is called the Red Book. The TNI incorporates integrity labels, cryptography, authentication, and non-repudiation for network protection.

The Trusted Database Management System Interpretation (TDI) addresses the trusted database management systems.

The European Information Technology Security Evaluation Criteria (ITSEC) addresses C.I.A. issues. It was endorsed by the Council of the European Union in 1995 to provide a comprehensive and flexible evaluation approach. The product or system to be evaluated by ITSEC is defined as the Target of Evaluation (TOE). The TOE must have a security target, which includes the security enforcing mechanisms and the system’s security policy.

ITSEC separately evaluates functionality and assurance, and it includes 10 functionality classes (F), eight assurance levels (Q), seven levels of correctness (E), and eight basic security functions in its criteria. It also defines two kinds of assurance. One assurance measure is the correctness of the security functions’ implementation, and the other is the effectiveness of the TOE while in operation.

The ITSEC ratings are in the form F-X,E, where functionality and assurance are listed. The ITSEC ratings that are equivalent to TCSEC ratings are as follows:

- F-C1, E1 = C1

- F-C2, E2 = C2

- F-B1, E3 = B1

- F-B2, E4 = B2

- F-B3, E5 = B3

- F-B3, E6 = A1

The other classes of the ITSEC address high integrity and high availability.

TCSEC, ITSEC, and the Canadian Trusted Computer Product Evaluation Criteria (CTCPEC) have evolved into one set of evaluation criteria called the Common Criteria. The Common Criteria define a Protection Profile (PP), which is an implementation-independent specification of the security requirements and protections of a product that could be built. Policy requirements of the Common Criteria include availability, information security management, access control, and accountability. The Common Criteria terminology for the degree of examination of the product to be tested is the Evaluation Assurance Level (EAL). EALs range from EAL1 (functional testing) to EAL7 (detailed testing and formal design verification). The Common Criteria TOE refers to the product to be tested. A Security Target (ST) is a listing of the security claims for a particular IT security product. Also, the Common Criteria describe an intermediate grouping of security requirement components as a package. The term functionality in the Common Criteria refers to standard and well-understood functional security requirements for IT systems. These functional requirements are organized around TCB entities that include physical and logical controls, startup and recovery, reference mediation, and privileged states.

Because of the large amount of effort required to implement the government-sponsored evaluation criteria, commercial organizations have developed their own approach, called the Commercial Oriented Functionality Class (COFC). The COFC defines a minimal baseline security functionality that is more representative of commercial requirements than government requirements. Some typical COFC ratings are:

- CS1, which corresponds to C2 in TCSEC

- CS2, which requires audit mechanisms, access control lists, and separation of system administrative functions

- CS3, which specifies strong authentication and assurance, role-based access control, and mandatory access control

Certification and Accreditation

In many environments, formal methods must be applied to ensure that the appropriate information system security safeguards are in place and that they are functioning per the specifications. In addition, an authority must take responsibility for putting the system into operation. These actions are known as certification and accreditation (C&A).

Formally, the definitions are as follows:

- Certification. The comprehensive evaluation of the technical and nontechnical security features of an information system and the other safeguards, made in support of the accreditation process, to establish the extent to which a particular design and implementation meets the set of specified security requirements

- Accreditation. A formal declaration by a Designated Approving Authority (DAA) by which an information system is approved to operate in a particular security mode by using a prescribed set of safeguards at an acceptable level of risk

The certification and accreditation of a system must be checked after a defined period of time or when changes occur in the system or its environment. Then, recertification and reaccreditation are required.

A detailed discussion of certification and accreditation is given in Chapter 11.

DITSCAP and NIACAP

Two U.S. defense and government certification and accreditation standards have been developed for the evaluation of critical information systems. These standards are the Defense Information Technology Security Certification and Accreditation Process (DITSCAP) and the National Information Assurance Certification and Accreditation Process (NIACAP).

DITSCAP

The DITSCAP establishes a standard process, a set of activities, general task descriptions, and a management structure to certify and accredit the IT systems that will maintain the required security posture. This process is designed to certify that the IT system meets the accreditation requirements and that the system will maintain the accredited security posture throughout its life cycle. These are the four phases of the DITSCAP:

- Phase 1, Definition. Phase 1 focuses on understanding the mission, the environment, and the architecture in order to determine the security requirements and level of effort necessary to achieve accreditation.

- Phase 2, Verification. Phase 2 verifies the evolving or modified system’s compliance with the information agreed on in the System Security Authorization Agreement (SSAA). The objective is to use the SSAA to establish an evolving yet binding agreement on the level of security required before system development begins or changes to a system are made. After accreditation, the SSAA becomes the baseline security configuration document.

- Phase 3, Validation. Phase 3 validates the compliance of a fully integrated system with the information stated in the SSAA.

- Phase 4, Post Accreditation. Phase 4 includes the activities that are necessary for the continuing operation of an accredited IT system in its computing environment and for addressing the changing threats that a system faces throughout its life cycle.

NIACAP

The NIACAP establishes the minimum national standards for certifying and accrediting national security systems. This process provides a standard set of activities, general tasks, and a management structure to certify and accredit systems that maintain the information assurance and the security posture of a system or site. The NIACAP is designed to certify that the information system meets the documented accreditation requirements and will continue to maintain the accredited security posture throughout the system’s life cycle.

There are three types of NIACAP accreditation:

- Site accreditation. Evaluates the applications and systems at a specific, self-contained location.

- Type accreditation. Evaluates an application or system that is distributed to a number of different locations.

- System accreditation. Evaluates a major application or general support system.

The NIACAP is composed of four phases: Definition, Verification, Validation, and Post Accreditation. These are essentially identical to those of the DITSCAP.

The DITSCAP and NIACAP are presented in greater detail in Chapter 11.

DIACAP

Because of the extensive effort and time required to implement the DITSCAP, the U.S. DoD has developed a replacement C&A process, DIACAP.

DIACAP, the Defense Information Assurance Certification and Accreditation Process, is a completely new process for use by the DoD and applies to the “acquisition, operation and sustainment of all DoD-owned or controlled information systems that receive, process, store, display or transmit DoD information, regardless of classification or sensitivity of the information or information system. This includes Enclaves, AIS Applications (e.g., Core Enterprise Services), Outsourced Information Technology (IT)-Based Processes, and Platform IT Interconnections.”

DIACAP is covered in detail in Chapter 11.

The Systems Security Engineering Capability Maturity Model (SSE-CMM)

The Systems Security Engineering Capability Maturity Model (SSE-CMM®) is based on the premise that if one can guarantee the quality of the processes that are used by an organization, then one can guarantee the quality of the products and services generated by those processes. The model was developed by a consortium of government and industry experts and is now under the auspices of the International Systems Security Engineering Association (ISSEA) at www.issea.org. The SSE-CMM has the following salient points:

- It describes those characteristics of security engineering processes essential to ensure good security engineering.

- It captures industry’s best practices.

- It provides an accepted way of defining practices and improving capability.

- It provides measures of growth in capability of applying processes.

The SSE-CMM addresses the following areas of security:

- Operations Security

- Information Security

- Network Security

- Physical Security

- Personnel Security

- Administrative Security

- Communications Security

- Emanations Security

- Computer Security

The SSE-CMM methodology and metrics provide a reference for comparing existing systems’ security engineering best practices against the essential systems security engineering elements described in the model. It defines two dimensions that are used to measure the capability of an organization to perform specific activities. These dimensions are domain and capability. The domain dimension consists of all the practices that collectively define security engineering. These practices are called Base Practices (BPs). Related BPs are grouped into Process Areas (PAs). The capability dimension represents practices that indicate process management and institutionalization capability. These practices are called Generic Practices (GPs) because they apply across a wide range of domains. The GPs represent activities that should be performed as part of performing BPs.

For the domain dimension, the SSE-CMM specifies 11 security engineering PAs and 11 organizational and project-related PAs, each consisting of BPs. BPs are mandatory characteristics that must exist within an implemented security engineering process before an organization can claim satisfaction in a given PA. The 22 PAs and their corresponding BPs incorporate the best practices of systems security engineering. The PAs are listed in the following subsections.

Security Engineering

- PA01 - Administer Security Controls

- PA02 - Assess Impact

- PA03 - Assess Security Risk

- PA04 - Assess Threat

- PA05 - Assess Vulnerability

- PA06 - Build Assurance Argument

- PA07 - Coordinate Security

- PA08 - Monitor Security Posture

- PA09 - Provide Security Input

- PA10 - Specify Security Needs

- PA11 - Verify and Validate Security

Project and Organizational Practices

- PA12 - Ensure Quality

- PA13 - Manage Configuration

- PA14 - Manage Project Risk

- PA15 - Monitor and Control Technical Effort

- PA16 - Plan Technical Effort

- PA17 - Define Organization’s Systems Engineering Process

- PA18 - Improve Organization’s Systems Engineering Process

- PA19 - Manage Product Line Evolution

- PA20 - Manage Systems Engineering Support Environment

- PA21 - Provide Ongoing Skills and Knowledge

- PA22 - Coordinate with Suppliers

The GPs are ordered in degrees of maturity and are grouped to form and distinguish among five levels of security engineering maturity. The attributes of these five levels are as follows:

- Level 1

  - 1.1 BPs Are Performed

- Level 2

  - 2.1 Planning Performance

  - 2.2 Disciplined Performance

  - 2.3 Verifying Performance

  - 2.4 Tracking Performance

- Level 3

  - 3.1 Defining a Standard Process

  - 3.2 Perform the Defined Process

  - 3.3 Coordinate the Process

- Level 4

  - 4.1 Establishing Measurable Quality Goals

  - 4.2 Objectively Managing Performance

- Level 5

  - 5.1 Improving Organizational Capability

  - 5.2 Improving Process Effectiveness

The corresponding descriptions of the five levels are given as follows:[*]

- Level 1, “Performed Informally,” focuses on whether an organization or project performs a process that incorporates the BPs. A statement characterizing this level would be, “You have to do it before you can manage it.”

- Level 2, “Planned and Tracked,” focuses on project-level definition, planning, and performance issues. A statement characterizing this level would be, “Understand what’s happening on the project before defining organization-wide processes.”

- Level 3, “Well Defined,” focuses on disciplined tailoring from defined processes at the organization level. A statement characterizing this level would be, “Use the best of what you’ve learned from your projects to create organization-wide processes.”

- Level 4, “Quantitatively Controlled,” focuses on measurements being tied to the business goals of the organization. Although it is essential to begin collecting and using basic project measures early, measurement and use of data are not expected organization-wide until the higher levels have been achieved. Statements characterizing this level would be, “You can’t measure it until you know what ‘it’ is” and “Managing with measurement is meaningful only when you’re measuring the right things.”

- Level 5, “Continuously Improving,” gains leverage from all the management practice improvements seen in the earlier levels and then emphasizes the cultural shifts that will sustain the gains made. A statement characterizing this level would be, “A culture of continuous improvement requires a foundation of sound management practice, defined processes, and measurable goals.”

[*]Source: The Systems Security Engineering Capability Maturity Model v2.0 (SSE-CMM Project, 1999).

Information Security Models

Models are used in information security to formalize security policies. These models may be abstract or intuitive and will provide a framework for the understanding of fundamental concepts. In this section, three types of models are described: access control models, integrity models, and information flow models.

Access Control Models

Access control philosophies can be organized into models that define the major and different approaches to this issue. These models are the access matrix, the Take-Grant model, the Bell-LaPadula confidentiality model, and the state machine model.

The Access Matrix

The access matrix is a straightforward approach that provides access rights to subjects for objects. Access rights are of the type read, write, and execute. A subject is an active entity that is seeking rights to a resource or object. A subject can be a person, a program, or a process. An object is a passive entity, such as a file or a storage resource. In some cases, an item can be a subject in one context and an object in another. A typical access control matrix is shown in Figure 5-7.

| Subject / Object | File Income | File Salaries | Process Deductions | Print Server A |
|---|---|---|---|---|
| Joe | Read | Read/Write | Execute | Write |
| Jane | Read/Write | Read | None | Write |
| Process Check | Read | Read | Execute | None |
| Program Tax | Read/Write | Read/Write | Call | Write |

Figure 5-7: Example of an access matrix.

The columns of the access matrix are called Access Control Lists (ACLs), and the rows are called capability lists. The access matrix model supports discretionary access control because the entries in the matrix are at the discretion of the individual(s) who have the authorization authority over the table. In the access control matrix, a subject’s capability can be defined by the triple (object, rights, and random number). Thus, the triple defines the rights that a subject has to an object along with a random number used to prevent a replay or spoofing of the triple’s source. This triple is similar to the Kerberos tickets previously discussed in Chapter 2.
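The relationship between the matrix, its ACLs, and its capability lists can be sketched in Python. The entries below are taken from Figure 5-7; the nested-dictionary representation and the helper names `capability_list`, `acl`, and `is_allowed` are assumptions made for this illustration, not part of any particular system.

```python
# Rows are subjects, columns are objects; each cell is a set of rights.
ACCESS_MATRIX = {
    "Joe": {
        "File Income": {"Read"},
        "File Salaries": {"Read", "Write"},
        "Process Deductions": {"Execute"},
        "Print Server A": {"Write"},
    },
    "Jane": {
        "File Income": {"Read", "Write"},
        "File Salaries": {"Read"},
        "Process Deductions": set(),  # "None" in the figure
        "Print Server A": {"Write"},
    },
    "Process Check": {
        "File Income": {"Read"},
        "File Salaries": {"Read"},
        "Process Deductions": {"Execute"},
        "Print Server A": set(),
    },
    "Program Tax": {
        "File Income": {"Read", "Write"},
        "File Salaries": {"Read", "Write"},
        "Process Deductions": {"Call"},
        "Print Server A": {"Write"},
    },
}


def capability_list(subject: str) -> dict:
    """A row of the matrix: everything one subject may do."""
    return {obj: set(rights) for obj, rights in ACCESS_MATRIX[subject].items()}


def acl(obj: str) -> dict:
    """A column of the matrix: every subject's rights to one object."""
    return {subject: set(row[obj]) for subject, row in ACCESS_MATRIX.items()}


def is_allowed(subject: str, obj: str, right: str) -> bool:
    """Look up a single cell and test for one right."""
    return right in ACCESS_MATRIX[subject][obj]
```

For example, `acl("File Salaries")` collects the File Salaries column across all four subjects, while `capability_list("Jane")` returns Jane's row.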

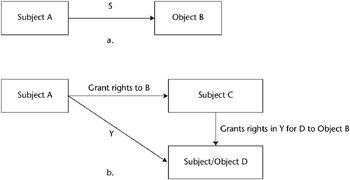

Take-Grant Model

The Take-Grant model uses a directed graph to specify the rights that a subject can transfer to an object or that a subject can take from another subject. For example, assume that Subject A has a set of rights (S) that includes Grant rights to Object B. This capability is represented in Figure 5-8a. Then, assume that Subject A can transfer Grant rights for Object B to Subject C and that Subject A has another set of rights, (Y), to Object D. In some cases, Object D acts as an object, and in other cases it acts as a subject. Then, as shown by the diagonal arrow in Figure 5-8b, Subject C can grant a subset of the Y rights to Subject/Object D because Subject A passed the Grant rights to Subject C.

Figure 5-8: Take-Grant model illustration.

The Take capability operates in the same fashion as the Grant capability illustrated here.
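The following Python sketch models a protection graph with the grant and take rules as they are commonly stated for the Take-Grant model: a node holding the grant right over a second node may pass on rights it holds to a third node, and a node holding the take right over a second node may acquire rights that the second node holds. The class `ProtectionGraph`, its method names, and the node names are assumptions made for this illustration, and the example only loosely mirrors Figure 5-8.

```python
from collections import defaultdict


class ProtectionGraph:
    """Directed graph whose edges are labeled with sets of rights."""

    def __init__(self) -> None:
        self.edges = defaultdict(set)  # (source, target) -> set of rights

    def add_rights(self, source: str, target: str, rights) -> None:
        self.edges[(source, target)] |= set(rights)

    def rights(self, source: str, target: str) -> set:
        return set(self.edges.get((source, target), set()))

    def grant(self, grantor: str, recipient: str, target: str, rights) -> None:
        """Grantor gives recipient a subset of the grantor's rights to target."""
        rights = set(rights)
        if "grant" not in self.rights(grantor, recipient):
            raise PermissionError(f"{grantor} holds no grant right over {recipient}")
        if not rights <= self.rights(grantor, target):
            raise PermissionError(f"{grantor} does not hold {rights} over {target}")
        self.add_rights(recipient, target, rights)

    def take(self, taker: str, holder: str, target: str, rights) -> None:
        """Taker acquires a subset of the holder's rights to target."""
        rights = set(rights)
        if "take" not in self.rights(taker, holder):
            raise PermissionError(f"{taker} holds no take right over {holder}")
        if not rights <= self.rights(holder, target):
            raise PermissionError(f"{holder} does not hold {rights} over {target}")
        self.add_rights(taker, target, rights)


graph = ProtectionGraph()
graph.add_rights("A", "C", {"grant"})
graph.add_rights("A", "D", {"read", "write"})
graph.grant("A", "C", "D", {"read"})  # C now holds read over D
```

Both rules only ever copy a subset of rights that some node already holds, which is why the model is useful for reasoning about how far a right can spread through a system.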

Bell-LaPadula Model

The Bell-LaPadula Model was developed to formalize the U.S. Department of Defense (DoD) multilevel security policy. The DoD labels materials at different levels of security classification. As previously discussed, these levels are Unclassified, Confidential, Secret, and Top Secret - ordered from least sensitive to most sensitive. An individual who receives a clearance of Confidential, Secret, or Top Secret can access materials at that level of classification or below. An additional stipulation, however, is that the individual must have a need to know for that material. Thus, an individual cleared for Secret can access only the Secret-labeled documents that are necessary for that individual to perform an assigned job function. The Bell-LaPadula model deals only with the confidentiality of classified material. It does not address integrity or availability.

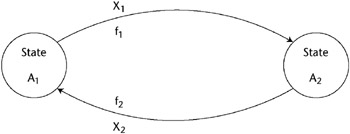

The Bell-LaPadula model is built on the state machine concept. This concept defines a set of allowable states (Ai) in a system. The transition from one state to another upon receipt of input(s) (Xj) is defined by transition functions (fk). The objective of this model is to ensure that the initial state is secure and that the transitions always result in a secure state. The transitions between two states are illustrated in Figure 5-9.

Figure 5-9: State transitions defined by the function f with an input X.

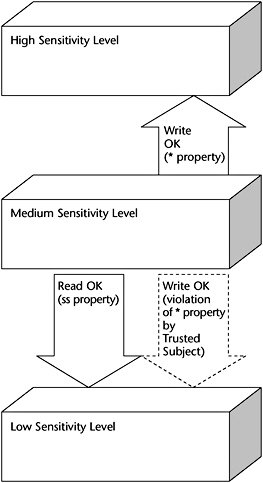

The Bell-LaPadula model defines a secure state through three multilevel properties. The first two properties implement mandatory access control, and the third one permits discretionary access control. These properties are defined as follows:

- The Simple Security Property (ss Property). States that reading of information by a subject at a lower sensitivity level from an object at a higher sensitivity level is not permitted (no read up). In formal terms, this property states that a subject can read an object only if the access class of the subject dominates the access class of the object. Thus, a subject can read an object only if the subject is at the same or a higher sensitivity level than the object.

- The * (star) Security Property. States that writing of information by a subject at a higher level of sensitivity to an object at a lower level of sensitivity is not permitted (no write-down). Formally stated, under * property constraints, a subject can write to an object only if the access class of the object dominates the access class of the subject. In other words, a subject can write only to an object at the same or a higher sensitivity level.

- The Discretionary Security Property. Uses an access matrix to specify discretionary access control.
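The two mandatory properties can be expressed as simple comparisons. The Python sketch below uses the four DoD classification levels named earlier and treats "dominates" as "greater than or equal to"; it deliberately ignores categories and need to know, and the names `Level`, `blp_can_read`, and `blp_can_write` are assumptions made for this illustration.

```python
from enum import IntEnum


class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def blp_can_read(subject: Level, obj: Level) -> bool:
    """Simple Security Property: the subject must dominate the object (no read up)."""
    return subject >= obj


def blp_can_write(subject: Level, obj: Level) -> bool:
    """* Property: the object must dominate the subject (no write down)."""
    return obj >= subject


# A Secret subject may read Confidential material but may not write to it.
secret_reads_confidential = blp_can_read(Level.SECRET, Level.CONFIDENTIAL)
secret_writes_confidential = blp_can_write(Level.SECRET, Level.CONFIDENTIAL)
```

In a fuller model, an access would also have to be permitted by the discretionary access matrix before it is granted.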

There are instances where the * (star) property is too restrictive and it interferes with required document changes. For instance, it might be desirable to move a low-sensitivity paragraph in a higher-sensitivity document to a lower-sensitivity document. The Bell-LaPadula model permits this transfer of information through a Trusted Subject. A Trusted Subject can violate the * property, yet it cannot violate its intent. These concepts are illustrated in Figure 5-10.

Figure 5-10: The Bell-LaPadula Simple Security and * properties.

In some instances, a property called the Strong * Property is cited. This property states that reading or writing is permitted at a particular level of sensitivity but not to either higher or lower levels of sensitivity.

This model defines requests (R) to the system. A request is made while the system is in the state v1; a decision (d) is made upon the request, and the system changes to the state v2. (R, d, v1, v2) represents this tuple in the model. Again, the intent of this model is to ensure that there is a transition from one secure state to another secure state.
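A minimal Python sketch of this request-and-decision cycle follows. A state is modeled as the set of accesses currently in effect, and a request is added to the state only if it satisfies the no-read-up and no-write-down rules. The subjects, objects, numeric levels, and function names are all hypothetical and chosen only for this illustration.

```python
# Hypothetical sensitivity levels (a higher number is more sensitive).
SUBJECT_LEVEL = {"alice": 2, "bob": 1}
OBJECT_LEVEL = {"plan": 2, "memo": 1}


def access_is_secure(subject: str, obj: str, mode: str) -> bool:
    """Check one access against the no-read-up and no-write-down rules."""
    s, o = SUBJECT_LEVEL[subject], OBJECT_LEVEL[obj]
    if mode == "read":
        return s >= o
    if mode == "write":
        return o >= s
    raise ValueError(f"unknown access mode: {mode}")


def is_secure(state: frozenset) -> bool:
    """A state is secure if every access it contains is secure."""
    return all(access_is_secure(*access) for access in state)


def transition(request: tuple, v1: frozenset) -> tuple:
    """Decide a request made in state v1 and return the tuple (R, d, v1, v2)."""
    d = "yes" if access_is_secure(*request) else "no"
    v2 = v1 | {request} if d == "yes" else v1
    return (request, d, v1, v2)


v0 = frozenset()  # the empty initial state is trivially secure
_, decision, _, v_next = transition(("bob", "plan", "write"), v0)
```

Because a denied request leaves the state unchanged and a granted request adds only a secure access, every state reachable from a secure initial state is itself secure.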

The discretionary portion of the Bell-LaPadula model is based on the access matrix. The system security policy defines who is authorized to have certain privileges to the system resources. Authorization is concerned with how access rights are defined and how they are evaluated. Some discretionary approaches are based on context-dependent and content-dependent access control. Content-dependent control makes access decisions based on the data contained in the object, whereas context-dependent control uses subject or object attributes or environmental characteristics to make these decisions. Examples of such characteristics include a job role, earlier accesses, and file creation dates and times.
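The difference between the two approaches can be sketched in Python. In the first function the decision depends on the data inside the object; in the second it depends on attributes of the subject and the environment. The salary threshold, the role name, and the business-hours window are hypothetical values chosen only for this illustration.

```python
from datetime import time


def content_dependent_allow(record: dict, salary_limit: int) -> bool:
    """Content-dependent: the decision depends on the data held in the object."""
    return record["salary"] <= salary_limit


def context_dependent_allow(role: str, request_time: time) -> bool:
    """Context-dependent: the decision depends on subject and environment attributes."""
    in_business_hours = time(9, 0) <= request_time <= time(17, 0)
    return role == "payroll_clerk" and in_business_hours


# The same record may be visible or hidden depending on its content ...
visible = content_dependent_allow({"name": "J. Doe", "salary": 48000}, 50000)
# ... while the same request may succeed or fail depending on its context.
after_hours = context_dependent_allow("payroll_clerk", time(22, 30))
```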

As with any model, the Bell-LaPadula model has some weaknesses. These are the major ones:

- The model considers normal channels of the information exchange and does not address covert channels.

- The model does not deal with modern systems that use file sharing and servers.

- The model does not explicitly define what it means by a secure state transition.

- The model is based on a multilevel security policy and does not address other policy types that may be used by an organization.

Integrity Models

In many organizations, both governmental and commercial, integrity of the data is as important as, or more important than, confidentiality for certain applications. Thus, formal integrity models evolved. Initially, the integrity model was developed as an analog to the Bell-LaPadula confidentiality model and then became more sophisticated to address additional integrity requirements.

The Biba Integrity Model

Integrity is usually characterized by the three following goals:

- The data is protected from modification by unauthorized users.

- The data is protected from unauthorized modification by authorized users.

- The data is internally and externally consistent; the data held in a database must balance internally and correspond to the external, real-world situation.

To address the first integrity goal, the Biba model was developed in 1977 as an integrity analog to the Bell-LaPadula confidentiality model. The Biba model is lattice-based and uses the less-than or equal-to relation. A lattice structure is defined as a partially ordered set with a least upper bound (LUB) and a greatest lower bound (GLB). The lattice represents a set of integrity classes (ICs) and an ordered relationship among those classes. A lattice can be represented as (IC, ≤, LUB, GLB).
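To make the lattice idea concrete, the Python sketch below models an integrity class as a numeric level paired with a set of categories, a common construction that is assumed here rather than taken from the text. Under that assumption, the ordering is componentwise, the least upper bound takes the higher level and the union of the categories, and the greatest lower bound takes the lower level and the intersection.

```python
from typing import FrozenSet, NamedTuple


class IntegrityClass(NamedTuple):
    level: int
    categories: FrozenSet[str]


def leq(a: IntegrityClass, b: IntegrityClass) -> bool:
    """Partial order: a <= b if b has at least a's level and all of a's categories."""
    return a.level <= b.level and a.categories <= b.categories


def lub(a: IntegrityClass, b: IntegrityClass) -> IntegrityClass:
    """Least upper bound: the smallest class that both a and b are <= to."""
    return IntegrityClass(max(a.level, b.level), a.categories | b.categories)


def glb(a: IntegrityClass, b: IntegrityClass) -> IntegrityClass:
    """Greatest lower bound: the largest class that is <= both a and b."""
    return IntegrityClass(min(a.level, b.level), a.categories & b.categories)


a = IntegrityClass(1, frozenset({"payroll"}))
b = IntegrityClass(2, frozenset({"audit"}))
upper = lub(a, b)  # level 2 with categories {payroll, audit}
lower = glb(a, b)  # level 1 with no categories
```

Here `a` and `b` are not comparable with each other, which is what makes the ordering partial, yet they still have a unique least upper bound and greatest lower bound.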

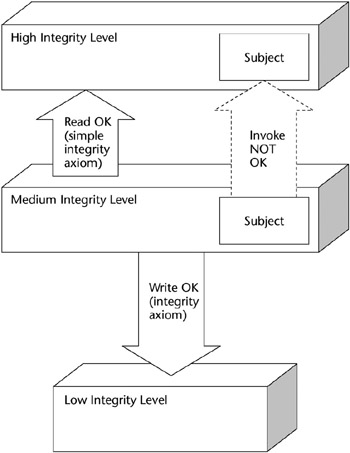

Similar to the Bell-LaPadula model’s classification of different sensitivity levels, the Biba model classifies objects into different levels of integrity. The model specifies the following three integrity axioms:

- The Simple Integrity Axiom. States that a subject at one level of integrity is not permitted to observe (read) an object of a lower integrity (no read-down). Formally, a subject can read an object only if the integrity access class of the object dominates the integrity class of the subject.

- The * (star) Integrity Axiom. States that a subject at one level of integrity is not permitted to modify (write to) an object of a higher level of integrity (no write-up). In formal terms, a subject can write to an object only if the integrity access class of the subject dominates the integrity class of the object.

- Invocation Property. Prohibits a subject at one level of integrity from invoking a subject at a higher level of integrity. This property prevents a subject at one level of integrity from invoking a utility such as a piece of software that is at a higher level of integrity. If this invocation were possible, the software at the higher level of integrity could be used to access data at that higher level.

These axioms and their relationships are illustrated in Figure 5-11.

Figure 5-11: The Biba model axioms.
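The three axioms reduce to simple comparisons once integrity levels are totally ordered. The sketch below assumes three hypothetical levels (`LOW`, `MEDIUM`, `HIGH`) and ignores categories; it illustrates the rules as stated above and is not a complete access control implementation.

```python
from enum import IntEnum


class Integrity(IntEnum):
    # Hypothetical levels; a larger value means higher integrity.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def can_read(subject: Integrity, obj: Integrity) -> bool:
    """Simple Integrity Axiom: no read-down."""
    return obj >= subject


def can_write(subject: Integrity, obj: Integrity) -> bool:
    """* (star) Integrity Axiom: no write-up."""
    return subject >= obj


def can_invoke(caller: Integrity, callee: Integrity) -> bool:
    """Invocation Property: no invoking a subject of higher integrity."""
    return caller >= callee
```

For example, a `MEDIUM` subject may read a `HIGH` object and write to a `LOW` object, but it may not read the `LOW` object or write to the `HIGH` one.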

The Clark-Wilson Integrity Model

The approach of the Clark-Wilson model (1987) was to develop a framework for use in the real-world, commercial environment. This model addresses the three integrity goals and defines the following terms:

- Constrained data item (CDI). A data item whose integrity is to be preserved

- Integrity verification procedure (IVP). The procedure that confirms that all CDIs are in valid states of integrity

- Transformation procedure (TP). A procedure that manipulates the CDIs through a well-formed transaction, which transforms a CDI from one valid integrity state to another valid integrity state

- Unconstrained data item. Data items outside the control area of the modeled environment, such as input information

The Clark-Wilson model requires integrity labels to determine the integrity level of a data item and to verify that this integrity was maintained after an application of a TP. This model incorporates mechanisms to enforce internal and external consistency, a separation of duty, and a mandatory integrity policy.
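The terms defined above can be illustrated with a toy ledger. In this sketch the account balances are the CDIs, `transfer` is a TP, `ivp` is the IVP, and the requested amount is an unconstrained data item that the TP validates before use. The `Ledger` class, its method names, and the user names are hypothetical, and the authorization set is only a rough stand-in for separation of duty.

```python
class Ledger:
    def __init__(self, balances):
        self._balances = dict(balances)           # CDIs
        self._expected_total = sum(balances.values())
        self._allowed = set()                     # (user, TP name) pairs

    def authorize(self, user, tp_name):
        """Record that a user may run a given TP."""
        self._allowed.add((user, tp_name))

    def balance(self, account):
        return self._balances[account]

    def ivp(self):
        """IVP: confirm that every CDI is in a valid state."""
        no_overdraft = all(v >= 0 for v in self._balances.values())
        return no_overdraft and sum(self._balances.values()) == self._expected_total

    def transfer(self, user, src, dst, amount):
        """TP: move the CDIs from one valid state to another."""
        if (user, "transfer") not in self._allowed:
            raise PermissionError(f"{user} may not run transfer")
        # The amount arrives as an unconstrained data item; validate it first.
        if not isinstance(amount, int) or amount <= 0:
            raise ValueError("amount must be a positive integer")
        if src not in self._balances or dst not in self._balances:
            raise ValueError("unknown account")
        if self._balances[src] < amount:
            raise ValueError("insufficient funds")
        self._balances[src] -= amount
        self._balances[dst] += amount
        if not self.ivp():
            raise RuntimeError("TP left the CDIs in an invalid state")
```

Users never touch the balances directly; every change goes through a TP that they have been authorized to run, which is the well-formed transaction idea in miniature.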

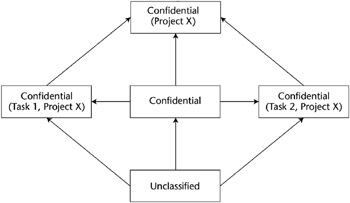

Information Flow Models

An information flow model is based on a state machine, and it consists of objects, state transitions, and lattice (flow policy) states. In this context, objects can also represent users. Each object is assigned a security class and value, and information is constrained to flow in the directions that are permitted by the security policy. An example is shown in Figure 5-12.

Figure 5-12: An information flow model.

In Figure 5-12, information flows from Unclassified to Confidential within the tasks of Project X and then to the combined tasks of Project X. This information can flow in only one direction.
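A flow policy of this kind can be sketched as a dominance check between security classes. The class encoding below (a numeric level plus a set of task names) and the names `task1` and `task2` are assumptions made for illustration; they are not taken from the figure.

```python
from typing import FrozenSet, Tuple

# A security class is a (level, tasks) pair; 0 = Unclassified, 1 = Confidential.
SecurityClass = Tuple[int, FrozenSet[str]]

UNCLASSIFIED: SecurityClass = (0, frozenset())
CONF_TASK1: SecurityClass = (1, frozenset({"task1"}))
CONF_TASK2: SecurityClass = (1, frozenset({"task2"}))
CONF_COMBINED: SecurityClass = (1, frozenset({"task1", "task2"}))


def can_flow(src: SecurityClass, dst: SecurityClass) -> bool:
    """Information may flow from src to dst only if dst dominates src."""
    return src[0] <= dst[0] and src[1] <= dst[1]
```

Under this policy information can move from `UNCLASSIFIED` to either task and from a single task to `CONF_COMBINED`, but never back down and never sideways between the two tasks.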

Non-Interference Model

This model is related to the information flow model, with restrictions on the information flow. The basic principle of this model is that a group of users (A) who are using the commands (C) do not interfere with the user group (B) who are using commands (D). This concept is written as A, C:| B, D. Restated, the actions of Group A using commands C are not seen by the users in Group B using commands D.
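One common way to test this idea is to remove (purge) Group A's commands from a command trace and confirm that Group B observes exactly the same outputs. The toy machine below is a sketch under that assumption: the command names and the `leaky` flag are hypothetical, and the check covers a single trace, whereas the property itself must hold for every possible trace.

```python
def run(trace, leaky=False):
    """Execute a trace of (user, command) pairs and return what B observes."""
    high = low = 0
    b_view = []
    for user, cmd in trace:
        if user == "A" and cmd == "inc_high":
            high += 1
            if leaky:
                low += 1  # A's action spills into state that B can read
        elif user == "B" and cmd == "inc_low":
            low += 1
        elif user == "B" and cmd == "read_low":
            b_view.append(low)
    return b_view


def purge(trace, user):
    """Drop every command issued by the given user."""
    return [(u, c) for u, c in trace if u != user]


def noninterfering(trace, leaky=False):
    """True if B's view of this trace is unchanged when A's commands are purged."""
    return run(trace, leaky) == run(purge(trace, "A"), leaky)


trace = [("A", "inc_high"), ("B", "inc_low"), ("B", "read_low"),
         ("A", "inc_high"), ("B", "read_low")]
```

With the default machine, `noninterfering(trace)` holds because A only changes state that B never reads. With `leaky=True` it fails, since B's reads now reveal how many commands A has issued.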

Chinese Wall Model

The Chinese Wall model, developed by Brewer and Nash, is designed to prevent information flow that might result in a conflict of interest in an organization representing competing clients. For example, in a consulting organization, analyst Joe may be performing work for the Ajax corporation involving sensitive Ajax data while analyst Bill is consulting for a competitor, the Beta corporation. The principle of the Chinese Wall is to prevent compromise of the sensitive data of either or both firms because of weak access controls in the consulting organization.
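A minimal sketch of this rule keeps an access history per analyst and refuses any request for a company that competes with one the analyst has already worked on. The `ChineseWall` class, the conflict-of-interest class names, and the `Gamma` company are hypothetical; Joe, Bill, Ajax, and Beta come from the example above.

```python
from typing import Dict, Set


class ChineseWall:
    def __init__(self, conflict_classes: Dict[str, Set[str]]):
        # Map each company to the conflict-of-interest class it belongs to.
        self._class_of = {
            company: cls
            for cls, companies in conflict_classes.items()
            for company in companies
        }
        self._history: Dict[str, Set[str]] = {}

    def request_access(self, analyst: str, company: str) -> bool:
        """Grant access unless the analyst already accessed a competitor."""
        cls = self._class_of[company]
        for prior in self._history.get(analyst, set()):
            if prior != company and self._class_of[prior] == cls:
                return False
        self._history.setdefault(analyst, set()).add(company)
        return True


wall = ChineseWall({"consulting_clients": {"Ajax", "Beta"}, "banks": {"Gamma"}})
```

Once Joe has accessed Ajax data, a request for Beta data is refused, while a request for Gamma data succeeds because Gamma sits in a different conflict class. Access rights therefore depend on what the analyst has already seen, not on a fixed label.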

Composition Theories

In most applications, systems are built by combining smaller systems. An interesting situation to consider is whether the security properties of component systems are maintained when they are combined to form a larger entity.

John McLean studied this issue in 1994.[*] He defined two compositional constructions: external and internal. The following are the types of external constructs:

- Cascading. The condition in which one system’s input is obtained from the output of another system

- Feedback. The condition in which one system provides the input to a second system, which in turn outputs to the input of the first system

- Hookup. A system that communicates with another system as well as with external entities

The internal composition constructs are intersection, union, and difference.

The general conclusion of this study was that the security properties of the small systems are maintained under composition (in most instances) in the cascading construct, but that for the other constructs they also depend on other system variables.
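The constructs can be pictured with a short sketch. It assumes that a system can be treated as a function from input to output for the external constructs, and as a set of traces (tuples of event names) for the internal ones. Both representations, and all names below, are simplifications chosen for illustration and are not McLean's formal definitions.

```python
from typing import Callable

System = Callable[[int], int]


def cascade(first: System, second: System) -> System:
    """Cascading: the second system's input is the first system's output."""
    return lambda x: second(first(x))


def feedback(first: System, second: System, rounds: int) -> System:
    """Feedback: the second system's output is fed back into the first."""
    def run(x: int) -> int:
        for _ in range(rounds):
            x = second(first(x))
        return x
    return run


def double(x: int) -> int:
    return x * 2


def increment(x: int) -> int:
    return x + 1


# Internal constructs, applied to hypothetical trace sets.
traces_1 = {("login", "read"), ("login", "write")}
traces_2 = {("login", "read"), ("login", "logout")}
intersection = traces_1 & traces_2
union = traces_1 | traces_2
difference = traces_1 - traces_2
```

The sketch shows only how the systems are wired together; whether a given security property survives each kind of wiring is the question the study addresses.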

[*]J. McLean, “A General Theory of Composition for Trace Sets Closed under Selective Interleaving Functions,” Proceedings of 1994 IEEE Symposium on Research in Security and Privacy (IEEE Press, 1994), pp. 79–93.

Assessment Questions

You can find the answers to the following questions in Appendix A.

1. What does the Bell-LaPadula model not allow?

2. In the * (star) security property of the Bell-LaPadula model:

3. The Clark-Wilson model focuses on data’s:

4. The * (star) property of the Biba model states that:

5. Which of the following does the Clark-Wilson model not involve?

6. The Take-Grant model:

7. The Biba model addresses:

8. Mandatory access controls first appear in the Trusted Computer System Evaluation Criteria (TCSEC) at the rating of:

9. In the access control matrix, the rows are:

10. What information security model formalizes the U.S. Department of Defense multilevel security policy?

11. A Trusted Computing Base (TCB) is defined as:

12. Memory space insulated from other running processes in a multiprocessing system is part of a:

13. The boundary separating the TCB from the remainder of the system is called the:

14. The system component that enforces access controls on an object is the:

15. Which one of the following is not one of the three major parts of the Common Criteria (CC)?

16. A computer system that employs the necessary hardware and software assurance measures to enable it to process multiple levels of classified or sensitive information is called a:

17. For fault tolerance to operate, a system must be:

18. Which of the following choices describes the four phases of the National Information Assurance Certification and Accreditation Process (NIACAP)?

19. In the Common Criteria, an implementation-independent statement of security needs for a set of IT security products that could be built is called a:

20. The termination of selected, noncritical processing when a hardware or software failure occurs and is detected is referred to as:

21. Which one of the following is not a component of a CC Protection Profile?

22. Content-dependent control makes access decisions based on:

23. The term failover refers to:

24. Primary storage is:

25. In the Common Criteria, a Protection Profile:

26. Context-dependent control uses which of the following to make decisions?

27. The secure path between a user and the Trusted Computing Base (TCB) is called:

28. In a ring protection system, where is the security kernel usually located?

29. Increasing performance in a computer by overlapping the steps of different instructions is called:

30. Random-access memory is:

31. In the National Information Assurance Certification and Accreditation Process (NIACAP), a type accreditation performs which one of the following functions?

32. Processes are placed in a ring structure according to:

33. The MULTICS operating system is a classic example of:

34. What are the hardware, firmware, and software elements of a Trusted Computing Base (TCB) that implement the reference monitor concept called?

Answers

1. Answer: a. The answer a is correct. The other options are not prohibited by the model.

2. Answer: c. The correct answer is c by definition of the star property.

3. Answer: a. The answer a is correct. The Clark-Wilson model is an integrity model.

4. Answer: b

5. Answer: c. The answer c is correct. Answers a, b, and d are parts of the Clark-Wilson model.

6. Answer: b

7. Answer: d. The answer d is correct. The Biba model is an integrity model. Answer a is associated with confidentiality. Answers b and c are specific to the Clark-Wilson model.

8. Answer: c

9. Answer: d. The answer d is correct. Answer a is incorrect because the access control list is not a row in the access control matrix. Answer b is incorrect because a tuple is a row in the table of a relational database. Answer c is incorrect because a domain is the set of allowable values a column or attribute can take in a relational database.

10. Answer: d. The answer d is correct. The Bell-LaPadula model addresses the confidentiality of classified material. Answers a and c are integrity models, and answer b is a distracter.

11. Answer: a. The answer a is correct. Answer b is the security perimeter. Answer c is the definition of a trusted path. Answer d is the definition of a trusted computer system.

12. Answer: a

13. Answer: d. The answer d is correct. Answers a and b deal with security models, and answer c is a distracter.

14. Answer: c

15. Answer: b. The correct answer is b, a distracter. Answer a is Part 1 of the CC. It defines general concepts and principles of information security and defines the contents of the Protection Profile (PP), Security Target (ST), and the Package. The Security Functional Requirements, answer c, are Part 2 of the CC, which contains a catalog of well-defined standard means of expressing security requirements of IT products and systems. Answer d is Part 3 of the CC and comprises a catalog of a set of standard assurance components.

16. Answer: c. The correct answer is c, by definition of a trusted system. Answers a and b refer to open, standard information on a product as opposed to a closed or proprietary product. Answer d is a distracter.

17. Answer: a. The correct answer is a, the two conditions required for a fault-tolerant system. Answer b is a distracter. Answer c is the definition of fail-safe, and answer d refers to starting after a system shutdown.

18. Answer: b

19. Answer: c. The answer c is correct. Answer a, ST, is a statement of security claims for a particular IT product or system. A Package, answer b, is defined in the CC as “an intermediate combination of security requirement components.” A TOE, answer d, is “an IT product or system to be evaluated.”

20. Answer: c

21. Answer: c. The answer c is correct. Product-specific security requirements for the product or system are contained in the Security Target (ST). Additional items in the PP are:

22. Answer: a. The answer a is correct. Answer b is context-dependent control. Answers c and d are distracters.

23. Answer: a. Failover means switching to a “hot” backup system that maintains duplicate states with the primary system. Answer b refers to fail-safe, and answers c and d refer to fail-soft.

24. Answer: a. The answer a is correct. Answer b refers to secondary storage. Answer c refers to virtual memory, and answer d refers to sequential memory.

25. Answer: d. The answer d is correct. Answer a is a distracter. Answer b is the product to be evaluated. Answer c refers to TCSEC.

26. Answer: a. The answer a is correct. Answer b refers to content-dependent characteristics, and answers c and d are distracters.

27. Answer: b. Answer a, trusted distribution, ensures that valid and secure versions of software have been received correctly. Trusted facility management, answer c, is concerned with the proper operation of trusted facilities as well as system administration and configuration. Answer d, the security perimeter, is the boundary that separates the TCB from the remainder of the system. Recall that the TCB is the totality of protection mechanisms within a computer system that are trusted to enforce a security policy.

28. Answer: c