5.3 Security technology and solutions

Given the potential threats already described, there are a number of technologies available to combat network and system vulnerabilities. Underpinning these technologies are a number of techniques that are typically utilized in various combinations to build products, including the following:

- Encryption: to protect data and passwords
- Authentication and authorization: to prevent improper access
- Integrity checking and message authentication codes: to protect against improper alteration of messages
- Nonrepudiation: to make sure that an action cannot be denied by the person who performed it
- Digital signatures and certificates: to ascertain a party's identity
- One-time passwords and two-way random number handshakes: to mutually authenticate parties of a conversation
- Frequent key refresh, strong keys, and prevention of deriving future keys: to protect against breaking of keys (cryptanalysis)
- Address concealment: to protect against denial-of-service attacks

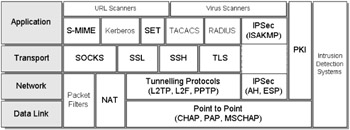

The security threat is constantly evolving, and a combination of techniques, each dynamically changing to match new threats, must be implemented in order to offer any hope of integrity. Increasingly, many organizations (particularly in the finance sector) are considering migration to a full Public Key Infrastructure (PKI), and next-generation Intrusion Detection Systems (IDS) to complement and further strengthen their security infrastructures. There is increasing research that focuses on systems that adapt and learn proactively, with the ability to feed back changes dynamically to perimeter protection systems such as firewalls. (See Figure 5.4.)

Figure 5.4: Security solutions in context.

This section discusses some of the technologies available today for designing secure networks. The key technologies include the following:

- Network Address Translation (NAT)
- Firewalls, packet filter routers, proxy servers (e.g., SOCKS)
- Remote access security: AAA services, Kerberos, RADIUS, TACACS, PAP, and CHAP
- End-to-end security solutions: Secure Sockets Layer (SSL), SSH, IP Security Architecture (IPSec), S/MIME
- Secure Electronic Transactions (SET)
- Public Key Infrastructure (PKI)
- Virus scanners
- URL scanners
- Intrusion Detection Systems (IDSs)
- Virtual Private Networks (VPNs)

5.3.1 Cryptography

Traditional cryptography is based on the premise that both the sender and receiver are using an identical secret key. This model is generally referred to as secret-key or symmetric cryptography. There are two basic problems with its use: key distribution (the secret key must somehow be delivered securely to every party before communication can begin) and scalability (each pair of parties needs its own key, so n parties need n(n-1)/2 keys).

The process of key generation, transmission, and storage is referred to as key management.

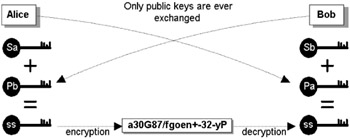

Public key (asymmetric) cryptography addresses these problems. Each user holds a pair of keys: a public key and a private key. Public keys are published openly, but each user's private key remains secret and is never transmitted. The two keys in each pair are tightly related mathematically (e.g., via modular arithmetic). In encryption schemes such as RSA, information encrypted with the public key can be decrypted only with the corresponding private key, and vice versa. The Diffie-Hellman (D-H) technique uses key pairs to agree on a shared secret rather than to encrypt directly. In Figure 5.5, for example, Alice takes her secret key (Sa) and performs a calculation using Bob's public key (Pb) to give the shared secret key (ss). Bob performs a corresponding calculation using his secret key (Sb) and Alice's public key (Pa). The shared secret key is identical in both cases and can then be used with a symmetric algorithm to encrypt and decrypt.

Figure 5.5: The process of agreeing on a shared secret key, using the Diffie-Hellman technique, by exchanging only the public key of the peer.
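The exchange in Figure 5.5 can be sketched in a few lines of Python. This is a toy under stated assumptions: the modulus (the Mersenne prime 2**127 - 1) and the base (3) are chosen for readability and are far too small for real use. The names Sa, Sb, Pa, Pb follow the figure's labels.

```python
import secrets

# Toy public parameters (assumption, for readability only): a 127-bit
# Mersenne prime modulus and base 3. Real systems use standardized groups
# of 2,048 bits or more.
P = 2**127 - 1
G = 3

def make_key_pair():
    """Return (secret, public), where public = G**secret mod P."""
    secret = secrets.randbelow(P - 3) + 2    # secret exponent in [2, P - 2]
    return secret, pow(G, secret, P)

def shared_secret(own_secret: int, peer_public: int) -> int:
    """Combine our own secret key with the peer's public key."""
    return pow(peer_public, own_secret, P)

# Alice and Bob each generate a key pair and exchange only the public halves.
Sa, Pa = make_key_pair()
Sb, Pb = make_key_pair()

ss_alice = shared_secret(Sa, Pb)    # (G**Sb)**Sa mod P
ss_bob = shared_secret(Sb, Pa)      # (G**Sa)**Sb mod P
print(ss_alice == ss_bob)           # True
```

Only Pa and Pb would cross the network. An eavesdropper who sees them still has to solve a discrete logarithm problem to recover ss.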

The real advantage of asymmetric cryptography is that the sender and receiver never need to share or disclose their private keys; another is that the communications channel can be an open or public network (such as the Internet). The problem is that users must be able to trust that a published public key really belongs to its claimed owner. Public keys therefore need to be vouched for by a trusted authority (a Certificate Authority [CA]) and held in an authenticated repository that is accessible to users, and this is an area where the model is less elegant and somewhat controversial.

Encryption algorithms

In symmetric and asymmetric cryptography, messages and data are encrypted, decrypted, or manipulated using a number of specialized algorithms. The simplest algorithm used in cryptographic products (although not strictly a cryptographic technique at all, and hardly secure) is an exclusive OR (XOR) operation, whereby bits in the message string are flipped wherever the corresponding key bit is set. There are several well-known encryption techniques used with symmetric schemes, including the following:

- DES: Data Encryption Standard, as defined in FIPS PUB 46-1. Commonly used for data encryption.
- IDEA: International Data Encryption Algorithm. Commonly used for data encryption.
- CAST: Commonly used for data encryption.
- Skipjack.
- RC2/RC4: RC4 is a stream cipher commonly used for data encryption in protocols such as SSL.
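The weakness of plain XOR noted above is easy to demonstrate. The sketch below uses a hypothetical key and message. It shows that the same function both encrypts and decrypts, and that anyone who knows a few bytes of plaintext can recover the key.

```python
def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each byte of data with the key, repeating the key as needed."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))

key = b"K3y"                        # hypothetical key, for illustration only
plaintext = b"TRANSFER 100"
ciphertext = xor_bytes(plaintext, key)

# The same operation decrypts, because (p ^ k) ^ k == p.
assert xor_bytes(ciphertext, key) == plaintext

# Known-plaintext weakness: ciphertext XOR plaintext yields the key itself.
recovered = xor_bytes(ciphertext[:3], plaintext[:3])
print(recovered)                    # b'K3y'
```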

There are also a number of additional algorithms used alongside asymmetric schemes for key encryption, digital signatures, and message digests, including the following:

- Triple DES: a symmetric cipher that applies DES three times. Commonly used for key encryption.
- RSA: a public key algorithm used for both encryption and digital signatures.
- DSS: Digital Signature Standard.
- MD2, MD4, MD5: RSA Data Security, Inc.'s Message Digest Algorithms, which are one-way hash functions (MD2 is defined in [9]; MD4 is defined in [10]; and MD5 is defined in [11]). MD5 is commonly used for authentication.
- SHS/SHA: Secure Hash Standard/Secure Hash Algorithm. SHA-1 is commonly used for authentication.
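The message digest algorithms listed above share two properties that the rest of this section relies on: the output has a fixed length, and any change to the input changes it. The sketch below uses Python's standard hashlib module on hypothetical messages. MD5 and SHA-1 are now considered too weak for new designs, because practical collision attacks against them exist.

```python
import hashlib

message = b"Pay Bob $100"
tampered = b"Pay Bob $900"          # a single character changed

results = {}
for name in ("md5", "sha1"):
    d1 = hashlib.new(name, message).digest()
    d2 = hashlib.new(name, tampered).digest()
    # Record the digest size in bits and whether the tampering was detected.
    results[name] = (len(d1) * 8, d1 != d2)

print(results)   # {'md5': (128, True), 'sha1': (160, True)}
```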

Cryptography is the subject of a book in itself, and while I have barely skimmed over it here, I refer interested readers to [1], which covers this subject in the full depth it deserves.

5.3.2 Public Key Infrastructure (PKI)

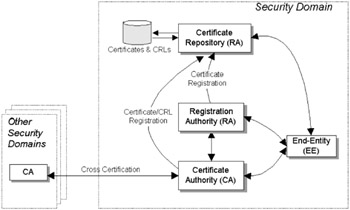

The Public Key Infrastructure (PKI) builds upon the foundations of asymmetric cryptography to establish a security infrastructure suitable for secure transactions such as electronic commerce. The PKI is a set of hardware, software, policies, and procedures needed to create, manage, store, distribute, and revoke digital certificates based on public key cryptography. (See Figure 5.6.)

Figure 5.6: PKI architectural model. Each CA is responsible for a security domain. CAs may perform cross-certification with other CAs.

The PKI provides services such as key management, certificate distribution, certificate handling, a trusted time service, and support for nonrepudiation. PKI services also require facilities to store an entity's sensitive information. For further information about the PKI, the interested reader is referred to [12].

X.509 digital certificates

Certificates are cryptographically sealed data objects that validate the binding of a public key to an identity (such as a person or device) via a digital signature. Certificates are issued by a trusted third party, in this case a Certificate Authority (CA). Certificates are used to verify a claim that a public key does in fact belong to a given entity, and to prevent a malicious user from using a bogus key to impersonate someone else. The certificate is digitally signed by computing its hash value and encrypting this with the issuer's private key. If any bit is changed or corrupted in the certificate, the recalculated hash value will be different and the signature will be invalid. If the client already possesses the issuer's public key and trusts the issuer to verify the identity of the server, then the client can be sure that the public key in the certificate is the public key of the server. A malicious user would have to know either the private key of the server or the private key of the issuer to successfully impersonate the server. An X.509 digital certificate contains a number of objects, including the following:

- The version number (currently X.509 is at version 3)
- The serial number of the certificate
- The signature algorithm ID (the algorithm type used by the issuer to sign this certificate)
- The issuer's name (the name of the certificate authority that issued the certificate)
- The validity period (the lifetime of the certificate, a valid start and expiration date)
- The subject's name (the user ID)
- The subject's public key
- The issuer's unique ID
- The subject's unique ID
- Optional extensions
- The digital signature of the issuing certificate authority

Certificate data are described in Abstract Syntax Notation One (ASN.1) and then encoded into binary data using the ASN.1 Distinguished Encoding Rules (DER), so that certificate data are independent of platform-specific encoding rules. The most widely accepted format for certificates is defined by the ITU-T X.509 international standard; thus, certificates can be read or written by any application complying with X.509.
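To make the ASN.1/DER step concrete, here is a minimal sketch of DER's tag-length-value encoding for INTEGER and SEQUENCE, two of the types from which a certificate's structure is built. It is an illustration only; real code should use an ASN.1 library. The serial number 4096 is hypothetical. In a real certificate the version field is also wrapped in an explicit context-specific tag, which this sketch omits. Version 3 is encoded as the integer 2 because X.509 numbers its versions from 0.

```python
def der_length(n: int) -> bytes:
    """DER length: short form below 128, otherwise 0x80 | byte-count, then the bytes."""
    if n < 0x80:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body

def der_integer(value: int) -> bytes:
    """Non-negative INTEGER (tag 0x02) in minimal two's-complement form."""
    body = value.to_bytes(value.bit_length() // 8 + 1, "big")   # keeps a leading 0x00 if the top bit is set
    return b"\x02" + der_length(len(body)) + body

def der_sequence(*items: bytes) -> bytes:
    """SEQUENCE (constructed tag 0x30) wrapping already-encoded items."""
    body = b"".join(items)
    return b"\x30" + der_length(len(body)) + body

print(der_integer(2).hex())                                   # 020102 (version v3)
print(der_sequence(der_integer(2), der_integer(4096)).hex())  # 300702010202021000
print(der_length(300).hex())                                  # 82012c (long form)
```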

Digital signatures

A digital signature is the electronic metaphor for a written signature; it provides proof that information sent by a user did indeed come from that user and can be used to prove message integrity. When a message is sent by a user, it can be signed with a digital signature, using the sender's private key. Since all users have access to the sender's public key, the public key can be used to verify the signed message. If the signature can be decrypted using the sender's public key, then only that sender could have created the message using his or her private key. For example, a CA normally signs a certificate with a digital signature computed using its own private key. Anyone can verify the signature by using the CA's public key. If either a message or a certificate is digitally signed, then any tampering with the content is immediately detectable. In this way public key cryptosystems provide both confidentiality (no one can read a message except the receiver) and authenticity (no one can write a message except the sender).
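The sign-then-verify flow described above can be sketched with textbook RSA. Everything here is a toy assumption. The key is built from two fixed Mersenne primes, the SHA-256 digest is simply reduced modulo n, and no padding scheme is applied. The sketch therefore illustrates the mathematics only and must not be used for real signatures. `pow(e, -1, phi)` requires Python 3.8 or later.

```python
import hashlib

# Toy key from two Mersenne primes (illustration only; real keys use large
# random primes and a padding scheme such as PKCS #1).
p, q = 2**61 - 1, 2**89 - 1
n = p * q
e = 65537                               # public exponent
d = pow(e, -1, (p - 1) * (q - 1))       # private exponent

def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(message: bytes) -> int:
    """Sender: apply the private key to the message digest."""
    return pow(digest(message), d, n)

def verify(message: bytes, signature: int) -> bool:
    """Anyone: apply the public key (n, e) and compare with a fresh digest."""
    return pow(signature, e, n) == digest(message)

msg = b"Transfer 100 to account 42"
sig = sign(msg)
print(verify(msg, sig))                              # True
print(verify(b"Transfer 900 to account 42", sig))    # False: tampering detected
```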

Certificate Authorities (CAs)

Certificates are issued by a Certificate Authority (CA), which can be any trusted central administration willing to vouch for the identities of those to whom it issues certificates and their association with a given key. One way to authenticate entities involves enclosing one or more certificates with every signed message. The receiver of the message would verify the certificate using the CA's public key and, now confident of the public key of the sender, verify the message's signature. There may be several certificates enclosed with the message, forming a hierarchical chain in which each certificate testifies to the authenticity of the previous certificate. At the end of a certificate hierarchy is a top-level CA, which is trusted without a certificate from any other certifying authority or whose certificate can be self-signed (i.e., the top-level certifying authority uses its own private key to sign its own certificate). Of course, CAs themselves require certificates, and this is part of the reason for this hierarchy. The public key of the top-level CA must be independently known, for example, by being widely published or securely distributed. If the top-level certifying authority has a self-signed certificate, that certificate must itself be obtained through an independent, trusted channel, because a self-signature alone proves nothing about who created it.
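The chain-walking procedure can be sketched with toy RSA keys of the same kind. The CA names, host name, certificate fields, and dictionary-based certificate format are all hypothetical simplifications of X.509. The point is the two checks made at each step (the issuer name matches, and the signature verifies under the issuer's key) and the final requirement that the top-level key be known independently rather than taken from the chain.

```python
import hashlib

E = 65537

def make_key(p: int, q: int):
    """Toy RSA key pair from two primes: returns (public=(n, e), private=d)."""
    return (p * q, E), pow(E, -1, (p - 1) * (q - 1))

def digest(data: bytes, n: int) -> int:
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def issue(subject, subject_pub, issuer, issuer_pub, issuer_priv):
    """Toy certificate binding subject to subject_pub, signed by the issuer."""
    body = f"{subject}|{subject_pub[0]}|{subject_pub[1]}|{issuer}".encode()
    n = issuer_pub[0]
    return {"subject": subject, "pub": subject_pub, "issuer": issuer,
            "body": body, "sig": pow(digest(body, n), issuer_priv, n)}

def signed_by(cert, issuer_pub) -> bool:
    n, e = issuer_pub
    return pow(cert["sig"], e, n) == digest(cert["body"], n)

def verify_chain(chain, trusted_roots) -> bool:
    """chain[0] is the end entity; each certificate must be signed by the next."""
    for cert, issuer_cert in zip(chain, chain[1:]):
        if cert["issuer"] != issuer_cert["subject"] or not signed_by(cert, issuer_cert["pub"]):
            return False
    root = chain[-1]
    # The top-level CA's key must already be known; the chain cannot vouch for it.
    return trusted_roots.get(root["subject"]) == root["pub"] and signed_by(root, root["pub"])

# Hypothetical three-level hierarchy (distinct Mersenne primes, toy sizes).
root_pub, root_priv = make_key(2**89 - 1, 2**127 - 1)
ca_pub, ca_priv = make_key(2**61 - 1, 2**107 - 1)
srv_pub, _ = make_key(2**19 - 1, 2**31 - 1)

root_cert = issue("Root CA", root_pub, "Root CA", root_pub, root_priv)   # self-signed
ca_cert = issue("Issuing CA", ca_pub, "Root CA", root_pub, root_priv)
srv_cert = issue("www.example.com", srv_pub, "Issuing CA", ca_pub, ca_priv)

chain = [srv_cert, ca_cert, root_cert]
print(verify_chain(chain, {"Root CA": root_pub}))   # True
print(verify_chain(chain, {}))                       # False: root not independently trusted
```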

Registration Authorities (RAs)

The Registration Authority (RA) is an optional component in a PKI. Often this functionality is bundled with the CA. If a distinct RA is implemented, then the RA is a trusted end entity, certified by the CA, that acts as a subordinate server of the CA. The CA can delegate some of its management functions to the RA (e.g., the RA may perform personal authentication tasks, report revoked certificates, generate keys, or archive key pairs). The RA, however, does not issue certificates or CRLs. The RA was introduced to solve an issue where CAs are not sufficiently "Godlike" to authenticate every certificate applicant themselves. In essence, the CA-RA model splits responsibilities so that the CA issues certificates and the RA verifies the identities of those requesting them. RAs are established between users and CAs and may perform user-oriented functions, such as personal authentication, name assignment, key generation, archiving of key pairs, and so on. The standards also define the messages and protocols between CAs, RAs, and users.

Certificate Repositories (CRs)

Because the X.509 certificate format is a natural fit to an X.500 directory, a CR is best implemented as a directory, where it can be accessed by the dominant directory access protocol, the Lightweight Directory Access Protocol (LDAP). There are other ways to obtain certificates or CRL information if a CR is not implemented in a directory, but they are not recommended; generally speaking, a directory is much the preferred route.

Certificate Revocation Lists (CRLs)

A CRL is a list of certificates that have been prematurely revoked (i.e., invalidated before their scheduled expiration date); it serves as a blacklist of bad certificates that all interested parties can consult. CRLs are maintained by CAs, and although CRLs are stored in a distributed manner, there may be central repositories for CRLs (e.g., network sites containing the latest CRLs from many organizations).
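A relying party combines the validity period from the certificate with the issuer's CRL. The sketch below assumes a hypothetical in-memory CRL keyed by issuer name and serial number. A real implementation would also verify the CRL's own signature and check that the CRL is current.

```python
from datetime import date

# Hypothetical CRL for one CA: revoked serial number -> revocation date.
CRLS = {"Issuing CA": {1001: date(2001, 3, 14), 1007: date(2001, 6, 2)}}

def certificate_status(issuer, serial, not_before, not_after, today, crls=CRLS):
    """Check the validity period, then look the serial number up in the issuer's CRL."""
    if not (not_before <= today <= not_after):
        return "outside validity period"
    revoked_on = crls.get(issuer, {}).get(serial)
    if revoked_on is not None and revoked_on <= today:
        return "revoked"
    return "good"

valid_from, valid_to = date(2001, 1, 1), date(2002, 1, 1)
print(certificate_status("Issuing CA", 1007, valid_from, valid_to, date(2001, 7, 1)))  # revoked
print(certificate_status("Issuing CA", 1002, valid_from, valid_to, date(2001, 7, 1)))  # good
print(certificate_status("Issuing CA", 1002, valid_from, valid_to, date(2003, 1, 1)))  # outside validity period
```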

Current use of public key infrastructures

At the time of writing very few organizations have implemented a PKI. It is considered somewhat immature and expensive, and therefore only large organizations, such as financial institutions, are developing migration plans for deploying a PKI. Many of the larger financial institutions are currently using token cards or starting to implement VPNs. Having said that, PKI components (primarily the cryptographic algorithms) have already been deployed in widely used network protocols and applications, as follows:

- Secure Multipurpose Internet Mail Extensions (S/MIME) is a secure mail application developed by RSA Data Security, Inc. S/MIME is an emerging standard for interoperable secure e-mail and adds digital signatures and encryption to Internet MIME messages. For further information, the interested reader is directed to [13].
- The Secure Electronic Transaction (SET) specification is an open technical standard for supporting e-commerce transactions. The core protocol of SET is based on digital certificates. For further information, the interested reader is directed to [14].
- The Secure Sockets Layer (SSL) protocol uses RSA public key cryptography and supports client authentication, server authentication, and encrypted SSL connections. For further information, the interested reader is directed to [15].
- IPSec is a set of specifications for securing IP datagrams using authentication, integrity, and confidentiality services based on cryptography. For further information, the interested reader is directed to [16].
- Point-to-Point Protocol (PPP) can use the Challenge Handshake Authentication Protocol (CHAP), which relies on a one-way hash function (MD5). For further information, the interested reader is directed to [17].

At present the only experience the vast majority of users will have with PKI is using SSL on the Internet to buy products. Nevertheless, warts and all, the PKI is likely to be the cornerstone of future e-commerce. Vendors of PKI solutions and components include Baltimore Technologies [18], Entrust Technologies [19], RSA Security Inc. [20], and VeriSign Inc. [21]. For further information on PKI, the interested reader is referred to [2].

5.3.3 Network Address Translation (NAT)

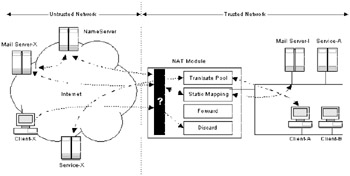

The basic concepts behind Network Address Translation (NAT) were introduced in Chapter 2, where it was used to combat IP address depletion and resolve illegal IP addressing schemes. NAT also has close associations with network security, because of its ability to hide the details of the network behind a firewall or other NAT-enabled device. When NAT translates IP addresses, it enables devices that communicate with untrusted public networks (such as the Internet) to hide their real addresses. Clearly, from a hacker's perspective, it is much harder to attack a resource where the real address is unknown. Note also that the virtual NAT addresses may not be consistent, since they are assigned on the fly.

For inbound access to specific services, NAT must be preconfigured with statically mapped virtual addresses associated with those services. Figure 5.7 shows the general functionality of NAT. For example, in Figure 5.7 an external name server could have an entry for an internal mail gateway inside the trusted network (Mail Server I). When an external mail server (Mail Server-X) does a lookup via the name server, the name server resolves the public host name of the internal mail gateway to the virtual IP address. The remote mail server can then send a connection request to this virtual IP address. When that request hits the NAT box on the untrusted external interface, NAT uses the static mapping to translate the public (virtual) IP address into the real internal IP address and forwards the connection request to the internal mail gateway.

Figure 5.7: NAT configuration.
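The static mapping in Figure 5.7 amounts to a lookup table applied in each direction. The sketch below assumes packets are represented as dictionaries. The addresses are drawn from the documentation ranges and are not taken from the figure.

```python
from typing import Optional

# Hypothetical static mapping: (virtual public IP, port) -> (internal IP, port).
STATIC_MAP = {("203.0.113.25", 25): ("10.1.1.25", 25)}   # SMTP to the internal mail gateway
REVERSE_MAP = {internal: public for public, internal in STATIC_MAP.items()}

def translate_inbound(packet: dict) -> Optional[dict]:
    """Rewrite the destination of an inbound packet; drop it (None) if unmapped."""
    target = STATIC_MAP.get((packet["dst_ip"], packet["dst_port"]))
    if target is None:
        return None
    return {**packet, "dst_ip": target[0], "dst_port": target[1]}

def translate_outbound(packet: dict) -> dict:
    """Rewrite the source of a reply from a mapped internal host to its virtual address."""
    public = REVERSE_MAP.get((packet["src_ip"], packet["src_port"]))
    if public is None:
        return packet
    return {**packet, "src_ip": public[0], "src_port": public[1]}

request = {"src_ip": "198.51.100.7", "src_port": 40001, "dst_ip": "203.0.113.25", "dst_port": 25}
print(translate_inbound(request)["dst_ip"])               # 10.1.1.25
reply = {"src_ip": "10.1.1.25", "src_port": 25, "dst_ip": "198.51.100.7", "dst_port": 40001}
print(translate_outbound(reply)["src_ip"])                # 203.0.113.25
```

The external mail server only ever sees 203.0.113.25. The real address of the gateway exists only in the NAT device's table.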

NAT also poses particular problems in secure networking environments, as follows:

- Some protocols and services have the unfortunate habit of passing addressing information inside application data (i.e., above the Transport Layer). You can either choose to discard these protocols in your rule base or install a more sophisticated version of NAT that is protocol aware.
- NAT is often run directly on firewalls as an additional security measure. This places an additional processing burden on what may already be a stressed system. It is not unusual to see a 20 percent degradation in overall throughput on firewalls with NAT activated.
- Since NAT changes address information in an IP packet, end-to-end IPSec authentication (if used) will always fail its integrity check under the AH protocol. This is because the AH integrity check, generated at the source, covers the IP addresses, and any change to any protected bit in the datagram invalidates it. However, IPSec has many features that negate the need for NAT.
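The reason address translation breaks AH can be shown with a simplified integrity check. This sketch assumes a hypothetical shared key and computes an HMAC-SHA1 value truncated to 96 bits (the form used by HMAC-SHA1-96 in AH) over only the addresses and the payload. Real AH covers all immutable header fields, but rewriting the source address has the same effect.

```python
import hashlib
import hmac

KEY = b"shared-ipsec-key"   # hypothetical key agreed between the two IPSec peers

def icv(src_ip: str, dst_ip: str, payload: bytes) -> bytes:
    """Simplified AH-style integrity check value over addresses and payload."""
    data = src_ip.encode() + b"|" + dst_ip.encode() + b"|" + payload
    return hmac.new(KEY, data, hashlib.sha1).digest()[:12]   # truncated to 96 bits

payload = b"GET /index.html"
sent_icv = icv("10.1.1.5", "198.51.100.7", payload)      # computed at the source

# A NAT device rewrites the private source address to its public address,
# so the receiver recomputes the check over different bytes.
received_ok = hmac.compare_digest(sent_icv, icv("203.0.113.9", "198.51.100.7", payload))
print(received_ok)   # False: the integrity check fails
```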

5.3.4 AAA security services

Remote dial-in access has long been recognized as inherently insecure, and the Remote Access Server (RAS) or Network Access Server (NAS) is a vital function of any internetwork. With the rise of mobile computing there is an increasing demand for transparent but secure connectivity to corporate network resources from a variety of mobile computing devices, such as notebook computers, palmtop devices, and WAP-enabled phones for basic e-mail access.

AAA security services model

The triple A (AAA) security model was developed primarily to address the issue of securing remote access, by implementing three functions—authentication, authorization, and accounting—as follows:

- Authentication determines who a user (or entity) is. Authentication can take many forms. Traditional authentication utilizes a name and a fixed password. Most computers work this way; however, fixed passwords have limitations, mainly in the area of security. Many modern authentication mechanisms utilize one-time passwords or a challenge-response query. Authentication generally takes place when the user first logs in to a machine or requests a service of it.
- Authorization determines what a user is allowed to do. In general, authentication precedes authorization, but this is optional. An authorization request may indicate that the user is not authenticated, and in this case it is up to the authorization agent to determine if an unauthenticated user is allowed to use the services requested. In current remote authentication protocols, authorization does not simply provide yes-or-no answers; it may also customize the service for a particular user. Two of the most popular authentication services are Remote Authentication Dial-In User Service (RADIUS) and Terminal Access Controller Access Control System (TACACS), with Kerberos becoming increasingly popular since its integration with Windows 2000. These services are all described later in this section.
- Accounting is typically the third action after authentication and authorization, although, again, neither authentication nor authorization is required. Accounting is the action of recording what a user is doing, or has done. In the distributed client/server security database model, a number of communications servers, or clients, authenticate a dial-in user's identity through a single, central database, or authentication server. The authentication server stores all information about users, their passwords, and access privileges. A central location for authentication data is more secure than scattering the user information on different devices throughout a network. A single authentication server can support hundreds of communications servers, serving up to tens of thousands of users. Communications servers can access an authentication server locally, via a LAN, or over remote wide area circuits. Several remote access vendors and the IETF have been at the forefront of this remote access security effort and of standardizing such security measures. RADIUS and TACACS are two such cooperative ventures that have evolved out of the Internet standardizing body and remote access vendors.

Fundamental to security is the ability to validate that users are who they claim to be; we call this process authentication. There are many techniques available for deploying authentication in a networked environment; several are listed here in order of increasing strength:

- Static user name/password
- Aging user name/password
- One-Time Passwords (OTPs) and challenge-response schemes (e.g., S/Key for terminal users, CHAP for point-to-point links)
- Token cards/soft tokens (which employ OTPs)
- Biometrics (fingerprint, face, and iris scanning)

There are also some services that employ the full AAA model, including Kerberos, TACACS, and RADIUS. We will now briefly review some of the key protocols and services available for authentication.

Static and aging passwords

Static passwords represent the lowest level of authentication available. Experience shows that people are not good at remembering passwords, and therefore passwords tend to be written down and are often easy for a hacker to guess (e.g., the name of your sister or your favorite car). Aging passwords are slightly better in that users are forced at regular intervals to change their passwords. Guessable passwords are easily and rapidly defeated by dictionary attacks, so it is recommended that you choose a password that is an unusual combination of letters and digits.
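A dictionary attack simply hashes each candidate word and compares the result with the stored value. The sketch below assumes passwords are stored as salted PBKDF2-HMAC-SHA256 hashes, a storage scheme the text does not describe. The wordlist and passwords are hypothetical, and the iteration count is kept low only so that the demonstration runs quickly.

```python
import hashlib
import os

def hash_password(password: str, salt: bytes, iterations: int = 100_000) -> bytes:
    """Derive a slow, salted hash suitable for storing instead of the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)

def dictionary_attack(stored: bytes, salt: bytes, wordlist, iterations: int = 100_000):
    """Hash each candidate word; return the first one that matches, else None."""
    for word in wordlist:
        if hash_password(word, salt, iterations) == stored:
            return word
    return None

salt = os.urandom(16)
wordlist = ["password", "letmein", "ferrari", "sarah1971"]   # hypothetical guesses

weak = hash_password("ferrari", salt, 1_000)            # a favorite car
strong = hash_password("q7#Vw2!pLx9z", salt, 1_000)     # unusual mix of characters
print(dictionary_attack(weak, salt, wordlist, 1_000))    # ferrari
print(dictionary_attack(strong, salt, wordlist, 1_000))  # None
```

Salting and a slow hash raise the cost of each guess, but they cannot save a password that appears in the attacker's wordlist.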

PAP

The Password Authentication Protocol (PAP) is a way to keep unauthorized remote users from accessing a network. It is typically used to control point-to-point, router-to-router communications and dial-in access. When PAP is enabled, a remote device (e.g., a PC, workstation, router, or communication server) is required to provide a static password known only to the peer device or NAS. If the correct password is not provided, access is denied. PAP is typically supported on router serial lines using Point-to-Point Protocol (PPP) encapsulation. Although PAP is simple to deploy, it is quite weak, because the password is static and is transferred as plaintext. For further information about PAP, the interested reader is referred to [22, 23].

CHAP

The Challenge Handshake Authentication Protocol (CHAP) is essentially a smarter form of PAP. It is commonly used to control router-to-router communications and dial-in access. When CHAP is enabled, a remote device (e.g., a PC, workstation, router, or communication server) attempting to connect to a local router is sent a challenge. It must return a response computed from that challenge and a shared secret using a one-way hash function (MD5). If the correct response is not provided, network access is denied. CHAP is bidirectional; each peer can challenge the other. CHAP also allows for the periodic reissuing of challenges (using different and difficult-to-predict challenge numbers). CHAP is becoming popular because the secret itself is never sent over the network. CHAP is typically supported on router serial lines using Point-to-Point Protocol (PPP) encapsulation. Because of its dynamic nature, CHAP is considered more secure than PAP. For further information about CHAP, the interested reader is referred to [23].
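The CHAP exchange can be sketched as follows. The response calculation (MD5 over the one-octet Identifier, the shared secret, and the challenge) follows RFC 1994. The secret value and the two in-process "peers" are hypothetical stand-ins for two routers on a PPP link.

```python
import hashlib
import hmac
import os

SECRET = b"shared-chap-secret"   # hypothetical secret configured on both peers

def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """RFC 1994 response value: MD5(Identifier || secret || Challenge)."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()

# Authenticator (e.g., the local router) sends a fresh, unpredictable challenge.
identifier = 1
challenge = os.urandom(16)

# Peer computes the response; only this 16-byte hash crosses the link.
response = chap_response(identifier, SECRET, challenge)

# Authenticator recomputes with its own copy of the secret and compares.
expected = chap_response(identifier, SECRET, challenge)
print(hmac.compare_digest(response, expected))   # True

# A peer that does not know the secret produces a different response.
print(hmac.compare_digest(chap_response(identifier, b"wrong-guess", challenge), expected))  # False
```

Because each challenge is new, a captured response is of no use for a later login, unlike a captured PAP password.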

Simple Key Management for IP (SKIP)

Simple Key Management for IP (SKIP) is a key management scheme developed by Sun. It is commonly integrated into firewalls as an authentication mechanism because of its simplicity. SKIP has been presented to the IETF Security Working Group. It uses Diffie-Hellman 1,024-bit public-key-based algorithms for long-term key setup, as well as session and traffic key generation, along with DES-, RC2-, and RC4-based traffic encryption. SKIP does not run a separate key-exchange handshake. Instead, each pair of hosts derives an implicit shared secret from the other's certified Diffie-Hellman public value, and the traffic keys are carried in the packets themselves, encrypted under that shared secret. SKIP also includes pipelining of traffic key generation and on-the-fly traffic key changing. A host-based implementation is also available, offering a solution to remote network access through authenticated IP tunnels. For further information about SKIP, the interested reader is referred to [24].

Token cards

In recent years a small number of companies have produced tamper-proof smart cards, which typically produce time-based keys for use with authentication schemes. For example, Security Dynamics produces the SecurID card. This scheme works as follows: When users log in, they are prompted for both their user name and a key. The key is generated by the user typing a secret four-digit PIN into the smart card and then pressing a button to invoke the key-generation algorithm. The key varies with the time of day. This key is passed to the NAS (or separate token server), which runs the same key-generation algorithm and has a clock synchronized with the card. The card itself is claimed to be tamper proof. This is a very secure mechanism for dynamic user authentication and is employed by several large organizations, such as financial institutions. It is also supported by several firewalls as an optional authentication scheme. Other vendors in this field include Enigma Logic and DES Card.
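The algorithm used in SecurID cards is proprietary, so this sketch substitutes the open time-based one-time password algorithm from RFC 6238 (TOTP). TOTP works on the same principle: the token and the server share a secret and a clock, and each independently computes the same short-lived code. PIN handling is omitted. The secret is the RFC 6238 test value, and the one-step clock-drift window is an assumption.

```python
import hashlib
import hmac
import struct
import time

def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HMAC-SHA1 over the time-step counter, then dynamic truncation."""
    counter = int(at // step)                          # same value on token and server
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                            # RFC 4226 dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)

def server_accepts(secret: bytes, submitted: str, now: float, drift_steps: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    return any(hmac.compare_digest(totp(secret, now + k * 30), submitted)
               for k in range(-drift_steps, drift_steps + 1))

secret = b"12345678901234567890"                  # RFC 6238 test secret
print(totp(secret, 59, digits=8))                 # 94287082 (RFC 6238 test vector)
now = time.time()
print(server_accepts(secret, totp(secret, now), now))   # True
```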

Biometrics

Biometrics is an emerging technology to assist in authentication. Currently the main techniques include thumbprint scans, face recognition, iris scans, retinal scans, signature geometry, hand geometry, and voice scans. These techniques work with varying degrees of success, currently limited by the technology available (e.g., thumbprint scans are more reliable than face scans). The technology typically enables the administrator to tune the degree of rigor in the biometric to err on the side of a false-positive or false-negative result (i.e., increase the possibility of impostors fooling the test, or make the system so rigorous that even genuine users have difficulty proving they are who they claim to be). This is again a compromise between security and ease of use. The general consensus is that biometrics will improve to the point where it becomes normal practice as a challenge mechanism to authenticate users in many everyday situations.

Remote Authentication Dial-In User Service (RADIUS)

The Remote Authentication Dial-In User Service (RADIUS) protocol is currently the most popular method for managing remote user authentication and authorization. It was originally designed primarily to manage secure access for dispersed serial line and modem pools, since historically these have proved difficult to administer. RADIUS is a very lightweight, UDP-based protocol. An IETF Working Group for RADIUS was formed in January 1996 to address the standardization of the RADIUS protocol [25]. RADIUS is designed to be extensible: all transactions carry their data as variable-length <Attribute><Length><Value> triples, and new attributes can be added without disturbing existing implementations of the protocol.
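The attribute encoding can be sketched as follows. Type numbers 1 (User-Name) and 4 (NAS-IP-Address) and the 253-octet value limit come from RFC 2865. The attribute values are hypothetical, and type 224 stands in for an attribute that a given implementation might not recognize. Because each attribute carries its own length, a receiver can step over attributes it does not understand, which is what makes the format extensible.

```python
import struct

USER_NAME = 1           # RFC 2865 attribute type numbers
NAS_IP_ADDRESS = 4

def encode_attribute(attr_type: int, value: bytes) -> bytes:
    """Type (1 octet), Length (1 octet, counting Type and Length too), Value."""
    if len(value) > 253:
        raise ValueError("RADIUS attribute values are limited to 253 octets")
    return struct.pack("!BB", attr_type, len(value) + 2) + value

def decode_attributes(data: bytes) -> list:
    """Walk a run of attributes, using each Length field to find the next one."""
    attrs, i = [], 0
    while i < len(data):
        if i + 2 > len(data):
            raise ValueError("truncated attribute header")
        attr_type, length = data[i], data[i + 1]
        if length < 2 or i + length > len(data):
            raise ValueError("malformed attribute length")
        attrs.append((attr_type, data[i + 2:i + length]))
        i += length
    return attrs

packed = (encode_attribute(USER_NAME, b"alice")
          + encode_attribute(NAS_IP_ADDRESS, bytes([192, 0, 2, 10]))
          + encode_attribute(224, b"\x01\x02"))      # hypothetical unrecognized type
print(packed[:7].hex())        # 0107616c696365
print(decode_attributes(packed))
```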

Because RADIUS is designed to carry authorization data and is widely deployed, it is used as one method for transferring the data required to set up dynamic tunnels for VPNs. For further information about RADIUS, the interested reader is referred to [25].

TACACS

The Defense Data Network (DDN) originally developed Terminal Access Controller Access Control System (TACACS) to control access to its TAC terminal servers. TACACS is now an industry standard protocol, specified in [26]. It is useful, however, to recognize the various flavors of TACACS currently installed in networks. They are as follows:

- TACACS is a simple UDP-based access control protocol originally developed by BBN for the MILNET. TACACS operates in a manner similar to RADIUS and is typically used to protect modem access into a network. TACACS also provides access control for routers, network access servers, and other networked devices via one or more centralized security servers. TACACS receives authentication requests from an NAS client and forwards the user name and password information to a centralized security server. The centralized server can either be a TACACS database or an external security database.
- XTACACS (extended TACACS) is a version of TACACS with extensions that Cisco added to the basic TACACS protocol to support advanced features.
- TACACS+ is another Cisco extension of TACACS. TACACS+ improves on TACACS and XTACACS by separating the AAA functions and by encrypting the body of every packet exchanged between the NAS and the daemon. It allows any authentication mechanism to be utilized with TACACS+ clients and uses TCP to ensure reliable delivery. The protocol allows the client to request fine-grained access control from the daemon. A key benefit of separating authentication from authorization is that authorization (and per-user profiles) can be a dynamic process. TACACS+ can be integrated with other negotiations (such as a PPP negotiation) for far greater flexibility.

In all three cases the TACACS daemon should listen at port 49 (the login port assigned for the TACACS protocol). This port is reserved for both UDP and TCP. For further information, the interested reader is referred to [26–28].
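The TACACS+ body encryption mentioned above XORs the packet body with a pseudo-random pad. The pad is built from chained MD5 hashes of the session ID, the shared key, the version, and the sequence number. The sketch below follows the construction documented for TACACS+ (later published as RFC 8907). The key, session ID, and plain-text body are hypothetical, and the packet header (not shown) travels unencrypted.

```python
import hashlib
import struct

def pseudo_pad(session_id: int, key: bytes, version: int, seq_no: int, length: int) -> bytes:
    """Concatenate MD5_1, MD5_2, ... and truncate to the body length.

    MD5_1 = MD5(session_id, key, version, seq_no)
    MD5_n = MD5(session_id, key, version, seq_no, MD5_{n-1})
    """
    seed = struct.pack("!I", session_id) + key + bytes([version, seq_no])
    pad, previous = b"", b""
    while len(pad) < length:
        previous = hashlib.md5(seed + previous).digest()
        pad += previous
    return pad[:length]

def crypt_body(body: bytes, session_id: int, key: bytes, version: int, seq_no: int) -> bytes:
    """XOR the body with the pad; applying it twice restores the original."""
    pad = pseudo_pad(session_id, key, version, seq_no, len(body))
    return bytes(b ^ p for b, p in zip(body, pad))

KEY = b"tacacs-shared-key"              # hypothetical key shared by NAS and daemon
body = b"user=alice password=s3cret"    # hypothetical body (real bodies are binary)
wire = crypt_body(body, 0x1A2B3C4D, KEY, 0xC0, 1)
print(wire != body, crypt_body(wire, 0x1A2B3C4D, KEY, 0xC0, 1) == body)   # True True
```

Because the sequence number feeds the pad, every packet in a session is covered by a different pad even though the key stays the same.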

Kerberos

Kerberos is an encryption-based security system that provides mutual authentication between the users and the servers in a network environment. Kerberos uses a secret-key (symmetric) encryption service based on DES. Although Kerberos provides a full AAA service, it is primarily used for authentication. The Kerberos Network Authentication Service version 5 is described in [29]. In a Kerberos environment at least one secure host runs as the Kerberos server (referred to as the trusted server, or the Key Distribution Center [KDC]); all other clients and servers on the network are assumed to be untrustworthy. The trusted server provides authentication services for all other clients and services. Each client and server (referred to as principals) holds a secret DES key. The trusted server holds a database of the names and secret keys associated with all clients and servers allowed to use its services. It is assumed that the principals keep their passwords secure. For further information about Kerberos, the interested reader is referred to [30, 31].
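The core idea of the KDC can be sketched in a few lines. The KDC generates a session key and seals one copy under the client's key and another copy (the ticket) under the server's key. This is a heavily simplified model under stated assumptions. A toy SHA-256 keystream cipher stands in for DES, and ticket-granting tickets, authenticators, timestamps, and lifetimes are all omitted. The principal names are hypothetical.

```python
import hashlib
import json
import os

def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher standing in for DES: XOR with SHA-256(key|nonce|offset) blocks.
    It offers no integrity protection and is for illustration only."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + nonce + offset.to_bytes(8, "big")).digest()
        out += bytes(a ^ b for a, b in zip(data[offset:offset + 32], block))
    return bytes(out)

def seal(key: bytes, obj: dict) -> tuple:
    nonce = os.urandom(16)
    return nonce, keystream_xor(key, nonce, json.dumps(obj).encode())

def unseal(key: bytes, sealed: tuple) -> dict:
    nonce, ciphertext = sealed
    return json.loads(keystream_xor(key, nonce, ciphertext))

# KDC database: the long-term secret key of every principal (hypothetical names).
KDC_DB = {"alice": os.urandom(32), "fileserver": os.urandom(32)}

def kdc_issue(client: str, server: str):
    """Create a fresh session key, sealed once for the client and once as a ticket for the server."""
    session_key = os.urandom(16).hex()
    reply = seal(KDC_DB[client], {"server": server, "session_key": session_key})
    ticket = seal(KDC_DB[server], {"client": client, "session_key": session_key})
    return reply, ticket

reply, ticket = kdc_issue("alice", "fileserver")
alice_view = unseal(KDC_DB["alice"], reply)          # only Alice's key opens this
server_view = unseal(KDC_DB["fileserver"], ticket)   # Alice forwards the ticket unread
print(server_view["client"], alice_view["session_key"] == server_view["session_key"])  # alice True
```

Alice and the file server now share a session key that neither of them sent to the other directly. The server also trusts the client name inside the ticket, because only the KDC knows the server's key.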

5.3.5 Protocol-based security services

Secure Sockets Layer (SSL)

Secure Sockets Layer (SSL) is a de facto security protocol initially developed by Netscape Communications Corporation [32] in cooperation with RSA Security, Inc. [20]. SSL uses public key cryptography to authenticate the server (and, optionally, the client) and to exchange a secret session key; that symmetric session key is then used to encrypt data transferred over the SSL connection. The main aim of the SSL protocol is to provide a secure, reliable pipe between two communicating applications by providing both encryption and authentication features. It was designed to improve security for services such as HTTP, Telnet, NNTP, and FTP. SSL provides an alternative to the standard TCP/IP socket API, so in theory it is possible to run any TCP/IP socket application over a secure SSL interface without changing the application. SSL version 3 is an open protocol and one of the most popular security mechanisms deployed on the Internet. It is documented in an IETF draft, and the IETF has standardized its successor under the name Transport Layer Security (TLS) [33]. SSLv3 and SSLv2 are backward compatible; the main enhancements in SSLv3 are support for client authentication and more ciphering types in the cipher specification. The SSL protocol provides the following security services:

-

Server authentication

-

Client authentication (an optional service)

-

Integrity of communication over an SSL connection

-

Confidentiality of communication over an SSL connection

SSL sits between the Transport Layer and the Application Layer (see Figure 5.4) and is designed to protect the pipe (i.e., the data stream carried over the transport connection), not the individual objects communicated over the pipe. This means that SSL cannot provide nonrepudiation services or protect individual objects (such as a Web page). SSL is composed of two layers. The SSL Handshake Protocol is the upper layer and handles initial authentication and the transfer of encryption keys between the client and server. The SSL Record Protocol is the lower layer and provides a reliable protocol for encapsulating and transferring data (using a variety of predefined cipher and authentication combinations).

Operation

By convention, Web pages that require an SSL connection are prefixed with the special URL scheme https: rather than http:. An SSL-protected HTTP transfer also uses port 443, rather than HTTP's default port 80. To access a secure Web server, an SSL-enabled browser is required (sites often allow normal HTTP access if the browser does not support SSL, but any transactions are at the user's risk). The two most popular Web browsers, Netscape Navigator and Microsoft Internet Explorer, both support SSL. Today, SSL is primarily used for transmitting private documents or securing transactions over the Internet. SSL has been used mainly for HTTP access, although Netscape has stated an intention to employ it for other application types, such as NNTP and Telnet, and there are several free implementations available on the Internet. Many e-commerce Web sites use SSL to provide secure connections for transferring credit card numbers and other sensitive user data. IBM uses SSL to secure TN3270 sessions. As an example, an SSL session using HTTP is initiated as follows:

-

The user requests a document using the special URL prefix https:, either by typing it into the browser's address field or by clicking on a link (e.g., https://www.testmysecurity.com).

-

The client (an SSL-enabled browser) recognizes the SSL request and establishes a connection through TCP port 443 to the SSL-enabled Web server.

-

The client then initiates the SSL handshaking phase, using the SSL Record Protocol as a carrier. At this point there is no encryption or integrity checking built into the connection.

An SSL session maintains two kinds of state: session state and connection state. The SSL Handshake Protocol coordinates the states of the client and the server. In addition, read and write states are defined to coordinate encryption in accordance with the change cipher spec messages. SSL operates in two phases, as follows:

-

Phase 1—negotiation and authentication: During this phase the server authenticates itself to the client (and, optionally, the client to the server), and the SSL Handshake Protocol negotiates the cipher suite to be used, that is, the cryptographic algorithms and the Key Exchange Algorithm (KEA), such as RSA or Diffie-Hellman (D-H). The SSL specifications support a large number of encryption, hash, and digital signature algorithms, although most real implementations support only a few.

-

Phase 2—data: During this phase the raw data are encapsulated in a simple SSL encapsulation protocol (the SSL Record Protocol) and transmitted. The sender takes messages from upper-layer services, fragments them to manageable blocks, and optionally compresses the data. It then applies a Message Authentication Code (MAC), encrypts the data, and transmits the result to the Transport Layer. The receiver takes incoming data from the Transport Layer, decrypts these data, and verifies the data using the negotiated MAC key. It then decompresses the data (if compression was enabled), reassembles the message, and transmits the message to the appropriate upper-layer service.
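The Phase 2 sender and receiver steps can be sketched in Python. This is a simplified model under stated assumptions: it fragments to the 2^14-byte record limit used by SSLv3 and TLS and computes an HMAC-SHA256 tag over an implicit sequence number plus the fragment (TLS uses HMAC; SSLv3 used an earlier keyed-hash construction), but it omits compression, encryption, and the record header. The names `protect` and `unprotect` are hypothetical.

```python
import hashlib
import hmac

MAX_FRAGMENT = 2 ** 14                   # maximum plaintext bytes per record
MAC_LEN = hashlib.sha256().digest_size   # 32-byte tag

def _tag(mac_key: bytes, seq: int, fragment: bytes) -> bytes:
    # The 64-bit sequence number is MACed but never sent; both ends count records.
    return hmac.new(mac_key, seq.to_bytes(8, "big") + fragment, hashlib.sha256).digest()

def protect(message: bytes, mac_key: bytes, first_seq: int = 0) -> list:
    """Sender: fragment the message and append a MAC to each fragment."""
    records = []
    for i, offset in enumerate(range(0, len(message), MAX_FRAGMENT)):
        fragment = message[offset:offset + MAX_FRAGMENT]
        records.append(fragment + _tag(mac_key, first_seq + i, fragment))
    return records

def unprotect(records: list, mac_key: bytes, first_seq: int = 0) -> bytes:
    """Receiver: verify each record's MAC, then reassemble the message."""
    fragments = []
    for i, record in enumerate(records):
        fragment, tag = record[:-MAC_LEN], record[-MAC_LEN:]
        if not hmac.compare_digest(tag, _tag(mac_key, first_seq + i, fragment)):
            raise ValueError(f"MAC verification failed on record {i}")
        fragments.append(fragment)
    return b"".join(fragments)
```

Because the sequence number feeds the MAC but is never transmitted, a record that is replayed, dropped, or delivered out of order fails verification even if its bytes are untouched, which is one reason the record layer relies on a reliable transport beneath it.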

In practice SSL works well; the main problem with SSL is its use of certificates. The SSL authentication and key exchange algorithms are largely based on X.509 certificate techniques, and SSL therefore relies on interfacing with a PKI. Most current SSL implementations, however, are not integrated into a PKI and have no means to generate or retrieve certificates. It is the user's responsibility to check the certificate sent by a server manually, to ensure that the session is indeed connected to the intended server. If the certificate does not originate from the organization you are attempting to connect to, you should be suspicious, since this could indicate a man-in-the-middle attack (unfortunately, some companies use certificates issued to external organizations, which can be very misleading). Several technologies compete or overlap with SSL, such as IPSec, S-MIME, and SSH.
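The manual certificate check described above is what modern TLS libraries now automate. As a sketch using Python's standard `ssl` module (the host name in the usage note is a placeholder, and choosing TLS 1.2 as the minimum version is our assumption, not something the text prescribes), a client can refuse any server whose certificate chain does not lead to a trusted CA or whose certificate does not name the host being contacted:

```python
import socket
import ssl

def make_client_context() -> ssl.SSLContext:
    # Loads the platform's trusted CA certificates and turns on
    # certificate-chain validation (CERT_REQUIRED) and host-name checking.
    context = ssl.create_default_context()
    # Refuse SSLv2, SSLv3, TLS 1.0, and TLS 1.1 outright.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

def fetch_peer_certificate(host: str, port: int = 443) -> dict:
    """Connect, complete the handshake, and return the validated server certificate."""
    context = make_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        # server_hostname enables SNI and the host-name match against the certificate.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Calling `fetch_peer_certificate("www.example.com")` either returns the certificate's fields (such as `subject` and `notAfter`) or raises `ssl.SSLCertVerificationError` when the chain or host name does not check out, so the man-in-the-middle case is rejected before any application data is sent.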

Secure HTTP (S-HTTP)

S-HTTP was developed by Enterprise Integration Technologies (EIT), which was acquired by Verifone, Inc., in 1995. S-HTTP was designed to secure HTTP access; it is a superset of HTTP that provides a number of security features, including client/server authentication, spontaneous encryption, and request-response nonrepudiation. Like SSL, S-HTTP is used to secure Web-oriented transactions over the Internet, although SSL is much more commonly deployed. Whereas SSL creates a secure client/server connection over which any amount of data can be sent securely, S-HTTP is designed to transmit individual messages securely. SSL and S-HTTP can, therefore, be viewed as complementary rather than competing technologies. Both protocols have been approved by the IETF.

S-HTTP uses shared, private, or public keys to authenticate access and ensure confidentiality via encryption and digital signatures. The encryption and signature are controlled through a CGI script. Unfortunately, S-HTTP currently works only on SunOS 4.1.3, Solaris 2.4, Irix 5.2, HP-UX 9.03, DEC OSF/1, and AIX 3.2.4. Note that S-HTTP should not be confused with HTTPS.

SSH

SSH was developed by the Finnish company F-Secure (formerly Data Fellows) and essentially provides secure Telnet (and rsh) access, as well as secure file transfer (via SFTP or SCP) as a replacement for the insecure FTP protocol. SSH can also be used to create a local proxy for Internet services, providing a secure transmission tunnel for data and e-mail (e.g., POP, IMAP, SMTP). SSH protects TCP/IP-based terminal connections in UNIX, Windows, and Macintosh environments. Its primary use to date has been for secure remote device configuration. For example, many routers and firewalls have Web servers installed and are now remotely configurable via popular Web browsers; SSH provides a way of securing this Web-based configuration traffic. SSH requires client software to be installed, as well as the SSH server application. For further information, the interested reader is referred to [34].

Secure Multipurpose Internet Mail Extension (S-MIME)

MIME is the official proposed standard format for extended Internet e-mail. Internet e-mail messages comprise two parts: a header and a body. Secure Multipurpose Internet Mail Extension (S-MIME) provides a consistent way to send and receive secure MIME data through the use of digital signatures and encryption. S-MIME is similar in concept to SSL but is application specific. It can also be used to secure other applications (such as protecting Web pages or EDI messages). S-MIME provides the following cryptographic security services for electronic messaging applications:

-

Authentication

-

Message integrity and nonrepudiation of origin (via digital signatures)

-

Privacy and data security (via encryption).

S-MIME relies on public key technology and uses X.509 certificates to establish the identities of the communicating parties (the underlying MIME format is defined in RFC 1521). It is typically implemented in end systems and hosts, not in routers or firewalls.

Pretty Good Privacy (PGP)

PGP is a technique for encrypting messages developed by Philip Zimmermann. PGP is one of the most common ways to protect messages on the Internet, because it is effective, easy to use, and free. PGP is based on public key cryptography, which uses two keys: a public key that you disseminate to anyone from whom you want to receive a message, and a private key that you use to decrypt the messages you receive. To encrypt a message using PGP, you need the PGP encryption package, which is available for free from a number of sources; the official repository is at the Massachusetts Institute of Technology.
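The public/private key relationship that PGP depends on can be illustrated with textbook RSA. The sketch below uses the classic toy primes 61 and 53 so the arithmetic stays readable; it is deliberately insecure (real keys use primes hundreds of digits long, together with padding) and is not PGP's implementation. In practice PGP encrypts the message itself with a randomly generated symmetric session key and uses the recipient's public key only to encrypt that session key.

```python
def make_toy_keypair(p: int = 61, q: int = 53, e: int = 17):
    """Textbook RSA key generation with tiny primes (illustration only)."""
    n = p * q                  # public modulus
    phi = (p - 1) * (q - 1)    # Euler's totient of n
    d = pow(e, -1, phi)        # private exponent: e * d = 1 (mod phi); Python 3.8+
    return (n, e), (n, d)      # (public key, private key)

def encrypt(public_key, m: int) -> int:
    n, e = public_key
    if not 0 <= m < n:
        raise ValueError("message must be an integer in [0, n)")
    return pow(m, e, n)

def decrypt(private_key, c: int) -> int:
    n, d = private_key
    return pow(c, d, n)

public, private = make_toy_keypair()       # public = (3233, 17), private = (3233, 2753)
ciphertext = encrypt(public, 65)           # anyone with the public key can do this: 2790
plaintext = decrypt(private, ciphertext)   # only the private-key holder can undo it: 65
```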

Secure Electronic Transaction (SET)

The SET specifications emerged through an agreement by MasterCard International and Visa International to cooperate on the creation of a single electronic credit card system, enabling secure credit card transactions over the Internet. Prior to SET, each organization had proposed its own protocol and each had received support from a number of networking and computing companies. There are several major supporters of the SET specification (e.g., IBM, Microsoft, Netscape, and GTE). SET is a complex standard—for further information, see [14]; this site also maintains a list of cooperating organizations and companies and their status with regard to deploying SET.

IPSec

IP Security (IPSec) is a set of IETF standards for use with IPv4 and IPv6. IPSec provides a standards-based mechanism for protecting IP datagrams, using authentication, integrity, and privacy at the packet level. IPSec can be used to protect IP packets by tunneling them over untrusted networks such as the Internet. The integrity and authentication services are not provided using digital signatures; instead, they use a special form of keyed hash called HMAC (Keyed-Hashing for Message Authentication), which is essentially a form of digital signature built with symmetric key techniques, without the benefit of public-private key technology [35]. The result is carried in an Authentication Header (AH) inserted into the datagram. Confidentiality is provided by the IP Encapsulating Security Payload (ESP), which, in tunnel mode, encrypts the complete original datagram (payload and header) and prepends a new cleartext header. This makes IPSec extremely useful for creating virtual private networks, and there is now growing support from router, firewall, and some host vendors (such as Nortel, Cisco, RAD, Nokia, and Checkpoint).
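The keyed-hash integrity check described above can be sketched with Python's `hmac` module. The sketch assumes HMAC-SHA-1-96 (RFC 2404), one of the original IPSec authentication algorithms, which keeps only the first 96 bits of the HMAC output as the Integrity Check Value (ICV). It treats the protected bytes as already assembled, whereas real AH computes the ICV over the payload plus the IP header fields that do not change in transit.

```python
import hashlib
import hmac

ICV_LEN = 12  # HMAC-SHA-1-96 keeps the first 96 bits (12 bytes) of the 160-bit HMAC

def compute_icv(key: bytes, protected_bytes: bytes) -> bytes:
    """Sender: keyed hash over the protected data, truncated to 96 bits."""
    return hmac.new(key, protected_bytes, hashlib.sha1).digest()[:ICV_LEN]

def verify_icv(key: bytes, protected_bytes: bytes, received_icv: bytes) -> bool:
    """Receiver: recompute with the shared key and compare in constant time."""
    return hmac.compare_digest(compute_icv(key, protected_bytes), received_icv)
```

Both ends must already share `key` (in IPSec, through manual keying or a key-management protocol). Anyone without it can neither forge a valid ICV nor alter the packet undetected; however, unlike a public-key signature, either holder of the key could have produced the ICV, which is why HMAC gives integrity and authentication but not nonrepudiation.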

5.3.6 Firewalls

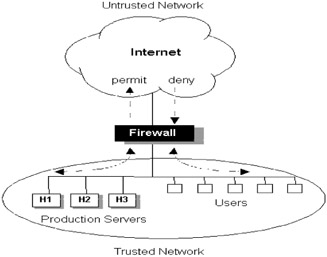

Firewalls are probably the most widely publicized security technique at present, and many networks have successfully installed first- or second-generation firewall technology. The classic definition of a firewall is a system that enforces security policy between a trusted internal network and an untrusted external network (such as the Internet), as illustrated in Figure 5.8. In this model we trust everybody on the internal network and nobody outside. Access to external resources may be permitted; access to internal resources is not.

Figure 5.8: Traditional firewall concepts.

Types of Firewalls

Firewalls have evolved from two different directions, and the technology is now finally starting to merge into a hybrid product. First, there are firewalls that have clearly been derived from router implementations. Second, there are firewalls that have evolved from host-gateway implementations, where standard server applications (such as the Telnet daemon on UNIX) have been modified to monitor and relay sessions for secure end-to-end communications. A new generation of products uses a technique called stateful inspection. This uses object-oriented techniques and builds dynamic data structures for flows through the firewall to model and control application behavior. For many network managers it is not a great leap to go from static filters (sometimes called Access Control Lists—ACLs) on routers to a more stateful rule base on a firewall.

Firewalls can be implemented either as an embedded application running on proprietary platforms (such as Cisco's PIX) or as a set of software modules running on general-purpose operating systems such as Windows, UNIX, or Linux (e.g., Checkpoint's FireWall-1). Firewall architectures can be broadly classified into three groups, as follows:

-

Packet-filtering routers/circuit-level gateways

-

Proxy servers/application gateways

-

Stateful firewalls

Each of these architectures has its own advantages and disadvantages, as discussed in the following text.

Packet-filtering routers

Routers have historically been convenient places to deploy security policy and offer several basic features of interest, including the following:

-

Route filtering—Controlling routing information is an important part of the security strategy, and some of the more advanced routing protocols provide features that can be used as part of a security strategy. You may be able to insert a filter on the advertised routes, so that specific routes are not advertised to parts of the network. Routing protocols such as OSPF and ISIS can authenticate other routers before they become neighbors. These authentication mechanisms are typically protocol specific and often quite weak, but they do help to improve network stability by preventing unauthorized routers or hosts from participating in the routing protocol.

-

Packet filtering—Many firewalls today are basically routers with advanced filtering techniques. Cisco's terminology for packet filters is Access Control Lists (ACLs), and this term is now commonly understood to be a generic term for packet filters across the industry. Packet filtering is commonly implemented in routers, mainly because the router is often located at positions in the network where traffic and administrative domains are joined. As part of the routing process, packets arriving at an interface are examined and the router compares data in the packet headers with a list of defined filtering rules and makes logical decisions regarding whether or not to forward the packet, discard it, and/or generate events.

When performing packet filtering, the following information is typically examined in IP packets:

-

Source IP address

-

Destination IP address

-

Source TCP/UDP port

-

Destination TCP/UDP port

-

Encapsulated protocol ID (TCP, UDP, IP tunnel, or ICMP)

-

ICMP message type

Many common IP services use well-known TCP and UDP port numbers, and it is often simple to allow or deny these services by configuring address and port information in the filter. For example, a Telnet server listens for connections on TCP port 23 (0x17). By setting a filter on port 23 for a specific interface, Telnet connections can be permitted or denied in either direction. This allows us, for instance, to implement a rule stating that Telnet connections to the Internet are allowed but Telnet connections from the Internet are not. In a generic filter syntax (the exact commands vary by vendor; Cisco IOS, for example, expresses such rules as access lists), this might read:

define filter 1 if 3 ip addr any tcp port 23 incoming action deny
define filter 2 if 3 ip addr any tcp port 23 outgoing action permit

Packet-filtering rules are relatively straightforward and can be used to implement part of a security policy. One of the problems with this approach, however, is the static nature of filters and the level of granularity offered. Application behavior can be very hard to capture in a static filter; some applications use ports dynamically (e.g., NFS, FTP, and TFTP), and some embed addresses in their payloads (such as NetBIOS). For example, NFS uses Remote Procedure Calls (RPCs), where each call can dynamically use a different port for each connection. With packet filters we are restricted to protocols up to the socket layer (transport interface). Setting custom filters that dig deeper into user data is not a good idea and is potentially very CPU intensive. This leads to another potential problem: performance. As the number of ACLs increases, performance is likely to degrade significantly on low-end systems, due to the time required to evaluate each rule in sequence. Once this becomes a real problem, it is time to invest in a more stateful firewall (or a router that maintains a connection table that can be hooked into the firewall function). Some routers implement custom code to deal with well-known attacks without sacrificing performance. For example, advanced filtering rules can check IP options, fragment offsets, and so on. There may also be specific features to handle SYN attacks (attacks that exploit the fact that TCP connection requests consume resources on hosts waiting for the three-way TCP handshake to complete).
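The first-match evaluation a packet-filtering router performs, and the linear cost just mentioned, can be sketched as follows. This is a hypothetical model rather than any vendor's filter engine: rules match only on direction, protocol, and ports (addresses are treated as "any", as in the Telnet example), and unmatched packets fall through to a default deny. The third rule shows the static-filter problem in miniature: to let replies to outbound Telnet back in, it must admit any incoming TCP packet with source port 23, including packets an attacker crafts with that source port.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Rule:
    action: str                      # "permit" or "deny"
    direction: str                   # "incoming" or "outgoing" on the filtered interface
    protocol: str = "any"            # "tcp", "udp", "icmp", or "any"
    src_port: Optional[int] = None   # None matches any source port
    dst_port: Optional[int] = None   # None matches any destination port

    def matches(self, pkt: dict) -> bool:
        return (self.direction == pkt["direction"]
                and self.protocol in ("any", pkt["protocol"])
                and self.src_port in (None, pkt.get("src_port"))
                and self.dst_port in (None, pkt.get("dst_port")))

def filter_packet(rules, pkt: dict, default: str = "deny") -> str:
    # Rules are evaluated in order and the first match wins, so the cost
    # grows linearly with the length of the rule list.
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default

TELNET_RULES = [
    Rule("deny", "incoming", "tcp", dst_port=23),    # no Telnet sessions from outside
    Rule("permit", "outgoing", "tcp", dst_port=23),  # Telnet to the outside is allowed
    Rule("permit", "incoming", "tcp", src_port=23),  # replies to outbound Telnet (and spoofed traffic!)
]
```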

Routers operate at the network level and cannot provide a complete solution. They are unable to provide security even for some of the most basic services and protocols. Routers have the following problems when deploying security:

-

Packet filters can be difficult to deploy, synchronize, and maintain in a large internetwork.

-

Routers access only a limited part of the packet's header information and therefore do not understand content. Applications above the socket layer are invisible to routers.

-

Router ACLs are static; they do not have the flexibility or knowledge to deal with dynamic changes in protocol or application states. To handle more complex, higher-level protocols and applications we need a more stateful monitoring of the traffic flows.

-

Routers do not provide the sophisticated event logging and alert mechanisms required for security audits.

Another potential pitfall of firewalls based on general-purpose packet filters is that they can inherit underlying bugs in the operating system (i.e., bugs that may not affect routing operations but can represent serious security flaws when used in a firewall context).

Proxy servers/application gateways

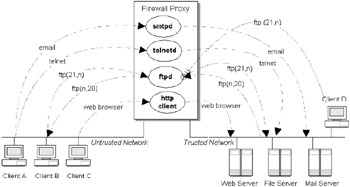

Proxies are usually host implementations of firewalls, comprising two or more network interfaces and supporting relay services for common applications in software. Their primary advantage is their statefulness. Proxies provide partial awareness of protocol states and full awareness of application states. Proxies are also capable of processing and manipulating information. Figure 5.9 illustrates the general model for a proxy. There are several disadvantages in using application-level proxies as firewalls, including the following:

-

Each new service requires its own proxy. This limits the ability to respond to new types of services and also severely limits scalability.

-

Proxies are not available for UDP, RPC, and other services from common protocol groups.

-

Implementation of a specific proxy server is resource intensive and limits performance.

-

Proxies may not be aware of issues in lower-level protocols and services.

-

Proxies are rarely transparent to users.

-

Proxies can be vulnerable to OS and application-level bugs (e.g., if a general-purpose operating system is used and has not been sufficiently tested/hardened for secure environments).

Figure 5.9: General model for a proxy server handling e-mail, Web, terminal, and file transfer traffic.

Proxies are effectively the first generation of true firewalls and for many years have been deployed successfully to protect users and services, especially when interfacing with the Internet. As application and traffic demands grow, their relative inflexibility, lack of transparency, and lack of scalability have forced users to look for more powerful hybrid solutions, such as those products supporting stateful technology.

SOCKS

SOCKS is often referred to as a circuit-level gateway, a more transparent version of a proxy where the user does not have to connect to the firewall first before requesting the second connection to the destination server. SOCKS is basically a set of library calls for use on a proxy server. This library must be compiled onto the SOCKS server to replace standard system calls. Special code is required for clients and a separate set of configuration profiles on the firewall. Content servers do not require any modification, since they are unaware that the connection requests are coming from the SOCKS server and not the client. The majority of Web browsers support SOCKS, and you can get SOCKS-enabled TCP/IP stacks for most platforms.

SOCKS operates transparently from the user's perspective. The client initiates a connection to the server using the server's IP address. In practice the session is directed through the SOCKS server, which validates the source address and userID and, if authorized, establishes a connection to the desired server transparently (the session is relayed via the SOCKS server using two sessions). The functionality offered by SOCKS depends on the version of software you are using. SOCKSv4 supports only outbound TCP sessions (it has weak authentication and is, therefore, inappropriate for sessions coming into a trusted network from an untrusted network). SOCKSv5 supports both TCP and UDP sessions and supports several authentication methods, including the following:

-

User name/password authentication

-

One-time password generators

-

Kerberos

-

Remote Authentication Dial-In User Services (RADIUS)

-

Password Authentication Protocol (PAP)

-

IPSec authentication method

SOCKSv5 also supports several encryption standards, including DES, Triple DES, and IPSec, and the tunneling protocols PPTP, L2F, and L2TP. SOCKSv5 also supports both the SKIP and ISAKMP/Oakley key management systems. The SOCKSv5 server listens for connections on port 1080 and supports both TCP and UDP connections. SOCKSv5 is appropriate for both outbound and inbound sessions. For additional information, refer to [36–39].
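The client side of the SOCKSv5 exchange is compact enough to sketch. The following builds the first two messages a client sends, following the message layout in RFC 1928 (the SOCKSv5 specification): a greeting that lists the authentication methods the client supports, then a CONNECT request naming the destination. The function names are hypothetical, and the sketch performs no network I/O; a real client would send these bytes over a TCP connection to the SOCKS server on port 1080 and parse its replies, including any authentication sub-negotiation.

```python
import ipaddress
import struct

SOCKS_VERSION = 0x05
METHOD_NO_AUTH = 0x00
METHOD_USERNAME_PASSWORD = 0x02
CMD_CONNECT = 0x01
ATYP_IPV4, ATYP_DOMAIN, ATYP_IPV6 = 0x01, 0x03, 0x04

def greeting(methods=(METHOD_NO_AUTH, METHOD_USERNAME_PASSWORD)) -> bytes:
    """Method-selection message: VER, NMETHODS, METHODS..."""
    return bytes([SOCKS_VERSION, len(methods), *methods])

def connect_request(host: str, port: int) -> bytes:
    """CONNECT request: VER, CMD, RSV, ATYP, DST.ADDR, DST.PORT (network byte order)."""
    try:
        ip = ipaddress.ip_address(host)
        atyp = ATYP_IPV4 if ip.version == 4 else ATYP_IPV6
        address = ip.packed
    except ValueError:
        # Not a literal IP address: send the name and let the SOCKS server resolve it.
        name = host.encode("idna")
        if len(name) > 255:
            raise ValueError("domain name too long for SOCKSv5")
        atyp = ATYP_DOMAIN
        address = bytes([len(name)]) + name
    return bytes([SOCKS_VERSION, CMD_CONNECT, 0x00, atyp]) + address + struct.pack("!H", port)
```

For `connect_request("example.com", 443)` the result is the bytes `05 01 00 03 0b`, followed by the 11 bytes of `example.com` and `01 bb` (port 443). Passing the name rather than a resolved address lets the SOCKS server perform the DNS lookup on the client's behalf.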

Stateful firewalls

Checkpoint Software Technologies [4] was the first vendor to produce a product employing so-called stateful inspection technology.

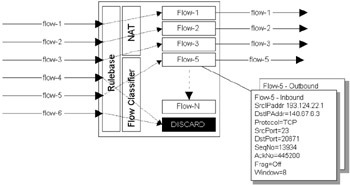

The stateful inspection module (implemented in the kernel to minimize context switches) accesses and analyzes data derived from all communication layers. This state and context information is cached and updated dynamically, providing valuable data for tracking both connection-oriented flows (e.g., TCP) and connectionless flows (from protocols such as RPC and those based on UDP). The conceptual architecture of such a system is illustrated in Figure 5.10. The ability to handle stateless services is a significant advantage over proxy servers. When used with a user-configured security rule set, this state information provides the necessary real-time information to make decisions about forwarding traffic, encrypting packets, and generating alarms and events. Any traffic not explicitly allowed by the security rules is discarded by default, and real-time security alerts are generated. This architecture is much more flexible and manageable than the proxy approach, since it takes a generic approach to content. There are advocates of the proxy approach who maintain that it is inherently more secure; however, the market is clearly indicating its preference, with Checkpoint Firewall-1 being the dominant firewall platform today by far.

Figure 5.10: Conceptual architecture of a stateful firewall.

In Figure 5.10, incoming traffic is compared with the rule base and, if acceptable, classified as a connection-oriented or connectionless flow/session based on features such as addresses and port numbers. Once classified, the flow is assigned resources (normally a data structure is created to maintain status information and counters for this flow). All activity on a flow is monitored and updated so that the state of the session is known at any particular time. The figure illustrates the type of information monitored for a Telnet session in Flow-5, including caching of sequence numbers to prevent session hijacking. If multiple firewalls are deployed in a clustered configuration, these session states need to be synchronized regularly.
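The flow table described for Figure 5.10 can be sketched as a dictionary keyed by the connection's addresses and ports. This is a deliberately reduced model under stated assumptions: it tracks only the client-to-server direction of a TCP flow, assumes the rule base has already accepted the opening SYN, and requires the exact next sequence number, whereas real stateful firewalls accept any sequence number inside the receiver's window and also track the reverse direction and connection teardown. The class and method names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Flow:
    next_seq: int      # sequence number expected on the next client segment
    packets: int = 0   # per-flow counters, as in the figure
    octets: int = 0

class ConnectionTable:
    def __init__(self):
        self.flows = {}

    def open(self, src, sport, dst, dport, isn: int) -> None:
        """Create state once the rule base has accepted a new TCP connection (SYN)."""
        # The SYN itself consumes one sequence number.
        self.flows[("tcp", src, sport, dst, dport)] = Flow(next_seq=isn + 1)

    def inspect(self, src, sport, dst, dport, seq: int, payload_len: int) -> str:
        flow = self.flows.get(("tcp", src, sport, dst, dport))
        if flow is None:
            return "drop: no matching flow"
        if seq != flow.next_seq:
            # A segment that does not continue the cached sequence is a typical sign
            # of an attempted session hijack (or a retransmission this toy ignores).
            return "drop: unexpected sequence number"
        flow.next_seq = seq + payload_len
        flow.packets += 1
        flow.octets += payload_len
        return "accept"
```

In a clustered deployment it is this `flows` dictionary (or its equivalent) that must be replicated between firewalls, since a peer without the entry would drop the session's next packet.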

Personal firewalls

The latest development in firewall technology is the migration of firewall software onto end systems such as laptops, workstations, and servers. Basic features include IP packet-filtering rules for inbound and outbound traffic (e.g., the administrator could define a policy that blocks all incoming connections to workstations), a database of predefined network services (such as SMTP e-mail, Windows file sharing, HTTP, and FTP), and IP packet logging for auditing and for possible use as evidence of attempted attacks. At present this technology is fairly immature, but it represents an interesting area for the future. Examples include McAfee's Personal Firewall [41] and F-Secure's Distributed Firewall [34]. Products are also available from Checkpoint [4].

Limitations of firewalls

While traditional firewalls are extremely useful security devices, they are point solutions; they do not scale well or provide complete integrity. There is currently a move to implement more pervasive and robust techniques under the umbrella of the PKI. It is important to recognize that the nature of security threats is dynamic, so a firewall is always potentially vulnerable to a new form of attack. The network administrator must be vigilant in examining all relevant event logs and alarms.

5.3.7 Virus protection systems

Viruses probably represent the most immediate security threat to internetworks. A virus is an executable program or code that typically attaches itself to benign objects and replicates itself. A useful database of virus types is maintained by F-Secure [42]. Virus types are generally categorized as follows:

-

Viruses are pieces of executable code that are self-replicating. They replicate by attaching to other executable code and so may be distributed throughout an internetwork environment rapidly by piggybacking on data transfers and downloads. Viruses are broadly divided into three classes: file infectors, boot sector viruses, and macro viruses.

-

Worms are programs that are specifically designed to search out vulnerable systems and then run on those systems, creating copies and spreading further to other systems. Worms do not require a host program to replicate, and most worms are network aware and do not require user invocation. The ILOVEYOU VBScript worm is an example of a less sophisticated form that required the user to activate an executable in the form of an e-mail attachment.

-

Trojan Horses are executable programs that typically perform some useful or amusing function, while also activating a more malicious function during execution. For example, this could be a game or animated cartoon that secretly destroys files or copies passwords, or a background program that scans the keyboard buffer looking for sensitive information such as credit card data. Perhaps the most infamous example of a Trojan Horse is Back Orifice [LOPHT].

-

Logic bombs are executable code that is dormant until activated by some event (such as a change in date). Once the trigger event has occurred, a virus or worm is typically activated. For example, destructive code could be buried unnoticed inside an OS, to be activated on a specific day of a specific year. There have been several well-publicized cases of disgruntled employees engineering such occurrences.

Part of the problem with viruses is that they are now far easier to write and distribute. Viruses are frequently transported in e-mail, most commonly as embedded Visual BASIC for Applications (VBA) macros or attachments to office documents in the form of ActiveX components, Visual BASIC Script (VBS), Java applets, and other forms of applets.

Special antivirus software is now very widely deployed. Most of these tools work by scanning files and e-mail attachments against a database of virus signatures (chunks of code associated with particular viruses). If a match is found, then the file is typically disinfected automatically (by removing the offending code). An important aspect of virus protection is that viruses mutate regularly, and therefore vendors of such products provide regular upgrades for the virus signature library, which must be downloaded frequently to maintain system integrity. There are two general approaches being offered in security solutions today, as follows:

-

Centrally managed at the point of ingress—Virus protection may be deployed at the public-private interface, either in dedicated standalone hardware or integrated with the firewall. All untrusted content passing through the firewall is vectored off to the screening device, where content is either disinfected or stripped away before forwarding on to the user.

-

End-user managed—Virus protection systems may also be deployed directly on end-system hardware, typically as a background application running on the user's desktop device.

The latter approach may be considerably more expensive, particularly for a large enterprise, since it relies on users to upgrade their virus libraries regularly. There are many vendors of antivirus software; examples include McAfee's VirusScan [41], F-Secure [34], and Norton's Antivirus [44].
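Signature scanning, as described above, reduces to searching content for known byte patterns. The sketch below is a naive illustration under stated assumptions: the signature names and byte patterns are invented, each signature is searched for separately (commercial engines use multi-pattern matching, wildcards, and heuristics), and "disinfection" simply cuts the matched bytes out, whereas real products attempt to restore the original host file.

```python
# Hypothetical signature database: name -> byte pattern associated with that virus.
SIGNATURES = {
    "Example.Dropper.A": bytes.fromhex("deadbeef0badf00d"),
    "Example.Macro.B": b"AutoOpen_ExampleMacroPayload",
}

def scan(data: bytes, signatures: dict = SIGNATURES) -> list:
    """Return the sorted names of all signatures found in the data."""
    return sorted(name for name, pattern in signatures.items() if pattern in data)

def disinfect(data: bytes, signatures: dict = SIGNATURES) -> bytes:
    """Naively strip every occurrence of each known signature."""
    for pattern in signatures.values():
        data = data.replace(pattern, b"")
    return data
```

The sketch also shows why signature updates matter: a mutated virus whose bytes no longer contain the stored pattern passes `scan` untouched until a new pattern is added to the database.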

5.3.8 URL protection systems

A URL protection system maintains a database of Web and FTP sites, classified according to a set of criteria (e.g., commercial enterprise, travel, and so on). Permissions are then set for users based on which sites may be accessed (typically the policy is to allow access to all sites except certain site types). The problem with this technology is that new sites appear daily, and a high level of manual investigation is required to classify them. As a consequence, maintaining the URL classification database is very labor intensive and not guaranteed to be foolproof. Users must also resynchronize their databases on a daily basis. URL scanners are typically deployed either on firewalls or closely coupled with them. New URL requests are intercepted and compared with the database, and either passed through transparently or rejected. Examples include Websense [45], Symantec I-Gear [44], and Content Technologies MIMEsweeper [46].
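The lookup step can be sketched as follows, under stated assumptions: the category database, domain names, and blocked category are hypothetical, and a host is classified by its most specific matching entry (so `www.casino.example` inherits the category of `casino.example`). Following the typical policy described above, anything not explicitly blocked, including unclassified sites, is allowed, which is precisely why newly created sites slip through until the database catches up.

```python
from urllib.parse import urlsplit

# Hypothetical classification database, maintained (largely by hand) by the vendor.
CATEGORY_DB = {
    "casino.example": "gambling",
    "travel.example": "travel",
    "news.example": "news",
}
BLOCKED_CATEGORIES = {"gambling"}

def categorize(host: str) -> str:
    """Return the category of the most specific matching domain, if any."""
    labels = host.lower().rstrip(".").split(".")
    for i in range(len(labels)):
        category = CATEGORY_DB.get(".".join(labels[i:]))
        if category is not None:
            return category
    return "unclassified"

def url_allowed(url: str, blocked=BLOCKED_CATEGORIES) -> bool:
    """Allow every request except those whose site falls in a blocked category."""
    host = urlsplit(url).hostname or ""
    return categorize(host) not in blocked
```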

5.3.9 E-mail protection systems

E-mail protection is closely linked with virus protection, although e-mail protection tools typically add protection against spam and the loss of proprietary information. By allowing only approved attachments and desired content to enter or leave the network, these products aim to prevent viruses from entering or leaving the network. Examples include Content Technologies MAILsweeper [46] and Symantec Mail-Gear [44].

5.3.10 Intrusion Detection Systems (IDS)

Intrusion Detection Systems (IDSs) are a complementary technology closely associated with firewalls; however, an IDS should only be deployed once a strong firewall policy and authentication processes are in place. Intrusion detection offers an added layer of security. If the firewall were represented by the checkout staff in a supermarket, then the IDS would be the plainclothes security staff patrolling the aisles searching for shoplifters. While firewalls are good at enforcing policy and handling specific security attacks on a protocol or session basis, they are not currently capable of identifying more sophisticated distributed or composite attacks. IDSs are designed to scan for both misuse attacks and anomalies, either at the packet level or within event logs generated by firewalls, using a combination of rule-based technology and artificial intelligence. For example, an IDS might be configured with a rule to scan for several different types of events over a specific time period. A more dynamic, learning IDS might scan events, classify them as interesting, anomalous, or threatening, and modify its own rule base on the fly. An important issue an IDS must deal with is the reporting of so-called false positives (metaphorically, crying wolf), since this could result in a form of denial of service or, at minimum, a loss of credibility for the IDS. Note that hackers are already mounting sophisticated attacks designed to create such problems.
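A rule that scans for several different types of events over a specific time period might be sketched like this. The event type names, the grouping by source address, and the 60-second window used in the usage note are assumptions chosen for illustration; the sketch expects timestamps to arrive in nondecreasing order for each source, and a real IDS would also suppress repeat alerts and tune thresholds to limit false positives.

```python
from collections import defaultdict, deque

class CompositeEventRule:
    """Alert when one source produces every required event type within a time window."""

    def __init__(self, required_types, window_seconds: float):
        self.required = set(required_types)
        self.window = window_seconds
        self.recent = defaultdict(deque)  # source -> deque of (timestamp, event_type)

    def observe(self, timestamp: float, source: str, event_type: str) -> bool:
        events = self.recent[source]
        events.append((timestamp, event_type))
        # Age out events that have fallen outside the sliding window.
        while events and timestamp - events[0][0] > self.window:
            events.popleft()
        seen = {etype for _, etype in events}
        return self.required <= seen
```

With `CompositeEventRule({"port_scan", "failed_login", "config_read"}, 60)`, each event alone looks innocuous; only all three from one source within 60 seconds triggers the alert, which is the kind of composite pattern that firewalls on their own tend to miss.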

Because of the processing power required, IDSs are typically deployed behind the firewall, running as a second-tier security barrier. Some IDSs are closely coupled with particular firewall technology, so that anomalous activity picked up by the IDS could, in theory, be fed back into the firewall rule base. A good IDS should warn you that an attack is taking place at the time of the attack, although many IDSs are much less proactive. This technology is relatively immature, and there is a clear requirement for IDS data collection points to be coordinated, since a standalone IDS is of little use in a large internetwork with multiple ingress and egress points. There is also potential value in integrating complementary data-gathering technologies, such as RMON, since one of the problems with IDS is capturing and coordinating data in real time from many data sources.

Honeypots and burglar alarms are really subsets of IDS functionality and are therefore described here briefly. A honeypot is typically a server dressed up to look interesting enough for a hacker to attempt access. For example, you could call your server finance.mycompany.com and then populate it with all sorts of interesting data. The idea is to entice the hacker in and then record all activity, in order to try to trace the source and assist any subsequent prosecution. A burglar alarm is a way of signaling that somebody is using a feature of a system that is not supposed to be used, thereby alerting the network staff to the possibility of an intruder. For example, a dummy user account may sound an alarm if it is used.
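A burglar alarm based on a dummy account reduces to watching the authentication log for any use of that account. The sketch below assumes a hypothetical one-line-per-attempt log format and hypothetical decoy account names; a real deployment would parse whatever format its systems actually produce.

```python
import re
from typing import Iterable, Iterator

# Hypothetical log format, one login attempt per line, e.g.:
#   2024-05-01T09:13:02 login user=backup_admin src=198.51.100.23 result=fail
LOGIN_LINE = re.compile(
    r"^(?P<ts>\S+) login user=(?P<user>\S+) src=(?P<src>\S+) result=(?P<result>\w+)$"
)

# Hypothetical decoy accounts that no legitimate user should ever touch.
DECOY_ACCOUNTS = frozenset({"backup_admin", "payroll_svc"})


def burglar_alarms(lines: Iterable[str], decoys=DECOY_ACCOUNTS) -> Iterator[str]:
    """Yield an alarm message for every attempt, successful or not, on a decoy account."""
    for line in lines:
        match = LOGIN_LINE.match(line.strip())
        if match and match["user"] in decoys:
            yield (f"ALARM {match['ts']}: decoy account {match['user']!r} "
                   f"used from {match['src']} (result={match['result']})")


log = [
    "2024-05-01T09:12:44 login user=jsmith src=192.0.2.10 result=ok",
    "2024-05-01T09:13:02 login user=backup_admin src=198.51.100.23 result=fail",
]
for alarm in burglar_alarms(log):
    print(alarm)
```

Because no legitimate user has any reason to touch the decoy, even a failed attempt is worth reporting, and this kind of alarm produces very few false positives.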

Examples of commercial IDSs include Internet Security Systems (ISS) RealSecure [47], Intrusion Detection's Kane Security Monitor [48], Cisco's NetRanger [28], Network Associates' CyberCop [49], Axent Technologies' OmniGuard-Intruder Alert [50], Trusted Information Systems' Stalkers [51], and Advisor Technologies' event log analysis system [52]. There are also many noncommercial tools available, including SHADOW [6]. For further information on IDSs, the interested reader is referred to [6, 53].

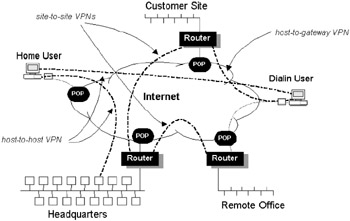

5.3.11 Virtual Private Network

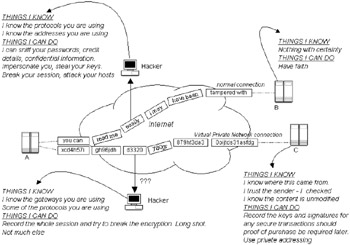

A Virtual Private Network (VPN) is generally characterized as an extension of an enterprise's private intranet across a public network, such as the Internet, creating a secure private pipe or private tunnel.

The term virtual means that the network is simulated to appear as a single continuous private entity, when in reality it may be a collection of many disparate interconnected networks and technologies. The term private means that information flowing over this virtual pipe is encapsulated, encrypted, and authenticated in such a way that it is impenetrable to hackers (i.e., data cannot be intercepted and decoded while in transit; at least, that is the theory). In practice, VPNs may be established between any intelligent network devices, even between two laptop computers (equipped with appropriate protocol support). Confidentiality may also extend beyond the user data: VPN tunneling enables internal addressing details and application protocols to be hidden.

Figure 5.11 illustrates the key differences between a normal user connection and a VPN connection, and the information and actions available to a malicious user in each case. In Figure 5.11, Host A has a normal connection to Host B (e.g., a standard TCP session) and a VPN connection to Host C. The VPN connection is encrypted and authenticated and may or may not use tunneling techniques to hide the original site details and protocols. Anybody with access to an Internet backbone or ISP link can retrieve confidential data, keys, and passwords from a standard session, replay the session, mount a man-in-the-middle attack, and retrieve sensitive site topology information. A VPN is designed to protect against all of these exposures.

Figure 5.11: VPN and normal session characteristics.
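Two of the attacks listed above, altering and replaying a captured session, are what per-packet authentication with sequence numbers is meant to stop. The following Python sketch uses HMAC-SHA256 from the standard library and a sliding replay window modeled loosely on the IPsec ESP anti-replay mechanism (RFC 4303). It is an illustration only: it provides integrity and replay protection but no encryption, and it assumes both ends already share `key`.

```python
import hashlib
import hmac
import struct
from typing import Optional

TAG_LEN = 32  # HMAC-SHA256 tag length in bytes


def seal(key: bytes, seq: int, payload: bytes) -> bytes:
    """Sender: prepend a 64-bit sequence number, append an HMAC over both."""
    header = struct.pack("!Q", seq)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag


class Receiver:
    """Receiver: verify each packet's tag, then reject replays using a bitmap
    of recently seen sequence numbers (bit i set => highest - i was accepted)."""

    def __init__(self, key: bytes, window: int = 64) -> None:
        self.key = key
        self.window = window
        self.highest = 0  # highest sequence number accepted; senders start at 1
        self.bitmap = 0

    def open(self, packet: bytes) -> Optional[bytes]:
        """Return the payload if the packet is authentic and new, else None."""
        if len(packet) < 8 + TAG_LEN:
            return None
        header, payload, tag = packet[:8], packet[8:-TAG_LEN], packet[-TAG_LEN:]
        expected = hmac.new(self.key, header + payload, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None  # altered in transit, or forged without the key
        (seq,) = struct.unpack("!Q", header)
        if seq == 0:
            return None  # sequence numbers start at 1
        if seq > self.highest:  # newest packet so far: slide the window forward
            shift = seq - self.highest
            self.bitmap = ((self.bitmap << shift) | 1) & ((1 << self.window) - 1)
            self.highest = seq
            return payload
        offset = self.highest - seq
        if offset >= self.window or self.bitmap & (1 << offset):
            return None  # too old to judge, or already seen: treat as a replay
        self.bitmap |= 1 << offset  # late but new: accept and remember it
        return payload


key = bytes(32)  # placeholder shared secret, for illustration only
rx = Receiver(key)
pkt = seal(key, 1, b"GET /payroll")
print(rx.open(pkt))  # b'GET /payroll'
print(rx.open(pkt))  # None: the same packet replayed
```

The tag is checked before the window is updated, so forged packets cannot advance the window. A real IPsec implementation would typically also encrypt the payload and negotiate the key with IKE rather than assume it.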

Types of VPNs

VPNs can be broadly divided into two basic modes, dial or dedicated, as follows.

-

Dial VPNs offer remote access to intranets for mobile users and telecommuters. This is the most common form of VPN deployed today. Figure 5.12 illustrates the concept of a dial VPN for remote users. VPN access is outsourced: the service provider manages the modem banks to ensure reliable connectivity, while the organization manages intranet user authentication. In Figure 5.12, note that access is via local ISP PoPs (points of presence) and is, therefore, charged at local call rates regardless of end-to-end distance. Many VPNs can be set up in this way, all overlaying each other yet completely independent. For example, an enterprise could also have a site-to-site VPN to other customer offices and suppliers. Quality of service (throughput, delay, etc.) is not guaranteed at present. The VPN need not allow access to all of the HQ network; for specific customers, a DMZ (demilitarized zone) can be implemented at HQ to provide semitrusted access over the VPN. Roaming users and home users connect to HQ just as if they were directly attached.

Figure 5.12: Simple VPN deployment over the Internet.

-

Dedicated VPNs are typically implemented as high-speed connections between private sites, connecting multiple users and services. Customer premises equipment (CPE) devices such as routers and firewalls typically create the tunnels and enforce policy. Dedicated VPNs are typically employed to connect the intranet backbone to remote offices or extranet partners over the wide area network.
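The point made above about dial VPNs, that a VPN need not expose the whole HQ network and that semitrusted customers can be confined to a DMZ, amounts to a per-class access policy applied after user authentication. A minimal sketch follows; the address ranges and user classes are hypothetical, and a real VPN concentrator or firewall would express this in its own configuration language.

```python
import ipaddress

# Hypothetical HQ addressing plan, for illustration only.
HQ_INTRANET = ipaddress.IPv4Network("10.0.0.0/16")
HQ_DMZ = ipaddress.IPv4Network("172.16.50.0/24")

# Destinations each authenticated class of VPN user may reach (hypothetical).
POLICY = {
    "employee": [HQ_INTRANET, HQ_DMZ],  # roaming and home staff
    "partner": [HQ_DMZ],                # semitrusted customers and suppliers
}


def vpn_access_allowed(user_class: str, destination: str) -> bool:
    """Default deny: allow only destinations inside a network listed for the class."""
    dest = ipaddress.IPv4Address(destination)
    return any(dest in net for net in POLICY.get(user_class, []))


print(vpn_access_allowed("partner", "172.16.50.20"))  # True: inside the DMZ
print(vpn_access_allowed("partner", "10.0.12.7"))     # False: intranet is off limits
```

Unknown user classes fall through to an empty list and are denied, which is the safer default.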

Because VPN traffic shares the wide area media with traffic from many other users, it is typically authenticated, encrypted, and optionally encapsulated (tunneled) inside a Layer 3 protocol so that its contents are not readily available to those other users. Traffic from one virtual network connection passes alongside encrypted and unencrypted traffic from many other public or private networks over a common infrastructure (rather like ships in the night). Sophisticated VPN technologies (such as IPSec) exchange and frequently update encryption keys in a way that makes line tapping, man-in-the-middle attacks, and spoofing extremely difficult.
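Frequent key refresh can be illustrated by deriving a fresh traffic key for each epoch from a shared secret. The sketch implements HKDF (RFC 5869) with Python's `hmac` module and rekeys after a fixed number of packets; the `max_packets` limit and the epoch-label scheme are hypothetical. It is deliberately simplified: anyone who obtains the master secret can derive every epoch key, so it does not provide the forward secrecy that IKE can obtain by running fresh Diffie-Hellman exchanges when rekeying.

```python
import hashlib
import hmac

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869): extract a pseudorandom key, then expand it to `length` bytes."""
    if length > 255 * HASH_LEN:
        raise ValueError("HKDF output too long")
    prk = hmac.new(salt or bytes(HASH_LEN), ikm, hashlib.sha256).digest()  # extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand: T(i) = HMAC(PRK, T(i-1) | info | i)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


class SessionKeys:
    """Hypothetical policy: switch to a newly derived key every `max_packets` packets."""

    def __init__(self, master: bytes, max_packets: int = 1000) -> None:
        self.master = master
        self.max_packets = max_packets
        self.epoch = 0
        self.sent = 0
        self._key = self._derive()

    def _derive(self) -> bytes:
        # Each epoch uses a distinct "info" label, so each gets a different key.
        return hkdf_sha256(self.master, salt=b"", info=b"vpn-epoch-%d" % self.epoch)

    def next_packet_key(self) -> bytes:
        if self.sent >= self.max_packets:  # limit reached: rekey
            self.epoch += 1
            self.sent = 0
            self._key = self._derive()
        self.sent += 1
        return self._key


keys = SessionKeys(master=bytes(32), max_packets=2)  # placeholder master secret
used = [keys.next_packet_key() for _ in range(5)]
print(keys.epoch)  # 2: packets 1-2, 3-4, and 5 each used a different key
```

Limiting how much traffic any one key protects reduces what a cryptanalyst can gather against it, which is the motivation for frequent refresh.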

Issues with VPNs

VPNs are designed to resolve some of the key issues that occur when attempting to transfer data reliably over public networks such as the Internet and between end sites that may or may not run IP protocols or may have private (i.e., unregistered) IP addressing schemes. Some of these problems currently have solutions; others are still being worked on, as follows:

-

Protocol encapsulation: Although the Internet and many service provider backbones are based exclusively on IP, many enterprise networks still rely on protocols such as IPX, AppleTalk, and NetBEUI. To carry these protocols over an IP backbone, they must be encapsulated and decapsulated at the backbone ingress and egress points, until either protocol conversion is performed or a native IP stack is installed at the enterprise. Encapsulation adds header overhead and therefore increases packet sizes.

-

Address transparency: For non-IP enterprise networks, tunneling is possibly the best way to carry non-IP traffic over a pure IP core, since end-to-end addressing information is preserved but remains transparent to the core. Furthermore, many IP enterprises have private or unregistered IP addressing schemes that cannot directly interface with the core, and Network Address Translation (NAT) is only a partial solution.

-

Security: Any information passed over a public network such as the Internet is open to attack from malicious users or competitors. VPNs use encryption algorithms and public-private key techniques to assure data confidentiality and integrity, and strong authentication to establish trust.

-

Reliability: The Internet currently has no end-to-end quality of service (QoS), and for now we have to expect best-effort delivery in most situations. For many businesses this is simply not acceptable, and it is one of the key factors constraining VPN deployment. Holistic architectures, such as differentiated services, are required to assure end-to-end performance and reliability. Without QoS, many enterprises, especially those with mission- or time-critical applications, cannot migrate to full-scale VPN deployment. This is an area of intense research.

-

Management and maintenance: Diagnosing problems on a private network is hard enough; imagine what the task would be like over a VPN. VPNs running over the Internet could be extremely difficult to debug. There may be several technologies and service providers involved in an end-to-end VPN path, and cooperation between providers will be critical to avoid excessive downtime. Furthermore, with end-to-end VPNs (where the VPN is effectively invisible to the provider), providers may find it very difficult to engineer appropriate QoS or to provide any useful reports.
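Protocol encapsulation, address transparency, and the growth in packet size can all be seen in a small example. The sketch below wraps an inner IPv4 packet that uses private (RFC 1918) addresses inside a basic GRE header (RFC 2784) and an outer IPv4 header addressed between two public tunnel endpoints. GRE is used here only as a familiar stand-in for a tunneling protocol, the addresses are illustrative, and no encryption is applied; in a VPN the inner packet would additionally be protected (e.g., by IPsec). The outer 20-byte IPv4 header plus the 4-byte GRE header add 24 bytes, so on a path with a 1,500-byte MTU the largest inner packet that fits without fragmentation is 1,500 - 24 = 1,476 bytes.

```python
import ipaddress
import struct

GRE_PROTOCOL = 47        # IP protocol number assigned to GRE
ETHERTYPE_IPV4 = 0x0800  # GRE protocol-type value for an IPv4 payload


def internet_checksum(data: bytes) -> int:
    """Ones'-complement checksum (RFC 1071) over an even-length byte string."""
    total = sum(struct.unpack(f"!{len(data) // 2}H", data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipv4_header(src: str, dst: str, payload_len: int, protocol: int, ttl: int = 64) -> bytes:
    """Minimal 20-byte IPv4 header: no options, ID 0, no fragmentation flags."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 20 + payload_len,  # version 4 / IHL 5, TOS, total length
        0, 0,                        # identification, flags + fragment offset
        ttl, protocol, 0,            # TTL, protocol, checksum placeholder
        ipaddress.IPv4Address(src).packed,
        ipaddress.IPv4Address(dst).packed,
    )
    # Fill in the checksum field (bytes 10-11) computed over the whole header.
    return header[:10] + struct.pack("!H", internet_checksum(header)) + header[12:]


def gre_encapsulate(inner: bytes, tunnel_src: str, tunnel_dst: str) -> bytes:
    """Wrap `inner` in a basic GRE header (no optional fields) and an outer IPv4
    header; the inner packet's own addresses travel untouched inside the tunnel."""
    gre = struct.pack("!HH", 0, ETHERTYPE_IPV4)  # flags/version 0, protocol type
    outer = ipv4_header(tunnel_src, tunnel_dst, len(gre) + len(inner), GRE_PROTOCOL)
    return outer + gre + inner


# Usage: a packet between two private sites, carried across public tunnel endpoints.
data = b"x" * 100  # stand-in for a transport-layer segment
inner = ipv4_header("10.1.1.5", "10.2.2.9", len(data), protocol=17) + data
packet = gre_encapsulate(inner, "192.0.2.1", "198.51.100.1")
print(len(inner), len(packet))  # 120 144: 24 bytes of tunnel overhead
```

The core routes on the outer header alone, so it never needs to know about the private inner addresses. Carrying a non-IP protocol would mean placing that protocol's EtherType in the GRE protocol-type field instead of `ETHERTYPE_IPV4`.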
