WebSphere Connection Pool Manager

The WebSphere connection pool manager is an essential component in connecting your WebSphere-based applications to the application database. Essentially, the connection pool manager is the management construct in which your JDBC drivers operate.

The concept behind a pool manager is to provide you with a pool of already connected interfaces to a database or any remote system. Pooling is a key part of performance management. The reason is that connections and disconnections are expensive, both from a network and a system resource utilization point of view.

Each time a connection has to be established, there's overhead in opening a network connection from the client, opening a network connection to the server, logging into the remote system (in this case, a database), and, at the completion of the transaction, closing the connection.

The pool manager removes all the middle ground overhead by pre-establishing a defined number of connections to the database and leaving them open for the duration of the application server's uptime. When new application sessions or client transactions need to make SQL queries, your application code calls the pool manager classes rather than manually establishing a new connection, and you'll notice a great improvement in performance.

You'll also be able to stretch the longevity of your database platform by reducing its load. So, the pool manager is the governing logic within your application server JVM that controls the opening and closing of connections, pooled connection management, and other JDBC-related parameters.

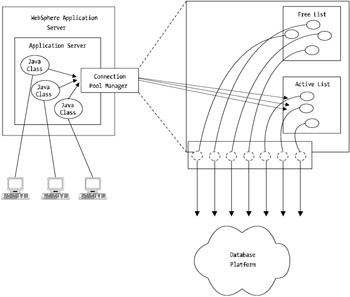

Figure 11-8 shows how a pooled connection infrastructure operates.

Figure 11-8: WebSphere pool manager connection architecture

Figure 11-8 shows a WebSphere application server, three active clients, an expanded view of the connection pool manager, and an interface layer to a database. In the figure, there are seven connections allocated to the JDBC connection pool, that is, the sum of all active and free connections. As you can see, all seven connections are actively connected to the database, but only three are "in use" by application clients.

If a fourth client logged in, a connection object from the free list would move to the active list, and the reference or handle to that connection object would be provided back to the client application. This is the basic form of a connection pool manager.
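The free-list and active-list behavior described above can be sketched in a few lines of Java. This is a minimal illustration only, not WebSphere's implementation: the class name `SimplePoolSketch` and its methods are hypothetical, and plain strings stand in for real JDBC connection objects.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.Set;

// Hypothetical sketch: strings stand in for pre-established connections.
class SimplePoolSketch {
    private final Deque<String> free = new ArrayDeque<>();
    private final Set<String> active = new HashSet<>();

    // Pre-establish every connection up front, as the pool manager does at startup.
    SimplePoolSketch(int size) {
        for (int i = 1; i <= size; i++) {
            free.push("conn-" + i);
        }
    }

    // Move a connection from the free list to the active list and hand back its handle.
    String acquire() {
        String handle = free.poll();
        if (handle == null) {
            throw new IllegalStateException("pool exhausted");
        }
        active.add(handle);
        return handle;
    }

    // Return a connection to the free list; it stays connected to the database.
    void release(String handle) {
        if (active.remove(handle)) {
            free.push(handle);
        }
    }

    int freeCount() { return free.size(); }
    int activeCount() { return active.size(); }

    public static void main(String[] args) {
        SimplePoolSketch pool = new SimplePoolSketch(7); // seven connections, as in Figure 11-8
        pool.acquire();
        pool.acquire();
        pool.acquire();
        System.out.println(pool.activeCount() + " active, " + pool.freeCount() + " free"); // 3 active, 4 free
        pool.acquire(); // a fourth client logs in
        System.out.println(pool.activeCount() + " active, " + pool.freeCount() + " free"); // 4 active, 3 free
    }
}
```

The point of the sketch is that acquiring and releasing only moves a handle between two lists; no connection is opened or closed on the request path.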

Within WebSphere, you have JDBC connection pool resources. Both WebSphere 4 and 5 have similar options that allow you to alter the characteristics of your JDBC connections. I touched on this topic in Chapters 5 and 6 when I discussed queuing models and how to set the JDBC minimum and maximum number of connections to your backend database within the bounds of a queuing model. Putting the queuing model aside for the moment, you'll now see the important settings for the JDBC connection pool manager.

Table 11-8 lists the main areas within the JDBC connection pool manager where configuration can be tuned to gain performance.

| Setting | Description |
|---|---|
| Minimum Connections | The initial startup (bootstrap) number of connections each instance of the JDBC pool manager will launch. |
| Maximum Connections | The maximum number of connections available to applications within the particular JDBC pool manager. |
| Connection Timeout | Interval after which an attempted connection to a database will timeout. |
| Idle Timeout | WebSphere 4 setting allowing you to tune how long an unallocated or idled connection will remain active before being returned to the free pool list. |
| Orphan Timeout | WebSphere 4 setting allowing you to configure how long an application can hold a connection open without using it before being returned to the free pool list. |
| Unused Timeout | WebSphere 5 setting, similar to the Idle Timeout setting for WebSphere 4. |
| Aged Timeout | WebSphere 5 setting to remove connections from the active list after a defined period of time. This value disregards any I/O and other settings. |
| Purge Policy | WebSphere 5 setting allowing you to define what will happen when a StaleConnectionException is received from the pool manager. |

You'll first explore the generic WebSphere settings.

General WebSphere JDBC Connection Pool Tuning

The three primary settings common to both WebSphere 4 and 5 are the following:

- Minimum Pool Size/Minimum Connections
- Maximum Pool Size/Maximum Connections
- Connection Timeout

Although these settings may appear to be simplistic from the outset, there are a number of hidden effects based on how you set them.

Minimum Pool Size

The Minimum Pool Size setting is the value for the startup minimum number of connections available in the pool. This is the current specification for this setting but may change in future releases of WebSphere. This setting provides the number of connections in the active pool at which the data source connection manager will stop cleaning up. Ultimately, after a period of time, or via thorough system modeling, you should be able to understand what this setting should be.

As a safe value, set this value to half the value of the Maximum Connections setting.

Maximum Pool Size

The Maximum Pool Size setting is a little more involved.

This setting needs to reflect a value that represents the maximum number of connections to the database, at any one time, from each application server or clone in your environment.

Don't confuse this with being a total number of connections to the database for your particular application servers. Each application server or application server clone will instantiate its own instance of the JDBC connection pool associated with your defined context (in other words, the JNDI context associated with each particular JDBC data source). Therefore, if you have a JDBC connection pool or data source with 30 connections as a maximum, each of your application servers or application server clones will obtain an instance of the JDBC connection pool or data source with 30 connections maximum.

That means, an application with four application server clones would have a maximum of 120 concurrent database connections available to it.
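The arithmetic is simple but worth making explicit when you size the database side. The following sketch uses a hypothetical helper, `maxDatabaseConnections`, to reproduce the example from the text.

```java
// Hypothetical helper: worst-case database connections for one data source.
class PoolFootprint {
    // Every clone gets its own pool instance, so the per-pool maximum multiplies.
    static int maxDatabaseConnections(int maxPoolSizePerClone, int cloneCount) {
        return maxPoolSizePerClone * cloneCount;
    }

    public static void main(String[] args) {
        // The example from the text: a maximum of 30 connections, four clones.
        System.out.println(maxDatabaseConnections(30, 4)); // prints 120
    }
}
```

Your database must be configured to accept at least this many concurrent sessions from the application tier.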

This is an important point. As a rule of thumb, try not to exceed 75 connections per application server unless you have very small and very short transactions (in other words, a low transaction characteristic). The reason is this: Each connection in the data source requires a thread. If you remember from earlier chapters, some equations relate to how many threads should be active per application server per CPU. Therefore, if you have an application server requiring more than 100 connections concurrently, this would require more than 100 threads to be configured for the application server container.

More than 100 threads on a single JVM isn't a bad thing; however, if you have heavyweight transactions, then this is going to cause you grief unless you can spread out your load (distribute it) via more clones and application servers.

Therefore, follow the rule of thumb that states each application server instance (clone) will have a maximum of 75 to 100 JDBC connections, with each application server instance (clone) having 75 to 100 threads active. Therefore, more than 100 threads means you need to distribute your load more effectively with more clones.

| Note | This is a rule of thumb; there will be instances where this rule doesn't apply. Use this as a guide where other modeling doesn't suggest otherwise. |

You should also ensure that you don't hit deadlocks in your JVM by ensuring that there are always sufficient threads available. Because each active JDBC connection consumes a thread, if you have 50 threads and try to get 50 JDBC connections concurrently, your JVM will suffer a deadlock and start to fail. IBM recommends always setting the number of threads to be one more than the JDBC Maximum Connections setting. However, I recommend you set the number of threads to be 10 percent higher than the JDBC Maximum Connections setting.

This caters to any unforeseen or loose threads within your JVM. Therefore, for an application server that has a maximum connection pool of 75, your JVM Thread setting should be approximately 82.
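The two sizing rules can be compared side by side. This is a sketch with hypothetical method names; it rounds the 10 percent headroom down, which is an assumption that happens to match the figure of approximately 82 given above.

```java
// Hypothetical helpers comparing the two thread-sizing rules from the text.
class ThreadSizing {
    // IBM's guidance as described above: one more thread than Maximum Connections.
    static int ibmMinimumThreads(int maxConnections) {
        return maxConnections + 1;
    }

    // The 10 percent headroom rule; integer division rounds the headroom down.
    static int tenPercentHeadroomThreads(int maxConnections) {
        return maxConnections + maxConnections / 10;
    }

    public static void main(String[] args) {
        int maxConnections = 75;
        System.out.println(ibmMinimumThreads(maxConnections));         // prints 76
        System.out.println(tenPercentHeadroomThreads(maxConnections)); // prints 82
    }
}
```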

Connection Timeout

Connection Timeout is a setting that helps to ensure your application can be coded to handle poorly performing database servers. This setting should be set to a relatively low value of between 10 and 30 seconds so that end user response times aren't exceptionally high.

The default setting is 180 seconds, which is way too long for an end user to be expected to sit and wait. If your connection to the database times out after 180 seconds, then you have bigger issues on your hands than a high (or low) Connection Timeout setting!

As a guide, don't set this value to be less than 10 seconds. There may be some form of deadlock occurring within a database that can take a few seconds to clear. If this happens, you don't want your connection to time out after a few seconds because this can overload the database and the current problems it may be trying to resolve.

WebSphere 4 JDBC Connection Pool Tuning

Table 11-8 lists two specific WebSphere 4 settings that you can tune to optimize the performance of the data source:

- Idle Timeout
- Orphan Timeout

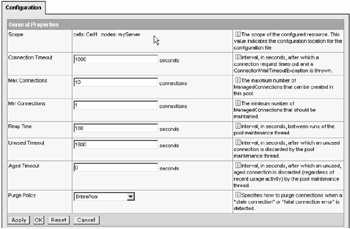

You'll now look at these two settings in more detail. Figure 11-9 shows where you configure the JDBC connection pool.

Figure 11-9: Configuring the WebSphere 4 pool manager connection

Idle Timeout

The Idle Timeout setting allows you to tune the JDBC connection pool data source so that connections that are idle or unallocated can be returned to the pool for another process to use. This setting helps to ensure that there are sufficient free connections available in the pool for your applications to use, rather than allowing connections to remain open and wait for activity. Occasionally, you may want long-standing connections to remain active; however, database accesses aren't asynchronous, so you should expect response times within a minute for online-based applications, even for large queries.

Idle Timeout should be set higher than your Connection Timeout value yet lower than your Orphan Timeout value. As a guide, this setting should be between two times and three times the value of the Connection Timeout setting. This provides a proportionally set value for idle timeouts, based on your perceived (or modeled) connection startup (timeout) time.

Also consider tuning this value if you notice that active connections are starting to build up in the pool manager that don't reflect the number of concurrent users on your systems or the SQL query rate isn't representative of the number of active connections.

Orphan Timeout

The Orphan Timeout setting allows you to tune the data source to cater for connections that are no longer owned by application components. If, for example, a particular thread within an application fails and doesn't call a close() method, the connection may be left in an open state, with no "owner." This typically only applies to BMP and native JDBC-based applications. One of the benefits of CMP is that the container will manage the handlers between connection and application clients quite well (for more than 90 percent of all situations from my experience).

This state is somewhat analogous to the Zombie Process state that can occur in Unix where processes are detached from parent processes because of a crash or failure.

This setting allows you to reclaim connections back into the free list pool that may be also left over after poorly written application code. If developers aren't closing off their connections properly, or there are other problems with their data source binding application code, this value can help to alleviate wasted resources (but it's no substitute for having your developers fix the root problem!).
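On the application side, the root fix is to close the connection in a finally block so that it returns to the pool even when the work fails. The sketch below shows that pattern against the standard `javax.sql.DataSource` interface. The `ConnectionHygiene` class, the `Work` interface, and the `stubDataSource` helper are hypothetical; the stub exists only so the example runs without a database, and in WebSphere you would obtain the data source through a JNDI lookup instead.

```java
import java.lang.reflect.Proxy;
import java.sql.Connection;
import java.sql.SQLException;
import java.util.concurrent.atomic.AtomicInteger;
import javax.sql.DataSource;

class ConnectionHygiene {
    // Hypothetical unit of database work.
    interface Work {
        void run(Connection conn) throws SQLException;
    }

    // Always close the connection, even when the work throws,
    // so that the pool never has to reclaim it as an orphan.
    static void withConnection(DataSource ds, Work work) throws SQLException {
        Connection conn = ds.getConnection();
        try {
            work.run(conn);
        } finally {
            conn.close(); // with a pooled data source this returns the connection to the free list
        }
    }

    // Test stub (an assumption for this sketch): a fake data source that counts close() calls.
    static DataSource stubDataSource(AtomicInteger closes) {
        ClassLoader loader = ConnectionHygiene.class.getClassLoader();
        Connection conn = (Connection) Proxy.newProxyInstance(
                loader, new Class<?>[] {Connection.class},
                (proxy, method, args) -> {
                    if (method.getName().equals("close")) {
                        closes.incrementAndGet();
                    }
                    return null;
                });
        return (DataSource) Proxy.newProxyInstance(
                loader, new Class<?>[] {DataSource.class},
                (proxy, method, args) -> method.getName().equals("getConnection") ? conn : null);
    }

    public static void main(String[] args) {
        AtomicInteger closes = new AtomicInteger();
        DataSource ds = stubDataSource(closes);
        try {
            withConnection(ds, conn -> { throw new SQLException("simulated query failure"); });
        } catch (SQLException expected) {
            // The failure still propagates to the caller.
        }
        System.out.println("close() calls: " + closes.get()); // prints close() calls: 1
    }
}
```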

As a guide, this setting should be twice that of the Idle Timeout setting to ensure that all connections and transactions can take place within sufficient time while not impacting application processing and limiting connection and query failure prematurely.
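The WebSphere 4 guidelines chain together: Connection Timeout drives Idle Timeout, which in turn drives Orphan Timeout. The sketch below works through one example in seconds. The class and method names are hypothetical, and restricting the multiplier to a whole number (2 or 3) is a simplification of the two-to-three-times guideline.

```java
// Hypothetical helpers for deriving WebSphere 4 timeouts from the guidelines in the text.
class Was4Timeouts {
    // Idle Timeout: two to three times the Connection Timeout.
    static int idleTimeout(int connectionTimeoutSeconds, int multiplier) {
        if (multiplier < 2 || multiplier > 3) {
            throw new IllegalArgumentException("multiplier must be 2 or 3");
        }
        return connectionTimeoutSeconds * multiplier;
    }

    // Orphan Timeout: twice the Idle Timeout.
    static int orphanTimeout(int idleTimeoutSeconds) {
        return idleTimeoutSeconds * 2;
    }

    public static void main(String[] args) {
        int connectionTimeout = 20;                  // within the recommended 10 to 30 seconds
        int idle = idleTimeout(connectionTimeout, 3);
        int orphan = orphanTimeout(idle);
        System.out.println("Idle Timeout: " + idle);     // prints Idle Timeout: 60
        System.out.println("Orphan Timeout: " + orphan); // prints Orphan Timeout: 120
    }
}
```

Treat the results as starting points to validate under load, not as final values.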

WebSphere 5 JDBC Connection Pool Tuning

WebSphere 5 introduced and replaced some of the WebSphere 4 JDBC connection pool settings. It's possible, and required under some circumstances, to use the version 4 data source structure, especially for EJB 1.1 beans.

However, for EJB 2.0 and other compliant J2EE components that can use the newer version 5 JDBC pool manager, there are three new settings that can help increase performance of your database accesses. Figure 11-10 shows the JDBC data source configuration area within WebSphere 5.

Figure 11-10: WebSphere 5 Pool Manager Connection dialog box

As you can see, the configuration window for WebSphere 5 is somewhat different from that in version 4. The new configuration settings available for WebSphere 5 are as follows:

- Unused Timeout
- Aged Timeout
- Purge Policy

You'll now look at each of those in more detail.

Unused Timeout

The Unused Timeout setting gives you the ability to set a timeout for when an unused or unallocated connection is returned to the free pool list. This setting is almost identical to the WebSphere 4 Idle Timeout value. For that reason, I recommend you use the same principles to configure it. That is, set this value to be two to three times that of your Connection Timeout value.

Consider tuning this value if you notice that active connections are starting to build up in the pool manager that don't reflect the number of concurrent users on your systems or the SQL query rate isn't representative of the number of active connections.

Aged Timeout

The Aged Timeout setting is new in WebSphere 5. Although it's similar to the Orphan Timeout setting in WebSphere 4, it provides greater scope to clean out any unwanted or old connections. However, you can use the same policy as a guide for this setting. Set Aged Timeout to be two times that of the Unused Timeout value.

If you're changing this setting "cold turkey," be sure to monitor it carefully. Unlike the Orphan Timeout setting, the Aged Timeout setting will kill off a connection, regardless of its state, after the defined interval has been reached.

You want to avoid having large transactions terminated by this setting. A backup litmus test for setting this value is to understand what length of time the largest transaction will take to complete in your environment. Although it's a hard item to measure (many variables can affect it), it should help you understand what the value should be; set the Aged Timeout to be two times the value of the longest transaction you may have during peak load.

Your Stress and Volume Testing (SVT) should be able to help you understand this value.
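The two Aged Timeout guidelines (twice the Unused Timeout, and twice the longest transaction at peak load) can disagree. One way to reconcile them, sketched below, is to take the larger of the two so that no long transaction is terminated. That combination is an assumption of this sketch rather than a rule stated above, and the class and method names are hypothetical. All values are in seconds.

```java
// Hypothetical helpers for deriving WebSphere 5 timeouts from the guidelines in the text.
class Was5Timeouts {
    // Unused Timeout: two to three times the Connection Timeout.
    static int unusedTimeout(int connectionTimeoutSeconds, int multiplier) {
        if (multiplier < 2 || multiplier > 3) {
            throw new IllegalArgumentException("multiplier must be 2 or 3");
        }
        return connectionTimeoutSeconds * multiplier;
    }

    // Aged Timeout: assumed here to be the larger of the two guideline values.
    static int agedTimeout(int unusedTimeoutSeconds, int longestTransactionSeconds) {
        return Math.max(unusedTimeoutSeconds * 2, longestTransactionSeconds * 2);
    }

    public static void main(String[] args) {
        int unused = unusedTimeout(20, 3);            // 60
        System.out.println(agedTimeout(unused, 30));  // prints 120 (Unused Timeout rule dominates)
        System.out.println(agedTimeout(unused, 90));  // prints 180 (longest transaction dominates)
    }
}
```

The longest-transaction figure should come from your SVT measurements, not from an estimate.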

Purge Policy

The Purge Policy setting is another new WebSphere 5 setting. It provides an additional WebSphere-based controlling mechanism to handle StaleConnectionExceptions and FatalConnectionExceptions.

This setting allows you to reset all your connections in your pool manager quickly when there are critical problems on your backend database. You need to be careful of this setting. There are two options:

- Purge Entire Pool
- Purge Failing Connection Only

You need to be aware that, with Purge Entire Pool, if a single connection attempt throws a StaleConnectionException (meaning that there are no new connection threads available), all existing connections not in use will be dropped from the pool's concurrently active list. There is, however, a chance that active connections may be dropped prematurely.

This may be helpful for situations where you want to restart all queries from scratch when there may be a number of malformed connections to the database. If, for example, connections are building up and new connections are timing out and receiving StaleConnectionException messages, setting this value to Purge Entire Pool allows your pool manager to start from a "clean slate."

All connection requests after a purge need to issue a new getConnection() call to the data source provider. This ensures that only those requests that are required will re-establish themselves to the database.

This setting has pros and cons. It gives you the ability to have WebSphere reset all connections (new attempts and actives) in the event that there are resource exhaustion problems with the database, network, or some other component that cause a banking of database connections. Yet, it also has a potential of starting a flip-flop effect where subsequent database connection requests are met with exceptions, and a continuous purging cycle starts.

By setting the value to Purge Failing Connection Only, you may just be hiding the larger problem. If this setting is configured, you'll find that as each connection met with an exception is purged, additional connection requests will potentially be thrown exceptions, causing the cleanup period to be exaggerated from subseconds to potentially seconds or even minutes.

I recommend staying with the default setting of Purge Entire Pool unless you have a specific application need to only reset the active connection (for example, long multiphase transactions).
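The difference between the two options can be modeled in a few lines. This is a deliberately simplified sketch, not WebSphere's behavior in full: the enum, class, and method names are hypothetical, strings stand in for pooled connections, and only the pooled (not in use) connections are modeled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical model of the two purge options.
class PurgePolicySketch {
    enum PurgePolicy { ENTIRE_POOL, FAILING_CONNECTION_ONLY }

    // Returns the pooled connections that survive after one connection is reported stale.
    static List<String> onStaleConnection(List<String> pooled, String failing, PurgePolicy policy) {
        List<String> survivors = new ArrayList<>();
        if (policy == PurgePolicy.FAILING_CONNECTION_ONLY) {
            for (String conn : pooled) {
                if (!conn.equals(failing)) {
                    survivors.add(conn); // only the failing connection is dropped
                }
            }
        }
        // ENTIRE_POOL: nothing survives, so every later request must reconnect.
        return survivors;
    }

    public static void main(String[] args) {
        List<String> pooled = Arrays.asList("conn-1", "conn-2", "conn-3");
        System.out.println(onStaleConnection(pooled, "conn-2", PurgePolicy.ENTIRE_POOL));
        // prints []
        System.out.println(onStaleConnection(pooled, "conn-2", PurgePolicy.FAILING_CONNECTION_ONLY));
        // prints [conn-1, conn-3]
    }
}
```

With the entire pool purged, the reconnect cost is paid once and up front; with single-connection purging, each remaining bad connection is discovered one exception at a time.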
