Section 6.5. VFS System Calls and the Filesystem Layer

Until this point, we covered all the structures that are associated with the VFS and the page cache. Now, we focus on the system calls used in file manipulation and trace their execution down to the kernel level. We see how the open(), close(), read(), and write() system calls make use of the structures previously described.

We mentioned that in the VFS, files are treated as complete abstractions. You can open, read, write, or close a file, but the specifics of what physically happens are unimportant to the VFS layer. Chapter 5 covers these specifics.

Hooked into the VFS is the filesystem-specific layer that translates the VFS' file I/O to pages and blocks. Because you can have many specific filesystem types on a computer system, like an ext2-formatted hard disk and an iso9660 CD-ROM, the filesystem layer can be divided into two main sections: the generic filesystem operations and the specific filesystem operations (refer to Figure 6.3).

Following our top-down approach, this section traces a read and write request from the VFS call of read() or write() through the filesystem layer until a specific block I/O request is handed off to the block device driver. In our travels, we move between the generic filesystem layer and the specific filesystem layer. We use the ext2 filesystem driver as the example of the specific filesystem layer, but keep in mind that different filesystem drivers could be accessed depending on which file is being acted upon. As we progress, we will also encounter the page cache, a construct within Linux that is positioned in the generic filesystem layer. Older versions of Linux had both a buffer cache and a page cache, but in the 2.6 kernel, the page cache has absorbed the buffer cache's functionality.

6.5.1. open()

When a process wants to manipulate the contents of a file, it issues the open() system call:

-----------------------------------------------------------------------
synopsis
#include <sys/types.h>
#include <sys/stat.h>
#include <fcntl.h>

int open(const char *pathname, int flags);
int open(const char *pathname, int flags, mode_t mode);
int creat(const char *pathname, mode_t mode);
-----------------------------------------------------------------------

The open syscall takes as its arguments the pathname of the file, the flags that identify the access mode of the file being opened, and the permission bit mask (if the file is being created). open() returns the file descriptor of the opened file if successful. On failure, the system call itself returns a negative error code, which the C library wrapper converts into a return value of -1 with errno set.

The flags parameter is formed by bitwise ORing one or more of the constants defined in include/linux/fcntl.h. Table 6.9 lists the flags for open() and the corresponding value of each constant. Exactly one of the O_RDONLY, O_WRONLY, or O_RDWR flags must be specified; the additional flags are optional.
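As a user-space illustration of the flag rules above, the following sketch builds a flags word from exactly one access mode plus optional flags, and then recovers the access mode by masking with O_ACCMODE. It uses only the standard <fcntl.h> constants; the helper names create_flags() and access_mode_name() are hypothetical.

```c
#include <assert.h>
#include <fcntl.h>

/* Build a flags word for creating/truncating a file: exactly one
 * access mode, ORed with optional flags. */
int create_flags(int access)
{
    assert(access == O_RDONLY || access == O_WRONLY || access == O_RDWR);
    return access | O_CREAT | O_TRUNC;
}

/* Recover the access mode from a flags word. O_ACCMODE masks off
 * the optional flags such as O_CREAT, O_TRUNC, or O_APPEND. */
const char *access_mode_name(int flags)
{
    switch (flags & O_ACCMODE) {
    case O_RDONLY: return "O_RDONLY";
    case O_WRONLY: return "O_WRONLY";
    case O_RDWR:   return "O_RDWR";
    default:       return "invalid";
    }
}
```

A caller would pass something like create_flags(O_WRONLY) to open() together with a permission mask such as 0644, which is the three-argument form shown in the synopsis.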

Let's look at the system call:

-----------------------------------------------------------------------
fs/open.c
927 asmlinkage long sys_open(const char __user * filename, int flags, int mode)
928 {
929   char * tmp;
930   int fd, error;
931
932 #if BITS_PER_LONG != 32
933   flags |= O_LARGEFILE;
934 #endif
935   tmp = getname(filename);
936   fd = PTR_ERR(tmp);
937   if (!IS_ERR(tmp)) {
938     fd = get_unused_fd();
939     if (fd >= 0) {
940       struct file *f = filp_open(tmp, flags, mode);
941       error = PTR_ERR(f);
942       if (IS_ERR(f))
943         goto out_error;
944       fd_install(fd, f);
945     }
946 out:
947     putname(tmp);
948   }
949   return fd;
950
951 out_error:
952   put_unused_fd(fd);
953   fd = error;
954   goto out;
955 }
-----------------------------------------------------------------------

Lines 932-934: Check whether this is a non-32-bit system. If so, set the large file support flag O_LARGEFILE. This allows the function to open files whose sizes cannot be represented in 31 bits.

Line 935: The getname() routine copies the filename from user space to kernel space by invoking strncpy_from_user().

Line 938: The get_unused_fd() routine returns the first available file descriptor (that is, an index into the fd array, current->files->fd) and marks it busy. The local variable fd is set to this value.

Line 940: The filp_open() function performs the bulk of the open syscall work and returns the file structure that will associate the process with the file.
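sys_open() never receives a separate error flag from getname() or filp_open(): both return a pointer that may instead encode a negative errno, which is tested with IS_ERR() and decoded with PTR_ERR(). The following user-space sketch re-creates that convention. The names err_ptr(), is_err(), ptr_err(), demo_lookup(), and demo_open() are hypothetical, and the bound of 4,095 error codes is an assumption of this sketch (it matches what later kernels call MAX_ERRNO; the exact bound in this kernel version may differ).

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

/* Assumed bound: the top 4095 addresses never hold a valid object,
 * so a pointer in that range can carry a negative errno instead. */
#define SKETCH_MAX_ERRNO 4095

static inline void *err_ptr(long error)
{
    assert(error < 0 && error >= -SKETCH_MAX_ERRNO);
    return (void *)(intptr_t)error;
}

static inline long ptr_err(const void *ptr)
{
    return (long)(intptr_t)ptr;
}

static inline int is_err(const void *ptr)
{
    return (uintptr_t)ptr >= (uintptr_t)-SKETCH_MAX_ERRNO;
}

/* Hypothetical lookup in the style of filp_open(): a real pointer on
 * success, an encoded errno on failure. */
static int demo_object = 42;

void *demo_lookup(int exists)
{
    return exists ? (void *)&demo_object : err_ptr(-ENOENT);
}

/* Same shape as sys_open(): propagate the errno if the lookup failed,
 * otherwise hand back a descriptor (a fixed, hypothetical number). */
long demo_open(int exists)
{
    void *f = demo_lookup(exists);
    if (is_err(f))
        return ptr_err(f);
    return 3;
}
```

The design avoids an extra output parameter: one return value carries either the object or the reason there is no object, and the caller can forward the error unchanged, as sys_open() does on line 953.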
Let's take a closer look at the filp_open() routine:

-----------------------------------------------------------------------
fs/open.c
740 struct file *filp_open(const char * filename, int flags, int mode)
741 {
742   int namei_flags, error;
743   struct nameidata nd;
744
745   namei_flags = flags;
746   if ((namei_flags+1) & O_ACCMODE)
747     namei_flags++;
748   if (namei_flags & O_TRUNC)
749     namei_flags |= 2;
750
751   error = open_namei(filename, namei_flags, mode, &nd);
752   if (!error)
753     return dentry_open(nd.dentry, nd.mnt, flags);
754
755   return ERR_PTR(error);
-----------------------------------------------------------------------

Lines 745-749: The pathname lookup functions, such as open_namei(), expect the access mode flags encoded in a format that differs from the one used by the open system call. These lines copy the access mode flags into the namei_flags variable and convert them for interpretation by open_namei(). The main difference is that, for pathname lookup, the access mode might require neither read nor write permission. This "no permission" access mode does not make sense when opening a file and is thus not included among the open system call flags. "No permission" is indicated by the value 00. Read permission is indicated by setting the low-order bit to 1, and write permission by setting the high-order bit to 1. The open system call flags O_RDONLY, O_WRONLY, and O_RDWR evaluate to 00, 01, and 02, respectively, as seen in include/asm/fcntl.h. The access mode can be extracted from namei_flags by bitwise ANDing it with the O_ACCMODE constant. This constant has the value 3, so the result is nonzero (true) when the value ANDed with it has 1, 2, or 3 in its two low-order bits.
If the open system call flags contain O_RDONLY, O_WRONLY, or O_RDWR, adding 1 translates the access mode into the pathname lookup format (01, 10, or 11 in binary); the sum evaluates to true when ANDed with O_ACCMODE, so namei_flags is incremented. The second check ensures that if the open system call flags allow file truncation (O_TRUNC), the high-order bit specifying write access is set.

Line 751: The open_namei() routine performs the pathname lookup, fills in the associated nameidata structure, and derives the corresponding inode.

Line 753: dentry_open() is a wrapper routine around dentry_open_it(), which creates and initializes the file structure. It allocates the file structure by calling the kernel routine get_empty_filp(). The allocation fails, and the open returns the error ENFILE, if files_stat.nr_files is greater than or equal to files_stat.max_files. This case indicates that the system's limit on the total number of open files has been reached. Let's look at the dentry_open_it() routine:

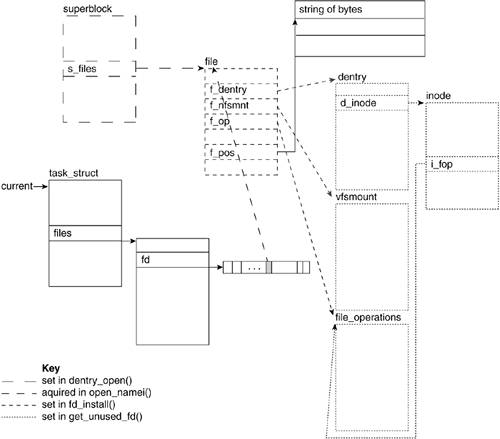

Line 852: The file struct is allocated by the call to get_empty_filp().

Lines 855-856: The f_flags field of the file struct is set to the flags passed in to the open system call. The f_mode field is set to the access mode passed to the open system call, but in the format expected by the pathname lookup functions.

Lines 866-869: The file struct's f_dentry field is set to point to the dentry struct that is associated with the file's pathname. The f_vfsmnt field is set to point to the vfsmount struct for the filesystem. f_pos is set to 0, which indicates that the file offset starts at the beginning of the file. The f_op field is set to point to the table of operations pointed to by the file's inode.

Line 870: The file_move() routine is called to insert the file structure into the filesystem's superblock list of file structures representing open files.

Lines 872-877: This is where the next level of the open function occurs. If the file operations table for the file contains an open routine, it is called here to perform any file-specific work needed to open the file. This concludes the dentry_open_it() routine.

By the end of filp_open(), we will have a file structure allocated and inserted at the head of the superblock's s_files list, with f_dentry pointing to the dentry object, f_vfsmnt pointing to the vfsmount object, f_op pointing to the inode's i_fop file operations table, f_flags set to the flags passed to the open() call, and f_mode set to the access mode in the format used by the pathname lookup functions.

Line 944: The fd_install() routine sets the fd array pointer to the address of the file object returned by filp_open(). That is, it sets current->files->fd[fd].

Line 947: The putname() routine frees the kernel space allocated to store the filename.

Line 949: The file descriptor fd is returned.

Line 952: The put_unused_fd() routine releases the file descriptor allocated on line 938. This is called when the file object could not be created.
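The access-mode conversion in lines 745-749 of filp_open(), which produces the same lookup-format encoding that f_mode uses, is easy to check in user space. This sketch copies the kernel's arithmetic into a hypothetical helper, to_namei_flags(), and assumes the Linux values O_RDONLY = 0, O_WRONLY = 1, O_RDWR = 2, and O_ACCMODE = 3 from <fcntl.h>.

```c
#include <assert.h>
#include <fcntl.h>

/* Convert open() flags to the pathname-lookup format, following
 * lines 745-749 of filp_open(). Afterward, bit 0 of the access bits
 * means "read" and bit 1 means "write". */
int to_namei_flags(int flags)
{
    int namei_flags = flags;

    if ((namei_flags + 1) & O_ACCMODE)
        namei_flags++;              /* 0->1 (r), 1->2 (w), 2->3 (rw) */
    if (namei_flags & O_TRUNC)
        namei_flags |= 2;           /* truncation implies write access */

    /* Every access mode now carries at least one permission bit. */
    assert((namei_flags & O_ACCMODE) != 0);
    return namei_flags;
}
```

The increment never carries into the other flag bits: an access value of 3 would become 4, whose low two bits are 0, so the test on line 746 fails and no increment happens.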
To summarize, the hierarchical call of the open() syscall process looks like this: sys_open:

Figure 6.16 illustrates the structures that are initialized and set and identifies the routines where this was done.

Figure 6.16. Filesystem Structures

Table 6.10 shows some of the sys_open() return errors and the kernel routines that find them.

6.5.2. close()

After a process finishes with a file, it issues the close() system call:

-----------------------------------------------------------------------
synopsis
#include <unistd.h>

int close(int fd);
-----------------------------------------------------------------------

The close system call takes as its parameter the file descriptor of the file to be closed. If a standard C program does not close its files explicitly, they are closed implicitly when the program terminates. Let's delve into the code for sys_close():

-----------------------------------------------------------------------
fs/open.c
1020 asmlinkage long sys_close(unsigned int fd)
1021 {
1022   struct file * filp;
1023   struct files_struct *files = current->files;
1024
1025   spin_lock(&files->file_lock);
1026   if (fd >= files->max_fds)
1027     goto out_unlock;
1028   filp = files->fd[fd];
1029   if (!filp)
1030     goto out_unlock;
1031   files->fd[fd] = NULL;
1032   FD_CLR(fd, files->close_on_exec);
1033   __put_unused_fd(files, fd);
1034   spin_unlock(&files->file_lock);
1035   return filp_close(filp, files);
1036
1037 out_unlock:
1038   spin_unlock(&files->file_lock);
1039   return -EBADF;
1040 }
-----------------------------------------------------------------------

Line 1023: The current task_struct's files field points to the files_struct that holds the process's table of open files, including the one we are closing.

Lines 1025-1030: These lines begin by acquiring files->file_lock, which protects the file descriptor table from concurrent updates. We then check that the file descriptor is valid. If the file descriptor number is greater than or equal to files->max_fds, the size of the descriptor table, we release the lock and return the error EBADF. Otherwise, we fetch the file structure address. If the file descriptor index does not yield a file structure, there is nothing to close, so we also release the lock and return EBADF.

Lines 1031-1032: Here, we set current->files->fd[fd] to NULL, removing the pointer to the file object. We also clear the file descriptor's bit in the file descriptor set referred to by files->close_on_exec.
Because the file descriptor is closed, the process need not worry about keeping track of it in the case of a call to exec().

Line 1033: The kernel routine __put_unused_fd() clears the file descriptor's bit in the file descriptor set files->open_fds because it is no longer open. It also preserves the "lowest available index" rule for assigning file descriptors:

-----------------------------------------------------------------------
fs/open.c
897 static inline void __put_unused_fd(struct files_struct *files, unsigned int fd)
898 {
899   __FD_CLR(fd, files->open_fds);
900   if (fd < files->next_fd)
901     files->next_fd = fd;
902 }
-----------------------------------------------------------------------

Lines 900-901: The next_fd field holds the descriptor number at which the search for the next free file descriptor begins. If the descriptor being closed is lower than files->next_fd, next_fd is set to it instead. This ensures that file descriptors are assigned on the basis of the lowest available value.

Lines 1034-1035: The lock on the descriptor table is now released, and control passes to the filp_close() function, whose return value becomes the return value of the close system call. The filp_close() function performs the bulk of the close syscall work.
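The interplay between get_unused_fd() and __put_unused_fd() can be modeled in user space. The sketch below is a simplified, hypothetical descriptor table (struct fd_table, fd_alloc(), fd_release(), and the fixed size of 64 are all assumptions): allocation scans upward from next_fd for the first free slot, and release pulls next_fd back down, which is what keeps the "lowest available descriptor" rule cheap to enforce.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_MAX_FDS 64   /* hypothetical fixed table size */

/* Simplified model of the bookkeeping in files_struct: which slots
 * are open, plus the next_fd search hint. */
struct fd_table {
    bool open_fds[SKETCH_MAX_FDS];
    unsigned int next_fd;   /* every slot below this is busy */
};

/* Like get_unused_fd(): take the lowest free slot at or above
 * next_fd, mark it busy, and advance the hint. */
int fd_alloc(struct fd_table *t)
{
    for (unsigned int fd = t->next_fd; fd < SKETCH_MAX_FDS; fd++) {
        if (!t->open_fds[fd]) {
            t->open_fds[fd] = true;
            t->next_fd = fd + 1;
            return (int)fd;
        }
    }
    return -1;              /* table full; the kernel returns -EMFILE */
}

/* Like __put_unused_fd(): clear the slot and pull the hint down so
 * the lowest free descriptor is handed out next. */
void fd_release(struct fd_table *t, unsigned int fd)
{
    assert(fd < SKETCH_MAX_FDS && t->open_fds[fd]);
    t->open_fds[fd] = false;
    if (fd < t->next_fd)
        t->next_fd = fd;
}
```

Because every slot below next_fd is known to be busy, allocation never has to rescan the start of the table.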
Let's take a closer look at the filp_close() routine:

-----------------------------------------------------------------------
fs/open.c
987 int filp_close(struct file *filp, fl_owner_t id)
988 {
989   int retval;
990   /* Report and clear outstanding errors */
991   retval = filp->f_error;
992   if (retval)
993     filp->f_error = 0;
994
995   if (!file_count(filp)) {
996     printk(KERN_ERR "VFS: Close: file count is 0\n");
997     return retval;
998   }
999
1000   if (filp->f_op && filp->f_op->flush) {
1001     int err = filp->f_op->flush(filp);
1002     if (!retval)
1003       retval = err;
1004   }
1005
1006   dnotify_flush(filp, id);
1007   locks_remove_posix(filp, id);
1008   fput(filp);
1009   return retval;
1010 }
-----------------------------------------------------------------------

Lines 991-993: These lines pick up any outstanding error recorded in f_error, so that it can be reported to the caller, and then clear it.

Lines 995-997: This is a sanity check on the conditions necessary to close a file. A file with a file_count of 0 should already have been released, so in this case filp_close() logs a kernel error message and returns without doing any further work.

Lines 1000-1004: These lines invoke the file operation flush() (if it is defined). What this does is determined by the particular filesystem. An error from flush() is returned unless an earlier error was already pending.

Lines 1006-1007: dnotify_flush() and locks_remove_posix() remove any directory-notification registrations and POSIX record locks associated with this file and owner.

Line 1008: fput() is called to drop this reference to the file structure. When the last reference is dropped, it calls the release() file operation, releases the file's references to the dentry and vfsmount objects, and finally frees the file object.

The hierarchical call of the close() syscall process looks like this: sys_close():

Table 6.11 shows some of the sys_close() return errors and the kernel routines that find them.

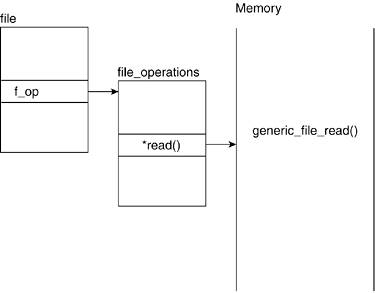

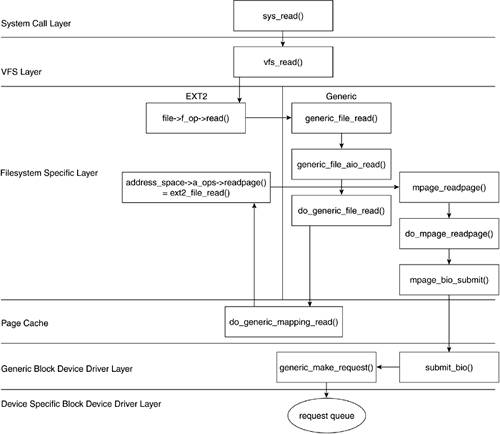
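Closing a descriptor does not necessarily destroy the file structure: after dup() or fork(), several descriptors can share one struct file, and fput() (line 1008 of filp_close()) runs release() only when the last reference goes away. The hypothetical struct sketch_file below models just that reference count; it omits the dentry and vfsmount cleanup that fput() also performs.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical model of struct file reference counting. */
struct sketch_file {
    int f_count;                            /* references held */
    int released;                           /* set once release() has run */
    void (*release)(struct sketch_file *f);
};

/* Hypothetical release() operation: a real filesystem would free
 * per-file private state here. */
void sketch_release(struct sketch_file *f)
{
    f->released = 1;
}

/* Take an extra reference, as dup() or fork() effectively does. */
void sketch_fget(struct sketch_file *f)
{
    f->f_count++;
}

/* Like fput(): drop one reference; only the last one triggers release(). */
void sketch_fput(struct sketch_file *f)
{
    assert(f->f_count > 0);
    if (--f->f_count == 0 && f->release != NULL)
        f->release(f);
}
```

For example, after a dup(), closing the original descriptor drops the count from 2 to 1, and the file stays fully usable through the duplicate.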

6.5.3. read()

When a user-level program calls read(), Linux translates this to a system call, sys_read():

-----------------------------------------------------------------------
fs/read_write.c
272 asmlinkage ssize_t sys_read(unsigned int fd, char __user * buf, size_t count)
273 {
274   struct file *file;
275   ssize_t ret = -EBADF;
276   int fput_needed;
277
278   file = fget_light(fd, &fput_needed);
279   if (file) {
280     ret = vfs_read(file, buf, count, &file->f_pos);
281     fput_light(file, fput_needed);
282   }
283
284   return ret;
285 }
-----------------------------------------------------------------------

Line 272: sys_read() takes a file descriptor, a user-space buffer pointer, and a number of bytes to read from the file into the buffer.

Lines 273-282: A file lookup is done to translate the file descriptor to a file pointer with fget_light(). We then call vfs_read(), which does all the main work. Each fget_light() needs to be paired with an fput_light(), so we do that after vfs_read() finishes.

The system call, sys_read(), has passed control to vfs_read(), so let's continue our trace:

-----------------------------------------------------------------------
fs/read_write.c
200 ssize_t vfs_read(struct file *file, char __user *buf, size_t count, loff_t *pos)
201 {
202   struct inode *inode = file->f_dentry->d_inode;
203   ssize_t ret;
204
205   if (!(file->f_mode & FMODE_READ))
206     return -EBADF;
207   if (!file->f_op || (!file->f_op->read && !file->f_op->aio_read))
208     return -EINVAL;
209
210   ret = locks_verify_area(FLOCK_VERIFY_READ, inode, file, *pos, count);
211   if (!ret) {
212     ret = security_file_permission (file, MAY_READ);
213     if (!ret) {
214       if (file->f_op->read)
215         ret = file->f_op->read(file, buf, count, pos);
216       else
217         ret = do_sync_read(file, buf, count, pos);
218       if (ret > 0)
219         dnotify_parent(file->f_dentry, DN_ACCESS);
220     }
221   }
222
223   return ret;
224 }
-----------------------------------------------------------------------

Line 200: The first three parameters are all passed via, or are translations of, the original sys_read() parameters. The fourth parameter points to the offset within the file where the read should start. sys_read() passes &file->f_pos, but because vfs_read() can also be called from elsewhere in the kernel, a caller can supply a different offset.

Line 202: We store a pointer to the file's inode.

Lines 205-208: If the file was not opened for reading (FMODE_READ is not set in f_mode), the function returns EBADF. Basic checking is then done on the file operations structure to ensure that read or asynchronous read operations have been defined. If no read operation is defined, or if the operations table is missing, the function returns the EINVAL error at this point. This error indicates that the file descriptor is attached to a structure that cannot be used for reading.

Lines 210-213: We verify that the area to be read is not locked and that the file is authorized to be read.

Lines 214-217: These are the guts of vfs_read(). If the read file operation has been defined, we call it; otherwise, we call do_sync_read(). In our tracing, we follow the standard file operation read and not the do_sync_read() function. Later, it becomes clear that both calls eventually reach the same underlying point.

Lines 218-219: If the read transferred any data, a DN_ACCESS directory notification is sent for the file's parent directory.

6.5.3.1. Moving from the Generic to the Specific

This is our first encounter with one of the many abstractions where we move between the generic filesystem layer and the specific filesystem layer. Figure 6.17 illustrates how the file structure points to the specific filesystem's table of operations. Recall that when read_inode() is called, the inode information is filled in, including having the i_fop field point to the appropriate table of operations defined by the specific filesystem implementation (for example, ext2).

Figure 6.17. File Operations
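The checks and dispatch in vfs_read() (lines 205-217) come down to an ordinary table of function pointers. In the sketch below, struct sketch_fops, struct sketch_file, SKETCH_FMODE_READ, and the fake_read()/fake_aio_read() implementations are hypothetical stand-ins. The locking and security hooks of lines 210-213 are omitted, and sketch_sync_read() simply calls aio_read rather than reproducing do_sync_read().

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>

#define SKETCH_FMODE_READ 1   /* hypothetical stand-in for FMODE_READ */

struct sketch_file;

/* Hypothetical, trimmed-down operations table. */
struct sketch_fops {
    ssize_t (*read)(struct sketch_file *f, char *buf, size_t n);
    ssize_t (*aio_read)(struct sketch_file *f, char *buf, size_t n);
};

struct sketch_file {
    int f_mode;
    const struct sketch_fops *f_op;
};

/* Stand-in for do_sync_read(): the kernel version drives aio_read
 * and waits for completion; here we simply call it. */
static ssize_t sketch_sync_read(struct sketch_file *f, char *buf, size_t n)
{
    assert(f->f_op->aio_read != NULL);
    return f->f_op->aio_read(f, buf, n);
}

/* The checks and dispatch of vfs_read(), minus locks and security. */
ssize_t sketch_vfs_read(struct sketch_file *f, char *buf, size_t n)
{
    if (!(f->f_mode & SKETCH_FMODE_READ))
        return -EBADF;
    if (!f->f_op || (!f->f_op->read && !f->f_op->aio_read))
        return -EINVAL;
    if (f->f_op->read)
        return f->f_op->read(f, buf, n);
    return sketch_sync_read(f, buf, n);
}

/* Hypothetical implementations used to exercise both paths;
 * fake_aio_read() pretends to return a short read so the path
 * taken is visible in the result. */
ssize_t fake_read(struct sketch_file *f, char *buf, size_t n)
{
    (void)f;
    (void)buf;
    return (ssize_t)n;
}

ssize_t fake_aio_read(struct sketch_file *f, char *buf, size_t n)
{
    (void)f;
    (void)buf;
    return (ssize_t)(n / 2);
}
```

The generic code never needs to know which filesystem it is talking to; it only checks which slots of the table are filled in.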

When a file's inode is created or read from disk, the specific filesystem layer sets its i_fop field to point to the filesystem's file operations structure. Because we are operating on a file on an ext2 filesystem, the file operations structure is as follows:

-----------------------------------------------------------------------
fs/ext2/file.c
42 struct file_operations ext2_file_operations = {
43   .llseek    = generic_file_llseek,
44   .read      = generic_file_read,
45   .write     = generic_file_write,
46   .aio_read  = generic_file_aio_read,
47   .aio_write = generic_file_aio_write,
48   .ioctl     = ext2_ioctl,
49   .mmap      = generic_file_mmap,
50   .open      = generic_file_open,
51   .release   = ext2_release_file,
52   .fsync     = ext2_sync_file,
53   .readv     = generic_file_readv,
54   .writev    = generic_file_writev,
55   .sendfile  = generic_file_sendfile,
56 };
-----------------------------------------------------------------------

You can see that for nearly every file operation, the ext2 filesystem has decided that the Linux defaults are acceptable. This leads us to ask when a filesystem would want to implement its own file operations. When a filesystem is sufficiently unlike a UNIX filesystem, extra steps might be necessary to allow Linux to interface with it. For example, MSDOS- or FAT-based filesystems need to implement their own write but can use the generic read.[10]
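The ext2 table relies on C99 designated initializers, which let a filesystem name only the operations it provides and borrow generic helpers for the rest. The sketch below uses a hypothetical, cut-down toy_file_operations to show the FAT-style case mentioned above: a filesystem that keeps the generic read but supplies its own write. None of these names exist in the kernel.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>

/* Hypothetical, cut-down operations table. Members left out of a
 * designated initializer are zero-initialized (NULL), which is how
 * a table marks an operation as absent. */
struct toy_file_operations {
    ssize_t (*read)(const char *name, size_t count);
    ssize_t (*write)(const char *name, size_t count);
};

/* "Generic" helpers shared by UNIX-like filesystems; they only
 * report how many bytes they would have transferred. */
ssize_t toy_generic_read(const char *name, size_t count)
{
    (void)name;
    return (ssize_t)count;
}

ssize_t toy_generic_write(const char *name, size_t count)
{
    (void)name;
    return (ssize_t)count;
}

/* A FAT-style filesystem's own write path. */
ssize_t toy_fat_write(const char *name, size_t count)
{
    assert(name != NULL);
    /* filesystem-specific bookkeeping would happen here */
    return (ssize_t)count;
}

/* UNIX-like: the generic defaults are acceptable everywhere. */
const struct toy_file_operations toy_unix_fops = {
    .read  = toy_generic_read,
    .write = toy_generic_write,
};

/* FAT-like: generic read, specific write. */
const struct toy_file_operations toy_fat_fops = {
    .read  = toy_generic_read,
    .write = toy_fat_write,
};
```

Both tables point at the same read function, so any improvement to the generic path benefits every filesystem that uses it.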

Discovering that the specific filesystem layer for ext2 passes control to the generic filesystem layer, we now examine generic_file_read():

-----------------------------------------------------------------------
mm/filemap.c
924 ssize_t
925 generic_file_read(struct file *filp, char __user *buf, size_t count, loff_t *ppos)
926 {
927   struct iovec local_iov = { .iov_base = buf, .iov_len = count };
928   struct kiocb kiocb;
929   ssize_t ret;
930
931   init_sync_kiocb(&kiocb, filp);
932   ret = __generic_file_aio_read(&kiocb, &local_iov, 1, ppos);
933   if (-EIOCBQUEUED == ret)
934     ret = wait_on_sync_kiocb(&kiocb);
935   return ret;
936 }
937
938 EXPORT_SYMBOL(generic_file_read);
-----------------------------------------------------------------------

Lines 924-925: Notice that the same parameters are simply being passed along from the upper-level reads. We have filp, the file pointer; buf, the pointer to the memory buffer the file will be read into; count, the number of bytes to read; and ppos, the position within the file to begin reading from.

Line 927: An iovec structure is created that contains the address and length of the user-space buffer where the results of the read are to be stored.

Lines 928 and 931: A kiocb structure is initialized using the file pointer. (kiocb stands for kernel I/O control block.)

Line 932: The bulk of the read is done in the generic asynchronous file read function.
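generic_file_read() turns a plain (buf, count) read into a one-segment vectored read. The same equivalence is visible from user space through the POSIX readv() call. The helper read_as_one_segment() below is hypothetical, and we do not claim that the C library implements read() this way.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Read up to count bytes from fd through a single-segment iovec,
 * mirroring how generic_file_read() wraps (buf, count) on line 927. */
ssize_t read_as_one_segment(int fd, void *buf, size_t count)
{
    assert(buf != NULL || count == 0);
    struct iovec local_iov = { .iov_base = buf, .iov_len = count };
    return readv(fd, &local_iov, 1);
}
```

Passing nr_segs = 1 lets one code path serve both read() and readv(), which is exactly why the kernel funnels the plain read into __generic_file_aio_read().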

Lines 933-935: If the read was queued rather than completed immediately (the call returns -EIOCBQUEUED), we wait for it to finish; either way, the result of the read operation is returned. Recall the do_sync_read() path in vfs_read(); it would eventually have reached this same function by another path.

Let's continue the trace of file I/O by examining __generic_file_aio_read():

-----------------------------------------------------------------------
mm/filemap.c
835 ssize_t
836 __generic_file_aio_read(struct kiocb *iocb, const struct iovec *iov,
837     unsigned long nr_segs, loff_t *ppos)
838 {
839   struct file *filp = iocb->ki_filp;
840   ssize_t retval;
841   unsigned long seg;
842   size_t count;
843
844   count = 0;
845   for (seg = 0; seg < nr_segs; seg++) {
846     const struct iovec *iv = &iov[seg];
...
852     count += iv->iov_len;
853     if (unlikely((ssize_t)(count|iv->iov_len) < 0))
854       return -EINVAL;
855     if (access_ok(VERIFY_WRITE, iv->iov_base, iv->iov_len))
856       continue;
857     if (seg == 0)
858       return -EFAULT;
859     nr_segs = seg;
860     count -= iv->iov_len;
861     break;
862   }
...
-----------------------------------------------------------------------

Lines 835-842: Recall that nr_segs was set to 1 by our caller and that iocb and iov contain the file pointer and buffer information. We immediately extract the file pointer from iocb.

Lines 845-862: This for loop verifies that the iovec array passed in is composed of valid segments; recall that it contains the user-space buffer information. It totals the segment lengths in count, returning EINVAL if the total would not fit in an ssize_t, and uses access_ok() to check that each buffer is writable user memory. If the first segment is bad, EFAULT is returned; if a later one is bad, the read is truncated to the segments before it.

-----------------------------------------------------------------------
mm/filemap.c
...
863
864   /* coalesce the iovecs and go direct-to-BIO for O_DIRECT */
865   if (filp->f_flags & O_DIRECT) {
866     loff_t pos = *ppos, size;
867     struct address_space *mapping;
868     struct inode *inode;
869
870     mapping = filp->f_mapping;
871     inode = mapping->host;
872     retval = 0;
873     if (!count)
874       goto out; /* skip atime */
875     size = i_size_read(inode);
876     if (pos < size) {
877       retval = generic_file_direct_IO(READ, iocb,
878             iov, pos, nr_segs);
879       if (retval >= 0 && !is_sync_kiocb(iocb))
880         retval = -EIOCBQUEUED;
881       if (retval > 0)
882         *ppos = pos + retval;
883     }
884     file_accessed(filp);
885     goto out;
886   }
...
-----------------------------------------------------------------------

Lines 863-886: This section of code is entered only if the read is direct I/O. Direct I/O bypasses the page cache and is a useful property of certain block devices. For our purposes, however, we do not enter this section of code at all. Most file I/O takes our path through the page cache, which we describe soon; because the page cache is held in RAM, it is much faster than the underlying block device.

-----------------------------------------------------------------------
mm/filemap.c
...
887
888   retval = 0;
889   if (count) {
890     for (seg = 0; seg < nr_segs; seg++) {
891       read_descriptor_t desc;
892
893       desc.written = 0;
894       desc.buf = iov[seg].iov_base;
895       desc.count = iov[seg].iov_len;
896       if (desc.count == 0)
897         continue;
898       desc.error = 0;
899       do_generic_file_read(filp,ppos,&desc,file_read_actor);
900       retval += desc.written;
901       if (!retval) {
902         retval = desc.error;
903         break;
904       }
905     }
906   }
907 out:
908   return retval;
909 }
-----------------------------------------------------------------------

Lines 889-890: Because our iovec is valid and we have only one segment, we execute this for loop only once.

Lines 891-898: We translate the iovec structure into a read_descriptor_t structure. The read_descriptor_t structure keeps track of the status of the read.
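The segment-validation loop in lines 845-862 can be reproduced in user space for struct iovec. The hypothetical iov_total_len() below keeps the kernel's overflow test from line 853 but omits the access_ok() check, which has no user-space counterpart.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/uio.h>

/* Total the lengths of an iovec array the way lines 845-854 do,
 * rejecting totals that would not fit in an ssize_t. */
ssize_t iov_total_len(const struct iovec *iov, unsigned long nr_segs)
{
    size_t count = 0;

    assert(iov != NULL || nr_segs == 0);
    for (unsigned long seg = 0; seg < nr_segs; seg++) {
        count += iov[seg].iov_len;
        /* Same trick as line 853: if either the running total or this
         * segment has the sign bit set, the total cannot be an ssize_t.
         * Checking the segment too catches a sum that wrapped around. */
        if ((ssize_t)(count | iov[seg].iov_len) < 0)
            return -EINVAL;
    }
    return (ssize_t)count;
}
```

ORing the two values before the sign test checks both with a single comparison, which is why the kernel writes it that way.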
Here is the description of the read_descriptor_t structure:

-----------------------------------------------------------------------
include/linux/fs.h
837 typedef struct {
838   size_t written;
839   size_t count;
840   char __user * buf;
841   int error;
842 } read_descriptor_t;
-----------------------------------------------------------------------

Line 838: The field written keeps a running count of the number of bytes transferred.

Line 839: The field count keeps a running count of the number of bytes left to be transferred.

Line 840: The field buf holds the current position in the buffer.

Line 841: The field error holds any error code encountered during the read operation.

Line 899: We pass our new read_descriptor_t structure desc to do_generic_file_read(), along with our file pointer filp and our position ppos. file_read_actor() is a function that copies a page to the user-space buffer located in desc.[11]
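A user-space model of read_descriptor_t and its actor makes the bookkeeping concrete. The sketch_read_desc type and sketch_read_actor() below are hypothetical: the actor copies from an in-memory "page" with memcpy(), whereas file_read_actor() copies to user space and can stop short on a fault.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* User-space mirror of read_descriptor_t. */
typedef struct {
    size_t written;   /* bytes copied so far */
    size_t count;     /* bytes still wanted */
    char  *buf;       /* where the next byte goes */
    int    error;     /* first error seen, if any */
} sketch_read_desc;

/* Simplified stand-in for file_read_actor(): copy up to size bytes
 * starting at offset in a page into desc->buf, never more than the
 * caller still wants, and advance the descriptor. */
size_t sketch_read_actor(sketch_read_desc *desc, const char *page,
                         size_t offset, size_t size)
{
    assert(desc != NULL && page != NULL);
    if (size > desc->count)
        size = desc->count;
    memcpy(desc->buf, page + offset, size);
    desc->buf += size;
    desc->written += size;
    desc->count -= size;
    return size;
}
```

The return value matters: the page cache loop continues to the next page only when the actor consumed the whole chunk it was offered and desc->count is still nonzero.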

Lines 900-909: The number of bytes read (desc.written) is added to retval; if nothing was read, the error recorded in desc.error is returned instead. At this point in the read() internals, we are about to access the page cache[12] and determine whether the sections of the file we want to read already exist in RAM, so we don't have to access the block device directly.

6.5.3.2. Tracing the Page Cache

Recall that the last function we encountered passed a file pointer filp, an offset ppos, a read_descriptor_t desc, and a function file_read_actor into do_generic_file_read().

-----------------------------------------------------------------------
include/linux/fs.h
1420 static inline void do_generic_file_read(struct file * filp, loff_t *ppos,
1421           read_descriptor_t * desc,
1422           read_actor_t actor)
1423 {
1424   do_generic_mapping_read(filp->f_mapping,
1425         &filp->f_ra,
1426         filp,
1427         ppos,
1428         desc,
1429         actor);
1430 }
-----------------------------------------------------------------------

Lines 1420-1430: do_generic_file_read() is simply a wrapper for do_generic_mapping_read(). filp->f_mapping is a pointer to an address_space object, and filp->f_ra is the structure that holds the file's read-ahead state; its address is passed along.[13]

So, we've transformed our read of a file into a read of the page cache via the address_space object in our file pointer. Because do_generic_mapping_read() is an extremely long function with a number of separate cases, we try to make the analysis of the code as painless as possible.

-----------------------------------------------------------------------
mm/filemap.c
645 void do_generic_mapping_read(struct address_space *mapping,
646        struct file_ra_state *_ra,
647        struct file * filp,
648        loff_t *ppos,
649        read_descriptor_t * desc,
650        read_actor_t actor)
651 {
652   struct inode *inode = mapping->host;
653   unsigned long index, offset;
654   struct page *cached_page;
655   int error;
656   struct file_ra_state ra = *_ra;
657
658   cached_page = NULL;
659   index = *ppos >> PAGE_CACHE_SHIFT;
660   offset = *ppos & ~PAGE_CACHE_MASK;
-----------------------------------------------------------------------

Line 652: We extract the inode of the file we're reading from address_space.

Lines 658-660: We initialize cached_page to NULL until we can determine whether the page exists within the page cache. We also calculate index and offset based on page cache constraints. The index corresponds to the page number within the page cache, and the offset corresponds to the displacement within that page. When the page size is 4,096 bytes, a right bit shift of 12 on the file position yields the index of the page. "The page cache can [be] done in larger chunks than one page, because it allows for more efficient throughput" (linux/pagemap.h).
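The index and offset arithmetic of lines 659-660, together with the end-of-file clipping that the loop performs in lines 667-681, can be checked with ordinary integers. This sketch assumes 4,096-byte pages (a PAGE_CACHE_SHIFT of 12) and uses hypothetical SKETCH_ constants and helper names; join_pos() is the inverse computation that line 786 performs when it updates *ppos.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed page-cache geometry: 4,096-byte pages. */
#define SKETCH_PAGE_CACHE_SHIFT 12
#define SKETCH_PAGE_CACHE_SIZE  (1UL << SKETCH_PAGE_CACHE_SHIFT)
#define SKETCH_PAGE_CACHE_MASK  (~(SKETCH_PAGE_CACHE_SIZE - 1))

/* Split a file position into a page index and an in-page offset. */
void split_pos(unsigned long long pos, unsigned long *index,
               unsigned long *offset)
{
    assert(index != NULL && offset != NULL);
    *index = (unsigned long)(pos >> SKETCH_PAGE_CACHE_SHIFT);
    *offset = (unsigned long)(pos & ~SKETCH_PAGE_CACHE_MASK);
}

/* Rebuild a file position from an index and offset. */
unsigned long long join_pos(unsigned long index, unsigned long offset)
{
    return ((unsigned long long)index << SKETCH_PAGE_CACHE_SHIFT) + offset;
}

/* Bytes the loop may copy from page `index` starting at `offset`
 * for a file of size isize; 0 means end of file. */
unsigned long bytes_in_page(unsigned long long isize, unsigned long index,
                            unsigned long offset)
{
    unsigned long end_index = (unsigned long)(isize >> SKETCH_PAGE_CACHE_SHIFT);
    unsigned long nr = SKETCH_PAGE_CACHE_SIZE;

    if (index > end_index)
        return 0;
    if (index == end_index) {
        nr = (unsigned long)(isize & ~SKETCH_PAGE_CACHE_MASK);
        if (nr <= offset)
            return 0;
    }
    return nr - offset;
}
```

For example, position 8,193 falls one byte into page 2, and a 10,000-byte file has only 1,808 valid bytes on its last page (10,000 - 8,192).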
PAGE_CACHE_SHIFT and PAGE_CACHE_MASK are settings that control the structure and size of the page cache:

-----------------------------------------------------------------------
mm/filemap.c
661
662   for (;;) {
663     struct page *page;
664     unsigned long end_index, nr, ret;
665     loff_t isize = i_size_read(inode);
666
667     end_index = isize >> PAGE_CACHE_SHIFT;
668
669     if (index > end_index)
670       break;
671     nr = PAGE_CACHE_SIZE;
672     if (index == end_index) {
673       nr = isize & ~PAGE_CACHE_MASK;
674       if (nr <= offset)
675         break;
676     }
677
678     cond_resched();
679     page_cache_readahead(mapping, &ra, filp, index);
680
681     nr = nr - offset;
-----------------------------------------------------------------------

Lines 662-681: This section of code iterates through the page cache and retrieves enough pages to fulfill the bytes requested by the read command. On each pass, it computes end_index, the index of the file's last page, and stops once index is past it; on the last page, it limits nr to the valid bytes (stopping if offset is already beyond them) and then triggers read-ahead.

-----------------------------------------------------------------------
mm/filemap.c
682 find_page:
683     page = find_get_page(mapping, index);
684     if (unlikely(page == NULL)) {
685       handle_ra_miss(mapping, &ra, index);
686       goto no_cached_page;
687     }
688     if (!PageUptodate(page))
689       goto page_not_up_to_date;
-----------------------------------------------------------------------

Lines 682-689: We attempt to find the first page required. If the page is not in the page cache, we jump to the no_cached_page label. If the page is not up to date, we jump to the page_not_up_to_date label. find_get_page() uses the address space's radix tree to look up the page at page index index and, if it finds one, takes a reference on it.

-----------------------------------------------------------------------
mm/filemap.c
690 page_ok:
691     /* If users can be writing to this page using arbitrary
692      * virtual addresses, take care about potential aliasing
693      * before reading the page on the kernel side.
694      */
695     if (mapping_writably_mapped(mapping))
696       flush_dcache_page(page);
697
698     /*
699      * Mark the page accessed if we read the beginning.
700      */
701     if (!offset)
702       mark_page_accessed(page);
...
714     ret = actor(desc, page, offset, nr);
715     offset += ret;
716     index += offset >> PAGE_CACHE_SHIFT;
717     offset &= ~PAGE_CACHE_MASK;
718
719     page_cache_release(page);
720     if (ret == nr && desc->count)
721       continue;
722     break;
723
-----------------------------------------------------------------------

Lines 690-723: The inline comments are descriptive, so there's no point repeating them. On line 714, the actor (file_read_actor() in our case) copies data from the page to the user buffer, and line 719 drops the reference taken by find_get_page(). Notice that on lines 720-721, if more pages are to be retrieved, we immediately return to the top of the loop, where the index and offset manipulations in lines 715-717 help choose the next page to retrieve. If no more pages are to be read, we break out of the for loop.

-----------------------------------------------------------------------
mm/filemap.c
724 page_not_up_to_date:
725     /* Get exclusive access to the page ... */
726     lock_page(page);
727
728     /* Did it get unhashed before we got the lock? */
729     if (!page->mapping) {
730       unlock_page(page);
731       page_cache_release(page);
732       continue;
733     }
734
735     /* Did somebody else fill it already? */
736     if (PageUptodate(page)) {
737       unlock_page(page);
738       goto page_ok;
739     }
740
-----------------------------------------------------------------------

Lines 724-740: If the page is not up to date, we first get exclusive access to it with lock_page(), which sleeps until the lock is available. Once we hold the lock, we check whether the page was removed from the page cache while we waited (its mapping field is NULL); if so, we unlock it, drop our reference, and return to the top of the for loop to look it up again. If the page is still present and someone else has brought it up to date in the meantime, we unlock it and jump to the page_ok label.

-----------------------------------------------------------------------
mm/filemap.c
741 readpage:
742     /* ... and start the actual read. The read will unlock the page. */
743     error = mapping->a_ops->readpage(filp, page);
744
745     if (!error) {
746       if (PageUptodate(page))
747         goto page_ok;
748       wait_on_page_locked(page);
749       if (PageUptodate(page))
750         goto page_ok;
751       error = -EIO;
752     }
753
754     /* UHHUH! A synchronous read error occurred. Report it */
755     desc->error = error;
756     page_cache_release(page);
757     break;
758
-----------------------------------------------------------------------

Lines 741-743: If the page is still not up to date, execution falls through to the readpage label with the page lock held. The actual read, mapping->a_ops->readpage(filp, page), unlocks the page when it completes. (We trace readpage() further in a bit, but let's first finish the current explanation.)

Lines 745-751: If readpage() returns without error, we check whether the page is up to date and jump to page_ok if it is. Otherwise, we wait for the page to be unlocked, which happens when the read completes, and check again; if the page is still not up to date, the read failed and error is set to -EIO.

Lines 754-757: If a synchronous read error occurred, we record the error in desc, drop our reference to the page, and break out of the for loop.

-----------------------------------------------------------------------
mm/filemap.c
759 no_cached_page:
760     /*
761      * Ok, it wasn't cached, so we need to create a new
762      * page..
763      */
764     if (!cached_page) {
765       cached_page = page_cache_alloc_cold(mapping);
766       if (!cached_page) {
767         desc->error = -ENOMEM;
768         break;
769       }
770     }
771     error = add_to_page_cache_lru(cached_page, mapping,
772           index, GFP_KERNEL);
773     if (error) {
774       if (error == -EEXIST)
775         goto find_page;
776       desc->error = error;
777       break;
778     }
779     page = cached_page;
780     cached_page = NULL;
781     goto readpage;
782   }
-----------------------------------------------------------------------

Lines 759-772: If the page to be read wasn't cached, we allocate a new page (unless one is left over from a previous pass) and add it to both the page cache and the least recently used (LRU) list.

Lines 773-775: If we have an error adding the page to the cache because a page already exists at that index, we jump to the find_page label and try again.
This could occur if multiple processes attempt to read the same uncached page: each allocates a page, one adds its page to the cache first, and the other's add_to_page_cache_lru() call fails with -EEXIST.

Lines 776-777
If adding the page to the cache fails for any other reason, we record the error and break out of the for loop.

Lines 779-781
When we successfully allocate the page and add it to the page cache and LRU list, we point page at it, clear cached_page so that it is not released at the end of the function, and jump to the readpage label to read it.

-----------------------------------------------------------------------
mm/filemap.c
784     *_ra = ra;
785
786     *ppos = ((loff_t) index << PAGE_CACHE_SHIFT) + offset;
787     if (cached_page)
788         page_cache_release(cached_page);
789     file_accessed(filp);
790 }
-----------------------------------------------------------------------

Line 786
We convert our page cache index and in-page offset back into a byte position and store it in *ppos, the file position.

Lines 787-788
If we allocated a page that was never used (cached_page is still set, for example because another process added the same page to the cache first), we release it.

Line 789
We update the file's last-accessed time via the inode.

The logic described in this function is the core of the page cache. Notice how the page cache does not touch any filesystem-specific data. This allows the Linux kernel to have a page cache that caches pages regardless of the underlying filesystem structure; the page cache can hold pages from MINIX, ext2, and MSDOS filesystems all at the same time. The page cache keeps this independence from the specific filesystem layer by using the readpage() function of the address space. Each specific filesystem implements its own readpage(). So, when the generic filesystem layer calls mapping->a_ops->readpage(), it calls the specific readpage() function from the filesystem driver's address_space_operations structure.
For the ext2 filesystem, readpage() is defined as follows:

-----------------------------------------------------------------------
fs/ext2/inode.c
676 struct address_space_operations ext2_aops = {
677     .readpage       = ext2_readpage,
678     .readpages      = ext2_readpages,
679     .writepage      = ext2_writepage,
680     .sync_page      = block_sync_page,
681     .prepare_write  = ext2_prepare_write,
682     .commit_write   = generic_commit_write,
683     .bmap           = ext2_bmap,
684     .direct_IO      = ext2_direct_IO,
685     .writepages     = ext2_writepages,
686 };
-----------------------------------------------------------------------

Thus, readpage() actually calls ext2_readpage():

-----------------------------------------------------------------------
fs/ext2/inode.c
616 static int ext2_readpage(struct file *file, struct page *page)
617 {
618     return mpage_readpage(page, ext2_get_block);
619 }
-----------------------------------------------------------------------

ext2_readpage() calls mpage_readpage(), which is a generic filesystem layer call, but passes it the specific filesystem layer function ext2_get_block(). The generic filesystem function mpage_readpage() expects a get_block() function as its second argument. Each filesystem implements certain I/O functions that are specific to the format of the filesystem; get_block() is one of these. Filesystem get_block() functions map logical blocks in the address_space pages to actual device blocks in the specific filesystem layout.
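Let's look at the specifics of mpage_readpage():

-----------------------------------------------------------------------
fs/mpage.c
358 int mpage_readpage(struct page *page, get_block_t get_block)
359 {
360     struct bio *bio = NULL;
361     sector_t last_block_in_bio = 0;
362
363     bio = do_mpage_readpage(bio, page, 1,
364                             &last_block_in_bio, get_block);
365     if (bio)
366         mpage_bio_submit(READ, bio);
367     return 0;
368 }
-----------------------------------------------------------------------

Lines 360-361
We declare a pointer to the bio structure that will describe the I/O for the page we are trying to read from the device (NULL, because no bio exists yet) and last_block_in_bio, which tracks the last device block placed in that bio.

Lines 363-364
do_mpage_readpage() is called, which translates the logical page into a bio structure composed of actual pages and blocks. The bio structure keeps track of information associated with block I/O.

Lines 365-367
If a bio was built, we send it to mpage_bio_submit() and return.

Let's take a moment and recap (at a high level) the flow of the read function so far:

1. read() enters the kernel through sys_read() and vfs_read(), which calls the file's f_op->read() method; for ext2, this is generic_file_read(), which leads into the generic page cache read loop traced earlier.
2. For each page covering the requested range, the loop looks in the page cache. A cached, up-to-date page is copied straight to the user buffer by the actor function.
3. An uncached page is allocated and added to the page cache and LRU list; a cached page that is not up to date is locked. Either page is then read by calling mapping->a_ops->readpage(), which for ext2 is ext2_readpage().
4. ext2_readpage() calls the generic mpage_readpage(), passing it the ext2-specific ext2_get_block().
5. mpage_readpage() calls do_mpage_readpage() to build a bio structure describing the page's device blocks and hands that bio to mpage_bio_submit().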

mpage_readpage() is the function that creates the bio structure and ties the newly allocated pages from the page cache to it. However, no data exists in the pages yet. For that, the filesystem layer needs the block device driver to do the actual interfacing to the device. This is done by the submit_bio() function, called from mpage_bio_submit():

-----------------------------------------------------------------------
fs/mpage.c
90 struct bio *mpage_bio_submit(int rw, struct bio *bio)
91 {
92     bio->bi_end_io = mpage_end_io_read;
93     if (rw == WRITE)
94         bio->bi_end_io = mpage_end_io_write;
95     submit_bio(rw, bio);
96     return NULL;
97 }
-----------------------------------------------------------------------

Line 90
The first thing to notice is that mpage_bio_submit() works for both read and write calls via the rw parameter. It submits a bio structure that, in the read case, is empty and needs to be filled in. In the write case, the bio structure is filled and the block device driver copies the contents to its device.

Lines 92-94
We set bi_end_io, the function called when the I/O completes: mpage_end_io_read() by default, or mpage_end_io_write() for a write.

Lines 95-96
We call submit_bio() and return NULL. Recall that mpage_readpage() doesn't do anything with the return value of mpage_bio_submit().

submit_bio() is part of the generic block device driver layer of the Linux kernel.

-----------------------------------------------------------------------
drivers/block/ll_rw_blk.c
2433 void submit_bio(int rw, struct bio *bio)
2434 {
2435     int count = bio_sectors(bio);
2436
2437     BIO_BUG_ON(!bio->bi_size);
2438     BIO_BUG_ON(!bio->bi_io_vec);
2439     bio->bi_rw = rw;
2440     if (rw & WRITE)
2441         mod_page_state(pgpgout, count);
2442     else
2443         mod_page_state(pgpgin, count);
2444
2445     if (unlikely(block_dump)) {
2446         char b[BDEVNAME_SIZE];
2447         printk(KERN_DEBUG "%s(%d): %s block %Lu on %s\n",
2448             current->comm, current->pid,
2449             (rw & WRITE) ? "WRITE" : "READ",
2450             (unsigned long long)bio->bi_sector,
2451             bdevname(bio->bi_bdev,b));
2452     }
2453
2454     generic_make_request(bio);
2455 }
-----------------------------------------------------------------------

Lines 2433-2443
These lines perform some debugging checks on the bio (BIO_BUG_ON()), set the read/write attribute of the bio structure, and do some page state housekeeping (the pgpgin and pgpgout counters).

Lines 2445-2452
If block dumping is enabled (the block_dump flag, exposed as /proc/sys/vm/block_dump), a debug message describing the block I/O is printed. This is a debugging aid that is normally off.

Line 2454
generic_make_request() contains the main functionality and uses the specific block device driver's request queue to handle the block I/O operation. Part of the inline comment for generic_make_request() is enlightening:

-----------------------------------------------------------------------
drivers/block/ll_rw_blk.c
2336  * The caller of generic_make_request must make sure that bi_io_vec
2337  * are set to describe the memory buffer, and that bi_dev and bi_sector are
2338  * set to describe the device address, and the
2339  * bi_end_io and optionally bi_private are set to describe how
2340  * completion notification should be signaled.
-----------------------------------------------------------------------

In the stages traced so far, we constructed the bio structure; thus, its bio_vec structures are mapped to the memory buffer mentioned on line 2337, and the bio is initialized with the device address parameters as well. If you want to follow the read even further into the block device driver, refer to the "Block Device Overview" section in Chapter 5, which describes how the block device driver handles request queues and the specific hardware constraints of its device.

Figure 6.18 illustrates how the read() system call traverses the layers of kernel functionality.

Figure 6.18. read() Top-Down Traversal

After the block device driver reads the actual data and places it in the pages described by the bio structure, the code we have traced unwinds.
The newly allocated pages in the page cache are filled, and their contents are passed back up to the VFS layer and copied to the section of user space specified so long ago by the original read() call.

However, we hear you ask, "Isn't this only half of the story? What if we wanted to write instead of read?" We hope that these descriptions made it somewhat clear that the path a read() call takes through the Linux kernel is similar to the path a write() call takes. However, we now outline some differences.

6.5.4. write()

A write() call gets mapped to sys_write() and then to vfs_write() in the same manner as a read() call:

-----------------------------------------------------------------------
fs/read_write.c
244 ssize_t vfs_write(struct file *file, const char __user *buf, size_t count, loff_t *pos)
245 {
...
259     ret = file->f_op->write(file, buf, count, pos);
...
268 }
-----------------------------------------------------------------------

vfs_write() calls the write method in the file's file_operations structure (file->f_op->write), which selects the write routine of the specific filesystem. In our ext2 example, this is resolved via the ext2_file_operations structure:

-----------------------------------------------------------------------
fs/ext2/file.c
42 struct file_operations ext2_file_operations = {
43     .llseek   = generic_file_llseek,
44     .read     = generic_file_read,
45     .write    = generic_file_write,
...
56 };
-----------------------------------------------------------------------

Lines 44-45
Instead of calling generic_file_read(), we call generic_file_write().

generic_file_write() obtains a lock on the file to prevent two writers from simultaneously writing to the same file, and calls generic_file_write_nolock(). generic_file_write_nolock() converts the file pointer and buffer into the kiocb and iovec parameters and calls the page cache write function generic_file_aio_write_nolock(). Here is where a write diverges from a read.
If the page to be written isn't in the page cache, the write does not go straight through to the device. Instead, the page is read into the page cache, and the write is then performed on the cached copy. Pages in the page cache are not immediately written to disk; instead, they are marked as "dirty" and, periodically, all dirty pages are written to disk.

The write path has its own counterparts to the readpage() function used by reads. Within generic_file_aio_write_nolock(), the address_space_operations pointer accesses prepare_write() and commit_write(), which are both specific to the filesystem type the file resides upon. Recall ext2_aops: the ext2 driver uses its own function, ext2_prepare_write(), and a generic function, generic_commit_write().

-----------------------------------------------------------------------
fs/ext2/inode.c
628 static int
629 ext2_prepare_write(struct file *file, struct page *page,
630                    unsigned from, unsigned to)
631 {
632     return block_prepare_write(page,from,to,ext2_get_block);
633 }
-----------------------------------------------------------------------

Line 632
ext2_prepare_write() is simply a wrapper for the generic filesystem function block_prepare_write(), to which it passes the ext2 filesystem-specific get_block() function. block_prepare_write() allocates any new buffers that are required for the write. For example, if data is being appended to a file, enough buffers are created, and linked with pages, to store the new data. generic_commit_write() takes the given page and iterates over the buffers within it, marking each dirty. The prepare and commit sections of a write are separated to prevent a partial write from being flushed from the page cache to the block device.

6.5.4.1. Flushing Dirty Pages

The write() call returns after it has inserted all the pages it has written to into the page cache and marked them dirty. Linux has a daemon, pdflush, which writes the dirty pages from the page cache to the block device in two cases:

1. When free memory falls below a specified threshold, pdflush writes dirty pages back to disk so that the memory they occupy can be reclaimed.
2. When dirty data has remained in the page cache longer than a specified threshold, pdflush writes it back, so that modified data does not stay only in memory indefinitely.

The pdflush daemon calls the filesystem-specific function writepages() when it is ready to write pages to disk. So, for our example, recall the ext2_aops structure, which equates writepages() with ext2_writepages().[14]

-----------------------------------------------------------------------
fs/ext2/inode.c
670 static int
671 ext2_writepages(struct address_space *mapping, struct writeback_control *wbc)
672 {
673     return mpage_writepages(mapping, wbc, ext2_get_block);
674 }
-----------------------------------------------------------------------

Like other specific implementations of generic filesystem functions, ext2_writepages() simply calls the generic filesystem function mpage_writepages() with the filesystem-specific ext2_get_block() function. mpage_writepages() loops over the dirty pages and calls mpage_writepage() on each dirty page. Similar to mpage_readpage(), mpage_writepage() returns a bio structure that maps the physical device layout of the page to its physical memory layout. mpage_writepages() then calls submit_bio() to send the new bio structure to the block device driver, which transfers the data to the device itself.

