Multithreading Problems

Several bug types are associated with multithreading. The ones that you're most likely to meet are as follows:

- Data races: A data race occurs when multiple threads are allowed simultaneous access to read from and write to the same data area. This is likely to result in inconsistency or even corruption of that data. Using synchronization locks to serialize thread access to the common data area is the usual way of combating this problem.

- Deadlock: A process deadlock happens when two or more threads are unable to proceed because each is waiting for one of the others to proceed. The most common type of deadlock involves one thread issuing a synchronization lock on resource A and then trying to access resource B while another thread locks resource B and then tries to access resource A. The result is that the two threads are in a deadly embrace and each thread will wait forever for the opposing thread to relinquish its lock.

- Livelock: Process livelock occurs when two or more threads become caught in a circular loop. For example, thread A sends an error message to thread B, which responds by sending an error message back. This can result in a never-ending stream of error messages from one thread to the other.

- Starvation: Thread starvation happens when a thread grinds almost to a halt because of lack of processor time or a continuing failure to access some resource being used by other threads.

The following sections look at each of these problems in more detail and suggest ways of dealing with them.

Understanding Data Races

A data race happens when two or more threads race each other to read from and write to common data shared between the threads. The adverse effects of a data race happen when the reading and writing occur in a sequence not anticipated by the developer of the multithreaded code, and the common data then becomes corrupted.

The ThreadSynch console application demonstrates how a data race can occur. This program uses multiple worker threads to perform the very simple task of incrementing a shared counter from its starting value to a specified end value. Listing 14-1 shows the Sub Main of the application and the CountCoordinator class that launches the worker threads and coordinates the shared count.

    Option Strict On
    Imports System.Threading

    Module CountMonitor
        Sub Main()
            Dim CountTest As New CountCoordinator(5)
            Console.ReadLine()
        End Sub
    End Module

    Class CountCoordinator
        Private Const MAX_COUNT As Integer = 99
        Private m_Counter As Integer = 0

        Public Sub New(ByVal NumberOfCounters As Integer)
            Dim EachWorker As Integer, NewThread As Thread, _
                Worker As CountWorker

            'Show starting conditions
            Console.WriteLine( _
                "Count started at {0} with max value of {1}.", _
                CStr(Me.CurrentCount), CStr(Me.MaxCount))
            Console.WriteLine( _
                "{0} worker threads are doing the counting.", _
                CStr(NumberOfCounters))

            'Start specified number of worker threads
            For EachWorker = 1 To NumberOfCounters
                Worker = New CountWorker(Me, EachWorker)
                NewThread = New Thread(AddressOf _
                                       Worker.IncrementCount)
                NewThread.Start()
            Next EachWorker
        End Sub

        Public Property CurrentCount() As Integer
            Get
                Return m_Counter
            End Get
            Set(ByVal Value As Integer)
                m_Counter = Value
            End Set
        End Property

        Public ReadOnly Property MaxCount() As Integer
            Get
                Return MAX_COUNT
            End Get
        End Property
    End Class

The application creates the CountCoordinator class and passes 5 as the number of worker threads required to the class constructor. The class constructor starts each of the specified number of threads, and these worker threads then compete for processor time to increment the shared counter from 0 to its maximum value, in this case 99. The worker threads are given access to this counter and its maximum value through the CurrentCount and MaxCount properties of the CountCoordinator class.

Listing 14-2 shows the CountWorker class, which represents each of the worker threads. This class stores the reference to the CountCoordinator object that it receives in its constructor and then uses this reference to increment the CountCoordinator.CurrentCount property.

    Class CountWorker
        Private m_Coordinator As CountCoordinator
        Private m_WorkerId As Integer

        Public Sub New(ByVal Coordinator As CountCoordinator, _
                       ByVal WorkerId As Integer)
            m_Coordinator = Coordinator
            m_WorkerId = WorkerId
        End Sub

        Public Sub IncrementCount()
            'Increment shared counter until equal to maximum allowed
            With m_Coordinator
                Do While .CurrentCount < .MaxCount
                    Select Case .CurrentCount
                        Case Is < (.MaxCount - 10)
                            Thread.Sleep(0)
                            .CurrentCount += 10
                        Case Is < .MaxCount
                            Thread.Sleep(0)
                            .CurrentCount += 1
                        Case Else
                    End Select

                    'Show current thread and counter value
                    Console.WriteLine( _
                        "Worker {0} current count {1}", _
                        CStr(m_WorkerId), CStr(.CurrentCount))
                Loop
            End With
        End Sub
    End Class

The IncrementCount method takes one of three actions, depending on the current value of the shared counter. If the counter value is within 10 of the maximum, it adds 1 to the counter. If the counter value is not within 10 of the maximum, it adds 10 to the counter. If the counter value is equal to or greater than the maximum value, the thread simply finishes running, as no more counting is required.

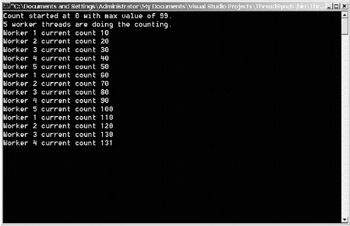

Given this relatively simple code, it's hard to see what could go wrong. But if you run the ThreadSynch program, you can see that the counter is always incremented well beyond its maximum value before all of the worker threads cease working. Figure 14-2 shows an example where the counter starts at 0, has a maximum value of 99, and five worker threads have been allocated to perform the counting. The final counter value of 131 is much higher than you might expect from reading the code.

Figure 14-2: An example run of the ThreadSynch application

What's happening is that in between a thread reading the shared counter value and incrementing it, another thread has also incremented the value. The threads aren't all working with the same value of the counter, and therefore they race each other to read and increment this value.

The insidious evil is that in a real application, this final counter value could be almost any number equal to or greater than the maximum, depending on the number of allocated worker threads and when Windows decides to switch between each thread. In this example program, I deliberately made each thread give up its time slice by issuing a Thread.Sleep(0) instruction after reading the shared counter. This allows me to force a data race problem because sleeping one thread allows the processor to switch to executing other threads. In the real world, where the Thread.Sleep instruction probably wouldn't be used in this manner, the code might work fine 99.9% of the time, and you're likely to see an inconsistent final number only when you're demonstrating the program to your boss or an important customer.

The most common way of solving a data race problem is to lock the data shared between multiple threads so that only one thread can access the shared data at any one time. One way of locking shared data is with the SyncLock statement. If you add the SyncLock and End SyncLock lines shown in Listing 14-3 to the CountWorker.IncrementCount method, you'll find that the data race goes away completely.

    'Increment shared counter until equal to maximum allowed
    With m_Coordinator
        Do While .CurrentCount < .MaxCount
            'Added: only one thread at a time may test and update the counter
            SyncLock (m_Coordinator)
                Select Case .CurrentCount
                    Case Is < (.MaxCount - 10)
                        Thread.Sleep(0)
                        .CurrentCount += 10
                    Case Is < .MaxCount
                        Thread.Sleep(0)
                        .CurrentCount += 1
                    Case Else
                End Select
            'Added: release the lock before reporting
            End SyncLock

            'Show current thread and counter value
            Console.WriteLine("Worker {0} current count {1}", _
                              CStr(m_WorkerId), CStr(.CurrentCount))
        Loop
    End With

In this example, SyncLock is used to lock the count coordinator object so that only one thread at a time can execute the synchronized block of code for the duration of the lock. For finer synchronization, you can use the Monitor class. For high-performance addition or subtraction on a shared integer variable, you can use the Interlocked class. However, any locking strategy needs to beware of two potential problems. The first problem is that performance can be adversely affected if the locking is done too frequently or blocks too big a region of code from executing. The second problem is that locking exposes you to the classic deadlock situation, as discussed further in the next section.
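As a minimal sketch of the Interlocked approach mentioned above, the following program has five threads each add 1 to a shared total 1,000 times. The module, the m_Total field, and the AddOneThousand method are hypothetical names invented for this illustration; they are not part of the ThreadSynch application.

```vbnet
Option Strict On
Imports System
Imports System.Threading

Module InterlockedSketch
    'Hypothetical shared counter, not part of the ThreadSynch listings
    Private m_Total As Integer = 0

    Sub Main()
        Dim Workers(4) As Thread
        Dim EachWorker As Integer

        'Start five worker threads
        For EachWorker = 0 To Workers.Length - 1
            Workers(EachWorker) = New Thread(AddressOf AddOneThousand)
            Workers(EachWorker).Start()
        Next EachWorker

        'Wait for every worker to finish before reading the total
        For EachWorker = 0 To Workers.Length - 1
            Workers(EachWorker).Join()
        Next EachWorker

        Console.WriteLine("Final total: {0}", m_Total)
    End Sub

    Private Sub AddOneThousand()
        Dim Iteration As Integer
        For Iteration = 1 To 1000
            'Atomic read-modify-write, so no SyncLock is needed here
            Interlocked.Increment(m_Total)
        Next Iteration
    End Sub
End Module
```

Because each increment is atomic, the final total should be 5000 on every run. Note that this only covers a plain increment. The IncrementCount method in Listing 14-2 tests the counter and then chooses an increment, and that test-then-update sequence still needs a lock such as SyncLock, so Interlocked.Increment is not a drop-in fix for it.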

Understanding Process Deadlock

A process deadlock happens when two or more threads are unable to proceed because each is waiting for one of the others to proceed. The most common type of deadlock occurs when one thread issues a synchronization lock on resource A and then tries to access resource B while another thread locks resource B and then tries to access resource A. The result is that the two threads are in a deadly embrace and each thread will wait forever for the opposing thread to relinquish its lock. An alternative scenario involves a cyclic chain of dependencies where multiple threads become gridlocked because they're queuing up and waiting for other threads to relinquish one or more shared resources. This is similar to the way that road traffic can become gridlocked at a very busy intersection.

The ThreadDeadlock console application demonstrates how a process deadlock can occur. This program consists of a Bank object that spawns multiple Cashier threads to randomly debit and credit two Account objects owned by the Bank object. Listing 14-4 shows the Sub Main of the application and the Bank class that launches the cashier threads and keeps control over the two bank accounts.

    Option Strict On
    Imports System.Threading

    Module DeadlockTest
        Sub Main()
            Dim TransferTest As New Bank(2, 10000)
            Console.ReadLine()
        End Sub
    End Module

    Class Bank
        Private m_AccountOne As New Account(1000000)
        Private m_AccountTwo As New Account(1000000)

        Public Sub New(ByVal NumberOfCashiers As Integer, _
                       ByVal NumberOfTransfers As Integer)
            Dim EachWorker As Integer, NewThread As Thread, _
                Worker As Cashier

            'Show starting conditions
            Console.WriteLine( _
                "{0} cashiers are performing {1} transfers each.", _
                NumberOfCashiers.ToString, _
                NumberOfTransfers.ToString)

            'Start specified number of worker threads
            For EachWorker = 1 To NumberOfCashiers
                Worker = New Cashier(Me, NumberOfTransfers)
                NewThread = New Thread(AddressOf Worker.TransferMoney)
                NewThread.Name = "Cashier" & EachWorker.ToString
                NewThread.Start()
            Next EachWorker
        End Sub

        Public ReadOnly Property AccountOne() As Account
            Get
                AccountOne = m_AccountOne
            End Get
        End Property

        Public ReadOnly Property AccountTwo() As Account
            Get
                AccountTwo = m_AccountTwo
            End Get
        End Property
    End Class

The application creates the Bank class and passes 2 to the class constructor as the number of cashiers required. It also passes the number of account transfers to be made, in this case 10,000. This relatively large number is used to demonstrate that a process deadlock can happen infrequently and may require some extensive execution testing to find. The Bank class has two instances of the Account class, each given an opening account balance of £1 million. When the Bank object is created, it then instantiates the specified number of Cashier objects and starts a worker thread for each of the cashiers. It also passes a reference to itself to each of the cashiers so that they can transfer money to and from the bank accounts.

One interesting point is that each of the cashier threads is given a name when it's instantiated. This really helps with debugging because the thread name is shown in the IDE Threads window, which makes each thread easier to identify. I go into more detail about the Threads window shortly, when you start to run this application.

Listing 14-5 shows the associated Cashier class. Each cashier is instantiated with an instruction to perform a certain number of transfers on the bank's two accounts, in this case 10,000 transfers. For each transfer, the bank accounts to credit and debit are chosen randomly from the two available. Before performing the transfer, the credit account is locked first, followed by the debit account. This synchronization locking (the nested SyncLock statements in the listing) prevents multiple cashiers from interfering with each other's transfers and causing a data race as seen in the previous example. Unlike the previous example, no thread sleeping is used. The sheer number of transfers happening is going to force a deadlock at some point, although thread sleeping (which allows other threads to run) will usually increase the speed at which a deadlock happens.

    Class Cashier
        Private m_Bank As Bank, m_NumberOfTransfers As Integer

        Public Sub New(ByVal AnyBank As Bank, _
                       ByVal NumberOfTransfers As Integer)
            m_Bank = AnyBank
            m_NumberOfTransfers = NumberOfTransfers
        End Sub

        Public Sub TransferMoney()
            Dim CurrentTransfer As Integer

            With m_Bank
                For CurrentTransfer = 1 To m_NumberOfTransfers
                    If TrueOrFalse() = True Then
                        SyncLock (.AccountOne)
                            SyncLock (.AccountTwo)
                                .AccountOne.CreditBalance(100)
                                .AccountTwo.DebitBalance(100)
                                Console.WriteLine( _
                                    "{0}: Transfer {1}", _
                                    Thread.CurrentThread.Name, _
                                    CurrentTransfer.ToString)
                            End SyncLock
                        End SyncLock
                    Else
                        SyncLock (.AccountTwo)
                            SyncLock (.AccountOne)
                                .AccountOne.DebitBalance(100)
                                .AccountTwo.CreditBalance(100)
                                Console.WriteLine( _
                                    "{0}: Transfer {1}", _
                                    Thread.CurrentThread.Name, _
                                    CurrentTransfer.ToString)
                            End SyncLock
                        End SyncLock
                    End If
                Next CurrentTransfer
            End With
        End Sub

        Private Function TrueOrFalse() As Boolean
            Randomize()
            Dim Test As Single = (Int((2 * Rnd()) + 1))
            Return CBool(Test = 1)
        End Function
    End Class

Finally, Listing 14-6 shows the Account class. This class offers functions for debiting and crediting the bank account, and a property for interrogating the current account balance.

    Class Account
        Private m_AccountBalance As Decimal = 0

        Public Sub New(ByVal StartingBalance As Decimal)
            m_AccountBalance = StartingBalance
        End Sub

        Public ReadOnly Property AccountBalance() As Decimal
            Get
                AccountBalance = m_AccountBalance
            End Get
        End Property

        Public Function DebitBalance _
            (ByVal AmountToDebit As Decimal) As Decimal
            m_AccountBalance -= AmountToDebit
            Return m_AccountBalance
        End Function

        Public Function CreditBalance _
            (ByVal AmountToCredit As Decimal) As Decimal
            m_AccountBalance += AmountToCredit
            Return m_AccountBalance
        End Function
    End Class

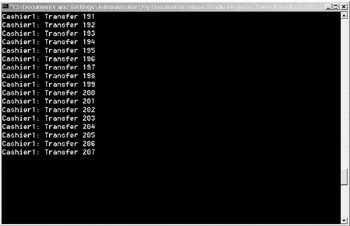

If you load the ThreadDeadlock solution into Visual Studio and run it using F5, you can see that it prints each transfer made by the two cashiers as it happens, along with the name of the cashier (thread) doing the transfer. At some unpredictable number of transfers, the application simply hangs, as shown in Figure 14-3. If you run the application many times, you should see that it hangs at a different point each time.

Figure 14-3: Process deadlock in the ThreadDeadlock application

To establish what's happening and why the program is hanging, press Ctrl+Break at the point where the application hangs. The debugger should break into the program at one of the four SyncLock statements. Select Debug > Windows > Threads to display the Threads window, and you can see the three managed threads within the program, as shown in Figure 14-4. The first thread is the main application thread, and the other two are the cashier threads.

Figure 14-4: Using the Threads window to investigate a process deadlock

The debugger should be paused on one of the SyncLock statements. The thread that was active when the program was suspended by the debugger is shown with a small yellow arrow next to it. If you right-click the nonactive cashier thread in the Threads window (the cashier thread without a yellow arrow next to it) and choose Switch to Thread from the context menu, you can see that the other thread is also paused at a SyncLock statement. If you switch between the two cashier threads in this manner, you can see that each of the threads is attempting to lock a different account. One cashier has a lock on the first account and is trying to lock the second account, and the other cashier has a lock on the second account and is trying to lock the first account. Hence the process is deadlocked and can't go any further, so the application hangs.

Notice that the program is randomly picking the order in which it locks the accounts. One way of avoiding this type of deadlock situation is always to lock your resources in exactly the same sequence within each thread. An identical sequence of synchronization locks ensures that multiple threads won't try to grab each other's resources in a nondeterministic order. This is usually easier said than done, because it's normally very hard to guarantee that code statements are executed in the same order in each thread. Depending upon thread entry, data, and timing conditions, each thread may follow a quite different execution path to its siblings. Even if you can guarantee that objects are locked in exactly the same order, you can still be caught by subtleties, as you'll see in a couple of paragraphs.

In this case, an alert developer would probably anticipate the deadlock because the synchronization is explicit, out in the open, and clearly happening in a random order. A more dangerous situation is when the synchronization locks that lead to a deadlock are implicit rather than explicit. In this example, the synchronization lock might be placed inside a Transfer method as shown in Listing 14-7, and you might not have easy access to that method's source code. If your threads are calling another component's methods and those methods perform their own synchronization, you may not even be aware that this locking is taking place and that a potential deadlock is hovering over your program.

    Public Sub Transfer(ByVal AccountToDebit As Account, _
                        ByVal AccountToCredit As Account, _
                        ByVal AmountToTransfer As Decimal)
        SyncLock (AccountToDebit)
            SyncLock (AccountToCredit)
                'Transfer happens here
            End SyncLock
        End SyncLock
    End Sub

And here's the subtlety that I talked about a couple of paragraphs ago. In Listing 14-7, the resources are always locked in the same order, so you might think that a deadlock can't occur. However, what happens when the first thread passes account A as the debit account and account B as the credit account, while the second thread does the opposite? If both threads enter this method simultaneously, and a context switch happens after the first lock, you might well see a deadlock.
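One possible way around this subtlety is to choose the lock order from some fixed property of the objects themselves rather than from the roles they play in the call. The sketch below assumes each account carries a unique integer identifier. The OrderedAccount class, its Id property, and its Adjust method are hypothetical and are not part of the ThreadDeadlock application.

```vbnet
Option Strict On
Imports System
Imports System.Threading

'Hypothetical variant of the Account class with an added identifier
Class OrderedAccount
    Private m_Id As Integer
    Private m_Balance As Decimal

    Public Sub New(ByVal Id As Integer, ByVal StartingBalance As Decimal)
        m_Id = Id
        m_Balance = StartingBalance
    End Sub

    Public ReadOnly Property Id() As Integer
        Get
            Return m_Id
        End Get
    End Property

    Public ReadOnly Property Balance() As Decimal
        Get
            Return m_Balance
        End Get
    End Property

    'Positive amounts credit the account, negative amounts debit it
    Public Sub Adjust(ByVal Amount As Decimal)
        m_Balance += Amount
    End Sub
End Class

Module OrderedTransfer
    Public Sub Transfer(ByVal AccountToDebit As OrderedAccount, _
                        ByVal AccountToCredit As OrderedAccount, _
                        ByVal AmountToTransfer As Decimal)
        'Pick the lock order from the identifiers, not from the roles,
        'so every thread locks the lower-numbered account first
        Dim FirstLock As OrderedAccount = AccountToDebit
        Dim SecondLock As OrderedAccount = AccountToCredit
        If AccountToCredit.Id < AccountToDebit.Id Then
            FirstLock = AccountToCredit
            SecondLock = AccountToDebit
        End If

        SyncLock FirstLock
            SyncLock SecondLock
                AccountToDebit.Adjust(-AmountToTransfer)
                AccountToCredit.Adjust(AmountToTransfer)
            End SyncLock
        End SyncLock
    End Sub
End Module
```

With this arrangement, a transfer from A to B and a simultaneous transfer from B to A both try to lock the same account first, so neither thread can end up holding the lock that the other needs. The approach relies on the identifiers being unique and never changing while transfers are running.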

To reduce the amount of time that your application is exposed to a potential deadlock, you should acquire your synchronization locks as late as possible and release them as early as possible. You should always try to avoid lengthy operations inside code that's locked, especially operations (such as I/O) that can block indefinitely.
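The late-acquire, early-release advice might look something like the following sketch, in which the lock covers only the in-memory update and the slower console output happens after the lock has been released. The ShortLockCounter class and its members are hypothetical names used only for illustration.

```vbnet
Option Strict On
Imports System

Class ShortLockCounter
    'Private lock object, so no outside code can lock on it
    Private m_Lock As New Object()
    Private m_Count As Integer = 0

    Public Sub IncrementAndReport()
        Dim Snapshot As Integer

        'Hold the lock only for the update and a local copy
        SyncLock m_Lock
            m_Count += 1
            Snapshot = m_Count
        End SyncLock

        'The potentially slow output runs outside the lock,
        'using the local copy rather than the shared field
        Console.WriteLine("Count is now {0}", Snapshot)
    End Sub
End Class
```

The trade-off is that the reported lines can appear out of numerical order when several threads call the method at once, because the output is no longer serialized by the lock.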

If your code throws an exception in a region of code protected by a SyncLock statement, the synchronization lock will always be released. The VB .NET compiler automatically places any synchronized region of code inside an implicit Try...Finally block, where the Finally block releases the synchronization lock. This has one interesting side effect: You can't use SyncLock in a method that also uses unstructured exception handling (On Error), because structured and unstructured exception handling can't be combined within the same method.
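To make the implicit Try...Finally block concrete, the sketch below shows a method written with SyncLock next to a hand-written version that uses the Monitor class directly. The second method is only an approximation of what the compiler produces, and the GuardedBalance class is a hypothetical example.

```vbnet
Option Strict On
Imports System
Imports System.Threading

Class GuardedBalance
    Private m_Lock As New Object()
    Private m_Balance As Decimal = 0

    'Written with SyncLock
    Public Sub CreditWithSyncLock(ByVal Amount As Decimal)
        SyncLock m_Lock
            m_Balance += Amount
        End SyncLock
    End Sub

    'Roughly equivalent code written by hand with Monitor
    Public Sub CreditWithMonitor(ByVal Amount As Decimal)
        Monitor.Enter(m_Lock)
        Try
            m_Balance += Amount
        Finally
            'Runs even if the Try block throws, so the lock is released
            Monitor.Exit(m_Lock)
        End Try
    End Sub
End Class
```

Both methods take the same lock on m_Lock, so they can safely be mixed by different threads.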

A final subtlety to remember is that threads can deadlock while waiting on events as well as while waiting to acquire resources locked by other threads. This is often overlooked, especially when a developer forgets that code in an event handler is executed by the thread that raises the event, not by the thread that owns the object within which the event handler resides.

Understanding Process Livelock

Process livelock is similar to process deadlock in its external appearance, in that both situations result in the process appearing to hang indefinitely. Internally, however, livelock is quite different from deadlock. A process is considered to be in a state of livelock when thread code is still executing, but two or more threads are in a never-ending cycle with each other and no useful work is being done. One example of this was mentioned earlier, a situation where one thread throws an error message, to which a second thread, not expecting this error, responds with an error message of its own. This can result in a continuous cycle of errors being thrown.

There's no easy way to prevent process livelock, although it's often possible to detect the livelock once it's happened. In the case of a livelock produced by a cycle of exception messages, Chapter 6 discusses performance counters that you can use from Performance Monitor (or from code) to detect an excessive number of exceptions being thrown within a certain time period. Detecting a livelock internally is much easier than detecting a deadlock because thread code can continue to execute even in the presence of a livelock. However, it requires some careful design to ensure that your detection code can adequately find and stop livelocks. The best solution is to try to anticipate potential livelock situations and then design your threads to prevent them or at least make them highly unlikely to happen.

Understanding Thread Starvation

To understand thread starvation, think about approaching a road tunnel in your car. The road tunnel has only a single lane, but it has to accommodate traffic traveling in both directions. The problem is that if the oncoming stream of cars is steady enough, you won't get a chance to go through the tunnel yourself. The analogy is that each car is a thread and the tunnel is a shared resource.

Just like your car can't get through the tunnel because of the oncoming cars, a thread can be starved of a resource by multiple other threads and be unable to execute in a timely fashion. The thread might not die of starvation; it just runs very slowly. This happens when the thread can get access to the resource, but only for a limited time before competing threads grab the resource back. Going back to the car-thread analogy, think of your car managing to enter the tunnel, but having to swerve into a turnout and stop every time it meets an oncoming car.

There are at least two common reasons for thread starvation. The first reason is when you assign a lower priority than normal to a specific thread. In this case, you've explicitly requested that the thread is less important than other threads with a normal priority, and you can solve the problem by juggling and tuning thread priorities as required. The second common reason for thread starvation is sometimes called the writer-reader problem, where a single writer thread tries to lock some data in order to update it but is repeatedly blocked by multiple reader threads with their own synchronization locks on the same data. This situation is common enough that the .NET Framework has constructs specifically designed to help you to avoid thread starvation when it occurs.

In the writer-reader situation, you need to make sure that a writer thread isn't prevented from doing its writing for lengthy periods of time by multiple competing reader threads. To do this, you can use the Framework's ReaderWriterLock synchronization class. This class enforces exclusive access to a region of code for any writer thread, but it allows nonexclusive access to a code region for any reader thread. The class also coordinates thread access so that once a writer thread has requested a lock, all subsequent lock requests by reader threads are queued until the writer lock has been granted. This prevents starvation of a writer thread through any inability to grab a shared resource away from multiple reader threads.

In effect, the ReaderWriterLock class switches between one writer thread and a group of reader threads. In any situation where the shared resource is being updated infrequently, this class has been designed to provide better throughput than a standard one-at-a-time lock such as SyncLock or Monitor.

Listing 14-8 demonstrates this by showing a class designed for reading and writing of some hypothetical shared data. The class is completely thread-safe in that multiple reader and writer threads can use it simultaneously. Its use of the ReaderWriterLock class also prevents starvation of any writer threads, even in the presence of a larger number of competing reader threads. It's worth examining the code closely because, although it looks simple, it has some subtleties that may not be immediately apparent from a first reading.

    Option Strict On
    Imports System.Threading

    Class ReadWrite
        'This class is thread-safe in that its methods can
        'be called safely from multiple threads simultaneously.
        Private m_Lock As New ReaderWriterLock()

        Public Sub ReadData(ByVal MillisecondsToWait As Integer)
            'This procedure reads information from some source.
            'The read lock prevents data from being written until
            'the thread is done reading, while allowing other threads
            'to call ReadData.
            m_Lock.AcquireReaderLock(MillisecondsToWait)

            'We have a lock, so now try to read the data
            Try
                ' Perform read operation here.
            Finally
                m_Lock.ReleaseReaderLock()
            End Try
        End Sub

        Public Sub WriteData(ByVal MillisecondsToWait As Integer)
            'This procedure writes information to some source.
            'The write lock prevents data from being read or
            'written until the thread has finished writing.
            m_Lock.AcquireWriterLock(MillisecondsToWait)

            'We have a lock, so now try to write the data
            Try
                ' Perform write/update operation here.
            Finally
                m_Lock.ReleaseWriterLock()
            End Try
        End Sub
    End Class

Acquiring a Reader Lock

When a thread enters the ReadData method shown in Listing 14-8, it attempts to acquire a reader lock using the AcquireReaderLock method of the ReaderWriterLock class. The AcquireReaderLock method blocks if a different thread has a writer lock or if any thread is waiting to acquire a writer lock. This latter point is one of the keys to avoiding thread starvation of a writer thread. Any block on the attempt to acquire a reader lock will last until either the reader lock is granted or the number of milliseconds specified by the MillisecondsToWait parameter of the ReadData method has expired. If the time-out of the reader lock attempt does expire, the AcquireReaderLock statement will throw an ApplicationException exception that can be caught by the code calling the ReadData method. Using a finite time-out in this manner means that a potential deadlock surfaces as an exception rather than as a thread that waits forever.
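A caller might handle the time-out along the lines of the following sketch. The ReadTimeoutSketch module is hypothetical, its ReadData method is a cut-down copy of the one in Listing 14-8, and the 500-millisecond time-out is an arbitrary value chosen for illustration.

```vbnet
Option Strict On
Imports System
Imports System.Threading

Module ReadTimeoutSketch
    Private m_Lock As New ReaderWriterLock()

    Sub Main()
        Try
            'Wait at most half a second for the reader lock
            ReadData(500)
            Console.WriteLine("Read completed.")
        Catch ex As ApplicationException
            'Thrown by AcquireReaderLock when the time-out expires
            Console.WriteLine("Timed out waiting for the reader lock.")
        End Try
    End Sub

    Private Sub ReadData(ByVal MillisecondsToWait As Integer)
        m_Lock.AcquireReaderLock(MillisecondsToWait)
        Try
            'Perform read operation here.
        Finally
            m_Lock.ReleaseReaderLock()
        End Try
    End Sub
End Module
```

In this single-threaded sketch nothing else holds the lock, so the read completes immediately. Be aware that the Catch block would also trap any ApplicationException thrown by the read operation itself, so a real caller may want to distinguish the two cases.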

If the reader lock is granted, the ReadData method then attempts to read the data. This read attempt is placed within a Try...End Try block so that the reader lock will always be released, even if an error occurs. This is important because the number of reader locks acquired and reader locks released should always be matched.

There is one final subtlety of the AcquireReaderLock method of which you should be aware. If the current thread already has a writer lock and it then attempts to acquire a reader lock, the reader lock isn't granted. Instead, the writer lock count is incremented by one and the data read then proceeds as normal. This nuance has the advantage of preventing a thread from blocking on its own writer lock. The disadvantage is that instead of calling ReleaseReaderLock when the data read has completed, you need to call ReleaseWriterLock instead. This is because every writer lock has to be matched with a writer lock release, even if the writer lock has been acquired by using the AcquireReaderLock method. Fortunately, you can check if the current thread already has a writer lock by checking the IsWriterLockHeld property and then releasing the writer lock if necessary.
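Following the advice in the paragraph above, a version of ReadData that may be called by a thread already holding the writer lock could check IsWriterLockHeld before deciding which release method to call. This is a sketch only, and the ReadWriteChecked class name is hypothetical.

```vbnet
Option Strict On
Imports System
Imports System.Threading

Class ReadWriteChecked
    Private m_Lock As New ReaderWriterLock()

    Public Sub ReadData(ByVal MillisecondsToWait As Integer)
        'If this thread already holds the writer lock, this call
        'increments the writer lock count instead of granting a reader lock
        m_Lock.AcquireReaderLock(MillisecondsToWait)
        Try
            'Perform read operation here.
        Finally
            'Release whichever kind of lock was actually counted
            If m_Lock.IsWriterLockHeld Then
                m_Lock.ReleaseWriterLock()
            Else
                m_Lock.ReleaseReaderLock()
            End If
        End Try
    End Sub
End Class
```

The check keeps the acquire and release counts matched in both cases, whether ReadData is called on its own or from inside a region that already holds the writer lock.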

Acquiring a Writer Lock

When a thread enters the WriteData method shown in Listing 14-8, it attempts to acquire a writer lock using the AcquireWriterLock method of the ReaderWriterLock class. This method blocks if a different thread has a reader or writer lock. If the writer lock attempt is blocked, it's placed in a queue ahead of any reader locks that are blocked. This is the final key to avoiding thread starvation of a writer thread. Once again, a time-out period is specified on the attempt to acquire the writer lock to avoid any possibility of deadlocks. If the time-out expires, the AcquireWriterLock statement will throw an ApplicationException exception that can be caught by the code calling the WriteData method.

If the writer lock is granted, the WriteData method then tries to update the data. As with the ReadData method, this update is placed within a Try...End Try block so that the writer lock will always be released, even if an error occurs. This is important because the number of writer locks acquired and writer locks released should always be matched.

As with the AcquireReaderLock method, AcquireWriterLock also has a final subtlety that can trip you up. If a thread calls AcquireWriterLock while it also has a reader lock, it will block on that reader lock. If an infinite time-out is specified for the writer lock attempt, the thread will then deadlock with itself. To prevent this, you can use the IsReaderLockHeld property and then upgrade the reader lock to a writer lock using the UpgradeToWriterLock method if necessary. Remember, of course, that if you do upgrade a reader lock to a writer lock, you need to release the writer lock rather than the reader lock.
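The upgrade path can be sketched as follows. Note that this sketch balances the locks in a slightly different way from the one described above: it keeps the LockCookie returned by UpgradeToWriterLock and passes it to DowngradeFromWriterLock, which restores the original reader lock so that it can then be released as usual. The UpgradeSketch class and its method name are hypothetical.

```vbnet
Option Strict On
Imports System
Imports System.Threading

Class UpgradeSketch
    Private m_Lock As New ReaderWriterLock()

    Public Sub ReadThenWrite(ByVal MillisecondsToWait As Integer)
        m_Lock.AcquireReaderLock(MillisecondsToWait)
        Try
            'Perform read operation here and decide an update is needed.

            'Upgrade rather than calling AcquireWriterLock, which would
            'block on this thread's own reader lock
            Dim Cookie As LockCookie = _
                m_Lock.UpgradeToWriterLock(MillisecondsToWait)
            Try
                'Other writers may have run during the upgrade, so
                're-check the data before performing the update here.
            Finally
                'Restore the reader lock state saved in the cookie
                m_Lock.DowngradeFromWriterLock(Cookie)
            End Try
        Finally
            m_Lock.ReleaseReaderLock()
        End Try
    End Sub
End Class
```

If the upgrade times out, UpgradeToWriterLock throws an ApplicationException, and the outer Finally block still releases the reader lock that the thread continues to hold.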
