10.4 Estimating the Effects of Network Latency on Application Performance

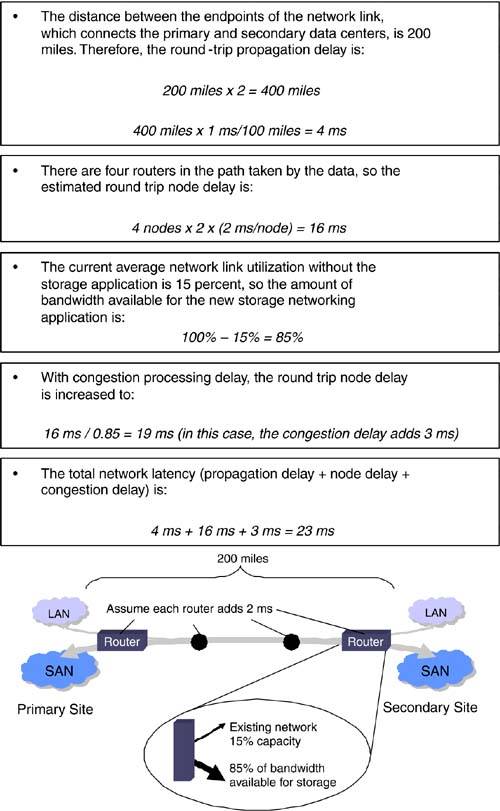

| Providing the storage network with sufficient bandwidth is a necessary, but not sufficient, step toward ensuring good performance of a storage networking application. If excessive network latency causes the application to spend a large amount of time waiting for responses from a distant data center, then the bandwidth may not be fully utilized, and performance will suffer.

As mentioned previously, network latency is composed of propagation delay, node delay, and congestion delay. The end systems themselves also add processing delay, which is covered later in this section. Good network design can minimize node delay and congestion delay, but a law of physics governs propagation delay: the speed of light. Still, there are ways to seemingly violate this physical law and decrease the overall amount of time that applications spend waiting to receive responses from remote sites.

The first is credit spoofing. As described previously, credit-spoofing switches can convince Fibre Channel switches or end systems to forward chunks of data much larger than the amount that could be stored in their own buffers. The multiprotocol switches continue to issue credits until their own buffers are full, while the originating Fibre Channel devices assume that the credits arrived from the remote site. Since the far-end switches also have large buffers, they can handle large amounts of data as it arrives across the network from the sending switch. As a result, fewer control messages need to be sent for each transaction, and less time is spent waiting for the flow control credits to travel end to end across the high-latency network. That, in turn, increases the portion of time spent sending actual data and boosts the performance of the application.

Another way to get around the performance limitations caused by network latency is to use parallel sessions or flows.
Virtually all storage networks operate with multiple sessions, so the aggregate performance can be improved simply by sending more data while the applications are waiting for the responses to earlier write operations. Of course, this has no effect on the performance of each individual session, but it can improve the overall performance substantially.

Some guidelines for estimating propagation delay and node delay are shown in Figure 10-7.

Figure 10-7. Estimating the propagation and node delays.

Network congestion delay is more difficult to estimate. In a well-designed network, one that has ample bandwidth for each application, it may be possible simply to ignore the effects of congestion, as it would be a relatively small portion of the overall network latency. Even in congested networks, the latency can be minimized for high-priority applications, such as storage networking, through the use of the traffic prioritization or bandwidth reservation facilities now available in some network equipment. Congestion queuing models are very complex, but a method for calculating a very rough approximation of the network congestion delay is shown in Figure 10-8.

Figure 10-8. Estimating the congestion queuing delay.

Figure 10-9 shows a numerical example of estimates for the total network latency.

Figure 10-9. Numerical example of total network latency estimate.

In simplest terms, the maximum rate at which write operations can be completed is the inverse of the network latency. In other words, if the data can be sent to the secondary array, written, and acknowledged in 23 ms, as in the numerical example, then in one second, about 43 operations can be completed for a single active session, as shown below:

$$\frac{1}{0.023\ \text{s}} \approx 43\ \text{write operations per second}$$

The rate of remote write operations can be increased linearly, up to a point, by increasing the number of active sessions. So, if four sessions were used in the example, the performance would increase to about 174 write operations per second:

$$4 \times \frac{1}{0.023\ \text{s}} \approx 174\ \text{write operations per second}$$

As mentioned, there is a point at which other factors may limit the performance of the remote write operations. For example, transactions with large block sizes may be limited by the amount of network bandwidth allocated. Or, in applications that have plenty of bandwidth, the performance of the serverless backup software used to mirror the disk arrays may have an upper limit of, say, 40 to 50 MBps. |
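The arithmetic in this section can be sketched in a few lines of Python. This is only a rough illustration under stated assumptions: the function name `max_write_ops_per_second` and its parameters are hypothetical, each session is assumed to have exactly one write outstanding at a time, and the optional bandwidth cap is modeled simply as allocated bandwidth divided by block size. The block size and bandwidth in the last call are made-up values, not figures from the text.

```python
def max_write_ops_per_second(latency_s, sessions=1,
                             block_bytes=None, bandwidth_bytes_per_s=None):
    """Rough upper bound on remote write operations per second.

    Hypothetical helper: assumes one outstanding write per session and
    ignores every limit other than latency and (optionally) bandwidth.
    """
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    if sessions < 1:
        raise ValueError("sessions must be at least 1")

    # Each session completes one write per latency interval, so the
    # aggregate rate grows linearly with the number of sessions.
    rate = sessions / latency_s

    # Optional cap: the link cannot carry more writes per second than
    # its allocated bandwidth divided by the block size.
    if block_bytes is not None and bandwidth_bytes_per_s is not None:
        rate = min(rate, bandwidth_bytes_per_s / block_bytes)

    return rate


# 23 ms total latency, single session (the numerical example in the text).
print(round(max_write_ops_per_second(0.023)))      # 43

# Same latency with four parallel sessions.
print(round(max_write_ops_per_second(0.023, 4)))   # 174

# Assumed values: 64 KiB blocks over 5 MB/s of allocated bandwidth.
# Here the bandwidth, not the latency, sets the limit.
print(round(max_write_ops_per_second(0.023, 4, 65_536, 5_000_000)))  # 76
```

The last call shows the "up to a point" caveat: once the latency-bound rate exceeds what the allocated bandwidth can carry, adding sessions no longer helps.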
