NAS Hardware as the I/O Manager

As we discussed in the previous chapter, NAS devices perform a single directive with a dual function: network file I/O processing and file storage. Put more concisely, a NAS device functions as an I/O manager. So, what is an I/O manager?

The I/O manager is a major component of computers and is made up of a complex set of hardware and software elements. Their combined operations enable the necessary functions to access, store, and manage data. Many of these components are discussed in Part II. A comprehensive view of the internals of I/O management is beyond the scope of this book; however, an overview of a theoretical I/O manager will help you understand the hardware operations of NAS.

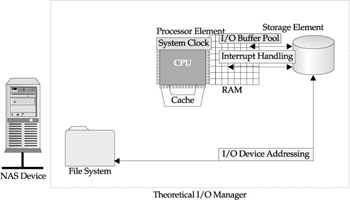

Figure 10-1 shows the typical components that make up a theoretical I/O manager.

Figure 10-1: The I/O manager components

The I/O subsystem is made up of hardware, the processor instruction sets, low-level micro-code, and software subroutines that communicate with, and are called by, user applications. The I/O manager is, in effect, the supervisor of all I/O within the system. Some of the important aspects of the I/O manager are the following:

- Hardware Interrupt Handler: Routines that handle priority interrupts for hardware devices and traps associated with error and correction operations.

- System and User Time: A system clock that provides timing for all system operations. I/O operations called by the user and executed in kernel mode take place in system time and are tracked by the clock handler routines.

- I/O Device Addressing: A scheme in which hardware devices are addressed by major and minor device numbers. The major device number is an index into a device table; the minor device number identifies the device unit. For disks, the minor number refers to the device number and the partition number. For tapes, the major number refers to the controller slot number, and the minor number refers to the drive number.

- I/O Buffer Pool: Disk access is optimized by retaining data blocks in memory and writing them back only if they have been modified. Certain data blocks are used very frequently and benefit most from buffering in RAM.

- Device I/O Drivers: Block I/O devices such as disk and tape units require instructions on how to operate within the processing environment. These instructions, called device drivers, consist of two parts. The first part provides kernel services to system calls, while the second part, the device interrupt handler, calls the device priority routines, manages and dispatches device errors, and resets devices and device status.

- Read/Write Routines: System calls that locate and invoke the appropriate device driver routine, which actually performs the read or write operation on the device.

- I/O Priority Interface: A system routine that handles requests for the transfer of data blocks. It determines where the requested block is: if the block is already in the buffer pool, it makes sure the block is handled properly there; otherwise, it initiates an operation to locate the disk address and the block's location within the device.

- Raw I/O on Block Devices: A system call and method for very fast access to block devices that bypasses the I/O buffer pool. Understanding these low-level I/O manager operations is important for understanding the architecture and usage of database implementations that rely on I/O manager functions.
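The device addressing and read/write entries above describe a dispatch step: the major number selects a driver from the device table, and the minor number tells that driver which unit (and, for disks, which partition) to operate on. The following Python sketch models that lookup. The table contents, the major numbers 3 and 9, the driver functions, and the 16-partitions-per-disk encoding of the minor number are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class DeviceNumber:
    major: int  # index into the device table (selects the driver)
    minor: int  # unit (and, for disks, partition) handled by that driver


# Hypothetical driver read routines. A real kernel driver would program the
# controller and wait for the device interrupt handler to signal completion.
def disk_read(minor: int, block: int) -> str:
    unit, partition = divmod(minor, 16)  # assumed: 16 partitions per disk
    return f"disk unit {unit} partition {partition} block {block}"


def tape_read(minor: int, block: int) -> str:
    return f"tape drive {minor} record {block}"


# Device table indexed by major number (illustrative numbers only).
DEVICE_TABLE: Dict[int, Callable[[int, int], str]] = {
    3: disk_read,
    9: tape_read,
}


def read_block(dev: DeviceNumber, block: int) -> str:
    """Read routine: find the driver by major number, hand it the minor number."""
    try:
        driver = DEVICE_TABLE[dev.major]
    except KeyError:
        raise OSError(f"no driver registered for major {dev.major}") from None
    return driver(dev.minor, block)


print(read_block(DeviceNumber(3, 17), 42))  # disk unit 1 partition 1 block 42
```

On a UNIX system, Python's `os.major()` and `os.minor()` split a real device number (for example, `os.stat(path).st_rdev` for a device file) into these two parts; how units and partitions are encoded in the minor number varies from system to system.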

NAS Hardware I/O Manager Components

If we view the NAS hardware as an I/O manager, its critical components are the processor element, the memory/cache elements, and the storage element. Connectivity to the outside world is certainly important and is covered in its own chapter (Chapter 12). However, the internal hardware of a NAS device determines how fast it can process I/O transactions, how much data it can store, the types of workloads it can handle, and how it can recover from failing components.

The NAS Processor Element

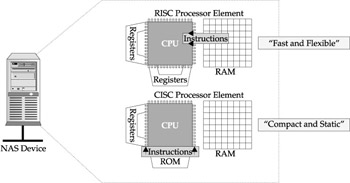

The NAS processor component is critical in providing the power required to handle specific types of workloads. Although most vendors have moved to a CISC model (for example, using Intel chips to keep costs down), many of the leading vendors offer RISC-based processor components. The difference, although seemingly slight, can be substantial when evaluating the performance required for complex workloads.

RISC (Reduced Instruction Set Computing) versus CISC (Complex Instruction Set Computing) continues to be an ongoing discussion among chip designers and system vendors. The differences stem from different architectural designs for how the CPU processes its instructions. They are depicted in Figure 10-2, which shows that RISC uses simple software primitives, or instructions, which are stored in memory as regular programs.

Figure 10-2: RISC versus CISC

CISC provides its instruction set using micro-code in the processor ROM (read-only memory). RISC processors have fewer and simpler instructions and consequently require less processing logic to interpret (that is, execute) them. This gives RISC-based processors a high execution rate: although a task may require more instructions, each one completes quickly enough that the gross processing rate, and therefore overall throughput, is higher. Another important characteristic of RISC-based processor architecture is its large number of registers compared to CISC processors. Keeping more values in registers, close to the ALU, means faster gross processing and higher processor throughput.
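The claim that more, simpler instructions can still yield higher throughput follows from the standard processor performance equation: CPU time equals instruction count times average cycles per instruction (CPI), divided by the clock rate. The Python sketch below plugs in hypothetical figures, not measurements of any real processor, to show how a lower CPI can outweigh a larger instruction count.

```python
def cpu_time_seconds(instruction_count: float, cycles_per_instruction: float,
                     clock_hz: float) -> float:
    """CPU time = instruction count x cycles per instruction / clock rate."""
    return instruction_count * cycles_per_instruction / clock_hz


# Hypothetical workload: the same file-serving task built for two designs at
# the same clock rate. The RISC build executes 50% more (simpler) instructions
# but averages far fewer cycles per instruction.
cisc = cpu_time_seconds(instruction_count=1.0e9, cycles_per_instruction=4.0,
                        clock_hz=1.0e9)
risc = cpu_time_seconds(instruction_count=1.5e9, cycles_per_instruction=1.5,
                        clock_hz=1.0e9)
print(f"CISC: {cisc:.2f} s, RISC: {risc:.2f} s")  # CISC: 4.00 s, RISC: 2.25 s
```

If the CPI advantage shrinks, or the instruction count grows enough, the result reverses, which is one reason the fit between processor and workload matters more than the label on the chip.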

Given these architectural differences, the capability to provide high system performance with repetitive instructions against a focused workload puts RISC-based systems on top. However, this is not achieved without cost. RISC-based systems are more costly than CISC-based systems, particularly those built on Intel commodity processors. Therefore, a balance must be struck between the performance sophistication of the NAS device and the processing complexity of the I/O workload.

This matters because decisions made on cost alone can result in non-scalable CISC-based NAS devices, or devices of only short-term usefulness in supporting a complex I/O workload. On the other hand, a purchase based on performance alone may lead to overallocated processor capacity, when the same workload could be handled just as effectively by CISC-based systems deployed in multiples (for example, several NAS devices).

The processor choice also matters because it determines the type of micro-kernel operating system the vendor supplies. All RISC-based systems are UNIX-based micro-kernel systems, while CISC-based systems are a combination of UNIX and Windows micro-kernel systems.

When evaluating NAS devices, you should at least know the type of processor architecture being used. When reading technical specification sheets, look beyond disk capacities and storage RAID capabilities; it's important to understand how the processor's instruction set architecture scales for the workload being supported. You will find that CISC-based devices use Intel-based processors, while the most recognizable RISC-based processors are offered by Sun, HP, and IBM.

The NAS Memory Element

RAM is an important factor in processing I/O requests, in conjunction with the available cache and cache management. Caching has become a standard enhancement to I/O processing. Cache management is a highly complex technology and depends on the workload and on specific vendor implementations. However, most caching solutions are external to the processor and use cache functionality within the storage array controllers. Because NAS solutions are bundled with storage arrays, caching is integrated into the NAS device using both RAM and storage controller cache. Caching within the NAS hardware remains vendor-specific and is offered through configuration options. Note that some vendors include an NVRAM (Non-Volatile Random Access Memory) option that provides some performance enhancement and, more importantly, a significant safeguard for the integrity of writes within the workload.
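To see why NVRAM protects writes, consider a toy model in which a write is acknowledged once it has been logged in NVRAM, before it reaches disk. This is a minimal sketch under stated assumptions: the class, its methods, and the log-then-flush scheme are hypothetical simplifications, not any vendor's actual design.

```python
from typing import Dict, List, Tuple


class NvramWriteStage:
    """Toy model of write staging through NVRAM (all names hypothetical).

    A write is acknowledged as soon as it is logged in NVRAM. If power fails,
    the volatile RAM cache is lost, but the NVRAM log survives and is replayed
    to disk, so acknowledged writes are not lost.
    """

    def __init__(self) -> None:
        self.disk: Dict[int, bytes] = {}
        self.ram_cache: Dict[int, bytes] = {}          # volatile
        self.nvram_log: List[Tuple[int, bytes]] = []   # survives power loss

    def write(self, block: int, data: bytes) -> str:
        self.nvram_log.append((block, data))  # fast: no disk access yet
        self.ram_cache[block] = data
        return "ack"

    def flush(self) -> None:
        """Apply logged writes to disk in order, then clear the log."""
        for block, data in self.nvram_log:
            self.disk[block] = data
        self.nvram_log.clear()

    def power_failure(self) -> None:
        self.ram_cache.clear()  # RAM contents are lost; the NVRAM log is not

    def recover(self) -> None:
        self.flush()  # replay the surviving log


stage = NvramWriteStage()
print(stage.write(7, b"payroll"))  # ack, before the block reaches disk
stage.power_failure()
stage.recover()
print(stage.disk[7])               # b'payroll'
```

The performance gain comes from acknowledging the client without waiting on the disk; the integrity safeguard comes from the log surviving a power loss.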

The functions of the I/O hardware manager enable buffer management, a key element in the effective handling of I/O requests. As requests are passed from the TCP stack to the application (in this case, the software components of the I/O manager), they are queued for processing through buffer management. Because disk elements are slower than RAM, the RAM I/O buffers are important to the quick and orderly processing of requests that may arrive faster than the disks can handle them. Therefore, the more buffer capacity you have, the more requests can be processed in a timely fashion.
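The effect of buffer capacity on a burst of requests can be shown with a small tick-by-tick simulation. In this Python sketch, requests are queued in a fixed number of RAM buffer slots and drained at a fixed disk rate; requests arriving when all slots are full are rejected and would have to be retried. The arrival pattern, the disk rate, and the slot counts are hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


def simulate(arrivals_per_tick: List[int], disk_per_tick: int,
             buffer_slots: int) -> Dict[str, int]:
    """Queue incoming requests in RAM buffers and drain them at disk speed."""
    queue: Deque[int] = deque()  # each entry records its arrival tick
    served = rejected = 0
    for tick, arriving in enumerate(arrivals_per_tick):
        for _ in range(arriving):
            if len(queue) < buffer_slots:
                queue.append(tick)
            else:
                rejected += 1  # no free buffer: the client must retry
        for _ in range(min(disk_per_tick, len(queue))):
            queue.popleft()    # the disk completes one request
            served += 1
    return {"served": served, "rejected": rejected, "still_queued": len(queue)}


# Hypothetical burst: 20 requests in two ticks, then idle time, with a disk
# that completes 4 requests per tick.
burst = [10, 10, 0, 0, 0, 0]
for slots in (4, 8, 16):
    print(slots, simulate(burst, disk_per_tick=4, buffer_slots=slots))
```

With 4 slots, 12 of the 20 requests are rejected; with 16 slots, none are, because the buffers absorb the burst and the disk catches up during the idle ticks.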

For data operations inside the processor element, system cache can provide an additional level of efficiency. Our discussion of RISC versus CISC showed the efficiency of having software instructions loaded as close to the CPU as possible. Caching both RISC-based instructions and user data blocks can provide additional efficiency and speed. Caching can also prove effective in CISC architectures, given the cache usage for user data blocks and the repetitive nature of the I/O operations performed by the processor element within NAS devices.

A popular solution to the elusive I/O bottleneck problem is to add memory. Although this is an overly simple solution to a complex problem, in the case of NAS simple file processing (Sfp) it can generally be effective. The caveats (refer to Chapter 17) are many and lie in the read/write nature of the workload. Because many NAS implementations serve read-only, high-volume I/O transactions, RAM is an important part of increasing capacity for I/O buffer management.
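The I/O buffer pool described earlier keeps recently used blocks in RAM and writes blocks back to disk only if they were modified. The Python sketch below models such a pool with a least-recently-used (LRU) replacement policy; LRU is an assumption here, since real NAS caching policies are vendor-specific. Running a hypothetical read-only trace that cycles over six blocks shows one reason the benefit of added memory depends on the workload: until the working set fits, a cyclic trace gets no hits at all under LRU.

```python
from collections import OrderedDict
from typing import Dict, Iterable


class BufferPool:
    """LRU buffer pool: keep recently used blocks in RAM, write back only dirty ones."""

    def __init__(self, capacity: int, disk: Dict[int, bytes]) -> None:
        self.capacity = capacity
        self.disk = disk
        self.blocks = OrderedDict()  # block number -> [data, dirty flag]
        self.hits = self.misses = self.disk_writes = 0

    def _evict_if_full(self) -> None:
        if len(self.blocks) >= self.capacity:
            block, (data, dirty) = self.blocks.popitem(last=False)  # LRU entry
            if dirty:  # unmodified blocks are simply dropped
                self.disk[block] = data
                self.disk_writes += 1

    def read(self, block: int) -> bytes:
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)  # mark as most recently used
            return self.blocks[block][0]
        self.misses += 1
        self._evict_if_full()
        data = self.disk.get(block, b"")
        self.blocks[block] = [data, False]
        return data

    def write(self, block: int, data: bytes) -> None:
        if block in self.blocks:
            self.blocks.move_to_end(block)
        else:
            self._evict_if_full()
        self.blocks[block] = [data, True]  # dirty until written back


def hit_ratio(capacity: int, trace: Iterable[int]) -> float:
    pool = BufferPool(capacity, disk={})
    for block in trace:
        pool.read(block)
    return pool.hits / (pool.hits + pool.misses)


# Hypothetical read-only trace cycling over a working set of 6 blocks.
trace = [0, 1, 2, 3, 4, 5] * 10
for capacity in (4, 6, 8):
    print(capacity, hit_ratio(capacity, trace))
```

With 4 buffers every read misses; with 6 or more, only the first pass misses and the hit ratio rises to 0.9.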

RAM also affects the input side of NAS devices (for example, TCP/IP processing). Because requests may arrive faster than the processor element can execute them, the capability to queue these requests makes processing of the TCP stack more efficient. The same is true on the outbound side, where requests for data are queued and wait their turn to be transmitted to the network.

Low-end, appliance-level NAS devices are configured with static RAM/cache options. Sizes of 256MB are common and are balanced to the small workloads these devices are configured for. Sizes and options increase dramatically in the mid-range and high-end models, with RAM options reaching 1 to 2GB. The configuration of system cache is highly dependent on the vendor and its proprietary implementation of the hardware processor elements.

The NAS Storage Element

Because NAS is a storage device, one of its most important elements is the storage system. As stated earlier, NAS comes in a diverse set of storage configurations. These range from direct attached disk configurations with minimal RAID and fault resiliency support to disk subsystems complete with FC connectivity and a full complement of RAID options. Again, the choice of NAS type depends on your needs and the workloads being supported.

At the appliance level there are two types of entry-level configurations. The first is the minimal Plug and Play device that provides anywhere from 100 to 300GB of raw storage capacity. These devices provide a PC-like storage system connected to an Ethernet network, using IDE-connected devices with no RAID support. These configurations provide a single path to the disks, typically 2 to 4 large-capacity drives. As shown in Figure 10-3, such a system supports workgroup or departmental workloads through Windows-based network file systems. However, these limited devices also do well with applications that support unstructured data (for example, image and video) within a departmental or engineering workstation group. Given their price/performance metrics, acquiring and using them within an enterprise can be very productive.

Figure 10-3: NAS appliance-level storage configurations

At the other end of the appliance spectrum are the entry-level enterprise and mid-range NAS devices, as shown in Figure 10-4. These devices are also Plug and Play. However, their capacities take on proportions that support a larger network of users and more workload types. Storage system capacities range from 300GB to 1 terabyte, with RAID functionality at levels 0, 1, and 5. At these levels, the storage system is SCSI-based, with options for the type of SCSI bus configuration required, and SCSI controller functionality becomes increasingly sophisticated. Although most devices use a single path into the SCSI controller, the SCSI bus architecture allows multiple LUNs to connect the disk arrays.
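Because raw and usable capacity differ by RAID level, it helps to work out what a given drive count actually yields. The Python sketch below uses the textbook capacity rules for levels 0, 1, and 5 with identical disks; the six 73GB drives in the example are a hypothetical configuration, and real arrays also reserve space for hot spares and metadata, which this sketch ignores.

```python
MIN_DISKS = {0: 2, 1: 2, 5: 3}


def usable_capacity_gb(level: int, disks: int, disk_gb: float) -> float:
    """Usable capacity of a single RAID set built from identical disks."""
    if level not in MIN_DISKS:
        raise ValueError(f"unsupported RAID level {level}")
    if disks < MIN_DISKS[level]:
        raise ValueError(f"RAID {level} needs at least {MIN_DISKS[level]} disks")
    if level == 0:
        return disks * disk_gb        # striping only: all raw capacity, no redundancy
    if level == 1:
        if disks % 2:
            raise ValueError("this sketch assumes RAID 1 uses mirrored pairs")
        return disks * disk_gb / 2    # every block is stored twice
    return (disks - 1) * disk_gb      # RAID 5: one disk's worth of distributed parity


# Hypothetical mid-range configuration: six 73GB drives (438GB raw).
for level in (0, 1, 5):
    print(f"RAID {level}: {usable_capacity_gb(level, 6, 73):.0f}GB usable")
```

The same 438GB of raw disk yields 438GB, 219GB, or 365GB of usable space, trading capacity for protection against a drive failure.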

Figure 10-4: NAS mid-range and enterprise storage configurations

Enterprise NAS devices extend the previous configurations in storage capacity, SCSI controller functionality, SCSI device options, and extensibility. Capacities extend into the terabyte range, with multiple path connections into the disk arrays. The number and density of disks depend on the array configuration; however, the top NAS configurations can support over 1,000 disk drives within a single device. At this level, RAID functionality becomes commonplace, with pre-bundled configurations for levels 1 and 5, depending on your workloads.

Across the mid-range and enterprise NAS offerings, three hardware factors are worth considering: fault resilience, FC integration, and tape connectivity. The NAS architecture makes these devices self-contained functional units that operate unattended. Therefore, the capability to provide a level of hardware fault tolerance, particularly given the density and operation of the storage system, has become a characteristic of the NAS solution. While Storage Area Networks provide another pillar of the storage networking set of solutions, the requirement for NAS to interoperate with SANs is increasing as related user data becomes interspersed among these devices. Finally, one of the key drawbacks to keeping critical user data on NAS was its inability to participate in an enterprise (or departmental) backup and recovery solution. This deficiency has slowly abated through increased offerings of direct access to tape systems bundled with NAS devices.

The ability to recover from a disk hardware failure has become sophisticated and standard among enterprise storage systems, and these features are now integrated into higher mid-range and enterprise NAS devices. Look for full power supply and fan redundancy at the high end, and for elements of both in devices that are evolving up from appliance-level solutions. This can be beneficial, as environmental factors differ greatly between closet installations and crowded data center raised-floor implementations. Because one failure can lead to another, this is an important place to consider fault-tolerant elements: an inoperative power supply will bring the entire disk array down and can damage disk drives in the process, while an inoperative or poorly operating fan will allow drives to overheat, with potentially disastrous effects.

Another important fault-tolerant element is the capability to recover from a single inoperative drive within one of the disk arrays. NAS devices at the mid-range and enterprise levels offer hot-swappable drive units. Used in conjunction with RAID functionality (either software- or hardware-based), hot-swappable drives allow the device to be repaired without the data becoming unavailable. Performance during a disk crash recovery period, however, is another consideration and depends on the workload and I/O content (additional discussion of this condition can be found in Part VI).
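RAID 5 can rebuild a single failed drive because each stripe stores the XOR of its data blocks as parity, and XOR-ing all surviving members of a stripe reproduces the missing one. The Python sketch below shows this for one stripe; it ignores the rotation of parity across drives that real RAID 5 uses, and the block contents are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks: List[bytes]) -> List[bytes]:
    """One RAID 5 stripe: the data blocks followed by their XOR parity block."""
    return data_blocks + [xor_blocks(data_blocks)]


def rebuild(stripe: List[Optional[bytes]]) -> bytes:
    """Recover the single missing member (None) by XOR-ing the survivors."""
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) != 1:
        raise ValueError("RAID 5 can rebuild exactly one failed member per stripe")
    return xor_blocks([blk for blk in stripe if blk is not None])


stripe = make_stripe([b"NAS1", b"DISK", b"DATA"])
failed: List[Optional[bytes]] = list(stripe)
failed[1] = None           # simulate the drive holding b"DISK" failing
print(rebuild(failed))     # b'DISK'
```

Rebuilding every stripe means reading all surviving drives, which is one reason performance suffers during the recovery period while the array continues to serve requests.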

As with any recovery strategy, the importance of backing up the data on the storage system cannot be overstated. As stated previously, this is one area that has been lacking in the configuration and operation of NAS devices: how to back up the data within existing enterprise backup/recovery operations. Look for NAS devices that provide attachments to tape units. These are offered as SCSI bus attachments, where enterprise-level tape devices can be attached directly to the NAS device. Although this is a step in the right direction, there are two important considerations before launching into that area. The first is the implementation of yet another tape library segment: copying data from the NAS device to an attached tape unit requires some level of supervision, ownership, and operation. The second is that the software required to provide the backup, and more importantly the recovery operation, has to run under the proprietary micro-kernel operating system of the NAS device. So, be prepared to support new tapes and new software to leverage this type of backup and recovery. (Additional discussion of this condition can be found in Part VI.)

User data is now stored on disks directly attached to servers, on Storage Area Networks, and on NAS devices. The applications using the storage infrastructure will largely ignore the specifics of the device infrastructure being used, which means more interplay among data stored across these different architectures. This condition is accelerating the need for NAS and SAN to communicate and provide some level of access to each other's data. Look for high-end NAS devices to provide enhancements for participating in an FC-based storage area network. This is offered as a host bus adapter in the NAS device, with the appropriate software drivers running within the NAS OS. In this configuration, the NAS device can service file I/O requests and perform block-level I/O into the SAN. (Additional discussion of FC and network connectivity can be found in Chapter 12.)
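One way to picture a NAS device that services file I/O while performing block-level I/O into a SAN: its file system translates a byte range within a file into block addresses on a LUN. The Python sketch below performs that translation through a hypothetical extent map; the 4096-byte block size, the `Extent` layout, and the LBA values are assumptions for illustration, not how any particular NAS OS stores its metadata.

```python
from typing import List, NamedTuple, Tuple

BLOCK_SIZE = 4096  # assumed file system block size in bytes


class Extent(NamedTuple):
    file_block: int  # first file-relative block covered by this extent
    lba: int         # first logical block address on the SAN LUN
    length: int      # number of contiguous blocks


def file_read_to_block_io(extents: List[Extent], offset: int,
                          size: int) -> List[Tuple[int, int]]:
    """Translate a file-level read (byte offset, size) into (lba, block_count) requests."""
    if size <= 0:
        return []
    first = offset // BLOCK_SIZE
    last = (offset + size - 1) // BLOCK_SIZE
    requests = []
    for ext in extents:
        start = max(first, ext.file_block)
        end = min(last, ext.file_block + ext.length - 1)
        if start <= end:  # this extent overlaps the requested blocks
            requests.append((ext.lba + (start - ext.file_block), end - start + 1))
    return requests


# Hypothetical file stored in two non-contiguous extents on the LUN.
layout = [Extent(file_block=0, lba=1000, length=4),
          Extent(file_block=4, lba=5000, length=4)]
print(file_read_to_block_io(layout, offset=12_288, size=8_192))  # [(1003, 1), (5000, 1)]
```

A single 8KB file read that straddles an extent boundary becomes two separate block requests to the SAN.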

The sophistication and operational usage of these integrated I/O activities are still evolving. Data integrity, security, and protocol considerations (to name a few) are being developed as of this writing. In Chapter 17, we'll discuss some of the factors driving these requirements and various considerations to note when implementing applications within these configurations.
