Beginner Topic: Thread Basics

A thread is a sequence of instructions that is executing. A program that enables more than one sequence to execute concurrently is multithreaded. For example, to import a large file while still allowing the user to click Cancel, a developer creates an additional thread to perform the import. Because the import runs on a different thread, the program can respond to the cancel request instead of freezing the user interface until the import completes.

An operating system simulates multiple threads via a mechanism known as time slicing. Even with multiple processors, there is generally a demand for more threads than there are processors, so time slicing still occurs. Time slicing is a mechanism whereby the operating system switches execution from one thread (sequence of instructions) to the next so quickly that the threads appear to execute simultaneously.

The effect is similar to that of a fiber-optic telephone line, in which the line represents the processor and each conversation represents a thread. A (single-mode) fiber-optic line can send only one signal at a time, but many people can hold simultaneous conversations over it. The channel switches between conversations so quickly that each conversation appears uninterrupted. Similarly, each thread of a multithreaded process appears to run continuously, in parallel with the other threads.

Because a thread is often waiting for some event, such as the completion of an I/O operation, switching to a different thread makes execution more efficient: the processor does useful work instead of idling until the operation completes. However, switching from one thread to the next does create some overhead.
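The import scenario from the start of this topic can be sketched in C# as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: `ImportFile`, the chunk count, and the sleep durations are hypothetical stand-ins for real file-reading work, and cancellation is signaled with the framework's `CancellationTokenSource`, which is one common option among several.

```csharp
using System;
using System.Threading;

// Signals cancellation from the "UI" (main) thread to the worker thread.
var cts = new CancellationTokenSource();
int chunksImported = 0;

// Hypothetical import: processes up to 1,000 chunks, checking for
// cancellation between chunks so it can stop early.
void ImportFile(CancellationToken token)
{
    for (int chunk = 0; chunk < 1000; chunk++)
    {
        if (token.IsCancellationRequested)
        {
            Console.WriteLine($"Import canceled after {chunksImported} chunks.");
            return;
        }
        Thread.Sleep(10);   // stands in for reading and parsing one chunk
        chunksImported++;
    }
    Console.WriteLine("Import completed.");
}

// Run the import on an additional thread so the main thread stays responsive.
var importThread = new Thread(() => ImportFile(cts.Token));
importThread.Start();

// Simulate the user clicking Cancel shortly after the import begins.
Thread.Sleep(100);
cts.Cancel();

// Wait for the worker to observe the cancellation request and exit.
importThread.Join();
```

Because the worker checks the token only between chunks, cancellation here is cooperative: the import stops at its next check rather than instantly.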
If there are too many threads, the switching overhead overwhelms the appearance that multiple threads are executing, and the system instead slows to a crawl; time is spent switching from one thread to another rather than accomplishing the work of each thread.

Even readers new to programming will have heard the term multithreading, most likely in a conversation about its complexity. In designing both the C# language and the framework, considerable time was spent simplifying the programming API that surrounds multithreaded programming. However, considerable complexity remains, not so much in writing a program that has multiple threads, but in doing so in a manner that maintains atomicity, avoids deadlocks, and does not introduce execution uncertainty such as race conditions.

Atomicity

Consider code that transfers money from a bank account. First, the code verifies whether there are sufficient funds; if there are, the transfer occurs. If, after the funds are checked, execution switches to another thread that withdraws them, an invalid transfer may occur when execution returns to the initial thread. Controlling access so that only one thread can access the account at a time fixes the problem and makes the transfer atomic.

An atomic operation is one that either completes all of its steps fully or restores the system to its original state. A bank transfer should be atomic because it involves two steps: checking the funds and then moving them. While those steps are being performed, atomicity can be lost if another thread modifies the account before the transfer is complete.

Identifying and implementing atomicity is one of the primary complexities of multithreaded programming. The complexity increases because most C# statements are not necessarily atomic. _Count++, for example, is a simple statement in C#, but it translates to multiple instructions for the processor:

1. The processor reads the data in _Count.
2. The processor calculates the new value.
3. _Count is assigned the new value (even this may not be atomic).
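To make the three steps above concrete, the following sketch writes out the read, calculate, and assign steps of an increment explicitly and compares them with `Interlocked.Increment`, which performs the whole read-modify-write as one atomic operation. The counter names `unsafeCount` and `safeCount`, the two threads, and the iteration count are illustrative assumptions.

```csharp
using System;
using System.Threading;

const int Iterations = 1_000_000;
int unsafeCount = 0;
int safeCount = 0;

// Each thread increments both counters Iterations times.
void Work()
{
    for (int i = 0; i < Iterations; i++)
    {
        // unsafeCount++ written out as its separate steps:
        int temp = unsafeCount;   // 1. read the current value
        temp = temp + 1;          // 2. calculate the new value
        unsafeCount = temp;       // 3. assign the new value
        // Another thread can run between steps 1 and 3, so increments can be lost.

        // Interlocked.Increment performs the read-modify-write atomically.
        Interlocked.Increment(ref safeCount);
    }
}

var t1 = new Thread(Work);
var t2 = new Thread(Work);
t1.Start();
t2.Start();
t1.Join();
t2.Join();

Console.WriteLine($"Expected:       {2 * Iterations}");
Console.WriteLine($"Unsynchronized: {unsafeCount}");  // may be lower than expected; varies per run
Console.WriteLine($"Interlocked:    {safeCount}");    // always equals the expected total
```

The unsynchronized total typically changes from run to run, and on some machines or runs it may happen to come out correct, which is precisely the kind of execution uncertainty this topic warns about.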

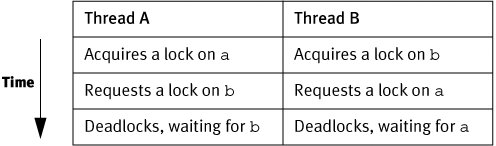

After the data is read, but before the new value is assigned, a different thread may modify the original value (perhaps also checking the value before modifying it). This creates a race condition, because the value in _Count has, from at least one thread's perspective, changed unexpectedly.

Deadlock

To avoid such race conditions, languages support the ability to restrict blocks of code to a specified number of threads, generally one. However, if the order in which threads acquire locks varies, a deadlock can occur: the threads freeze, each waiting for the other to release its lock. For example, suppose thread A acquires lock a and thread B acquires lock b; thread A then requests lock b while thread B requests lock a. At this point, each thread is waiting on the other before proceeding, so each thread is blocked, leading to an overall deadlock in the execution of that code.

Uncertainty

The problem with code that is not atomic or that causes deadlocks is that its behavior depends on the order in which processor instructions across multiple threads occur. This dependency introduces uncertainty into program execution: the order in which one instruction executes relative to an instruction in a different thread is unknown. Often the code will appear to behave uniformly, but occasionally it won't, and this is the crux of multithreaded programming. Because such race conditions are difficult to reproduce in the laboratory, much of the quality assurance of multithreaded code depends on long-running stress tests and manual code analysis and reviews.
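The bank-transfer, deadlock, and stress-testing discussions can be tied together in one sketch. It assumes two hypothetical accounts held in arrays, each guarded by its own lock object, and applies one common remedy for lock-order deadlocks: every thread acquires locks in the same global order (here, by account index). The loop at the end is only a tiny stand-in for the long-running stress tests mentioned above, not a substitute for them.

```csharp
using System;
using System.Linq;
using System.Threading;

// Hypothetical accounts: balances[i] is guarded by locks[i].
decimal[] balances = { 1_000m, 1_000m };
object[] locks = { new object(), new object() };

// Moves money only if funds are sufficient. The check and the update both
// happen while both locks are held, so no other transfer can interleave.
bool Transfer(int from, int to, decimal amount)
{
    // Always acquire the lower-numbered lock first. Because every thread uses
    // the same order, no thread can hold one lock while waiting on a thread
    // that acquired the pair in reverse order.
    int first = Math.Min(from, to);
    int second = Math.Max(from, to);
    lock (locks[first])
    lock (locks[second])
    {
        if (balances[from] < amount)
        {
            return false;   // insufficient funds: nothing changes
        }
        balances[from] -= amount;
        balances[to] += amount;
        return true;
    }
}

// Repeatedly transfers a fixed amount from one account to the other.
void Run(int from, int to)
{
    for (int i = 0; i < 100_000; i++)
    {
        Transfer(from, to, 7m);
    }
}

// Two threads transfer in opposite directions, the pattern that deadlocks
// when locks are acquired in inconsistent orders.
var a = new Thread(() => Run(0, 1));
var b = new Thread(() => Run(1, 0));
a.Start();
b.Start();
a.Join();
b.Join();

decimal total = balances.Sum();
Console.WriteLine($"Balances: {balances[0]}, {balances[1]}; total: {total}");
```

If `Transfer` instead locked `locks[from]` before `locks[to]`, the two threads could each hold one lock while waiting for the other, which is the thread A / thread B scenario described under Deadlock.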