COMMON COUNTERMEASURES

Although specific countermeasures were discussed with each attack we introduced, there needs to be a broader discussion around why these problems occur in the first place and what to do about them. As the mantra of IT goes, a solid approach to any problem includes people, process, and technology dimensions. This section will cover some of the emerging best practices in secure software development, organized around those three vectors.

People: Changing the Culture

One thing we've learned over years of consulting with, being employed by, building, and running software development organizations is that security will never improve until it is integrated into the culture of software development itself. We've seen many different organizational cultures at product development companies. Unfortunately, thanks to today's highly competitive global markets, most organizations do not prioritize security appropriately, dooming product security initiatives to failure time and again. This is somewhat ironic, because security is something customers want and need. Here are some tips for getting the ball rolling in the right direction.

Talk Softly

First of all, don't underestimate the potential impact of trying to alter the product development process at any organization. This process is the lifeblood of the organization, and haphazard approaches will likely fail miserably. Learn the current process as well as possible, formulate a well-thought-out plan (we'll outline an example momentarily), and align strong-willed and smart people behind you. Talk softly and, well, read the next section.

Carry a Big Stick

Yes, sometimes you will need to tread heavily. Remember that a big stick is only effective if the senior execs gave you the stick in the first place. With little or no executive support and incentive, you are likely doomed to fail. More rarely, we have observed organizations that were managed "bottom-up," where the key to success is gaining grassroots support from a critical mass of influential development teams. You need to be sensitive to the unique organizational infrastructure within which your initiative will exist, and leverage it accordingly.

Security Improves Quality and Efficiency

One of the more successful approaches we've seen is to exploit the perpetual tension between quality and efficiency by playing both sides against the middle: Link security tightly with product quality, and continuously repeat the mantra that a well-oiled security development process increases operational efficiency (since there will likely be fewer nasty surprises approaching release and shortly thereafter). Remember, security is really all about quality. This approach tends to be the most pleasing across the ranks of management and staff. Simply pushing security for security's sake is likely to be overshadowed by the constant pressure to ship product sooner, and for less overall cost. By integrating security into the existing culture, you position it for longer-term success across subsequent product releases. We think the Security Development Lifecycle process (a term we borrowed from Microsoft and introduce later in the chapter) substantially achieves this goal. You can read more about Microsoft's SDL in a paper written by Michael Howard and Steve Lipner, and presented by Mr. Lipner at the 20th Annual Computer Security Applications Conference, December 2004, at http://www.acsac.org/2004/dist.html.

Encode It into Governance

Once you've got buy-in that security in the development process is necessary, encode it into the governance process of the organization. A good place to start is to document the requirements for security in the development process in the organization's security policy. For some cut-and-paste sample language that has broad industry support, try ISO17799's section on system development and maintenance (see http://www.iso17799-web.com) or NIST Publications 800-64 and 800-27 (see http://csrc.nist.gov/publications/nistpubs). As an aside, it doesn't hurt to promote the existence of such language in widely acknowledged policy benchmarks like ISO17799 with your management, because it strongly supports the notion that all organizations should be following such practices.

Do not lose sight of what you're trying to achieve: you're trying to create software solutions with fewer security defects. However, defects will remain in the code, so the long-term goal is to reduce the severity and risk of remaining security bugs.

Measure, Measure, Measure

Another key consideration is measurement. Savvy organizations will expect some system to measure the effectiveness of the improvements promised by any new-fangled alteration of their product development process. We recommend using the classic metric for security: risk. Again, the Security Development Lifecycle we'll discuss next tightly integrates the concept of risk measurement across product releases to drive continuous, tangible improvements to product security (and thus quality). Specifically, the DREAD formula for quantifying security risk is used within SDL to drive such improvements within Microsoft.

Accountability

Finally, establish an organizational accountability model for security and stick with it. Based on the perpetual imbalance between the drive for innovation and security, we recommend holding product teams accountable for the vast majority of security effort. Ideally, the security team should be accountable only for defining policies, education regimens, and audits.

Process: Security in the Development Lifecycle (SDL)

Assuming the proper organizational groundwork has been laid, what exactly do secure development practices look like? We provide the following rough outline, which is an amalgam of industry best practices promoted by others, as well as our own experiences in initiating such processes at large companies. We have borrowed the term "Security Development Lifecycle" (SDL) from our colleagues at Microsoft to describe the integration of security best practices into a generic software development lifecycle.

Appoint a Security Liaison on the Development Team

The development team needs to understand that they are ultimately accountable for the security of their product, and there is no better way to drive home this accountability than to make it a part of a team member's job description. Additionally, it is probably unrealistic to expect members of a central security team to ever acquire the product-centric expertise (across releases) of a "local" member of the development team (interestingly, ISO17799 also requires "local" expertise in Section 4.1.3, "Allocation of information security responsibilities"). Especially in large software development organizations, with multiple projects competing for attention, having an agent "on the ground" can be indispensable. It also creates great efficiencies to channel training and process initiatives through a single point of contact.

| Caution | Do not make the mistake of holding the security liaison accountable for the security of the product. This must remain the sole accountability of the product team's leadership, and it should reside no lower in the organization than the executive most directly responsible for the product or product family. |

Education, Education, Education

Most people aren't able to do the right thing if they've never been taught what it is, and this is especially true of developers (who have trouble even spelling "security" when they're on a tight ship schedule). Therefore, an SDL initiative must begin with training. There are two primary goals to the training:

- Learning the organizational SDL process
- Learning organization-specific and general secure design, coding, and testing best practices

Develop a curriculum, measure attendance and understanding, and, again, hold teams accountable at the executive level.

Training should be ongoing because threats evolve. Each week we see new attacks and new defenses, and it's incredibly important that designers, developers, and testers stay abreast of the security landscape as it unfolds.

Threat Modeling

Threat modeling is a critical component of SDL, and it has been championed by many prominent security experts, most notably Michael Howard of Microsoft Corp. Threat modeling is the process of identifying security threats to the final product and then making changes during the development of the product to mitigate those threats. In its simplest form, threat modeling can be a series of meetings among development team members (including organizational or external security expertise as needed) where such threats and mitigation plans are discussed and documented.

The biggest challenge of threat modeling is being systematic and comprehensive. No techniques currently available can claim to identify 100 percent of the feasible threats to a complex software product, so you must rely on best practices to achieve as close to 100 percent as possible, and use good judgment to realize when you've reached a point of diminishing returns. Microsoft Corp. has published one of the more mature threat-modeling methodologies (including a book and a software tool) at http://msdn.microsoft.com/security/securecode/threatmodeling/default.aspx. We've highlighted some of the key aspects of Microsoft's methodology in the following excerpt from the "Security Across the Software Development Lifecycle Task Force" report (see http://www.itaa.org/software/docs/SDLCPaper.pdf):

- Identify assets protected by the application (it is also helpful to identify the confidentiality, integrity, and availability requirements for each asset).
- Create an architecture overview. This should at the very least encompass a data flow diagram (DFD) that illustrates the flow of sensitive assets throughout the product and related systems.
- Decompose the application, paying particular attention to security boundaries (for example, application interfaces, privilege use, authentication/authorization model, logging capabilities, and so on).
- Identify and document threats. One helpful way to do this is to consider Microsoft's STRIDE model: Attempt to brainstorm Spoofing, Tampering, Repudiation, Information disclosure, Denial of service, and Elevation of privilege threats for each documented asset and/or boundary.
- Rank the threats using a systematic metric; Microsoft promotes the DREAD system (Damage potential, Reproducibility, Exploitability, Affected users, and Discoverability).
- Develop threat mitigation strategies for the highest-ranking threats (for example, set a DREAD threshold above which all threats will be mitigated by specific design and/or implementation features).
- Implement the threat mitigations according to the agreed-upon schedule (hint: not all threats need to be mitigated before the next release).
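To make the ranking and threshold steps above concrete, here is a minimal Python sketch. It rests on several assumptions that are ours for illustration only: each DREAD category is rated on a 1-to-10 scale, the overall score is the plain average of the five ratings, and the mitigation threshold is 7.0. The threats listed are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    """One documented threat with assumed 1-10 ratings per DREAD category."""
    name: str
    stride: str  # STRIDE category, used here only as a label
    damage: int
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def dread_score(self) -> float:
        """Average of the five ratings (an assumed aggregation rule)."""
        ratings = (self.damage, self.reproducibility, self.exploitability,
                   self.affected_users, self.discoverability)
        return sum(ratings) / len(ratings)


def rank_threats(threats):
    """Return the threats sorted from highest to lowest DREAD score."""
    return sorted(threats, key=lambda t: t.dread_score(), reverse=True)


def must_mitigate(threats, threshold=7.0):
    """Return the ranked threats whose score meets the assumed threshold."""
    return [t for t in rank_threats(threats) if t.dread_score() >= threshold]


# Illustrative, made-up threats for a hypothetical web application.
THREATS = [
    Threat("Log file can be truncated", "Repudiation", 5, 4, 3, 2, 3),
    Threat("Session token guessable", "Spoofing", 8, 9, 7, 8, 6),
    Threat("Verbose error pages", "Information disclosure", 4, 10, 5, 5, 9),
]

if __name__ == "__main__":
    for threat in rank_threats(THREATS):
        print(f"{threat.dread_score():4.1f}  {threat.stride:<24} {threat.name}")
```

Whatever scale and aggregation rule a team settles on, the point is to apply it consistently across releases so that scores remain comparable over time.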

The Microsoft threat-modeling process also uses threat trees, derived from hardware fault trees, to identify the security preconditions that lead to security vulnerabilities.

Code Checklists

A good threat model should provide solid coverage of the key security risks to an application from a design perspective, but what about implementation-level mistakes? SDL should include manual and automated processes for scrubbing the code itself for common mistakes, robust construction, and redundant safety precautions.

Manual code review is tedious and of questionable efficacy when it comes to large software projects. However, it remains the gold standard for finding deep, serious security bugs, so don't trivialize it. We recommend focusing manual review using the results of the threat-model sessions, or perhaps relying on the development team itself to peer-review each other's code before checking it in, to achieve broad coverage. You should spend time manually inspecting code that has had a history of errors or is "high risk" (which could be defined simply as code that is enabled within default configurations, is accessible from a network, and/or is executed within the context of a highly privileged user account, such as root on Linux and UNIX, or SYSTEM on Windows).

Automated code analysis is optimal, but modern tools are far from comprehensive. Nevertheless, some good tools are available, and every simple stack-based buffer overflow identified before release is worth its weight in gold versus being found in the wild. Table 11-2 lists some tools that could help you find potential security defects. Note that some tools are better than others, so test them out on your code to determine how many real bugs you find (versus just noise). Too many false positives will simply annoy developers, who will then shun the tools.

| Name | Language | Link |
|---|---|---|
| FXCop | .NET | http://www.gotdotnet.com/team/fxcop (FXCop is also available in Visual Studio .NET 2005.) |
| SPLINT | C | http://lclint.cs.virginia.edu |
| Flawfinder | C/C++ | http://www.dwheeler.com/flawfinder |
| ITS4 | C/C++ | http://www.cigital.com |
| PREfast | C/C++ | PREfast is available in Visual Studio .NET 2005. |
| Bugscan | C/C++ binaries | http://www.logiclibrary.com |
| CodeAssure | C/C++, Java | http://www.securesw.com/products |
| Prexis | C/C++, Java | http://www.ouncelabs.com |
| RATS | C/C++, Python, Perl, PHP | http://www.securesw.com/resources/tools.html |

In addition to the tools listed in Table 11-2, numerous development environment parameters can be used to enhance the security of code. For example, Microsoft's Visual Studio development environment offers the /GS compiler option to help protect against some forms of buffer overflow attacks (see http://msdn.microsoft.com/library/en-us/vccore/html/vclrfGSBufferSecurity.asp). Another good example is the Visual C++ linker /SAFESEH option, which can help protect against the abuse of Windows Structured Exception Handling (SEH; see http://msdn.microsoft.com/library/en-us/vccore/html/vclrfSAFESEHImageHasSafeExceptionHandlers.asp). Microsoft's new Data Execution Prevention (DEP) feature works in conjunction with /SAFESEH (see the upcoming discussion titled "Platform Improvements").

We'll talk more about how other technologies can improve security in the development lifecycle in an upcoming section of this chapter.
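As a rough illustration of the simplest kind of automated check discussed in this section, the following Python sketch flags calls to a handful of C library functions that are commonly implicated in buffer overflows. It is a toy written for this discussion, not a reimplementation of any tool in the table: the rule list is a small subset we chose, and the name `scan_source` is hypothetical.

```python
import re

# A small, illustrative subset of C library calls often involved in buffer
# overflows. Real tools ship far larger and more nuanced rule sets.
RISKY_CALLS = {
    "gets": "no bounds checking at all",
    "strcpy": "destination size is not checked",
    "strcat": "destination size is not checked",
    "sprintf": "output length is not bounded",
}

# \b keeps safer relatives such as fgets() and strncpy() from matching.
CALL_PATTERN = re.compile(r"\b(" + "|".join(RISKY_CALLS) + r")\s*\(")


def scan_source(text: str):
    """Return (line_number, function, reason) for each risky call found."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in CALL_PATTERN.finditer(line):
            name = match.group(1)
            findings.append((number, name, RISKY_CALLS[name]))
    return findings


SAMPLE = """\
char buf[16];
fgets(buf, sizeof buf, stdin);
strcpy(buf, argv[1]);
"""

if __name__ == "__main__":
    for number, name, reason in scan_source(SAMPLE):
        print(f"line {number}: {name}() - {reason}")
```

A purely textual match like this will also fire on comments and string literals, which is exactly the kind of noise to measure when you evaluate a tool against your own code.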

Security Testing

Threat-modeling and implementation-checking tools are powerful, but only part of the equation for more secure software. There is really no substitute for good, old-fashioned adversarial testing of the near-finished application. Of course, there are entire fields of study devoted to software testing, and for the sake of brevity, we will focus here on the two most common security testing approaches we've encountered in our work with organizations large and small:

- Fuzz testing
- Penetration testing (pen testing)

We believe automated fuzz testing should be incorporated into the normal release cycle for every software product. Pen testing requires expert resources and is therefore typically scheduled less frequently (say, before each major release).

Fuzzing

Fuzzing is really another type of implementation check. It is essentially the generation of random and crafted application input from the perspective of a malicious adversary. Fuzzing has traditionally been used to identify input-handling issues with protocols and APIs, but it is more broadly applicable to just about any type of software that receives or passes information, such as complex files. Numerous articles and books have been published on fuzz testing, so a lengthy discussion is out of scope here, but here are a few references:

- Fuzz Testing of Application Reliability at University of Wisconsin, Madison (http://www.cs.wisc.edu/~bart/fuzz/fuzz.html)
- The Advantages of Block-Based Protocol Analysis for Security Testing, by David Aitel (http://www.immunitysec.com/downloads/advantages_of_block_based_analysis.pdf)
- The Shellcoder's Handbook: Discovering and Exploiting Security Holes, by Koziol et al. (John Wiley & Sons, 2004)
- Exploiting Software: How to Break Code, by Hoglund and McGraw (Addison-Wesley, 2004)
- How to Break Software Security: Effective Techniques for Security Testing, by Whittaker and Thompson (Pearson Education, 2003)
- Gray Hat Hacking: The Ethical Hacker's Handbook, by Harris et al. (McGraw-Hill/Osborne, 2004)

If you plan to build your own file-fuzzing infrastructure, consider the following as a starting point:

1. Enumerate all the data formats your application consumes.
2. Get as many valid files as possible, covering all the file formats you found during step 1.
3. Build a tool that picks a file from step 2, changes one or more bytes in the file, and saves it to a temporary location.
4. Have your application consume the file from step 3 and monitor the application for failure.
5. Rinse and repeat a hundred thousand times!
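The loop described in these steps can be sketched in a few dozen lines of Python. This is a minimal sketch under stated assumptions, not a production fuzzer: it assumes the target is a command-line program that takes the file path as its last argument, it treats any nonzero exit code or timeout as a failure worth recording, and the names `mutate`, `run_target`, and `fuzz` are ours.

```python
import random
import subprocess
import sys
import tempfile
from pathlib import Path


def mutate(data: bytes, rng: random.Random, max_changes: int = 4) -> bytes:
    """Return a copy of data with 1 to max_changes bytes overwritten at random."""
    if not data:
        return data
    buf = bytearray(data)
    for _ in range(rng.randint(1, max_changes)):
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def run_target(command, path: Path, timeout: float = 5.0) -> str:
    """Run the target on one file and classify the outcome."""
    try:
        result = subprocess.run([*command, str(path)],
                                capture_output=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return "hang"
    if result.returncode == 0:
        return "ok"
    # On POSIX, a negative return code means the process died from a signal.
    return "crash" if result.returncode < 0 else "error"


def fuzz(command, seed_files, iterations: int, seed: int = 0):
    """Mutate seed files repeatedly; return (outcome, source, bytes) per failure."""
    rng = random.Random(seed)  # fixed seed so a run can be reproduced
    failures = []
    with tempfile.TemporaryDirectory() as tmp:
        for i in range(iterations):
            source = Path(rng.choice(seed_files))
            mutated = mutate(source.read_bytes(), rng)
            case = Path(tmp) / f"case_{i}{source.suffix}"
            case.write_bytes(mutated)
            outcome = run_target(command, case)
            if outcome != "ok":
                # Keep the bytes themselves: the temp file is deleted on exit.
                failures.append((outcome, str(source), mutated))
    return failures
```

A call might look like `fuzz(["./parse_image"], ["samples/a.png", "samples/b.png"], iterations=100_000)`, where `./parse_image` and the sample paths are placeholders for your own application and corpus. Saving the failing bytes lets you replay each case under a debugger later.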

Pen Testing

Traditionally, the term "penetration testing" has been used to describe efforts by authorized professionals to penetrate the physical and logical defenses provided by a typical IT organization, using the tools and techniques of malicious hackers. Although it ranks up there with terms like "social engineering" in our all-time Hall of Fame for Unfortunate Monikers, the term has stuck in the collective mentality of the technology industry, and is now universally recognized as a "must-have" component of any serious security program. More recently, the term has come to apply to all forms of "ethical hacking," including dissection of software products and services.

In contrast to fuzz testing, pen testing of software products and services is more labor intensive (which does not mean that pen testing cannot leverage automated test tools like fuzzers, of course). It is most aptly described as "adversarial use by experienced attackers." The word "experienced" in this definition is critical: We find time and again that the quality of results derived from pen testing is directly proportional to the skill of the personnel who perform the tests. At most organizations we've worked with, very few individuals are philosophically and practically well-situated to perform such work. It is even more challenging to sustain an internal pen-test team over the long haul, due primarily to the perpetual mismatch between the extra-organizational market price for such skills and the perceived intra-organizational value. Internal pen-testers also have a tendency to get corralled into more mundane security functions (such as project management) that organizations may periodically prioritize over technical, tactical testing. Therefore, we recommend critically evaluating the abilities of internal staff to perform pen testing and strongly considering an external service provider for such work. A third party gives the added benefit of impartiality, a fact that can be leveraged during external negotiations (for example, partnership agreements) or marketing campaigns.

Given that you elect to hire third-party pen testers to attack your product, here are some issues to consider when striving for maximum return on investment:

- Schedule: Ideally, pen testing occurs after the availability of beta-quality code, but early enough to permit significant changes before ship date should the pen-test team identify serious issues. Yes, this is a fine line to walk.
- Scope: The product team should be prepared up front with documentation and in-person meetings to describe the application and set a proper scope for the pen-test engagement. We recommend using a consistent request-for-proposal (RFP) template for evaluating multiple vendors. When setting scope, consider new features in this release, legacy features that have not been previously reviewed, components that present the most security risk from your perspective, as well as features that do not require testing in this release. Ideally, existing threat-model documentation can be used to cover these points.
- Liaison: Make sure managers are prepared to commit necessary product-team personnel to provide information to pen testers during testing. They will require significant engagement to achieve the necessary expertise in your product to deliver good results.
- Methodology: Press vendors hard on what they intend to do; typical approaches include basic black-box pen testing, infrastructure assessment, and/or code review. Also make sure they know how to pen-test your type of application: A company with web application pen-test skills may not be able to effectively pen-test a mainframe line-of-business application.
- Location: The location should be set proximal to the product team (ideally, the pen testers become part of the team during the period of engagement). Remote engagements require a high degree of existing trust and experience with the vendor in question.
- Funding: Funding should be budgeted for security pen testing in advance, to avoid delays. These services are typically bid on an hourly basis, depending on the scope of work, and they range from $150 to over $250 per hour, depending on the level of skill required. For your first pen-testing engagement, we recommend setting a small scope and budget.
- Deliverables: Too often, pen testers deliver a documented report at the end of the engagement and are never seen again. This report collects dust on someone's desk until it unexpectedly shows up on an annual audit months later after much urgency has been lost. We recommend familiarizing the pen testers with your in-house bug-tracking systems and having them file issues directly with the development team as the work progresses.

Finally, no matter which security testing approach you choose, we strongly recommend that all testing focus on the risks prioritized during threat modeling. This will lend coherence and consistency to your overall testing efforts, which will result in regular progress toward reducing serious security vulnerabilities.

Audit or Final Security Review

We've found it helpful to promote a final security checkpoint through which all products must pass before they are permitted to ship. This sets clear, crisp expectations for the development team and their management, and provides a single deadline in the development schedule around which to focus overall security efforts.

The pre-ship security audit should be focused on verifying that each of the prior elements of the Security Development Lifecycle was completed appropriately, including training, threat modeling, code reviews, testing, and so on. It should be performed by personnel independent of the product team, preferably the internal security team or their authorized agents. One of the useful devices we've seen employed during pre-ship security audits is the checklist questionnaire. This can be filled out by the product team security liaison (with the assistance of the whole team, of course) and then reviewed by the security team for completeness.

Of course, the concept of a pre-ship checkpoint always raises the question, What happens if the product team "fails" the audit? Should the release be delayed? We've found that the answer to this question depends much on the culture and overall business risk tolerance of the organization. Let's face it, not all security risks are worthy of slipping product releases, which in some cases can cause more damage to the business than shipping security vulnerabilities. At the end of the day, this is what the executives are paid to do: make decisions based on the lesser of two evils. We recommend that the final audit results be presented in just that way, as an advisory position to executive management. If the case is compelling enough (and it should be if you've quantified the risks well using models such as DREAD), they will make the right decision, and the organization will be healthier in the long run.

| Tip | If your organization has an aversion to the term "audit" for whatever reason, try using a similar term such as "Final Security Review (FSR)." |

Maintenance

In many ways, the SDL only begins once "version 1.0" of the product has officially been released. The product team should be prepared to receive external reports of security vulnerabilities discovered in the wild, issue patches and hotfixes, perform post-mortem analyses of issues identified externally, and explain why they were not caught by internal processes. Internal analysis of defects in code that lead to security errata or hotfixes is also critical. You need to ask questions such as, Why did the bug happen? How was it missed? What tools can we use to make sure this never happens again? When was the bug introduced?

Coincidentally, these are all very useful in defining overall SDL process improvements. Therefore, we also recommend an organization-wide post-mortem on each SDL implementation, to identify opportunities for improvement that are sure to crop up in every organization. All significant findings should be documented and fed into the next product release cycle, in which the organization will take yet another turn on the Security Development Lifecycle.

Putting It All Together

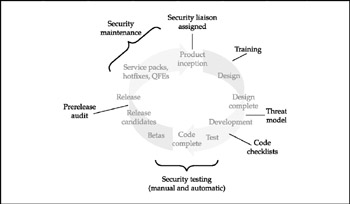

We've talked about a number of components to the Security Development Lifecycle, some of which may seem disjointed when considered by themselves . To lend coherence to the concept of SDL, you might think of each of the preceding concepts as a milestone in the software development process, as shown in Figure 11-3.

Figure 11-3: A model Security Development Lifecycle process, showing each key security checkpoint

Technology

Having just spent significant time speaking to the people and process dimensions of software security, we'll now delve a bit into technology that can assist you in developing more secure applications.

Managed Execution Environments

As appropriate, we strongly recommend migrating your software products to managed development platforms such as Sun's Java (http://java.sun.com) and Microsoft's .NET Framework (http://msdn.microsoft.com/netframework) if you have not already. Code developed using these environments leverages strong memory-management technologies and executes within a protected security sandbox, which can greatly reduce the possibility of security vulnerabilities.

Input Validation Libraries

Almost all software hacking rests on the assumption that input will be processed in an unexpected manner. Thus, the holy grail of software security is airtight input validation. Most software development shops cobble together their own input validation routines, using regular expression matching (try http://www.regexlib.com for great tips). Among vendors of web server software, which is commonly targeted for attack, Microsoft Corp. stands out as one of the few vendors to provide an off-the-shelf input validation library for its IIS web server software, called URLScan (see http://www.microsoft.com/technet/security/tools/urlscan.mspx). If at all possible, we recommend using such input validation libraries to deflect as much noxious input as possible for your applications. If you choose to implement your own input validation routines, remember these cardinal rules:

- Assume all input is malicious and treat it as such, throughout the application.
- Constrain the possible inputs your application will accept (for example, a ZIP code field might only accept five-digit numerals).
- Reject all input that does not meet these criteria.
- Sanitize any remaining input; for example, remove metacharacters (such as & ˜ > < and so on) that might be interpreted as executable content.
- Never, ever automatically trust client input.
- Don't forget output validation or preemptive formatting, especially where input validation is infeasible. One common example is HTML-encoding output from web forms to prevent Cross-Site Scripting (XSS) vulnerabilities.
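Two of these rules, constraining input to a known-good pattern and encoding output, can be sketched in Python using only the standard library. The function names `validate_zip` and `render_comment` are hypothetical, and the five-digit rule simply mirrors the ZIP code example above; adapt the pattern to whatever your field really allows.

```python
import html
import re

# Allow-list for the ZIP code example: exactly five ASCII digits.
# [0-9] is used instead of \d, which also matches non-ASCII digits in Python.
ZIP_CODE = re.compile(r"[0-9]{5}")


def validate_zip(raw: str) -> str:
    """Return the ZIP code if it matches the allow-list, else raise ValueError."""
    # fullmatch() requires the entire string to match, so trailing newlines
    # or appended payloads are rejected rather than silently ignored.
    if not isinstance(raw, str) or ZIP_CODE.fullmatch(raw) is None:
        raise ValueError("ZIP code must be exactly five digits")
    return raw


def render_comment(comment: str) -> str:
    """HTML-encode untrusted text before placing it in a page."""
    return "<p>" + html.escape(comment, quote=True) + "</p>"


if __name__ == "__main__":
    print(validate_zip("90210"))
    print(render_comment("<script>alert(1)</script>"))
    try:
        validate_zip("90210; DROP TABLE users")
    except ValueError as exc:
        print("rejected:", exc)
```

Rejecting anything outside the allow-list is generally easier to reason about than trying to enumerate and strip every dangerous character, which is why the sketch validates first and encodes only at output time.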

Platform Improvements

Keep your eye on new technology developments such as Microsoft's Data Execution Prevention (DEP) feature. As we discussed in Chapter 4, in Windows XP Service Pack 2 and later, Microsoft has implemented DEP to provide broad protection against memory corruption attacks such as buffer overflows (see http://support.microsoft.com/kb/875352 for full details). DEP has both a hardware and software component. When run on compatible hardware, DEP kicks in automatically and marks certain portions of memory as nonexecutable unless they explicitly contain executable code. Ostensibly, this reduces the chance that some stack-based buffer overflow attacks will succeed. In addition to hardware-enforced DEP, Windows XP SP2 and later also implement software-enforced DEP, which attempts to block exploitation of Structured Exception Handling (SEH) mechanisms in Windows (as described, for example, at http://www.securiteam.com/windowsntfocus/5DP0M2KAKA.html). As we noted earlier in this chapter, Microsoft's /SAFESEH C/C++ linker option works in conjunction with software-enforced DEP to help protect against such attacks.

Recommended Further Reading

We could write an entire book about software hacking, but fortunately we don't have to, thanks to the quality material that has already been published to date. Here are some of our personal favorites (many have already been touched upon in this chapter) to hopefully further your understanding of this vitally important frontier in information system security:

- The Security Across the Software Development Lifecycle Task Force, a diverse coalition of security experts from the public and private sectors, published a report in April 2004 at http://www.itaa.org/software/docs/SDLCPaper.pdf that covers the prior topics in more depth.
- Writing Secure Code, 2nd Edition, by Howard and LeBlanc (Microsoft Press, 2002), is the winner of the RSA Conference 2003 Field of Industry Innovation Award and a definite classic in the field of software security.
- Threat Modeling, by Swiderski and Snyder (Microsoft Press, 2004), is a great reference to start product teams thinking systematically about how to conduct this valuable process (see http://msdn.microsoft.com/security/securecode/threatmodeling/default.aspx for a link to the book and related tool).
- For those interested in web application security, we also recommend Building Secure ASP.NET Applications and Improving Web Application Security: Threats and Countermeasures, by J.D. Meier and colleagues at Microsoft (see http://www.microsoft.com/downloads/release.asp?ReleaseID=44047 and http://msdn.microsoft.com/library/default.asp?url=/library/en-us/dnnetsec/html/ThreatCounter.asp, respectively).
- As noted in our earlier discussion of security testing, we also like The Shellcoder's Handbook: Discovering and Exploiting Security Holes, by Koziol et al. (John Wiley & Sons, 2004), Exploiting Software: How to Break Code, by Hoglund and McGraw (Addison-Wesley, 2004), How to Break Software Security: Effective Techniques for Security Testing, by Whittaker and Thompson (Pearson Education, 2003), and Gray Hat Hacking: The Ethical Hacker's Handbook, by Harris et al. (McGraw-Hill/Osborne, 2004).
