Introduction to QoS

| Although bandwidth keeps increasing as higher-speed networks become more economically viable, QoS remains necessary. All networks have congestion points where data packets can be dropped, such as WAN links where a larger link feeds data into a smaller link, or places where several links are aggregated into fewer trunks. QoS is not a substitute for bandwidth, nor does it create bandwidth. Instead, QoS lets network administrators control when and how data is dropped when congestion does occur. As such, QoS is an important tool that should be enabled, along with adding bandwidth, as part of a coordinated capacity-planning process.

Another important aspect to consider alongside QoS is traffic engineering (TE). TE is the process of selecting the paths that traffic takes through the network. TE can be used to accomplish a number of goals. For example, a customer or service provider could traffic-engineer its network to ensure that none of the links or routers in the network is overutilized or underutilized. Alternatively, a service provider or customer could use TE to control the path taken by voice packets to ensure appropriate levels of delay, jitter, and packet loss.

End-to-end QoS should be considered a prerequisite for converging latency-sensitive traffic, such as voice and videoconferencing, with more traditional IP data traffic in the network. QoS becomes a key element in delivering service in an assured, robust, and highly efficient manner. Voice and video require network services with low latency, minimal jitter, and minimal packet loss. Packet loss and delay have the biggest impact on these and other real-time applications because they seriously degrade the quality of the voice call or the video image. These and other data applications also require segregation to ensure proper treatment in this converged infrastructure.
The application of QoS is a viable and necessary methodology to provide optimal performance for a variety of applications in what is ultimately an environment with finite resources. A well-designed QoS plan conditions the network to give applications access to the right amount of network resources, whether they are real-time or noninteractive applications.

Before QoS can be deployed, the administrator must develop a QoS policy. Voice traffic needs to be kept separate because it is especially sensitive to delay. Video traffic is also delay-sensitive and is often so bandwidth-intensive that care needs to be taken to make sure that it doesn't overwhelm low-bandwidth WAN links. After these applications are identified, traffic needs to be marked in a reliable way to make sure that it is given the correct classification and QoS treatment within the network. As you look at the available options, ask yourself some questions that will help guide you as you formulate a QoS strategy:

From these questions, you have various options: define a policy that supports the use of real-time applications and treats everything else as best-effort traffic, or build a tiered policy that addresses the whole. After all, QoS can provide a more granular approach to segmentation of traffic and can expedite traffic of a specific type when required. You will explore these options in this chapter.

After the QoS policies are determined, you need to define the "trusted edge," which is the place where traffic is marked in a trustworthy way. It would be useless to take special care in transporting different classes of network traffic if traffic markings could be accidentally or maliciously changed. You should also consider how to handle admission control, that is, metered access to finite network resources. For example, a user who fires up an application that consumes an entire pipe, and consequently affects others' ability to share the resource, needs a form of policing.

Building a QoS Policy: Framework Considerations

Traffic on a network is made up of flows, which are placed on the wire by various functions or endpoints. Traffic may consist of applications such as Service Advertising Protocol (SAP), CAD/CAM, e-mail, voice, video, server replication, collaboration applications, factory control applications, branch applications, and control and systems management traffic. If you take a closer look at these applications, it is apparent that some level of control over performance measures is necessary, specifically the bandwidth, delay/jitter, and loss that each class of application can tolerate. These performance measures can vary greatly and have various effects. If you apply a service level against these performance measures, the applications can be broadly positioned into four levels that drive the strategy:

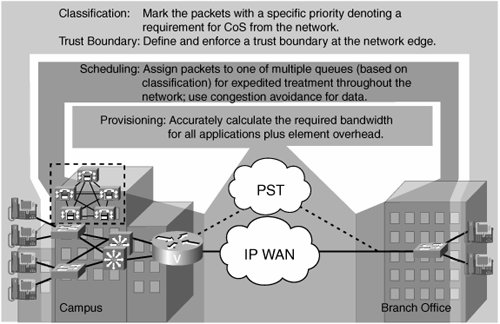
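The policing mentioned earlier for admission control is commonly built on a token bucket. The following Python sketch only illustrates the idea under stated assumptions: the class name `TokenBucketPolicer`, its parameters, and the 64-kbps contract with a 3000-byte burst are hypothetical and are not taken from any particular router implementation.

```python
class TokenBucketPolicer:
    """Single-rate policer sketch: packets within the contract conform, others are dropped."""

    def __init__(self, rate_bps: float, burst_bytes: int) -> None:
        self.rate_bytes_per_s = rate_bps / 8.0   # contracted rate, converted to bytes/s
        self.burst_bytes = burst_bytes           # bucket depth (largest tolerated burst)
        self.tokens = float(burst_bytes)         # the bucket starts full
        self.last_time = 0.0                     # timestamp of the previous packet

    def admit(self, packet_bytes: int, now: float) -> bool:
        """Return True if the packet conforms to the contract, False if it should be dropped."""
        elapsed = max(0.0, now - self.last_time)
        self.last_time = now
        # Refill tokens for the elapsed time, never beyond the bucket depth.
        self.tokens = min(float(self.burst_bytes),
                          self.tokens + elapsed * self.rate_bytes_per_s)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False


# Hypothetical contract: 64 kbps with a 3000-byte burst allowance.
policer = TokenBucketPolicer(rate_bps=64_000, burst_bytes=3000)
# (arrival time in seconds, packet size in bytes)
arrivals = [(0.0, 1500), (0.0, 1500), (0.0, 1500), (0.1, 1500), (0.2, 1500)]
decisions = [policer.admit(size, now) for now, size in arrivals]
print(decisions)
```

In this run the first two packets fit within the burst allowance, the next two exceed the contract and are dropped, and the last packet is admitted once enough tokens have accumulated. A user who tries to fill the pipe is therefore held to the contracted rate while short bursts are still tolerated.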

Applying these service levels against the application classes for their required level of service means that you need to understand where in the network they should be applied. The best approach is to define a "trust boundary" at the edge of the network where the endpoints are connected, as well as look at the tiers within the network where congestion may be encountered. After you know these, you can decide how the policy is applied. For example, in the core of the network, where bandwidth may be plentiful, the policy becomes a queue scheduling tool. However, at the edge of the network, especially where geographically remote sites may have scarce bandwidth, the policy becomes one of controlling admission to bandwidth. Basically, this is equivalent to shoving a watermelon down a garden hose, intact! Figure 5-1 outlines the high-level principles of a QoS application in network design.

Figure 5-1. Principles of QoS Application

This design approach introduces the key notions on the road to QoS adoption. These notions provide the correct approach to provisioning before looking at classification of packets toward a requirement for a class of service (CoS) over the network. Determine where the trust boundary will be most effective before starting such a classification, and then indicate the area of the network where scheduling of packets to queues is carried out. Finally, determine the provisioning needed to ensure that sufficient bandwidth exists to carry traffic and its associated overheads.

After the network's QoS requirements have been defined, an appropriate service model must be selected. A service model is a general approach or a design philosophy for handling the competing streams of traffic within a network. You can choose from four service models:

Provisioning is quite straightforward. It is about ensuring that there is sufficient base capacity to transport current applications, with forward thinking about future growth needs. This needs to be applied across the LANs, WANs, and MANs that will support the enterprise. Without proper attention to provisioning appropriate bandwidth, QoS is a wasted exercise.

The best-effort model is relatively simple to understand because there is no prioritization and all traffic gets treated equally regardless of its type.

The two predominant architectures for QoS are DiffServ, defined in RFC 2474 and RFC 2475, and IntServ, documented in RFC 1633, RFC 2212, and RFC 2215. In addition, a number of RFCs and Internet Drafts expand on the base RFCs, particularly RFC 2210, which explores the use of RSVP with IntServ. Unfortunately, the IntServ/RSVP architecture does not scale in large enterprises due to the need for end-to-end path setup and reservation.

The service model selected must be able to meet the network's QoS requirements as well as integrate any networked applications. This chapter explores the service models available so that you can leverage the best of each.

Implementing QoS is a means to use bandwidth efficiently, but it is not a blanket substitute for bandwidth itself. When an enterprise is faced with ever-increasing congestion, a certain point is reached where QoS alone does not solve bandwidth requirements. At such a point, nothing short of another form of QoS or correctly sized bandwidth will suffice. |
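To make the DiffServ model and the trusted-edge idea more concrete, the following Python sketch shows how an edge device might choose a DiffServ code point (DSCP) for a packet. The code point values are the standard ones (EF = 46, AF41 = 34, AF21 = 18, default = 0). Everything else is an assumption made for illustration: the `POLICY` mapping of traffic classes to markings and the helper names `dscp_for`, `tos_byte`, and `remark_at_edge` are hypothetical and do not describe any specific product.

```python
# Standard DiffServ code points (RFC 2474, RFC 2597, RFC 3246).
DSCP = {"EF": 46, "AF41": 34, "AF21": 18, "CS0": 0}

# Hypothetical policy: the marking each traffic class receives at the trusted edge.
POLICY = {"voice": "EF", "video": "AF41", "transactional-data": "AF21"}


def dscp_for(traffic_class: str) -> int:
    """Return the policy DSCP for a class; unknown classes fall back to best effort."""
    return DSCP[POLICY.get(traffic_class, "CS0")]


def tos_byte(dscp: int) -> int:
    """Place the 6-bit DSCP in the upper bits of the 8-bit DS field (former ToS byte)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in six bits")
    return dscp << 2


def remark_at_edge(traffic_class: str, received_dscp: int, trusted_port: bool) -> int:
    """Keep markings that arrive on trusted ports; re-mark everything else per policy."""
    return received_dscp if trusted_port else dscp_for(traffic_class)


# An untrusted host marks its e-mail as EF; the edge resets it to best effort.
print(remark_at_edge("e-mail", received_dscp=46, trusted_port=False))
# Voice from a trusted IP phone keeps its EF marking.
print(tos_byte(remark_at_edge("voice", received_dscp=46, trusted_port=True)))
```

The design choice worth noting is that the marking decision is made once, at the trust boundary. Devices deeper in the network can then schedule packets on the DSCP alone instead of reclassifying every flow.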
