The Organization of the Internet

It is important to understand what the Internet infrastructure is composed of and how it is structured in terms of the large variety of players represented in the Internet space. It is also important to keep in mind that, like the PSTN, the Internet was not originally structured for what we're asking it to do now.

Initially, the Internet was designed to support data communications: bursty, low-speed text data traffic. It was structured to accommodate longer hold times while still facilitating low data volumes, in a cost-effective manner. (With the packet-switching technique, through statistical multiplexing, long hold times do not negatively affect the cost structure because users are sharing the channel with other users.) The capacities of the links initially dedicated to the Internet were narrowband: 56Kbps or 64Kbps. The worldwide infrastructure depended on the use of packet switches (i.e., routers), servers (i.e., repositories for the information), and clients (i.e., the user interfaces into the repositories). The Internet was composed of a variety of networks, including both LANs and WANs, with internetworking equipment such as routers and switches designed for interconnection of disparate networks. The Internet relied on TCP/IP to move messages between different subnetworks, and it was not traditionally associated with strong and well-developed operational support systems, unlike the PSTN, where billing, provisioning, and network management systems are quite extensive, even if they are not integrated.

The traditional Internet relied on the PSTN for subscriber access. The physical framework, the roadways over which a package travels on what we know as the Internet, is therefore the same type of physical infrastructure as the PSTN: It uses the same types of communications links and capacities. To access this public data network, users had to rely on the PSTN. Two types of access were facilitated: dialup for consumers and small businesses (i.e., the range of analog modems and Narrowband ISDN) and dedicated access for larger enterprises in the form of leased lines, ISDN Primary Rate Interface (PRI), and dedicated lines based on T-1/E-1 capacities or, in some cases, even T-3/E-3.

The Evolution of the POP Architecture

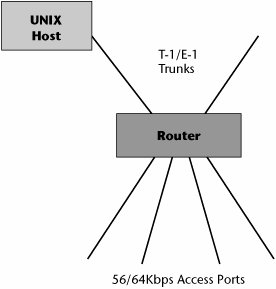

The early Internet point-of-presence (POP) architecture was quite simple, as illustrated in Figure 8.19. Either 56Kbps or 64Kbps lines came in to access ports on a router. Out of that router, T-1/E-1 trunks led to a UNIX host. This UNIX environment was, for most typical users, very difficult to navigate. Until there was an easier way for users to interface (the World Wide Web), the Internet was very much the province of academicians, engineers, and computer scientists.

Figure 8.19. POP architecture in the 1980s

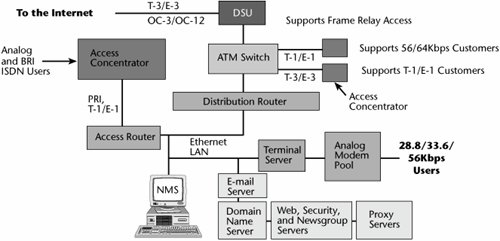

The architecture of the Internet today is significantly different from its early days. Figure 8.20 shows some of the key components you would find in a higher-level network service provider's (NSP's) or a high-tier ISP's POP today. (Of course, a local service provider with just one POP or one node for access purposes, perhaps to a small community, looks quite different from this because it is much simpler.)

Figure 8.20. POP architecture today

First, let's look at the support for the dialup users. Today, we have to facilitate a wide range of speeds; despite our admiration of and desire for broadband access, it's not yet widely available. As of year-end 2005, only 15.7% of the world's population had any type of Internet access (www.internetworldstats.com). In the next several years, we should see more activity in terms of local loop modernization to provide broadband access to more users, using both fiber and wireless broadband alternatives (see Chapter 12, "Broadband Access Alternatives"). But for the time being, we still have to accommodate a wide range of analog modems that operate at speeds between 14.4Kbps and 56Kbps. Therefore, the first point of entry at the POP requires a pool of analog modems that complement the ones that individuals are using.

Also, as we add broadband access alternatives, additional access devices are required (e.g., for DSL modems or cable modems). The analog modem pool communicates with a terminal server, and the terminal server establishes a PPP session. PPP does two things: It assigns an IP address to a dialup user's session, and it authenticates that user and authorizes entry. By dynamically allocating an IP address when needed, PPP enables us to reuse IP addresses, helping to mitigate the problem of the growing demand for IP addresses. A user is allocated an address when she or he dials in for a session; when the session terminates, the IP address can be assigned to another user. PPP supports two protocols that provide link-level security:

- Password Authentication Protocol (PAP): PAP uses a two-way handshake for the peer to establish its identity upon link establishment. The peer repeatedly sends the password to the authenticator until verification is acknowledged or the connection is terminated. PAP is insecure, though, in that it transmits passwords in the clear, which means they could be captured and replayed.

- Challenge Handshake Authentication Protocol (CHAP): CHAP uses a three-way handshake to periodically verify the identity of the peer throughout the life of the connection. The server sends to the remote workstation a random token (a challenge) that is encrypted with the user's password and sent back to the server. The server performs a lookup to see if it recognizes the password. If the values match, the authentication is acknowledged; if not, the connection is terminated. A different token is provided each time a remote user dials in, which adds more robustness. Although an improvement over PAP, CHAP is still vulnerable. If an attacker were to capture the clear-text challenge and the encrypted response, it would be possible to statistically determine the encryption key, which is the password. CHAP is strong when the password is complex or, better, at least 15 characters long.
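The CHAP exchange described above can be sketched in a few lines. This is a minimal illustration, not a PPP implementation: it assumes the MD5 variant of CHAP, in which the response is a one-way hash over an identifier octet, the shared secret, and the challenge (rather than reversible encryption). The function names, the sample secret, and the identifier value are hypothetical.

```python
import hashlib
import hmac
import os


def chap_response(identifier: int, secret: bytes, challenge: bytes) -> bytes:
    """Peer side: hash the identifier octet, the shared secret, and the challenge."""
    return hashlib.md5(bytes([identifier]) + secret + challenge).digest()


def authenticator_verify(identifier: int, secret: bytes,
                         challenge: bytes, response: bytes) -> bool:
    """Server side: recompute the expected hash and compare in constant time."""
    expected = chap_response(identifier, secret, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical session: the secret is known to both ends and never sent on the link.
secret = b"correct horse battery staple"
identifier = 1
challenge = os.urandom(16)          # a fresh random token for every dial-in

response = chap_response(identifier, secret, challenge)
accepted = authenticator_verify(identifier, secret, challenge, response)
```

Because the challenge changes on every session, a captured response cannot simply be replayed, which is the improvement over PAP. An attacker who records both the challenge and the response can still guess passwords offline, which is why a long or complex password matters.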

The terminal server resides on a LAN, which is typically a Gigabit Ethernet network today. Besides the terminal server, the ISP POP houses a wide range of other servers. It also contains network management systems that the service providers can use to administer passwords and to monitor and control all the network elements in the POP, as well as to remotely diagnose elements outside the POP. As shown in Figure 8.20, the following are possible elements in the ISP POP architecture, and not all POPs necessarily have all these components (e.g., ATM switches are likely to be found only in larger ISPs):

- E-mail servers: These servers route e-mail between ISPs and house the e-mail boxes of subscribers.

- Domain name servers: These servers resolve DNS names into IP addresses.

- Web servers: If an ISP is engaged in a hosting business, it needs a Web server, most likely several.

- Security servers: Security servers engage in encryption, as well as in authentication and certification of users. Not every ISP has a security server, but those that want to offer e-commerce services or the ability to set up storefronts must have one. (Security is discussed in detail in Chapter 9.)

- Newsgroup servers: Newsgroup servers store the millions of Usenet messages that are posted daily, and they are updated frequently throughout the day.

- Proxy servers: A proxy server provides firewall functionality, acting as an intermediary for user requests and establishing a connection to the requested resource either at the application layer or at the session or transport layer. Proxy servers provide a means to keep outsiders from directly connecting to a service on an internal network. Proxy servers are also becoming critical in support of edge caching of content. People are becoming less tolerant of lengthy downloads, and information streams (such as video, audio, and multimedia) are becoming more demanding of timely delivery.

Say you want to minimize the number of hops that a user has to go through. You could do this by using a tracing product to see how many hops it takes to reach a Web site. You'll see that sometimes you need to go through 17 or 18 hops to get to a site. Because the delay at each hop can be more than 2,000 milliseconds, if you have to make 18 hops when you're trying to use a streaming media tutorial, you will not be satisfied. ISPs can also use proxy servers to cache content locally, which means the information is distributed over 1 hop rather than over multiple hops, and that greatly improves the streaming media experience. Not all proxy servers support caching, however.

- Access router: An access router filters local traffic. If a user is simply checking e-mail, working on a Web site, or looking up newsgroups, there's no reason for the user to be sent out over the Internet and then brought back to this particular POP. An access router keeps traffic contained locally in such situations.

- Distribution router: A distribution router determines the optimum path to get to the next hop that will bring you one step closer to the destination URL, if it is outside the POP from which you are being served. Typically, in a higher-level ISP, this distribution router will connect to an ATM switch.

- ATM switch: An ATM switch enables the ISP to guarantee QoS, which is especially necessary for supporting larger customers on high-speed interfaces and links and for supporting VPNs, VoIP, or streaming media applications. The ATM switch, by virtue of its QoS characteristics, enables us to map the packets into the appropriate cells, which guarantee that the proper QoS is administered and delivered. Typically, QoS is negotiated (and paid for) by an organization using the Internet as the transport between its various facilities in a region or around the world, to guarantee a minimum performance level between sites; a single ISP is used for all site connections. QoS for general public Internet access is unlikely to arise because the billing and servicing is economically infeasible. (ATM QoS is discussed further in Chapter 10, "Next-Generation Networks.") The ATM switch is front-ended by a DSU.

- Data service unit (DSU): The DSU is the data communications equipment on which the circuit terminates. It performs signal conversion and provides diagnostic capabilities. The network also includes a physical circuit, which, in a larger higher-tier provider, would generally be in the optical carrier levels.

- Access concentrator: An access concentrator can be used to create the appearance of a virtual POP. For instance, if you want your subscribers to believe they're accessing a local node (that is, to make it appear that you're in the same neighborhood that they are in), you can use an access concentrator. The user dials a local number, thinking that you're located down the street in the business park, when in fact, the user's traffic is being hauled over a dedicated high-speed link to a physical POP located elsewhere in the network. Users' lines terminate on a simple access concentrator, where their traffic is multiplexed over the T-1s or T-3s, E-1s or E-3s, or perhaps ISDN PRI. This gives ISPs the appearance of having a local presence when, in fact, they have none. Later text in this chapter discusses the advantages of owning the infrastructure versus renting the infrastructure; clearly, if you own your infrastructure, backhauling traffic allows you to more cost-effectively serve remote locations. For an ISP leasing facilities from a telco, these sorts of links to backhaul traffic from more remote locations add cost to the overall operations.
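The edge-caching role described for proxy servers in the list above can be illustrated with a toy model. This is only a sketch under stated assumptions: the `CachingProxy` class, the `fetch_origin` callback, the sample URL, and the 17-hop figure for the path beyond the POP are all hypothetical, and a real proxy would also handle expiry and cache validation.

```python
from typing import Callable, Dict, Tuple


class CachingProxy:
    """Toy model of a POP proxy that serves repeat requests from a local cache."""

    def __init__(self, fetch_origin: Callable[[str], bytes], origin_hops: int):
        self._fetch_origin = fetch_origin      # hypothetical origin fetch function
        self._origin_hops = origin_hops        # hops between the POP and the origin
        self._cache: Dict[str, bytes] = {}

    def get(self, url: str) -> Tuple[bytes, int]:
        """Return (content, hops traversed); the user-to-proxy leg counts as 1 hop."""
        if url in self._cache:
            return self._cache[url], 1
        content = self._fetch_origin(url)
        self._cache[url] = content
        return content, 1 + self._origin_hops


# Hypothetical origin that sits 17 hops beyond the POP.
proxy = CachingProxy(lambda url: b"tutorial video bytes", origin_hops=17)
first_content, first_hops = proxy.get("http://example.com/tutorial")
second_content, second_hops = proxy.get("http://example.com/tutorial")
```

In this model the first request pays for the full path, while every later request for the same content is served over a single hop.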

You can see that the architecture of the POP is far more sophisticated today than it was in the beginning; the architecture has evolved in response to and in preparation for a very wide range of multimedia, real-time, and interactive applications.

Internet Challenges and Changes

Despite all its advances over the past couple of decades, the Internet is challenged today. It is still limited in bandwidth at various points. The Internet is composed of some 10,000 service providers. Although some of the really big companies have backbone capacities that are 50Gbps or greater, there are still plenty of small backbones worldwide that have only a maximum of 1.5Mbps or 2Mbps. Overall, the Internet still needs more bandwidth.

One reason the Internet needs more bandwidth is that traffic keeps increasing at an astonishing rate. People are drawn to Web sites that provide pictures of products in order to engage in demonstrations and to conduct multimedia communications, not to mention all the entertainment content. Multimedia, visual, and interactive applications demand greater bandwidth and more control over latencies and losses. This means that we frequently have bottlenecks at the ISP level, at the backbone level (i.e., the NSP level), and at the Internet exchange points (IXPs) where backbones interconnect to exchange traffic between providers. These bottlenecks greatly affect our ability to roll out new time-sensitive, loss-sensitive applications, such as Internet telephony, VoIP, VPNs, streaming media, and IPTV. And remember, as discussed in the Introduction, one other reason more bandwidth is needed is that in the future, everything imaginable (your TV, refrigerator, electrical outlets, appliances, furniture, and so on) will have IP addresses, thus vastly increasing demand.

Therefore, we are redefining the Internet, with the goal of supporting more real-time traffic flows, real audio, real video, and live media. This requires the introduction of QoS into the Internet or any other IP infrastructure. However, this is much more important to the enterprise than to the average consumer. While enterprises are indeed likely to be willing to pay to have their traffic between ISP-connected sites routed at a certain priority, consumers are more likely to be willing to pay for simple enhanced services (such as higher bandwidth for a higher monthly fee) rather than individual QoS controls. And for the ISPs, there's little economic incentive to offer individual QoS because it is much too expensive to manage.

There are really two types of metrics that we loosely refer to as QoS: class of service (CoS) and true QoS. CoS is a prioritization scheme, like having a platinum credit card versus a gold card versus a regular card. With CoS, you can prioritize streams and thereby facilitate better performance. QoS, however, deals with very strict traffic measurements, where you can specify the latencies end to end, the jitter or variable latencies in the receipt of the packets, the tolerable cell loss, and the mechanism for allocating the bandwidth continuously or on a bursty basis. QoS is much more stringent than CoS, and what we are currently using in the Internet is really more like CoS than QoS.
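The difference between CoS and QoS can be made concrete with a small scheduling sketch. The code below is an illustration only: the class names borrow the credit-card analogy from the text, and `CosScheduler` with its strict-priority policy is a hypothetical stand-in, not a description of any particular router's queuing.

```python
import heapq
from itertools import count

# Lower number = served first. The class names follow the credit-card analogy.
PRIORITY = {"platinum": 0, "gold": 1, "regular": 2}


class CosScheduler:
    """Strict-priority queue: orders packets by class, guarantees nothing else."""

    def __init__(self):
        self._heap = []
        self._seq = count()   # tie-breaker that keeps arrival order within a class

    def enqueue(self, cos: str, packet: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[cos], next(self._seq), packet))

    def dequeue(self) -> str:
        _, _, packet = heapq.heappop(self._heap)
        return packet


sched = CosScheduler()
arrivals = [("regular", "web-1"), ("platinum", "voice-1"),
            ("gold", "video-1"), ("platinum", "voice-2")]
for cos, pkt in arrivals:
    sched.enqueue(cos, pkt)

order = [sched.dequeue() for _ in range(len(arrivals))]
# order == ["voice-1", "voice-2", "video-1", "web-1"]
```

The scheduler decides who goes first, but nothing in it bounds latency, jitter, or loss. Supplying those bounds is what separates true QoS from CoS.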

Techniques such as DiffServ allow us to prioritize the traffic streams, but they really do not allow us to control the traffic measurements. That is why, as discussed in Chapter 10, we tend to still rely on ATM within the core: ATM allows the strict control of traffic measurements, and it therefore enables us to improve performance, quality, reliability, and security. Efforts are under way to develop equivalent QoS mechanisms for IP networks, such as MPLS, which is increasingly deployed and available (also discussed in Chapter 10), but we are still a couple of years away from clearly defining the best mechanism, especially because we need to consider the marriage of IP and optical technologies. In the meantime, we are redesigning the Internet core, moving away from what was a connectionless router environment that offered great flexibility and the ability to work around congestion and failures, but at the expense of delays. We are moving to a connection-oriented environment where we can predefine the path and more tightly control the latencies, using techniques such as Frame Relay, ATM, and MPLS, each of which allows us to separate traffic types, prioritize time-sensitive traffic, and ultimately reduce access costs by eliminating leased-line connections.

The other main effort in redesigning the Internet core is that we are increasing the capacity, moving from OC-3 and OC-12 (155Mbps and 622Mbps) at the backbone level to OC-48 (2.5Gbps), OC-192 (10Gbps), and even some early deployments of OC-768 (40Gbps). But remember that the number of bits per second that we can carry per wavelength doubles every year, and the number of wavelengths that we can carry per fiber also doubles every year. So the migration beyond 10Gbps is also under way in the highest class of backbones, and it will continue with the evolution and deployment of advanced optical technologies. (However, to be fair, there are issues of power consumption and customer demand to deal with, which will ultimately determine how quickly what is technically feasible gets adopted in the marketplace.)
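Taken at face value, the two doubling rules above compound: if both the bit rate per wavelength and the number of wavelengths per fiber double each year, the capacity of a single fiber quadruples each year. The short calculation below illustrates this. It is an idealized extrapolation of the stated rule, not a forecast, and the starting point of 40 wavelengths at 10Gbps each is a hypothetical assumption.

```python
def fiber_capacity_gbps(base_rate_gbps: float, base_wavelengths: int, years: int) -> float:
    """Capacity of one fiber after `years`, if rate and wavelength count both double yearly."""
    rate = base_rate_gbps * 2 ** years          # Gbps carried per wavelength
    wavelengths = base_wavelengths * 2 ** years  # wavelengths carried per fiber
    return rate * wavelengths


# Hypothetical starting point: 40 wavelengths, each at OC-192 (10Gbps).
year0 = fiber_capacity_gbps(10, 40, 0)   # 400 Gbps
year1 = fiber_capacity_gbps(10, 40, 1)   # 1,600 Gbps
year3 = fiber_capacity_gbps(10, 40, 3)   # 25,600 Gbps
```

Each year multiplies the total by four, so three years give a factor of 64. As the text notes, power consumption and customer demand determine how much of this technical headroom is actually deployed.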

The emergent generation of Internet infrastructure is quite different from the traditional foundation. It is geared for a new set of traffic and application types: high-speed, real-time, and interactive multimedia. It must be able to support and guarantee CoS and QoS. As discussed in Chapter 9, it includes next-generation telephony, which is a new approach to providing basic telephony services while using IP networks.

The core of the Internet infrastructure will increasingly rely on DWDM and optical networking. It will require the use of ATM, MPLS, and Generalized MPLS (GMPLS) networking protocols to ensure proper administration of performance. (Optical advances are discussed in Chapter 11, "Optical Networking.") New generations of IP protocols are being developed to address real-time traffic, CoS, QoS, and security. Distributed network intelligence is being used to share the network functionality. At the same time, broadband access line deployment continues around the world, with new high-speed fiber alternatives, such as passive optical networks (PONs), gaining interest. (PONs are discussed in Chapter 12.) An impressive number of wireless broadband alternatives are also becoming available, as discussed in Chapter 15, "WMANs, WLANs, and WPANs."

Service Providers and Interconnection

There is a wide range of service providers in the Internet space, and they vary greatly. One way they differ is in their coverage areas. Some providers focus on simply serving a local area, others are regionally based, and others offer national or global coverage. Service providers also vary in the access options they provide. Most ISPs offer plain old telephone service (POTS), and some offer ISDN, xDSL, Frame Relay, ATM, cable modem service, satellite, and wireless as well. Providers also differ in the services they support. Almost all providers support e-mail (but not necessarily at the higher-tier backbone level). Some also offer FTP hosting, Web hosting, name services, VPNs, VoIP, application hosting, e-commerce storefronts, and streaming media. Providers could service a very wide variety of applications, and as a result, there is differentiation on this basis as well. Two other important issues are customer service and the number of hops a provider must take in order to get to the main point of interconnection to the Internet.

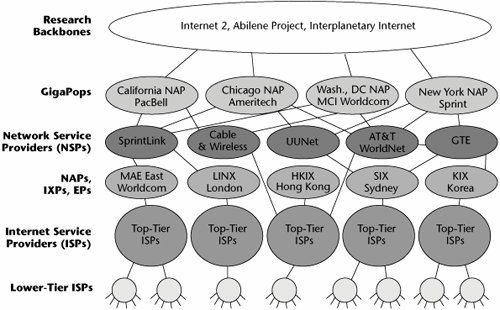

It is pretty easy to become an ISP in a developed economy: Pick up a router, lease a 56Kbps/64Kbps line, and you're in business. But this is why there are some 10,000 such providers, of varying sizes and qualities, worldwide. And it is why there is a service provider pecking order: Research backbones have the latest technology, top-tier providers focus on business-class services, and lower-tier providers focus on rock-bottom pricing. There are therefore large variations in terms of the capacity available, the performance you can expect, the topology of the network, the levels of redundancy, the numbers of connections with other operators, and the level of customer service and the extent of its availability (i.e., whether it is a 24/7 or a Monday-through-Friday, 9-to-5 type of operation). Ultimately, of course, ISPs vary greatly in terms of price.

Figure 8.21 shows an idealized model of the service provider hierarchy. At the top of the heap are research backbones. For example, Internet 2 replaces what the original Internet was for: the academic network. Some 85% of traffic within the academic domain stays within the academic domain, so there's good reason to have a separate backbone for the universities and educational institutions involved in research and learning. Internet 2 will, over time, contribute to the next commercialized platform. It acts as a testbed for many of the latest and greatest technologies, so the universities stress-test Internet 2 to determine how applications perform and which technologies suit which applications or management purposes best. Other very sophisticated technology platforms exist, such as the Abilene Project and the Interplanetary Internet (IPN), which are discussed later in this chapter.

Figure 8.21. Service provider hierarchy

In the commercial realm, the highest tier is the NSP. NSPs are very large backbones, global carriers that own their infrastructures. The NSPs can be broken down into three major subsets:

- National ISPs: These ISPs have national coverage. They include the incumbent telecom carriers and the new competitive entrants.

- Regional ISPs: These ISPs are active regionally throughout a nation or world zone, and they own their equipment and lease their lines from the incumbent telco or competitive operator.

- Retail ISPs: These ISPs have no investment in the network infrastructure whatsoever. They basically use their brand name and outsource all the infrastructure to an NSP or a high-tier ISP, but they build from a known customer database that is loyal and provides an opportunity to offer a branded ISP service.

These various levels of NSPs interconnect, and they can connect in several ways. In the early days of the Internet, there were four main network access points (NAPs), all located in the United States, through which all of the world's Internet traffic interconnected and traveled. The four original NAPs were located in San Francisco (PacBell NAP, operated by PacBell), Chicago (AADS, operated by Ameritech), Tysons Corner, Virginia (MAE-East, operated by MCI Worldcom), and Pennsauken, New Jersey (SprintNAP, operated by Sprint). These IXPs are also called metropolitan area exchanges (MAEs). Today there are hundreds of IXPs distributed around the world.

IXPs

An IXP is a physical infrastructure where different NSPs and/or ISPs can exchange traffic between their networks with their counterparts. By means of mutual peering arrangements, traffic can be exchanged without cost. An IXP is therefore a public meeting point that houses cables, routers, switches, LANs, data communications equipment, and network management and telecommunications links. IXPs allow the NSPs and top-tier ISPs to exchange Internet traffic without having to send the traffic through a main transit link. This translates to decreased costs associated with the transit links, and it reduces congestion on the transit links.

The main purpose of an IXP is to allow providers to interconnect via the exchange rather than having to pass traffic through third-party networks. The main advantage is that traffic passing through an exchange usually isn't billed by any party, whereas connection to an upstream provider would be billed by that upstream provider. Also, because IXPs are usually in the same city as the providers they connect, latencies are reduced: the traffic doesn't have to travel to another city or, potentially, another continent. Finally, there is a speed advantage: the high cost of long-distance leased lines may limit the bandwidth an ISP can afford, whereas a connection to a local IXP might allow limitless data transfer, improving the bandwidth between adjacent ISPs.

A common IXP is composed of one or more switches to which the various ISPs connect. During the 1990s, ATM switches were the most popular type of switch, but today Ethernet switches are increasingly being employed, with the ports used by the different ISPs ranging from 10Mbps to 100Mbps for smaller ISPs to 1Gbps or 10Gbps for larger providers. The costs for operating an IXP are generally shared by all the participating ISPs, with each member paying a monthly fee, depending on the speed of its port or the amount of traffic it offers. (A good source of information showing all the IXPs around the world is www.ep.net.)

Peering Agreements

An alternative to IXPs is the use of private peering agreements. In a peering solution, operators agree to exchange with one another the same amount of traffic over high-speed lines between their routers so that users on one network can reach addresses on the other. This type of agreement bypasses public congestion points, such as IXPs. It is called peering because there is an assumption that the parties are equal, or peers, in that they have an equal amount of traffic to exchange. This is an important point because it makes a difference in how money is exchanged. People are studying this issue to determine whether a regulatory mechanism is needed or whether market forces will drive it. In general, with the first generation of peering agreements, there was an understanding that peers were equals. Newer agreements often call for charges to be applied when traffic levels exceed what was agreed to in negotiations.
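One simple reading of the newer, traffic-based agreements mentioned above is that exchange is free up to the negotiated level and only the excess is billed. The sketch below assumes exactly that. The function name, the volumes, and the per-gigabyte rate are hypothetical, and real agreements may instead measure ratios, peak rates, or percentiles.

```python
def peering_charge(sent_gb: float, agreed_gb: float, rate_per_gb: float) -> float:
    """Charge only for traffic above the level agreed to in negotiations."""
    excess_gb = max(0.0, sent_gb - agreed_gb)
    return excess_gb * rate_per_gb


# Hypothetical month: 100 GB agreed, excess billed at 0.5 currency units per GB.
balanced_month = peering_charge(sent_gb=80.0, agreed_gb=100.0, rate_per_gb=0.5)
heavy_month = peering_charge(sent_gb=120.0, agreed_gb=100.0, rate_per_gb=0.5)
```

While the two networks stay within the agreed level, no money changes hands, which matches the original assumption that peers are equals.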

The most obvious benefit of peering is that because two parties agree to work with one another, and therefore exchange information about the engineering and performance of their networks, the overall performance of the network is increased, including better availability, the ability to administer service-level agreements (SLAs), and the ability to provide greater security. Major backbone providers are very selective about international peering, where expensive international private-line circuits are used to exchange international routes. Buying transit provides the same benefits as peering, but at a higher price. Exchanging traffic between top-tier providers basically means better performance and involves fewer routers. Again, these types of arrangements are critical to seeing the evolution and growth in IP telephony, VoIP, IPTV, interactive gaming, and the expanding varieties of multimedia applications.

One problem with peering is that it can be limited. Under peering arrangements, ISPs often can have access only to each other's networks. In other words, I'll agree to work with you, but you can work only with me; I don't want you working with anyone else. Exclusivity demands sometimes arise.
