5. Visual Realism

The ultimate goal of augmented imagery is to produce seamless and realistic image compositions, which means the elements in the resulting images are consistent in two aspects: spatial alignment and visual representation. This section discusses techniques for visual realism, which attempt to generate images "indistinguishable from photographs" [39].

Most of the source images or videos used in augmented imagery represent scenes of the real world. The expected final composition should not only be consistent and well integrated, but also match or comply with the surrounding environment shown in the scene. It is hard to achieve visual realism in computer-generated images, due to the richness and complexity of the real environment. There are so many surface textures, subtle color gradations, shadows, reflections and slight irregularities that complicate the process of creating a "real" visual experience. Meanwhile, computer graphics itself has inherent weaknesses in realistic representation. This problem is aggravated by the merging of graphics and real content into integrated images, where they are contrasted with each other in the most brutal comparison environment possible. Techniques have been developed for improving visual realism, including anti-aliased rendering and realistic lighting.

5.1 Anti-Aliasing

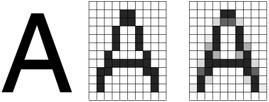

Aliasing is an inherent property of raster displays. Aliasing is evidenced by jagged edges and disappearing small objects or details. According to Crow, aliasing originates from inadequate sampling, when a high frequency signal appears to be replaced by its lower "alias" frequency [40]. Com puter-generated images are stored and displayed in a rectangular array of pixels, and each pixel is set to "painted" or not in aliased content, based on whether the graphics element covers one particular point of the pixel area, say, its center. This manner of sampling is inadequate in that it causes prominent aliasing, as shown in Figure 13.9. Therefore, if computer-synthesized images are to achieve visual realism, it's necessary to apply anti-aliasing techniques to attenuate the potentially jagged edges. This is particularly true for augmented imagery applications, where the graphics are combined with imagery that is naturally anti-aliased.

Figure 13.9: Aliased and anti-aliased version of the character "A"
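Center-point sampling, as described above, can be sketched in a few lines of Python. This is a minimal illustration under an assumed setup: instead of a real glyph, the "graphics element" is a single straight edge (the half-plane below the line y = m·x + b), and the function name `point_sample_edge` is hypothetical. Each pixel is tested only at its center, so every value is strictly 0 or 1 and a shallow edge comes out as a staircase.

```python
def point_sample_edge(width, height, m, b):
    """Rasterize the half-plane y < m*x + b by testing only pixel centers.

    Row j covers y in [j, j+1) and column i covers x in [i, i+1).
    Each pixel is either fully painted (1) or not painted (0): the
    aliased behaviour described in the text.
    """
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            cx, cy = i + 0.5, j + 0.5  # the single sample point: the pixel center
            row.append(1 if cy < m * cx + b else 0)
        image.append(row)
    return image


if __name__ == "__main__":
    # A shallow edge yields long runs and abrupt one-pixel steps ("jaggies").
    for row in reversed(point_sample_edge(12, 4, 0.3, 0.5)):  # top row first
        print("".join("#" if v else "." for v in row))
```

Because no partial values are possible, the edge position is quantized to whole pixels, which is exactly what anti-aliasing tries to soften.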

Anti-aliasing combines smoothing filters with sampling: filtering reduces the high frequencies of the original signal, and sampling reconstructs the signal in the discrete domain of a raster display. There are two common approaches to anti-aliasing: prefiltering and postfiltering. Prefiltering filters at object precision before the value of each pixel sample is calculated. The color of each pixel is based on the fraction of the pixel area covered by the graphic object, rather than on whether an infinitesimal spot in the pixel area is covered. This computation can be very time consuming, as with Bresenham's algorithm [41]. Pitteway and Watkinson [42] developed a more efficient algorithm for finding the coverage of each pixel, and Catmull's algorithm [43] is another example of a prefiltering method using unweighted area sampling.

Postfiltering implements anti-aliasing by increasing the frequency of the sampling grid and then averaging the results down. Each display pixel is computed as a weighted average of N neighbouring samples. This weighted average works as a lowpass filter that reduces the high frequencies subject to aliasing when the image is resampled to a lower resolution. Many different kinds of square masks or window functions can be used in practice, some of which are described in Crow's paper [44]. Postfiltering with a box filter, in which a rectangular neighbourhood of samples is weighted equally, is also called super-sampling. OpenGL supports postfiltering through the accumulation buffer.
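The two approaches can be compared on a simple case. The following Python sketch assumes the graphic object is the half-plane y < m·x + b and that pixel (i, j) is the unit square [i, i+1) × [j, j+1); the function names are hypothetical. `area_coverage` is unweighted area sampling (prefiltering): it integrates the covered height of each pixel column exactly. `supersample_coverage` is box-filter super-sampling (postfiltering): it point-samples an n × n sub-pixel grid and averages with equal weights.

```python
def _clamp_integral(t):
    """Antiderivative of clamp(t, 0, 1) with respect to t, chosen to be 0 for t <= 0."""
    if t <= 0.0:
        return 0.0
    if t <= 1.0:
        return 0.5 * t * t
    return t - 0.5


def area_coverage(i, j, m, b):
    """Prefiltering with unweighted area sampling: exact fraction of pixel
    [i, i+1) x [j, j+1) that lies in the half-plane y < m*x + b."""
    c = b - j  # the edge's height above the pixel's bottom is m*x + c
    if m == 0.0:
        return min(max(c, 0.0), 1.0)
    # Covered height of the column at x is clamp(m*x + c, 0, 1); integrate it
    # over x in [i, i+1] using the substitution u = m*x + c.
    return (_clamp_integral(m * (i + 1) + c) - _clamp_integral(m * i + c)) / m


def supersample_coverage(i, j, m, b, n):
    """Postfiltering with a box filter: point-sample an n x n sub-pixel grid
    and weight every sample equally (n = 1 reduces to center sampling)."""
    inside = 0
    for sy in range(n):
        for sx in range(n):
            x = i + (sx + 0.5) / n
            y = j + (sy + 0.5) / n
            if y < m * x + b:
                inside += 1
    return inside / (n * n)


if __name__ == "__main__":
    # Pixel (0, 0) cut diagonally by the edge y = x is exactly half covered.
    print(area_coverage(0, 0, 1.0, 0.0))             # 0.5
    print(supersample_coverage(0, 0, 1.0, 0.0, 1))   # 0.0 (center lies on the edge)
    print(supersample_coverage(0, 0, 1.0, 0.0, 4))   # 0.375
    print(supersample_coverage(0, 0, 1.0, 0.0, 64))  # 0.4921875
```

The prefiltered value is exact in one evaluation, while the super-sampled estimate only approaches it as n grows, at a cost of n² samples per pixel.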

5.2 Implementing Realistic Lighting Effects

Graphics packages like Direct3D or OpenGL are designed for interactive or real-time applications, displaying 3-D objects using constant-intensity shading or intensity-interpolation (Gouraud) shading. These rendering algorithms are simple and fast, but they do not reproduce lighting effects experienced in the real world, such as shadows, transparency and multiple light-source illumination. Realistic lighting algorithms, including ray tracing and radiosity, provide powerful rendering techniques for obtaining global reflection and transmission effects, but they are much more computationally complex.
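The difference between the two shading modes can be sketched in Python under simplifying assumptions: a single directional light, Lambertian (diffuse-only) reflection, and triangle vertices already projected to 2-D screen coordinates. This illustrates the idea only; it is not how Direct3D or OpenGL implement shading internally, and all function names are hypothetical.

```python
def lambert(normal, light_dir):
    """Diffuse (Lambertian) intensity max(0, N.L); both vectors assumed unit length."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


def flat_shade(face_normal, light_dir):
    """Constant-intensity shading: one intensity for the whole polygon."""
    return lambert(face_normal, light_dir)


def barycentric(p, a, b, c):
    """Barycentric weights of 2-D point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    wa = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    wb = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return wa, wb, 1.0 - wa - wb


def gouraud_shade(p, triangle, vertex_normals, light_dir):
    """Gouraud shading: light each vertex once, then linearly interpolate the
    resulting intensities across the triangle's interior."""
    vertex_intensities = [lambert(n, light_dir) for n in vertex_normals]
    weights = barycentric(p, *triangle)
    return sum(w * i for w, i in zip(weights, vertex_intensities))


if __name__ == "__main__":
    tri = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
    normals = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.8, 0.0, 0.6)]
    light = (0.0, 0.0, 1.0)
    print(gouraud_shade((1 / 3, 1 / 3), tri, normals, light))
```

Both functions look only at the surface being shaded and the light direction; nothing queries other objects, which is why these models cannot produce shadows or interreflections.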

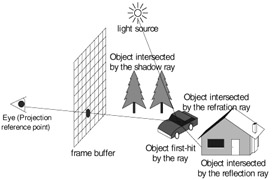

Ray tracing casts a ray (a vector with an associated origin) from the eye through the pixels of the frame buffer into the scene, and collects the lighting effects at the surface-intersection point that is first hit by the ray. Recursive rays are then cast from the intersection point in light, reflection and transmission directions. In Figure 13.10, when a ray hits the first object having reflective and transparent material, these secondary rays in the specular and refractive paths are sent out to collect corresponding illuminations. Meanwhile, a shadow ray pointing to the light source is also sent out, so that any shadow casting can be computed if there are any objects that block the light source from reaching the point. This procedure is repeated until some preset maximum recursion depth or the contribution of additional rays is negligible. The resulting color assigned to the pixel is determined by accumulation of intensity generated from all of these rays. Ray trace rendering reflects the richness of the lighting effects so as to generate realistic images, but the trade-off is considerable computation workload, due to the procedures to find the intersections between a ray and an object. F. S. Hill, Jr. gives detailed description of related contents in his computer graphics text [45].

Figure 13.10: A ray tracing model
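The recursion described above can be sketched as a tiny Python ray tracer. It is deliberately minimal and rests on stated assumptions: spheres only, one point light, grayscale intensities, Lambertian diffuse shading, one shadow ray and one mirror-reflection ray per hit. Transmission (refraction) rays are omitted to keep it short. The names `Sphere`, `trace`, `AMBIENT` and `EPSILON` are hypothetical.

```python
import math
from dataclasses import dataclass

BACKGROUND = 0.0  # intensity returned when a ray leaves the scene
AMBIENT = 0.1     # crude constant stand-in for indirect light
EPSILON = 1e-4    # offset so secondary rays do not re-hit their own surface


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


@dataclass
class Sphere:
    center: tuple
    radius: float
    diffuse: float       # fraction of incoming light reflected diffusely
    reflectivity: float  # fraction reflected as a mirror (0 = none)


def intersect(origin, direction, sphere):
    """Nearest ray parameter t > EPSILON where the ray meets the sphere, else None.
    `direction` must be unit length."""
    oc = sub(origin, sphere.center)
    half_b = dot(oc, direction)
    disc = half_b * half_b - (dot(oc, oc) - sphere.radius ** 2)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-half_b - root, -half_b + root):
        if t > EPSILON:
            return t
    return None


def nearest_hit(origin, direction, spheres):
    """(t, sphere) for the first surface the ray hits, or None."""
    hits = [(intersect(origin, direction, s), s) for s in spheres]
    hits = [(t, s) for t, s in hits if t is not None]
    return min(hits, key=lambda h: h[0]) if hits else None


def trace(origin, direction, spheres, light_pos, depth=0, max_depth=3):
    """Intensity gathered along one ray, recursing on mirror reflections."""
    hit = nearest_hit(origin, direction, spheres)
    if hit is None:
        return BACKGROUND
    t, s = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, s.center))
    start = add(point, scale(normal, EPSILON))  # origin for secondary rays

    # Shadow ray: is any object between the point and the light?
    to_light = sub(light_pos, point)
    light_dist = math.sqrt(dot(to_light, to_light))
    to_light = scale(to_light, 1.0 / light_dist)
    blocker = nearest_hit(start, to_light, spheres)
    in_shadow = blocker is not None and blocker[0] < light_dist

    intensity = AMBIENT * s.diffuse
    if not in_shadow:
        intensity += s.diffuse * max(0.0, dot(normal, to_light))

    # Reflection ray, stopped at a preset maximum recursion depth.
    if s.reflectivity > 0.0 and depth < max_depth:
        mirror = normalize(sub(direction, scale(normal, 2.0 * dot(direction, normal))))
        intensity += s.reflectivity * trace(start, mirror, spheres, light_pos, depth + 1, max_depth)
    return intensity
```

To render an image, `trace` would be called once per pixel with a unit direction from the eye through that pixel's center; for example, `trace((0, 0, 0), (0, 0, -1), [Sphere((0, 0, -5), 1.0, 0.8, 0.0)], (0, 5, 0))` shades the point where the central ray meets the sphere. Nearly all of the work happens in `nearest_hit`, which tests every ray against every object.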

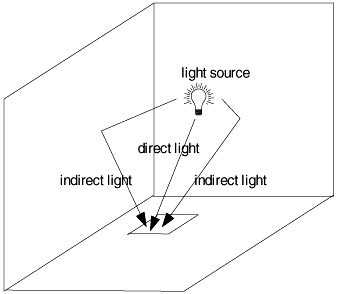

Figure 13.11: Direct and indirect lighting in radiosity

Radiosity is another realistic lighting model. Radiosity methods compute shading from the intensity of radiant energy arriving at a surface, either directly from the light sources or by diffuse reflection from other surfaces. The method is based on a detailed analysis of light reflection between surfaces as energy transfer. The rendering algorithm calculates the radiosity of a surface as the sum of the energy the surface emits itself and the energy it receives from other surfaces. An accurate intensity solution requires preprocessing the environment to subdivide large surfaces into meshes of small patches. Light is calculated in a data-driven manner instead of the traditional demand-driven manner: certain surfaces that drive the lighting are given initial intensities, and their effects on other surfaces are computed iteratively. A prominent attribute of radiosity rendering is viewpoint independence, because the solution depends only on the properties of the light sources and surfaces in the scene. The output images can be highly realistic, featuring soft shadows and color bleeding, unlike the hard shadows and mirror-like reflections of ray tracing. The disadvantages of radiosity are similar to those of ray tracing, in that large computational and storage costs are required. However, if the lighting in an environment is static, radiosity can be precomputed and applied in real time. Radiosity is, in general, the most realistic lighting model currently available.
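The energy balance described above is commonly written, for each patch i, as B_i = E_i + ρ_i Σ_j F_ij B_j, where B_i is the patch's radiosity, E_i the energy it emits, ρ_i its diffuse reflectance, and F_ij the form factor (the fraction of energy leaving patch i that arrives at patch j). The Python sketch below assumes the form factors have already been computed, which in practice is the expensive geometric step, and solves the system by simple Jacobi ("gathering") iteration. It is one iterative scheme among several; the shooting-style progressive refinement that the data-driven description evokes is not what this sketch implements. The function name is hypothetical.

```python
def solve_radiosity(emission, reflectance, form_factors, max_iterations=1000, tol=1e-10):
    """Jacobi (gathering) iteration for B_i = E_i + rho_i * sum_j F_ij * B_j.

    emission[i]        -- E_i, energy emitted by patch i itself
    reflectance[i]     -- rho_i, diffuse reflectance of patch i (0..1)
    form_factors[i][j] -- F_ij, fraction of energy leaving patch i that reaches patch j
    No camera appears anywhere: the solution is view-independent.
    """
    n = len(emission)
    b = list(emission)  # start from direct emission only
    for _ in range(max_iterations):
        # Each pass lets light take one more diffuse bounce between patches.
        new_b = [
            emission[i] + reflectance[i] * sum(form_factors[i][j] * b[j] for j in range(n))
            for i in range(n)
        ]
        if max(abs(x - y) for x, y in zip(new_b, b)) < tol:
            return new_b
        b = new_b
    return b


if __name__ == "__main__":
    # Two facing patches; only patch 0 emits light.
    print(solve_radiosity([1.0, 0.0], [0.5, 0.5], [[0.0, 0.5], [0.5, 0.0]]))
    # converges to approximately [1.0667, 0.2667]
```

The iteration converges when every reflectance is below 1 and each row of form factors sums to at most 1. Because the result holds for any viewpoint, it can be stored and reused while the camera moves, matching the precomputation remark above.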

5.3 Visual Consistency

Using the realistic rendering techniques introduced earlier, augmented imagery systems can generate graphic elements that approximate photography. However, the goal in augmented imagery is realistic integration, and mixing source images that have obviously different lighting effects can yield unsatisfying results. Therefore, visual consistency is an important consideration when preparing graphic patches for augmented imagery. The key to visual consistency is duplicating the conditions of the real environment in the virtual environment. This duplication can be done statically or dynamically. An obvious static approach is to ensure that the graphics environment defines lights in locations identical to those in the real world and with the same illumination characteristics. A dynamic approach might analyze the shading of the patches that will be replaced in order to determine the shadows and varying lighting conditions on each patch, which can then be duplicated on the replacement patch.
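As a rough illustration of the dynamic approach, the Python sketch below makes a strong, hypothetical assumption that is not from this chapter: the original patch has roughly uniform material, so all of its brightness variation is attributed to lighting and shadow. Under that assumption, each pixel's brightness relative to the patch mean serves as a shading factor that can be multiplied into a same-sized replacement patch. Grayscale values in [0, 1] and the function names are also assumptions.

```python
def shading_map(original):
    """Per-pixel shading factor of a grayscale patch relative to its mean brightness.

    Hypothetical assumption: uniform albedo, so any variation is lighting or shadow.
    """
    values = [v for row in original for v in row]
    mean = sum(values) / len(values)
    if mean == 0.0:
        raise ValueError("patch is completely dark; shading cannot be estimated")
    return [[v / mean for v in row] for row in original]


def relight_patch(replacement, original):
    """Apply the original patch's shading to a same-sized replacement patch,
    clamping the results to the displayable range [0, 1]."""
    shading = shading_map(original)
    return [
        [min(1.0, max(0.0, r * s)) for r, s in zip(r_row, s_row)]
        for r_row, s_row in zip(replacement, shading)
    ]


if __name__ == "__main__":
    # The left pixel of the original lies in shadow, so it darkens the replacement too.
    print(relight_patch([[0.6, 0.6]], [[0.5, 1.0]]))
```

Real scenes violate the uniform-albedo assumption wherever the replaced surface has texture, which is one reason fully automatic lighting recovery is hard.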

Systems may acquire lighting information by analyzing the original scene, as in scene-dependent modeling. But, compared with geometric attributes, completely reconstructing the source lighting is a difficult task, because a system must deduce multiple elements from a single input. The difficulty stems from the complexity of real-world illumination, in which the color and intensity of any given point are the combined result of the lights, the object material and the environment. A common approach is to adjust lighting interactively when rendering images or graphics elements to be augmented with another source; this depends on the experience and skill of the operator.