18.2 Distributed Lock Manager (DLM)

Most system administrators have a working knowledge of the locking mechanisms available to applications. Some of the more common functions applications use to achieve mutual exclusion are flock(2), fcntl(2), and lockf(3). As you probably expected, clustering adds a level of complexity to locking. The kernel data structures supporting traditional locking reside in the physical memory of a single system, which raises a legitimate question: are UNIX (POSIX) locks effective in a TruCluster Server cluster? Have no fear. CFS is POSIX compliant and is prepared to deal with all lock requests.
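
For comparison with what follows, here is a minimal sketch of one of these traditional calls: a non-blocking exclusive flock(2) lock taken through Python's standard `fcntl` module. It is ordinary single-system code; the path and the helper name `try_exclusive` are illustrative only, and, per the text above, it is CFS that makes such requests work across a TruCluster Server cluster.

```python
import errno
import fcntl
import os
import tempfile


def try_exclusive(path):
    """Open `path` and try to take an exclusive flock(2) lock without blocking.

    Returns the open file descriptor on success (the lock is held until it is
    released with LOCK_UN or the descriptor is closed), or None if another
    open file description already holds a conflicting lock."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        fcntl.flock(fd, fcntl.LOCK_EX | fcntl.LOCK_NB)  # LOCK_NB: fail, don't wait
    except OSError as exc:
        os.close(fd)
        if exc.errno in (errno.EAGAIN, errno.EWOULDBLOCK, errno.EACCES):
            return None                                # someone else holds it
        raise                                          # unexpected error
    return fd


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "demo.lock")
    first = try_exclusive(path)    # succeeds: nobody holds the lock yet
    second = try_exclusive(path)   # separate open file description, refused
    print(first is not None, second is None)  # True True
    fcntl.flock(first, fcntl.LOCK_UN)
    os.close(first)
```

Because flock(2) locks belong to the open file description, the second open in the same process is refused just as a request from another process would be.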

However, traditional UNIX locks lack the sophistication that many components and applications in a cluster environment need. The TruCluster Server Distributed Lock Manager (DLM) component provides a form of distributed (cluster-wide) locking that goes well beyond traditional locks by providing:

- More than just exclusive and shared access locks (see the excerpt from the dlm(4) reference page below)
- Asynchronous notification to the lock holder that a lock it holds is blocking another application's request for the lock
- Asynchronous lock grant notification
- Lock conversion capability (synchronous and asynchronous)
- Application-created resource names representing the contested resource
- A per-resource lock value block for communication between contending applications
- Deadlock detection and notification mechanisms
- A DLM API for creating cluster-aware applications

The following is an excerpt from the dlm(4) reference page showing the available lock types.

DLM_NLMODE Null mode (NL). This mode grants no access to the resource; it serves as a placeholder and indicator of future interest in the resource. The null mode does not inhibit locking at other lock modes; further, it prevents the deletion of the resource and lock value block, which would otherwise occur if the locks held at the other lock modes were dequeued.

DLM_CRMODE Concurrent Read (CR). This mode grants the caller read access to the resource and permits write access to the resource by other users. This mode is used to read data from a resource in an unprotected manner, because other users can modify that data as it is being read. This mode is typically used when additional locking is being performed at a finer granularity with sublocks.

DLM_CWMODE Concurrent Write (CW). This mode grants the caller write access to the resource and permits write access to the resource by other users. This mode is used to write data to a resource in an unprotected fashion, because other users can simultaneously write data to the resource. This mode is typically used when additional locking is being performed at a finer granularity with sublocks.

DLM_PRMODE Protected Read (PR). This mode grants only read access to the resource by the caller and other users. Write access is not allowed. This is the traditional share lock.

DLM_PWMODE Protected Write (PW). This mode grants the caller write access to the resource and permits only read access to the resource by other users; the other users must have specified concurrent read mode access. No other writers are allowed access to the resource. This is the traditional update lock.

DLM_EXMODE Exclusive (EX). The exclusive mode grants the caller write access to the resource and permits no access to the resource by other users. This is the traditional exclusive lock.
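
Read together, the six mode descriptions imply a compatibility relation: a new request can be granted only if its mode is compatible with every lock already granted on the resource. The sketch below encodes that relation as we read it from the excerpt (it matches the classic VMS-style DLM compatibility matrix); the names `COMPATIBLE` and `can_grant` are our own and are not part of the dlm(4) API.

```python
# Modes as named in dlm(4), without the DLM_ prefix and MODE suffix.
MODES = ("NL", "CR", "CW", "PR", "PW", "EX")

# COMPATIBLE[m] is the set of modes other holders may hold while a lock in
# mode m is granted (derived from the dlm(4) mode descriptions above).
COMPATIBLE = {
    "NL": {"NL", "CR", "CW", "PR", "PW", "EX"},  # placeholder: blocks nothing
    "CR": {"NL", "CR", "CW", "PR", "PW"},        # unprotected read
    "CW": {"NL", "CR", "CW"},                    # unprotected write
    "PR": {"NL", "CR", "PR"},                    # traditional share lock
    "PW": {"NL", "CR"},                          # traditional update lock
    "EX": {"NL"},                                # traditional exclusive lock
}


def can_grant(requested, granted_modes):
    """Return True if a lock in `requested` mode is compatible with every
    mode in `granted_modes` (the locks already granted on the resource)."""
    return all(requested in COMPATIBLE[held] for held in granted_modes)


if __name__ == "__main__":
    print(can_grant("PR", ["PR", "CR"]))  # True: readers share
    print(can_grant("PW", ["PR"]))        # False: PW admits only CR readers
    print(can_grant("EX", ["NL"]))        # True: NL never blocks
```

The matrix is symmetric, so it does not matter which of two holders asked first; only the set of currently granted modes decides.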

How is DLM used? Which TruCluster Server components use it, and which resource names do they use? Can user applications use DLM? DLM is used to coordinate cluster-wide activities; it is not restricted to file locking. DLM controls access to a resource name, which represents a system resource. The resources can be real (a device, data structure, alias, and so on) or ephemeral (a user-defined or system-defined resource name representing anything, or nothing at all).

The following examples use a homegrown resource name, "den". This resource name represents nothing but a figment of our imagination, yet we can use it in a communication scenario where an application running on member1 needs to know whether a companion application is running on member2 (within the same cluster). If the first application takes out an Exclusive lock on the resource named "den", then when the second application tries to get an Exclusive lock on "den", its request is refused and an unsuccessful status is returned. At that point the second application knows that its companion is up and running. If it instead gets a success status back, it knows that it is the first of the companion applications to run and that the other is not currently running.
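
The companion-detection idea can be modeled with a toy, single-process lock table. This is not the DLM API: `ToyLockTable`, `try_exclusive`, `release`, and `companion_running` are hypothetical names, and a real application would make the request through the DLM library, presumably with an option that makes a conflicting request fail immediately rather than wait (check dlm(4) for the actual interface).

```python
class ToyLockTable:
    """Hypothetical stand-in for cluster-wide locking: maps a resource name
    to the owner holding an Exclusive lock on it. Not the DLM API."""

    def __init__(self):
        self._ex_owner = {}

    def try_exclusive(self, resource, owner):
        """Grant an Exclusive lock if nobody holds one; never waits."""
        if resource in self._ex_owner:
            return False            # refused: someone else holds the lock
        self._ex_owner[resource] = owner
        return True

    def release(self, resource, owner):
        """Drop the lock if `owner` holds it (e.g., the application exits)."""
        if self._ex_owner.get(resource) == owner:
            del self._ex_owner[resource]


def companion_running(table, resource, me):
    """Return True if a companion already holds `resource`.

    On success we now hold the lock ourselves, which in turn tells any
    companion started later that we are running."""
    return not table.try_exclusive(resource, me)


if __name__ == "__main__":
    cluster = ToyLockTable()
    print(companion_running(cluster, "den", "app@member1"))  # False: first one up
    print(companion_running(cluster, "den", "app@member2"))  # True: member1 holds it
```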

What did these applications lock? Nothing at which we can point a finger. They locked a resource name representing something that had meaning to them and had no meaning to any other applications in the cluster. The following is a truncated display of the system attributes (approximately 60 in total) pertaining to DLM.

    # sysconfig -q dlm
    dlm:
    dlm_name = Distributed Lock Manager - V2.0
    dlm_lkbs_allocated = 36
    dlm_rsbs_allocated = 36
    dlm_tot_lkids = 8191
    dlm_lkids_inuse = 36
    dlm_ddlckq_len = 0
    dlm_timeoutq_len = 0
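
Attribute values like these are easy to pick up from a monitoring script. The sketch below parses the `name = value` lines of `sysconfig -q dlm` output (as shown above) into a dictionary. The helper name `parse_sysconfig` is ours, and reading `dlm_lkids_inuse` against `dlm_tot_lkids` as lock-ID usage is an assumption based only on the attribute names.

```python
def parse_sysconfig(text):
    """Parse `sysconfig -q` output into {attribute: value}.

    Lines look like 'name = value'; the 'dlm:' subsystem header and blank
    lines are skipped. All-digit values are converted to int."""
    attrs = {}
    for line in text.splitlines():
        name, sep, value = line.partition("=")
        if not sep:
            continue                      # header such as 'dlm:' or blank line
        value = value.strip()
        attrs[name.strip()] = int(value) if value.isdigit() else value
    return attrs


SAMPLE = """dlm:
dlm_name = Distributed Lock Manager - V2.0
dlm_lkbs_allocated = 36
dlm_rsbs_allocated = 36
dlm_tot_lkids = 8191
dlm_lkids_inuse = 36
dlm_ddlckq_len = 0
dlm_timeoutq_len = 0
"""

if __name__ == "__main__":
    a = parse_sysconfig(SAMPLE)
    # Assumed meaning (from the attribute names): lock IDs in use vs. total.
    print(f"{a['dlm_lkids_inuse']}/{a['dlm_tot_lkids']} lock IDs in use")
    # prints "36/8191 lock IDs in use"
```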

The kdbx(8) command shown later in this section displays many of the Resource Block (RSB) resource names supporting the cluster components, as well as the one supporting our "den" resource (array element 2605). The command takes a while to complete, and this particular run was stopped before it finished. It would be interesting to run the command on both members of a two-member cluster to see whether the per-member lock databases are the same. Remember that this component is called the Distributed Lock Manager because portions of the in-memory lock database can be distributed across the members of the cluster. However, if the locking can be accomplished using one member only, the other members need little or no knowledge of the locking activities.

But what happens if another cluster member asks for a lock that is currently in use on only one member of the cluster? How will the member that is new to the locking scenario become aware that the resource it wants to lock is already locked on another member? One solution would be to replicate the entire lock database in the memory of every cluster member. That solution wastes memory and causes traffic between members each time a lock is created, tested, deleted, or accessed in any way.

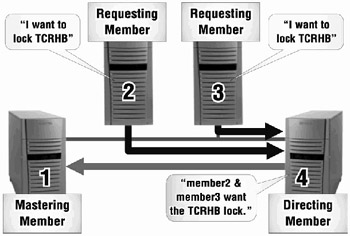

The actual solution distributes a lock mastering table that is identical on every cluster member. When access to a lock is requested, the resource name (representing the lock) is passed through a hashing algorithm that ultimately generates an offset into the lock mastering table (called the lock directory vector table). The corresponding entry in the table identifies the cluster member that is the director for lock traffic involving this particular resource. All requests for new locks on a particular resource are funneled to the same director member, which is responsible for directing the lock traffic to the cluster member that masters the lock structures for this resource (see Figure 18-3 and Figure 18-4). Note that once a requesting member is told which member masters a particular lock, subsequent activity on the same lock goes directly to the mastering member.
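
The director lookup can be sketched in a few lines. TruCluster Server's actual hash function and table layout are not described here, so the sketch substitutes CRC-32 (`zlib.crc32`) and a plain list of member names; `DIRECTORY_VECTOR` and `director_for` are hypothetical names. What matters is that every member holds an identical table and runs the same function, so all members agree on the director without exchanging messages.

```python
import zlib

# Hypothetical lock directory vector: identical on every member while
# membership is unchanged. Here it simply lists the current members.
DIRECTORY_VECTOR = ["member1", "member2"]


def director_for(resource_name, vector=DIRECTORY_VECTOR):
    """Hash the resource name to an offset in the directory vector and
    return the member acting as director for that resource.

    CRC-32 is a stand-in; the real DLM hash is not specified in the text."""
    offset = zlib.crc32(resource_name.encode()) % len(vector)
    return vector[offset]


if __name__ == "__main__":
    for name in ("den", "rootdg", "clua_doorbell"):
        print(name, "->", director_for(name))
```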

Figure 18-3: DLM – Create a Lock

Figure 18-4: DLM – Directing Lock Request to the Lock Master

This may seem like a double indirection without much payback, but it in fact allows the lock directory vector table to remain constant as long as cluster membership does not change. Meanwhile, the director member directs traffic to the mastering member, which holds the master copy of the lock database for this particular resource. Other cluster members may be the master for other locks. Thus the lock database (and its associated processing responsibilities) is distributed across the cluster.

If a member is requesting a brand new lock (such as "den" in our example), the string "den" will be hashed down to a number between zero and one less than the cluster member count. The derived number will be used as an offset into the lock directory vector table to figure out which member is the director for this resource. The director member is queried to find out which member is the master member for the resource "den". The director responds that it currently has no knowledge of the resource "den" and so deems that the master will be the requesting member. (You asked for it, you deal with it.) The director member keeps track of the fact that the new master for "den" is the requesting member, so that any subsequent requests from any cluster member will be directed to the appropriate member for lock mastering information.
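
The first-request flow, combined with the earlier note that later requests go straight to the master, can be modeled as follows. `Director`, `Member`, `lookup_or_assign`, and `locate_master` are hypothetical names; messages between members are reduced to method calls, and the director object is passed in directly rather than found by hashing, to keep the sketch short.

```python
class Director:
    """Hypothetical director role: remembers which member masters each
    resource it is responsible for."""

    def __init__(self, name):
        self.name = name
        self.master_of = {}       # resource name -> mastering member
        self.queries = 0          # lookups that reached this director

    def lookup_or_assign(self, resource, requester):
        """Return the master for `resource`; if the resource is unknown,
        the requesting member becomes its master ("you asked for it")."""
        self.queries += 1
        return self.master_of.setdefault(resource, requester)


class Member:
    """Hypothetical cluster member that caches master locations it learns."""

    def __init__(self, name):
        self.name = name
        self.known_master = {}    # resource name -> mastering member

    def locate_master(self, resource, director):
        # Ask the director only once; afterwards go straight to the master.
        if resource not in self.known_master:
            self.known_master[resource] = director.lookup_or_assign(resource, self.name)
        return self.known_master[resource]


if __name__ == "__main__":
    director = Director("member2")
    m1, m3 = Member("member1"), Member("member3")
    print(m1.locate_master("den", director))  # member1: first requester masters "den"
    print(m3.locate_master("den", director))  # member1: the director already knows
    print(m3.locate_master("den", director))  # member1: answered from m3's cache
    print(director.queries)                   # 2
```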

When a member leaves the cluster, the DLM database has to be rebuilt. This may require the selection of a new master for a resource and will cause the directory vector to be recreated and distributed to all remaining members.
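
A rough model of that rebuild: recreate the directory vector from the surviving members and list the resources whose master has left, since those are the ones that need a new master. How TruCluster Server actually chooses the new master is not described here, so the sketch stops at identifying the orphaned resources. All names are hypothetical, and CRC-32 stands in for the real hash.

```python
import zlib


def rebuild_after_departure(members, departed, master_of):
    """Hypothetical model of the DLM rebuild when `departed` leaves.

    members   -- list of member names before the departure
    master_of -- {resource name: mastering member}
    Returns (new_vector, orphaned): the recreated directory vector (one
    entry per surviving member, to be distributed to all of them) and the
    sorted resources whose master was the departed member."""
    new_vector = [m for m in members if m != departed]
    orphaned = sorted(r for r, m in master_of.items() if m == departed)
    return new_vector, orphaned


def director_for(resource, vector):
    """Stand-in director lookup: CRC-32 offset into the directory vector."""
    return vector[zlib.crc32(resource.encode()) % len(vector)]


if __name__ == "__main__":
    before = ["member1", "member2", "member3"]
    masters = {"den": "member1", "rootdg": "member2", "lock_dg": "member2"}
    vector, orphaned = rebuild_after_departure(before, "member2", masters)
    print(vector)     # ['member1', 'member3']
    print(orphaned)   # ['lock_dg', 'rootdg']
    for r in masters:  # directors may move because the vector shrank
        print(r, director_for(r, before), "->", director_for(r, vector))
```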

Discussing the internals of TruCluster Server is beyond the scope of this book, but you may find it enlightening to scan through the resource names in the following output and see if you can recognize some of the cluster components and services that use DLM for cluster-wide synchronization. Also, note that not all cluster components use DLM.

    # kdbx -k /vmunix
    ...
    (kdbx) array_action "rhash_tbl_entry_t *" 8191 &rhash_tbl[0] -cond %c.chain!=0 p %i, (char *)(%c.chain).rsbdom.resnam
    134 0xfffffc001d4c9878 = "cfs_devt:swap:19_83"
    209 0xfffffc00024995f8 = "rootdg"
    249 0xfffffc001ba9c838 = "clua_si_616_0"
    347 0xfffffc001a235c38 = "/etc/exports\377^C"
    515 0xfffffc000dd07238 = "cluster_lockd^C"
    527 0xfffffc001d59f738 = "cfs_devt:19_198"
    626 0xfffffc00098f4bf8 = "clua_si_1126_1"
    639 0xfffffc0003443738 = "clua_doorbell"
    727 0xfffffc000b0f7eb8 = "00000080-PR-DBP815000000-MM-DBP8^B"
    790 0xfffffc0006cc6fb8 = "clua_si_1484_0"
    841 0xfffffc000ebc81f8 = "clua_si_2301_0"
    917 0xfffffc001a2354b8 = "RPC100005.17^A"
    981 0xfffffc00077bf238 = "clua_si_1142_1"
    1056 0xfffffc001e98d378 = "clua_si_2793_1"
    1070 0xfffffc0006cc61f8 = "clua_si_765_1"
    1100 0xfffffc00195aad38 = "clua_si_619_1"
    1337 0xfffffc0003442e78 = "clua_pm_16.85.0.35"
    1464 0xfffffc000bcd0838 = "clua_si_1198_0"
    1492 0xfffffc001e98deb8 = "clua_si_111_1"
    1563 0xfffffc001a2341f8 = "clua_si_1137_0"
    1576 0xfffffc0019246978 = "clua_si_965_1"
    1637 0xfffffc000b5bb9b8 = "clua_si_316_1"
    1782 0xfffffc000d12d378 = "00000000-PR-DBP815000000-MM-DBP8^B"
    2141 0xfffffc001d59ebf8 = "cfs_devt:19_126"
    2508 0xfffffc001e98dc38 = "596"
    2539 0xfffffc001d59eab8 = "cfs_devt:19_114"
    2570 0xfffffc00195ab5f8 = "clua_si_842_0"
    2605 0xfffffc000a5d95f8 = "den"
    2656 0xfffffc001a2345b8 = "clua_si_618_0"
    2845 0xfffffc001d59f9b8 = "cfs_devt:19_128"
    2851 0xfffffc00029a6ab8 = "clua_si_1143_1"
    2852 0xfffffc000e24cab8 = "00000001-PR-DAALL_DB0000-MM-DAAL^B"
    2884 0xfffffc001d59e0b8 = "lock_dg"
    3258 0xfffffc0005e670f8 = "00000000-MN-DAALL_DB"
    3370 0xfffffc001d59e838 = "clua_si_3354_1"
    3576 0xfffffc00034421f8 = "clua_si_1149_0"
    3755 0xfffffc001ce38ab8 = "clua_si_1122_0"
    3863 0xfffffc0004f55c38 = "clua_si_1124_0"
    3874 0xfffffc001d59e6f8 = "cfs_devt:40_5"
    4061 0xfffffc001ba9d0f8 = "RPC100005.6"
    4397 0xfffffc001ce39738 = "00000081-PR-DBP815000000-MM-DBP8^B"
    4536 0xfffffc000e60d378 = "00000000-MS-DBP815"
    4539 0xfffffc0019247eb8 = "clua_si_967_0"
    4585 0xfffffc000a3e7d78 = "00000001-MP-DBP815"
    4610 0xfffffc00032015f8 = "00000001-MN-DBP815"
    4769 0xfffffc000b5baab8 = "clua_si_177_0"
    5136 0xfffffc000dd06e78 = "clua_si_1022_1"
    5239 0xfffffc0004f554b8 = "clua_si_1123_0"
    ...
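
To scan a longer capture of this output, a short script can pull out the quoted resource names and tally them by their leading component (for example `clua` or `cfs`). The regular expression assumes the `index address = "name"` layout shown above; `resource_names` and `prefix_counts` are hypothetical helpers, and deciding which cluster component owns each prefix is left to the reader.

```python
import re
from collections import Counter

# Matches entries like: 2605 0xfffffc000a5d95f8 = "den"
ENTRY = re.compile(r'(\d+)\s+(0x[0-9a-fA-F]+)\s+=\s+"([^"]*)"')


def resource_names(text):
    """Return (hash-table index, resource name) pairs found in kdbx output."""
    return [(int(idx), name) for idx, _addr, name in ENTRY.findall(text)]


def prefix_counts(names):
    """Tally names by their leading run of letters ('clua', 'cfs', 'RPC', ...);
    names starting with anything else are counted as 'other'."""
    counts = Counter()
    for name in names:
        m = re.match(r"[A-Za-z]+", name)
        counts[m.group(0) if m else "other"] += 1
    return counts


SAMPLE = '''134 0xfffffc001d4c9878 = "cfs_devt:swap:19_83"
209 0xfffffc00024995f8 = "rootdg"
249 0xfffffc001ba9c838 = "clua_si_616_0"
639 0xfffffc0003443738 = "clua_doorbell"
2605 0xfffffc000a5d95f8 = "den"
3258 0xfffffc0005e670f8 = "00000000-MN-DAALL_DB"'''

if __name__ == "__main__":
    entries = resource_names(SAMPLE)
    print(prefix_counts(name for _idx, name in entries).most_common())
```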

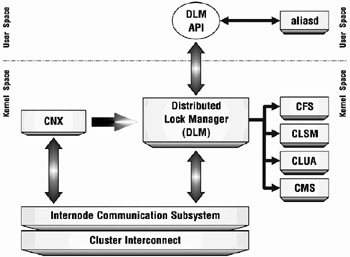

Figure 18-5 shows the position of DLM in the component hierarchy.

Figure 18-5: DLM Cluster Subsystem Communication

Additional information on DLM is available in Appendix B.
