Exploring Your Server Load-Balancing Devices

| Cisco developed the Local Director, its first standalone load-balancing appliance, in the mid-1990s. Toward the late 1990s, Cisco embedded the IOS SLB feature within Cisco IOS for basic SLB functionality. Cisco retired the Local Director in 2001 and concentrated its content switching development efforts on the more powerful CSS and CSM products. Although SLB has been available in Cisco IOS since the late 1990s, and you can still use it in very small data center environments, the CSS and CSM provide a superior hardware platform and robust SLB feature support. Unique to the CSS and CSM is their customized hardware for connection processing, packet forwarding, and control processing.

Note: The control processing hardware is also called the slowpath.

Content Services Switch

The CSS 11500 series is a standalone modular appliance, designed for any size of data center environment. The following module types make up the architecture of the CSS:

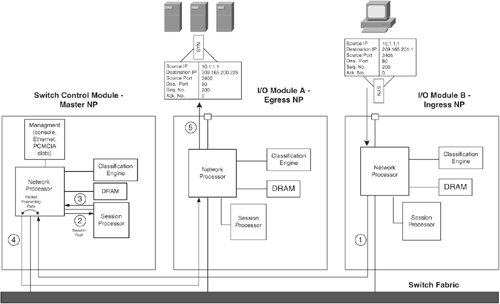

Each of the modules in the preceding list includes a network processor (NP), which is responsible for forwarding processing. Additionally, the NP interfaces the module to the switch fabric. Each module also contains a classification engine (CE) for accelerating access control list (ACL) processing and Address Resolution Protocol (ARP) table lookups. The NP contains four 200-MHz CPUs, each with its own direct access to the CE, which substantially decreases the load on NP resources so that the NP can concentrate on packet forwarding. The forwarding processors within the NP use DRAM for packet buffering. The session processor (SP) is responsible for the connection processing of the switch and control processing on the SCM.

The beauty of this architecture is that it is scalable: adding any type of module to the chassis increases overall performance. In other words, the NP, SP, and CE within any module can process their own packets or the packets of any other module. The SAM always processes packets of other modules, because it does not have any I/O interfaces of its own. Figure 10-5 illustrates the CSS switching architecture.

Figure 10-5. The CSS Switching Architecture

The CSS I/O modules distribute incoming flows evenly across the available modules. For example, if three clients initiate flows from the I/O module in Figure 10-5, the I/O module switches one request to the SCM and another to the SAM, and processes one itself.

CSS Packet Flow

Consider an example in which a client, located upstream via the Fast Ethernet I/O module B in Figure 10-6, issues a single HTTP request for the virtual server configured previously in Example 10-1. The real servers for this virtual server are reachable via Fast Ethernet ports on a different module within the CSS chassis (I/O module A). Figure 10-6 illustrates the flow of this packet through the CSS.

Figure 10-6. CSS Packet Flow

The packet takes the following steps through the CSS:

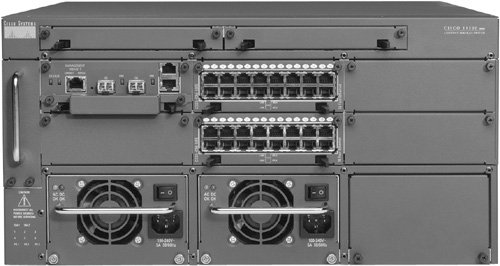
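The even distribution of flows across modules described above can be sketched in a few lines of Python. This is only an illustration: the text says the I/O module distributes flows evenly but does not name the algorithm, so the round-robin policy, the `distribute_flows` function, and the flow and module labels are all assumptions made for the example.

```python
from collections import Counter
from itertools import cycle


def distribute_flows(flows, modules):
    """Assign each flow to a module in turn.

    Round-robin is an assumed policy; the source only states that
    flows are distributed evenly across the available modules.
    """
    next_module = cycle(modules)
    return {flow: next(next_module) for flow in flows}


# Three modules as in the Figure 10-5 example: the I/O module itself,
# the SCM, and one SAM (labels are hypothetical).
modules = ["I/O", "SCM", "SAM"]
assignment = distribute_flows(["client-1", "client-2", "client-3"], modules)
per_module = Counter(assignment.values())

# Adding a second SAM spreads the same kind of load over four modules.
four_modules = modules + ["SAM-2"]
per_module_four = Counter(distribute_flows(range(8), four_modules).values())
```

With three modules and three flows, each module ends up with one flow. With a second SAM and eight flows, each of the four modules handles two.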

In the example in Figure 10-6, the single I/O module distributes the total load among three modules. If you added another SAM to this CSS, the I/O module would distribute the load across four modules.

CSS Models

The CSS chassis is available in three different form factors, each with the NP/SP/CE architecture described previously:

Figure 10-9. The CSS 11506 Content Switch

Table 10-1 gives the specifications for these content switches.

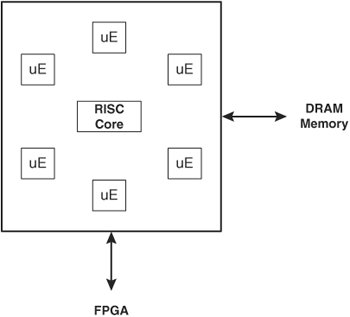

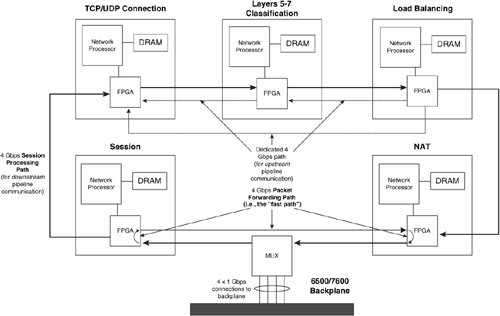

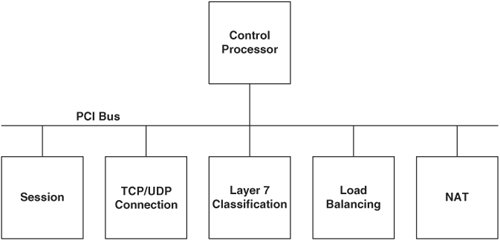

Content Switching Module

The Content Switching Module (CSM) is an integrated services module that you can install in your Catalyst 6500 series switch or Cisco 7600 Series Internet router. Figure 10-10 shows the CSM.

Figure 10-10. The Content Switching Module

The CSM supports four 1-Gigabit connections into the switching fabric, which the CSM multiplexes into the processing fabric. The CSM has a pipelined NP architecture in which packets traverse a series of stages that apply logic or modifications to the packet. Figure 10-11 illustrates the CSM pipelined architecture.

Figure 10-11. CSM Pipelined Architecture

Note: Do not confuse HTTP "pipelining," which you learned about previously, with the CSM "pipelined" architecture; they refer to different concepts.

Each stage contains a Field Programmable Gate Array (FPGA), 128 MB of DRAM, and an NP. Each NP has an Intel IXP 1200 processor containing six RISC microengines (uE) and a RISC core, as Figure 10-12 illustrates. The seven IXP subprocessors can operate in parallel on packets from different flows. Each IXP provides 1 billion operations per second, giving the CSM an aggregate 5 billion operations per second across the five stages of the pipeline. The FPGAs are the physical connection points between the stages of the architecture, providing an addressable communications mechanism between the NPs. For example, if one stage needs to communicate with another in the pipeline, the intermediary FPGAs simply forward the packet to the next FPGA until the packet reaches the requested stage.

Figure 10-12. Network Processor Architecture

Note: You can view the use of the CSM IXPs using CiscoView Device Manager for the CSM. You can also see the statistics for individual IXPs using the show module csm mod-num tech-support processor IXP-num command, and you can use the show module csm mod-num tech-support fpga command to display the FPGA statistics.
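The addressable FPGA forwarding and the aggregate throughput figure described above can be illustrated with a small Python sketch. It is a model for intuition only, not a description of the CSM firmware: the stage labels and their linear order are taken from the pipeline walkthrough in the paragraphs that follow, and the names `STAGES` and `intermediary_fpgas` are hypothetical. The sketch covers only forward traversal of the main pipeline.

```python
# Assumed stage order, following the pipeline description in this section.
STAGES = ["session", "tcp_udp", "layer5_7", "load_balancing", "nat"]

# One IXP per stage, each rated at 1 billion operations per second.
OPS_PER_IXP = 1_000_000_000
AGGREGATE_OPS = OPS_PER_IXP * len(STAGES)


def intermediary_fpgas(src, dst):
    """Return the stages whose FPGAs only relay a packet from src to dst.

    Models the idea that an addressed packet is passed from FPGA to
    FPGA until it reaches the requested stage.
    """
    i, j = STAGES.index(src), STAGES.index(dst)
    if j <= i:
        raise ValueError("this sketch models forward traversal only")
    return STAGES[i + 1:j]


# A TCP/UDP stage addressing the load-balancing stage directly:
relayed_by = intermediary_fpgas("tcp_udp", "load_balancing")
```

In this model, a packet addressed from the TCP/UDP stage to the load-balancing stage is relayed by the Layer 5-7 stage's FPGA without being processed there.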
The NPs within each stage also maintain a connection to a shared PCI bus. The control processor provides a dedicated general-purpose PowerPC CPU for performing out-of-band health checking and configuration management, which prevents increases in administrative processing from affecting the session or forwarding paths. Additionally, because the control processor is a general-purpose CPU, its functions are performed in software rather than in the IXP hardware, and are therefore much more flexible (albeit slower). Figure 10-13 illustrates the control processor's placement in the CSM.

Figure 10-13. The Shared PCI Bus for Control Processing

The CSM pipeline operates in a manner that is similar to an automobile assembly line. While some algorithms are being computed at one stage of a data pipeline, others are computed at a later stage of the same pipeline. When a packet arrives at the CSM, the session stage is the first to process the packet, as you saw previously in Figure 10-11. The session stage determines whether the packet is part of an existing connection or a modification of an existing connection, such as an HTTP pipelined GET request. If it is part of an existing connection, the session stage sends the packet directly to the NAT stage through the packet forwarding path, completely bypassing the session forwarding path. Otherwise, the session stage forwards the packet through the session forwarding path to the TCP/UDP connection stage. The TCP/UDP connection stage maintains the connection information for the flow: it creates and removes entries in its connection state table, performs delayed binding on flows matching Layer 5-7 policies, and performs virtual server lookups. For flows matching Layer 5-7 policies, the TCP/UDP stage passes the packet and its virtual server lookup results to the Layer 5-7 classification stage.
The Layer 5-7 stage parses the content of the packet for cookies or URLs, using regular expressions that you configure on the CSM, and passes the packet and its results to the load-balancing stage. For Layer 3 and 4 policies, the TCP/UDP stage bypasses the Layer 5-7 stage by addressing the FPGA of the load-balancing stage instead. That is, the load-balancing stage can receive packets from either the TCP/UDP connection stage or the Layer 5-7 stage. The load-balancing stage applies the load-balancing algorithm, persistence policy, and failover mechanism associated with the virtual server you configured for the flow. For flows that require modification to the TCP connection entry during load balancing or Layer 5-7 inspection, either the load-balancing stage or the Layer 5-7 stage may communicate directly with the TCP/UDP stage over a secondary 4-Gbps communication path, as Figure 10-11 illustrates. The load-balancing stage then passes the packet and results to the NAT stage. The NAT stage is the final stage in the pipeline and is responsible for performing the packet transforms, such as NAT and sequence number remapping, and forwarding the packets to the MUX for transmission to the real servers or clients. |
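The stage-by-stage walkthrough above can be summarized as a short decision function. The following Python sketch is a simplified model for illustration: the function name `pipeline_path`, its two boolean parameters, and the stage labels are assumptions introduced here, and the sketch ignores the secondary path back to the TCP/UDP stage.

```python
def pipeline_path(existing_connection, layer5_7_policy):
    """Return the ordered list of CSM stages a packet visits.

    existing_connection: the session stage recognized the packet as
        part of an existing connection.
    layer5_7_policy: the flow matches a Layer 5-7 policy (only
        relevant when the connection is new).
    """
    if existing_connection:
        # Packet forwarding path: straight from the session stage to NAT.
        return ["session", "nat"]

    # Session forwarding path: connection setup and virtual server lookup.
    path = ["session", "tcp_udp"]
    if layer5_7_policy:
        # Content inspection (cookies, URLs) before load balancing.
        path.append("layer5_7")
    # Layer 3 and 4 policies skip straight to load balancing.
    path += ["load_balancing", "nat"]
    return path


established = pipeline_path(existing_connection=True, layer5_7_policy=False)
new_l4 = pipeline_path(existing_connection=False, layer5_7_policy=False)
new_l7 = pipeline_path(existing_connection=False, layer5_7_policy=True)
```

Under these assumptions, a packet on an established connection touches two stages, a new Layer 3 or 4 flow touches four, and a new Layer 5-7 flow touches all five.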
