Planning the SAN

Before you spring for all of the equipment needed to bring an Xsan system to life, you need to pull out a pencil and a calculator and plan the implementation.

The Importance of Planning

Planning the SAN ensures

With the flexibility and convenience of shared storage comes the need for proper security and performance guarantees. In small facilities, full access to every file for every client on the SAN may be acceptable, but other facilities may wish to keep certain projects secret and available only to a select group of editors. Files on the SAN may require different levels of redundancy, depending on their importance. And performance must meet the facility's day-to-day needs if the SAN is to replace its current direct-attached systems. All of these require planning, both of the SAN's physical infrastructure and of the storage areas and folders created on the SAN volume(s).

Most of us are unfortunately aware of the consequences of having important direct-attached storage go down, and of the headaches caused by unavailable network file servers. With a SAN, these worries are combined. Fortunately, Xsan has redundancy provisions for almost every part of the system, so if a hardware or software component fails, a similar component can pick up the ball and keep the SAN online. Proper planning of the infrastructure, and buying the right equipment to implement it, minimizes your SAN's chances of failing.

Most importantly, proper planning lets you smoothly upgrade and enlarge the SAN when your needs outpace its current capabilities. One of Xsan's most outstanding features is that you can add storage or clients to it with almost no downtime. Keeping an eye on the future while planning ensures that the right infrastructure and equipment are in place to accommodate sudden, drastic increases in storage or client demand.

Bandwidth Considerations

We'll start our planning with the most important consideration of all: bandwidth. For the SAN to work properly, it must provide enough throughput for all clients to hum away, reading from and writing to the SAN simultaneously. There are two essential bandwidth considerations when planning your SAN: bandwidth need, the total throughput your clients demand, and bandwidth availability, the throughput your storage and Fibre Channel network can actually deliver.

We want our bandwidth availability to exceed our bandwidth need by a comfortable margin. We'll calculate bandwidth need first, since the result dictates the equipment required to provide enough bandwidth availability.

Calculating Bandwidth Need

Bandwidth need is based on three factors: the number of clients on the SAN, the number of simultaneous real-time video streams each client needs, and the data rate of each stream, in MB/s.

To calculate bandwidth need, simply multiply these three factors together. The result, in megabytes per second (MB/s), is the theoretical maximum bandwidth your SAN must deliver at any given moment. Using the tables in the "Data Rates and Storage Requirements for Common Media Types" section in Lesson 9, you can easily calculate your SAN's bandwidth need. Two examples illustrate the calculation:

A 10-seat SAN for a news facility works entirely on package editing in the DV25 format. Each editor primarily uses an A/B roll technique and therefore needs up to three streams of real-time playback, since cross-dissolves and titles could occur simultaneously in a given sequence. So this SAN's bandwidth need is 10 clients x 3 streams x 3.65 MB/s = 109.5 MB/s.

A three-seat boutique post facility SAN works in SD most days, but is flirting with high-end 720p 24-frame work in 10-bit uncompressed as well. We'll give them a luxurious three streams of real time in this format, assuming they have top-of-the-line G5s to handle it. So the bandwidth need is 3 clients x 3 streams x 55.67 MB/s = 501.03 MB/s.

A Word on Mixed-Stream Environments

In the previous calculations, we didn't mix compressed and uncompressed workflows in a single SAN, although your facility might well have mixed workflows. Mixing different kinds of video streams poses a unique challenge for SAN implementations: the storage controller has a much harder time accurately predicting the needs of a client requesting data from the storage. Uncompressed video streams are highly predictable, since every frame read or written is a whole, complete frame of fixed size. Compressed streams vary, sometimes widely, in how much data is transferred per frame.
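Before weighing the extra caution that mixed streams call for, here is the bandwidth-need multiplication as a minimal Python sketch. `ClientGroup` and `bandwidth_need` are hypothetical names for illustration, not part of Xsan; grouping clients by workflow is simply one way to let a facility with several workflows add up each group's clients x streams x rate.

```python
from dataclasses import dataclass


@dataclass
class ClientGroup:
    """Clients that share one workflow (a hypothetical planning structure, not part of Xsan)."""
    clients: int          # number of editing seats in the group
    streams: int          # simultaneous real-time streams each seat needs
    mb_per_stream: float  # data rate of one stream in MB/s, e.g. from the Lesson 9 tables


def bandwidth_need(groups: list[ClientGroup]) -> float:
    """Theoretical peak need in MB/s: clients x streams x rate, summed over all groups."""
    return sum(g.clients * g.streams * g.mb_per_stream for g in groups)


# The two worked examples from the text
news = [ClientGroup(clients=10, streams=3, mb_per_stream=3.65)]      # DV25 news SAN
boutique = [ClientGroup(clients=3, streams=3, mb_per_stream=55.67)]  # 10-bit uncompressed 720p

print(f"News SAN:     {bandwidth_need(news):.2f} MB/s")
print(f"Boutique SAN: {bandwidth_need(boutique):.2f} MB/s")
```

A facility that mixes both workflows could pass both groups to `bandwidth_need`, but given how unpredictable compressed streams are, treat that sum as a floor rather than a guarantee.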
Because compressed streams are less predictable, a SAN serving mixed streams may seem to perform worse overall than it does when serving exclusively uncompressed or exclusively compressed streams. As a result, you need to be even more conservative when estimating bandwidth need in systems that use mixed streams.

Calculating Bandwidth Availability

Bandwidth availability is based on the following:

As a general rule of thumb, when using Apple Xserve RAIDs as your storage, and assuming LUNs formatted with seven drives in a RAID 5 configuration (as they are when you open the box), you can conservatively plan bandwidth availability at 80 to 100 MB/s per controller port, or 160 to 200 MB/s per Xserve RAID. This figure generally aggregates upward: four Xserve RAIDs, with eight seven-drive LUNs combined into a single storage pool, yield a total bandwidth availability of 640 to 800 MB/s.

You may also consider having multiple volumes, each with its own bandwidth availability, which lets you shuttle availability to the creatives and projects that need it most.

Your storage will also work more efficiently if you use a single LUN to create an initial storage pool dedicated to the SAN's metadata, and then use your remaining LUNs to create a second storage pool exclusively for regular data. The downside of this plan is that you usually end up allocating a tremendous amount of space for a relatively small amount of metadata, but the SAN as a whole runs more efficiently.

Lastly, how your SAN nodes and controller ports are connected to the Fibre Channel switch is critical for accurately calculating bandwidth availability, since the switch topology itself can affect this number. The general rule of thumb here is that each client port should have as few cable "jumps" to the storage ports as possible. More on Fibre switches in a moment.

Storage Considerations

Right after bandwidth come storage size needs. You increase storage by adding hard drives to a LUN, adding LUNs to a storage pool, or adding storage pools to a volume.
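The storage hierarchy just described, drives in a LUN, LUNs in a storage pool, and pools in a volume, can be modeled as nested containers whose usable capacity rolls up. The sketch below is a planning aid under stated assumptions, not an Xsan API: the class names and the 400 GB drive size are hypothetical, and usable capacity follows standard RAID arithmetic (RAID 5 gives up one drive's worth of space to parity, RAID 1 mirrors every drive, RAID 0 uses them all).

```python
from dataclasses import dataclass, field


@dataclass
class LUN:
    """A RAID set presented as one LUN (hypothetical planning model)."""
    drives: int
    drive_gb: float
    raid_level: int  # 0, 1, or 5

    def usable_gb(self) -> float:
        if self.raid_level == 0:
            data_drives = self.drives       # striping only, no redundancy
        elif self.raid_level == 1:
            data_drives = self.drives // 2  # every drive is mirrored
        elif self.raid_level == 5:
            data_drives = self.drives - 1   # one drive's worth of parity
        else:
            raise ValueError(f"unsupported RAID level: {self.raid_level}")
        return data_drives * self.drive_gb


@dataclass
class StoragePool:
    luns: list[LUN] = field(default_factory=list)

    def usable_gb(self) -> float:
        return sum(lun.usable_gb() for lun in self.luns)


@dataclass
class Volume:
    pools: list[StoragePool] = field(default_factory=list)

    def usable_gb(self) -> float:
        return sum(pool.usable_gb() for pool in self.pools)


DRIVE_GB = 400  # hypothetical drive size, for illustration only

# Two fully populated Xserve RAIDs: four seven-drive RAID 5 LUNs in one pool
volume = Volume([StoragePool([LUN(7, DRIVE_GB, 5) for _ in range(4)])])
print(f"Usable: {volume.usable_gb():,.0f} GB")

# Growing the volume: a second pool built from two more Xserve RAIDs doubles it
volume.pools.append(StoragePool([LUN(7, DRIVE_GB, 5) for _ in range(4)]))
print(f"After expansion: {volume.usable_gb():,.0f} GB")
```

A dedicated metadata pool would simply be another `StoragePool`, for example one holding `LUN(2, DRIVE_GB, 1)`.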
In many cases, bandwidth considerations alone will dictate the size of your Xsan volume(s), but if you need more storage than those results deliver, there is no harm in adding storage, provided that each volume stays within the current 16 TB limit. Provisioning for future storage increases is also important, and it is quite easy to implement, provided your infrastructure can handle it.

An Example of Instantaneous Storage Increase

Let's say your calculations yielded a SAN with four LUNs of seven drives each in RAID 5, and you chose to implement this with two fully configured Xserve RAIDs. If you needed more storage in the future, you could simply add two more Xserve RAIDs, put their four LUNs into a new storage pool, and add that pool to the existing volume. This would instantly double the size of the volume.

Volume Size Limitation

The current size limitation for an Xsan volume is PB (petabyte, which equals one million gigabytes), so you probably won't exceed that for quite a while. Larger volumes require metadata controllers using the fastest Xserves, loaded with RAM, which you'll learn about in a moment. Xsan can handle as many as eight volumes per SAN, so another way to increase storage capacity is to add another volume to the SAN, making sure that it consists of the right combination of LUNs, LUN populations, and RAID levels to satisfy the bandwidth requirements calculated in the previous section.

RAID Levels for Video Work with Xsan

For video work, there is little argument for choosing any RAID level other than 5 when using Xserve RAIDs, since their built-in RAID 5 optimization makes their performance very close to RAID 0. Xserve RAIDs come preconfigured for RAID 5 out of the box. You can find more information in the RAID Admin documentation or in Lesson 13.
If you have the luxury of implementing a metadata-only storage pool in your volume, create a two-drive RAID 1 LUN for it, so that both drives hold a complete copy of the metadata in case one fails. How these RAIDs are cobbled together is an art: "LUNscaping." Consult the Xsan Quick Reference Guide (Peachpit Press) for more information on combining RAID sets to create efficient and versatile volumes.

Workflow Considerations

Besides the fundamental changes to aesthetic paradigms that come with shared storage environments, there are several practical workflow considerations to note as you move your facility to a SAN.

Switching from Auto-Login Local Directories to a Centralized Directory

Yes, it's true. You've read about it, and maybe went into denial, but you have to wrap your mind around it now: Xsan systems need a centralized directory in order to work seamlessly, mainly because of the way Xsan grants file access in real time to requesting users. Each user must have a unique identity on the SAN, so that there is never any confusion about who is requesting access and who has access at any given moment. Xsan uses each user's numerical user ID (UID) and group ID (GID) to determine identity. As you will see later in this lesson, whenever a Mac is born into the world, its first user account starts life with a UID of 501, a GID of 501, and administrator access to the files on the hard drive. So if two or more admin users on separate Macs tried to access the same file on the SAN at the same time, whether reading or writing, major confusion would break out as to which user had access. (Will the real 501 please stand up?) File corruption or even storage failure could result if two users tried to write to the same block of storage at the same time. Scary!
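To make the 501 problem concrete, the sketch below groups a hypothetical inventory of local accounts by numeric UID and reports any UID claimed by more than one account. The machine names, user names, and the idea of collecting such an inventory by hand are assumptions for illustration; the point is that Xsan compares numbers, not names.

```python
from collections import defaultdict


def find_uid_collisions(accounts):
    """Group accounts by numeric UID and report every UID claimed by more than one account.

    `accounts` is an iterable of (machine, short_name, uid) tuples. Because Xsan
    identifies users by number, any two entries sharing a UID look like the same
    user to the SAN, even if they are different people on different Macs.
    """
    owners = defaultdict(set)
    for machine, short_name, uid in accounts:
        owners[uid].add((machine, short_name))
    return {uid: sorted(users) for uid, users in owners.items() if len(users) > 1}


# Hypothetical inventory of local accounts on three stand-alone editing bays
accounts = [
    ("bay1", "alice", 501),
    ("bay2", "bob", 501),
    ("bay3", "carol", 501),
    ("bay2", "intern", 502),
]
for uid, users in find_uid_collisions(accounts).items():
    print(f"UID {uid} is shared by: {users}")
```

With directory-assigned accounts, every user gets a distinct UID and the function returns an empty dictionary.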
To eradicate this UID collision problem completely, we implement a centralized directory, ideally Open Directory in OS X Server, that grants a unique user ID to every user who intends to use the SAN, and have those users log in to their client machines with their directory accounts. Once it's established, centralizing the directory with Open Directory makes creating and managing your SAN user base quite simple. Lastly, since FCP and other creative applications sometimes look for absolute paths when writing some of their preference, cache, and log files, home folders will remain on the local hard drive, even though we will use a server to log in to our accounts.

Migrating Former Stand-Alone Editing Bays to Xsan Clients

As the previous concept sinks in, it might occur to you that you have existing editing systems you'd like to turn into Xsan clients. These machines are a bit trickier to bring into Xsan than new machines, since important data lives in the main user account (and perhaps in other user accounts, if the computer is shared among several creatives). Because all Xsan users will be created in the centralized directory of OS X Server's Open Directory, user data must be carefully transferred from the old NetInfo-based local user folder to the new LDAP-based local user folder. Then, the ownership of these files must be reassigned so that they live happily in their new home. Can you say chown three times fast? If not, go hire an ACSA.

There may also be media files on existing direct-attached storage that would be much better off on your SAN once it's in place. Never fear: shared files can simply be copied, at blissfully fast speeds, from this storage to the SAN once it's running. However, if currently direct-attached Xserve RAIDs are destined for Xsan use, all of their data must be backed up before the conversion, since Xsan's file system is completely different from HFS+.
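The chown step above can be sketched in Python. The function below walks a migrated home folder and re-owns only the items still owned by the old local UID, leaving everything else alone. `reassign_ownership` is a hypothetical helper, and UID 1025 and GID 20 in the usage note are placeholder values for a directory-assigned account; in practice, `sudo chown -R` does the blunter version of the same job.

```python
import os


def reassign_ownership(root: str, old_uid: int, new_uid: int, new_gid: int,
                       dry_run: bool = True) -> list[str]:
    """Re-own every file and folder under `root` (including `root`) owned by `old_uid`.

    Returns the matching paths. With dry_run=True nothing is modified. Symbolic
    links are not followed: os.walk does not descend into linked folders, and
    os.lchown changes the link itself. Giving files to another user normally
    requires root privileges.
    """
    paths = [root]
    for dirpath, dirnames, filenames in os.walk(root):
        paths.extend(os.path.join(dirpath, name) for name in dirnames + filenames)

    matched = []
    for path in paths:
        if os.lstat(path).st_uid == old_uid:
            matched.append(path)
            if not dry_run:
                os.lchown(path, new_uid, new_gid)
    return matched
```

Run it first with the default `dry_run=True` to review the list, then again as root with `reassign_ownership("/Users/editor", old_uid=501, new_uid=1025, new_gid=20, dry_run=False)`.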
Provisioning Ingest/Layback Stations

A delightful consideration when implementing a SAN is the equipment you no longer need once storage is shared among users and computers. Video will still need to be ingested from, and laid back to, tape or other sources, but for the first time, not every editing bay needs its own decks, monitors, calibration equipment, and high-end capture cards. A particular client or group of clients can have this expensive equipment, while other bays can be set up for offline editing, or for editing with proper monitoring using inexpensive hardware.

Infrastructure Considerations

Xsan requires adding a Fibre Channel infrastructure to your facility, and quite possibly an additional set of Ethernet lines as well. The equipment that both provides and controls the storage should be rack mounted in a central location in your facility. Each individual consideration, along with relevant facts and figures, is outlined in the following sections.

The Metadata Controller (MDC)

Xsan provides file-level locking by arbitrating file access over an out-of-band Ethernet network called the metadata network (MDN). The computer that does the arbitrating is called the metadata controller (MDC). MDCs perform all file-access arbitration in RAM, to minimize latency when clients request access to a file. Xserve G5s are recommended over desktop G5s as MDCs because their ECC RAM has built-in error checking. Another advantage of the Xserve G5 is that it can be rack mounted close to the storage and switches. The MDC needs a base of 512 MB of RAM, plus an additional 512 MB for each volume it will host. MDCs hosting volumes over 16 TB need as much RAM as you can afford to put in the Xserve.

Standby MDCs

You need at least one MDC in an Xsan system, but having two is better for redundancy.
The standby MDC sits at the ready in case the primary MDC fails, and takes over the MDC's duties in as little as half a second. If you choose not to implement a dedicated standby MDC, you may assign standby MDC status to an existing client. However, in the rare case that a client acting as standby MDC actually takes over, both that client's and the overall SAN's performance will decrease substantially if you try to do anything else with the client, especially edit in Final Cut. This is why a proper standby MDC is critical, especially in large implementations.

Choosing the Right Fibre Channel Switch

Fibre Channel switches are the very heart of a SAN, and here you get what you pay for. Switches fall into two main categories: arbitrated loop and fabric. Arbitrated loop switches manage traffic by routing exclusive conversations between two individual ports on the switch, whereas fabric switches allow simultaneous conversations between every port. Fabric switches also allow greater flexibility when creating inter-switch links (ISLs) between multiple switches. ISLs extend the fabric nature of the Fibre network by cascading data (think two-way waterfall) between switches, offering an easy way to expand the number of ports available for clients and storage. They also provide redundant routing paths, which helps reduce single points of failure in the switches themselves.

As you can imagine from these descriptions, fabric switches carry far larger price tags for all the extra features and flexibility. In some Xsan implementations, these extra features are critical to ensuring the proper availability and bandwidth of the Fibre network. Of the four switch manufacturers that Apple currently certifies, three make fabric switches for Xsan. Cisco (www.cisco.com), with its MDS 9000 series, and Brocade (www.brocade.com) are considered industry benchmarks and have price points to match.
QLogic (www.qlogic.com) switches are more competitively priced, and one particular model (SANbox 5200) has an attractive feature: additional ports on the unit can be activated when needed by purchasing an unlock code from the manufacturer. That leaves Emulex (www.emulex.com), whose Apple-approved models (the 355 and 375) are exclusively arbitrated loop. These models use In-Speed technology, which gives them simultaneous-conversation functionality similar to that of fabric switches, and they are the least expensive Apple-approved switches. Fabric switches provide the greatest efficiency and flexibility for post-production SANs, and they will most likely be specified for Xsan systems. Although Emulex arbitrated loop switches offer excellent price points for database and graphic arts implementations, they are not recommended for video-based SANs.

Lastly, since the number of ports is critical in choosing a particular model, each manufacturer offers switches with varying port counts. You'll need to select a switch, or a set of linked switches, that provides the correct number of ports for both your current needs and your future plans. For every Xserve RAID on your SAN, you need two ports; for every client, two ports; and for each MDC, one port. You will also need extra ports if you want to create ISLs between switches and, most importantly, if you want to add storage or clients to the SAN in the future.

Ethernet Switches for the MDN

You will need an additional Ethernet switch to handle the metadata network. Gigabit Ethernet switches are recommended, especially since almost all nodes have Gigabit Ethernet ports these days, but 100BaseT switches will suffice as well. The switch doesn't need to be managed, although it is possible to provision a separate zone (VLAN) on an existing managed switch that is large enough to carry the traffic for every node, isolating that traffic to just the nodes on the SAN.
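The port-count rule from the switch discussion and the RAM rule from the MDC section reduce to two one-line formulas. This minimal sketch assumes nothing beyond those rules of thumb; the function names and the four growth ports in the example are hypothetical.

```python
def fibre_ports_needed(xserve_raids: int, clients: int, mdcs: int,
                       isl_ports: int = 0, growth_ports: int = 0) -> int:
    """Fibre Channel switch ports: two per Xserve RAID, two per client,
    one per MDC, plus any ports reserved for ISLs and future growth."""
    return 2 * xserve_raids + 2 * clients + mdcs + isl_ports + growth_ports


def mdc_ram_mb(volumes: int) -> int:
    """Minimum MDC RAM: 512 MB base plus 512 MB per hosted volume.
    (Volumes over 16 TB call for as much RAM as the Xserve will hold,
    which this formula does not capture.)"""
    return 512 + 512 * volumes


# The three-seat boutique: one Xserve RAID, three clients, one MDC
print(fibre_ports_needed(xserve_raids=1, clients=3, mdcs=1))                   # 9
print(fibre_ports_needed(xserve_raids=1, clients=3, mdcs=1, growth_ports=4))   # 13
print(mdc_ram_mb(volumes=1))                                                   # 1024
```

Rounding the port count up to the next switch size the manufacturer actually sells is what leaves headroom for the expansion the text recommends.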
Ethernet NICs and Fibre HBAs

For implementations where access to an existing directory, network servers, or the Internet is necessary, each client node will need an additional Ethernet network interface card (NIC). Xserve G5s acting as MDCs won't need Ethernet NICs, since they have two built-in Ethernet ports. In some cases, you might run out of slots on Power Mac G5s for an Ethernet NIC; read more in the implementation section about how to work around this issue. Apple's Fibre Channel host bus adapters (HBAs) are the most sensible choice, since they often cost a half or a third the price of typical cards on the market. One card is needed for every node (MDC or client) on the SAN. When buying either Ethernet NICs or Fibre HBAs, be sure to get the fastest versions available; Apple currently offers 133 MHz PCI-X versions of both cards.

Cabling and Run-Length Issues

SANs pose a new challenge to the physical logistics of your facility: since the storage is centralized, where do you put it? Most facilities place the storage in their core, a room filled with rack-mounted computers, drive arrays, decks, and other equipment that geeks drool over. Since servers and switches (both Fibre and Ethernet) are also usually rack mounted, the connections between these components and the storage RAIDs use relatively short cables. However, the editing bay client nodes, sometimes located hundreds of feet from the core, need extra-long cable runs to connect to the main SAN components. Even more importantly, Xsan implementations that have the centralized directory, Internet, or other services on an outer network need two Ethernet cable runs to each client node. In some cases, this means building a new Ethernet infrastructure separate from the existing one.
In general, CAT 6 or CAT 5e Ethernet cabling for both the metadata network and the outer network can run relatively long distances, up to 328 feet (100 meters). If these runs are too short to reach the core, repeaters can be inserted within the cable runs to refresh the signal, or the Ethernet signal can be converted to an optical Fibre signal and back, extending the total length. Lastly, the Fibre Channel infrastructure is almost always a new addition to the facility. Thankfully, Fibre Channel cables come in two types: short-run copper, with a maximum recommended length of 10 feet (3 meters), and long-run optical, with a maximum recommended length of 10 kilometers. Note that Fibre Channel optical cables are expensive and require equally expensive electrical-to-optical transceivers on each end. The following table illustrates your choices for optical Fibre Channel cabling.

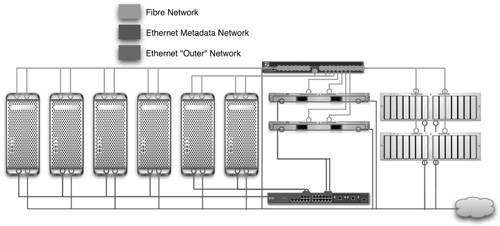

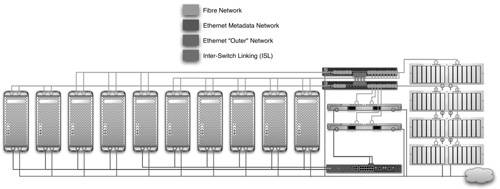
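The run-length limits quoted above can be turned into a quick check for each cable run on a floor plan. This is a sketch under simplifying assumptions: it treats all optical Fibre Channel as one category up to the 10-kilometer figure, whereas the real limit depends on the fiber and transceiver type listed in the table, and the run names and distances are hypothetical.

```python
# Maximum recommended run lengths quoted in this section, in meters
ETHERNET_MAX_M = 100       # CAT 6 / CAT 5e, metadata or outer network
FC_COPPER_MAX_M = 3        # short-run copper Fibre Channel
FC_OPTICAL_MAX_M = 10_000  # long-run optical Fibre Channel (upper bound)


def fibre_cable_for_run(length_m: float) -> str:
    """Pick the Fibre Channel cable type for one run."""
    if length_m <= FC_COPPER_MAX_M:
        return "copper"
    if length_m <= FC_OPTICAL_MAX_M:
        return "optical"  # needs a matched transceiver on each end
    raise ValueError(f"{length_m} m exceeds the {FC_OPTICAL_MAX_M} m optical limit")


def ethernet_needs_extender(length_m: float) -> bool:
    """True if a CAT 6/5e run is too long and needs a repeater or fiber conversion."""
    return length_m > ETHERNET_MAX_M


# Hypothetical runs: a rack-internal link and an edit bay 60 m (about 200 ft) from the core
for name, meters in [("RAID to switch", 2), ("Edit bay 4", 60)]:
    print(f"{name}: Fibre = {fibre_cable_for_run(meters)}, "
          f"Ethernet extender needed = {ethernet_needs_extender(meters)}")
```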

Optical Transceivers

Apple-recommended transceivers for optical cable are made by Finisar, JDS, and Pico Lite. You can find a full list of model numbers on the Xserve RAID technical specifications page: www.apple.com/xserve/raid/specs.html. What's important when implementing transceivers is to use the same manufacturer and model on both ends of the optical cable.

Power Considerations

With Xsan systems, the elements that usually stack together in a rack in the core of your facility (the Xserve(s), Xserve RAID(s), and switches) must all be connected to robust, reliable, filtered, and, most importantly, uninterrupted power. With their redundant power supplies, disk drives, and fans, these components draw huge amounts of power, so make sure that your core is wired properly, and provide a UPS that will allow the core system to run for at least half an hour in the event of a power failure, so that the system can be shut down gracefully.

Typical Topologies

The following figures show typical topologies for production environments. Again, these are guides, intended to summarize the previously discussed information as you plan and acquire your equipment for integration.

The Three-Seat Boutique

In this example, three clients access a total of 3.9 TB of storage. The SAN's bandwidth availability with one fully populated Xserve RAID is 160 to 200 MB/s. This SAN is isolated: it is not connected to an outer network, which is perfect for implementations where the content being edited is highly confidential. Because of this, and because the SAN is relatively small, the Xserve serves as both MDC and directory server. For convenience, Ethernet cabling runs from both controllers of the Xserve RAID and from the Fibre switch, in case they need to be administered.

The Six-Seat Episodic Team

In this larger implementation, six clients access a total of 7.9 TB of storage.
The SAN's bandwidth availability with two fully populated Xserve RAIDs is 320 to 400 MB/s. This SAN is connected to an outer network. Notice that all ancillary Ethernet components are now routed to this outer network, and only the nodes of the SAN are on the metadata network. In addition, a standby MDC is available to take over MDC duties in case the primary MDC fails. For directory services, we have three choices:

The 10-Seat Post Facility

In this implementation, 10 clients share 15.99 TB of storage. This SAN's bandwidth availability with four fully populated Xserve RAIDs is a whopping 640 to 800 MB/s. Because of the number of Fibre cables in this topology (32 total), an additional switch has been added. Data cascades effortlessly between the two switches because a high-bandwidth inter-switch link (ISL) has been made using six user ports on each switch. Think of it as a data river that flows in both directions between the switches. A primary and standby MDC are mandatory in an implementation this large, and to keep their roles simple and efficient, the directory is implemented on the outer network.
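The availability figures quoted for each topology follow directly from the 80 to 100 MB/s-per-port rule of thumb. The sketch below reproduces them and, going the other way, estimates how many Xserve RAIDs a given bandwidth need calls for, using only the conservative low end. It assumes availability aggregates linearly, which the text describes only as a general tendency, and the function names are hypothetical.

```python
import math

# Conservative rule of thumb from the text: Xserve RAIDs with seven-drive RAID 5 LUNs
# deliver 80 to 100 MB/s per controller port, with two ports per Xserve RAID.
MB_S_PER_PORT = (80, 100)
PORTS_PER_XSERVE_RAID = 2


def availability_mb_s(xserve_raids: int) -> tuple[int, int]:
    """(low, high) bandwidth availability, assuming it aggregates linearly."""
    ports = PORTS_PER_XSERVE_RAID * xserve_raids
    return MB_S_PER_PORT[0] * ports, MB_S_PER_PORT[1] * ports


def xserve_raids_for_need(need_mb_s: float, margin: float = 1.0) -> int:
    """Fewest Xserve RAIDs whose low estimate covers need_mb_s x margin."""
    low_per_raid = MB_S_PER_PORT[0] * PORTS_PER_XSERVE_RAID
    return max(1, math.ceil(need_mb_s * margin / low_per_raid))


for name, raids in [("Three-seat boutique", 1), ("Six-seat episodic", 2), ("10-seat post", 4)]:
    low, high = availability_mb_s(raids)
    print(f"{name}: {raids} Xserve RAID(s) -> {low} to {high} MB/s")
```

For example, `xserve_raids_for_need(501.03)` returns 4, so the uncompressed 720p boutique from the bandwidth-need examples would call for four Xserve RAIDs under the conservative estimate. The text leaves the "margin of comfort" unspecified; with an arbitrary illustrative margin of 1.5, `xserve_raids_for_need(109.5, margin=1.5)` returns 2 for the DV25 news SAN.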
