Methodology Design Principles

Designing a methodology is not at all like designing software, hardware, bridges, or factories. Four things, in particular, get in the way:

Common Design Errors

People who come freshly to their assignment of designing a methodology make a standard set of errors:

One Size for All Projects

Here is a conversation that I have heard all too often over the years:

This request is so widespread that I spend most of the next chapter on methodology tailoring. The need for localized methodologies may be clear to you by now, but it will not be clear to your new colleague who is given the assignment to design the corporation's common methodology.

Intolerant

Novice methodology designers have this notion that they have the answer for software development and that everyone really ought to work that way. Software development is a fluid activity. It requires that people notice small discrepancies wherever they lie and that they communicate and resolve the discrepancies in whatever way is most practical. Different people thrive on different ways of working.

A methodology is, in fact, a straightjacket. It is exactly the set of conventions and policies the people agree to use: It is the size and shape of straightjacket they choose for themselves. Given the varying characteristics of different people, though, that straightjacket should not be made any tighter than it absolutely needs to be.

Techniques are one particular section of the methodology that usually can be made tolerant. Many techniques work quite well, and different ones suit different people at different times. The subject of how much tolerance belongs in the methodology should be a conscious topic of discussion in the design of your methodology.

Heavy

We have developed, over the years, an assumption that a heavier methodology, with closer tracking and more artifacts, will somehow be "safer" for the project than a lighter methodology with fewer artifacts. The opposite is actually the case, as the principles in this section should make clear. However, that initial assumption persists, and it manifests itself in most methodology designs.

The heavier-is-safer assumption probably comes from the fear that project managers experience when they can't look at the code and detect the state of the project with their own eyes. Fear grows with the distance from the code.
So they quite naturally request more reports summarizing various states of affairs and more coordination points. The alternative is to . . . trust people. This can be a truly horrifying thought during a project under intense pressure. Being a Smalltalk programmer, I felt this fear firsthand when I had to coordinate a COBOL programming project.

Fear or no fear, adding weight to the methodology is not likely to improve the team's chance of delivering. If anything, it makes the team less likely to deliver, because people will spend more time filling in reports than making progress. Slower development often translates to loss of a market window, decreased morale, and greater likelihood of losing the project altogether.

Part of the art of project management is learning when and how to trust people and when not to trust them. Part of the art of methodology design is to learn what constraints add more burden than safety. Some of these constraints are explored in this chapter.

Embellished

Without exception, every methodology I have ever seen has been unnecessarily embellished with rules, practices, and ideas that are not strictly necessary. They may not even belong in the methodology. This even applies to the methodologies I have designed. It is so insidious that I have posted on the wall in front of me, in large print: "Embellishment is the pitfall of the methodologist."

From this experience, I learned that the words "ought to" and "should" indicate embellishment. If someone says that people "should" do something, it probably means that they have never done it yet, they have successfully delivered software without it, and there probably is no chance of getting people to use it in the future. Here is a sample story about that.

We didn't have to go much farther than that. Of course, no such meeting had taken place. Further, it was doubtful that we could enforce such a meeting in that company at that time, however useful it might have been. There is another side to this embellishment business. Typically, the process owner has a distorted view of how developers really work. In my interviews, I rarely ever find a team of people who work the way the process owner says they work. This is so pervasive that I have had to mark as unreliable any interview in which I only got to speak with the manager or process designer. The following is a sample, and typical, conversation from one of my interviews. At the time, I was looking for successful implementations of Object Modeling Technique (OMT). The person who was both process and team lead told me that he had a successful OMT project for me to review, and so I flew to California to interview this team.

Extreme Programming stands in contrast to the usual, deliverable-based methodologies. XP is based around activities. The rigor of the methodology resides in people carrying out their activities properly.

Not being aware of the difference between deliverable-based and activity-based methodologies, I was unsure how to investigate my first XP project. After all, the team has no drawings to keep up to date, so obviously there would be no out-of-date work products to discover!

An activity-based methodology relies on activities in action. XP relies on programming in pairs, writing unit tests, refactoring, and the like. When I visit a project that claims to be an XP project, I usually find pair programming working well (or else they wouldn't declare it an XP project). Then, while they are pair programming, the people are more likely to write unit tests, and so I usually see some amount of test-writing going on.

The most common deviation from XP is that the people do not refactor their code often, which results in the code base becoming cluttered in ways that properly developed XP code shouldn't. In general, though, XP has so few rules to follow that most of the areas of embellishment have been removed. XP is a special case of a methodology, and I'll analyze it separately at the end of the chapter.

Personally, I tend to embellish around design reviews and testing. I can't seem to resist sneaking an extra review or an extra testing activity through the "should" door ("Of course they should do that testing!" I hear you cry. Shouldn't they?!).

The way to catch embellishment is to have the directly affected people review the proposal. Watch their faces closely to discover what they know they won't do but are afraid to say they won't do.

Untried

Most methodologies are untried. Many are simply proposals created from nothing. This is the full-blown "should" in action: "Well, this really looks like it should work."
After looking at dozens of methodology proposals in the last decade, I have concluded that nothing is obvious in methodology design. Many things that look like they should work don't (testing and keeping documentation up to date, for example), and many things that look like they shouldn't work actually do work (pair programming and test-first development, for example). The late Wayne Stevens, designer of the IBM Consulting Group's Information Engineering methodology in the early 1990s, was well aware of this trap. Whenever someone proposed a new object-centered / object-based / object-hybrid methodology for us to include in the methodology library, he would say, "Try it on a project, and tell us afterwards how it worked." They would typically object, "But that will take years! It is obvious that this is great!" To my recollection, not one of these obvious new methodologies was ever used on a project. Since that time, I have used Wayne Stevens' approach and seen the same thing happen. How are new methodologies made? Here's how I work when I am personally involved in a project:

But when someone sends me a methodology proposal, I ask him to try it on a project first and report back afterwards.

Used Once

The successor to "untried" is "used once." The methodology author, having discovered one project on which the methodology works, now announces it as a general solution. The reality is that different projects need different methodologies, and so any one methodology has limited ability to transfer to another project.

I went through this phase with my Crystal Orange methodology (Cockburn 1998), and so did the authors of XP. Fortunately, each of us had the good sense to create a "truth-in-advertising" label describing our own methodology's area of applicability.

We will revisit this theme throughout the rest of the book: How do we identify the area of applicability of a methodology, and how do we tailor a methodology to a project in time to benefit the project?

Methodologically Successful Projects

You may be wondering about these project interviews I keep referring to. My work is based on looking for "methodologically successful" projects. These have three characteristics:

The first criterion is obvious. I set the bar low for this criterion, because there are so many strange forces that affect how people refer to the "successfulness" of a project. If the software is released and gets used, then the methodology was at least that good. The second criterion was added after I was called in to interview the people involved with a project that was advertised as being "successful." I found, after I got there, that the project manager had been fired a year into the project because no code had been developed up to that time, despite the mountains of paperwork the team had produced. This was not a large military or life-critical project, where such an approach might have been appropriate, but it was a rather ordinary, 18-developer technical software project. The third criterion is the difficult one. For the purpose of discovering a successful methodology, it is essential that the team be willing to work in the prescribed way. It is very easy for the developers to block a methodology. Typically all they have to say is, "If I do that, it will move the delivery date out two weeks." Usually they are right, too. If they don't block it directly, they can subvert it. I usually discover during the interview that the team subverted the process, or else they tolerated it once but wouldn't choose to work that way again. Sometimes, the people follow a methodology because the methodology designer is present on the project. I have to apply this criterion to myself and disallow some of my own projects. If the people on the project were using my suggestions just to humor me, I couldn't know if they would use them when I wasn't present. The pertinent question is, "Would the developers continue to work that way if the methodology author was no longer present?" So far, I have discovered three methodologies that people are willing to use twice in a row. They are

(I exclude Crystal Orange from this list because I was the process designer and lead consultant. Also, as written, it deals with a specific configuration of technologies and so needs to be reevaluated in a different, newly adapted setting.)

Even if you are not a full-time methodology designer, you can borrow one lesson from this section about project interviews. Most of what I have learned about good development habits has come from interviewing project teams. The interviews are so informative that I keep on doing them. This avenue of improvement is also available to you. Start your own project interview file, and discover good things that other people do that you can use yourself.

Author Sensitivity

A methodology's principles are not arrived at through an emotionally neutral algorithm but come from the author's personal background. To reverse the saying from The Wizard of Oz, "Pay great attention to the man behind the curtain."

Each person has had experiences that inform his or her present views and serve as anchor points. Methodology authors are no different. In recognition of this, Jim Highsmith has started interviewing methodology authors about their backgrounds. In Agile Software Development Ecosystems, he will present not only each author's methodology but also his or her background.

A person's anchor points are not generally open to negotiation. They are fixed in childhood, early project experiences, or personal philosophy. Although we can renormalize a discussion with respect to vocabulary and scope, we cannot do that with personal beliefs. We can only accept the person's anchor points or disagree with them.

When Kent Beck quipped, "All methodology is based on fears," I first thought he was just being dismissive. Over time, I have found it to be largely true. One can almost guess at a methodology author's past experiences by looking at the methodology.
Each element in the methodology can be viewed as a prevention against a bad experience the methodology author has had.

Of course, as the old saying goes, just because you are paranoid doesn't mean that they aren't after you. Some of your fears may be well founded. We found this to be the case in one project, as told to us over time by an adventuresome team leader. Here is the story as we heard it in our discussion group:

This story raises an interesting point about trust: As much as I love to trust people, a weakness of people is being careless. Sometimes it is important to simply trust people, but sometimes it is important to install a mechanism to find out whether people can be trusted on a particular topic. The final piece of personal baggage of the methodology authors is their individual philosophy. Some have a laissez-faire philosophy, some a military control philosophy. The philosophy comes with the person, shaping his experiences and being shaped by his experiences, fears, and wishes. It is interesting to see how much of an author's methodology philosophy is used in his personal life. Does Watts Humphrey use a form of the Personal Software Process when he balances his checkbook? Does Kent Beck do the simplest thing that will work, getting incremental results and feedback as soon as he can? Do I travel light, and am I tolerant of other people's habits? Here are some key bits of my background that either drive my methodology style or at least are consistent with it. I travel light, as you might guess. I use a small laptop, carry a small phone, drive a small car, and see how little luggage I need when traveling. In terms of the eternal tug-of-war between mobility and armor, I am clearly on the side of mobility. I have lived in many countries and among many cultures, and I keep finding that each works. This perhaps is the source of my sensitivity to development cultures and why I encourage tolerance in methodologies. I also like to think very hard about consequences so that I can give myself room to be sloppy. Thus, I balance the checkbook only when I absolutely have to, doing it in the fastest way possible, just to make sure checks don't bounce. I don't care about absolute accuracy. Once, when I built bookshelves, I worked out the fewest places where I had to be accurate in my cutting (and the most places where I could be sloppy) to get level and sturdy bookshelves. 
When I started interviewing project teams, I was prepared to discover that process rigor was the secret to success. I was actually surprised to find that it wasn't. However, after I found that using light methodologies, communicating, and being tolerant were effective, it was natural that I would capitalize on those results.

Beware the methodology author. Your experiences with a methodology may have a lot to do with how well your personal habits align with those of the methodology author.

Seven Principles

Over the years, I have found seven principles that are useful in designing and evaluating methodologies:

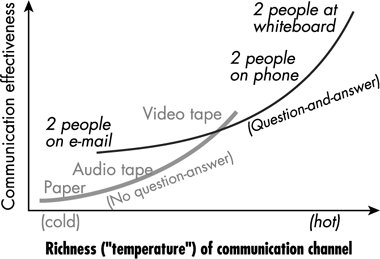

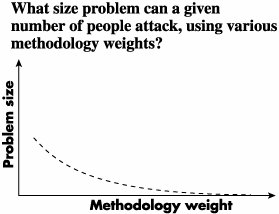

Following is a discussion of each principle.

Principle 1. Interactive, face-to-face communication is the cheapest and fastest channel for exchanging information

The relative advantages and appropriate uses of warm and cool communication channels were discussed in the last chapter. Generally speaking, we should prefer to use warmer communication channels in software development, because we are interested in reducing the cost of detecting and transferring information.

Principle 1 predicts that people sitting near each other with frequent, easy contact will find it easier to develop software, and the software will be less expensive to develop. As the project size increases and interactive, face-to-face communications become more difficult to arrange, the cost of communication increases, the quality of communication decreases, and the difficulty of developing the software increases.

The principle does not say that communication quality decreases to zero, nor does it imply that all software can be developed by a few people sitting in a room. It implies that a methodology author might want to emphasize small groups and personal contact if productivity and cost are key issues. The principle is supported by management research (Plowman 1995 and Sillince 1996, among others).

We also used Principle 1 in the story "Videotaped Archival Documentation" on page 125, which describes documenting a design by videotaping two people discussing that design at a whiteboard.

The principle addresses one particular question: "How do forms of communication affect the cost of detecting and transferring information?"

Figure 4-16. Effectiveness of different communication channels (repeat of Figure 3-14).

One could ask other questions to derive other, related principles. For example, it might be interesting to uncover a principle to answer this question: "How do forms of communication affect a sponsor's evaluation of a team's conformance to a contract?"
This question would introduce the issue of visibility in a methodology. It should produce a very different result, probably one emphasizing written documents.

Principle 2. Excess methodology weight is costly

Imagine six people working in a room with osmotic communication, drawing on the printing whiteboard. Their communication is efficient, the bureaucratic load low. Most of their time is spent developing software, the usage manual, and any other documentation artifacts needed with the end product.

Now ask them to maintain additional intermediate work products, written plans, Gantt charts, requirements documents, analysis documents, design documents, and test plans. In the imagined situation, these are not truly needed by the team for the development. They take time away from development.

Productivity under those conditions decreases. As you add elements to the methodology, you add more things for the team to do, which pulls them away from the meat of software development. In other words, a small team can succeed with a larger problem by using a lighter methodology (Figure 4-17).

Figure 4-17. Effect of adding methodology weight to a small team.

Methodology elements add up faster than people expect. A process designer or manager requests a new review or piece of paperwork that should "only take a half hour from time to time." Put a few of these together, and suddenly the designers lose an additional 15 to 20 percent of their already cramped week. The additional work items disrupt design flow. Very soon, the designers are trying to get their design thinking done in one- or two-hour blocks which, as you saw earlier, does not work well.

This is something I often see on projects: designers unable to get the necessary quiet time to do their work because of the burden of paperwork and the high rate of distractions.

This principle contains a catch, though.
If you try to increase productivity by removing more and more methodology elements, you eventually remove those that address code quality. At some point the strategy backfires, and the team spends more time repairing bad work than making progress.

The key word, of course, is excess. Different methodology authors produce different advice as to where "excess" methodology begins. I find that a team operating from people's strengths (communication and citizenship) can do with a lot less methodology than most managers expect. Jim Highsmith is more explicit about this. His suggestion would be that you start lighter than you think will possibly work!

There are two points to draw from this discussion:

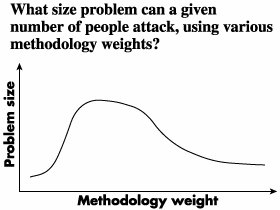

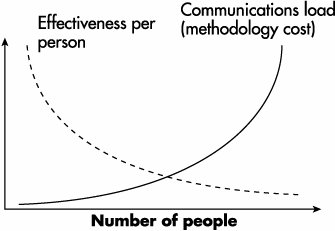

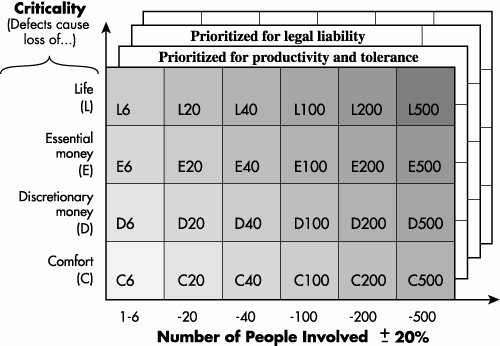

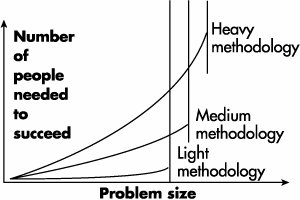
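The half-hour arithmetic from Principle 2 can be sketched in a few lines. This is only an illustration, not something from the projects described here: the item names, the twice-a-week frequency, and the 40-hour week are all assumptions chosen to show how quickly "only a half hour" items add up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecurringItem:
    """One added methodology element (names and numbers are hypothetical)."""
    name: str
    minutes: float         # time one occurrence takes
    times_per_week: float  # how often it recurs


def overhead_fraction(items, work_week_hours=40.0):
    """Return the share of the work week consumed by the given items."""
    if work_week_hours <= 0:
        raise ValueError("work_week_hours must be positive")
    total_minutes = sum(item.minutes * item.times_per_week for item in items)
    return total_minutes / (work_week_hours * 60.0)


# Assumed example: six "only a half hour" items, each recurring twice a week.
HALF_HOUR_ITEMS = [
    RecurringItem("status report", 30, 2),
    RecurringItem("plan update", 30, 2),
    RecurringItem("review sign-off", 30, 2),
    RecurringItem("traceability form", 30, 2),
    RecurringItem("risk list update", 30, 2),
    RecurringItem("coordination meeting", 30, 2),
]

if __name__ == "__main__":
    share = overhead_fraction(HALF_HOUR_ITEMS)
    print(f"Share of the week spent on added items: {share:.0%}")
```

Under these assumed numbers, the six items consume 6 of 40 hours, or 15 percent of the week, before counting the cost of the interruptions themselves.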

Principle 3. Larger teams need heavier methodologies

With only four or six people on the team, it is practical to put them together in a room with printing whiteboards and allow the convection currents of information to bind the ongoing conversation in their cooperative game of invention and communication.

After the team size exceeds 8 or 12 people, though, that strategy ceases to be so effective. As it reaches 30 to 40 people, the team will occupy a floor. At 80 or 100 people, the team will be spread out on multiple floors, in multiple buildings, or in multiple cities. With each increase in size, it becomes harder for people to know what others are doing and how not to overlap, duplicate, or interfere with each other's work. As the team size increases, so does the need for some form of coordination and communication.

Figure 4-18 shows the effect of adding methodology to a large team. With very light methodologies, they work without coordination. As they start to coordinate their work, they become more effective (this is the left half of the curve). Eventually, for any size group, diminishing returns set in and they start to spend more time on the bureaucracy than on developing the system (the right half of the curve).

Figure 4-18. Effect of adding methodology weight to a large team.

The right half of the curve is described in Principle 2, "Excess methodology weight is costly." Principle 3 describes the left half of the curve: "Larger teams need heavier methodologies."

Principle 4. Greater ceremony is appropriate for projects with greater criticality

This principle addresses ceremony and tolerance, as discussed in the second section of this chapter.

The cost of leaving a fault in the third and fourth systems was quite different from the cost of leaving a fault in the first two. I use the word criticality for this distinction. It was more critical to get the work correct in the latter two than in the former two projects. Just as communications load affects the appropriate choice of methodology, so does criticality. I have chosen to divide criticality into four categories, according to the loss caused by a defect showing up in operation:

The cafeteria produces lasagne instead of a pizza. At the worst, the person eats from the vending machine.

Invoicing systems typically fall into this category. If a phone company sends out a billing mistake, the customer phones in and has the bill adjusted. Many project managers would like to pretend that their project causes more damage than this, but in fact, most systems have good human backup procedures, and mistakes are generally fixed with a phone call. I was surprised to discover that the bank-to-bank transaction tracking system actually fit into this category. Although the numbers involved seemed large to me, they were the sorts of numbers that the banks dealt with all the time, and they had human backup mechanisms in place to repair computer mistakes.

Your company goes bankrupt because of certain errors in the program. At this level of criticality, it is no longer possible to patch up the mistake with a simple phone call. Very few projects really operate at this level. I was recently surprised to discover two. One was a system that offered financial transactions over the Web. Each transaction could be repaired by phone, but there were 50,000 subscribers, estimated to become 200,000 in the following year, and a growing set of services was being offered. The call-in rate was going to increase by leaps and bounds. The time cost of repairing mistakes already fully consumed the time of one business expert who should have been working on other things and took up almost half of another business expert's time. This company decided that it simply could not keep working as though mistakes were easily repaired. The second was a system to control a multi-ton, autonomous vehicle. Once again, the cost of a mistake was not something to be fixed with a phone call and some money. Rather, every mistake of the vehicle could cause very real, permanent, and painful damage.

Software to control the movement of the rods in a nuclear reactor falls into this category, as do pacemakers, atomic power plant control, and the space shuttles. Typically, members of teams whose programs can kill people know they are working on such a project and are willing to take more care.

As the degree of potential damage increases, it is easy to justify greater development cost to protect against mistakes. In keeping with the second principle, adding methodology adds cost, but in this case, the cost is worth it. The cost goes into defect reduction rather than communications load.

Principle 4 addresses the amount of ceremony that should be used on a project. Recall that ceremony refers to the tightness of the controls used in development and the tolerance permitted. More ceremony means tighter controls and less tolerance.

Consider a team building software for the neighborhood bowling league. The people write a few sentences for each use case, on scraps of paper or a word processor. They review the use cases by gathering a few people in a room and asking what they think.

Consider, in contrast, a different team, which is building software for a power plant. These people use a particular tool, fill in very particular fields in a common template, keep versions of each use case, and adhere to strong writing style conventions. They review, baseline, change control, and sign off the use cases at several stages in the lifecycle.

The second set of use cases is more expensive to develop. The team works that way, though, expecting that fewer mistakes will be made. The team justifies being less tolerant of variation by the added safety of the final result.

Principle 5. Increasing feedback and communication reduces the need for intermediate deliverables

Recall that a deliverable is a work product that crosses decision boundaries. An intermediate deliverable is one that is passed across decision boundaries within the team.
These might include the detailed project plan, refined requirement documents, analysis and design documents, test plans, inter-team dependencies, risk lists, and so on. I refer to them also as "promissory notes," as in:

There are two ways to reduce the need for promissory notes:

Delivering a working piece of the system quickly leads to these other benefits:

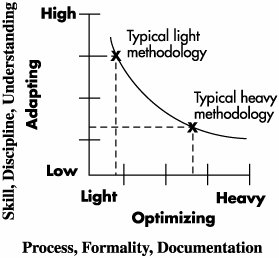

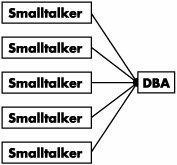

Note the word internal. The sponsors may still require written documentation of different sorts as part of the external communication needs.

Principle 6. Discipline, skills, and understanding counter process, formality, and documentation

When Jim Highsmith says, "Don't confuse documentation with understanding," he means that much of the knowledge that binds the project is tacit knowledge, knowledge that people have inside them, not on paper anywhere.

The knowledge base of a project is immense, and much of that knowledge consists of knowing the team's rituals of negotiation, which person knows what information, who contributed heavily in the last release, what pieces of discussion went into certain design decisions, and so on. Even with the best documentation in the world, a new team cannot necessarily just pick up where the previous team left off. The new team will not start making progress until the team members build up their tacit knowledge base.

When referring to "documentation" for a project, be aware that the knowledge that becomes documentation is only a small part of what there is to know. People who specialize in technology transfer know this. As one IBM Fellow put it, "The way to get effective technology transfer is not to transfer the technology itself but to transfer the heads that hold the technology!" ("Jumping Gaps across Time," on page 129.)

Highsmith continues, "Process is not discipline." Discipline involves a person choosing to work in a way that requires consistency. Process involves a person following instructions. Of the two, discipline is the more powerful. A person who is choosing to act with consistency and care will have a far better effect on the project than a person who is just following instructions. The common mistake is in thinking that somehow a process will impart discipline.

Highsmith's third distinction is, "Don't confuse formality with skill." Insurance companies are in an unusual situation.
We fill in forms, send them to the insurance back office, and receive insurance policies. This is quite amazing. Probably as a consequence of their living in this unusual realm, I have several times been asked by insurance companies to design use case and object-oriented design forms. Their goal, I was told on each occasion, was to make it foolproof to construct high-quality use cases and OO designs.

Sadly, our world is not built that way. A good designer will read a set of use cases and create an OO design directly, one that improves as he reworks the design over time. No amount of form filling yet replaces this skill. Similarly, a good user interface designer creates much better programs than a mediocre interface designer can create.

Figure 4-19 shows a merging of Highsmith's and my thoughts on these issues.

Figure 4-19. Documentation is not understanding, process is not discipline, formality is not skill.

Highsmith distinguishes exploratory or adapting activities from optimizing activities. The former, he says, is exemplified by the search for new oil wells. In searching for a new oil well, one cannot predict what is going to happen. After the oil well is functioning, however, the task is to keep reducing costs in a predictable situation.

In software development, we become more like the optimizing oil company as we become more familiar with the problem to be solved, the team, and the technologies being used. We are more like the exploratory company, operating in an adaptive mode, when we don't know those things.

Light methodologies draw on understanding, discipline, and skill more than on documentation, process, and formality. They are therefore particularly well suited for exploratory situations. The typical heavy methodology, drawing on documentation, process, and formality, is designed for situations in which the team will not have to adapt to changing circumstances but can optimize its costs.
Of the projects I have seen, almost all fit the profile of exploratory situations. This may explain why I have only once seen a project succeed using an optimizing style of methodology. In that exceptional case, the company was still working in the same problem domain and was using the same basic technology, process, and architecture as it had done for several decades.

The characteristics of exploratory and optimizing situations run in opposition to each other. Optimizing projects try to reduce the dependency on tacit knowledge, personal skill, and discipline and therefore rely more on documentation, process, and formality. Exploratory projects, on the other hand, allow people to reduce their dependency on paperwork, processes, and formality by relying more on understanding, discipline, and skill. The two sets draw away from each other. Highsmith and I hypothesize that any methodology design will live on the track shown in the figure, drawing either to one set or the other, but not both.

Principle 7. Efficiency is expendable in nonbottleneck activities

Principle 7 provides guidance in applying concurrent development and is a key principle in tailoring the Crystal methodologies for different teams in different situations. It is closely related to Eliyahu Goldratt's ideas as expressed in The Goal (Goldratt 1992) and The Theory of Constraints (Goldratt 1990).

To get a start on the principle, imagine a project with five requirements analysts, five Smalltalk programmers, five testers, and one relational database designer (DBA), all of them good at their jobs. Let us assume, for the sake of this example, that the group cannot hire more DBAs. Figure 4-20 shows the relevant part of the situation, the five programmers feeding work to the single DBA.

Figure 4-20. The five Smalltalk programmers feeding work to the one DBA.

The DBA clearly won't be able to keep up with the programmers. This has nothing to do with his skills; he is simply overloaded.
In Goldratt's terms, the DBA's activity is the bottleneck activity. The speed of this DBA determines the speed of the project. To make good progress, the team had better get things lined up pretty well for the DBA so that he has the best information possible to do his work. Every slowdown, every bit of rework he does, costs the project directly. That is quite the opposite story from the Smalltalk programmers. They have a huge amount of excess capacity compared with the DBA. Faced with this situation, the project manager can do one of two things:

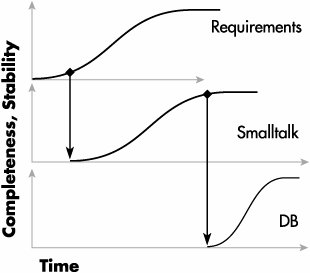

If he is mostly interested in saving money, he sends four of the programmers home and lives with the fact that the project is going to progress at the speed of these two solo developers.

If he is interested in getting the project done as quickly as possible, he doesn't send the four Smalltalk programmers home. He takes advantage of their spare capacity. He has them revise their designs several times, showing the results to users, before they hand over their designs to the DBA. This way, they get feedback that enables them to change their designs before, not after, the DBA goes through his own work. He also has them start earlier in the requirements-gathering process, so that they can show intermediate results to the users sooner, again getting feedback earlier. He has them spend a bit more time drawing their designs so that the DBA can read them easily. He does this knowing that he is causing them extra work. He is drawing on their spare capacity.

Figure 4-21 diagrams this second work strategy. In that figure you see only one requirements person submitting information to one Smalltalk programmer, who is submitting work to the one DBA. The top two curves are used five times, for the five requirements writers and the five programmers.

Figure 4-21. Bottleneck station starts work higher on the completeness and stability curve than do nonbottleneck stations.

Principle 7 has three consequences. Notice in Figure 4-21 that the Smalltalker starts work as soon as the requirements person has something to hand him, but the DBA waits until the Smalltalker's work is almost complete and quite stable before starting. Notice also that the DBA is completing work faster than the others. This is a reflection of the fact that the other groups are doing more rework, and hence reaching completeness and stability more slowly. This is necessary because four other groups are submitting work to the DBA. In a balanced situation, the DBA reaches completion five times as fast as the others.
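The arithmetic behind the five-programmers-and-one-DBA example can be sketched in a few lines. This is only an illustration under stated assumptions: the weekly capacities and the names `project_rate`, `full_team`, and `reduced_team` are hypothetical and do not come from the text. The sketch shows that the slowest station sets the pace of the whole chain, so sending four programmers home leaves the project speed unchanged, while keeping them leaves spare capacity that can be spent on rework and early feedback.

```python
# Hypothetical weekly capacities in work units per week. These numbers are
# illustrative assumptions, not figures from the text.
def project_rate(stations):
    """Return (rate, bottleneck): the slowest station limits the whole chain."""
    bottleneck = min(stations, key=stations.get)
    return stations[bottleneck], bottleneck


full_team = {"analysts": 5.0, "programmers": 5.0, "testers": 5.0, "dba": 1.0}
rate, bottleneck = project_rate(full_team)

# Sending four programmers home leaves one programmer's worth of capacity.
reduced_team = dict(full_team, programmers=1.0)
reduced_rate, _ = project_rate(reduced_team)

# Capacity the nonbottleneck stations can spend on rework and early feedback
# without slowing the project.
spare = {name: cap - rate for name, cap in full_team.items() if name != bottleneck}

print(rate, bottleneck, reduced_rate, spare["programmers"])
```

Under these assumed numbers, both team configurations deliver at the DBA's pace; the difference is only whether the programmers' extra capacity is available to stabilize the work before it reaches him.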
People on a bottleneck activity need to work as efficiently as possible and cannot afford to do much rework. (I say "much rework" because there is always rework in software development; the goal is to reduce the amount of rework.)

Consequence 1. Do whatever you can to speed up the work at the bottleneck activity

That result is fairly obvious, except that people often don't do it. Every project has a bottleneck activity. It moves during the project, but there is always one. In the above example, it is the DBA's work. There are four ways to improve a bottleneck activity. Principle 7 addresses the fourth.

Consequence 2. People at nonbottleneck activities can work inefficiently without affecting the overall speed of the project!

This is not obvious. Of course, one way for people to work inefficiently is to take long smoking breaks, surf the Web, and spend time at the water cooler. Those are relatively uninteresting for the project and for designing methodologies. More interesting is the idea of spending efficiency, trading it for stability.

The nonbottleneck people can spend some of their extra capacity by starting earlier, getting results earlier, doing more rework and doing it earlier, and doing other work that helps the person at the bottleneck activity.

Spending excess capacity for rework is significant for software development because rework is one of the things that causes software projects to take so much time. The users see the results and change their requests; the designers see their algorithm in action and change the design; the testers break the program; and the programmers change the code. In the case of the above example, all of these will cause the DBA rework.

Applying Principle 7 and the diagram of concurrent development (Figure 4-13) to the problem of the five Smalltalkers and one DBA, the project manager can decide that the Smalltalk programmers can work "inefficiently," meaning "doing more rework than they might otherwise," in exchange for making their work more stable earlier. This means that the DBA, to whom rework is expensive, will be given more stable information at the start.

Principle 7 offers a strategy for when and where to use early concurrency, and when and where to delay it. Most projects work from a given amount of money and an available set of people. Principle 7 helps the team members adjust their work to make the most of the people available.

Principle 7 can be used on every project, not just those that are as out of balance as the sample project. Every project has a bottleneck activity. Even when the bottleneck moves, Principle 7 applies to the new configuration of bottleneck and nonbottleneck activities.

Consequence 3. Applying the principle of expendable efficiency yields different methodologies in different situations, even keeping the other principles in place

Here is a first story, to illustrate:

I described this use of the principle as the Gold Rush strategy in Surviving Object-Oriented Projects (Cockburn 1998). That book also describes the related use of the Holistic Diversity strategy and examines project Winifred more extensively. Here is a second story, with a different outcome:

Note that this strategy appears at first glance to go against a primary idea of this book: maximizing face-to-face communication. However, in this situation, these programmers could not keep information in their heads. They needed the information to reach them in a "sticky" form, so they could refer to it after the conversations. After the programmers work through the backlog, the bottleneck activity will move, and the company may find it appropriate to move to a more concurrent, conversation-based approach. Just what they do will depend on where the next bottleneck shows up. Here is a third story:

This was a most surprising and effective application of the principle of expendable efficiency. When I interviewed one of the team leads, I asked, "What about all those other people? What did they do?" The team lead answered, "We let them do whatever they wanted to. Some did nothing, some did small projects to improve their technical skills. It didn't matter, because they wouldn't help the project more by doing anything else." The restarted project did succeed. In fact, it became a heralded success at that company.

Consequences of the Principles

The above principles work together to help you choose an appropriate size for the team when given the problem, and to choose an appropriate size for the methodology when given the team. Look at some of the consequences of combining the principles:

Consequence 1. Adding people to a project is costly

People who are supposed to know this sometimes seem unaware of it, so it is worth reviewing. Imagine 40 or 50 people working together. You create teams and add meetings, managers, and secretaries to orchestrate their work. Although the managers and secretaries protect the programming productivity of the individual developers, their salaries add cost to the project. Also, adding these people adds communication needs, which call for additional methodology elements and overall lowered productivity for the group (Figure 4-22).

Figure 4-22. Reduced effectiveness with increasing communication needs (methodology size).

Consequence 2. Team size increases in large jumps

The effects of adding people and adding methodology load combine, so that adding "a few" people is not as effective an approach as it might seem. Indeed, my experience hints that to double a group's output, one may need to almost square the number of people on the project! Here is a story to illustrate:

Here is a second, more recent story, with a similar outcome:

Consequence 3. Teams should be improved, not enlarged

Here is a common problem: A manager has a 10-person team that sits close together and achieves high communication rates with little energy. The manager needs to increase the team's output. He has two choices: add people or keep the team the same size and do something different within the team.

If he increases the team size from 10 to 15, the communications load, communications distances, training, meeting, and documentation needs go up. Most of the money spent on this new group will get spent on communications overhead, without producing more output. This group is likely to grow again, to 20 people (which will add a heavier communications burden but will at least show improvement in output).

The second strategy, which seems less obvious, is to lock the team size at 10 people (the maximum that can be coordinated through casual coordination) and improve the people on the team. To improve the individuals on the team, the manager can do any or all of the following:

Repeating the strategy over time, the manager will keep finding better and better people who work better and better together. Notice that in the second scenario, the communications load stays the same, while the team becomes more productive. The organization can afford to pay the people more for their increased contribution. It can, in fact, afford to double their salaries, considering that these 10 are replacing 20! This makes sense. If the pay is good, bureaucratic burden is low, and team members are proud of their output, they will enjoy the place and stay, which is exactly what the organization wants them to do.
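The team sizes in this comparison can be made concrete with a rough count. The sketch below rests on an assumption that is not taken from the text: it uses the number of possible person-to-person channels, n(n-1)/2, as a stand-in for communications load. That is a common simplification, not the author's model, and the names `pairwise_channels` and `payroll` and the base salary of 1.0 are hypothetical. The salary arithmetic, by contrast, follows directly from the statement that 10 people at double pay replace 20.

```python
def pairwise_channels(people):
    """Possible one-to-one communication channels among `people` team members.

    Assumption: channels are used here as a crude proxy for communications
    load; the text does not give a formula.
    """
    return people * (people - 1) // 2


def payroll(people, salary_multiplier, base_salary=1.0):
    """Total salary cost, in units of one hypothetical base salary."""
    return people * salary_multiplier * base_salary


for size in (10, 15, 20):
    print(size, "people:", pairwise_channels(size), "possible channels")

# Ten people at double salary cost the same payroll as twenty at base salary.
print(payroll(10, 2.0), payroll(20, 1.0))
```

Under this proxy, growing from 10 to 15 people more than doubles the possible channels, and growing to 20 roughly quadruples them, while the improved 10-person team keeps its channel count fixed at the same payroll as the 20-person team.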

Consequence 4. Different methodologies are needed for different projects

Figure 4-23 shows one way to examine projects to select an appropriate methodology. The attraction of using a grid in this figure is that it works from fairly objective indices:

Figure 4-23. Characterizing projects by communication load, criticality, and priorities.

You can walk into a project work area, count the people being coordinated, and ask for the system criticality and project priorities.

In the figure, the lettering in each box indicates the project characteristics. A "C6" project is one that has six people and may cause loss of comfort; a "D20" project is one that has 20 people and may cause the loss of discretionary monies.

In using this grid, you should recognize several things:

Communication load rises with the number of people. At certain points, it becomes incorrect to run the project in the same way: Six people can work in a room, 20 in close proximity, 40 on a floor, 100 in a building. The coordination mechanisms for the smaller-sized project no longer fit the larger-sized project.
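The grid labels and headcount thresholds described above can be expressed as a small lookup. This is a sketch under stated assumptions: the function names `grid_label` and `coordination_setting` are hypothetical, only the two criticality letters spelled out in this passage (C and D) are included, and treating each headcount as an upper limit for its setting is an interpretation of the sentence about rooms, floors, and buildings.

```python
# Criticality letters spelled out in this passage. Other letters that the
# grid uses are omitted here rather than guessed.
CRITICALITY = {"comfort": "C", "discretionary money": "D"}

# Headcounts from the passage: six in a room, 20 in close proximity,
# 40 on a floor, 100 in a building. Treating them as upper limits is an
# assumption made for this sketch.
SETTINGS = [
    (6, "one room"),
    (20, "close proximity"),
    (40, "one floor"),
    (100, "one building"),
]


def grid_label(potential_loss, people):
    """Build a grid label such as 'C6' or 'D20'."""
    return f"{CRITICALITY[potential_loss]}{people}"


def coordination_setting(people):
    """Return the smallest setting from the passage that holds the team."""
    for limit, setting in SETTINGS:
        if people <= limit:
            return setting
    return "beyond one building"


print(grid_label("comfort", 6), coordination_setting(6))
print(grid_label("discretionary money", 20), coordination_setting(20))
```

The point of the lookup is the step change: moving from 20 to 21 people changes the setting, and with it the coordination mechanisms that still fit.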

Here is how I once used the grid:

The grid characteristics can be used in reverse to help discuss the range of projects for which a particular methodology is applicable. This is what I do with the Crystal methodology family in Chapter 6. I construct one methodology that might be suitable for projects in the D6 category (Crystal Clear), another that might be suitable for projects in the D20 range (Crystal Yellow), another for D40 category projects (Crystal Orange), and so on. Looking at methodologies in this way, you would say that Extreme Programming is suited for projects in the C4 to E14 categories.

Consequence 5. Lighter methodologies are better, until they run out of steam

What we should be learning is that a small team with a light methodology can sometimes solve the same problem as a larger team with a heavier methodology. From a project cost point of view, as long as the problem can be solved with 10 people in a room, that will be more effective than adding more people.

At some point, though, even the 10 best people in the world won't be able to deliver the needed solution in time, and then the team size must jump drastically. At that point, the methodology size will have to jump also (Figure 4-24).

Figure 4-24. Small methodologies are good but run out of steam.

There is no escaping the fact that larger projects require heavier methodologies. What you can escape, though, is using a heavy methodology on a small project. What you can work toward is keeping the methodology as light and nimble as possible for the particular project and team. Agile is a reasonable goal, as long as you recognize that a large-team agile methodology is heavier than a small-team agile methodology.

Consequence 6. Methodologies should be stretched to fit

Look for the lightest, most "face-to-face"-centric methodology that will work for the project. Then stretch the methodology. Jim Highsmith summarizes this with the phrase "A little less than enough is better than a little more than enough."
A manager of a project with 50 people and the potential for "expensive" damage has two choices:

XP was first used on D8 types of projects. Over time, people found ways to make it work successfully for more and more people. As a result, I now rate it for E14 projects.

More Principles

We should be able to uncover other principles. One of the more interesting candidates I recently encountered is the "real options evaluation" model (Sullivan 1999).

In considering the use of financial options theory in software development, Sullivan and his colleagues highlight the "value of information" (VOI) against the "value of flexibility" (VOF).

VOI deals with this choice: "Pay to learn, or don't pay if you think you know." The concept of VOI applies to situations in which it is possible to discover information earlier by paying more. An application of the VOI concept is deciding which prototypes to build on a project.

VOF deals with this choice: "Pay to not have to decide or don't pay, either because you are sure enough the decision is right, or because the cost of changing your decision later is low." The concept of VOF applies to situations in which it is not possible to discover information earlier. An application of the VOF concept is deciding how to deal with competing (potential) standards, such as COM versus CORBA.

A second application, which they discuss in their article, is evaluating the use of a spiral development process. They say that using spiral development is a way of betting on a favorable future. If conditions improve at the end of the first iteration, the project continues. If the conditions worsen, the project can be dropped at a controlled cost.

I haven't yet seen these concepts tried explicitly, but they certainly fit well with the notion of software development as a resource-limited cooperative game. They may provide guidance to some process designer and yield a new principle for designing methodologies. |
