Methodology Concepts

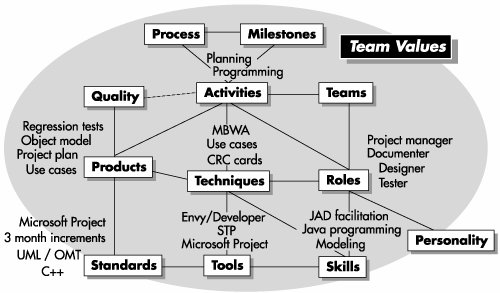

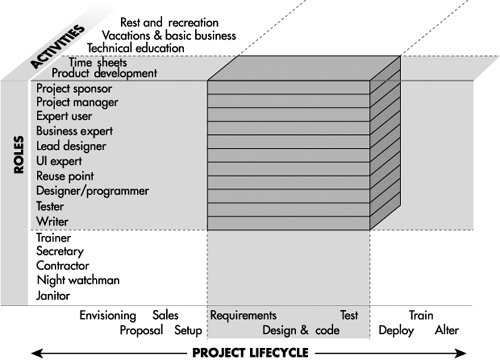

I use the word methodology as found in the Merriam-Webster dictionaries: "A series of related methods or techniques." A method is a "systematic procedure," similar to a technique. (Readers of the Oxford English Dictionary may note that some OED editions carry only the definition of methodology as "study of methods," while others carry both. This helps explain the controversy over the word methodology.)

The distinction between methodology and method is useful. Reading the phrases "a method for finding classes from use cases" or "different methods are suited for different problems," we understand that the author is discussing techniques and procedures, not establishing team rules and conventions. That frees the word methodology for the larger issues of coordinating people's activities on a team.

Coordination is important. The same average people who produce average designs when working alone often produce good designs in collaboration. Conversely, all the smartest people together still won't produce group success without coordination, cooperation, and communication. Most of us have witnessed or heard of such groups. Team success hinges on cooperation, communication, and coordination.

Structural Terms

The first methodology structure I saw contained about seven elements. The one I now draw contains 13 (see Figure 4-1). The elements apply to any team endeavor, whether it is software development, rock climbing, or poetry writing. What you write for each box will vary, but the names of the elements won't.

Figure 4-1. Elements of a methodology.

Roles. Who you employ, what you employ them for, and what skills they are supposed to have. Equally important, it turns out, are the personality traits expected of the person.
A project manager should be good with people, a user interface designer should have natural visual talents and some empathy for user behavior, an object-oriented program designer should have good abstraction faculties, and a mentor should be good at explaining things. It is bad for the project when the individuals in the jobs don't have the traits needed for the job (for example, a project manager who can't make decisions or a mentor who does not like to communicate).

Skills. The skills needed for the roles. The "personal prowess" of a person in a role is a product of his training and talent. Programmers attend classes to learn object-oriented programming, Java programming, and unit-testing skills. User interface designers learn how to conduct usability examinations and do paper-based prototyping. Managers learn interviewing, motivating, hiring, and critical-path task-management skills. The best people draw heavily upon their natural talent, but in most cases adequate skills can be acquired through training and practice.

Teams. The roles that work together under various circumstances. There may be only one team on a small project. On a large project, there are likely to be multiple, overlapping teams, some aimed at harnessing specific technologies and some aimed at steering the project or the system's architecture.

Techniques. The specific procedures people use to accomplish tasks. Some apply to a single person (writing a use case, managing by walking around, designing a class or test case), while others are aimed at groups of people (project retrospectives, group planning sessions). In general, I use the word technique if there is a prescriptive presentation of how to accomplish a task, using an understood body of knowledge.

Activities. How the people spend their days. Planning, programming, testing, and meeting are sample activities. Some methodologies are work-product-intensive, meaning that they focus on the work products that need to be produced.
Others are activity-intensive, meaning that they focus on what the people should be doing during the day. Thus, where the Rational Unified Process is tool- and work-product-intensive, Extreme Programming is activity-intensive. It achieves its effectiveness, in part, by describing what the people should be doing with their day (pair programming, test-first development, refactoring, etc.).

Process. How activities fit together over time, often with pre- and postconditions for the activities (for example, a design review is held two days after the material is sent out to participants and produces a list of recommendations for improvement). Process-intensive methodologies focus on the flow of work among the team members. Process charts rarely convey the presence of loopback paths, where rework gets done. Thus, process charts are usually best viewed as workflow diagrams, describing who receives what from whom.

Work products. What someone constructs. A work product may be disposable, as with CRC design cards, or it may be relatively permanent, as with the usage manual or source code. I find it useful to reserve deliverable to mean "a work product that gets passed across an organizational boundary." This allows us to apply the term deliverable at different scales: The deliverables that pass between two subteams are work products in terms of the larger project. The work products that pass between a project team and the team working on the next system are deliverables of the project and need to be handled more carefully.

Work products are described in generic terms such as "source code" and "domain object model." Rules about the notation to be used for each work product are described in the work product standards. Examples of source-code standards include Java, Visual Basic, and executable visual models. Examples of class diagram standards could be UML or OML.

Milestones. Events marking progress or completion.
Some milestones are simply assertions that a task has been performed, and some involve the publication of documents or code. A milestone has two key characteristics: It occurs in an instant of time, and it is either fully met or not met (it is not partially met). A document is either published or not published, the code is delivered or not delivered, the meeting was held or not held.

Standards. The conventions the team adopts for particular tools, work products, and decision policies. A coding standard might declare this: "Every function has the following header comment. . ." A language standard might be this: "We'll be using fully portable Java." A drawing standard for class diagrams might be this: "Only show public methods of persistent functions." A tool standard might be this: "We'll use Microsoft Project, Together/J, JUnit,. . ." A project-management standard might be this: "Use milestones of two days to two weeks and incremental deliveries every two to three months."

Quality. Quality may refer to the activities or the work products. In XP, the quality of the team's program is evaluated by examining the source code work product: "All checked-in code must pass unit tests at 100 percent at all times." The XP team members also evaluate the quality of their activities: Do they hold a stand-up meeting every day? How often do the programmers shift programming partners? How available are the customers for questions? In some cases, quality is given a numerical value; in other cases, a fuzzy value ("I wasn't happy with the team morale on the last iteration.").

Team values. The rest of the methodology elements are governed by the team's value system. An aggressive team working on quick-to-market values will work very differently than a group that values families and goes home at a regular time every night.
As Jim Highsmith likes to point out, a group whose mission is to explore and locate new oil fields will operate on different values and produce different rules than a group whose mission is to squeeze every barrel out of a known oil field at the least possible cost.

Types of Methodologies

Maier and Rechtin (2000) categorize methodologies themselves as being normative, rational, participative, or heuristic.

Normative methodologies are based on solutions or sequences of steps known to work for the discipline. Electrical codes for house wiring and other building codes are examples. In software development, one would include state diagram verification in this category.

Rational methodologies (no connection with the company) are based on method and technique. They would be used for system analysis and engineering disciplines.

Participative methodologies are stakeholder-based and capture aspects of customer involvement.

Heuristic methodologies are based on lessons learned. Maier and Rechtin cite their use in the aerospace business (space and aircraft design).

As a body of knowledge grows, sections of the methodology move from heuristic to normative and become codified as standard solutions for standard problems. In computer programming, searching algorithms have reached that point. The decision about whether to put people in common or private offices has not. Most of software development is still in the stage where heuristic methodologies are appropriate.

Milestones

Milestones are markers for where interesting things happen in the project. At each milestone, one or more people in some named roles must get together to affect the course of a work product. Three kinds of milestones are used on projects, each with its particular characteristics. They are reviews, publications, and declarations.

In a review, several people examine a work product. With respect to reviews, we care about the following questions: Who is doing the reviewing? What are they reviewing? Who created that item? What is the outcome of the review? Few reviews cause a project to halt; most end with a list of suggestions that are supposed to be incorporated.

A publication occurs whenever a work product is distributed or posted for open viewing. Sending out meeting minutes, checking source code into a configuration-management system, and deploying software to users' workstations are different forms of publication. With respect to publications, we care about the following: What is being published? Who publishes it? Who receives it? What causes it to be published?

The declaration milestone is a verbal notice from one person to another, or to multiple people, that a milestone was reached. There is no objective measure for a declaration; it is simply an announcement or a promise. Declarations are interesting because they construct a web of promises inside the team's social structure. This form of milestone came as a surprise to me, when I first detected it.
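The three kinds of milestone differ mainly in the questions we ask about them. The following minimal Python sketch records each kind with just enough fields to answer those questions. The class names, the field names, and the `key_questions` helper are hypothetical illustrations. The questions attached to a declaration are also an assumption, because none are listed for declarations above.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass(frozen=True)
class Review:
    """Several people examine a work product."""
    reviewers: Tuple[str, ...]
    work_product: str
    creator: str
    outcome: str  # usually a list of suggestions; rarely a project halt


@dataclass(frozen=True)
class Publication:
    """A work product is distributed or posted for open viewing."""
    work_product: str
    publisher: str
    recipients: Tuple[str, ...]
    trigger: str


@dataclass(frozen=True)
class Declaration:
    """A verbal announcement or promise; there is no objective measure."""
    speaker: str
    listeners: Tuple[str, ...]
    statement: str


Milestone = Union[Review, Publication, Declaration]


def key_questions(m: Milestone) -> Dict[str, str]:
    """Answer the questions we care about for a given kind of milestone."""
    if isinstance(m, Review):
        return {
            "Who is doing the reviewing?": ", ".join(m.reviewers),
            "What are they reviewing?": m.work_product,
            "Who created that item?": m.creator,
            "What is the outcome of the review?": m.outcome,
        }
    if isinstance(m, Publication):
        return {
            "What is being published?": m.work_product,
            "Who publishes it?": m.publisher,
            "Who receives it?": ", ".join(m.recipients),
            "What causes it to be published?": m.trigger,
        }
    # Assumed questions (hypothetical): a declaration is only a promise.
    return {
        "Who makes the declaration?": m.speaker,
        "To whom is it made?": ", ".join(m.listeners),
        "What is promised?": m.statement,
    }


if __name__ == "__main__":
    checkin = Publication("source code", "programmer", ("team",),
                          "unit tests pass")
    for question, answer in key_questions(checkin).items():
        print(question, answer)
```

The sketch deliberately records a declaration as nothing more than who promised what to whom. It carries no completion criterion, which mirrors the point that a declaration has no objective measure.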

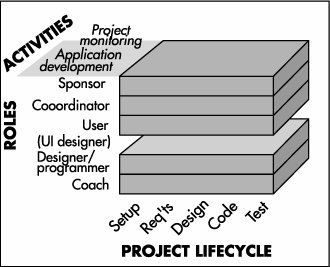

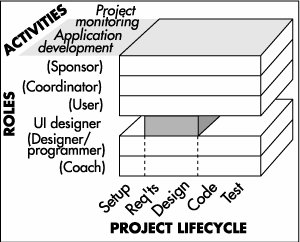

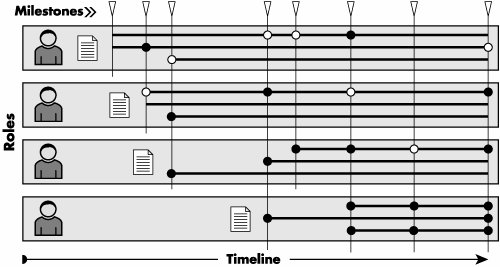

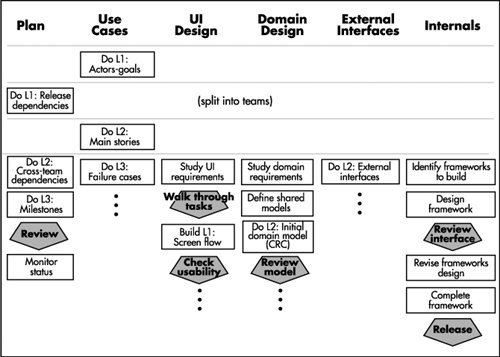

That assertion is full of social promises. It is a promise, given by a trained person, that in his judgment the trade-offs are balanced and that this is a good time to start. A declaration ("It's ready!") is often the form of milestone that moves code from development to test, alpha delivery, beta delivery, and even deployment.

Declarations are interesting to me as a researcher because I have not seen them described in process-centric methodologies, which focus on process entry and exit criteria. They are easier to discuss when we consider software development as a cooperative game. In a cooperative game, the project team's web of interrelationships, and the promises holding them together, are more apparent.

The role-deliverable-milestone chart (Figure 4-15) is a quick way to view the methodology in brief. It has an advantage over process diagrams in that it shows the parallelism involved in the project quite clearly. It also allows the team to see the key stages of completion the artifacts go through. This helps them manage their actions according to the intermediate states of the artifacts, as recommended in some modern methodologies (Highsmith 2000).

Scope

The scope of a methodology consists of the range of roles and activities that it attempts to cover (Figure 4-2).

Figure 4-2. The three dimensions of scope. A methodology selects a subset of all three.

The earliest object-oriented methodologies presented the designer as having the key role and discussed the techniques, deliverables, and standards for the design activity of that role. These methodologies were considered inadequate in two ways:

Groups with a long history of continuous experience, such as the U.S. Department of Defense, Andersen Consulting, James Martin and Associates, IBM, and Ernst & Young, already had methodologies covering the standard lifecycle of a project, even starting from the point of project sales and project setup. Their methodologies cover every person needed on the project, from staff assistant through sales staff, designer, project manager, and tester.

The point is that both are "methodologies." The scope of their concerns is different. The scope of a methodology can be characterized along three axes: lifecycle coverage, role coverage, and activity coverage (Figure 4-2).

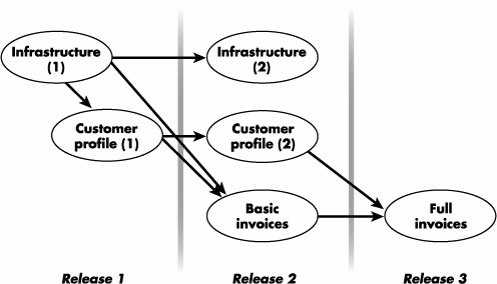

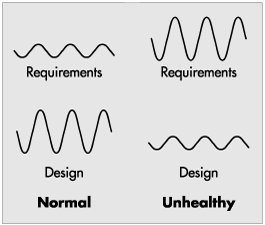

Clarifying a methodology's intended scope helps take some of the heat out of methodology arguments. Often, two seemingly incompatible methodologies target different parts of the lifecycle or different roles. Discussions about their differences go nowhere until their respective scope intentions are clarified.

In this light, we see that the early OO methodologies had a relatively small scope. They typically addressed only one role, the domain designer or modeler. For that role, only the actual domain modeling activity is represented, and only during the analysis and design stages. Within that very narrow scope, they covered one or a few techniques and outlined one or a few deliverables with standards. No wonder experienced designers felt they were inadequate for overall development.

The scope diagram helps us see where methodology fragments combine well. An example is the natural fit of Constantine and Lockwood's user interface design recommendations (Constantine 1999) with methodologies that omit discussion of UI design activities (leaving that aspect to authors who know more about the subject). Without these scoping axes at hand, people would ask Larry Constantine, "How does your methodology relate to the other Agile Methodologies on the market?" In a talk at Software Development 2001, Larry Constantine said he didn't know he was designing a methodology; he was just discussing good ways to design user interfaces.

With the methodology scope diagram in view, we easily see how they fit. XP's scope of concerns is shown in Figure 4-3. Note that it lacks discussion of user interface design. The scope of concerns for Design for Use is shown in Figure 4-4. We see from these figures that the two fit together. The same applies to Design for Use and Crystal Clear.

Figure 4-3. Scope of Extreme Programming.

Figure 4-4. Scope of Constantine & Lockwood's Design for Use methodology fragment.
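One way to make the scope diagram operational is to record a methodology's coverage along the three axes as sets and then look for overlap. The following Python sketch assumes a simple rule: two fragments are good candidates for combination when they do not claim the same activities, because overlapping activities are where their advice may conflict. That rule, the `Scope` class, and the set contents are hypothetical illustrations. The sets are placeholders, not the actual contents of Figures 4-3 and 4-4.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Scope:
    """Coverage along the three scope axes: lifecycle, roles, activities."""
    lifecycle: FrozenSet[str]
    roles: FrozenSet[str]
    activities: FrozenSet[str]

    def combine(self, other: "Scope") -> "Scope":
        """Scope covered when two fragments are used together."""
        return Scope(self.lifecycle | other.lifecycle,
                     self.roles | other.roles,
                     self.activities | other.activities)


def contested_activities(a: Scope, b: Scope) -> FrozenSet[str]:
    """Activities both fragments address; their advice may conflict here."""
    return a.activities & b.activities


# Illustrative placeholder scopes, not taken from Figures 4-3 and 4-4.
xp = Scope(
    lifecycle=frozenset({"requirements", "design", "programming", "testing"}),
    roles=frozenset({"customer", "programmer", "coach"}),
    activities=frozenset({"planning", "programming", "testing", "refactoring"}),
)
design_for_use = Scope(
    lifecycle=frozenset({"requirements", "design"}),
    roles=frozenset({"user interface designer"}),
    activities=frozenset({"user interface design"}),
)

if __name__ == "__main__":
    print(contested_activities(xp, design_for_use))  # frozenset()
    print(sorted(xp.combine(design_for_use).activities))
```

An empty set of contested activities matches the observation that XP omits user interface design while Design for Use covers it. Overlapping lifecycle stages are not treated as a conflict in this sketch.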
Conceptual Terms

To discuss the design of a methodology, we need different terms: methodology size, ceremony, and weight; problem size; project size; system criticality; precision; accuracy; relevance; tolerance; visibility; scale; and stability.

Methodology size. The number of control elements in the methodology. Each deliverable, standard, activity, quality measure, and technique description is an element of control. Some projects and authors will wish for smaller methodologies; some will wish for larger.

Ceremony. The amount of precision and the tightness of tolerance in the methodology. Greater ceremony corresponds to tighter controls (Booch 1995). One team may write use cases on napkins and review them over lunch. Another team may prefer to fill in a three-page template and hold half-day reviews. Both groups write and review use cases, the former using low ceremony, the latter using high ceremony. The amount of ceremony in a methodology depends on how life-critical the system will be and on the fears and wishes of the methodology author, as we will see.

Methodology weight. The product of size and ceremony: the number of control elements multiplied by the ceremony involved in each. This is a conceptual product (because numbers are not attached to size and ceremony), but it is still useful.

Problem size. The number of elements in the problem and their inherent cross-complexity. There is no absolute measure of problem size, because a person with different knowledge may see a simplifying pattern that reduces the size of the problem. Some problems are clearly different enough from others that relative magnitudes can be discussed (launching a space shuttle is a bigger problem than printing a company's invoices). The difficulty in deciding the problem size is that there will often be controversy over how many people are needed to deliver the product and what the corresponding methodology weight is.

Project size.
The number of people whose efforts need to be coordinated: staff size. Depending on the situation, you may be coordinating only programmers or an entire department with many roles. Many people use the phrase project size ambiguously, shifting the meaning from staff size to problem size even within a sentence. This causes much confusion, particularly because a small, sharp team often outperforms a large, average team. The relationship among problem, staff, and methodology size is discussed in the next section.

System criticality. The damage from undetected defects. I currently classify criticality simply as one of loss of comfort, loss of discretionary money, loss of irreplaceable money, or loss of life. Other classifications are possible.

Precision. How much you care to say about a particular topic. Pi to one decimal place of precision is 3.1; to four decimal places, it is 3.1416. Source code contains more precision than a class diagram; assembler code contains more than its high-level source code. Some methodologies call for more precision earlier than others, according to the methodology author's wishes.

Accuracy. How correct you are when you speak about a topic. To say "Pi to one decimal place is 3.3" would be inaccurate. The final object model needs to be more accurate than the initial one. The final GUI description is more accurate than the low-fidelity prototypes. Methodologies cover the growth of accuracy as well as precision.

Relevance. Whether or not to speak about a topic. User interface prototypes do not discuss the domain model. Infrastructure design is not relevant to collecting user functional requirements. Methodologies discuss different areas of relevance.

Tolerance. How much variation is permitted. The team standards may require revision dates to be put into the program code, or not. The tolerance statement may say that a date must be found, either put in by hand or added by some automated tool.
A methodology may specify line breaks and indentation, leave those to people's discretion, or state acceptable bounds. An example in a decision standard is stating that a working release must be available every three months, plus or minus one month.

Visibility. How easily an outsider can tell if the methodology is being followed. Process initiatives such as ISO 9001 focus on visibility issues. Because achieving visibility creates overhead (cost in time, money, or both), agile methodologies as a group lower the emphasis on such visibility. As with ceremony, different amounts of visibility are appropriate for different situations.

Scale. How many items are rolled together to be presented as a single item. Booch's former "class categories" provided for a scaled view of a set of classes. The UML "package" allows for scaled views of use cases, classes, or hardware boxes. Project plans, requirements, and designs can all be presented at different scales. Scale interacts somewhat with precision. The printer's or monitor's dot density limits the amount of detail that can be put onto one page or screen. However, even if it could all be put onto one page, some people would not want to see all that detail. They want to see a rolled-up or high-level version.

Stability. How likely it is to change. I use only three stability levels: wildly fluctuating, as when a team is just getting started; varying, as when some development activity is in mid-stride; and relatively stable, as just before a requirements, design, or code review or a product shipment. One way to find the stability state is to ask: "If I were to ask the same questions today and in two weeks, how likely would I be to get the same answers?" In the wildly fluctuating state, the answer is "Are you kidding? Who knows what this will be like in two weeks!" In the varying state, the answer is "Somewhat similar, but of course the details are likely to change."
In the relatively stable state, the answer is "Pretty likely, although a few things will probably be different." Other ways to determine stability may include measuring the "churn" in the use case text, the diagrams, the code base, the test cases, and so on (I have not tried these).

Precision

Precision is a core concept manipulated within a methodology. Every category of work product has low-, medium-, and high-precision versions. Here are the low-, medium-, and high-precision versions of some key work products.

The project plan

The low-precision view of a project plan is the project map (Figure 4-5). It shows the fundamental items to be produced, their dependencies, and which are to be deployed together. It may show the relative magnitudes of effort needed for each item. It does not show who will do the work or how long the work will take (which is why it is called a map and not a plan).

Figure 4-5. A project map: a low-precision version of a project plan.

Those who are used to working with PERT charts will recognize the project map as a coarse-grained PERT chart showing project dependencies, augmented with marks showing where releases occur. This low-precision project map is very useful in organizing the project before the staffing and time lines are established. In fact, I use it to derive time lines and staffing plans.

The medium-precision version of the project plan is a project map expanded to show the dependencies between the teams and the due dates.

The high-precision version of the project plan is the well-known, task-based Gantt chart, showing task times, assignments, and dependencies. The more precision in the plan, the more fragile it is, which is why constructing Gantt charts is so feared: A Gantt chart is time-consuming to produce and gets out of date with the slightest surprise event.

The user interface design

The low-precision description of the user interface is the screen flow diagram, which states only the purpose and linkage of each screen.
The medium-precision description consists of the screen definitions with field lengths and the various field or button activation rules. The highest-precision definition of the user interface design is the program's source code.

Behavioral requirements / use cases

Behavioral requirements are often written with use cases. The lowest-precision view of a set of use cases is the Actors-Goals list, the list of primary actors and the goals they have with respect to the system (Figure 4-6). This lowest-precision view is useful at the start of the project when you are prioritizing the use cases and allocating work to teams. It is useful again whenever an overview of the system is needed.
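Because the Actors-Goals list is so low in precision, it is easy to keep as plain data. The following Python sketch groups goals under each primary actor with the most important goals first. It also picks out the goals at or above a priority cutoff, which is one possible way the list might support prioritizing use cases. The `ActorGoal` type, the numeric priorities, the helper names, and the sample actors and goals are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class ActorGoal(NamedTuple):
    actor: str     # primary actor
    goal: str      # the actor's goal with respect to the system
    priority: int  # hypothetical ranking: 1 is most important


def actors_goals_list(entries: Iterable[ActorGoal]) -> Dict[str, List[str]]:
    """Group goals under each primary actor, most important goals first."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for item in sorted(entries, key=lambda e: (e.priority, e.actor, e.goal)):
        grouped[item.actor].append(item.goal)
    return dict(grouped)


def goals_up_to(entries: Iterable[ActorGoal], cutoff: int) -> List[str]:
    """Goals whose priority number is at most the cutoff."""
    return [e.goal for e in entries if e.priority <= cutoff]


# Hypothetical sample data for illustration only.
sample = [
    ActorGoal("Clerk", "Enter an order", 1),
    ActorGoal("Manager", "Review sales report", 2),
    ActorGoal("Clerk", "Cancel an order", 2),
]

if __name__ == "__main__":
    for actor, goals in actors_goals_list(sample).items():
        print(actor, goals)
    print(goals_up_to(sample, 1))
```

Nothing in the sketch goes beyond actors, goals, and a ranking. Adding briefs, scenarios, or extensions would mean moving to a higher level of precision.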

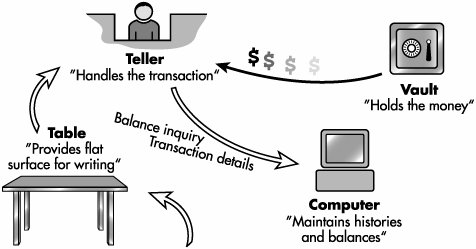

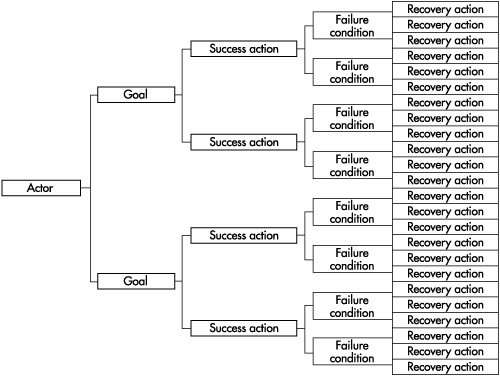

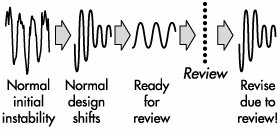

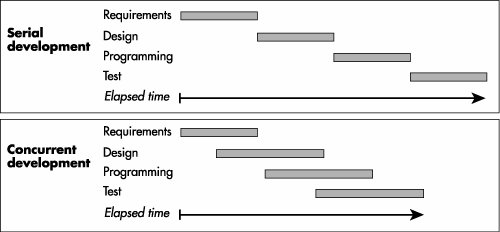

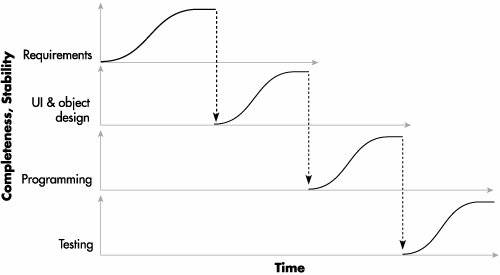

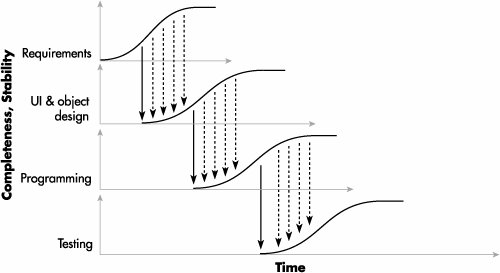

The medium level of precision consists of a one-paragraph brief synopsis of the use case, or the use case's title and main success scenario. The medium-high level of precision contains extensions and error conditions, named but not expanded. The final, highest level of precision includes both the extension conditions and their handling. These levels of precision are further described in Cockburn (2001c).

The program design

The lowest level of precision in an object-oriented design is a Responsibility-Collaborations diagram, a very coarse-grained variation on the UML object collaboration diagram (Figure 4-7). The interesting thing about this simple design presentation is that people can already review it and comment on the allocation of responsibilities.

Figure 4-7. A Responsibility-Collaborations diagram: the low-precision view of an object-oriented design.

A medium level of precision is the list of major classes, their major purpose, and primary collaboration channels. A medium-high level is the class diagram, showing classes, attributes, and relationships with cardinality. A high level of precision is the list of classes, attributes, relations with cardinality constraints, and functions with function signatures. These often are listed on the class diagram. The final, highest level of precision is the source code.

These levels for design show the natural progression from Responsibility-Driven Design (Beck 1989, Wirfs-Brock 1990) through object modeling with UML, to final source code. The three are not in opposition, as some imagine, but rather occur along a very natural progression of precision. As we get better at generating final code from diagrams, the designers will add precision and code-generation annotations to the diagrams. As a consequence, the diagrams plus annotations become the "source code." The C++ or Java stops being source code and becomes generated code.

Working with "Precision"

People do quite a lot with these low-precision views.
During the early stages of the project, they plan and evaluate. At later stages, they use the low-precision views for training. I currently think of a level of precision as being reached when there is enough information to allow another team to start work.

Figure 4-8 shows the evolution of six types of work products on a project: the project plan, the use cases, the user interface design, the domain design, the external interfaces, and the infrastructure design.

Figure 4-8. Using low levels of precision to trigger other activities.

Looking at Figure 4-8, we see that having the actor-goal list in place permits a preliminary project plan to be drawn up. This may consist of the project map along with time and staffing assignments and estimates. Having those, the teams can split up and capture the use-case briefs in parallel. As soon as the use-case briefs (or a significant subset of them) are in place, all the specialist teams can start working in parallel, evolving their own work products.

One thing to note about precision is that the work involved expands rapidly as precision increases. Figure 4-9 shows the work increasing as the use cases grow from actors, to actors and goals, to main success scenarios, to the various failure and other extension conditions, and finally to the recovery actions. A similar diagram could be drawn for each of the other types of work products.

Figure 4-9. Work expands with increasing precision level (shown for use cases).

Because higher-precision work products require more energy and also change more often than their low-precision counterparts, a general project strategy is to defer, or at least carefully manage, their construction and evolution.

Stability and Concurrent Development

Stability, the "likelihood of change," varies over the course of the project (Figure 4-10).

Figure 4-10. Reducing fluctuations over the course of a project.

A team starts in a situation of instability.
Over time, team members reduce the fluctuations and reach a varying state as the design progresses. They finally get their work relatively stable just prior to a design review or publication. At that point, the reviewers and users provide new information to the development team, which makes the work less stable again for a period. On many projects, instability jumps unexpectedly on occasion, such as when a supplier suddenly announces that he will not deliver on time, a product does not perform as predicted, or an algorithm does not scale as expected.

You might think that you should strive for maximum stability on a project. However, the appropriate amount of stability to target varies by topic, by project priorities, and by stage in the project. Different experts have different recommendations about how to deal with the varying rates of change across the work products and the project stages.

The simplest approach is to say, "Don't start designing until the requirements are Stable (with a capital S); don't start programming until the design is Stable," and so on. This is serial development (Figure 4-11). Its two advantages make it attractive to many people. It is, however, fraught with problems.

Figure 4-11. Successful serial development takes longer (but fewer workdays) compared to successful concurrent development.

The first advantage is its simplicity. The person doing the scheduling simply sequences the activities one after the other, scheduling a downstream activity to start when an upstream activity is finished (Figure 4-12).

Figure 4-12. Serial development. Each workgroup waits for the upstream workgroup to achieve complete stability before starting.

The second advantage is that, if no major surprises force a change to the requirements or design, a manager can minimize the work-hours spent on the project by carefully scheduling when people arrive to work on their particular tasks.

There are three problems, though.
The first problem is that the elapsed time needed for the project is the straight sum of the times needed for requirements, design, programming, test, and so on. This is the longest time that can be required for the project. With the most careful management, the project manager will get the longest elapsed time at the minimum labor cost. For projects on which reducing elapsed time is a top priority, this is a bad trade-off.

The second problem is that surprises usually do crop up during the project. When one does, it causes unexpected revisions of the requirements or design and raises the development cost. In the end, the project manager minimizes neither the labor cost nor the development time.

The third problem is the absence of feedback from the downstream activities to the upstream activities. In rare instances, the people doing the upstream activity can produce high-quality results without feedback from the downstream team. On most projects, though, the people who are creating the requirements need to see a running version of what they ordered so that they can correct and finalize their requests. Usually, after seeing the system in action, they change their requests. This forces changes in the design, coding, testing, and so on. Incorporating these changes lengthens the project's elapsed time and increases total project costs.

Selecting the serial-development strategy really makes sense only if you can be sure that the team will be able to produce good, final requirements and design on the first pass. Few teams can do this.

A different strategy, concurrent development, shortens the elapsed time and provides feedback opportunities at the cost of increased rework. Figure 4-11 and Figure 4-13 illustrate it, and "Principle 7. Efficiency is expendable in nonbottleneck activities." on page 190 analyzes it further.

Figure 4-13. Concurrent development. Each group starts as early as its communications and rework capabilities indicate.
As it progresses, the upstream group passes update information to the downstream group in a continuous stream (the dashed arrows).

In concurrent development, each downstream activity starts at some point judged to be appropriate with respect to the completeness and stability of the upstream team's work (different downstream groups may start at different moments with respect to their upstream groups, of course). The downstream team starts operating with the available information, and as the upstream team continues work, it passes new information along to the downstream team. To the extent that the downstream team guesses right about where the upstream team is going and the upstream team does not encounter major surprises, the downstream team will get its work approximately right. The team will do some rework along the way, as new information shows up.

The key issue in concurrent development is judging the completeness, stability, rework capability, and communication effectiveness of the teams. The advantages of concurrent development are twofold, the exact opposites of the disadvantages of serial development:

Such concurrent development is described as the Gold Rush strategy in Surviving Object-Oriented Projects (Cockburn 1998). The Gold Rush strategy presupposes both good communication and rework capacity. The Gold Rush strategy is suited to situations in which the requirements gathering is predicted to go on for longer than can be tolerated in the project plan, so there would simply not be enough time for proper design if the next team had to wait for the requirements to settle. Actually, many projects fit this profile. Gold-Rush-type strategies are not risk-free. There are three pitfalls to watch out for:

The complete discussion about when and where to apply concurrent development is presented in "Principle 7. Efficiency is expendable in nonbottleneck activities." on page 190. The point to understand now is that stability plays a role in methodology design.

Both XP and Adaptive Software Development suggest maximizing concurrency (Highsmith 2000). This is because both are intended for situations with strong time-to-market priorities and requirements that are likely to change as a consequence of producing the emerging system. Fixed-price contracts often benefit from a mixed strategy: in those situations, it is useful to have the requirements quite stable before getting far into design. The mix will vary by project. Sometimes, the company making the bid may do some designing or even coding just to prepare its bid.

Figure 4-15. Role-deliverable-milestone view of a methodology.

Publishing a Methodology

Publishing a methodology has two components: the pictorial view and the text itself.

The Pictorial View

One way to present the design of a methodology is to show how the roles interact across work products (Figure 4-15). In such a "Role-Deliverable-Milestone" view, time runs from left to right across the page, roles are represented as broad bands across the page, and work products are shown as single lines within a band. The line of a work product shows critical events in its life: its birth event (what causes someone to create it), its review events (who examines it), and its death event (at what moment it ceases to have relevance, if ever). Although the Role-Deliverable-Milestone view is a convenient way to capture the work-product dependencies within a methodology, it evidently is also good for putting people to sleep:

The pictorial view misses the practices, standards, and other forms of collaboration so important to the group. Those don't have a convenient graphical portrayal and must be listed textually.

The Methodology Text

In published form, a methodology is a text that describes the techniques, activities, meetings, quality measures, and standards of all the job roles involved. You can find examples in Object-Oriented Methods: Pragmatic Considerations (Martin 1998) and The OPEN Process Specification (Graham 1997). The Rational Unified Process has its own Web site with thousands of Web pages.

Methodology texts are large. At some level there is no escape from this size. Even a tiny methodology, with four roles, four work products per role, and three milestones per work product, has 68 (4 + 16 + 48) interlocking parts to describe, leaving out any technique discussions. And even XP, which initially weighed in at only about 200 pages (Beck 2000), now approaches 1,000 pages when expanded to include additional guidance about each of its parts (Jeffries 2001, Beck 2000, Auer 2002, Newkirk 2001). There are two reasons why most organizations don't issue a thousand-page text describing their methodology to each new employee:

The real methodology resides in the minds of the staff and in their habits of action and conversation. Documenting chunks of the methodology is not at all the same as providing understanding, and having understanding does not presuppose having documentation. Understanding is faster to gain, because it grows through the normal job experiences of new employees.

As new technologies show up, the teams must invent new ways of working to handle them, and those cannot be written in advance. An organization needs ways to evolve new variants of the methodologies on the fly and to transfer the good habits of one team to the next team. You will learn how to do that as you proceed through this book.

Reducing Methodology Bulk

There are several ways to reduce the physical size of the methodology publication:

Provide examples of work products

Provide work examples rather than templates. Take advantage of people's strengths in working with tangibles and examples, as discussed earlier. Collect reasonably good examples of various work products: a project plan, a risk list, a use case, a class diagram, a test case, a function header, a code sample. Place them online, with encouragement to copy and modify them. Instead of writing a standards document for the user interface, post a sample of a good screen for people to copy and work from. You may need to annotate the example to show which parts are important. Doing these things will lower the work effort required to establish the standards and will lower the barrier to people using them. One of the few books to show deliverables and their standards is Developing Object-Oriented Software (IBM OOTC 1997), which was prepared for IBM by its Object-Oriented Technology Center in the late 1990s and was then made public.

Remove the technique guides

Rather than trying to teach the techniques by providing detailed descriptions of them within the methodology document, let the methodology simply name the recommended techniques, along with any known books and courses that teach them. Techniques-in-use involve tacit knowledge. Let people learn from experts, using apprenticeship-based learning, or let them learn from a hands-on course in which they can practice the technique in a learning environment.
Where possible, get people up to speed on the techniques before they arrive on the project, instead of teaching the technique as part of a project methodology on project time. The techniques will then become skills owned by people, who simply do their jobs in their natural ways.

Organize the text by role

It is possible to write a low-precision but descriptive paragraph about each role, work product, and milestone, linking the descriptions with the Role-Deliverable-Milestone chart. The sample role descriptions might look something like these:

For the work products, you need to record who writes them, who reads them, and what they contain. A fuller version would contain a sample, noting the tolerances permitted and the milestones that apply. Here are a few simple descriptions:

For the review milestones, record what is being reviewed, who is to review it, and what the outcome is. For example:

With these short paragraphs in place, the methodology can be summarized by role (as the following two examples show). The written form of the methodology, summarized by role, is a checklist for each person that can be fit onto one sheet of paper and pinned up in the person's workspace. That sheet of paper contains no surprises (after the first reading) but serves to remind team members of what they already know. Here is a slightly abridged example for the programmers:

You can see that this is not a methodology used to stifle creativity. To a newcomer, it is a list outlining how he is to participate on the team. To the ongoing developer, it is a reminder.

Using the Process Miniature

Publishing a methodology does not convey the visceral understanding that forms tacit knowledge. It does not convey the life of the methodology, which resides in the many small actions that accompany teamwork. People need to see or personally enact the methodology. My current favorite way of conveying the methodology is through a technique I call the process miniature. In a process miniature, the participants play-act one or two releases of the process in a very short period of time.

On one team I interviewed, new people were asked to spend their first week developing a (small) piece of software all the way from requirements to delivery. The purpose of the week-long exercise was to introduce the new person to the people, the roles, the standards, and the physical placement of things in the company.

More recently, Peter Merel invented a one-hour process miniature for Extreme Programming, giving it the nickname Extreme Hour. The purpose of the Extreme Hour is to give people a visceral encounter with XP so that they can discuss its concepts from a base of almost-real experience. In the Extreme Hour, some people are designated "customers." Within the first 10 minutes of the hour, they present their requests to the developers and work through the XP planning session. In the next 20 minutes, the developers sketch and test their design on overhead transparencies. The total length of time for the first iteration is 30 minutes. In the next 30 minutes, the entire cycle is repeated so that two cycles of XP are experienced in just 60 minutes.
Usually, the hosts of the Extreme Hour choose a fun assignment, such as designing a fish-catching device that keeps the fish alive until delivering them to the cooking area at the end of the day and also keeps the beer cold during the day. (Yes, they do have to cut scope during the iterations!) We used a 90-minute process miniature to help the staff of a 50-person company experience a new development process we were proposing (you might notice the similarity of this process miniature experience to the informance described on page 81). In this case, we were primarily interested in conveying the programming and testing rules we wanted people to use. We therefore could not use a drawing-based problem such as the fish trap but had to select a real programming problem that would produce running, tested code for a Web application.

Whatever form of process miniature you use, plan on replaying it from time to time in order to reinforce the team's social conventions. Many of these conventions, such as the scope negotiation rules just described, won't find a place in the documentation but can be partially captured in the play. | ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||


Pages: 126