Administrative T-SQL

|

|

You can use some little-known T-SQL stored procedures to shorten your stored procedure development cycle significantly. The disadvantage to using these is that some of your code may no longer be portable to Oracle or another DBMS. There is also a slight chance that Microsoft may pull a stored procedure that is not documented out of a future release of SQL Server. Most of the stored procedures I cover in this section have been around since SQL Server 6.5, reducing the worry that they'll disappear.

Simplifying Cursors

A cursor is a way to loop through a given amount of rows and perform an action on each row individually. Nothing slows down performance of an application more than a cursor in your code. Cursors do have their place, however. For example, they're useful if you want to loop through a list of tables and perform an action on the tables. This section lists a number of stored procedures that ship with SQL Server that simplify life for a DBA to where they won't have to create a cursor to perform certain functions. It's important to note that cursors still are running in the background but you don't have to worry about the coding of them.

sp_MSforeachtable

Hidden in the depths of the master database is a series of stored procedures that can replace some cursors with one-liners. Traditionally, if you wanted to run a DBCC CHECKTABLE on every table in a database you'd have to write an elaborate cursor like this:

DECLARE @dataname varchar(255), @dataname_header varchar(255) DECLARE datanames_cursor CURSOR FOR SELECT name FROM master..sysdatabases WHERE name not in ('master', 'pubs', 'tempdb', 'model') OPEN datanames_cursor FETCH NEXT FROM datanames_cursor INTO @dataname IF (@@fetch_status = 0) BEGIN SELECT @dataname_header = "Database " + RTRIM(UPPER(@dataname)) PRINT @dataname_header SELECT @dataname_header = RTRIM(UPPER(@dataname)) EXEC ("DBCC CHECKDB " + "(" + @dataname + ")") END CLOSE datanames_cursor DEALLOCATE datanames_cursor Beginning with version 6.5 of SQL Server, Microsoft provides a stored procedure called sp_MSforeachtable. Using the question mark as a placeholder for all table names, the procedure performs the same actions as the above query, using a single line. You can replace the above cursor with this single line:

sp_MSforeachtable @command1="print '?' dbcc checktable ('?')" | Note | SQL Server will still create a cursor behind the scenes when running sp_MSforeachtable. This will simplify your queries, though. |

You can issue up to three commands to the stored procedure using @command1 through @command3. The following syntax will loop through each table and output the number of records in each table:

sp_MSforeachtable @COMMAND1 = "Print '?'", @COMMAND2= "SELECT COUNT(*) FROM ?"

| Caution | Before you run the above query in Query Analyzer, make sure you have the results go to text versus the grid. You may want this in order to find text inside your report. In Query Analyzer, a shortcut to change to Results in Text mode is to press CTRL-T. |

You can replace what you use as the placeholder by using the @replacechar command. One of the most useful parameters is the @whereand parameter, which conditionally selects tables. The sp_MSforeachtable stored procedure uses system tables in a cursor to derive the table names. You can use the @whereand parameter to tag additional conditions on the query. The @precommand and @postcommand parameters perform commands before and after the table command. The two parameters are useful for either creating a temporary table or creating and dropping a temporary table.

For example, the following command updates statistics on all tables in the pubs database that begin with title:

sp_MSforeachtable @whereand = "and name like 'title%'", @replacechar='~', @precommand="print 'Updating Statistics.....' print ''", @command1="print '~' update statistics ~", @postcommand="print '' print 'Complete Updating Statistics!'"

The command outputs the following results, which will update all the statistics in a database:

Updating Statistics..... [dbo].[titleauthor] [dbo].[titles] Complete Updating Statistics!

| Tip | You can also use the @whereand parameter to order the tables. For example, you can use the syntax @whereand = 'order by name' to order the tables by name. |

A Twist on sp_spaceused

Has this ever happened to you? You come to work after a long weekend, and a database has grown twice as fast as normal. Where is all that space going? Generally you do a row count on the tables and then make calculations based on the results. You can use sp_spaceused to find out where your space is going at the database, table, or index level. The sp_spaceused stored procedure tells you the following:

-

Name of the table or database

-

Number of rows in the table or database

-

Amount of space in kilobytes reserved in the table or database

-

Amount of space in kilobytes taken by the table or database

-

Amount of space in kilobytes taken by indexes

-

Amount of space in kilobytes unused by the table or database

You can also gain the same information at the database level. If you run the stored procedure without any parameters, the procedure returns database-level information. To use the stored procedure for tables, pass the table name in single quotes and it will return table-level information as shown here:

sp_spaceused 'Suppliers'

A great way to use the sp_MSforeachtable stored procedure is in conjunction with the sp_spaceused stored procedure. With this combination, you can find out the space used in every table in a database. For example, the following syntax executes the sp_spaceused stored procedure against every table:

sp_MSforeachtable @command1 = "print '?'", @command2=" sp_spaceused '?'"

sp_MSforeachdb

Another handy system stored procedure you can use is sp_MSforeachdb. All the parameters that work in the sp_MSforeachtable stored procedure work in this stored procedure, with the exception of @whereand. For example, you can use the stored procedure to check every database on your server with the following syntax:

sp_MSforeachdb @command1 = "Print '?'", @command2 = "DBCC CHECKDB (?)"

Creating Your Own sp_MSforeach Stored Procedures

Now that we have the basics out of the way, let's discuss how to make your own sp_MSforeach stored procedure. Each of the sp_MSforeach stored procedures references another stored procedure called sp_MSforeachworker, which generically accepts an array of data and loops through it. A customized stored procedure can be easily created by modifying a piece of the query. Here is the part of a sp_MSforeach query that you would care about.

/* Create the select */ exec(N'declare hCForEach cursor global for select ''['' + REPLACE(user_name(uid), '']'', N'']]'') + '']'' + ''.'' + ''['' + REPLACE(object_name(id), N'']'', '']]'') + '']'' from dbo.sysobjects o ' + N' where OBJECTPROPERTY(o.id, N''IsUserTable'') = 1 ' + N' and o.category & ' + @mscat + N' = 0 ' + @whereand) declare @retval int select @retval = @@error if (@retval = 0) exec @retval = sp_MSforeach_worker @command1, @replacechar, @command2, @command3

To make a sp_MSforeachview stored procedure, all you would have to do is change the bolded N'IsUserTable'' to N'IsView'' or for a trigger you could use the IsTrigger syntax. The final cut of code would look like the following (this is only a code snippet):

exec(N'declare hCForEach cursor global for select ''['' + REPLACE(user_name(uid), N'']'', N'']]'') + '']'' + ''.'' + ''['' + REPLACE(object_name(id), N'']'', N'']]'') + '']'' from dbo.sysobjects o ' + N' where OBJECTPROPERTY(o.id, N''IsView'') = 1 ' + N' and o.category & ' + @mscat + N' = 0 ' + @whereand) declare @retval int select @retval = @@error if (@retval = 0) exec @retval = sp_MSforeach_worker @command1, @replacechar, @command2, @command3

| Note | For some examples of other sp_MSforeach stored procedures, go to http://www.sqlservercentral.com/scripts/. |

Extended Stored Procedures

Extended stored procedures allow you to create programs in C++ and execute them from stored procedures or ad hoc queries. Visual C++ includes some wizards to get you started with programming extended stored procedures. These procedures, which are compiled as a .dll file, give you the ability to perform actions you could never perform in regular T-SQL. For example, you can use xp_cmdshell to shell out to a command prompt and execute a program, or list all the files in a directory.

Documentation on extended stored procedures is scarce. In this section, I cover some of the extended stored procedures that may help you administrate your SQL Server. You'll find more information about extended stored procedures in Appendix B.

First and foremost, you can list all the extended stored procedures installed on your SQL Server using the sp_helpextendedproc stored procedure with no parameters.

Before you begin implementation of extended stored procedures into your applications, you need to be aware of the security issues. When executing most extended stored procedures, you have the same rights and permissions as the Windows account that is configured to start SQL Server (MSSQLSERVER service). This account is usually either an administrator or system account. In either case, you pose a substantial security risk if you don't lock down the extended stored procedure to forbid your non-SA users from executing it.

| Caution | Custom extended stored procedures are notorious for leaking memory. Make sure you carefully test these before implementing them. |

Adding an Extended Stored Procedure

Adding an extended stored procedure is simple. You can add it in Enterprise Manager using the following steps:

-

Copy the procedure's DLL file to the \Program Files\Microsoft Sql Server\ Mssql\BINN path.

-

Open Enterprise Manager and drill down to the Extended Stored Procedures group in the master database.

-

Right-click the Extended Stored Procedures group, and select New Extended Stored Procedure.

-

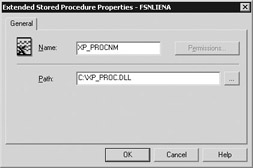

Name the stored procedure, and specify the DLL file (see Figure 6-2).

Figure 6-2: Adding an extended stored procedure -

Click OK, then grant the appropriate permissions by reopening the extended stored procedure and clicking Permissions.

You can also add an extended stored procedure through the sp_addextendedproc system stored procedure. The procedure takes only two parameters: the extended stored procedure name, and the DLL filename. For example, the following syntax would add the same extended stored procedure you see in Figure 6-2.

sp_addextendedproc 'XP_PROCNM', 'XP_PROC.DLL'

| Tip | Extended stored procedures can't be called from any database the way system stored procedures can be called. When calling an extended stored procedure, always preface the call with the fully qualified path. This way, the user can be connected to any database without having to worry about changing to the master database. There is an exception to this. If the extended stored procedure begins with sp_, you will not have to preface the name. |

xp_cmdshell

xp_cmdshell is the most commonly used stored procedure. This procedure allows you to execute command-line programs. This is handy when you need to move files or look in a directory for the list of files.

| Caution | Never execute programs with GUI interfaces or programs that need user intervention. Since the program that you execute is being executed from SQL Server, you cannot interact with the program. Restrict the programs you execute to command-line programs. |

If you type a bad DLL file for the Path option, no error checking occurs to make sure you didn't mistype the information. You won't know that the procedure is faulty until execution time when you receive the following error:

ODBC: Msg 0, Level 16, State 1 Cannot load the DLL XP_PROC.DLL, or one of the DLLs it references. Reason: 126(The specified module could not be found.)

SQL Server is carefully tested under certain environments, and introduction of a new procedure could make your SQL Server unstable. Test the behavior of your extended stored procedures after each Service Pack installation to make sure that no regression has occurred.

There is also a security risk associated with these procedures. Before you load a third-party extended stored procedure, be sure you fully understand what the procedure does.

Stored procedures extend SQL Server's normal behavior. With that in mind, quite a lot of damage could be done to your server by an extended stored procedure developed by a malicious person.

xp_cmdshell accepts either of two parameters: the command and the optional parameter, or no_output. The no_output parameter suppresses the display of any results to the client. The following syntax outputs the results of the DOS command DIR to the client:

master..xp_cmdshell "DIR"

| Tip | When calling a program, use fully qualified filenames. Notice that if you execute the command xp_cmdshell 'DIR' that the default path is the \WINNT\system32 directory. If you fully qualify your paths, you never have to worry about where SQL Server perceives the files to be. |

| Caution | The command you execute can only contain one set of double quotes. If the file you're trying to execute has any spaces in its name, place single quotes around the filename or use the FAT 8.3 filename. |

Warning Users About Disconnection

Another application for xp_cmdshell is to warn users through a net send message before you place a database in single user mode. For example, look at the following code:

EXEC master..xp_cmdshell "net send XANADU You will be disconnected from the Northwind database in 1 minute", no_output WAITFOR DELAY '00:00:30' EXEC master..xp_cmdshell "net send XANADU You will be disconnected from the Northwind database in 30 seconds", no_output ALTER DATABASE Northwind SET SINGLE_USER WITH ROLLBACK AFTER 30 SECONDS

You can see that a message is sent to the user one minute before the database is placed in single user mode. Then another message is sent 30 seconds before disconnecting all the users. During the last 30 seconds, no new users are allowed to connect to the database because it is in transition (this is done through the ROLLBACK syntax).

This function can be expanded to any type of application. You can shut down the server by using a similar syntax:

xp_cmdshell "net stop MSSQLServer", no_output

This action results in the following error, which indicates that you lost your connection because SQL Server stopped:

[Microsoft][ODBC SQL Server Driver][Shared Memory]ConnectionCheckForData (CheckforData()). Server: Msg 11, Level 16, State 1, Line 0 General network error. Check your network documentation. Connection Broken

Executing a DTS Package from Within a Query

You can execute a DTS package from a stored procedure with xp_cmdshell, which allows you to execute the package with DTSRUN.EXE. For example, to execute the package called DTSTest that is saved locally on your SQL Server, use the following syntax:

master..xp_cmdshell "DTSRun /S(local) /N DTSTest /E"

The /E parameter connects to the SQL Server with Windows authentication. This query outputs the following results:

Output DTSRun: Loading... DTSRun: Executing... DTSRun OnStart: DTSStep_DTSActiveScriptTask_1 DTSRun OnFinish: DTSStep_DTSActiveScriptTask_1 DTSRun: Package execution complete. NULL (6 row(s) affected)

| Caution | Be careful when executing a DTS package using this method. If you place any steps in your package that need to interact with the user, such as a message box, your connection will stall. Since there is no one to click on the OK box in a message box, the query will continue to execute until the timeout is crossed (if one exists). |

Piping a Query to a Text File

It's not uncommon to need to pipe the results of a query to a text file. For instance, you may have to pipe to a text file in order to produce a text file nightly through a stored procedure. This is quite easy in Oracle, but a little more complex in SQL Server.

One of the methods available to you is the xp_cmdshell extended stored procedure and osql. You can call osql from xp_cmdshell and use the -o parameter to output the results to a text file, as shown here:

xp_cmdshell 'osql -Usa -P -dNorthwind -Q"select * from customers" -oc:\output.txt -N'

xp_fileexist

One of the undocumented extended stored procedures in a DBA's arsenal is xp_fileexist. As you can probably infer from its name, this procedure is a powerful tool that checks for the existence of a file.

To execute the xp_fileexist extended stored procedure, type the following from an osql command, Query Analyzer, or a stored procedure:

master..xp_fileexist 'c:\autoexec.bat'

This results in the following output:

File Exists File is a Directory Parent Directory Exists ----------- ------------------- ----------------------- 1 0 1 (1 row(s) affected)

Let's try using xp_fileexist for a more practical case. Suppose that a legacy system is going to deposit a file into a specific directory on an hourly basis. You would like to have a stored procedure that can detect the existence of a file in a specific directory, and then fire off a DTS package to convert the flat file data after it's in the directory. If you create a SQL Server Agent job to run every 30 minutes, you could use the following code to fulfill your requirements:

SET NOCOUNT ON --Create temporary table create table #fileexists ( doesexist smallint, fileindir smallint, direxist smallint) -- Insert into the temporary table Insert into #fileexists exec master..xp_fileexist 'C:\AUTOEXEC.BAT' --Queries the temporary table to see if the file exists If exists (select doesexist FROM #fileexists FE WHERE FE.doesexist = 1) BEGIN --Executes DTS Package If File Exists Exec master..xp_cmdshell 'DTSRUN /S servername /N DTSPackageName /U sa /P password', no_output -- Must change the above parameters to match your server requirements. Print 'File Does Exists and Running Package' End Else Begin Print 'File Does Not Exists' End -- Clean up TempDB DROP TABLE #fileexists

| Note | This same function could be performed through VBScript and the FileSystemObject. However, the VBScript code to perform the same function is much more complex than the preceding code. VBScript does offer more flexibility if you don't know exactly what type of file will be in the directory. |

When executing a stored procedure or query through SQL Server Agent as a job, make sure you always turn on the NOCOUNT option. In SQL Server Agent, you may experience significant slowdowns if you don't turn on this option. You may also see problems in Visual Basic when executing a stored procedure without the SET NOCOUNT ON option. Visual Basic treats the (n row(s) affected) as a separate result set, potentially causing Visual Basic to return control to the application before it has finished executing the stored procedure.

xp_getfiledetails

When trying to uncover extended stored procedures, we have to make a short pit stop to xp_getfiledetails. This stored procedure gem finds all the details about a file, including:

-

Size of file in bytes

-

Creation date

-

Date of last access

-

Date the file was last modified

It loads all these values into a varchar that you can use for review. Here's an example of this procedure:

xp_getfiledetails 'c:\autoexec.bat'

So what would be a practical application for this stored procedure? When you receive files from a mainframe, they may be a size of 0 kilobytes until the file is finished uploading. You can then run the following stored procedure to look for the file to be a certain size. If the file is larger than that size, you can trigger an event such as a DTS package to load the file.

Declare @filename varchar(255) SELECT @filename = 'c:\autoexec.bat' CREATE TABLE #filedetails (altname varchar(30), size int, createdate varchar(32), createtime varchar(32), lastwrittendt varchar(30), lastwrittentime varchar(32), lastaccessdt varchar(30), lastaccesstime varchar(32), attributes int) INSERT INTO #filedetails Exec xp_getfiledetails @filename SELECT * FROM #filedetails If (SELECT size FROM #filedetails) > 50000 Begin print 'Criteria is Greater than Expected' End if (select size from #filedetails) <49999 BEGIN Print 'Criteria is Less than Expected' End DROP TABLE #filedetails

xp_readerrorlog

Another handy tool that ships with SQL Server is the xp_readerrorlog extended stored procedure. This procedure allows you to read the complete error log through T-SQL. This is a great way to e-mail the last few errors that have occurred on the server. Following is a sample script that uses the xp_readerrorlog extended stored procedure to send you only the last ten errors on your server. It does this through inserting the log into a table, so data can selectively be pulled from it:

SET NOCOUNT ON CREATE TABLE #error_lg ( errortext varchar(500), continuerow int) INSERT INTO #error_lg exec xp_readerrorlog SELECT TOP 10 errortext from #error_lg WHERE errortext like '%severity%' order by 1 desc DROP TABLE #error_lg

Administrative DBCC Commands

The main way to perform health checks on a SQL Server is through database consistency check (DBCC) commands. DBCC commands were extremely helpful in SQL Server 6.5, when corruption was more common. In SQL Server 7.0, database object corruption is very rare because Microsoft has done away with devices (among other things). There is a slight chance that this stability could be compromised if you lose power or someone abruptly turns off the SQL Server. Even if you lose power, the likeliness of corruption is rare. As a safeguard, investing in a good UPS can reduce the risk to almost 0.

DBCC commands can help pinpoint problems in your SQL Server, in addition to performing regular maintenance such as rebuilding indexes. One of the minor enhancements in SQL Server 2000 is that DBCC commands can now use multiple processors when executing. To obtain an entire list of DBCC commands that are on your server, run the following command:

DBCC HELP ('?') | Note | Many DBCC commands are undocumented. I discuss some of these in this chapter as well as in Appendix A. Be careful when executing these commands, as they can slow down your server or change the behavior of SQL Server. |

DBCC CHECKDB

The most common DBCC is DBCC CHECKDB. This is a well-known command from SQL Server 6.5 and you had to run it more often to detect corruption in your database. Now Microsoft has added additional syntax to help you locate trouble in your database objects. DBCC CHECKDB is the same as running DBCC CHECKALLOC and DBCC CHECKTABLE on every table in the database. If there is a specific table that is reporting an error, you can run the other commands, but otherwise, always run DBCC CHECKDB. You can run the command with the following syntax:

DBCC CHECKDB ( 'database_name' [ , NOINDEX | { REPAIR_ALLOW_DATA_LOSS | REPAIR_FAST | REPAIR_REBUILD } ] [ WITH { [ ALL_ERRORMSGS ] [ , [ NO_INFOMSGS ] ] [ , [ TABLOCK ] ] [ , [ ESTIMATEONLY ] ] [ , [ PHYSICAL_ONLY ] ] } ] Parameters are not required to run the command. If you run DBCC CHECKDB without any of the above parameters, the command checks the consistency of the current database. The most important parameters in the syntax are those connected to repairs. To use any of the three repair options, your database must be in single user mode.

The repair parameters range from REPAIR_FAST, which repairs minor key problems in non-clustered indexes, to REPAIR_ALLOW_DATA_LOSS, which repairs major problems and allows SQL Server to drop corrupt text objects. In the middle is REPAIR_REBUILD, which provides all the features of REPAIR_FAST and also rebuilds problem indexes. When executing REPAIR_REBUILD and REPAIR_FAST, you do not risk data loss. You can run this command and repair minor problems with the following syntax:

ALTER Database Northwind Set SINGLE_USER WITH ROLLBACK IMMEDIATE DBCC CHECKDB (Northwind, REPAIR_FAST)

When executing DBCC CHECKDB on large, multi-gigabyte databases, the command could potentially take hours to execute and use large quantities of space in your TempDB database. Before you execute the command, you may want to consider running it with the ESTIMATEONLY parameter. This will show you how much space in the TempDB is going to be required to run the command. For example, the following command:

DBCC CHECKDB (Northwind, REPAIR_REBUILD) With ESTIMATEONLY

outputs the following result:

Estimated TEMPDB space needed for CHECKALLOC (KB) ------------------------------------------------- 14 (1 row(s) affected) Estimated TEMPDB space needed for CHECKTABLES (KB) -------------------------------------------------- 17 (1 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator.

You can run DBCC CHECKDB against every database on your system with the following syntax:

sp_MSforeachdb @command1 = "Print '?'", @command2 = "DBCC CHECKDB (?)"

You can also use the TABLOCK parameter to capture shared table locks during the execution. This speeds up the execution of the DBCC CHECKDB command.

To run it, use the following syntax:

DBCC CHECKDB (Northwind) with TABLOCK

Some DBCC commands, including DBCC CHECKDB, can dramatically impact performance during execution. To increase the performance of your DBCC commands, I offer the following suggestions:

-

Run during off-peak hours

-

Limit the amount of transactions during the execution

-

Place your database in single user mode

-

Place the TempDB on a separate drive and make sure there's enough space

-

Use the NO_INFOMSGS option to reduce the TempDB usage

-

Try not to run any I/O intensive processes on the server (stop unnecessary services if you can)

Of course, some 24/7 shops won't be able to implement some of these suggestions.

Corrupt Databases

It is rare that a database is marked as corrupt, but when that occurs you can use

the sp_resetstatus stored procedure to take the database out of the corrupt status. A database can be marked corrupt for many reasons. Generally, it happens as a result of one or more of the following conditions:

-

A database or log file is missing.

-

SQL Server may not have been able to restore the database in ample time.

-

A data page in the database could be corrupt.

Once you have evidence that a database is corrupt, it is critical that you attend to it immediately or the corruption may spread in the database to other pages. To fix this problem, perform the following steps:

-

Review the SQL Server and Windows NT error logs to see if you can find where the problem occurred. For example, a hard drive may be full.

-

Start SQL Server in single user mode.

-

Run sp_resetstatus with the @dbname parameter (for example, sp_resetstatus @dbname = 'pubs').

-

Restart SQL Server in single user mode.

-

If the database is still in suspect mode, set it again back to normal mode and attempt to dump the transactions of the suspect database with the following command:

DUMP TRANSACTION Northwind WITH NO_LOG

-

Restart the SQL Server again in single user mode and if the database comes up, perform detailed DBCC checks (CHECKDB, CHECKALLOC, and so on).

-

Run a few random queries to see if you experience any problems.

-

If no problems occur, stop and start SQL Server and open the database to production.

What if you start your SQL Server and it still shows your database as corrupt? At some point you may have to cut your losses and begin a database restore from the last known good backup in order to get the end-users up again. If that doesn't work, the next step is to place your database in emergency mode to copy the data out.

| Note | Keep in mind that even if you're able to successfully get the data out, it may be bad or corrupt data. |

To place your database in emergency mode, use the following command:

sp_configure 'allow updates', 1 RECONFIGURE WITH OVERRIDE GO UPDATE master..sysdatabases set status = -32768 WHERE name = 'pubs' GO SP_CONFIGURE 'allow updates', 0 RECONFIGURE WITH OVERRIDE

After running the command, the database appears in Enterprise Manager in Read-Only\Offline\Emergency Mode. While the database is in this mode, you can only read from it. If you try to update any values, you receive the following error:

Server: Msg 3908, Level 16, State 1, Line 1 Could not run BEGIN TRANSACTION in database 'pubs' because the database is in bypass recovery mode. The statement has been terminated.

| Caution | Placing your database in emergency mode is unsupported by Microsoft and potentially harmful to your database. |

After you have retrieved the data and backed up the new database, set the status back to normal and restart SQL Server in regular mode with the following command:

sp_configure 'allow updates', 1 RECONFIGURE WITH OVERRIDE GO UPDATE master..sysdatabases set status = 0 WHERE name = 'pubs' GO sp_configure 'allow updates', 0 RECONFIGURE WITH OVERRIDE

Table corruption also occurs, although rarely. When either table corruption or database corruption occurs, it is generally due to SQL Server suddenly stopping. When you discover the problem, first run DBCC CHECKTABLE to determine the extent of the problem. SQL Server will not let you drop corrupt tables because some allocation errors may have occurred. This means if you delete one table, you may be deleting data pages from other tables.

To solve the problem, perform either of these steps:

-

Restore from the last known good backup.

-

Copy the data and schema from the corrupt tables to a separate database. Rename the corrupt database to something different and the new database to the old database name.

Sometimes Microsoft Product Support can help correct some data allocation problems. They have some tools that allow you to edit the linkages in the tables. This is not supported, however, and they make you sign a waiver form before they perform this type of action.

DBCC SQLPERF

The DBCC SQLPERF command lets you find out how much space each of the logs on your server is using. (Only the logspace parameter is documented, but I discuss some additional parameters in this section.) The syntax for the logspace parameter is as follows:

DBCC SQLPERF(logspace)

The command outputs the following information:

Database Name Log Size (MB) Log Space Used (%) Status ------------- -------------- ------------------ ----------- master 3.99219 14.3469 0 msdb 3.99219 17.0132 0 model 1.0 12.7953 0 Northwind 0.9921875 33.120079 0 pubs 1.49215 4.26471 0 tempdb 10.9921 1.64216 0

Another parameter you can use is lrustats. This parameter outputs a fantastic report that shows you how many server hits are being cached versus those pulled from disk (cache hit ratio). Without knowing about this parameter, you would have to pull up System Monitor to view this type of information.

DBCC SQLPERF(lrustats)

outputs the following results:

Statistic Value -------------------------------- ------------------------ Cache Hit Ratio 99.791077 Cache Flushes 0.0 Free Page Scan (Avg) 0.0 Free Page Scan (Max) 0.0 Min Free Buffers 331.0 Cache Size 4362.0 Free Buffers 36.0 (7 row(s) affected)

Other parameters for this DBCC command are covered in Appendix A.

DBCC OPENTRAN

One of the deathblows to any database's performance is open transactions. Leaving transactions open is the number one problem I find with applications, and the problem creates a memory leak that is easily fixed. You can detect these open transactions with DBCC OPENTRAN. The command shows you the oldest open transaction, as well as lots of valuable information about the transaction.

You can execute the command without any parameters. You can pass the database name if you want to look at any transactions for a database that you're not connected to. If you run the command, and there is no transaction open, you receive the following message:

No active open transactions. DBCC execution completed. If DBCC printed error messages, contact your system administrator.

If there are transactions open, the oldest transaction is shown in results that look like the following:

Transaction information for database 'pubs'. Oldest active transaction: SPID (server process ID) : 54 UID (user ID) : 1 Name : user_transaction LSN : (4:385:1) Start time : Jan 4 2002 4:53:11:600PM DBCC execution completed. If DBCC printed error messages, contact your system administrator.

From these results, you can determine that SPID 54 has the transaction open. You can also see how long the transaction has been open. If someone has neglected to close his or her transaction, you can kill the connection with the following syntax:

KILL 54

| Tip | Notice that the transaction's name is included in this information. From this, you can determine which transaction in your application is causing the problem. Transaction names are not required when opening a transaction, but they are quite helpful because they help you debug your applications. |

DBCC USEROPTIONS

The DBCC USEROPTIONS command returns everything for which the user has issued a SET command (knowingly or unknowingly). For example, when you connect to SQL Server through Query Analyzer, it issues a series of SET commands based on the way you configured Query Analyzer in the Connection Properties tab. Most of the SET commands concern ANSI settings.

You can use the DBCC USEROPTIONS command when debugging users' problems on the phone. This provides a quick snapshot that a user can send you via e-mail. If the user has issued an incorrect SET command, for example, using incorrect ANSI defaults, that could affect the way data is displayed.

You can also use the results of this command for auditing purposes. To execute the command, use the following syntax:

DBCC USEROPTIONS

The output depends on what you have set, but the following is an example:

Set Option Value ---------------------------- ---------------- textsize 64512 language us_English dateformat mdy datefirst 7 quoted_identifier SET arithabort SET ansi_null_dflt_on SET ansi_defaults SET implicit_transactions SET cursor_close_on_commit SET ansi_warnings SET ansi_padding SET ansi_nulls SET concat_null_yields_null SET (14 row(s) affected) DBCC execution completed. If DBCC printed error messages, contact your system administrator.

System Functions

In this section, I discuss a number of useful functions that you can use in your applications. (Appendix A covers even more handy functions.)

Auditing Functions

SQL Server provides quite a few functions you can use to audit database activity. You can determine what account a user is connected with, what type of application is connecting, and what computer is used for the connection.

-

The app_name() function returns the application that's currently requesting data from your SQL Server.

-

The getdate() function returns the current date on the SQL Server.

-

The host_name() function determines which workstation is connecting to your SQL Server.

-

The system_user() function gives you the logon name of the connecting user.

For example, you can create an audit table as shown here:

CREATE Table Audit ( ApplicationNm varchar(30), InsertDt DateTime, ByWorkstation varchar(30), ByUser varchar(30))

Then you can create a trigger to insert into the table whenever a record is modified, such as this:

INSERT INTO Audit SELECT APP_NAME(), GETDATE(), HOST_NAME(), SYSTEM_USER

| Note | Placing auditing triggers on tables slows down query performance. You can use third-party programs like Log Explorer to view the transaction log of a database and rollback individual transactions. |

REPLACE

The replace() function is a great function based on its Visual Basic counterpart. It allows you to replace certain string values with data you supply. The basic syntax looks like this:

REPLACE(column or 'string value', 'old value', 'new value')

For example, you can replace the abbreviation of a state name with the full name in

a SELECT statement by using the following syntax:

SELECT REPLACE(Region, 'WA','Washington') As Region FROM CUSTOMERS

Position Functions

The position functions allow you to select pieces of data from a string or column. This is useful if you have a phone number that you receive as one string, and you want to break it into three separate fields. Use the left() function to select a certain number of characters beginning at the left, or use the right() function to select characters from the right. For example, you can use the left() function followed by the number of characters you want to select, as shown here:

SELECT LEFT('ABCDEFG',3) As Example This outputs the following result:

Example ------- ABC (1 row(s) affected)

Make sure that you alias the column as shown in this example. If you don't give the column an alias, the column does not have a name.

You can use the substring() function to select data from the middle of a string. Use the substring() function, followed by the starting character, and then the number of characters to select.

Combine the three functions to create three fields out of a phone number, as shown here:

SELECT LEFT('9022338372',3) as AreaCode, SUBSTRING('9022338372',4,3) as Prefix, RIGHT('9022338372',4) as Suffix This results in the following output:

AreaCode Prefix Suffix -------- ------ ------ 902 233 8372 (1 row(s) affected)

You can concatenate the strings to add dashes to a Social Security number or phone number. With the following syntax, you can change a phone number to the appropriate format:

SELECT '('+LEFT('9022338372',3) + ')'+ SUBSTRING('9022338372',4,3) + '-'+ RIGHT('9022338372',4) as PhoneNumber The result looks like this:

PhoneNumber ------------ (902)233-8372 (1 row(s) affected)

db_name()

One way of passing light metadata is to use the database name. (Metadata is data that describes your data.) For example, if your company is servicing several customers, you may have separate databases for each client, rather than one central database. As a matter of fact, most large customers will insist that they not share a database with other customers. For security, it's preferable to maintain separate client databases. In addition, clients are usually uncomfortable sharing a database with other clients (some of which could be competitors).

For example, suppose your database name is WIDGETC999, and suppose this database stores information about client 999, who has purchased WIDGETC. Your application could obtain this information for the database dynamically using the db_name() function. The db_name() function tells you which database you're currently connected to. This is useful when you need to perform either of the following chores:

-

Derive information about the client from the database, such as the client ID

-

Learn the database name in order to pass it to another application or DTS package

If you're trying to find the client number from database WIDGETC999, you could use the following command:

SELECT SUBSTRING(DB_NAME(),8,11)

This command returns 999. The catch, of course, is that this only works if your database names follow a standard.

Working with Identities

Identities are a way to automatically generate unique keys in a table. You can create an identity with the IDENTITY keyword. For example, you can create an Employees table with an artificial key on EmployeeID as shown here:

CREATE TABLE [Employees] ( [EmployeeID] [int] IDENTITY (1, 1) NOT NULL , [LastName] [nvarchar] (20) NOT NULL , [FirstName] [nvarchar] (10) NOT NULL)

In the parentheses after the IDENTITY statement, you can see the seed and increment. A seed is the number at which SQL Server starts counting, and the increment is the amount that the seed is incremented by.

Resetting the Seed

As you may already have experienced, you must often reset the seed of an identity column back to its original state. One method for achieving this is by using the TRUNCATE TABLE command. This is also the quickest way to delete all the records from a table and set the identity back to its original state:

TRUNCATE TABLE table1

| Caution | The TRUNCATE TABLE command is an unlogged event. Since it is not logged, it is much faster than running a DELETE statement, but it can also be more difficult to recover from. |

The TRUNCATE TABLE command only works on tables that don't have foreign keys. If you want to reset the seed of a table with foreign keys, you must first delete the records from the table, then run the DBCC CHECKIDENT command with the RESEED parameter. You must also specify what you would like to reseed to, as follows:

DELETE FROM table1 DBCC CHECKIDENT(table1, RESEED, 0)

This outputs results similar to the following:

Checking identity information: current identity value '30', current column value '0'. DBCC execution completed. If DBCC printed error messages, contact your system administrator.

| Caution | Keep in mind that if you use a 4-byte integer column for your identity column, the maximum positive number that column can hold is 2,147,483,647. This is almost never a limitation, but as years pass on a high-traffic system, you may be concerned with this. You also can use a bigint field. bigint fields are 8 bytes and can store values up to 9,223,372,036,854,775,807. If you reach the bigint limitation, business is very good! |

@@IDENTITY

Now that you've inserted into a table with an identity column, how do you detect the last inserted value? You may have to do this if you're inserting into a series of tables that all relate to each other. The @@IDENTITY command lets you find the last identity value that your connection created. If there are two connections open, and both are creating identities, you don't risk collisions between connections with this function.

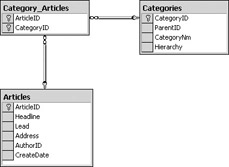

Let's take the simple scenario where an online publishing company pulls its articles from a SQL Server database. There are three tables: one holds the articles, another holds the categories, and the third resolves a many-to-many relationship between the two tables. In other words, any article can be in more than one category, and a category can have more than one article. Generally in this type of scenario, the Category table is updated rarely, but the Article table may be updated daily. The following script shows you the schema for the publishing company:

CREATE TABLE Articles ( [ArticleID] [int] IDENTITY (1, 1) NOT NULL , [Headline] [varchar] (50), [Lead] [varchar] (500), [Address] [varchar] (255), [AuthorID] [int] NOT NULL , [CreateDate] [datetime] NULL CONSTRAINT [DF_Articles_CreateDate] DEFAULT (getdate())) CREATE TABLE Categories ( [CategoryID] [int] IDENTITY (1, 1) NOT NULL , [ParentID] [int] NULL , [CategoryNm] [varchar] (30), [Hierarchy] [varchar] (15)) CREATE TABLE Category_Articles ( [ArticleID] [int] NOT NULL , [CategoryID] [int] NOT NULL)

For brevity's sake, I've left out the relationships and primary keys information since it's not important for our example. As you can see, I've also left out the Authors table, which AuthorID in the Articles table would relate to. The abbreviated database diagram for our publisher appears in Figure 6-3.

Figure 6-3: Simple online publisher's database schema

A common issue you face when trying to insert into a schema like this is that the stored procedure to insert into the Categories table may also be the same procedure to insert into the Category_Articles table. The code generally inserts into the Articles table, and then must assign categories to the article in the same transaction. You don't want to have to SELECT(MAX) to find the last inserted identity column because that causes unnecessary I/O. You also risk reading a record that someone just inserted before you can read the table. Instead, you can use the @@IDENTITY command.

Now that the tables are created, you can insert into the Articles table as

shown here:

INSERT INTO Articles (Headline, Lead, Address, AuthorID) VALUES ('Test Headline 1', 'This must see article will...', 'http://www.sqlservercentral.com', 1) To select the value of that identity column that you just inserted (ArticleID), you can use the following syntax:

SELECT @@IDENTITY as AuthorID

This returns:

AuthorID ---------------------------------------- 1 (1 row(s) affected)

You can then insert into the Category_Articles table by using the @@IDENTITY command, followed by the category name you want to associate the new article to. The complete syntax is here:

INSERT INTO Category_Articles (ArticleID, CategoryID) SELECT @@IDENTITY, 1

| Tip | I like to place the @@IDENTITY command into a parameter and then reuse it multiple times. |

| Note | If you execute the @@IDENTITY command from a different connection, the command |

scope_identity()

The scope_identity() function returns the last identity value inserted in the same scope (a scope is essentially a unit of work). For example, you have two scopes if you have a trigger established on TableA that, when executed, inserts into TableB. The @@IDENTITY command returns the identity for TableB if it too has an identity column, since it was the last table to be inserted into.

Instead, you may want scope_identity(), which returns the value of the last identity inserted into TableA. The following syntax shows you how to perform this type of action:

INSERT INTO Category_Articles (ArticleID, CategoryID) SELECT SCOPE_IDENTITY(), 1

| Note | The second your scope is destroyed by executing a new batch, the scope_identity() function is purged. |

ident_current()

The @@IDENTITY command helps you solve one problem, but the ident_current() function is a much more graceful solution to solve the same problem. The ident_current() function offers all the same features as the @@IDENTITY command with an added twist. The ident_current() function also allows you to specify a table name. It then determines the last identity inserted into the given table. For example, the following syntax returns the last identity value inserted into your Articles table:

SELECT IDENT_CURRENT('Articles') You could wrap this into the previous INSERT statement like this:

INSERT INTO Category_Articles (ArticleID, CategoryID) SELECT IDENT_CURRENT('Articles'), 1 | Caution | Unlike the other identity functions, ident_current() is not limited to the scope of the current connection. When you issue the command, it returns the last identity, whether your connection or another inserted it. This has its benefits, but could lead to collisions of data if you're not careful. If the SQL Server is stopped and your connection is reestablished, this command will still return the correct value. |

Extended Properties

Extended properties allow you to tie metadata to many database objects. Extended properties are a new feature in SQL Server 2000 (although the feature has existed in Access for a long time). You can use extended properties for any of the following tasks:

-

Self-document your data model

-

Place standardized captions on each field in your application

-

Automatically generate forms on a Web page

-

Tell the application how large the field display should be

Extended properties are stored as sql_variants and can be up to 7,500 characters in length. Since the data is stored as a sql_variant, you can essentially store any type of data in the field. This data is stored in the sysproperties system table in each database.

Perhaps the most useful application of extended properties is to create a standard screen that is automatically painted. Your applications can query the extended properties to determine how to paint the screen, and how the information is displayed to the user. Extended properties create a common interface that all of your applications can use to standardize their presentation layer. If you change the properties, all the applications automatically change the presentation.

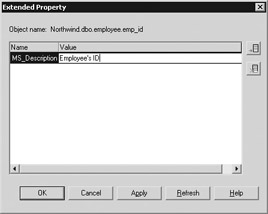

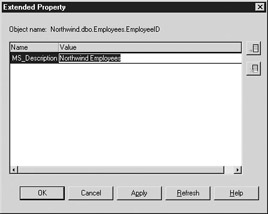

What's nice about extended properties is that they're open-ended. You can call your properties anything you want, and use them in ADO or in ad hoc queries. The primary way to access extended properties is through T-SQL or the standard SQL Server GUI tools. If you want to access extended properties through the GUI tools, one way to do so is to use Enterprise Manager. In design mode, you have the new option to add a description, as shown in Figure 6-4.

Figure 6-4: Setting a table-level extended property

In Query Analyzer, you can right-click almost any object in the Object Browser, and select Extended Properties to create, delete, or modify extended properties, as shown in Figure 6-5. The name is a user-defined name for the property. The value is what is assigned to that name for that database object. There can only be one item of the same name per database object.

Figure 6-5: Modifying an extended property in Query Analyzer

| Note | Anything that's added as a description through the Enterprise Manager's Design Table screen shows up with the name MS_Description in Query Analyzer, or when you select it through a function. Figure 6-5 shows the same extended property that's set in Figure 6-4. |

Now that you know how to set these properties in the GUI, let's learn how to set them in T-SQL. You can add an extended property by using the sp_addextendedproperty system stored procedure. The stored procedure depends on object levels. An object level tells SQL Server what type of object you're assigning the property.

Table 6-2 shows the various levels for extended properties and the valid values. Levels are hierarchical in nature, and when you add a property you must specify that level and also specify any levels that are higher. For example, if you add a property to a level 1 object, you must also specify the level 0 type. (Remember that 0 is the highest level.)

| Level 0 | Level 1 | Level 2 |

|---|---|---|

| User, Type, NULL | Table, Procedure, View, Function, Default, Rule, and NULL | Column, Parameter, Trigger, Index, |

You can use sp_addextendedproperty to add a property using the following syntax:

sp_addextendedproperty [ @name = ] { 'extended property name' } [ , [ @value = ] { 'property value' } [ , [ @level0type = ] { 'level 0 object type' } , [ @level0name = ] { 'level 0 object name' } [ , [ @level1type = ] { 'level 1 object type' } , [ @level1name = ] { 'level 1 object name' } [ , [ @level2type = ] { 'level 2 object type' } , [ @level2name = ] { 'level 2 object name' }] ] ] ] For example, to add an extended property on the Articles table that I used in the earlier example, use the following syntax:

sp_addextendedproperty 'caption', 'View Articles', 'user', dbo, 'table', 'Articles'

A table is an example of a level 1 property. It includes the owner (dbo) and the table name (Articles). This type of caption property could be used to generate a View Articles button on a Web page. If a property called 'caption' already exists on the specified object, you receive the following error:

Server: Msg 15233, Level 16, State 1, Procedure sp_addextendedproperty, Line 48 Property cannot be added. Property 'caption' already exists for 'object specified'.

You can add a level 2 property with this syntax:

sp_addextendedproperty 'caption', 'Headline', 'user', dbo, 'table', 'Articles', 'column', Headline

This generates a caption property on a column named Headline in the

Articles table. This is useful when you're creating a management system or

editing a screen for an online form. To delete the property on any level, use the sp_dropextendedproperty stored procedure. The procedure uses the same syntax

as the sp_addextendedproperty stored procedure. To drop the property on the Articles table, use this syntax:

sp_dropextendedproperty 'caption', 'user', dbo, 'table', 'Articles'

These properties won't do you a bit of good until you can select them. To retrieve the properties on a table through T-SQL, use the fn_listextendedproperty() function, with the following syntax:

FN_LISTEXTENDEDPROPERTY ( { default | [ @name = ] 'property name' | NULL } , { default | [ @level0type = ] 'level 0 object type' | NULL } , { default | [ @level0name = ] 'level 0 object name' | NULL } , { default | [ @level1type = ] 'level 1 object type' | NULL } , { default | [ @level1name = ] 'level 1 object name' | NULL } , { default | [ @level2type = ] 'level 2 object type' | NULL } , { default | [ @level2name = ] 'level 2 object name' | NULL } ) For example, I can retrieve the properties I created earlier with this syntax:

SELECT * FROM ::FN_LISTEXTENDEDPROPERTY ('caption', 'user', 'dbo', 'table', 'articles', 'column', 'headline') The code outputs the following response:

objtype objname name value -------------- ------------ --------- ------------------ COLUMN Headline caption Current Headlines (1 row(s) affected)

You can also return all the properties for the table by specifying a level 2 value of 'default.' This syntax returns all the caption properties for that table:

SELECT * FROM ::FN_LISTEXTENDEDPROPERTY ('caption', 'user', 'dbo', 'table', 'articles', 'column', default) You could replace the word ‘caption' in the above syntax with the word default (without quotes) to return all column properties for the table. Extended properties are still in their infancy. Expect bigger and better things from them in future releases.

| Tip | The Generate SQL Script screen has been enhanced to script extended properties. You can access the feature by right-clicking a database object (such as a table in Enterprise Manager) and selecting All Tasks | Generate SQL Script. The option to include extended properties is on the Formatting tab. |

|

|