Chapter 7: Associated Quality Concerns

Some issues, while of concern to software quality practitioners, are usually outside of their direct responsibility and authority. These issues have no less impact on the quality of the software system, however. This chapter discusses four important software quality issues. The role of the software quality practitioner with respect to these issues is to ensure that decision-making, action-capable management is aware of their importance and impact on the software system during its development and after its implementation. These important issues are security, education of developers and users, management of vendors, and maintenance of the software after implementation.

7.1 Security

Security is an issue that is frequently overlooked until it has been breached, either in the loss of, or damage to, critical data or in a loss to the data center itself.

Security has three main aspects. Two of these deal primarily with data: the security of the database and the security of data being transmitted to other data centers. The third aspect is that of the data center itself and the protection of the resources contained therein.

The software quality practitioner has a responsibility not to protect the data or the data center but to make management aware of the need for security provisions and of any inadequacies in them.

7.1.1 Database security

The software quality data security concern is that the data being used by the software are protected. Database security is twofold: the data being processed must be correct and, in many cases, restricted in their dissemination. Many things impact the quality of the output from the software, not the least of which is the quality of the data that the software is processing. The quality of the data depends on several things: the correctness of the data to be input, the correctness of the inputting process, the correctness of the processing, and, of interest to security, the safety of the data from modification before or after processing. This last item is a database security issue.

This modification can be in the form of inadvertent change by an incorrectly operating hardware or software system outside the system under consideration. It can be caused by something as simple to detect as the mounting of the wrong tape or disk pack, or as difficult to trace as faulty client-server communication. From a security point of view, it can also be the result of intentional tampering, known as hacking or attacking. Anything from the disgruntled employee who passes a magnet over the edges of a tape to scramble the stored images to the attacker who finds his or her way into the system and knowingly or unknowingly changes the database can be a threat to the quality of the software system output. Large distributed computing installations are often victims of the complexity of the data storage and access activities. While quality practitioners are not usually responsible for the design or implementation of the database system, they should be aware of increasing security concerns as systems become more widely dispersed or complex.

The physical destruction of data falls into the area of data center security that will be discussed later. Modification of data while they are part of the system is the concern of data security provisions.

Database security is generally imposed through the use of various password and access restriction techniques. Most commonly, a specific password is assigned to each individual who has access to the software system. When the person attempts to use the system, the system asks for identification in the form of the password. If the user can provide the correct password, then he or she is allowed to use the system. A record of the access is usually kept by a transaction recording routine so that if untoward results are encountered, they can be backed out by reversing the actions taken. Further, if there is damage to the data, it can be traced to the perpetrator by means of the password that was used.
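The sign-on check and the access record described above can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular product: the names `register`, `sign_on`, and `access_log` are hypothetical, the in-memory dictionaries stand in for protected storage, and storing a salted hash rather than the password itself is an assumption added here as common practice.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone

# Hypothetical in-memory stores; a real system would use protected storage.
_users = {}        # user id -> (salt, password hash)
access_log = []    # one record per sign-on attempt


def _hash(password: str, salt: bytes) -> bytes:
    # Salted, iterated hash so the stored value does not reveal the password.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def register(user_id: str, password: str) -> None:
    """Assign a password to one individual."""
    salt = os.urandom(16)
    _users[user_id] = (salt, _hash(password, salt))


def sign_on(user_id: str, password: str) -> bool:
    """Check the password and record the attempt, successful or not."""
    entry = _users.get(user_id)
    granted = False
    if entry is not None:
        salt, stored = entry
        # Constant-time comparison of the stored and the computed hash.
        granted = hmac.compare_digest(stored, _hash(password, salt))
    access_log.append({
        "user": user_id,
        "time": datetime.now(timezone.utc).isoformat(),
        "granted": granted,
    })
    return granted
```

Because every attempt is appended to `access_log`, a later investigation can see which identity was used and when, which is the tracing role the text assigns to the transaction record.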

This scheme works only up to a point. If care is not taken, passwords can be used by unauthorized persons to gain access to the system. For this reason, many systems now use multiple levels of password protection. One password may let the user access the system as a whole, while another is needed to access the database. Further restrictions on who can read the data in the database and who can add to it or change it are often invoked. Selective protection of the data is also used. Understanding databases and the logical and physical data models used will help the quality practitioner recommend effective security methods.
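The separation of who may read the data from who may add to or change it can be illustrated with a small sketch. The class `ProtectedTable` and the permission names are hypothetical and chosen only for this example; a real database would enforce such rules through its own access control facilities.

```python
from enum import Flag, auto


class Permission(Flag):
    NONE = 0
    READ = auto()
    ADD = auto()
    CHANGE = auto()


class ProtectedTable:
    """Hypothetical table that checks a user's permissions on every operation."""

    def __init__(self):
        self._rows = {}
        self._grants = {}   # user id -> Permission

    def grant(self, user_id, permission):
        self._grants[user_id] = permission

    def _require(self, user_id, needed):
        # Users with no recorded grant hold no permissions at all.
        held = self._grants.get(user_id, Permission.NONE)
        if needed not in held:
            raise PermissionError(f"{user_id} lacks {needed.name}")

    def read(self, user_id, key):
        self._require(user_id, Permission.READ)
        return self._rows[key]

    def add(self, user_id, key, value):
        self._require(user_id, Permission.ADD)
        if key in self._rows:
            raise KeyError(f"{key} already exists")
        self._rows[key] = value

    def change(self, user_id, key, value):
        self._require(user_id, Permission.CHANGE)
        if key not in self._rows:
            raise KeyError(key)
        self._rows[key] = value
```

A user granted `Permission.READ | Permission.ADD` can read and insert records, but a call to `change` raises `PermissionError`, so existing data stay protected even from users who have legitimately signed on.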

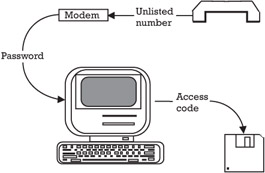

One typical system of data protection is shown in Figure 7.1. The first control is the unlisted telephone number that accesses the computer. A user who has the telephone number and reaches the computer must then identify himself or herself to the computer to get access to any system at all. Having passed this hurdle and selected a system to use, the user must pass another identification test to get the specific system to permit any activity. In Figure 7.1, the primary system uses a remote subsystem that is also password protected. Finally, the database at this point is in a read-only mode. To change or manipulate the data, special password characteristics would have to have been present during the three sign-on procedures. In this way, better than average control has been exercised over who can use the software and manipulate the data.

Figure 7.1: Dialup protection.

Another concern of database security is the dissemination of the data in the database or the output. Whether or not someone intends to harm the data, there are, in most companies, data that are sensitive to the operation or competitive tactics of the company. If those data can be accessed by a competitor, valuable business interests could be damaged. For this reason, as well as to preserve the validity of the data, all database accesses should be candidates for protection.

7.1.2 Teleprocessing security

The data within the corporate or intercompany telecommunications network are also a security concern.

Data contained within the database are vulnerable to unauthorized access, but not to the same extent as data transmitted through a data network. The uses of these networks range from simple, remotely actuated processing tasks all the way to interbank transfers of vast sums of money. As e-commerce becomes common, personal data are increasingly at risk as well. Much effort is being expended to create secure sites for on-line transactions. Simple password schemes are rarely satisfactory in these cases. To be sure, they are necessary as a starting point, but much more protection is needed as the value of the data being transmitted increases.

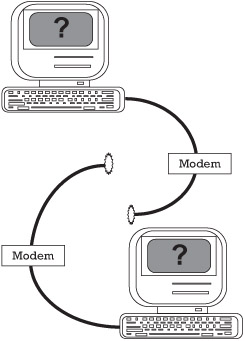

Two concerns are of importance in regard to telecommunications. The first concern, usually outside the control of the company, is the quality of the transmission medium. Data can be lost or jumbled simply because the carrier is noisy or breaks down, as is depicted in Figure 7.2. Defenses against this type of threat include parity checking or checksum calculations, with retransmission if the data and the parity or checksums do not agree. Other more elaborate data validity algorithms are available for more critical or sensitive data transmissions.

Figure 7.2: Interruption.
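The checksum-and-retransmit defense against a noisy carrier can be sketched as follows. CRC-32 from Python's standard `zlib` module is used here as one possible checksum; the text does not prescribe a particular algorithm. The `channel` argument is a hypothetical stand-in for the transmission medium: any callable that takes bytes and returns the bytes that arrived.

```python
import zlib


def frame(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 checksum to the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def unframe(data: bytes):
    """Return the payload if its checksum matches, otherwise None."""
    if len(data) < 4:
        return None
    payload, received = data[:-4], data[-4:]
    if zlib.crc32(payload).to_bytes(4, "big") != received:
        return None
    return payload


def send_with_retry(payload: bytes, channel, max_attempts: int = 3):
    """Send through `channel` and retransmit until the checksum test passes.

    Returns the payload as received and the number of attempts used.
    """
    for attempt in range(1, max_attempts + 1):
        received = unframe(channel(frame(payload)))
        if received is not None:
            return received, attempt
    raise IOError(f"no clean copy after {max_attempts} attempts")
```

A check of this kind guards against accidental damage only. Anyone who alters the data deliberately can recompute the checksum as well, which is why transmission security needs the further measures discussed next.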

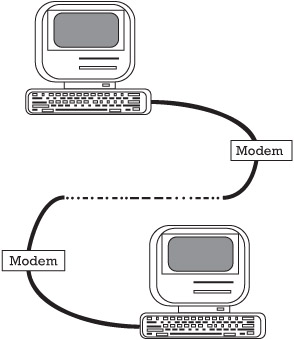

Unauthorized access to the data is the other main concern of transmission security. As data are being transmitted, they can be monitored, modified, or even redirected from their original destination to some other location. Care must be taken to ensure that the data transmitted get to their destination correctly and without outside eavesdropping. The methods used to prevent unauthorized data access usually involve encryption (see Figure 7.3) to protect the data and end-to-end protocols that make sure the data get to their intended destination.

Figure 7.3: Encryption.

Encryption can be performed by the transmission system software or by hardware specially designed for this purpose. Industries in the defense arena use highly sophisticated cryptographic equipment, while other companies need only a basic encryption algorithm for their transmissions.

As in the case of prevention of loss due to faulty network media, checksums, parity checking, and other data validity methods are employed to try to assure that the data have not been damaged or tampered with during transmission.
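Where deliberate tampering is the concern, one commonly used data validity method is a keyed message authentication code. The sketch below uses HMAC-SHA256 from Python's standard library; this specific technique is not named in the text and is offered only as an example. It assumes that sender and receiver already share a secret `key`, and the function names `seal` and `open_sealed` are hypothetical.

```python
import hashlib
import hmac

TAG_LENGTH = 32  # size of an HMAC-SHA256 tag in bytes


def seal(message: bytes, key: bytes) -> bytes:
    """Append an HMAC-SHA256 tag computed with the shared key."""
    return message + hmac.new(key, message, hashlib.sha256).digest()


def open_sealed(data: bytes, key: bytes) -> bytes:
    """Return the message if the tag verifies; raise ValueError otherwise."""
    if len(data) < TAG_LENGTH:
        raise ValueError("data too short to contain a tag")
    message, tag = data[:-TAG_LENGTH], data[-TAG_LENGTH:]
    expected = hmac.new(key, message, hashlib.sha256).digest()
    # Constant-time comparison of the received and the expected tag.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message was damaged or altered in transit")
    return message
```

Unlike a plain checksum, the tag cannot be recomputed by someone who does not hold the key, so an altered message is rejected on arrival. The message itself is not hidden; concealment is the job of encryption.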

Prevention of the diversion of data from the intended destination to an alternative is controlled through end-to-end protocols that keep both ends of the transmission aware of the activity. Should the destination end not receive the data it is expecting, the sending end is notified and transmission is terminated until the interference is identified and counteracted.
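The idea of keeping both ends aware of the activity can be shown with a simplified acknowledgement scheme. This is an illustrative sketch rather than any standard protocol: each message carries a sequence number, the receiver confirms each one, and the sender stops as soon as a confirmation is missing. The names `Receiver`, `transmit`, and `link` are hypothetical.

```python
class TransmissionHalted(Exception):
    """Raised when the destination does not confirm receipt."""


class Receiver:
    def __init__(self):
        self.expected_seq = 0
        self.received = []

    def deliver(self, seq: int, payload: bytes):
        """Accept the next expected message and acknowledge it.

        Returns the sequence number as the acknowledgement, or None if the
        message is out of order.
        """
        if seq != self.expected_seq:
            return None
        self.received.append(payload)
        self.expected_seq += 1
        return seq


def transmit(messages, link):
    """Send each message over `link`, a callable (seq, payload) -> ack or None.

    Transmission stops at the first message that is not acknowledged.
    """
    for seq, payload in enumerate(messages):
        ack = link(seq, payload)
        if ack != seq:
            raise TransmissionHalted(f"no acknowledgement for message {seq}")
    return len(messages)
```

If a message is diverted so that the real receiver never sees it, no matching acknowledgement comes back and `transmit` raises `TransmissionHalted` instead of continuing to send data into an unknown destination.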

The proliferation of the use of the Internet and Web sites has immensely complicated and exacerbated these problems. It is the responsibility of software quality to monitor the data security provisions and keep management informed as to their adequacy.

7.1.3 Viruses

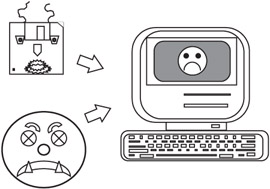

A frequent entry into the threat scenario is the computer virus. A virus is software that is attached to a legitimate application or data set. It then can commit relatively benign, nuisance acts like blanking a screen or printing a message announcing its presence. It also can be intended, like some more recent viruses, to be malignant, in that it intentionally destroys software or data. Some can even erase a full hard disk.

Viruses are usually introduced by being attached to software that, often in violation of copyright laws, is shared among users. Downloading software from a bulletin board is one of the more frequent virus infection methods. Data disks used on an infected system may carry the virus back to an otherwise healthy system, as shown in Figure 7.4.

Figure 7.4: Virus introduction.

Some viruses do not act immediately. They can be programmed to wait for a specific date, like the famous Michelangelo virus, or, perhaps, some specific processing action. At the preprogrammed time, the virus activates the mischief or damage it is intended to inflict.

Many antiviral packages are available. Unfortunately, the antiviral vaccines can only fight those viruses that have been identified. New viruses do not yet have vaccines and can cause damage before the antiviral software is available.

The best defense against viruses, although not altogether foolproof, is to use only software fresh from the publisher or vendor. Pirated software—software that has not been properly acquired—is a common source of infection.

7.1.4 Disaster recovery

A specific plan of recovery should be developed and tested to ensure continued operation in the case of damage to the data center.

Even the best risk analysis, prevention, detection, and correction are not always enough to avoid damage that can prevent the data center from operating for some period of time.

Many companies are now so dependent on their data processing facility that even a shutdown of a few days could be life-threatening to the organization. Yet, the majority of companies have done little or no effective planning for the eventuality of major damage to their data center. Sometimes a company will enter into a mutual assistance agreement with a neighboring data center. Each agrees to perform emergency processing for the other in the event of a disaster to one of them. What they often fail to recognize is that each of them is already processing at or near the capacity of their own data center and has no time or resources to spare for the other's needs. Another fault with this approach is that the two companies are often in close physical proximity. This makes assistance more convenient from a travel and logistics viewpoint if, in fact, they do have sufficient reserve capacity to assist in the data processing. However, if the disaster suffered by one of them was the result of a storm, serious accident, an act of war, or a terrorist attack, the odds are high that the backup center has also suffered significant damage. Now both of them are without alternate facilities.

One answer to these threats is the remote, alternative processing site. A major company may provide its own site, which it keeps in reserve for an emergency. The reserve site is normally used for interruptible processing that can be set aside in the case of a disaster. However, because a reserve site is an expensive proposition, many companies enroll in disaster recovery backup cooperatives. These cooperatives provide facilities of varying resources at which a member company can perform emergency processing until its own center is repaired or rebuilt.

To augment this backup processing center approach, two conditions are necessary. The first condition, usually in place for other reasons, is the remote storage of critical data in some location away from the data center proper. That way, if something happens to a current processing run or the current database, a backup set of files is available from which the current situation can be reconstructed. The backup files should be generated no less frequently than daily, and the place in which they are stored should be well protected from damage and unauthorized access as part of the overall data security scheme.
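A quality practitioner monitoring the daily-backup requirement might use a check along the following lines. It is only a sketch: the function name `stale_backups` is hypothetical, and it assumes that the time of the most recent backup of each data set is available from some backup catalog, with `None` meaning that no backup exists.

```python
from datetime import datetime, timedelta


def stale_backups(last_backup_times, now, max_age=timedelta(days=1)):
    """Return the names of data sets whose latest backup is missing or
    older than max_age, in alphabetical order."""
    stale = []
    for name in sorted(last_backup_times):
        taken_at = last_backup_times[name]
        if taken_at is None or now - taken_at > max_age:
            stale.append(name)
    return stale
```

An empty result means every data set meets the stated frequency; anything else is a finding to report to management.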

The second necessity in a disaster recovery program is a comprehensive set of tests of the procedures that will enable the emergency processing to commence at the remote backup site. All aspects of the plan, from the original notification of the proper company authorities that a disaster has occurred through the actual implementation of the emergency processing software systems at the backup site, should be rehearsed on a regular basis. Most backup cooperatives provide each member installation with a certain number of test hours each year. These need to be augmented by a series of tests of the preparations leading up to the move to the backup site. Notification procedures, access to the backup files, transportation to the backup site, security for the damaged data center to prevent further damage, provision for the acquisition of new or repaired data processing equipment both for the backup site and the damaged data center, provisions for telecommunications if required, and other types of preparations should be thoroughly documented and tested along with the actual operation at the backup site.

Software quality practitioners are natural conductors for these tests, since they must ensure that the disaster recovery plan is in place and that the emergency processing results are correct. Software quality is the reporting agency to management on the status of disaster recovery provisions.

7.1.5 Security wrap-up

Security has three main aspects: the database, data transmission, and the physical data center itself. Most companies could not last long without the data being processed. Should the data become corrupted, lost, or known outside the company, much commercial harm could result.

The failure of the data center, no matter what the cause, can also have a great negative impact on the organization's viability. A disaster recovery plan should be developed and tested by the organization, with the software quality practitioner monitoring the plans for completeness and feasibility.

As systems increase in size and complexity and as companies rely more and more on their software systems, the security aspects of quality become more important. The software quality practitioner is responsible for making management aware of the need for security procedures.
