Workload Manager and Serviceguard Example Scenario

This scenario discusses the use of WLM in a Serviceguard cluster. The cluster has two nodes, each of which is an nPartition residing in a different complex. The first nPartition, rex04, is primarily a testing nPartition, but it also serves as the failover node for a Serviceguard package. The second nPartition, zoo16, is a production nPartition and is the preferred node for running the Serviceguard package. Table 15-2 provides an overview of the configuration of the two nPartitions. The Serviceguard cluster hosts a single Serviceguard package, a Tomcat application server. The primary node for the Tomcat package is zoo16; rex04 serves as a failover node (also referred to as an adoptive node) and runs the package only when the zoo16 nPartition is down or otherwise unavailable.

When the Tomcat package is running on the rex04 nPartition, two unlicensed Temporary Instant Capacity CPUs can be activated as needed to ensure that the Tomcat application has the resources it requires. The unlicensed CPUs are therefore inactive most of the time; they are activated only when the Tomcat package fails over to the rex04 nPartition and the application is busy enough to warrant activating them. The result is a highly available application that does not require redundant, idle hardware. This scenario describes how to configure WLM to monitor the Tomcat Serviceguard package on rex04. When the package is active on the node and the application's CPU utilization rises above 75%, WLM will automatically activate unlicensed Temporary Instant Capacity CPUs. When the workload migrates back to zoo16 or its utilization drops below 50%, WLM will deactivate the unlicensed CPUs. The process of configuring the Serviceguard cluster used in this scenario is described in Chapter 16, "Serviceguard."

Overview of WLM and Serviceguard Scenario

This example scenario consists of the following steps, each of which is discussed in the remainder of the scenario.

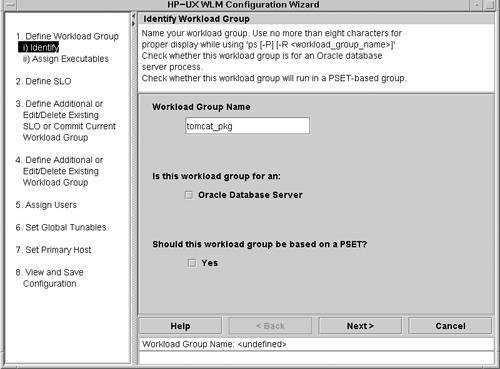

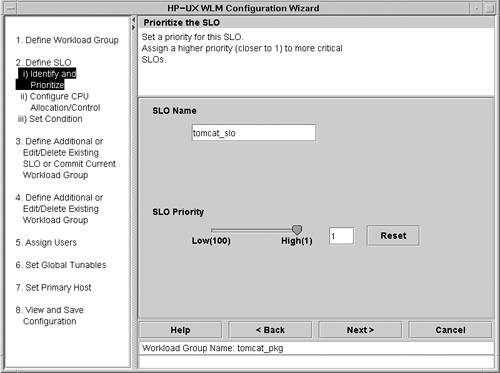

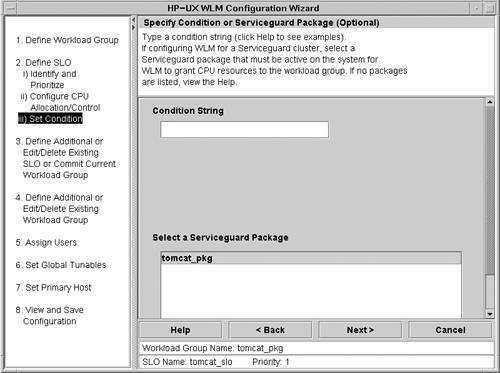

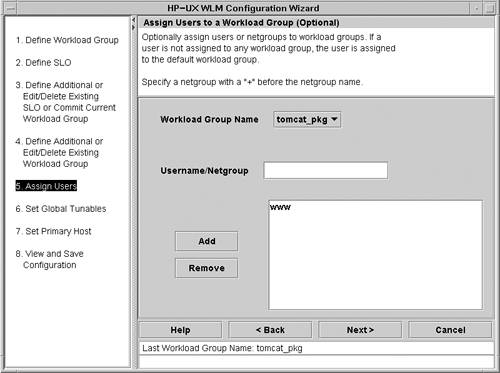

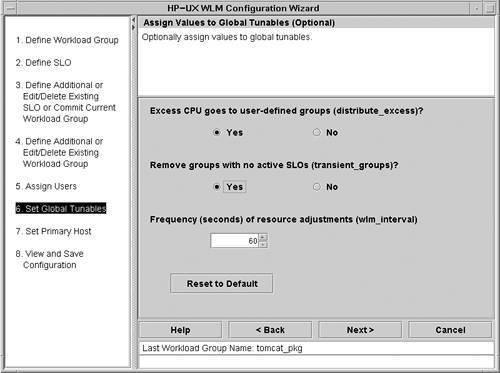

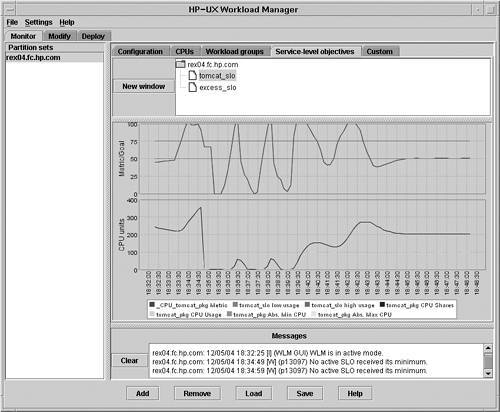

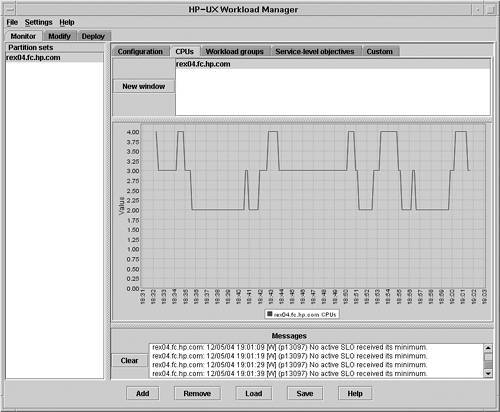

Configuring Workload Manager

WLM is not configured on zoo16. When the Tomcat workload is running on the primary host, the entire nPartition is dedicated to the Tomcat server, so there is no need to configure WLM there. The WLM configuration described in this section takes place on rex04. Because the rex04 nPartition runs several testing workloads, WLM must be configured so that, when the Tomcat package is activated on this testing nPartition, it receives the resources necessary to meet its SLO.

Workload Manager will be configured using the graphical configuration wizard. The wizard does not currently support configuration of Temporary Instant Capacity, so the basics of the workload configuration will be specified with the wizard and the Temporary Instant Capacity parameters will be added to the configuration file manually.

Note: HP recommends that you configure the Serviceguard packages on the node before you configure WLM. This enables WLM to discover the Serviceguard packages automatically.

The screen shown in Figure 15-19 is the identification page for the workload group. In this scenario, the workload group is named tomcat_pkg. This workload is not an Oracle database server and will not be based on a PSET, so those options are not selected.

Figure 15-19. Workload Manager Wizard: Specify Workload Group Name

The next screen of the configuration wizard allows the executable path to be specified. The path to the Tomcat application server is difficult to specify because the long-running process is a Java process; identifying Tomcat would require examining the arguments passed to each Java Virtual Machine. Instead of identifying Tomcat by its executable location, WLM will identify the workload by user, because a dedicated user (www) always runs the application.
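Because the workload is identified by its owner rather than its executable path, group membership depends only on who runs a process. The following Python sketch is a simplified, hypothetical model of a user record such as `www : tomcat_pkg`; the names `Process` and `assign_groups` are illustrative and are not part of WLM or PRM. Owners without a record fall into the OTHERS group.

```python
from dataclasses import dataclass

# Simplified model: processes whose owner has no user record land here.
DEFAULT_GROUP = "OTHERS"


@dataclass(frozen=True)
class Process:
    pid: int
    owner: str
    command: str


def assign_groups(processes, user_records):
    """Return {pid: workload group}, chosen solely by the process owner.

    Hypothetical illustration of user-based placement: the command line
    (process.command) is deliberately ignored.
    """
    return {p.pid: user_records.get(p.owner, DEFAULT_GROUP) for p in processes}


if __name__ == "__main__":
    records = {"www": "tomcat_pkg"}  # mirrors the `www : tomcat_pkg;` record
    procs = [
        Process(1201, "www", "java org.apache.catalina.startup.Bootstrap start"),
        Process(1305, "root", "java -jar test-harness.jar"),
        Process(1410, "www", "tar cf /tmp/www-home.tar /home/www"),
    ]
    for pid, group in sorted(assign_groups(procs, records).items()):
        print(pid, group)
```

In this model the tar job started by www is placed in tomcat_pkg alongside Tomcat itself, which is why unrelated work should not be run as the www user.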
Configuration of the user is shown in a subsequent screen. After the executable path screen, the CPU allocation screen is displayed. This is the same screen shown during the vPar WLM scenario in Figure 15-17. As in the vPar scenario, the non-metric-based CPU usage policy will be used for the tomcat_pkg workload. When the tomcat_pkg workload is consuming more than 75% of its entitled CPU resources, WLM will allocate additional resources to the workload. When its consumption falls below 50% of its entitlement, WLM will release resources.

The screen shown in Figure 15-20 contains the configuration of the SLO for the tomcat_pkg workload. The SLO is named tomcat_slo and its priority is 1, making tomcat_pkg the highest-priority workload on the rex04 testing nPar.

Figure 15-20. Workload Manager Wizard: Configure SLO

The next screen, shown in Figure 15-21, illustrates Serviceguard integration with WLM. The Serviceguard package is named tomcat_pkg. In this case, the WLM workload group and the Serviceguard package have the same name; however, there is no requirement that the two names match. WLM displays the packages configured to run on this system in the Select a Serviceguard Package list. Because this workload is a Serviceguard package, the package is selected. This causes the wizard to create a condition for the tomcat_slo so that the SLO is inactive whenever the package is not running on this system. As a result, no resources are reserved for a workload that runs on another system the vast majority of the time.

Figure 15-21. Workload Manager Wizard: Select Serviceguard Package

Next, the WLM wizard displays a screen for specifying the CPU limits. This screen is similar to the one shown in Figure 15-8. The CPU limits for this workload should be set to a minimum of one and a maximum of four.
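The usage policy and CPU limits configured above can be illustrated with a small Python sketch. It is a hypothetical model, not WLM's actual controller: it assumes allocations are whole CPUs and that each interval moves the request by at most one CPU. The 50% and 75% bounds and the limits of one and four CPUs come from this scenario; `next_request` is an illustrative name.

```python
LOW_BOUND = 0.50   # below this utilization, release a CPU
HIGH_BOUND = 0.75  # above this utilization, request another CPU


def next_request(used_cpus: float, allocated_cpus: int,
                 min_cpus: int = 1, max_cpus: int = 4) -> int:
    """Return the CPU count the workload would request for the next interval.

    Hypothetical sketch: one-CPU steps are an assumption, not WLM behavior.
    """
    if allocated_cpus <= 0:
        raise ValueError("allocated_cpus must be positive")
    utilization = used_cpus / allocated_cpus
    if utilization > HIGH_BOUND:
        target = allocated_cpus + 1
    elif utilization < LOW_BOUND:
        target = allocated_cpus - 1
    else:
        target = allocated_cpus  # inside the 50%-75% band: no change
    # The CPU limits from the wizard clamp every request.
    return max(min_cpus, min(max_cpus, target))
```

For example, a workload using 1.8 of 2 allocated CPUs (90%) would request a third CPU, one using 1.2 of 2 (60%) would stay at two, and one using 3.9 of 4 would remain capped at the maximum of four.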
This setting allows the tomcat_pkg workload to consume up to four CPUs. When more than two CPUs are active, Temporary Instant Capacity is consumed. The Temporary Instant Capacity configuration occurs in subsequent steps; at this point, nothing has been configured that would cause Temporary Instant Capacity to be used. If a maximum of three were specified instead, at most one CPU, rather than two, would be activated with temporary capacity.

Identifying the workload by executable path is problematic in this case because the workload is a Java application, so the workload is identified by the user who owns the process. In this scenario, the user www owns the Tomcat server, and the sole purpose of this user is to run the Tomcat application. All processes owned by this user are assigned to this WLM group. Activities unrelated to this workload should not be started by the www user, because they would be treated as part of the Tomcat application and would run with high priority on the system. The screen shown in Figure 15-22 allows the user to select the workload group along with the user or group name. In this case, the user name www is entered.

Figure 15-22. Workload Manager Wizard: Assign Users

The final screen for configuring the WLM group is shown in Figure 15-23. This screen provides the ability to distribute excess resources to alternate workload groups instead of allocating them to the OTHERS group. It also makes it possible to configure WLM so that only groups with active workloads are allocated resources. When you configure a workload that corresponds to a Serviceguard package, the transient_groups option should be set to Yes, so that the workload consumes resources only when the corresponding package is running on the local partition. Accordingly, the transient_groups option is set to Yes in this example.

Figure 15-23.
Workload Manager Wizard: Global Tunables

Important: When configuring workload groups associated with Serviceguard packages, selecting Yes for the transient_groups parameter is especially important. If this option is set to No, every workload group is allocated a minimum of 1% of a CPU, even when its package is not running. For a single application this may not be a major concern, but where tens or even hundreds of packages have workload groups defined, it can become a significant waste of resources.

Integrating WLM with Temporary Instant Capacity

The configuration of the tomcat_pkg is now complete except for the Temporary Instant Capacity elements. The WLM configuration wizard saves the file in the user-specified location, /etc/wlm.conf, and the Temporary Instant Capacity components are then added manually. The file /opt/wlm/toolkits/utility/config/minimalist.wlm can be used as a guide for augmenting the configuration file generated by the wizard.

Listing 15-4 shows the final WLM configuration file for rex04. Lines 1 through 17 were generated by the configuration wizard, except for line 4, which was added manually. These lines define the tomcat_pkg PRM group and associate the www user with the tomcat_pkg workload group. Line 4 and the associated SLO defined in lines 19 through 25 define a second workload group, excess, which is assigned an extremely low priority. This workload group is configured to always request the same amount of resources: 400 shares, or four CPUs. No processes run in this workload group; its only purpose is to request all of the excess shares on the system. Because of its low priority and lack of processes, the excess group receives resources only when the system has unused capacity. When the utility data collector, utilitydc, notices that the excess group is receiving additional resources, it deactivates unlicensed processors.
Conversely, when the excess group is receiving no resources, utilitydc concludes that all of the system's resources are being consumed and activates additional unlicensed CPUs.

The first three settings in the tune structure defined in lines 27 through 33 were generated by the WLM configuration wizard. The coll_argv tune attribute on line 31 was added manually as part of the Temporary Instant Capacity configuration to specify the default data collector. The wlm_interval setting on line 32 was also added for Temporary Instant Capacity integration. It specifies the number of seconds WLM waits between checks of the performance data; in this case, WLM checks the performance data every 10 seconds. As described later, this does not necessarily mean that WLM activates or deactivates unlicensed CPUs every interval.

The tune structure defined in lines 35 through 38 was generated by the WLM configuration wizard. It causes the tomcat_pkg workload to receive resources only when the associated Serviceguard package is active.

The final tune structure, defined in lines 40 through 48, is used by the excess_slo to activate and deactivate unlicensed processors. Line 43 specifies the name of the excess workload group. Line 44 indicates that the system contains unlicensed processors. Line 45 specifies that at least two processors must always be active. In most cases, this value should equal the number of licensed processors in the system. If the value is set too high, temporary capacity will be consumed at all times; if it is set too low, licensed processors may be deactivated, leaving licensed resources unused. Line 46 instructs WLM to wait for 6 intervals before adjusting the number of unlicensed processors. The interval in this configuration is set to 10 seconds.
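The excess-group signal, the minimum of two active CPUs, and the six-interval wait can be modeled together in a short Python sketch. This is a hypothetical illustration of the behavior described in the text, not utilitydc's implementation: `ExcessGroupSketch` and `reaction_seconds` are invented names, and the way the streak counter resets is an assumption. The fields are named after the -m and -f options in Listing 15-4 only for readability.

```python
from dataclasses import dataclass, field


@dataclass
class ExcessGroupSketch:
    """Hypothetical model of the excess-group signal; not utilitydc's code."""
    total_cpus: int = 4          # CPUs present in the nPartition
    min_active: int = 2          # like -m 2: never drop below this
    intervals_required: int = 6  # like -f 6: consecutive intervals needed
    active: int = 2              # CPUs currently active
    _direction: int = field(default=0, init=False, repr=False)
    _streak: int = field(default=0, init=False, repr=False)

    def observe(self, excess_shares: int) -> int:
        """Process one WLM interval and return the active CPU count."""
        # Shares granted to the excess group mean spare capacity (-1);
        # none means the real workloads consumed everything (+1).
        direction = -1 if excess_shares > 0 else 1
        if direction == self._direction:
            self._streak += 1
        else:
            self._direction, self._streak = direction, 1
        if self._streak >= self.intervals_required:
            wanted = self.active + direction
            self.active = max(self.min_active, min(self.total_cpus, wanted))
            self._streak = 0  # require a fresh streak before the next change
        return self.active


def reaction_seconds(wlm_interval: int, intervals: int) -> int:
    """Minimum time a condition must persist before a CPU change."""
    return wlm_interval * intervals
```

In this model, a burst shorter than six intervals never changes the CPU count, which is the smoothing effect the scenario relies on. With the values in Listing 15-4, `reaction_seconds(10, 6)` is 60 seconds; raising either value lengthens the delay, for example `reaction_seconds(30, 6)` is 180 seconds.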
With this 10-second interval, WLM requires a workload to exceed the SLO usage goal for at least 60 seconds (6 intervals multiplied by 10 seconds per interval) before unlicensed CPUs are activated. Similarly, WLM waits 60 seconds before deactivating unlicensed CPUs. This value, together with the wlm_interval setting, can be tuned so that unlicensed CPUs are activated for the most appropriate amount of time, without activating and deactivating processors too rapidly or too slowly. Finally, line 47 specifies the contact information of the administrator who authorizes the activation of unlicensed CPUs.

Listing 15-4. Workload Manager Configuration File for rex04

     1  prm {
     2      groups =
     3          tomcat_pkg : 2,
     4          excess:60;
     5
     6      users =
     7          www : tomcat_pkg;
     8  }
     9
    10  slo tomcat_slo {
    11      pri = 1;
    12      entity = PRM group tomcat_pkg;
    13      mincpu = 1;
    14      maxcpu = 400;
    15      goal = usage _CPU;
    16      condition = metric tomcat_pkg_active;
    17  }
    18
    19  slo excess_slo {
    20      pri = 100;
    21      entity = PRM group excess;
    22      mincpu = 400;
    23      maxcpu = 400;
    24      goal = metric ticod_metric > -1;
    25  }
    26
    27  tune {
    28      absolute_cpu_units = 1;
    29      distribute_excess = 1;
    30      transient_groups = 1;
    31      coll_argv = wlmrcvdc;
    32      wlm_interval = 10;
    33  }
    34
    35  tune tomcat_pkg_active {
    36      coll_argv = wlmrcvdc
    37          sg_pkg_active tomcat_pkg;
    38  }
    39
    40  tune ticod_metric {
    41      coll_argv =
    42          /opt/wlm/toolkits/utility/bin/utilitydc
    43          -g excess
    44          -i temporary-icod
    45          -m 2
    46          -f 6
    47          -d "Bryan Jacquot:bryanj@rex04.fc.hp.com:555.555.4700";
    48  }

Starting Workload Manager

The last step to perform before starting WLM is to validate the configuration. The command /opt/wlm/bin/wlmd -c /etc/wlm.conf checks the configuration file for errors. After the configuration file has been validated, activate it with the /opt/wlm/bin/wlmd -a /etc/wlm.conf command. At this point, the WLM configuration is active.
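The validate-then-activate order can be scripted so that a configuration is never activated after a failed check. The following Python sketch is a minimal wrapper around the two wlmd commands shown above; it assumes that wlmd exits with a non-zero status when validation fails, and `check_then_activate` is a hypothetical helper name. The `run` parameter is injectable so the sequence can be exercised without WLM installed.

```python
import subprocess

WLMD = "/opt/wlm/bin/wlmd"


def check_then_activate(config_path: str = "/etc/wlm.conf",
                        run=subprocess.run) -> bool:
    """Validate a WLM configuration with `wlmd -c`, then activate with `wlmd -a`.

    Assumes wlmd returns a non-zero exit status when validation fails.
    """
    if run([WLMD, "-c", config_path]).returncode != 0:
        return False  # leave the currently running configuration untouched
    return run([WLMD, "-a", config_path]).returncode == 0
```

On rex04, calling `check_then_activate()` with the defaults would run `/opt/wlm/bin/wlmd -c /etc/wlm.conf` and, only if that succeeds, `/opt/wlm/bin/wlmd -a /etc/wlm.conf`.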
As long as the Serviceguard package is running on zoo16, there will be no user-visible changes to the rex04 nPartition. When the tomcat_pkg Serviceguard package fails over to rex04 as the adoptive node, the WLM SLO for the tomcat_pkg workload is activated. As the workload's demands increase, WLM activates unlicensed CPUs in rex04 to meet the SLOs. When the workload's demands decrease, or when the package is migrated back to zoo16, any unlicensed CPUs that have been activated are deactivated.

To monitor the tomcat_pkg workload on rex04, start the wlmcomd daemon by executing the /opt/wlm/bin/wlmcomd command. To ensure that WLM starts after rex04 reboots, update the /etc/rc.config.d/wlm file: set the WLM_ENABLE variable to 1 and, if remote monitoring is desired, set the WLMCOMD_ENABLE variable to 1 as well. The WLM_STARTUP_SLOFILE variable should also be set explicitly so that the proper configuration file is used when WLM starts.

Monitoring Workload Manager

When the Serviceguard cluster package is not running on rex04, the workload group is disabled, as the output of the following wlminfo command shows.

    # /opt/wlm/bin/wlminfo slo -s tomcat_slo
    Sun Dec 5 15:16:01 2004

    SLO Name    Group       Pri  Req  Shares  State  Concern
    tomcat_slo  tomcat_pkg    1    -       0  OFF    Disabled

No resources are consumed by this workload because it is disabled. When the zoo16 node is unavailable or has failed, the Tomcat package is migrated to rex04. Figure 15-24 shows the tomcat_slo graphs available from the wlmgui command. Notice in the upper graph that when the CPU usage of the tomcat_slo is above 75%, the workload requests more CPU resources. Because of the minimum 60-second delay before unlicensed CPUs are activated, the erratic workload shown in the upper graph appears smoother in the CPU allocation graph.
None of the utilization spikes between 18:35 and 18:39 resulted in a substantial change in the number of shares assigned to the workload, so no unlicensed CPUs were activated during that period.

Figure 15-24. Workload Manager Service Level Objectives Graph

The graph shown in Figure 15-25 illustrates the number of CPUs active in the rex04 nPar. In this case, any value above 2 indicates a period when Temporary Instant Capacity is being consumed. The graph makes it readily apparent that CPUs are activated and deactivated quite frequently. It may therefore be appropriate to increase the wlm_interval value, increase the number of intervals WLM waits before making Temporary Instant Capacity changes, or both. The result would be a flatter graph in which only sustained workload peaks cause Temporary Instant Capacity to be allocated and only sustained workload lulls cause unlicensed CPUs to be deallocated.

Figure 15-25. Workload Manager CPU Graph

Viewing the Instant Capacity Log

Because WLM activates and deactivates processors automatically, monitoring the usage of temporary capacity becomes increasingly important. As described in Chapter 11, "Temporary Instant Capacity," the administrative e-mail address should be configured so that every WLM activation and deactivation of unlicensed CPUs can be monitored. In addition, the Instant Capacity infrastructure maintains a detailed log. Listing 15-5 shows an example Instant Capacity log file in which WLM is automatically activating and deactivating CPUs.

Listing 15-5.
Instant Capacity Logfile with Workload Manager CPU Activations and Deactivations

    # cat /var/adm/icod.log
    Date: 12/05/04 18:56:36
    Log Type: Configuration Change
    Total processors: 4
    Active processors: 2
    Intended Active CPUs: 2
    Description: Surplus resources
    Changed by: Workload-Manager

    Date: 12/05/04 18:59:41
    Log Type: Configuration Change
    Total processors: 4
    Active processors: 3
    Intended Active CPUs: 3
    Description: SLOs failing
    Changed by: Workload-Manager

    Date: 12/05/04 19:00:16
    Log Type: Temporary capacity debit
    CPU minutes debited: 30
    Licensed processors: 14
    Active processors: 15
    nPar  Active
     ID   CPUs
      0   4
      1   3
      2   4*
      3   4*
    Temporary capacity available: 198 days, 0 hours, 0 minutes

    Date: 12/05/04 19:00:18
    Log Type: Configuration Change
    Total processors: 4
    Active processors: 4
    Intended Active CPUs: 4
    Description: SLOs failing
    Changed by: Workload-Manager

    Date: 12/05/04 19:01:22
    Log Type: Configuration Change
    Total processors: 4
    Active processors: 3
    Intended Active CPUs: 3
    Description: Surplus resources
    Changed by: Workload-Manager
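Because the Instant Capacity log in Listing 15-5 is plain key/value text, the WLM-initiated changes and the temporary-capacity debits can be tallied with a short script. The following Python sketch assumes the layout shown in that listing (each entry starts with a `Date:` line, and fields are `Key: value` lines); it has not been checked against other releases of the Instant Capacity software, and `parse_icod_log` and `summarize` are hypothetical helpers. The sample text is a three-entry excerpt copied from Listing 15-5.

```python
from datetime import datetime

# Three entries copied from Listing 15-5; the per-nPar table is kept as-is.
SAMPLE = """\
Date: 12/05/04 18:59:41
Log Type: Configuration Change
Total processors: 4
Active processors: 3
Intended Active CPUs: 3
Description: SLOs failing
Changed by: Workload-Manager
Date: 12/05/04 19:00:16
Log Type: Temporary capacity debit
CPU minutes debited: 30
Licensed processors: 14
Active processors: 15
nPar Active
ID CPUs
0 4
1 3
2 4*
3 4*
Temporary capacity available: 198 days, 0 hours, 0 minutes
Date: 12/05/04 19:00:18
Log Type: Configuration Change
Total processors: 4
Active processors: 4
Intended Active CPUs: 4
Description: SLOs failing
Changed by: Workload-Manager
"""


def parse_icod_log(text: str) -> list[dict]:
    """Split the log into one dict per 'Date:' entry (key/value lines only)."""
    entries: list[dict] = []
    for line in text.splitlines():
        key, sep, value = line.strip().partition(": ")
        if not sep:
            continue  # skips the per-nPar table rows and blank lines
        if key == "Date":
            entries.append({"Date": datetime.strptime(value, "%m/%d/%y %H:%M:%S")})
        elif entries:
            entries[-1][key] = value
    return entries


def summarize(entries: list[dict]) -> tuple[int, int]:
    """Return (WLM configuration changes, temporary CPU minutes debited)."""
    wlm_changes = sum(
        1 for e in entries
        if e.get("Log Type") == "Configuration Change"
        and e.get("Changed by") == "Workload-Manager")
    minutes = sum(
        int(e["CPU minutes debited"]) for e in entries
        if e.get("Log Type") == "Temporary capacity debit")
    return wlm_changes, minutes
```

Run against the full log on rex04, a steadily rising count of WLM configuration changes would point to the same frequent activation and deactivation visible in Figure 15-25.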
