3.4 Context Awareness

3.4.1 Introduction

One radical change in computing is the introduction of context awareness. One popular definition of context is "that which surrounds and gives meaning to something else." [14] A context describes the situation and the environment a device or user is in.

Until now, computing has tried to avoid context as much as possible. Most of today's software acts exactly the same, regardless of when and where you use it or who you are, whether you are new to it or have used it in the past, whether you are a beginner or an expert, whether you are using it alone or with friends. But what you may want the computer to do could be different under all those circumstances. This uniformity comes from the desire of software companies for abstraction. They want to sell one piece of software to as many people as possible without any change. Henry Lieberman and Ted Selker (both MIT [15]) have written a very good introduction to this revolutionary introduction of context [16].

Computers, so far, have been considered as black boxes. You have some input, the computer does something with it, and you receive some output. The nice thing about context-free computing is the high level of abstraction. If you look at mathematical functions, you can clearly see why. They derive their power from the fact that they ignore the context and work in all possible contexts. The fewer exceptions a function has, the better it is. This paradigm also works for grammars. Context-free grammars are much simpler than context-sensitive grammars and are therefore preferred for describing languages.

Selker and Lieberman believe that software has become too abstract and that there is a need for context sensitivity where it is appropriate. The divide-and-conquer model assumes that if you divide something into two parts, the parts are independent of each other. This strategy created a lot of independent modules that can be operated on their own, but it neglects the fact that there is sometimes a need to understand how each piece fits into its context.

There are several reasons why context is important. First and foremost, explicit input from the user is expensive. It slows down the interaction, interrupts the user's train of thought, and raises the possibility of mistakes. The user may be uncertain about what input to provide, and may not be able to provide it all at once. Everybody is familiar with the hassle of continually re-filling out forms on the Web. If the system can get the information it needs from context, why ask for it again? Devices that sense the environment and use speech recognition or visual recognition may act on input they sense that may or may not be explicitly indicated by the user. Therefore, in many user interface situations, the goal is to minimize input explicitly required from the user.

The same applies to explicit output. It is not always desirable to have it for each computational process, because it requires the user's attention.
Hiroshi Ishii and others, for example, have worked on so-called "ambient interfaces" where the output is a subtle change in barely noticeable environmental factors such as lights and sounds, the goal being to establish a background awareness rather than force the user's attention to the system's output.

Another issue with context-free computing is that it assumes that the input-output loop is sequential. But this is no longer true of modern user interfaces, where several inputs and outputs can happen at the same time. Each context provides a relevant set of features with a range of values that is determined (implicitly or explicitly) by the context. By relating information processing and communication to aspects of the situations in which such processing occurs, results can be obtained much more quickly.

Context is a powerful and longstanding concept in human-computer interaction. Interaction with computation is by explicit acts of communication (e.g., pointing to a menu item), and the context is implicit (e.g., default settings). Context can be used to interpret explicit acts, making communication much more efficient. Thus, by carefully embedding computing into the context of our lived activities, it can serve us with minimal effort on our part. Communication not only can be effortless, but also can naturally fit in with our ongoing activities. Pushing this further, the actions we take are not even felt to be attempts at communication; rather, we just engage in normal activities.

Graphical user interfaces in operating systems adapt menus to such contexts as dialogue status and user preferences. Mobile phones have context-sensitive buttons to reduce the number of buttons required, and the more advanced car stereos use context-sensitive menus so that car owners can not only listen to the radio but also change the time and date without having to remember a complex procedure. Context is also a key component of mobile computing.
While many people thought that mobile computing would only be about location awareness, it has become quite clear that this is only one aspect of context that can be used by mobile computing devices. These new devices (such as PDAs, mobile phones, and wearable computers) relate their services to the surrounding situation of usage. A primary concern of context awareness is awareness of the physical environment surrounding a user and his device (see Figure 3.4). This has already been implemented in several products that provide location awareness, for instance based on global positioning or the use of beacons. As mentioned before, location is only one aspect of the physical environment, and it is often used as an approximation of a more complex context. Beyond location, awareness of the physical conditions in a given environment is also important. This is based on the assumption that the more a mobile device knows about its usage context, the better it can support its user. With advances in sensor technology, awareness of physical conditions can now be embedded in mobile devices at low cost.

Figure 3.4. Context Types

Research in the area of context can be divided into two main categories: human factors and physical environment. Human factors can include information on the user, such as emotional state, knowledge of habits, or biophysiological conditions. They can also include information about the social environment of the user, which typically includes the co-location of others, group dynamics, and social interaction. Besides information about the user and the social environment, context about the user's tasks is covered by the category of human factors. This includes information about the type of activity (e.g., spontaneous or planned), a description of the task itself, and the goal of the task.

All computing systems have some dependency on prior history or state.
The complexity of systems, or power of systems, is related to how many distinct prior conditions they distinguish, and these distinctions show up in how they react to future events. So when a machine has state, it also has a sense of history and a variety of future behaviors that vary appropriately in response to its history. When people have this kind of memory, we refer to it as knowledge. So knowledge is what machines acquire through their interactions with people that enables them to behave more appropriately in the future. When we use this knowledge to predispose our systems to interpret new inputs or to respond appropriately, we are "contextualizing" our interpretations and responses. So the long history of computing, going back to the first efforts to think about a hierarchy of machines that had more and more state and could use it more and more powerfully, is actually the forerunner of our modern-day appreciation of context.

Besides human factors, the physical environment plays an important role in context-aware computing. Probably best known is location awareness, which includes the absolute position of a person, relative position, and co-location. Besides location, the device can receive contextual information about the infrastructure, such as surrounding resources for computation, communication, and task performance, and about physical conditions, which include noise, light, and pressure.

The notion of context is much more widely appreciated today. Context is key to dispersing and enmeshing computation into our lives. Context refers to the physical and social situation in which computational devices are embedded. One goal of context-aware computing is to acquire and utilize information about the context of a device to provide services that are appropriate to the particular people, place, time, events, etc. For example, a cell phone will always vibrate and never beep at a concert if the system knows the location of the cell phone and the concert schedule.
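
The concert example above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Event` record, the venue identifiers, and the `ringer_mode` function are hypothetical names introduced here, and a real phone would obtain its location and the schedule from services the text does not specify.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """One entry of a (hypothetical) concert schedule."""
    venue: str        # assumed venue identifier, e.g. derived from location
    start: datetime
    end: datetime


def ringer_mode(phone_venue, now, schedule):
    """Return "vibrate" while the phone is at a venue with an event in progress."""
    for event in schedule:
        if event.venue == phone_venue and event.start <= now < event.end:
            return "vibrate"
    return "ring"


# Illustrative data only; the date and venue name are made up.
schedule = [
    Event("concert_hall", datetime(2003, 5, 1, 20, 0), datetime(2003, 5, 1, 22, 30)),
]
```

Calling `ringer_mode("concert_hall", datetime(2003, 5, 1, 21, 0), schedule)` yields `"vibrate"`, while the same call after the concert, or from a different venue, yields `"ring"`. The user never has to switch modes explicitly; the two pieces of context do the work.
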
However, this is more than simply a question of gathering more and more contextual information about complex situations. More information is not necessarily more helpful. Further, gathering information about our activities intrudes on our privacy. Context information is useful only when it can be usefully interpreted, and it must be treated with sensitivity.

3.4.2 Human Factors

Human factors play an important role in context, which has been neglected so far, because it is very difficult to get reliable information into intelligent appliances, especially because human beings are not always logical. The appliances need to understand who the users are, the tasks that need to be done in that moment, and the social context in order to "behave" correctly.

User

As we talk about a me-centric or human-centric computing world, it is probably the most important task to accommodate users with different skills, knowledge, age, gender, disabilities, disabling conditions (mobility, sunlight, noise), literacy, culture, or income, just to name a few variables. Since skill levels with computing vary greatly, it is necessary to adapt the application to the knowledge and skills of the user. This means that applications need to segment the user base. NASA [17], for example, provides a children's section on its space mission pages. Similar segmenting strategies can be employed to accommodate users with poor reading skills or users who require other natural languages.

A more difficult problem comes in trying to accommodate users with a wide range of incomes, cultures, or religions. The appliances and services need to make sure that the information offered is not offensive to the user, and they need to understand what the typical process in a particular country may be to support the users instead of confusing them. The simplest example could be a Web site that allows you to order goods and requires you to select a U.S. state, even though you may be living abroad.

Another set of issues deals with the wide range of disabilities or differential capabilities of users. Blind users will be more active users of information and communications services if they can receive documents by speech synthesis or in Braille, and provide input by voice or their customized interfaces. Physically disabled users are more likely to use services if they can connect their customized interfaces to standard graphical user interfaces, even though they may work at a much slower pace. Cognitively impaired users with mild learning disabilities, dyslexia, poor memory, and other special needs could also be accommodated with modest changes to improve layouts, control vocabulary, and limit short-term memory demands.

Expert and frequent users also have special needs. Enabling customization that speeds high-volume users, using "macros" (series of operations) to automate repeated operations, and including special-purpose devices could benefit many. Therefore, appropriate services and appliances for a broader range of users need to be developed, tested, and refined. Corporate knowledge workers are the primary target audience for many contemporary software projects, so the interface and information needs of the unemployed, homemakers, disabled, or migrant workers usually get less attention.

Task Knowledge

In order to have smart devices help you more efficiently, two aspects of task knowledge need to be considered.
First, the device needs a description of the tasks it can fulfill on behalf of its owner, and it needs to recognize when it should initiate such a task. In order to have a good description, a task analysis needs to take place. The smart device needs to learn how to solve problems and get tasks done right. It needs to know the sequence of the work within a specific task and the related workflows and process specifications. This technical information can either be stored in the device itself or requested from a server on demand. Below the process layer, the smart device also needs to have some IT-specific how-to knowledge to get access, set privileges, change permissions, and delegate tasks and responsibilities.

The device needs to understand the normal and best ways to do things. It needs to learn how to allocate resources or make more resources available to do work. It needs to be able to choose among alternative sources or methods, based on up-front costs and opportunity costs. Only then can it avoid unnecessary additional costs and unnecessary work. But first and foremost, the devices need to learn that perfection is not always the best solution and that a quick fix may help much more than a lengthy process that may resolve the issue, but may be too late or too expensive.

Social Knowledge

Social knowledge is about getting along with people. It means doing things in a courteous and appropriately informal way, comporting oneself when one is an agent working on behalf of a principal, and appreciating the efforts of people. This is a huge challenge to computing today, as social knowledge can vary not only from country to country, but from family to family. This is especially true in diverse environments, like Paris, Berlin, or London, where many cultures live side by side and some people are integrated into the local culture and some are not.
How should agents react if they need to communicate with a German butcher, a Lebanese hairdresser, and Chinese bankers for a single service? Although technology and business are driving towards globalization, it is still the people who run the technology and the business and make the difference. Implementing rules of conduct will be important, as they will help the appliances and services to interpret input from humans better. It also helps to reduce the amount of information that is required from a person, as it takes facial expressions and gestures into account and is able to interpret them in the correct way.

3.4.3 Physical Environment

Intelligent appliances can already gauge the physical environment fairly easily. Mankind has spent quite a lot of effort to measure physical conditions such as the weather and convert them into digital information. The challenge is to get information about local conditions through small sensors that do not affect the size or the longevity of the device.

A lot of time and effort has also been spent on getting to know which other technical resources are available. In order to get the most out of an intelligent appliance, it needs to know which other services and devices are available to support a certain process. Although this has been researched for decades, we are only slowly moving towards a semiautomatic discovery service, and it will be some time before we see automatic service and device discovery in every device.

Last, but not least, location plays an important role. Through GPS and similar services, it is possible to determine the location of a suitably equipped device, and thus of the person carrying it, almost anywhere on earth. This information can be used to provide specific information and services related to that location.

Conditions

Physical conditions, which can include noise, light, and pressure, can be used in many applications to reduce the amount of data that needs to be entered into a context-sensitive appliance.
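
As a minimal sketch of how a sensed condition can stand in for explicit input, consider a music player that derives its volume from ambient noise instead of waiting for the user to turn a dial. The function name, the decibel thresholds, and the volume range below are assumptions chosen for illustration, not values from any real product.

```python
def volume_for_noise(noise_db, quiet_db=40.0, loud_db=90.0,
                     min_volume=0.2, max_volume=1.0):
    """Map an ambient noise level in dB linearly onto a playback volume.

    All thresholds are assumed values: at or below quiet_db the player uses
    min_volume, at or above loud_db it uses max_volume.
    """
    if loud_db <= quiet_db:
        raise ValueError("loud_db must be greater than quiet_db")
    # Position of the reading between the two thresholds, clamped to [0, 1].
    fraction = (noise_db - quiet_db) / (loud_db - quiet_db)
    fraction = max(0.0, min(1.0, fraction))
    return min_volume + fraction * (max_volume - min_volume)
```

With these defaults, a reading halfway between the two thresholds (65 dB) gives a volume of about 0.6, and readings outside the thresholds are clamped. A linear mapping is the simplest choice; perceived loudness is not linear, so a real device would likely use a different curve.
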
Imagine a personal MP3 player that adjusts the volume depending on the surrounding noise. This could also be used for a communication system embedded in helmets that are worn by construction workers. Many cars already switch on the lights automatically if it gets dark, such as in the evening or when the car enters a tunnel. The weather conditions could also be used to force the driver to slow down in curves where difficult conditions are expected. Rain could start the windshield wipers.

Pressure can be of importance to applications in planes or submarines to indicate the altitude or depth at which the vehicle operates. This could trigger some operations to be conducted automatically. It can also be used as an indicator for changing weather, which could force planes to reroute, for example, or move appointments back half an hour for business people driving in a car.

Infrastructure

The physical infrastructure around a certain device is also of importance. It includes the surrounding resources for computation, communication, and task performance. This area of research is very well developed. Sun, IBM, and Apple have been working on this issue for a while, and Apple [18] released its "Rendezvous" technology in July 2002, which allows a user to connect a certain device with all surrounding devices with no configuration required at all. This means that a user can walk into an office location and have the laptop, PDA, or mobile phone automatically recognize all available resources that could be used. This would include printers and scanners from a hardware point of view, but also software services, such as configurations (e.g., proxies) or applications (e.g., databases) that help users to do their work.

This so-called "Zero Configuration Networking" [19] has become a working group in the IETF [20], which has established the standards on which Rendezvous, for example, is based. By knowing which devices are available, the device used can provide different services to the user. Stuart Cheshire of Apple has an example of how Zero Configuration Networking can help in everyday life. Imagine having two TiVo Personal Video Recorders, one in the living room and one in the bedroom. Now what is the problem? At night the user turns on the bedroom television to watch a recorded episode of Seinfeld before he goes to sleep, but he can't because it is recorded on the other TiVo. Imagine if any TiVo in your house could automatically discover and play content recorded on any other TiVo in your house, or even exchange recordings with other TiVos in the neighborhood.
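
The discovery idea behind the two-recorder example can be pictured with a toy model. The sketch below is an in-memory stand-in only: `LocalNetwork`, `announce`, `browse`, and `find_recording` are hypothetical names, and nothing here implements the actual Zero Configuration Networking protocols or any TiVo interface. It only shows the pattern of devices announcing what they offer and other devices browsing for it without manual setup.

```python
from collections import defaultdict


class LocalNetwork:
    """In-memory stand-in for a network on which devices announce services."""

    def __init__(self):
        # service type -> {device name: payload announced by that device}
        self._services = defaultdict(dict)

    def announce(self, service_type, device, payload):
        """A device makes a service (and some descriptive payload) visible."""
        self._services[service_type][device] = payload

    def withdraw(self, service_type, device):
        """A device leaves the network or stops offering the service."""
        self._services[service_type].pop(device, None)

    def browse(self, service_type):
        """Return a snapshot of all devices currently offering the service."""
        return dict(self._services[service_type])


def find_recording(network, title):
    """Return the names of recorders that announce a recording with this title."""
    return sorted(
        device
        for device, recordings in network.browse("recorder").items()
        if title in recordings
    )


# Illustrative setup: two recorders announce the titles they hold.
network = LocalNetwork()
network.announce("recorder", "living_room", {"Seinfeld", "News"})
network.announce("recorder", "bedroom", {"Documentary"})
```

Here `find_recording(network, "Seinfeld")` returns `["living_room"]`, so the bedroom recorder would know where to fetch the episode from. Once the living room recorder is withdrawn, the same query returns an empty list.
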

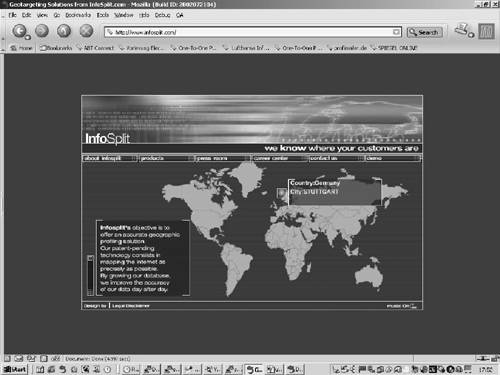

Speaking more generally about Zero Configuration Networking, it means that we can easily move data and services among intelligent devices, if required.

Location

A user's location is an important service customization criterion, for mobile me-centric services in particular. The most common forms of representing location information are physical and geographical. In particular, absolute physical location is the form of choice for most positioning devices such as GPS receivers. However, it is not easy for humans to express and comprehend a latitude-longitude pair. Even with an accurate absolute physical location of a passenger travelling on a public transport bus, a service may not be able to conclusively determine the bus's route number. As a result, the service cannot offer richer services like estimating the time of arrival at the passenger's destination. In Figure 3.5, you can see the service of Info-Split [21]. It can locate a user based on the network connection he is using without involving GPS. You can see that Danny Amor is currently in Stuttgart, Germany, for example.

Figure 3.5. Location-Based Services

The city of Palo Alto will be recognized by those interested in technology as an important city in the San Francisco Bay area. However, a person who has never heard of that city won't know where it is. City and other common location descriptions such as zip codes and postal addresses convey physical location as well as certain implicit semantics. Such geographical location representations are useful for services requiring explicit user input, as they are easier for people to remember and communicate. However, this representation format can be ambiguous, difficult to sense with devices, and too coarse for many applications. Geographic location representation carries more semantic information than physical, but there is still not enough information in either representation to determine the nature or purpose of a place.

To address these issues, HP Labs has defined an orthogonal form of location that they call semantic location. Semantic locations are globally uniform and unambiguous (URIs are by definition unique), and links to them can carry as much semantic information as required. They are represented by URLs, and are linked and accessed using standard Web infrastructure. This is a highly scalable approach as there is no central control point. Depending on the application, the place, and the desired accuracy, one of the many URL sensing technologies can be selected. Even at a single place, a heterogeneous set of technologies can be deployed. Visiting a semantic location does not imply physical presence at the associated place. This impedes the ability of mobile e-services to conclusively track the physical movements of nomadic users, without restricting their ability to provide localized service.

3.4.4 Building Context-Aware Applications

Context-aware applications can be built by following a series of steps described in this subsection. First, it is important to identify the contexts that really matter.
In many cases, there is no context at all that matters, so the first step is to analyze the usage of the artifact that should become smarter. You should try to find out if the artifact is used in changing situations, if the expectation of the user varies with the situation, and if the interaction pattern is different in various situations. Only if these questions can be answered positively should one consider this context to be of use for the application. For all the situations that matter, you have to identify the conditions of the informational, physical, and social environment. Real-world situations that should be treated the same are grouped into one context. These situations typically identify a number of variables that discriminate the context. These can include, for example, information about a time interval, the number of messages, temperature, value, the number of people in the vicinity, or the relationship with people nearby.

Once the relevant contexts have been identified, it is necessary to find the appropriate sensors for these context artifacts. When selecting a sensor, the accuracy and the cost of providing the information should be taken into account. The sensors should be selected such that together they cover all variables with sufficient accuracy at minimal cost.

Now that the context artifacts and the relevant sensors have been selected, you should build and assess a prototype. Here it is especially interesting to experiment with the positions of the sensors on the device. Then the sensing device is used in the situations that should be detected, and data is recorded to be analyzed later.

After the prototype has been tested and analyzed successfully, you have to determine recognition and abstraction technologies. Most sensors will provide too much information, so it is important to identify a set of cues that reduces the amount of data but not the knowledge about the situation.
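
Two of the steps above, choosing sensors that cover the context variables at low cost and reducing raw readings to a few cues, can be sketched as follows. Everything here is an assumption made for illustration: the sensor names, costs, and variables are invented, the greedy rule is a simple heuristic that does not guarantee the cheapest possible selection, and mean, spread, and peak are just one plausible choice of cues.

```python
from statistics import mean, pstdev


def select_sensors(required, sensors):
    """Greedy heuristic for sensor selection.

    `sensors` maps a sensor name to (cost, set of variables it can measure).
    Repeatedly picks the sensor with the lowest cost per newly covered
    variable until every required variable is covered.
    """
    uncovered = set(required)
    chosen = []
    while uncovered:
        best, best_ratio = None, None
        for name, (cost, variables) in sensors.items():
            gain = len(uncovered & variables)
            if gain == 0:
                continue  # this sensor adds nothing new
            ratio = cost / gain
            if best_ratio is None or ratio < best_ratio:
                best, best_ratio = name, ratio
        if best is None:
            raise ValueError(f"no sensor covers: {sorted(uncovered)}")
        chosen.append(best)
        uncovered -= sensors[best][1]
    return chosen


def extract_cues(samples):
    """Reduce a window of raw readings to a few summary cues."""
    return {"mean": mean(samples), "spread": pstdev(samples), "peak": max(samples)}


# Hypothetical sensor catalogue: name -> (cost, measurable variables).
sensors = {
    "microphone": (2.0, {"noise"}),
    "light_sensor": (1.0, {"light"}),
    "combo_board": (2.5, {"noise", "light", "temperature"}),
}
```

For the required variables noise, light, and temperature, `select_sensors` picks only `combo_board`, since it covers all three at the lowest cost per variable. `extract_cues` then turns a window of many readings into three numbers that the recognition step can work with.
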
Based on the cues selected as above and applied to the data recorded, an algorithm is selected that recognizes the contexts with maximal certainty and is also suitable for the usage of the artifact.

Now that the cues have been identified, the integration of cue processing and the context abstraction needs to take place. In this step, the sensing technology and processing methods are integrated in a prototypical artifact in which the reaction of the artifact is immediate. A design decision can be that the processing is done in the back-end, transparent to the user of the artifact. Using the prototypical artifact, the recognition performance and reliability are assessed in the real-world situations identified. If recognition problems or ambiguities are identified, the algorithms or cues have to be optimized, or the sensor selection may even need to be rethought. Once this works out and is accepted by the target group, you can go ahead and build applications on top of the artifacts that use the context knowledge.

3.4.5 Context Summary

As you can see from this section, context is a powerful and longstanding concept in human-computer interaction, which in turn is a key feature of me-centric computing. Context helps to reduce the amount of data that a user needs to enter. It makes it possible to concentrate on the real task and enables intelligent appliances to act autonomously on behalf of the user, based on the current context, no matter whether it is a process step or the temperature outside the appliance that triggers the action.
