Memory Basics

This chapter discusses memory from both a physical and logical point of view. First, we'll examine what memory is, where it fits into the PC architecture, and how it works. Then we'll look at the various types of memory, speeds, and packaging of the chips and memory modules you can buy and install. This chapter also covers the logical layout of memory, defining the various areas and their uses from the system's point of view. Because the logical layout and uses are within the "mind" of the processor, memory mapping and logical layout remain perhaps the most difficult subjects to grasp in the PC universe. This chapter contains useful information that removes the mysteries associated with memory and enables you to get the most out of your system.

Memory is the workspace for the computer's processor. It is a temporary storage area where the programs and data being operated on by the processor must reside. Memory storage is considered temporary because the data and programs remain there only as long as the computer has electrical power and is not reset. Before the system is shut down or reset, any data that has been changed should be saved to a more permanent storage device (usually a hard disk) so it can be reloaded into memory in the future.

Memory often is called RAM, for random access memory. Main memory is called RAM because you can randomly (as opposed to sequentially) access any location in memory. This designation is somewhat misleading and often misinterpreted. Read-only memory (ROM), for example, is also randomly accessible, yet is usually differentiated from the system RAM because it maintains data without power and can't normally be written to. Although a hard disk can be used as virtual random access memory, we don't consider that RAM either.
Over the years, the definition of RAM has changed from a simple acronym to something that means the primary memory workspace the processor uses to run programs, which usually is constructed of a type of chip called dynamic RAM (DRAM). One of the characteristics of DRAM chips (and therefore most types of RAM in general) is that they store data dynamically, which really has two meanings. One meaning is that the information can be written to RAM repeatedly at any time. The other has to do with the fact that DRAM requires the data to be refreshed (essentially rewritten) every few milliseconds or so; faster RAM requires refreshing more often than slower RAM. A type of RAM called static RAM (SRAM) does not require periodic refreshing. An important characteristic of RAM in general is that data is stored only as long as the memory has electrical power.

Note: Both DRAM and SRAM memory maintain their contents only as long as power is present. A different type of memory, known as Flash memory, does not have this limitation: it can retain its contents without electricity, and it is most commonly used today in digital camera media and USB keychain drives. As far as the PC is concerned, a Flash memory device emulates a disk drive (not RAM) and is accessed by a drive letter, just as with any other disk or optical drive.

When we talk about a computer's memory, we usually mean the RAM or physical memory in the system, which is mainly the memory chips or modules the processor uses to store primary active programs and data. This often is confused with the term storage, which should be used when referring to things such as disk and tape drives (although they can be used as a form of RAM called virtual memory). RAM can refer to both the physical chips that make up the memory in the system and the logical mapping and layout of that memory.
Logical mapping and layout refer to how the memory addresses are mapped to actual chips and what address locations contain which types of system information.

People new to computers often confuse main memory (RAM) with disk storage because both have capacities that are expressed in similar megabyte or gigabyte terms. The best analogy I've found to explain the relationship between memory and disk storage is to think of an office with a desk and a file cabinet. In this popular analogy, the file cabinet represents the system's hard disk, where both programs and data are stored for long-term safekeeping. The desk represents the system's main memory, which allows the person working at the desk (acting as the processor) direct access to any files placed on it. Files represent the programs and documents you can "load" into the memory. To work on a particular file, you must first retrieve it from the cabinet and place it on the desk. If the desk is large enough, you might be able to have several files open on it at one time; likewise, if your system has more memory, you can run more or larger programs and work on more or larger documents.

Adding hard disk space to a system is similar to putting a bigger file cabinet in the office: more files can be permanently stored. And adding more memory to a system is like getting a bigger desk: you can work on more programs and data at the same time.

One difference between this analogy and the way things really work in a computer is that when a file is loaded into memory, it is a copy of the file that is actually loaded; the original still resides on the hard disk. Because of the temporary nature of memory, any files that have been changed after being loaded into memory must then be saved back to the hard disk before the system is powered off (which erases the memory). If the changed file in memory is not saved, the original copy of the file on the hard disk remains unaltered.
This is like saying that any changes made to files left on the desktop are discarded when the office is closed, although the original files are still preserved in the cabinet.

Memory temporarily stores programs when they are running, along with the data being used by those programs. RAM chips are sometimes termed volatile storage because when you turn off your computer or an electrical outage occurs, whatever is stored in RAM is lost unless you saved it to your hard drive. Because of the volatile nature of RAM, many computer users make it a habit to save their work frequently, a habit I recommend. (Some software applications can do timed backups automatically.)

Launching a computer program brings files into RAM, and as long as they are running, computer programs reside in RAM. The CPU executes programmed instructions in RAM and also stores results in RAM. RAM stores your keystrokes when you use a word processor and also stores numbers used in calculations. Telling a program to save your data instructs the program to store RAM contents on your hard drive as a file.

Physically, the main memory in a system is a collection of chips or modules containing chips that are usually plugged into the motherboard. These chips or modules vary in their electrical and physical designs and must be compatible with the system into which they are being installed to function properly. This chapter discusses the various types of chips and modules that can be installed in different systems.

How much you spend on memory for your PC depends mostly on the amount and type of modules you purchase. Baseline DDR or DDR2 DRAM memory modules totaling 256MB to 1GB can be among the less expensive components inside your system, costing less than $100. However, modules designed for high performance (particularly for use with overclocked systems) can be considerably more expensive.
Before the big memory price crash in mid-1996, memory had maintained a fairly consistent price of about $40 per megabyte for many years. A typical configuration back then of 16MB cost more than $600. In fact, memory was so expensive at that time that it was worth more than its weight in gold. These high prices caught the attention of criminals, and memory module manufacturers were robbed at gunpoint in several large heists. The robberies were driven partly by memory's high value and strong demand, and partly by the fact that stolen chips or modules were virtually impossible to trace. After the rash of armed robberies and other thefts, memory module manufacturers began posting armed guards and implementing beefed-up security procedures.

By the end of 1996, memory prices had cooled considerably to about $4 per megabyte, a tenfold price drop in less than a year. Prices continued to fall after the major crash until they were at or below 50 cents per megabyte in 1997. All seemed well, until events in 1999 conspired to create a spike in memory prices, increasing them to four times their previous levels. The main culprit was Intel, which had driven the industry to support a then-new type of memory called Rambus DRAM (RDRAM) and then failed to deliver the supporting chipsets on time. The industry was caught in a bind: it had shifted production to a type of memory for which there were no chipsets or motherboards to plug into, which then created a shortage of the existing (and popular) SDRAM memory. An earthquake in Taiwan during that year served as the icing on the cake, disrupting production and furthering the spike in prices.

Since then, things have cooled considerably, and memory prices have dropped to all-time lows, with actual prices of under 13 cents per megabyte. In particular, 2001 was a disastrous year in the semiconductor industry, prompted by the dot-com crash as well as worldwide events, and sales dropped well below those of previous years.
This conspired to bring memory prices down further than they had ever been and even forced some companies to merge or go out of business.

Memory is less expensive now than ever, but its useful life has become much shorter. New types of memory are being adopted more quickly than before, and any new systems you purchase now most likely will not accept the same memory as your existing ones. In an upgrade or a repair situation, that means you often have to change the memory if you change the motherboard. The chance that you can reuse the memory in an existing motherboard when upgrading to a new one is slim. Because of this, you should understand all the various types of memory on the market today, so you can best determine which types are required by which systems, and thus more easily plan for future upgrades and repairs.

To better understand physical memory in a system, you should understand what types of memory are found in a typical PC and what the role of each type is. Three main types of physical memory are used in modern PCs:

- ROM (read-only memory)
- DRAM (dynamic random access memory)
- SRAM (static RAM)

The only type of memory you need to purchase and install is DRAM. The other types are built in to the motherboard (ROM), the processor (SRAM), and other components such as the video card, hard drives, and so on.

ROM

Read-only memory, or ROM, is a type of memory that can permanently or semipermanently store data. It is called read-only because it is either impossible or difficult to write to. ROM also is often referred to as nonvolatile memory because any data stored in ROM remains there, even if the power is turned off. As such, ROM is an ideal place to put the PC's startup instructions (that is, the software that boots the system). Note that ROM and RAM are not opposites, as some people seem to believe. Both are simply types of memory. In fact, ROM could technically be classified as a subset of the system's RAM. In other words, a portion of the system's random access memory address space is mapped into one or more ROM chips. This is necessary to contain the software that enables the PC to boot up; otherwise, the processor would have no program in memory to execute when it was powered on.
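
To make the idea of ROM occupying part of the address space concrete, here is a toy Python model of a memory map. It is a sketch under stated assumptions, not a description of any real chipset: the class name `MemoryMap` is hypothetical, and the ROM range (F0000h-FFFFFh, the top 64KB of the first megabyte, where the motherboard BIOS traditionally appears on PCs) is used for illustration only. Reads from that range are served by the ROM image; writes to it change nothing.

```python
# Toy memory map: part of the address space is backed by ROM, the rest by RAM.
# Hypothetical sketch; the ROM range below is an illustrative assumption.

ADDRESS_SPACE = 0x100000      # 1MB real-mode address space
ROM_START = 0xF0000           # assumed start of the motherboard BIOS ROM
ROM_END = 0xFFFFF             # inclusive end of the ROM range


class MemoryMap:
    def __init__(self, rom_image: bytes) -> None:
        rom_size = ROM_END - ROM_START + 1
        if len(rom_image) != rom_size:
            raise ValueError(f"ROM image must be exactly {rom_size} bytes")
        self.rom = bytes(rom_image)          # immutable copy: contents are fixed
        self.ram = bytearray(ADDRESS_SPACE)  # writable; contents lost at power-off

    def _in_rom(self, addr: int) -> bool:
        return ROM_START <= addr <= ROM_END

    def read(self, addr: int) -> int:
        """Return the byte at addr, from ROM or RAM depending on the map."""
        if self._in_rom(addr):
            return self.rom[addr - ROM_START]
        return self.ram[addr]

    def write(self, addr: int, value: int) -> bool:
        """Store a byte; return False (and change nothing) if addr is in ROM."""
        if self._in_rom(addr):
            return False
        self.ram[addr] = value & 0xFF
        return True


if __name__ == "__main__":
    rom = bytearray(ROM_END - ROM_START + 1)
    rom[0xFFFF0 - ROM_START] = 0xEA   # hypothetical byte at the real-mode reset address
    mem = MemoryMap(bytes(rom))
    print(hex(mem.read(0xFFFF0)))     # 0xea, served from ROM
    print(mem.write(0xFFFF0, 0x00))   # False: the ROM byte is unchanged
    print(mem.write(0x00400, 0x42))   # True: ordinary RAM
```

The processor side of the model only ever sees addresses; which chip answers is decided by the address decoding in `read` and `write`. That is the sense in which ROM can be regarded as part of the memory address space.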

The main ROM BIOS is contained in a ROM chip on the motherboard, but there are also adapter cards with ROMs on them. ROMs on adapter cards contain auxiliary BIOS routines and drivers needed by the particular card, especially for those cards that must be active early in the boot process, such as video cards. Cards that don't need drivers active at boot time typically don't have a ROM because those drivers can be loaded from the hard disk later in the boot process.

Most systems today use a form of electrically erasable programmable ROM (EEPROM) called Flash memory. Flash is a truly nonvolatile memory that is rewritable, enabling users to easily update the ROM or firmware in their motherboards or any other components (video cards, SCSI cards, peripherals, and so on).

DRAM

Dynamic RAM (DRAM) is the type of memory chip used for most of the main memory in a modern PC. The main advantages of DRAM are that it is very dense, meaning you can pack a lot of bits into a very small chip, and it is inexpensive, which makes purchasing large amounts of memory affordable.

The memory cells in a DRAM chip are tiny capacitors that retain a charge to indicate a bit. The drawback of DRAM is that it is dynamic: because of its design, it must be constantly refreshed; otherwise, the electrical charges in the individual memory capacitors will drain and the data will be lost. Refresh occurs when the system memory controller takes a tiny break and accesses all the rows of data in the memory chips. Most systems have a memory controller (normally built in to the North Bridge portion of the motherboard chipset or located within the CPU in the case of the AMD Athlon 64 and Opteron processors), which is set for an industry-standard refresh time of 15ms (milliseconds). This means that every 15ms, all the rows in the memory are automatically read to refresh the data.
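
The following Python sketch illustrates why refresh matters. It rests on deliberately simplified assumptions rather than real circuit behavior: a cell's charge is taken to leak away exponentially with a hypothetical time constant, a charge above half of full is sensed as a 1, and each refresh reads the cell and rewrites whatever it sensed. With a refresh interval comfortably inside the cell's retention time (the 15ms figure from the text is used here), a stored 1 survives; stretch the interval past the retention time and the cell is rewritten as a 0.

```python
import math

READ_THRESHOLD = 0.5  # assumed: charge above half of full is sensed as a 1


def retention_ms(time_constant_ms: float) -> float:
    """Time for a fully charged cell to decay to the read threshold."""
    return time_constant_ms * math.log(1.0 / READ_THRESHOLD)


def cell_survives(refresh_interval_ms: float, time_constant_ms: float,
                  duration_ms: float) -> bool:
    """Simulate one DRAM cell written with a 1 and refreshed periodically.

    Returns True if the cell still reads as 1 after duration_ms.
    """
    charge = 1.0
    elapsed = 0.0
    while elapsed < duration_ms:
        step = min(refresh_interval_ms, duration_ms - elapsed)
        charge *= math.exp(-step / time_constant_ms)  # leakage since last refresh
        elapsed += step
        bit = 1 if charge > READ_THRESHOLD else 0      # sense the cell's state
        charge = float(bit)  # refresh (or a read) restores whatever was sensed
    return charge > READ_THRESHOLD


if __name__ == "__main__":
    tau = 100.0  # hypothetical leakage time constant in ms
    print(f"retention: {retention_ms(tau):.1f} ms")  # about 69.3 ms
    print(cell_survives(15.0, tau, 1000.0))   # True: refreshed well within retention
    print(cell_survives(80.0, tau, 1000.0))   # False: charge drains below threshold
```

Note that once a cell has been sensed as a 0, refresh faithfully preserves the wrong value; nothing is wrong with the chip itself. The same effect underlies the soft errors that can result from lengthening the refresh interval too far.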

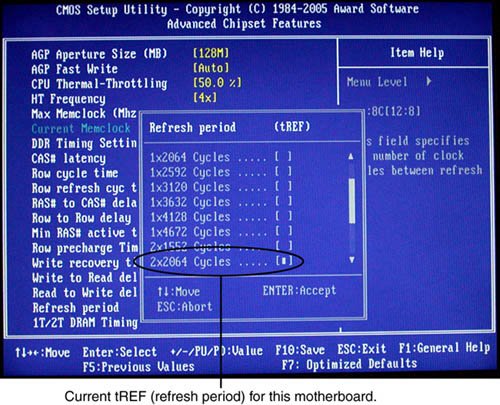

Refreshing the memory unfortunately takes processor time away from other tasks because each refresh cycle takes several CPU cycles to complete. In older systems, the refresh cycling could take up to 10% or more of the total CPU time, but with modern systems running in the multi-gigahertz range, refresh overhead is now on the order of a fraction of a percent or less of the total CPU time. Some systems allow you to alter the refresh timing parameters via the CMOS Setup. The time between refresh cycles is known as tREF and is expressed not in milliseconds, but in clock cycles (see Figure 6.1). Figure 6.1. The refresh period dialog box and other advanced memory timings can be adjusted manually through the system CMOS setup program. It's important to be aware that increasing the time between refresh cycles (tREF) to speed up your system can allow some of the memory cells to begin draining prematurely, which can cause random soft memory errors to appear. A soft error is a data error that is not caused by a defective chip. To avoid soft errors, it is usually safer to stick with the recommended or default refresh timing. Because refresh consumes less than 1% of modern system overall bandwidth, altering the refresh rate has little effect on performance. It is almost always best to use default or automatic settings for any memory timings in the BIOS Setup. Many modern systems don't allow changes to memory timings and are permanently set to automatic settings. On an automatic setting, the motherboard reads the timing parameters out of the serial presence detect (SPD) ROM found on the memory module and sets the cycling speeds to match. DRAMs use only one transistor and capacitor pair per bit, which makes them very dense, offering more memory capacity per chip than other types of memory. Currently, DRAM chips are available with densities of up to 1Gb or more. This means that DRAM chips are available with one billion or more transistors! 
By comparison, a Pentium D has 230 million transistors, which makes the processor look wimpy. The difference is that in a memory chip, the transistors and capacitors are all consistently arranged in a (normally square) grid of simple repetitive structures, unlike the processor, which is a much more complex circuit of different structures and elements interconnected in a highly irregular fashion.

The transistor for each DRAM bit cell reads the charge state of the adjacent capacitor. If the capacitor is charged, the cell is read to contain a 1; no charge indicates a 0. The charge in the tiny capacitors is constantly draining, which is why the memory must be refreshed constantly. Even a momentary power interruption, or anything that interferes with the refresh cycles, can cause a DRAM memory cell to lose the charge and therefore the data. If this happens in a running system, it can lead to blue screens, general protection faults, corrupted files, and any number of system crashes.

DRAM is used in PC systems because it is inexpensive and the chips can be densely packed, so a lot of memory capacity can fit in a small space. Unfortunately, DRAM is also slow, typically much slower than the processor. For this reason, many types of DRAM architectures have been developed to improve performance. These architectures are covered later in the chapter.

Cache Memory: SRAM

Another distinctly different type of memory exists that is significantly faster than most types of DRAM. SRAM stands for static RAM, which is so named because it does not need periodic refreshing as DRAM does. Because of how SRAMs are designed, not only is refresh unnecessary, but SRAM is much faster than DRAM and much more capable of keeping pace with modern processors. SRAM memory is available in access times of 2ns or less, so it can keep pace with processors running 500MHz or faster.
This speed comes from the SRAM design, which calls for a cluster of six transistors for each bit of storage. The use of transistors but no capacitors means that refreshing is not necessary, because there are no capacitors to lose their charges over time. As long as there is power, SRAM remembers what is stored. With these attributes, why don't we use SRAM for all system memory? The answers are simple. Compared to DRAM, SRAM is much faster but also much lower in density and much more expensive (see Table 6.1). The lower density means that SRAM chips are physically larger and store fewer bits overall. The high number of transistors and the clustered design also make SRAM chips much more expensive to produce than DRAM chips. For example, a DRAM module might contain 64MB of RAM or more, whereas SRAM modules of the same approximate physical size would have room for only 2MB or so of data and would cost the same as the 64MB DRAM module. Basically, SRAM is up to 30 times larger physically and up to 30 times more expensive than DRAM. The high cost and physical constraints have prevented SRAM from being used as the main memory for PC systems.
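
The density gap follows directly from the cell designs: one transistor (plus a capacitor) per bit for DRAM versus six transistors per bit for SRAM. This short Python sketch counts the transistors in the storage cells alone for a given capacity. It ignores capacitors, sense amplifiers, and addressing logic, so it illustrates the per-bit ratio rather than predicting real chip sizes; the function name `cell_transistors` is hypothetical.

```python
DRAM_TRANSISTORS_PER_BIT = 1  # one transistor/capacitor pair per bit
SRAM_TRANSISTORS_PER_BIT = 6  # six-transistor cell, no capacitor

ONE_GIGABIT = 2 ** 30  # memory chip densities are powers of two


def cell_transistors(bits: int, transistors_per_bit: int) -> int:
    """Transistors in the storage cells alone (no support circuitry)."""
    return bits * transistors_per_bit


if __name__ == "__main__":
    dram = cell_transistors(ONE_GIGABIT, DRAM_TRANSISTORS_PER_BIT)
    sram = cell_transistors(ONE_GIGABIT, SRAM_TRANSISTORS_PER_BIT)
    print(f"1Gb DRAM cell array: {dram:,} transistors")  # 1,073,741,824
    print(f"1Gb SRAM cell array: {sram:,} transistors")  # 6,442,450,944
```

The transistor count alone accounts for a sixfold difference; the size and cost ratios of up to 30 times quoted above reflect more than this simple count.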

Even though SRAM is too expensive for PC use as main memory, PC designers have found a way to use SRAM to dramatically improve PC performance. Rather than spend the money to make all RAM out of SRAM, which can run fast enough to match the CPU, designers found it much more cost-effective to include a small amount of high-speed SRAM, called cache memory. The cache runs at speeds close to or even equal to the processor and is the memory the processor usually reads from and writes to directly. During read operations, the high-speed cache memory is filled in advance with data from the lower-speed main memory (DRAM).

Up until the late 1990s, DRAM was limited to about 60ns (roughly 16MHz) in speed. To convert access time in nanoseconds to MHz, use the following formula:

MHz = 1 / nanoseconds × 1000

Likewise, to convert from MHz to nanoseconds, use the following inverse formula:

nanoseconds = 1 / MHz × 1000

When PC systems were running at 16MHz or less, the DRAM could fully keep pace with the motherboard and system processor and there was no need for cache. However, as soon as processors crossed the 16MHz barrier, DRAM could no longer keep pace, and that is exactly when SRAM began to enter PC system designs. This occurred back in 1986 and 1987 with the debut of systems with the 386 processor running at speeds of 16MHz and 20MHz or faster. These were among the first PC systems to employ what's called cache memory, a high-speed buffer made up of SRAM that directly feeds the processor. Because the cache can run at the speed of the processor, the system is designed so that the cache controller anticipates the processor's memory needs and preloads the high-speed cache memory with that data. Then, as the processor calls for a memory address, the data can be retrieved from the high-speed cache rather than the much lower-speed main memory.

Cache effectiveness is expressed as a hit ratio. This is the ratio of cache hits to total memory accesses.
A hit occurs when the data the processor needs has been preloaded into the cache from the main memory, meaning the processor can read it from the cache. A cache miss occurs when the cache controller did not anticipate the need for a specific address and the desired data was not preloaded into the cache. In that case the processor must retrieve the data from the slower main memory instead of the faster cache.

Anytime the processor reads data from main memory, the processor must wait longer because the main memory cycles at a much slower rate than the processor. If the processor with integral on-die cache is running at 3400MHz (3.4GHz), both the processor and the integral cache would be cycling at 0.29ns, while the main memory would most likely be cycling 8.5 times more slowly at 2.5ns (200MHz DDR, which transfers data twice per clock cycle). Therefore, the memory would be running at only a 400MHz equivalent rate. So, every time the 3.4GHz processor reads from main memory, it would effectively slow down 8.5-fold to only 400MHz! The slowdown is accomplished by having the processor execute what are called wait states, which are cycles in which nothing is done; the processor essentially cools its heels while waiting for the slower main memory to return the desired data.

Obviously, you don't want your processors slowing down, so cache function and design become more important as system speeds increase. To minimize how often the processor must read data from the slow main memory, two or three stages of cache usually exist in a modern system, called Level 1 (L1), Level 2 (L2), and Level 3 (L3). The L1 cache is also called integral or internal cache because it has always been built directly into the processor as part of the processor die (the raw chip). Because of this, L1 cache always runs at the full speed of the processor core and is the fastest cache in any system. All 486 and higher processors incorporate integral L1 cache, making them significantly faster than their predecessors.
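
The conversions and the 8.5-fold slowdown above can be checked with a few lines of Python. The sketch also adds a common simplification that is not from the text: it estimates the average time per access from the hit ratio by assuming every hit costs one cache cycle and every miss costs one main-memory cycle. Real miss penalties are usually larger, so treat the result as an optimistic estimate; the function names and the 95% hit ratio are hypothetical.

```python
def ns_to_mhz(ns: float) -> float:
    """Cycle time in nanoseconds -> clock rate in MHz (1 / ns x 1000)."""
    return 1000.0 / ns


def mhz_to_ns(mhz: float) -> float:
    """Clock rate in MHz -> cycle time in nanoseconds (1 / MHz x 1000)."""
    return 1000.0 / mhz


def hit_ratio(hits: int, accesses: int) -> float:
    """Fraction of memory accesses satisfied by the cache."""
    return hits / accesses


def average_access_ns(ratio: float, cache_ns: float, memory_ns: float) -> float:
    """Simplified model: hits cost one cache cycle, misses one memory cycle."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("hit ratio must be between 0 and 1")
    return ratio * cache_ns + (1.0 - ratio) * memory_ns


if __name__ == "__main__":
    print(ns_to_mhz(60))              # about 16.7 MHz for 60ns DRAM
    cpu_ns = mhz_to_ns(3400)          # about 0.29 ns per core cycle
    mem_ns = mhz_to_ns(400)           # 2.5 ns per DDR transfer
    print(round(mem_ns / cpu_ns, 2))  # 8.5: the slowdown on every miss
    r = hit_ratio(950, 1000)          # hypothetical: 95% of accesses hit
    print(round(average_access_ns(r, cpu_ns, mem_ns), 3))  # about 0.404 ns
```

Even at a 95% hit ratio in this simplified model, the average access takes noticeably longer than a pure cache cycle, which is why cache design matters more as the gap between processor and memory speeds widens.
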
L2 cache was originally called external cache because it was external to the processor chip when it first appeared. Originally, this meant it was installed on the motherboard, as was the case with all 386, 486, and Pentium systems. In those systems, the L2 cache runs at motherboard and CPU bus speed because it is installed on the motherboard and is connected to the CPU bus. You typically find the L2 cache directly next to the processor socket in Pentium and earlier systems.

In the interest of improved performance, later processor designs from Intel and AMD included the L2 cache as a part of the processor. In all processors since late 1999 (and some earlier models), the L2 cache is directly incorporated as a part of the processor die just like the L1 cache. In chips with on-die L2, the cache runs at the full core speed of the processor and is much more efficient. By contrast, most processors from 1999 and earlier with integrated L2 had the L2 cache in separate chips that were external to the main processor core. The L2 cache in many of these older processors ran at only half or one-third the processor core speed.

Cache speed is very important. Systems with L2 cache on the motherboard were the slowest; moving the L2 inside the processor made it faster; and placing it directly on the processor die (rather than in chips external to the die) is the fastest arrangement yet. Any chip that has on-die full core speed L2 cache has a distinct performance advantage over any chip that doesn't. Processors with built-in L2 cache, whether it's on-die or not, still run the cache more quickly than any cache found on the motherboard. Thus, most motherboards designed for processors with built-in cache don't have any cache on the board; all the cache is contained in the processor module instead.

L3 cache has been present in high-end workstation and server processors such as the Xeon and Itanium families since 2001. The first desktop PC processor with L3 cache was the Pentium 4 Extreme Edition, a high-end chip introduced in late 2003 with 2MB of on-die L3 cache. Although it seemed at the time that the introduction of L3 cache in the Pentium 4 Extreme Edition was a forerunner of widespread L3 cache in desktop processors, later versions of the Pentium 4 Extreme Edition (as well as its successor, the Pentium Extreme Edition) no longer include L3 cache. Instead, larger L2 cache sizes are used to improve performance.
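
The cost of running L2 cache at a fraction of core speed can be worked out with the nanosecond conversion given earlier in the chapter. In this Python sketch the 600MHz core clock is a hypothetical example value, and the divisors 1, 2, and 3 correspond to the full-, half-, and one-third-speed L2 designs described above.

```python
def cache_clock_mhz(core_mhz: float, divisor: int) -> float:
    """Clock rate of a cache that runs at core_mhz / divisor."""
    return core_mhz / divisor


def cache_cycle_ns(core_mhz: float, divisor: int) -> float:
    """Cache cycle time in nanoseconds (1 / MHz x 1000)."""
    return 1000.0 / cache_clock_mhz(core_mhz, divisor)


if __name__ == "__main__":
    core = 600.0  # hypothetical core clock in MHz
    for divisor, label in [(1, "full speed"), (2, "half speed"), (3, "one-third speed")]:
        mhz = cache_clock_mhz(core, divisor)
        ns = cache_cycle_ns(core, divisor)
        print(f"{label:>15}: {mhz:6.1f} MHz, {ns:.2f} ns per cycle")
```

Each step down in cache clock lengthens every cache cycle by the same factor, which is the arithmetic behind the advantage of on-die, full-core-speed L2.
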
The key to understanding both cache and main memory is to see where they fit in the overall system architecture. See Chapter 4, "Motherboards and Buses," for diagrams showing recent systems with different types of cache memory. Table 6.2 illustrates the need for and function of cache memory in modern systems.

Cache designs originally were asynchronous, meaning they ran at a clock speed that was not identical to or in sync with the processor bus. Starting with the 430FX chipset released in early 1995, a new type of synchronous cache design was supported. It required the cache chips to run in sync with (at the same clock timing as) the processor bus, further improving speed and performance. Also added at that time was a feature called pipeline burst mode, which reduces overall cache latency (wait states) by allowing single-cycle accesses for multiple transfers after the first one. Because synchronous and pipeline burst capability arrived together in new modules, specifying one usually implies the other. Synchronous pipeline burst cache allowed for about a 20% improvement in overall system performance, which was a significant jump.

The cache controller for a modern system is contained in either the North Bridge of the chipset, as with Pentium and earlier systems, or within the processor, as with the Pentium II, Athlon, and newer systems. The capabilities of the cache controller dictate the cache's performance and capabilities. One important thing to note is that most external cache controllers have a limitation on the amount of memory that can be cached. Often, this limit can be quite low, as with the 430TX chipset-based Pentium systems. Most original Pentium class chipsets such as the 430FX/VX/TX can cache data only within the first 64MB of system RAM. If you add more memory than that, you will see a noticeable slowdown in system performance because all data outside the first 64MB is never cached and is always accessed with all the wait states required by the slower DRAM. Depending on what software you use and where data is stored in memory, this can be significant.
For example, 32-bit operating systems such as Windows load from the top down, so if you had 96MB of RAM, the operating system and applications would load directly into the upper 32MB (past 64MB), which is not cached. This results in a dramatic slowdown in overall system use. The solution is to remove the additional memory to bring the system total down to the cacheable limit of 64MB. In short, it is unwise to install more main memory than your system (CPU or chipset) can cache.

Chipsets made for the Pentium Pro/II and later processors did not control the L2 cache because it was moved into the processor instead. So, with the Pentium Pro/II and beyond, the processor sets the cacheability limits. The Pentium Pro and some of the earlier Pentium IIs can address up to 64GB but only cache up to 512MB. The later Pentium IIs and all Pentium III and Pentium 4 processors can cache up to 4GB. Most desktop chipsets for those processors allow only up to 1GB, 2GB, or 4GB of RAM anyway, making cacheability limits moot. All the server-oriented Xeon processors can cache up to 64GB, which is beyond the maximum RAM support of any of the chipsets. In any case, it is important not to install more memory than the cache controller can support. If you want to know the cacheability limit for your system, consult the chipset documentation if you have a Pentium class or older system (or any system with cache on the motherboard), or check the processor documentation if you have a Pentium II class or newer system (or any system with all the cache integrated into the CPU).
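
The 96MB example can be expressed as a small calculation. This Python sketch is a simplification under stated assumptions: it treats the operating system and applications as one contiguous block loaded at the very top of installed RAM, it takes the 32MB size of that block from the example, and the function names are hypothetical. It then counts how much of the block lies above the chipset's cacheable limit.

```python
MB = 1024 * 1024


def uncached_bytes(start: int, length: int, cacheable_limit: int) -> int:
    """Bytes of the range [start, start + length) at or above cacheable_limit."""
    end = start + length
    return max(0, end - max(start, cacheable_limit))


def top_loaded_uncached_fraction(installed: int, footprint: int,
                                 cacheable_limit: int) -> float:
    """Fraction of a block loaded at the top of RAM that falls outside the cache."""
    start = installed - footprint  # top-down loading: block ends at the top of RAM
    return uncached_bytes(start, footprint, cacheable_limit) / footprint


if __name__ == "__main__":
    limit = 64 * MB   # 430FX/VX/TX-style cacheable limit from the text
    work = 32 * MB    # assumed size of the OS and applications
    for installed in (64 * MB, 96 * MB):
        frac = top_loaded_uncached_fraction(installed, work, limit)
        print(f"{installed // MB}MB installed: {frac:.0%} of the working set uncached")
```

Under these assumptions, adding 32MB beyond the limit moves the entire working set out of the cacheable region, which matches the dramatic slowdown described above.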
