Creating a Disaster Recovery Plan

Planning for the unthinkable is not as difficult as it may seem. Computers and networks are not fickle creatures. They'll do what they're told until some event occurs that disrupts them. A disaster recovery plan is really just a matter of creating a list of standards and practices and adhering to it. If you fully understand your computing environment, what it is doing, and how it was created, you should be able to re-create the setup (or a portion of it) without going insane. Unfortunately, there is no way to gauge what is appropriate for any given person's situation, so the following rules may be a subset (or even a superset) of what you should lay down for your systems and network.

Standardization

Standardize, if possible, on a single hardware and software platform. Obviously, if you're reading this book, you probably want to standardize on the combination of Macintosh/Mac OS X.

In many ways, you have it much easier than your Windows counterparts. There is only a single source for Macintosh hardware (Apple), and an installation of Mac OS X (on, say, an external Firewire hard drive, such as your iPod, of course!) can be used to boot any of the existing compatible hardware. Reviving a server that is failing for hardware reasons, such as a dying network card or power supply, is often a matter of removing the drive from one machine and placing it in another. In the Windows world, unless you have identical hardware, trying a similar trick will likely play havoc with the computer as it busily tries to reconfigure itself for different motherboards, I/O controllers, video, and so on.

NOTE: Although standardizing on a platform will greatly improve the rate at which you can recover from a serious problem, it may introduce other issues. If an exploit is discovered that affects your platform of choice, it could be carried out against your entire network infrastructure. If support and equipment costs permit, implementing redundancy on multiple server platforms (Apache on Mac OS X and Apache on Linux, and so on) will help improve network resiliency.

Storage

Macintosh users, however, are still susceptible to hardware inconsistencies between models. The transition from SCSI to IDE has been a heartbreak for some, while a welcomed cost-saving measure for others. Apple's recent introduction of the Xserve, based on IDE drives, cements their belief that IDE technology is a viable alternative for server platforms. Those who believe otherwise are likely to stick with tower-based servers with build-to-order SCSI support.

NOTE: Visit http://www.scsi-planet.com/vs/ for links to a number of SCSI versus IDE comparisons and evaluations.

Indecisiveness when choosing a storage standard often leads to "evaluating" a few standards (Firewire, IDE, SCSI) on different machines. Unfortunately, the end result of this approach is that none of the storage media is interchangeable. Set and maintain a storage standard for all your systems. Being able to swap media from a failing computer to one that is stable is a Macintosh advantage that system administrators should recognize and utilize.

Operating System

Okay, you've standardized on Mac OS X. Now what? And what does "standardized on Mac OS X" actually mean? Mac OS X 10.0? 10.1? 10.1.5? Jaguar? Mac OS X Server? Mac OS X had been available for almost a year and a half when this book started to be written. In that time, there have been over half a dozen releases, including two major updates, and the introduction of Mac OS X Server.

Although operating system standardization doesn't mean that you have to be running the latest version of Mac OS X, it does require that you run a standard release on each of your computers with the latest security patches, and that you can easily re-create the base system at a moment's notice. Create a patch CD that contains all the Apple system and security updates that have been released since the system version that you have on CD. Although these updates are usually installed with the Apple System Updater, you can download the packages directly from http://www.info.apple.com/support/downloads.html or use the Download Checked Items to Desktop option in the Updater's Update menu. Keeping all the updates (along with any specialized drivers you may need) in one place (such as on a CD or AppleShare server) will make reinstalling much less of a hassle.

TIP: You may want to consider joining one of Apple's Developer programs, http://developer.apple.com/membership/descriptions.html. Regardless of whether you actively develop for the Macintosh platform, the developer programs offer prerelease system software for testing, the latest (patched) versions of the system software on CD, and discounts on hardware and support services.

Utilize RAID and Journaling

Mac OS X supports two important technologies for maintaining data integrity: RAID and HFS+ Journaling. RAID, in its mirrored form, provides a safety net for drive failures by writing information to two disks simultaneously. Journaling, on the other hand, stores a database of "change" information as data is written to a disk. If a power failure or crash corrupts the system, the database can be used to reconstruct the last "working" version of the data on the drive. Both these technologies are built into Mac OS X and are accessed in the Disk Utility application.

To activate RAID support, you must start with two identical disks in your computer. These disks will be combined into an array and appear to your system as a single drive. If you're planning to use the RAID array for your system installation, you must boot from your installation CD and run Disk Utility from the Installer menu.

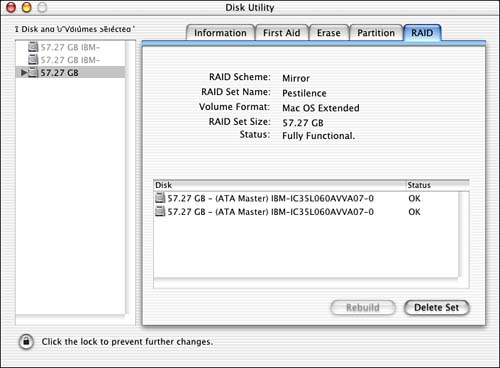

Start the Disk Utility (/Applications/Utilities/Disk Utility) and click the RAID tab, as shown in Figure 20.1.

Figure 20.1. RAID support can increase the reliability of your system.

RAID support is available in one of two "flavors":

- Mirroring (level 1). Mirroring creates an exact duplicate of data on a second drive. If one disk fails, the second disk takes over. The failed disk can then be replaced without loss of information.

- Striping (level 0). Data is written in "stripes" across both disks. There is no data redundancy; the benefit lies in the speed at which data can be written and read because the drives can operate in parallel.
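The difference between the two schemes can be illustrated with a toy model. This is only a sketch: the function names and the list-of-blocks representation are invented for illustration and have nothing to do with how Disk Utility actually implements RAID. A failed disk is represented by None.

```python
"""Toy model of two-disk mirroring and striping (illustrative only)."""


def write_mirrored(blocks):
    """Mirroring: every block is written to both disks."""
    return list(blocks), list(blocks)


def write_striped(blocks):
    """Striping: blocks alternate between the two disks."""
    return list(blocks[0::2]), list(blocks[1::2])


def read_mirrored(disk_a, disk_b):
    """Return the data from whichever mirror half survived (None = failed)."""
    survivor = disk_a if disk_a is not None else disk_b
    if survivor is None:
        raise IOError("both disks failed")
    return list(survivor)


def read_striped(disk_a, disk_b):
    """Interleave the stripes; losing either disk loses the whole volume."""
    if disk_a is None or disk_b is None:
        raise IOError("a striped set cannot survive a disk failure")
    data = []
    for i in range(len(disk_a)):
        data.append(disk_a[i])
        if i < len(disk_b):
            data.append(disk_b[i])
    return data


# Hypothetical data: five blocks written with each scheme.
blocks = ["b0", "b1", "b2", "b3", "b4"]
mirror_a, mirror_b = write_mirrored(blocks)
stripe_a, stripe_b = write_striped(blocks)
```

Calling read_mirrored(None, mirror_b) still returns every block, whereas read_striped(None, stripe_b) raises an error. That is the trade-off in miniature: the mirror spends a whole disk on redundancy, and the stripe spends nothing on it.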

Drag the two disks to use in the array from the list on the left of the window into the "Disk" list on the right. Use the RAID Scheme pop-up menu to choose between mirroring and striping (mirroring provides the data security we're going for). Choose a name for the set. (This is the volume label of the disk that will appear on your desktop.) Finally, choose the file system type and click Create. After a few moments the RAID array is created and appears as a new single disk on the system. You can use this like any other disk, with the added benefit of data redundancy.

You enable journaling support from the command line in Mac OS X by using the syntax diskutil enableJournal <mount path>. For example, to enable Journal support on your root disk, you would type the following as root:

# diskutil enableJournal /

File System Layout

As was discussed in Chapter 1, "An Introduction to Mac OS X Security," you should do what you can to use a standardized Unix file system layout (third-party software is installed in /usr/local, partitions are used where possible, and so on). If necessary, you could reinstall Mac OS X on top of a properly configured file system and not replace any of the additional software you may have installed. The goal of disaster recovery is to restore your computer to a working state with as little trouble as possible. If that means spending a bit more time when you first set up your system, so be it.

TIP: As hinted at here, Mac OS X can be reinstalled on a partition without destroying the existing data. On a system that has failed or will no longer boot, you can rerun the Mac OS X Installer without erasing the destination drive. The operating system is installed, preserving user accounts and any additional software that was not part of the original system installation. If, however, you have upgraded versions of applications that are included on the CD (Apache, sendmail, and so on), these are replaced with what came on the CD.

Create a Division of Services

Although budget constraints may limit the extent to which you can divide the services you offer, you should still consider it a best-practices measure to be implemented whenever possible. If you run mail, Web, and AppleShare services, it's in your best interest to keep them on separate machines. Small companies can often get away with keeping everything on a single server, but they risk losing everything should a single service fail or be compromised.

For example, storing your internal development and work files on your public Web server opens them to the possibility of being read, modified, or removed by external forces beyond your control. Keep in mind that no matter how many patches you've applied, and how well you've kept up with the security bulletins, software is rarely perfect. Something inevitably comes along with the potential of breaking even the most meticulously protected system. To lose your and your co-workers' documents or email, and perhaps expose private information, just because a previously unknown PHP bug is discovered is simply unacceptable.

SCARY, ISN'T IT? PART III

By keeping as few services running on a particular machine as possible, you greatly reduce the risk of any given service failing at any given time. An example of the worst-case scenario occurred recently when a business was making a move from one building to another. Very little investment had been made in the company's server, and they had, in fact, made the mistake of placing all their eggs in one basket. The server being transported handled file serving, database, Web, and email. On the short trip from building to building, the jostling damaged the computer's drives. When it was plugged back in, it failed. The first reaction was "We need to get a list of the customers so we can notify them that there is a problem." Unfortunately, the current customer list was in a database, stored where? On the failed server. After much fumbling, written invoices were produced to gather the customer list. The next lament was, "I'm trying to email everyone, but it can't connect to the email server." Sadly, the DHCP server required to configure the internal network was also on the primary server, as was the mail server itself. In the end, after configuring all the network settings by hand, email was sent from various personal accounts (Jotmail, AOL, and so on) to clients to inform them of what had happened. Think of the PR nightmare of explaining this scenario to your customers. This is yet another example of poor planning, poor implementation, and poor recovery.

Division of services makes sense not only from the perspective of disaster recovery, but also from the standpoint of service scalability. Dedicated servers offer more scalability than a combination of services because they are not competing for resources. A file server, for example, makes a poor companion for a Web server. Both can quickly become bound by the performance of the I/O systems, where they will be in perpetual competition. A combination file/DHCP server, however, is workable because DHCP is not I/O bound (unless you have a very large network with extremely low lease times).

In addition to dividing services between machines, you should also consider making a division between internal and external services. Unnecessarily exposing internal network services (file server, intranet Web, and so on) to the Internet is an invitation for trouble. Internal services should, if possible, not have a viable route to the Internet, or should be firewalled at the very least.

Write Documentation

One of the most important disaster recovery precautions is creating appropriate system and infrastructure documentation. Although certainly less exciting than actually building your servers and network, documenting their components is just as important. How many people in your organization are familiar with your systems? How many could take over if something happened to you? Although the obvious solution is to cross-train employees, in reality, this is rarely completely possible. Employees specialize in different areas and it is a rare person who can simply serve as a "drop-in" replacement for another.

I've personally run into a situation where a network's administrator wasn't present when the network went offline. Although I understood the network, the problem was between the ISP and the building; diagnosis required an invasive test, initiated by the ISP. Unfortunately, the ISP required that trouble-tickets be created through a certain phone number (the administrator's cell phone) or that they be supplied with a personal password (of course, known only to the administrator). In this case, the network and computer systems were well documented, but a few pieces of information that normally wouldn't be needed were not available.

Specifically, you should keep tabs on the following:

- Network Topology. Networks aren't usually difficult to understand. For this reason, many people don't properly document their network wiring, switch configuration, and so on. Unfortunately, over time, networks tend to grow and evolve. When forced to re-create a network from scratch, it is often impossible to remember what goes where, and more importantly, why. Take the time to map your network properly. For some help, you may be interested in InterMapper (http://www.intermapper.com/) for mapping, autodevice discovery, and monitoring, or OmniGraffle (http://www.omnigroup.com/applications/omnigraffle/) for mapping only.

- Service Providers. Most people are not their own ISPs, nor their own power generators, nor telephone companies. These services are critical for communicating with your clients and users, keeping your services online, and providing a useful network environment for everyone. Create a list of contact information for reporting failures and monitoring service status. Make sure that this list is distributed among multiple support people outside your organization and that people understand when it should be used.

- Hardware and Software Inventories. For each server, you should keep a list of the software, services provided, and instructions on how the applications were installed and configured. Like networks, software configurations tend to change over time. Any modifications should be logged to the inventory at the time they are made, not after the fact.

- Procedures. Document routine procedures that may seem like common sense to you, but may not be known to others, such as creating backups and restoring, running disk maintenance software, performing network diagnostics, and so on. Obviously, these depend on the nature of your operation, but you should do everything possible to empower others to fix trouble should it occur. (Note: If you're concerned about job security because of documenting everything you do, consider what people might think if you don't document anything!)

- Clients and Users. Last but not least, document your clients and users: anyone who will need to know when there is a disruption in service. Unfortunately, in times of stress, end users are often the last to hear what is going on. You'll find that people are infinitely more understanding if they are kept informed of problems instead of having to discover them on their own.

When documenting your system, take the time to create print copies of all instructions. Commenting within configuration files is a good practice during routine system maintenance, but in the case of complete data loss, electronic documentation will be of little use. A copy of your printed documentation should also be stored offsite, guarding against catastrophic failure.

Of course, complete data loss should never happen, because of the final component of a well-rounded disaster recovery plan: backups.

Develop a Backup Schedule

The most important part of any disaster recovery plan is creating a backup schedule and following through on it. Backups should enable you to quickly restore a computer's settings without requiring a complete reinstall and setup. Additionally, a copy of all backups should be archived offsite; if an event occurs that can destroy your servers, it's a good bet that it will also take out your local backup systems. I recommend looking into an NAS (Network Attached Storage) unit for a remote backup solution that is simple to use and maintain. Quantum's SNAP server, for example, can be ordered in RAID configurations of hundreds of gigabytes. Hosting the NAS unit at a remote site or your ISP gives you the security of an offsite backup and (for the most part) worry-free operation.

Macintosh Backups

Running backups on Mac OS X is one of the more un-Mac-like tasks that can be undertaken on the operating system. The trouble stems from a difference between how the Mac has traditionally stored its files versus the standard Unix file system. The native Mac OS X file system, HFS+, stores many files as two separate forks: the resource fork and data fork. The resource fork stores menu and window definitions, icons, and other elements that can easily be changed even after a program is compiled. The data fork contains the "meat" of the file and is the only portion that can be read by most of the standard BSD command-line utilities. Copying a file with both data and resource forks by using cp effectively strips the resource fork from the file. In most cases the data fork cannot stand on its own, so the duplicate file is useless. Apple is slowly migrating to a bundle (basically folders of flat files) architecture, but until the entire system is migrated and third-party developers catch up, resource forks must still be taken into account.
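Before trusting a fork-unaware tool with a directory tree, it can help to find out which files actually carry a resource fork. The sketch below is one hedged way to do that. It assumes the fork is exposed at the special path "<file>/..namedfork/rsrc", which is the convention on later Mac OS X releases; the function names are invented for illustration. On a system without resource forks the lookup simply fails and the file is treated as having none.

```python
import os


def resource_fork_size(path):
    """Return the size in bytes of a file's resource fork, or 0 if none.

    Assumption: the fork is reachable at "<path>/..namedfork/rsrc".
    Where that path does not exist, the lookup raises OSError and the
    function reports 0.
    """
    try:
        return os.path.getsize(os.path.join(path, "..namedfork", "rsrc"))
    except OSError:
        return 0


def files_with_resource_forks(root):
    """Walk a directory tree and list the files that carry a resource fork."""
    flagged = []
    for folder, _subdirs, names in os.walk(root):
        for name in names:
            full = os.path.join(folder, name)
            if resource_fork_size(full) > 0:
                flagged.append(full)
    return sorted(flagged)
```

Any file this reports would lose information if copied with a tool that reads only the data fork, so those are the files to handle with a fork-aware backup method.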

Backup Strategies

The next section looks at the available built-in options for backing up and archiving information, both flat files and files with resource and data forks. Before deciding how you'll perform your backups, however, you need to come up with a backup strategy. This, like so many other things we've discussed, is dependent on the services that a given computer is providing.

A Web server that is updated twice a year presents a very different backup scenario than a file server that changes every single day. In the case of the Web server, you'd be best off archiving the Web site after each update. With Mac OS X's built-in CD burning, you can simply copy the contents of the Web site(s) to the CD. A file server likely contains more than can fit on a single CD-ROM, and is in a constant state of flux. Here, a backup of only what has changed is more appropriate and will save you both time and backup media.

Backups generally fall into two categories: full and incremental. A full backup is an exact duplicate of everything within your file system (or a branch thereof, such as /Users or /usr/local). From a full backup you can quickly restore the state of all the backed up files as they existed at the time the backup was made. Full backups are time consuming (all files must be copied each time the backup is run) and cannot efficiently be used to store multiple versions of files over an extended period of time. If, for example, you want to have a copy of each day's updates to your fileserver for the period of a year, you would need to make 365 full copies of each of the files; this can quickly add up in terms of storage media. Full backups are usually reserved for mostly static information that can be copied and stored.
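To put rough numbers on that storage cost, the following sketch compares a year of daily full copies with a single full copy followed by daily copies of only the files that changed. The server size and the daily change rate are hypothetical figures chosen purely for illustration, and the function names are invented.

```python
def full_backup_storage(data_gb, days):
    """Storage needed when a complete copy is kept for every day."""
    return data_gb * days


def changed_only_storage(data_gb, days, daily_change_fraction):
    """One full copy, then only the changed files for each remaining day."""
    return data_gb + data_gb * daily_change_fraction * (days - 1)


# Hypothetical figures: a 20 GB file server, 2% of its data changing daily.
print(full_backup_storage(20, 365), "GB for daily full backups")
print(changed_only_storage(20, 365, 0.02), "GB for changed-files-only backups")
```

Under these assumptions the daily full copies need several terabytes, while the changed-files approach stays well under 200 GB. The gap narrows as the daily change rate rises.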

An incremental backup, on the other hand, is used to archive file systems that aren't static. You start with a single full backup, and then periodically back up the files that have changed since the full backup took place. Incremental backups can take place at multiple "levels," with each level backing up only the files that have changed since the preceding level was backed up.
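The core of any incremental scheme is deciding which files have changed since an earlier backup. A minimal sketch of that selection step follows, assuming that a file's modification time is a good enough signal of change. The function name is invented for illustration, and real backup tools use more careful bookkeeping than this.

```python
import os


def files_changed_since(root, last_backup_time):
    """List files under root modified after last_backup_time.

    last_backup_time is in seconds since the epoch, the same unit
    returned by time.time() and os.path.getmtime().
    """
    changed = []
    for folder, _subdirs, names in os.walk(root):
        for name in names:
            full = os.path.join(folder, name)
            try:
                if os.path.getmtime(full) > last_backup_time:
                    changed.append(full)
            except OSError:
                # The file vanished or is unreadable; skip it in this sketch.
                continue
    return sorted(changed)
```

Recording the time at which each backup starts, and passing it to the next run, yields the list of files the next incremental backup needs to copy.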

For example, the BSD dump command creates backups at levels from 0 to 9. A backup with a level of 0 is a full backup, and the subsequent levels can be assigned arbitrarily depending on how you want to structure your backup system. Many people work with a monthly, weekly, daily model. In this case, a full backup is made to start (level 0). On a monthly basis, the files that have changed since the last full backup are added (level 1). Weekly, the files that have changed since the last monthly backup are added (level 2). And, finally, daily, the files that have changed since the last weekly backup are added (level 3).

To restore the most recent set of files from an incremental backup, one would first restore the most recent level 0 dump (full), then the most recent level 1 dump (monthly), followed by the current level 2 dump (weekly), and finally the current day's level 3 dump. Following this model of daily/weekly/monthly incremental backups enables you to restore the file system to its exact state at the time the backup was performed for any given day. Of course you'll need to keep every single one of these backup sets to do that, but the amount of storage required to do this is significantly less than if a full backup were made every day. (The preceding statement is usually true. If all of the files change every day, there is no difference between an incremental backup and a full backup. This, however, is very, very unlikely.)
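The restore order described above can be worked out mechanically from a history of dumps. The following sketch assumes each dump is recorded as a (label, level) pair in chronological order; the labels and the function name are hypothetical, and the code only models the level logic rather than driving dump or restore themselves.

```python
def restore_chain(history):
    """Return the dumps to restore, in order, to reach the latest state.

    history is a chronological list of (label, level) tuples. A dump at
    level N holds whatever changed since the most recent earlier dump at
    a lower level, so the chain is found by walking backward from the
    newest dump and keeping each dump whose level is lower than the last
    one kept, stopping at the full (level 0) dump.
    """
    chain = []
    needed_below = None  # keep only dumps with a level below this value
    for label, level in reversed(history):
        if needed_below is None or level < needed_below:
            chain.append((label, level))
            needed_below = level
            if level == 0:
                break
    if not chain or chain[-1][1] != 0:
        raise ValueError("no full (level 0) dump in history")
    return list(reversed(chain))


# Hypothetical history following the monthly/weekly/daily model in the text.
history = [
    ("full", 0),
    ("month-1", 1),
    ("week-1", 2),
    ("day-1", 3),
    ("day-2", 3),
    ("week-2", 2),
    ("day-3", 3),
    ("day-4", 3),
]

for label, level in restore_chain(history):
    print(f"restore {label} (level {level})")
```

For this history the chain is the full dump, the monthly dump, the second weekly dump, and the last daily dump. The earlier weekly and daily dumps are not needed for the latest state, although they must still be kept if you want to restore to an earlier day.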

NOTE: When working with dump, the level numbers can be arbitrarily assigned between 1 and 9, as long as you understand that higher numbered dumps back up whatever has changed since the next available lower dump. The assignment of levels 1, 2, and 3 in this example could just as well have been 2, 5, and 8. Only level 0 (full backup) has a specific purpose. Other incremental backup tools, such as Retrospect, offer a more visual, user-friendly approach to incremental backups, so if this seems confusing, don't worry; it isn't the only option. The incremental backup "philosophy" is shared among many backup tools, but the verbiage of the documentation may vary between products.

WHAT BACKUP MEDIA SHOULD I USE?

Like so many other choices you make, there are advantages and drawbacks to the different types of backup media you use with your system.

Optical media, such as CD-ROMs, are inexpensive, durable, and easy to use. Unfortunately, they limit you to roughly 680MB per disc. With the base Mac OS X install itself occupying more than the capacity of a single CD, this can be a somewhat painful solution for doing complete system backups. CD carousels are one solution to the problem, but may not be priced within reach. DVD-R and DVD-RW offer greater storage capacities, but still well below that of today's hard drives.

Modern DAT systems are economical, have very large capacities, and are remarkably fast, but the potential for media failure is great. Tapes should be maintained in a temperature- and humidity-controlled environment. I've been witness to many failed restores because tapes were either mishandled in storage or left beside someone's CRT and inadvertently demagnetized. Tapes are the best high-capacity storage medium available, but must be cared for appropriately.

Disks, whether in the form of NAS (Network Attached Storage), Firewire drives, SCSI, and so on, have only recently become high enough in capacity and cheap enough in cost to be an effective backup medium. Although disks are far more expensive than optical media or tapes, you can use them effectively for periodic backups without spending thousands of dollars.

Choose what you can afford and what is needed to get the job done. You must have backups for effective disaster recovery; this is not a good place to skimp.
