FMEA: Failure Modes and Effects Analysis

| Much has been written about the analysis of failure modes and their effects (FMEA) for hardware design, development, and manufacture. Here we want to apply this proven, effective technology to software design and development. A system integrating both software and hardware functionality may fail due to the capabilities of the software component or components that are invoked by external control, by a user, or by another device. Software may also fail when used in an unfamiliar environment or by an inexperienced, impatient, or clumsy user. A software failure is defined by many designers as a departure from the expected "output" result or action of a program's operation, which differs from the requirement or specification. The program may either run or fail to run when called upon. This failure may be due to user or "pilot error" (referred to as "smoke in the cockpit" by software designers, who have little faith in the end user's ability to operate software properly), by a hardware or network communications error, or by an actual problem (defect or bug) in the software itself. Hardware design is often constrained by engineering trade-offs that can be totally overcome only by a cost-is-no-object approach, which is rarely used, for obvious reasons. Software design is constrained by the same cost and time factors as hardware design projects but is not so vulnerable to the need for intrinsic functional capability or engineering trade-offs common in hardware design. Software design is done in more of an "anything is possible" context, so there is a great temptation for the designer to multiply functions beyond what the end user needs or actually requested. This leads to the same risk that an overly complex machine design would have. Software designers and programmers often must be reminded of the rule that mechanical designers try to follow: "Any part that is not designed into this machine will never cause it to fail." In other words, the programmer's chief canon of either elegance or reliability is simplicity! Unlike building rococo late-medieval cathedrals, writing reliable computer programs is rocket science! The rocket designer quickly learns that the weight of any feature added to the rocket increases the rocket's final weight by 10 times the weight of the added feature. Computer programming does not suffer such a severe penalty for redundancy or gratuitous functionality, but simplicity and economy of program expression nonetheless are very highly desired. Curiously, FMEA literally is rocket science, because it began with the German V1 rocket bomb. The first models of this weapon were notoriously unreliable, but the development of the first version of FMEA and its application eventually produced a success rate of 60%. That doesn't sound very impressive by today's quality standards, even for rockets, but the V1 was the first production rocket vehicle. What became FMEA in the American aerospace industry came with the German rocket scientists and their second model, or version V2, which was the basis of the later American Redstone rocket.[1] Here we will begin with a review of FMEA applied to hardware and examine the potential for its use at each stage of the DFTS methodology described in Figure 2.6. FMEA is a systematic step-by-step process that predicts potential failure modes in a machine or system and estimates their severity. The initial design can then be reviewed to determine where and how changes to the design (and, in the case of mechanical systems, operations, inspection, and maintenance strategies as well) can eliminate failure modes or at least reduce their frequency and severity. FMEA is also sometimes applied to identify weak areas in a design, to highlight safety-critical components, or to make a design more robust (and expensive) but less costly to maintain later when in service.[2] Some of FMEA's key features and benefits are not applicable to software design. However, those that are will be very beneficial in our effort to build quality and reliability into software as far upstream in the design and development process as possible. An extension of FMEA called FMECA adds a criticality analysis. It is used by the Department of Defense and some aerospace firms. The criticality analysis ranks each potential failure mode according to the combined influence of its severity classification and probability of failure based on the data available. Criticality analysis is used for complex systems with many interdependent components in mission-critical scenarios. It is not used by the automotive industry, as is the basic FMEA, and it is not generally applicable to enterprise software design and development. Functional FMEA is normally applied to systems in a top-down manner in which the system is successively decomposed into subsystems, components, and ultimately units or subassemblies that are treated as the "black boxes" that provide the system with essential functionality. This approach is very well suited to analyzing computer programs for business applications, which naturally decompose in a hierarchical manner, right down to the fundamental subroutines, functions, or software objects. (These elements are also referred to as "black boxes" by software designers and testers.) The hardware reliability engineer considers the loss of critical inputs, upstream subassembly failures, and so on for the functional performance of each unit. This helps the engineer identify and classify components as to the severity of their failure modes on overall system operation. There also exists a dual or bottom-up FMEA approach that is not as applicable to software systems design. Much has been written on FMEA, and we could quote many authors and as many variations of procedure, but a good basic paradigm is that of Andrews and Moss,[3] which we repeat here as a seven-step process:

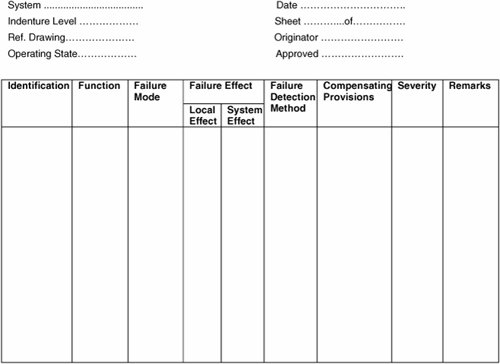

You can surely already detect the aroma of a typical mechanical or civil engineering process that has been "reduced to code" as the saying goes. FMEA is supported by basic tutorial manuals giving all the forms and instructions on how to fill them out and perform an analysis,[4] by training courses teaching automotive engineers the technology,[5] and by handbooks that help the engineer keep the many details of the process in mind.[6] We do not need to go through a sample analysis here, because much of it is not applicable to software, but it is useful to show a typical FMEA worksheet such as that shown in Figure 13.1. Figure 13.1. A Typical FMEA Analysis Worksheet As you can see from this worksheet, the difficult stage is determining failure severity and its frequency or range. Because the system is typically under design, the analyst has no way to assign precise values, but he or she can deal with subjective estimates and ranges. For example, severity in mechanical systems and continuous processes can be estimated as follows:

Range estimates of failure probability for mechanical systems are typically as follows:[7]

These levels of criticality and ranges are typical of large mechanical systems but are not directly applicable to the reliability of enterprise application software. We need to develop a more dynamic range in the middle of the severity scale, because real application software reliability problems run from major to critical. Catastrophic errors are of a serious business-risk nature that would hopefully be caught during an audit. The failure frequency ranges seem benign by software standards. High in our field would be several times a day and very low something that occurred once in ten years. Recently such an error came to the attention of one of the authors when an accounts-payable module that had run without problems for ten years blew up when in use by a large construction company. The vendor's testing expert coded a stress test to discover the problem and let it run all night to perform 250,000 transactions. The next morning the run had finished without error. The next evening he ran it 500,000 times and discovered the problem. A storage leak in a C program module had returned to the storage manager one fewer byte than it had requested for each transaction. The error did not produce an application failure until the program was used by a very large international construction company that purchases many thousands of items. This would rate as a minor problem with a low frequency of occurrence on the typical FMEA scales, but it would be a very rare combination of circumstances for software errors. |

EAN: 2147483647

Pages: 394