10.2 The Technology Behind IP Storage Data Replication

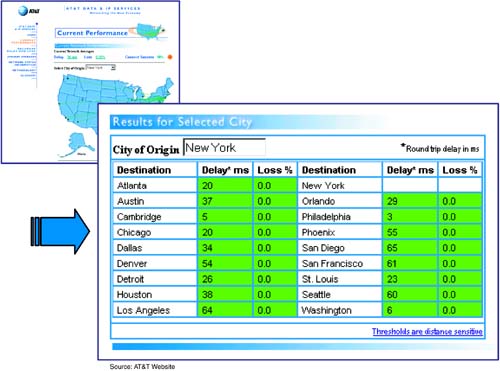

Today, virtually all high-speed networks use fiber-optic cables to transmit digital voice and data. While the speed of light in a vacuum is about 186,000 miles per second (300,000 km/s), the index of refraction of typical single-mode fiber-optic cable slows light to roughly two-thirds of that speed. A convenient, slightly conservative rule of thumb is about 100,000 miles per second, or 100 miles per millisecond. In other words, if the primary and backup data centers within a metropolitan area were 50 miles apart, the 100-mile round trip would take about a millisecond. Conventional Fibre Channel switches can tolerate a millisecond of delay without much performance degradation, but locations that can be reached within a millisecond may not be far enough from the primary data center to escape the same disaster, which defeats the purpose of the backup data center.

Consequently, many IT professionals want to transfer block-mode storage data beyond the metropolitan area to other cities. The backup center may also serve as a centralized data replication site for data centers in other cities that are much farther away. Taken to the extreme, those distances could reach thousands of miles, resulting in tens of milliseconds of round-trip latency. For example, data would take about 50 ms to travel over fiber-optic cable on a 5,000-mile round trip across the United States or Europe. This transmission latency is called propagation delay.

Added to that delay is the latency of the switches, routers, or multiplexers in the transmission path. Today's optical multiplexers add very little latency, and Gigabit Ethernet switches add only tens of microseconds (a small fraction of a millisecond), but some routers can add a few milliseconds of latency to the data path. Modern routers, however, are much faster than their predecessors. For example, in a test conducted by the Web site Light Reading, the maximum latency of a Juniper M160 router with OC-48c links was about 170 microseconds.
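The propagation arithmetic above can be checked with a few lines of Python. This is a minimal sketch that assumes the text's rule of thumb of 100 miles per millisecond in fiber; the function name `round_trip_propagation_ms` and the constant `MILES_PER_MS` are hypothetical, chosen only for illustration.

```python
# Assumption: the text's rule of thumb that light in single-mode fiber
# covers roughly 100 miles per millisecond (about 100,000 miles per second).
MILES_PER_MS = 100.0


def round_trip_propagation_ms(one_way_miles: float, miles_per_ms: float = MILES_PER_MS) -> float:
    """Round-trip propagation delay, in milliseconds, over a fiber path of the given one-way length."""
    if one_way_miles < 0:
        raise ValueError("distance must be non-negative")
    # The signal covers the distance twice: out to the remote site and back.
    return 2 * one_way_miles / miles_per_ms


if __name__ == "__main__":
    # Metro backup site 50 miles away (a 100-mile round trip).
    print(f"metro, 50 miles: {round_trip_propagation_ms(50):.1f} ms")
    # Cross-country path of 2,500 miles each way (a 5,000-mile round trip).
    print(f"cross-country, 2,500 miles: {round_trip_propagation_ms(2500):.1f} ms")
```

The two cases reproduce the figures in the text: about 1 ms for the metropolitan backup site and about 50 ms for the 5,000-mile round trip. Passing a different `miles_per_ms` lets you substitute another fiber speed; 100 is a rule of thumb, not a measured constant.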
In a typical network, data would pass through two or three of these routers in each direction, adding only about a millisecond to the round trip. This form of latency typically is called switching delay, store-and-forward delay, insertion delay, or node delay.

The third type of latency is congestion delay, or queuing delay. As in highway design, shared network design primarily is a tradeoff between cost and performance. Highways with more lanes can carry more vehicles at full speed, but they are also more costly to build and are underutilized except during peak commuting periods. So taxpayers are willing to put up with a few extra minutes of driving time during peak periods to avoid the large tax increases needed to fund higher-capacity roads. Likewise, shared networks can be designed to handle peak data traffic without congestion delays, but their cost is much greater and their utilization much lower, so most organizations are willing to tolerate occasional minor delays. Within a building or campus network, where 100 Mbps or 1 Gbps of LAN traffic can be carried over relatively inexpensive twisted-pair cables and Gigabit Ethernet switches, the average network utilization may be only a few percent, even during peak hours. But MAN and WAN facilities are much more costly to lease or build, so network engineers typically acquire only enough capacity to keep the average MAN or WAN utilization below 25 to 50 percent. That amount of bandwidth leaves room for peaks of 4:1 or 2:1 over the average load, respectively, which generally is sufficient to handle peak periods without adding unacceptable queuing delays. As mentioned in the previous chapter, most service providers have installed much more capacity over the past few years by upgrading their routers and transmission links to multi-gigabit speeds. Those upgrades have dramatically improved the overall performance of their networks, reducing switching and queuing delays as well as error rates.
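The node-delay and capacity-headroom figures above follow from simple arithmetic, sketched here in Python. This is an illustration under stated assumptions: every router is taken to add the roughly 170-microsecond worst case quoted for the Juniper M160, and "headroom" is read as link capacity divided by average load. The function names are hypothetical.

```python
# Assumption: each router adds about 0.170 ms, the maximum latency quoted
# in the text for a Juniper M160 with OC-48c links.
PER_ROUTER_MS = 0.170


def node_delay_round_trip_ms(routers_each_way: int, per_router_ms: float = PER_ROUTER_MS) -> float:
    """Total switching (node) delay added to a round trip, in milliseconds."""
    if routers_each_way < 0:
        raise ValueError("router count must be non-negative")
    # Each router is traversed once on the way out and once on the way back.
    return 2 * routers_each_way * per_router_ms


def peak_headroom_ratio(avg_utilization: float) -> float:
    """Link capacity divided by average load: the largest peak-to-average
    ratio the link can carry before it saturates."""
    if not 0.0 < avg_utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return 1.0 / avg_utilization


if __name__ == "__main__":
    for routers in (2, 3):
        print(f"{routers} routers each way: {node_delay_round_trip_ms(routers):.2f} ms round trip")
    for util in (0.25, 0.50):
        print(f"{util:.0%} average utilization: {peak_headroom_ratio(util):.0f}:1 headroom")
```

With two or three routers each way, the sketch gives 0.68 to 1.02 ms, in line with the text's "about a millisecond." Keeping average utilization at 25 or 50 percent leaves 4:1 or 2:1 headroom for peaks, which is why the lower utilization target pairs with the larger ratio.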
For example, the AT&T IP network consistently delivers end-to-end latencies close to the propagation delay of light in fiber, with near-zero packet loss. The network's current performance can be monitored via the AT&T Web site at http://ipnetwork.bgtmo.ip.att.net/current_network_performance.shtml. Figure 10-2 shows the results of a query made at 1:30 p.m. Pacific Time on a typical Tuesday afternoon, near the nationwide peak of U.S. network loading.

Figure 10-2. IP network performance is close to light speed and packet loss is near zero. (Source: AT&T Web site)

As shown, most of the latency figures are quite impressive. Assuming a nominal propagation delay of one millisecond per hundred miles, the round-trip latency between New York and San Francisco would be about 59 ms (a 5,900-mile round trip), plus the delays attributable to the routers and whatever congestion queuing one would expect during this peak period. In this case, however, the round-trip latency was measured at only 61 ms, so the routers and congestion queuing together added only two milliseconds. With at least two routers (most likely more) adding latency in each direction, the congestion delay on this link, and on many of the others measured, must be negligible. Not all IP networks perform this well, but IP can handle far worse conditions; it originally was designed to tolerate the latencies of congested global networks, which in the early days could reach hundreds of milliseconds. TCP, which runs on top of IP, retransmits lost packets, so packet loss does not result in lost data, and it is resilient enough to provide highly reliable transfer of information. With the advent of the IP storage protocols, which adapt block-mode storage data to the standard TCP/IP stack, mass storage data now can be transmitted over hundreds or thousands of miles on IP networks. This new IP storage capability lets IT professionals make their data more accessible in three important ways:
