9.2 Options for Metropolitan- and Wide-Area Storage Networking

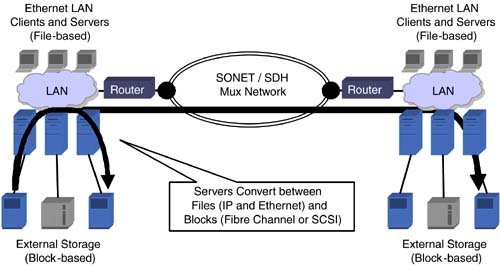

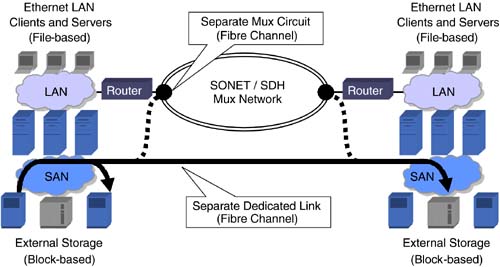

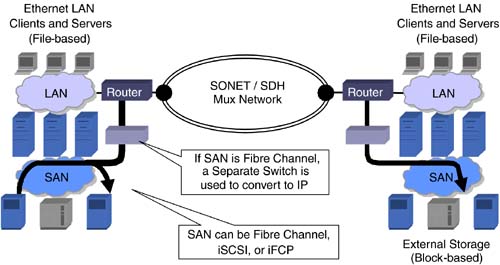

Although the proportion of data centers using SANs is growing, most mass storage devices are attached directly to servers or NAS filers via SCSI cables. Some direct-attached storage (DAS) has been converted from SCSI to Fibre Channel, even though SANs have not yet been deployed. Direct-attached disk arrays can be backed up to other arrays and tape libraries by sending the data back through the attached server, across the LAN, and through the target's attached server to the target storage device. DAS has the advantage of being simple, but it places an added load on the servers and the LAN, as they handle the same data twice (once to write it and again to replicate it). Performance may not meet the requirements of the application, since the servers must convert the more efficient block-mode data into file format for transmission across the LAN, then back again to block mode for storing on the target array or tape. Still, if the applications can tolerate the reduced performance, this approach also enables simple remote backups, because data that has been converted into file format uses Ethernet and IP as its network protocols. In most cases, as shown in Figure 9-3, file-based storage data can simply share the organization's existing data network facilities for transmission to other data centers in the metropolitan and wide areas.

Figure 9-3. File-based backup locally, via the LAN, and remotely, via the existing data network.

Data centers outfitted with SANs not only enjoy the benefits of any-to-any connectivity among the servers and storage devices, but also have better options for local and remote backup. The addition of a SAN enables LAN-free and serverless backups, and those functions can be extended within the metropolitan area via dedicated fiber, as shown in Figure 9-4.

Figure 9-4. Block-based backup locally, via the SAN, and remotely, via dedicated fiber or over the existing SONET network, using a separate circuit and Fibre Channel interface cards in the muxes.

As outlined in the previous section, dedicated fiber is generally costly, requires a lengthy installation process, and is not available at all locations. In those cases, it may be possible to run fiber a much shorter distance to the multiplexer nearest each data center and send the storage data over an existing SONET/SDH backbone network. This approach became possible with the advent of Fibre Channel interface cards for the multiplexers. These cards are similar to those developed for Gigabit Ethernet and, like the Ethernet cards, encapsulate the Fibre Channel frames to make them compatible with the SONET or SDH format for transmission between locations. In this case, the network demarcation point is Fibre Channel, so subscribers can simply connect their Fibre Channel SANs to each end of the network with no need for protocol conversion, as shown by the dashed lines in Figure 9-4.

There are, however, some drawbacks to this approach. As the transmission distance increases, it takes more time to move control messages and data between locations, as dictated by the laws of physics. Fibre Channel switches originally were designed for use within data centers, where transmission delays are measured in microseconds rather than milliseconds, and once the distance exceeds what their buffers can cover, the rate at which they transmit data is approximately inversely proportional to the distance between switches. Performing operations in parallel can overcome some of the performance impact of long transmission delays. A switch can keep additional data in flight while it waits for acknowledgments of earlier transmissions, but that requires large data buffers, and most Fibre Channel switches have relatively small ones.
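The buffer effect can be made concrete with a back-of-the-envelope model of Fibre Channel buffer-to-buffer credit flow control, in which each buffer (credit) allows one frame to be outstanding. The sketch below is a simplification under stated assumptions: light travels through fiber at roughly 5 microseconds per kilometer, every frame carries a 2,048-byte payload (header and CRC overhead ignored), a 1 Gbps Fibre Channel link delivers about 850 Mbps of data after 8b/10b encoding, and receiver processing time is ignored. The function names and the eight-credit figure are hypothetical, not taken from any switch vendor.

```python
import math

# Illustrative assumptions, not vendor specifications:
FIBER_DELAY_S_PER_KM = 5e-6   # ~200,000 km/s propagation in glass
KM_PER_MILE = 1.609344
DEFAULT_RATE_BPS = 850e6      # 1.0625 Gbaud Fibre Channel after 8b/10b encoding
DEFAULT_FRAME_BYTES = 2048    # payload only; header/CRC overhead ignored


def _frame_and_round_trip(distance_km, rate_bps, frame_bytes):
    """Return (time to serialize one frame, round-trip propagation time) in seconds."""
    frame_time = frame_bytes * 8 / rate_bps
    round_trip = 2 * distance_km * FIBER_DELAY_S_PER_KM
    return frame_time, round_trip


def credits_to_fill_link(distance_km, rate_bps=DEFAULT_RATE_BPS,
                         frame_bytes=DEFAULT_FRAME_BYTES):
    """Credits (buffers) needed so the sender never idles waiting for a credit."""
    frame_time, round_trip = _frame_and_round_trip(distance_km, rate_bps, frame_bytes)
    # Each credit stays in use for one frame time plus the round trip
    # (the frame travels out, the credit-return signal travels back).
    return math.ceil((frame_time + round_trip) / frame_time)


def effective_throughput_bps(distance_km, credits, rate_bps=DEFAULT_RATE_BPS,
                             frame_bytes=DEFAULT_FRAME_BYTES):
    """Sustained data rate when at most `credits` frames may be outstanding."""
    frame_time, round_trip = _frame_and_round_trip(distance_km, rate_bps, frame_bytes)
    credit_limited = credits * frame_bytes * 8 / (frame_time + round_trip)
    return min(rate_bps, credit_limited)


if __name__ == "__main__":
    credits = 8  # hypothetical small switch buffer pool
    for miles in (1, 10, 20, 30, 50):
        km = miles * KM_PER_MILE
        mbps = effective_throughput_bps(km, credits) / 1e6
        print(f"{miles:>3} mi: {mbps:6.0f} Mbps with {credits} credits; "
              f"{credits_to_fill_link(km)} credits needed for full rate")
```

Under these assumptions, eight credits keep the link full only out to about 13 km (roughly 8 miles); beyond that, throughput falls in proportion to the round-trip time, dropping below half the line rate by 20 miles. Doubling the buffers roughly doubles the distance at which the drop-off begins, which is why buffer size, rather than link speed, governs long-distance Fibre Channel performance.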
Because those buffers are small, they fill quickly in long-distance applications, and the switches then stop transmitting while they wait for replies from the distant devices. Once a write-complete acknowledgment and additional flow control credits are received, the switches can clear the successfully transmitted data and fill their buffers again, but overall performance drops off dramatically at distances of 20 to 30 miles, because the buffers are not large enough to keep the links full.

Inefficiency is another drawback. The channels or logical circuits used by SONET and SDH multiplexers have fixed bandwidth, so whatever is not used is wasted. Gigabit Ethernet and Fibre Channel circuits on these multiplexers have similar characteristics, but dozens of applications use Ethernet, and only storage uses Fibre Channel. This means that the cost of a Fibre Channel backbone circuit cannot be shared among applications, as it can be with Ethernet. Besides sharing a circuit, efficiency also can be improved by subdividing a large circuit into multiple smaller ones. For example, a service provider's multiplexer with a single OC-48c backbone link can support, say, three OC-12c subscribers and four OC-3c subscribers. That would limit the subscribers' peak transmission rates to 622 Mbps or 155 Mbps, in return for significant cost savings, because the service provider can support many subscribers with a single mux and pass some of the savings on to them.

For smaller data centers that don't require full Gigabit peak rates, IP storage networks work fine at sub-Gigabit data rates. Standard routers and switches have buffers large enough for speed-matching between Gigabit Ethernet and other rates. In contrast, the minimum rate for Fibre Channel is approximately 1 Gbps.

Figure 9-5 shows the standard transmission rates for SONET and SDH. The lowercase c suffix indicates that the OC-1 channels are concatenated to form a single, higher-speed channel.
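The rate hierarchy and the subdivision example in the text reduce to simple arithmetic: every SONET OC-n rate is n times the 51.84 Mbps OC-1 rate, concatenation (the c suffix) changes how that capacity is carved up but not the total, and an SDH STM-n signal runs at the same rate as OC-3n. The sketch below works in kbps so the values stay exact integers. Its `fits_in_backbone` check counts only OC-1 units, a deliberate simplification, since real multiplexers may also restrict where each concatenated channel can sit in the frame. All function names are hypothetical.

```python
from typing import Sequence

OC1_KBPS = 51_840  # SONET OC-1 / STS-1 line rate


def oc_rate_kbps(n: int) -> int:
    """Line rate of an OC-n or OC-nc signal; concatenation does not change the total."""
    return n * OC1_KBPS


def stm_rate_kbps(n: int) -> int:
    """SDH STM-n runs at the same rate as SONET OC-3n."""
    return oc_rate_kbps(3 * n)


def fits_in_backbone(backbone_n: int, subscriber_ns: Sequence[int]) -> bool:
    """True if the subscribers' OC-nc channels, counted in OC-1 units, fit in an OC-n link."""
    return sum(subscriber_ns) <= backbone_n


if __name__ == "__main__":
    for n in (1, 3, 12, 48, 192, 768):
        print(f"OC-{n}: {oc_rate_kbps(n) / 1000:,.2f} Mbps")
    # The example from the text: three OC-12c plus four OC-3c subscribers on one OC-48c link
    print(fits_in_backbone(48, [12, 12, 12, 3, 3, 3, 3]))  # True: 36 + 12 = 48 OC-1 units
```

The same counting shows why the subdivision example works out exactly: three OC-12c and four OC-3c circuits consume all 48 OC-1 units, leaving no stranded capacity on the backbone.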
An OC-3c service, for example, provides a single circuit at 155 Mbps, not three logical circuits at 52 Mbps.

Figure 9-5. Rates for SONET and SDH transmissions.

Today, most midsize and high-end data subscribers use 155 Mbps or 622 Mbps services. The higher rates are very costly and generally are used only by service providers for their backbone circuits. In those applications, OC-192 is more common than OC-192c, probably because clear-channel 10 Gbps OC-192c router interface cards became commonly available only in 2001, just as the frenzied demand for broadband services began to decline and service providers dramatically scaled back their expansion plans. OC-192 cards, which support four OC-48c logical circuits, already had been installed, and most service providers have continued to use them rather than upgrading. However, as demand for broadband network capacity eventually resumes its upward march, and as 10 Gbps Ethernet becomes more common in LAN and IP storage network backbones, high-end subscribers will ask their service providers for clear-channel 10 Gbps metropolitan- and wide-area connections. At that time, routers and wavelength division multiplexing (WDM) products will be outfitted with more OC-192c interfaces. Meanwhile, equipment vendors are developing even faster OC-768 interface cards, which support four 10 Gbps OC-192c circuits, so service providers may wait until those become readily available before upgrading.

Other types of multiplexers are used to deliver services at rates below 155 Mbps. In North America, 45 Mbps (DS3 or T3) is popular, and the equivalent international service is 34 Mbps (E3). The bandwidth ultimately can be subdivided into hundreds of circuits at 64 Kbps, the amount traditionally used to carry a single digitized voice call. Note that in all cases, the services operate at the same speed in both directions. This is called full-duplex operation.
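The "hundreds of circuits at 64 Kbps" follow from the standard channelization of these services: a DS3 carries 28 DS1s of 24 channels each, and an E3 carries 16 E1s, each with 30 voice timeslots (the E1's remaining two timeslots carry framing and signaling). The sketch below does that arithmetic and compares the 64 Kbps payload with each service's line rate; the difference is framing, signaling, and bit-stuffing overhead. The table layout and function names are hypothetical, and the model ignores alternative channel arrangements.

```python
DS0_KBPS = 64  # one digitized voice channel

# service: (line rate in kbps, number of tributaries, 64 Kbps channels per tributary)
SERVICES = {
    "DS3/T3": (44_736, 28, 24),  # 28 DS1s x 24 DS0s
    "E3": (34_368, 16, 30),      # 16 E1s x 30 voice timeslots
}


def voice_channels(service: str) -> int:
    """Number of 64 Kbps channels the service can be subdivided into."""
    _, tributaries, per_tributary = SERVICES[service]
    return tributaries * per_tributary


def overhead_kbps(service: str) -> int:
    """Line rate not available to 64 Kbps channels (framing, signaling, stuffing)."""
    line_rate, _, _ = SERVICES[service]
    return line_rate - voice_channels(service) * DS0_KBPS


if __name__ == "__main__":
    for name in SERVICES:
        print(f"{name}: {voice_channels(name)} x 64 Kbps channels, "
              f"{overhead_kbps(name)} Kbps overhead")
```

Because these services are full-duplex, each of these channels is available in both directions at once.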
For storage networking applications, full-duplex operation means that remote reads and writes can be performed concurrently.

Because Fibre Channel requires at least 1 Gbps of backbone bandwidth (2 Gbps for 2-Gigabit links), the Fibre Channel interface cards are designed to consume half or all of an OC-48c multiplexer circuit. Many potential subscribers to metropolitan-area Fibre Channel services may currently have only a single OC-3c connection to handle all of their nonstorage data services for those sites, so adding Fibre Channel would increase their monthly telecom fees by a large factor.

At least one mux vendor has tried to overcome this Fibre Channel bandwidth granularity problem by adding buffers and a speed step-down capability to its Fibre Channel interface cards. That may allow Fibre Channel to run over a less costly OC-3c circuit. There are, however, some practical limitations to this approach. Since there are no standards for this technology, these solutions are proprietary, and the speed step-down cards at each end of the circuit must be the same brand. That makes it difficult to find a global solution, or to change service providers once the solution has been deployed.

Fortunately, all of the problems with long-distance Fibre Channel performance and efficiency have been solved, within the laws of physics, by IP storage switches. Because they can convert Fibre Channel to IP and Ethernet, the switches can be used to connect Fibre Channel SANs to the standard IP network access router, as shown in Figure 9-6.

Figure 9-6. Block-based backup locally, via the SAN, and remotely, via the existing SONET network, using IP storage protocols.

By connecting to the service provider network through the router rather than directly, users may be able to get by with their existing network bandwidth, especially if they already have 622 Mbps or Gigabit Ethernet service.
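The speed step-down approach hinges on buffer size, and the same arithmetic governs the speed-matching buffers in routers and switches. When a burst arrives at the Fibre Channel rate but drains onto a slower circuit, the buffer must absorb the difference: a burst of B bytes arriving at rate r_in and leaving at r_out leaves about B(1 - r_out/r_in) bytes queued when the burst ends. The sketch below assumes a 1 Gbps Fibre Channel source delivering about 850 Mbps and an OC-3c drain that uses its full 155.52 Mbps line rate (SONET overhead ignored, which slightly understates the backlog), with no flow-control pauses. It does not describe any vendor's card, and the function names and 1 MB burst are hypothetical.

```python
def stepdown_backlog_bytes(burst_bytes: float, in_bps: float, out_bps: float) -> float:
    """Bytes still queued at the end of a burst that arrives at in_bps and drains at out_bps."""
    if out_bps >= in_bps:
        return 0.0  # the output keeps up, so nothing accumulates
    burst_seconds = burst_bytes * 8 / in_bps
    drained_bytes = out_bps * burst_seconds / 8
    return burst_bytes - drained_bytes


def drain_seconds(backlog_bytes: float, out_bps: float) -> float:
    """Time needed to empty the backlog onto the slower circuit after the burst ends."""
    return backlog_bytes * 8 / out_bps


if __name__ == "__main__":
    FC_BPS = 850e6        # assumed 1 Gbps Fibre Channel data rate
    OC3C_BPS = 155.52e6   # OC-3c line rate, overhead ignored
    burst = 1_000_000     # hypothetical 1 MB write burst
    backlog = stepdown_backlog_bytes(burst, FC_BPS, OC3C_BPS)
    print(f"backlog {backlog / 1e3:.0f} KB, drains in "
          f"{drain_seconds(backlog, OC3C_BPS) * 1e3:.1f} ms")
```

Under these assumptions, most of a 1 MB burst must sit in the buffer, so a step-down card needs memory proportional to the largest burst it must absorb, on top of the buffering already required to cover the distance.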
Reusing the existing access bandwidth in this way can yield significant cost savings, and the storage network can be installed and tuned easily using familiar network design practices. This approach also uses standards-based interfaces and protocols end to end, so there is little risk of obsolescence or compatibility problems.

IP storage also has the flexibility to be used with the new ELEC services. Subscribers may elect to use the same network topology (that is, a router at each network access point) as shown in Figure 9-6. In that case, the link between the router and the network would be Fast Ethernet or Gigabit Ethernet rather than SONET or SDH, but all other connections, including the storage links, would remain unchanged. If subscribers instead elect to eliminate the routers, as shown previously in Figure 9-2, the IP storage link would be connected to a Gigabit Ethernet LAN switch or directly to a Gigabit Ethernet port on the ELEC's access mux. Connecting to a LAN switch has the economic advantage of sharing the existing backbone connections (assuming there is ample bandwidth to do so), while the direct ELEC link would provide a second, dedicated circuit for higher-volume applications.
