17.2 Linux


17.2.1 Oracle Clustered File System

The Oracle Clustered File System (OCFS) was developed by Oracle to simplify the management of RAC database data files on Linux and Windows operating systems.

Installing OCFS requires a configured private network and consists of the following general steps:

-

Like the Linux operating system, OCFS is open source software: Oracle develops it and distributes it with source code under the GNU General Public License (GPL). The latest version of the OCFS packages can be obtained from either of the following websites:

-

http://otn.oracle.com/tech/linux/content.html

-

http://www.ocfs.org/ocfs/

The package comprises three files:

-

A support file containing the generic packages required by OCFS. The file name includes the release version, for example ocfs-support-1.0.9-4.i686.rpm

-

A tools file containing the packages for the tools used to configure and manage OCFS. This file name also includes the release version, for example ocfs-tools-1.0.9-4.i686.rpm

-

A Linux kernel module package, which is specific to the version and type of the Linux kernel. For example, if the kernel is the SMP build 2.4.9-e.12smp, the file to download would be ocfs-2.4.9-e-smp-1.0.9-4.i686.rpm

Note The kernel version and type can be determined with the uname command, for example:

# uname -a
Linux oradb1.summerskyus.com 2.4.9-e.12smp #1 SMP

-

The OCFS packages (RPM files) must be installed as user root, in a specific order, into the /lib/modules tree used by the running kernel. Packages are installed with the following syntax:

rpm -i <ocfs_rpm_package>

-

Install the support RPM file:

rpm -iv ocfs-support-1.0.9-4.i686.rpm

-

Install the correct kernel module RPM file for the system:

rpm -iv ocfs-2.4.9-e-smp-1.0.9-4.i686.rpm

-

Install the tools RPM file:

rpm -iv ocfs-tools-1.0.9-4.i686.rpm

Note It is important to install the kernel module RPM file before the tools file. If this order is not followed, the installation fails with the following error message: ocfs = 1.0.9 is needed by ocfs-tools-1.0.9-4
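The ordering rule can be scripted so the tools package is never attempted before the kernel module. The following is a minimal POSIX shell sketch that assumes the package file names used in this section. The function name install_ocfs_rpms and the RPM_CMD dry-run override are hypothetical and are not part of the OCFS distribution.

```shell
# Install the three OCFS RPMs as root, in the required order:
# support package, kernel module package, tools package.
# RPM_CMD is a hypothetical override for dry runs (e.g. RPM_CMD="echo rpm");
# when it is unset, the real rpm command is used.
install_ocfs_rpms() {
    rpm_dir=$1      # directory holding the downloaded RPM files
    kmod_rpm=$2     # kernel module RPM that matches the running kernel
    for pkg in ocfs-support-1.0.9-4.i686.rpm "$kmod_rpm" ocfs-tools-1.0.9-4.i686.rpm; do
        # Stop at the first failure so the tools package is never attempted
        # without the kernel module it depends on.
        ${RPM_CMD:-rpm} -iv "$rpm_dir/$pkg" || return 1
    done
}
```

For example, running `install_ocfs_rpms /tmp/ocfs ocfs-2.4.9-e-smp-1.0.9-4.i686.rpm` as root performs the three installs in sequence. Setting `RPM_CMD="echo rpm"` first prints the commands without installing anything.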

-

The next step is to configure the ocfs.conf file in the /etc directory. This can be done as user root with the Oracle-provided ocfstool, which runs under X Windows and is invoked with the following command:

/usr/bin/ocfstool

Use the Generate Config option under the Tasks tab to configure the private network adapter used by the cluster. Once configured, the /etc/ocfs.conf file contains entries similar to the following:

$ more /etc/ocfs.conf
#
# ocfs config
# Ensure this file exists in /etc
#
node_name = oradb1.summerskyus.com
node_number =
ip_address = 192.37.210.21
ip_port = 7000
comm_voting = 1
guid = 21FA5FA6715E2AB31451000BCD41E12B

-

OCFS must be loaded, and its file systems mounted, every time the system starts. This is done by adding the following lines to the /etc/rc.d/rc.local file:

/sbin/load_ocfs
/sbin/mount -a -t ocfs
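If this step is repeated on a node, for example by a provisioning script, the startup lines should not be appended twice. The following is a small sketch of an idempotent append. The function name append_once is hypothetical.

```shell
# Append a line to a file only if that exact line is not already present,
# so the OCFS startup commands can be added to /etc/rc.d/rc.local without
# creating duplicates when the step is repeated.
append_once() {
    target=$1
    line=$2
    # -x: whole-line match, -F: fixed string (no regex), -q: no output
    if ! grep -qxF -- "$line" "$target" 2>/dev/null; then
        printf '%s\n' "$line" >> "$target"
    fi
}
```

As root, `append_once /etc/rc.d/rc.local /sbin/load_ocfs` followed by `append_once /etc/rc.d/rc.local "/sbin/mount -a -t ocfs"` adds each line at most once.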

-

The next step is to create the partitions for OCFS, using the Linux fdisk utility:

fdisk /dev/d439

Partition sizes should take into account the block size chosen during the format operation. Oracle supports block sizes in the range 2KB to 1MB. The allocation bitmap is fixed at 1MB, so it holds 1M * 8 bits, one bit per block. The maximum partition size is therefore derived using the formula:

block_size * 1M * 8 (bitmap size is fixed at 1MB)

For example, with a 2KB block size the maximum partition size is:

(2 * 1024) * (1024 * 1024) * 8 = 16GB

Note Like all file systems, OCFS has an operational overhead of approximately 10MB. This space is used for file metadata, the "system files" stored at the end of the disk, and the partition headers. Take this overhead into consideration when creating smaller partitions.
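The size formula and the overhead note can be checked with shell arithmetic. This is a sketch: the function names are hypothetical, sizes are in bytes, and the 10MB overhead is the approximate figure quoted above rather than an exact value.

```shell
# Maximum OCFS partition size for a block size given in KB:
# block_size * 1M * 8, i.e. one bit of the fixed 1MB bitmap per block.
max_ocfs_partition_bytes() {
    bs_kb=$1
    if [ "$bs_kb" -lt 2 ] || [ "$bs_kb" -gt 1024 ]; then
        echo "block size must be between 2 and 1024 KB" >&2
        return 1
    fi
    echo $(( bs_kb * 1024 * 1024 * 1024 * 8 ))
}

# Approximate usable space in a partition of the given size (bytes),
# after subtracting the roughly 10MB OCFS overhead.
usable_ocfs_bytes() {
    echo $(( $1 - 10 * 1024 * 1024 ))
}
```

`max_ocfs_partition_bytes 2` prints 17179869184 (16GB), which matches the example above. With the 128K block size recommended for formatting, `max_ocfs_partition_bytes 128` prints 1099511627776, or 1TB.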

-

Once the partitions have been created with fdisk, they must be formatted with OCFS. This step can be performed with the ocfstool utility or manually from the command line, and must also be performed as user root. Formatting also defines the block size for the partition. For optimal performance, a block size of 128K is recommended.

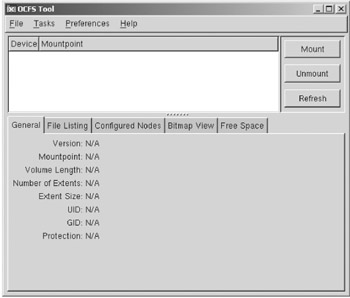

After invoking ocfstool (/usr/bin/ocfstool), use the Format option under the Tasks tab (Figure 17.1) to format the partitions with OCFS.

Figure 17.1: OCFS Tool Interface

-

The next step is to mount the partitions. Before they can be mounted, the mount points (directories) must be created:

mkdir -p /u01 /u02 /u03

Once the mount points are created, the partitions can be mounted with the ocfstool utility or from the command line with the mount command, for example:

mount -t ocfs /dev/d439 /u01

Note The mount points must be the same for all the nodes in the cluster.

-

The last step in the OCFS configuration is to add these definitions to the /etc/fstab file, using the following syntax:

<partition device name> <mount point name> ocfs uid=1001,gid=100

Note The numbers following the uid and gid options correspond to the user id of the oracle user and the group id of the dba group. Verify that these values are correct for any RAC deployment that uses OCFS.
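Instead of hard-coding uid=1001,gid=100, the ids can be looked up on each node. The following sketch builds an fstab line in the syntax above. The function name is hypothetical, and the device /dev/sdb1 in the usage example is only an illustration. The gid is read from the local /etc/group file only, so NIS or LDAP groups are not consulted.

```shell
# Build an /etc/fstab entry for an OCFS partition, looking up the uid of the
# oracle user and the gid of the dba group (overridable via $3 and $4).
ocfs_fstab_entry() {
    dev=$1
    mnt=$2
    owner=${3:-oracle}
    grp=${4:-dba}
    ora_uid=$(id -u "$owner") || return 1
    # Field 3 of /etc/group is the numeric gid.
    ora_gid=$(awk -F: -v g="$grp" '$1 == g { print $3; exit }' /etc/group)
    if [ -z "$ora_gid" ]; then
        echo "group $grp not found in /etc/group" >&2
        return 1
    fi
    printf '%s %s ocfs uid=%s,gid=%s\n' "$dev" "$mnt" "$ora_uid" "$ora_gid"
}
```

For example, `ocfs_fstab_entry /dev/sdb1 /u01` prints the entry for the oracle user and dba group. Review the output before appending it to /etc/fstab as root.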

17.2.2 Oracle Cluster Management Software

Oracle Cluster Management Software (OCMS) is included with the Oracle 9i Enterprise Edition for Linux. It provides cluster membership services, a global view of clusters, node monitoring, and cluster reconfiguration. It is a component of RAC on Linux and is installed automatically when RAC is selected. OCMS consists of the following components:

-

Watchdog daemon

-

Cluster manager

17.2.3 Watchdog daemon

The watchdog daemon (watchdogd) uses a software-implemented watchdog timer to monitor selected system resources to prevent database corruption. The watchdog timer is a feature of the Linux kernel. The watchdog daemon is part of RAC.

The watchdog daemon monitors the CM and passes notifications to the watchdog timer at defined intervals. The behavior of the watchdog timer is partially controlled by the CONFIG_WATCHDOG_NOWAYOUT configuration parameter of the Linux kernel.

The value of the CONFIG_WATCHDOG_NOWAYOUT configuration parameter should be set to Y, so that the watchdog timer cannot be disabled once it has been started. If the watchdog timer detects an Oracle instance or CM failure, it resets the node to avoid possible database corruption.
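One way to confirm the kernel setting is to inspect the kernel's build configuration file. Where that file lives depends on the distribution; /boot/config-$(uname -r) is a common location but is an assumption here. The function name is hypothetical.

```shell
# Return success if the given kernel configuration file enables
# CONFIG_WATCHDOG_NOWAYOUT. Kernel config files record enabled options as
# CONFIG_NAME=y and disabled ones as "# CONFIG_NAME is not set".
watchdog_nowayout_enabled() {
    grep -q '^CONFIG_WATCHDOG_NOWAYOUT=y$' "$1"
}
```

For example, `watchdog_nowayout_enabled /boot/config-$(uname -r) && echo enabled` prints "enabled" only if the option was built in.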

17.2.4 Cluster manager

The CM maintains the status of the nodes and the Oracle instances across the cluster. The CM process runs on each node of the cluster. Each node has one CM. The number of Oracle instances for each node is not limited by RAC. The CM uses the following communication channels between nodes:

-

Private network.

-

Quorum partition on the shared disk, also called a Quorum disk.

During normal cluster operations, the CMs on each node of the cluster communicate with each other through heartbeat messages sent over the private network. The quorum partition is used as an emergency communication channel if a heartbeat message fails. A heartbeat message can fail for the following reasons:

-

The CM terminates on a node.

-

The private network fails.

-

There is an abnormally heavy load on the node.

The CM uses the quorum disk to determine the reason for the failure. From each node, the CM periodically updates its designated block on the quorum disk, and the other nodes check the timestamp of each block. If the heartbeat message from one of the nodes does not arrive, but that node's block on the quorum disk has a current timestamp, the CM concludes that the network path between this node and the other nodes has failed.
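The decision described above can be illustrated with a simplified model. This sketch is not how oracm is actually implemented. The function name, its inputs, and the staleness threshold are hypothetical, and the node-failure branch is the implied counterpart of the network-failure case described in the text.

```shell
# Classify a peer from the two CM communication channels.
#   $1  1 if a heartbeat arrived over the private network, else 0
#   $2  age in ms of the peer's last timestamp on the quorum disk
#   $3  age in ms beyond which that timestamp counts as stale
classify_peer() {
    heartbeat=$1
    block_age=$2
    stale_after=$3
    if [ "$heartbeat" -eq 1 ]; then
        echo alive
    elif [ "$block_age" -le "$stale_after" ]; then
        # Peer still updates its quorum block, so only the network path failed.
        echo network-failure
    else
        # No heartbeat and no recent quorum update: the peer's CM or node is down.
        echo node-failure
    fi
}
```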

Figure 17.2 illustrates the Linux watchdog to Oracle instance communication hierarchy. Each Oracle instance registers with the local CM. The CM monitors the status of local Oracle instances and propagates this information to CMs on other nodes. If the Oracle instance fails on one of the nodes, the following events occur:

Figure 17.2: Linux watchdog process.

-

The CM on the node with the failed Oracle instance informs the watchdog daemon about the failure.

-

The watchdog daemon requests the watchdog timer / hangcheck timer to reset the failed node.

-

The watchdog timer / hangcheck timer resets the node.

-

The CMs on the surviving nodes inform their local Oracle instances that the failed node is removed from the cluster.

-

Oracle instances on the surviving nodes start the RAC reconfiguration procedure.

The nodes must reset if an Oracle instance fails. This ensures that:

-

No physical I/O requests to the shared disks from the failed node occur after the Oracle instance fails.

-

Surviving nodes can start the cluster reconfiguration procedure without corrupting the data on the shared disk.

| Oracle 9iR2 | New Feature: The watchdog daemon process that existed in Oracle Release 9.1 impacted system availability because it initiated system reboots under heavy workloads, and it has since been removed from Oracle. In place of the watchdog daemon (watchdogd), Version 9.2.0.3 of oracm for Linux uses a Linux kernel module called hangcheck-timer. The hangcheck-timer module monitors the Linux kernel for long operating system hangs and reboots the node if one occurs, thereby preventing potential corruption of the database. This is the new I/O fencing mechanism for RAC on Linux. |

OCMS Installation

Before installing the CM, set the ORACLE_HOME environment variable and create the following directories on all nodes in the cluster:

mkdir -p $ORACLE_HOME/oracm/log
mkdir -p $ORACLE_HOME/network/log
mkdir -p $ORACLE_HOME/network/trace
mkdir -p $ORACLE_HOME/rdbms/log
mkdir -p $ORACLE_HOME/rdbms/audit
mkdir -p $ORACLE_HOME/network/agent/log
mkdir -p $ORACLE_HOME/network/agent/reco
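Because these directories must exist on every node, it helps to create them in one step that refuses to run when ORACLE_HOME is unset. Without that check, the paths would be created under the root directory. The function name is hypothetical.

```shell
# Create the directories required before installing the CM.
# Run on every node; ORACLE_HOME must already be set.
create_cm_dirs() {
    if [ -z "$ORACLE_HOME" ]; then
        echo "ORACLE_HOME is not set" >&2
        return 1
    fi
    for d in oracm/log network/log network/trace rdbms/log rdbms/audit \
             network/agent/log network/agent/reco; do
        mkdir -p "$ORACLE_HOME/$d" || return 1
    done
}
```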

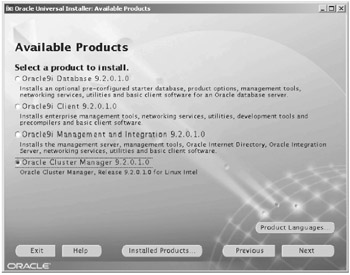

The CM is bundled with the Oracle database software and is installed with the Oracle Universal Installer (OUI). After invoking the installer, select the CM option on the available products screen.

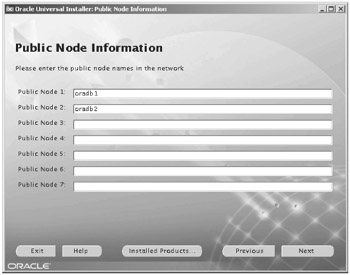

Once the Oracle CM has been selected, the public node information is defined on the next screen.

The public node definition screen is followed by the private node definition screen, the watchdog definition screen, and the quorum disk definition screen. Once these are defined, the CM is installed. Follow these steps on all nodes in the cluster.

| Note | Pay close attention to the order in which the public and private node names are entered; the two lists must correspond exactly. For example, the first public node entered must correspond to the first private node entered, the second to the second, and so on. |
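Because the public and private lists are matched by position, a quick check before installation can catch a mismatch. This sketch pairs two space-separated lists and fails if their lengths differ. The function name is hypothetical.

```shell
# Pair public and private node names by position and fail if the two
# lists have different lengths.
# $1: space-separated public names, $2: space-separated private names.
pair_node_names() {
    awk -v pub="$1" -v priv="$2" 'BEGIN {
        n = split(pub, p, " ")
        m = split(priv, q, " ")
        if (n != m) {
            printf "mismatch: %d public vs %d private names\n", n, m > "/dev/stderr"
            exit 1
        }
        for (i = 1; i <= n; i++)
            print p[i], q[i]
    }'
}
```

For example, `pair_node_names "oradb1 oradb2" "oradb1_ic oradb2_ic"` prints one line per node, pairing oradb1 with oradb1_ic and oradb2 with oradb2_ic.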

Figure 17.3: Oracle Cluster Manager

Figure 17.4: Public Node Information

Once the CM is installed, the cmcfg.ora file in the $ORACLE_HOME/oracm/admin directory should contain entries similar to the following:

HeartBeat=15000
ClusterName=Oracle Cluster Manager, version 9i
PollInterval=1000
MissCount=20
PrivateNodeNames=oradb1_ic oradb2_ic
PublicNodeNames=oradb1 oradb2
ServicePort=9998
WatchdogSafetyMargin=5000
WatchdogTimerMargin=60000
CmDiskFile=
HostName=oradb1_ic

After the 9.2.0.1 CM is installed and configured, the next step is to install the RAC software. Once the RAC installation is complete, the product can be upgraded to 9.2.0.3.


Verify that the MissCount parameter for the CM in the cmcfg.ora file is set to a value greater than hangcheck_tick + hangcheck_margin (the default, 210 seconds, should be acceptable).
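The MissCount rule is a simple comparison, sketched below. The function name is hypothetical. In the test values, 30 and 180 seconds are illustrative hangcheck settings that sum to 210; they are not taken from this section.

```shell
# Check that MissCount is greater than hangcheck_tick + hangcheck_margin
# (all three values in seconds). Returns non-zero if the rule is violated.
misscount_ok() {
    miss=$1 tick=$2 margin=$3
    limit=$((tick + margin))
    if [ "$miss" -gt "$limit" ]; then
        echo "ok: MissCount=$miss > $limit"
    else
        echo "too low: MissCount=$miss <= $limit" >&2
        return 1
    fi
}
```

The current value can be read with `sed -n 's/^MissCount=//p' $ORACLE_HOME/oracm/admin/cmcfg.ora` and passed in as the first argument.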

A quorum disk is required when using the hangcheck-timer. Create a partition on a raw device or, if using OCFS, create a partition using the steps described in section 17.2.1 (Oracle Clustered File System). On OCFS, the required quorum file can be created with the touch command, for example:

touch /u12/quorum.dbf

When using the hangcheck-timer, the cmcfg.ora file should look similar to this:

HeartBeat=15000
ClusterName=Oracle Cluster Manager, version 9i
PollInterval=1000
MissCount=20
PrivateNodeNames=oradb1_ic oradb2_ic
PublicNodeNames=oradb1 oradb2
ServicePort=9998
KernelModuleName=hangcheck-timer
CmDiskFile=/u12/quorum.dbf
HostName=oradb1_ic

17.2.5 Starting OCMS

The following sections describe how to start OCMS. Oracle Corporation supplies the $ORACLE_HOME/oracm/bin/ocmstart.sh sample startup script. Run this script as the root user.

| Note | Once familiarity with the process is gained, the script can be used to automate the startup process. |

Starting the watchdog daemon

To start the watchdog daemon, enter the following:

$ su root
# cd $ORACLE_HOME/oracm/bin
# watchdogd

The default watchdog daemon log file is $ORACLE_HOME/oracm/log/wdd.log.

Table 17.1 lists the arguments that can be passed to the watchdog daemon when it is started.

| Argument | Valid Values | Default Value | Description |
|---|---|---|---|
| -l number | 0 or 1 | 1 | If the value is 0, no resources are registered for monitoring; this setting is used for debugging system configuration problems. If the value is 1, the CMs are registered for monitoring. |
| -m number | 5000 to 180,000 ms | 5000 | The watchdog daemon expects to receive heartbeat messages from all clients (oracm threads) within the time specified by this value. If a client fails to send a heartbeat message within this time, the watchdog daemon stops sending heartbeat messages to the kernel watchdog timer, causing the system to reset. |
| -d string | | /dev/watchdog | Path of the device file for the watchdog timer. |
| -e string | | $ORACLE_HOME/oracm/log/wdd.log | Filename of the watchdog daemon log file. |
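The ranges in Table 17.1 can be enforced before the daemon is started. This sketch only validates the -l and -m values and prints the resulting command line; it does not start anything. The function name is hypothetical.

```shell
# Validate watchdog daemon arguments against the ranges in Table 17.1 and
# print the resulting command line. Omitted arguments take the table defaults.
wdd_cmdline() {
    lflag=${1:-1}           # -l: 1 registers the CMs, 0 is for debugging
    margin_ms=${2:-5000}    # -m: client heartbeat deadline in milliseconds
    case $lflag in
        0|1) ;;
        *) echo "watchdogd: -l must be 0 or 1" >&2; return 1 ;;
    esac
    case $margin_ms in
        ''|*[!0-9]*) echo "watchdogd: -m must be a number of ms" >&2; return 1 ;;
    esac
    if [ "$margin_ms" -lt 5000 ] || [ "$margin_ms" -gt 180000 ]; then
        echo "watchdogd: -m must be between 5000 and 180000 ms" >&2
        return 1
    fi
    echo "watchdogd -l $lflag -m $margin_ms"
}
```

Run the printed command as root from $ORACLE_HOME/oracm/bin.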

Configuring the cluster manager

The $ORACLE_HOME/oracm/admin/cmcfg.ora CM configuration file must be created on each node of the cluster before starting OCMS. The cmcfg.ora file should contain the following parameters:

-

PublicNodeNames

-

PrivateNodeNames

-

CmDiskFile

-

WatchdogTimerMargin (when using the watchdog daemon) or KernelModuleName=hangcheck-timer (when using the hangcheck-timer)

-

HostName
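The list of required parameters above can be checked mechanically before OCMS is started. This sketch reports any parameter that is missing or empty in a given cmcfg.ora. It accepts either WatchdogTimerMargin or KernelModuleName for the fourth requirement. The function name is hypothetical.

```shell
# Report required cmcfg.ora parameters that are missing or have an empty value.
# Returns non-zero if anything is missing.
check_cmcfg() {
    cfg=$1
    status=0
    for key in PublicNodeNames PrivateNodeNames CmDiskFile HostName; do
        if ! grep -q "^$key=..*" "$cfg"; then
            echo "missing: $key"
            status=1
        fi
    done
    # Watchdog-based setups use WatchdogTimerMargin; hangcheck setups use KernelModuleName.
    if ! grep -Eq '^(WatchdogTimerMargin|KernelModuleName)=.+' "$cfg"; then
        echo "missing: WatchdogTimerMargin or KernelModuleName"
        status=1
    fi
    return $status
}
```

For example, `check_cmcfg $ORACLE_HOME/oracm/admin/cmcfg.ora` prints nothing and returns 0 when all required entries are present.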

Before creating the CM configuration file, verify the following:

-

The /etc/hosts file on each node of the cluster has, for every node, an entry for the public network (public name) and an entry for the private network (private name).

-

A private network is used for the RAC internode communication.

-

The CmDiskFile parameter defines the location of the CM quorum disk.

The CmDiskFile parameter on each node in a cluster must specify the same quorum disk.

The following example shows the cmcfg.ora file on the first node of a four-node cluster:

PublicNodeNames=pubnode1 pubnode2 pubnode3 pubnode4
PrivateNodeNames=prinode1 prinode2 prinode3 prinode4
CmDiskFile=/dev/raw1
WatchdogTimerMargin=1000
HostName=prinode1
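Across the nodes of the cluster, the node lists and the quorum disk are identical. Only HostName changes, and it takes the private name at the node's position in the list. The following sketch generates the file for a given position. The function name and argument order are hypothetical.

```shell
# Print a cmcfg.ora for the node at position $1 (1-based).
# $2: public names, $3: private names, $4: quorum disk, $5: WatchdogTimerMargin (ms)
gen_cmcfg() {
    idx=$1 pub=$2 priv=$3 disk=$4 margin=$5
    case $idx in
        ''|*[!0-9]*|0) echo "position must be a positive integer" >&2; return 1 ;;
    esac
    # HostName is the private name at the same position as this node.
    host=$(printf '%s\n' "$priv" | awk -v i="$idx" '{ print $i }')
    if [ -z "$host" ]; then
        echo "no private name at position $idx" >&2
        return 1
    fi
    printf 'PublicNodeNames=%s\n' "$pub"
    printf 'PrivateNodeNames=%s\n' "$priv"
    printf 'CmDiskFile=%s\n' "$disk"
    printf 'WatchdogTimerMargin=%s\n' "$margin"
    printf 'HostName=%s\n' "$host"
}
```

With the four-node names above, position 1 reproduces the example file, and position 2 differs only in HostName=prinode2.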

Table 17.2 lists all the CM parameters used for the creation of the CM configuration file.

| Parameter | Valid Values | Default Value | Description |
|---|---|---|---|
| CmDiskFile | Directory path, up to 256 characters in length | No default value; an explicit value must be set | Specifies the pathname of the quorum partition. |
| MissCount | 2 to 1000 | 5 | Specifies the time that the CM waits for a heartbeat from the remote node before declaring that node inactive. The time in seconds is determined by multiplying the value of the MissCount parameter by 3. |
| PublicNodeNames | List of host names, up to 4096 characters in length | No default value | Specifies the list of all host names for the public network, separated by spaces. List host names in the same order on each node. |
| PrivateNodeNames | List of host names, up to 4096 characters in length | No default value | Specifies the list of all host names for the private network, separated by spaces. List host names in the same order on each node. |
| HostName | A host name, up to 256 characters in length | No default value | Specifies the local host name for the private network. Define this name in the /etc/hosts file. |
| ServiceName | A service name, up to 256 characters in length | CMSrvr | Specifies the service name used for communication between CMs. If a CM cannot find the service name in the /etc/services file, it uses the port specified by the ServicePort parameter. ServiceName is a fixed-value parameter in this release; use the ServicePort parameter if an alternative port for the CM is required. |
| ServicePort | Any valid port number | 9998 | Specifies the port used for communication between CMs when the ServiceName parameter does not specify a service. |
| WatchdogTimerMargin | 1000 to 180,000 ms | No default value | The same as the value of the soft_margin parameter specified at Linux softdog startup. The soft_margin value is specified in seconds, while WatchdogTimerMargin is specified in milliseconds. |
| WatchdogSafetyMargin | 1000 to 180,000 ms | 5000 ms | Specifies the time between when the CM detects a remote node failure and when the cluster reconfiguration is started. This parameter is part of the formula that determines the time between when the CM on the local node detects an Oracle instance failure or join on any node and when it reports the cluster reconfiguration to the Oracle instance on the local node. |

Starting the cluster manager

To start the CM:

-

Confirm that the watchdog daemon is running.

-

Confirm that the host names specified by the PublicNodeNames and PrivateNodeNames parameters in the cmcfg.ora file are listed in the /etc/hosts file.

-

As the root user, start the oracm process as a background process.

To track activity, redirect any output to a log file. For example, the following commands direct all output and error messages to the $ORACLE_HOME/oracm/log/cm.out file:

$ su root
# cd $ORACLE_HOME/oracm/bin
# oracm </dev/null >$ORACLE_HOME/oracm/log/cm.out 2>&1 &
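The /etc/hosts check in the list above can be automated before oracm is started. This sketch prints every given name that has no entry in the hosts file and ignores comments. The function name is hypothetical, and so is the HOSTS_FILE variable, which lets a different file be checked and defaults to /etc/hosts.

```shell
# Print each node name (arguments) that has no entry in the hosts file.
# Returns non-zero if any name is missing.
missing_hosts() {
    hosts=${HOSTS_FILE:-/etc/hosts}
    rc=0
    for name in "$@"; do
        # Strip comments, then look for the name in any field after the IP address.
        if ! sed 's/#.*//' "$hosts" | awk -v n="$name" '
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit !found }'; then
            echo "$name"
            rc=1
        fi
    done
    return $rc
}
```

For example, `missing_hosts $(sed -n 's/^PublicNodeNames=//p; s/^PrivateNodeNames=//p' $ORACLE_HOME/oracm/admin/cmcfg.ora)` checks every public and private name listed in cmcfg.ora.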

The oracm process spawns multiple threads. The following command lists all the threads:

ps -elf

Table 17.3 describes the arguments used for the oracm executable.

| Argument | Values | Default Value | Description |
|---|---|---|---|
| /a:action | 0, 1 | 0 | Specifies the action taken when the LMON process or another Oracle process that can write to the shared disk terminates abnormally. |
| /l:filename | Any | $ORACLE_HOME/oracm/log/cm.log | Specifies the pathname of the log file for the CM. The maximum pathname length is 192 characters. |
| /? | None | None | Shows help for the arguments of the oracm executable. If this argument is provided, the CM does not start. |
| /m | Any | 25,000,000 | The size of the oracm log file in bytes. |

Configuring timing for cluster reconfiguration

When a node fails, there is a delay before the RAC reconfiguration commences. Without this delay, simultaneous access of the same data block by the failed node and the node performing the recovery can cause database corruption. The length of the delay is defined by the sum of the following:

-

Value of the WatchdogTimerMargin parameter.

-

Value of the WatchdogSafetyMargin parameter.

-

Value of the watchdog daemon -m command-line argument.

If the default values for the Linux kernel soft_margin and CM parameters are used, the time between when the failure is detected and the start of the cluster reconfiguration is 70 seconds. For most workloads, this time can be significantly reduced. The following example shows how to decrease the time of the reconfiguration delay from 70 seconds to 20 seconds:

-

Set the value of the WatchdogTimerMargin (soft_margin) parameter to 10 seconds.

-

Leave the value of the WatchdogSafetyMargin parameter at the default value, 5000 ms.

-

Leave the value of the watchdog daemon -m command-line argument at the default value, 5000 ms.
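The delay is the sum of the three values listed above, once soft_margin (seconds) is converted to WatchdogTimerMargin (milliseconds). The following sketch computes it. The function name is hypothetical, and the defaults of its second and third arguments are the 5000 ms defaults given in the text.

```shell
# Reconfiguration delay in ms:
#   WatchdogTimerMargin (soft_margin * 1000) + WatchdogSafetyMargin + watchdogd -m
reconfig_delay_ms() {
    soft_margin_s=$1 safety_ms=${2:-5000} wdd_m_ms=${3:-5000}
    echo $(( soft_margin_s * 1000 + safety_ms + wdd_m_ms ))
}
```

With a 60-second soft_margin, `reconfig_delay_ms 60` gives 70000 ms, the 70-second default delay. With soft_margin set to 10 seconds, `reconfig_delay_ms 10` gives the 20000 ms (20 second) delay described above.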

To change the values of the WatchdogTimerMargin (soft_margin) and the WatchdogSafetyMargin:

-

Stop the Oracle instance.

-

Reload the softdog module with the new value of soft_margin, for example:

# /sbin/insmod softdog soft_margin=10

-

Change the value of WatchdogTimerMargin in the CM configuration file, $ORACLE_HOME/oracm/admin/cmcfg.ora, to match the new soft_margin expressed in milliseconds:

WatchdogTimerMargin=10000

-

Restart watchdogd with the -m command-line argument set to 5000.

-

Restart the oracm executable.

-

Restart the Oracle instance.
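The cmcfg.ora edit in this procedure can be done in place, without an editor. This is a sketch with a hypothetical function name. It relies on `sed -i`, which GNU and BusyBox sed support but which is not POSIX. The commented lines only outline the other steps: a soft_margin of 10 seconds corresponds to WatchdogTimerMargin=10000 in milliseconds.

```shell
# Set key=value in a cmcfg.ora file, replacing an existing entry or
# appending a new one if the key is absent.
set_cmcfg_param() {
    cfg=$1 key=$2 value=$3
    if grep -q "^$key=" "$cfg"; then
        sed -i "s|^$key=.*|$key=$value|" "$cfg"
    else
        printf '%s=%s\n' "$key" "$value" >> "$cfg"
    fi
}

# Outline of the procedure above, as root, with the Oracle instance stopped:
#   /sbin/rmmod softdog
#   /sbin/insmod softdog soft_margin=10
#   set_cmcfg_param $ORACLE_HOME/oracm/admin/cmcfg.ora WatchdogTimerMargin 10000
#   then restart watchdogd (-m 5000), oracm, and the Oracle instance
```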

| Note | The removal of watchdogd and the introduction of the hangcheck-timer module require several parameter changes in the CM configuration file, $ORACLE_HOME/oracm/admin/cmcfg.ora, for example KernelModuleName=hangcheck-timer in place of the watchdog parameters. The hangcheck-timer module itself is controlled by two parameters. hangcheck_tick is the interval that determines how often the hangcheck-timer checks the health of the system. hangcheck_margin allows for kernel activities that can randomly delay the hangcheck-timer; it provides a margin of error that prevents unnecessary system resets caused by these delays. The node is reset when the system hang time > (hangcheck_tick + hangcheck_margin). |
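The reset condition can be expressed as a one-line predicate. This sketch has a hypothetical function name. The numbers in the commented insmod line and in the test are illustrative, not values taken from this section; only the parameter names hangcheck_tick and hangcheck_margin come from the text.

```shell
# Succeeds if a hang of $1 seconds would trigger a node reset, given
# hangcheck_tick ($2) and hangcheck_margin ($3), both in seconds.
hang_causes_reset() {
    hang_s=$1 tick_s=$2 margin_s=$3
    [ "$hang_s" -gt $((tick_s + margin_s)) ]
}

# The module parameters are supplied when the module is loaded, e.g. as root:
#   /sbin/insmod hangcheck-timer hangcheck_tick=30 hangcheck_margin=180
```

With these illustrative values, a 200-second hang is tolerated. A hang longer than 210 seconds resets the node.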

Cluster manager starting options

OCMS supports node fencing by completely resetting the node if an Oracle instance fails and the CM thread malfunctions. This approach guarantees that the database is not corrupted.

However, it is not always necessary to reset the node if an Oracle instance fails. If the Oracle instance uses synchronous I/O, a node reset is not required. In addition, in some cases where the Oracle instance uses asynchronous I/O, it is not necessary to reset the node, depending on how asynchronous I/O is implemented in the Linux kernel.

The /a:action flag in the following command defines OCMS behavior when an Oracle process fails:

$ oracm /a:[action]

In this example:

-

If the action argument is set to 0, the node does not reset. By default, the watchdog daemon starts with the -l 1 option and the oracm process starts with the /a:0 option. With these default values, the node resets only if the oracm or watchdogd process terminates; it does not reset if an Oracle process that can write to the disk terminates. This is safe only when a certified Linux kernel that does not require a node reset is used.

-

If the action argument is set to 1, the node resets if oracm, watchdogd, or an Oracle process that can write to the disk terminates. In these situations, a SHUTDOWN ABORT command on an Oracle instance resets the node and terminates all Oracle instances that are running on that node.

| Note | Only the additional processes required for configuration of RAC on Linux have been discussed. All other steps discussed in Chapter 8 (Installation and Configuration) have to be followed for the complete installation of RAC on Linux. |
