Virtualization Today

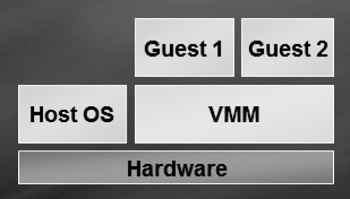

Virtualization today on Windows platforms basically takes one of two forms: Type 2 or Hybrid. A typical example of Type 2 virtualization is the Java virtual machine, while another example is the common language runtime (CLR) of the .NET Framework. In both examples, you start with the host operating system (that is, the operating system installed directly on the physical hardware). On top of the host OS runs a Virtual Machine Monitor (VMM), whose role is to create and manage virtual machines, dole out resources to these machines, and keep these machines isolated from each other. In other words, the VMM is the virtualization layer in this scenario. Then on top of the VMM you have the guests that are running, which in this case are Java or .NET applications. Figure 3-1 shows this arrangement. Because the guests have to access the hardware by going through both the VMM and the host OS, performance is generally not at its best in this scenario.

Figure 3-1: Architecture of Type 2 VMM

Probably more familiar to most IT pros is the Hybrid form of virtualization shown in Figure 3-2. Here both the host OS and the VMM essentially run directly on the hardware (though with different levels of access to different hardware components), whereas the guest OSs run on top of the virtualization layer. Well, that’s not exactly what’s happening here. A more accurate depiction of things is that the VMM in this configuration still must go through the host OS to access hardware. However, the host OS and VMM are both running in kernel mode and so they are essentially playing tug o’ war with the CPU. The host gets CPU cycles when it needs them in the host context and then passes cycles back to the VMM, and the VMM services then provide cycles to the guest OSs. And so it goes, back and forth. The reason why the Hybrid model is faster is that the VMM is running in kernel mode, as opposed to the Type 2 model where the VMM generally runs in user mode.

Anyway, the Hybrid VMM approach is used today in two popular virtualization solutions from Microsoft, namely Microsoft Virtual PC 2007 and Microsoft Virtual Server 2005 R2.

The performance of Hybrid VMM is better than that of Type 2 VMM, but it’s still not as good as having separate physical machines.

Figure 3-2: Architecture of Hybrid VMM

| Note | Another way of distinguishing between Type 2 and Hybrid VMMs is that Type 2 VMMs are process virtual machines because they isolate processes (services or applications) as separate guests on the physical system, while Hybrid VMMs are system virtual machines because they isolate entire operating systems, such as Windows or Linux, as separate guests. |

A third type of virtualization technology available today is Type 1 VMM, or hypervisor technology. A hypervisor is a layer of software that sits just above the hardware and beneath one or more operating systems. Its primary purpose is to provide isolated execution environments, called partitions, within which virtual machines containing guest OSs can run. Each partition is provided with its own set of hardware resources (such as memory, CPU cycles, and devices), and the hypervisor is responsible for controlling and arbitrating access to the underlying hardware.

Figure 3-3 shows a simple form of Type 1 VMM in which the VMM (the hypervisor) is running directly on the bare metal (the underlying hardware) and several guest OSs are running on top of the VMM.

Figure 3-3: Architecture of Type 1 VMM
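The hypervisor's role as arbiter can be pictured with a small toy model. The Python sketch below is purely illustrative and is not how any real hypervisor is implemented: the `ToyHypervisor` class, its method names, and the device names are all hypothetical. It shows only the two ideas from the text: each partition gets its own set of resources, and every hardware access is checked by the hypervisor.

```python
class ToyHypervisor:
    """Hypothetical model of a hypervisor that arbitrates hardware access."""

    def __init__(self, total_memory_mb):
        self.free_memory_mb = total_memory_mb
        self.memory = {}        # partition name -> memory granted (MB)
        self.device_owner = {}  # device name -> owning partition name

    def create_partition(self, name, memory_mb, devices=()):
        """Grant a new partition its own memory and devices, or refuse."""
        devices = list(devices)
        if name in self.memory:
            raise ValueError(f"partition {name!r} already exists")
        if memory_mb > self.free_memory_mb:
            raise ValueError("not enough free memory")
        taken = [d for d in devices if d in self.device_owner]
        if taken:
            raise ValueError(f"devices already assigned: {taken}")
        self.free_memory_mb -= memory_mb
        self.memory[name] = memory_mb
        for device in devices:
            self.device_owner[device] = name

    def access(self, name, device):
        """Allow access only to a device owned by the calling partition."""
        if self.device_owner.get(device) != name:
            raise PermissionError(f"{name!r} does not own {device!r}")
        return f"{name} -> {device}"


hv = ToyHypervisor(total_memory_mb=4096)
hv.create_partition("guest-a", 1024, ["nic0"])
hv.create_partition("guest-b", 2048, ["disk0"])
print(hv.access("guest-a", "nic0"))
```

In this sketch a call such as `hv.access("guest-b", "nic0")` raises `PermissionError`, which is the toy equivalent of one partition being kept away from another partition's hardware.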

Going forward, hypervisor-based virtualization has the greatest performance potential, and in a moment we’ll see how this will be implemented in Windows Server 2008. But first let’s compare two variations of Type 1 VMM: monolithic and microkernelized.

Monolithic Hypervisor

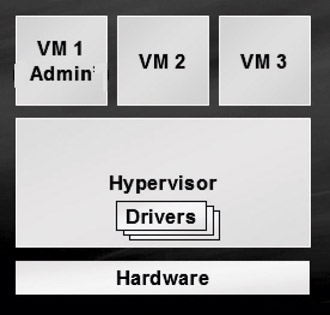

In the monolithic model, the hypervisor has its own drivers for accessing the hardware beneath it. (See Figure 3-4.) Guest OSs run in VMs on top of the hypervisor, and when a guest needs to access hardware it does so through the hypervisor and its driver model. Typically, one of these guest OSs is the administrator or console OS within which you run the tools that provision, manage, and monitor all guest OSs running on the system.

Figure 3-4: Monolithic hypervisor

The monolithic hypervisor model provides excellent performance, but it can have weaknesses in the areas of security and stability. This is because this model inherently has a greater attack surface and much greater potential for security concerns due to the fact that drivers (and even sometimes third-party code) run in this very sensitive area. For example, if malware were downloaded onto the system, it could install a keystroke logger masquerading as a device driver in the hypervisor. If this happened, every guest OS running on the system would be compromised, which obviously isn’t good. Even worse, once you’ve been “hyperjacked” there’s no way the operating systems running above can tell, because the hypervisor is invisible to them and they can be lied to by the hypervisor!

The other problem is stability: if a driver were updated in the hypervisor and the new driver had a bug in it, the whole system would be affected, including all its virtual machines. Driver stability is thus a critical issue for this model, and introducing any third-party code has the potential to cause problems. And given the evolving nature of server hardware, the frequent need for new and updated drivers increases the chances of something bad happening. You can think of the monolithic model as a “fat hypervisor” model because of all the drivers the hypervisor needs to support.

Microkernelized Hypervisor

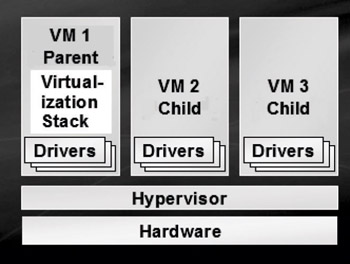

Now contrast the monolithic approach just mentioned with the microkernelized model. (See Figure 3-5.) Here you have a truly “thin” hypervisor that has no drivers running within it. Yes, that’s right: the hypervisor has no drivers at all. Instead, drivers are run in each partition so that each guest OS running within a virtual machine can access the hardware through the hypervisor. This arrangement makes each virtual machine a completely separate partition for greater security and reliability.

Figure 3-5: Microkernelized hypervisor
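One way to picture the stability difference between the two models is to ask which partitions a single buggy driver can take down. The following Python sketch is a deliberately simplified illustration under the text's description (drivers inside the hypervisor in the monolithic model, drivers inside partitions in the microkernelized model). The function name `blast_radius` and the partition names are hypothetical, and the model ignores knock-on effects such as one partition depending on another for I/O.

```python
def blast_radius(model, partitions, faulty_partition=None):
    """Return the set of partitions affected by one buggy driver.

    model            -- "monolithic" or "microkernelized"
    partitions       -- names of all partitions on the system
    faulty_partition -- partition hosting the buggy driver
                        (only meaningful for the microkernelized model)
    """
    partitions = set(partitions)
    if model == "monolithic":
        # The driver runs inside the hypervisor, beneath every partition,
        # so a fault there reaches all of them.
        return partitions
    if model == "microkernelized":
        # The hypervisor hosts no drivers; the fault stays in the
        # partition that loaded the driver (in this simplified model).
        if faulty_partition not in partitions:
            raise ValueError("faulty_partition must name an existing partition")
        return {faulty_partition}
    raise ValueError(f"unknown model: {model!r}")


system = ["parent", "child-1", "child-2"]
print(sorted(blast_radius("monolithic", system)))
print(sorted(blast_radius("microkernelized", system, "child-1")))
```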

In the microkernelized model, which is used in Windows Server virtualization in Windows Server 2008, one VM is the parent partition while the others are child partitions. A partition is the basic unit of isolation supported by the hypervisor. A partition is made up of a physical address space together with one or more virtual processors, and you can assign specific hardware resources (such as CPU cycles, memory, and devices) to the partition. The parent partition is the partition that creates and manages the child partitions, and it contains a virtualization stack that is used to control these child partitions. The parent partition is generally also the root partition because it is the partition that is created first and owns all resources not owned by the hypervisor. And being the default owner of all hardware resources means the root partition (that is, the parent) is also in charge of power management, plug and play, managing hardware failure events, and even loading and booting the hypervisor.
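The parent/child relationship described above can be sketched as a small data structure. This is a conceptual illustration only, not the Windows Server virtualization API: the `Partition` class, its fields, and the `create_child` method are hypothetical names chosen for this example. The root partition starts out owning every resource, and creating a child hands part of that ownership over.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Hypothetical model of a partition: address space, virtual CPUs, devices."""
    name: str
    virtual_processors: int
    memory_mb: int
    devices: set = field(default_factory=set)
    children: list = field(default_factory=list)

    def create_child(self, name, virtual_processors, memory_mb, devices=()):
        """Carve a child partition out of resources this partition owns."""
        wanted = set(devices)
        if memory_mb > self.memory_mb:
            raise ValueError("parent does not own enough memory")
        if not wanted <= self.devices:
            raise ValueError("parent does not own all requested devices")
        # Ownership moves from the parent to the new child.
        self.memory_mb -= memory_mb
        self.devices -= wanted
        child = Partition(name, virtual_processors, memory_mb, wanted)
        self.children.append(child)
        return child


# The root partition is created first and owns everything by default.
root = Partition("root", virtual_processors=4, memory_mb=8192,
                 devices={"nic0", "disk0", "gpu0"})
child = root.create_child("child-1", virtual_processors=2,
                          memory_mb=2048, devices={"nic0"})
print(root.memory_mb, sorted(root.devices), sorted(child.devices))
```

Whatever is not handed to a child stays with the root, which mirrors the text's point that the root partition is the default owner of all hardware resources.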

Within the parent partition is the virtualization stack, a collection of software components that sit on top of the hypervisor and work together with it to support the virtual machines running on the system. The virtualization stack talks with the hypervisor and performs any virtualization functions not directly supplied by the hypervisor. Most of these functions are centered around the creation and management of child partitions and the resources (CPU, memory, and devices) they need.

The virtualization stack also exposes a management interface, which in Windows Server 2008 is a WMI provider whose APIs will be made publicly known. This means that not only will the tools for managing virtual machines running on Windows Server 2008 use these APIs, but third-party system management vendors will also be able to code new tools for managing, configuring, and monitoring VMs running on Windows Server 2008.

The advantage of the microkernelized approach used by Windows Server virtualization over the monolithic approach is that the drivers needed between the parent partition and the physical server don’t require any changes to the driver model. In other words, existing drivers just work. Microsoft chose this route because requiring new drivers would have been a showstopper. And as for the guest OSs, Microsoft will provide the necessary facilities so that these OSs just work either through emulation or through new synthetic devices.

On the other hand, one could argue that the microkernelized approach does suffer a slight performance hit compared with the monolithic model. However, security is paramount nowadays, so sacrificing a percentage point or two of performance for a reduced attack surface and greater stability is a no-brainer in most enterprises.

| Tip | What’s the difference between a virtual machine and a partition? Think of a virtual machine as comprising a partition together with its state. |
