Understanding Virtualization in Windows Server 2008

Before I get you too excited, however, you need to know that what I’m going to describe now is not yet present in Windows Server 2008 Beta 3, the platform that this book covers. It’s coming soon, however. Within 180 days of the release of Windows Server 2008, you should be able to download and install the bits for Windows Server virtualization that will make possible everything that I’ve talked about in the previous section and am going to describe now. In fact, if you’re in a hotel after a long day at TechEd and you’re reading this book for relaxation (that is, you’re a typical geek), you can probably already download tools for your current prerelease build of Windows Server 2008 that might let you test some of these Windows Server virtualization technologies by creating and managing virtual machines on your latest Windows Server 2008 build.

I said “might” let you test these new technologies. Why? First, Windows Server virtualization is an x64 Editions technology only and can’t be installed on x86 builds of Windows Server 2008. Second, it requires processors with hardware-assisted virtualization support, which currently means AMD-V and Intel VT processors only. These extensions are needed because the hypervisor runs out of context (effectively in ring -1), which means that the code and data for the hypervisor are not mapped into the address space of the guest. As a result, the hypervisor has to rely on the processor to support various intercepts, which are provided by these extensions. And finally, for security reasons it requires processor support for hardware-enabled Data Execution Prevention (DEP), which Intel describes as XD (eXecute Disable) and AMD describes as NX (No eXecute). So if you have suitable hardware and lots of memory, you should be able to start testing Windows Server virtualization as it becomes available in prerelease form for Windows Server 2008.
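
To make the three hardware prerequisites concrete, here is a minimal sketch of a check against a list of CPU feature flags. It is illustrative only: it assumes the flags arrive as a whitespace-separated string using the names Linux reports in /proc/cpuinfo (`lm` for 64-bit long mode, `vmx` for Intel VT, `svm` for AMD-V, `nx` for NX/XD). The function name and the shape of the result are hypothetical, and this is not how Windows Server virtualization itself performs the check.

```python
def check_virtualization_prereqs(cpu_flags: str) -> dict:
    """Evaluate the three prerequisites against a string of CPU flags.

    Assumption: flag names follow Linux /proc/cpuinfo conventions.
    """
    flags = set(cpu_flags.lower().split())
    result = {
        # x64 only: the processor must support 64-bit long mode.
        "x64": "lm" in flags,
        # Hardware-assisted virtualization: Intel VT (vmx) or AMD-V (svm).
        "hardware_virtualization": bool({"vmx", "svm"} & flags),
        # Hardware-enabled DEP: NX (AMD) / XD (Intel) both appear as "nx".
        "dep": "nx" in flags,
    }
    # Eligible only if every individual requirement is met.
    result["eligible"] = all(result.values())
    return result


report = check_virtualization_prereqs("fpu lm nx svm")
print(report)
```

A processor that lacks any one of the three (for example, a 64-bit chip with NX but without VT or AMD-V) comes back with `eligible` set to `False`.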

Let’s dig deeper into the architecture of Windows Server virtualization running on Windows Server 2008. Remember, what we’re looking at won’t be available until after Windows Server 2008 RTMs. Today, in Beta 3, there is no hypervisor in Windows Server 2008, and the operating system basically runs on top of the metal the same way Windows Server 2003 does. So we’re temporarily time-shifting into the future here, and assuming that when we try to add the Windows Virtualization role to our current Windows Server 2008 build, it actually does something!

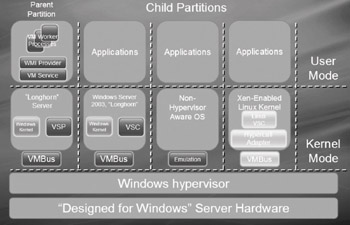

Figure 3-6 shows the big picture of what the architecture of Windows Server 2008 looks like with the virtualization bits installed.

Figure 3-6: Detailed architecture of Windows Server virtualization

Partition 1: Parent

Let’s unpack this diagram one piece at a time. First, note that we’ve got one parent partition (at the left) together with three child partitions, all running on top of the Windows hypervisor. In the parent partition, running in kernel mode, there must be a guest OS, which must be Windows Server 2008 but can be either a full installation of Windows Server 2008 or a Windows server core installation. Being able to run a Windows server core installation in the parent partition is significant because it means we can minimize the footprint and attack surface of our system when we use it as a platform for hosting virtual machines.

Running within the guest OS is the Virtualization Service Provider (VSP), a “server” component that runs within the parent partition (or any other partition that owns hardware). The VSP talks to the device drivers and acts as a kind of multiplexer, offering hardware services to whoever requests them (for example, in response to I/O requests). The VSP can pass on such requests either directly to a physical device through a driver running in kernel or user mode, or to a native service such as the file system to handle.

The VSP plays a key role in how device virtualization works. Previous Microsoft virtualization solutions such as Virtual PC and Virtual Server use emulation to enable guest OSs to access hardware. Virtual PC, for example, emulates a 1997-era motherboard, video card, network card, and storage for its guest OSs. This is done for compatibility reasons to allow the greatest possible number of different guest OSs to run within VMs on Virtual PC. (Something like over 1,000 different operating systems and versions can run as guests on Virtual PC.) Device emulation is great for compatibility purposes, but generally speaking it’s lousy for performance. VSPs avoid the emulation problem, however, as we’ll see in a moment.

In the user-mode portion of the parent partition are the Virtual Machine Service (VM Service), which provides facilities to manage virtual machines and their worker processes; a Virtual Machine Worker Process, which is a process within the virtualization stack that represents and services a specific virtual machine running on the system (there is one VM Worker Process for each VM running on the system); and a WMI Provider that provides a set of interfaces for managing virtualization on the system. As mentioned previously, these WMI Providers will be publicly documented on MSDN, so you’ll be able to automate virtualization tasks using scripts if you know how. Together, these various components make up the user-mode portion of the virtualization stack.

Finally, at the bottom of the kernel portion of the parent partition is the VMBus, which represents a system for sending requests and data between virtual machines running on the system.

Partition 2: Child with Enlightened Guest

The second partition from the left in Figure 3-6 shows an “enlightened” guest OS running within a child partition. An enlightened guest is an operating system that is aware that it is running on top of the hypervisor. As a result, the guest uses an optimized virtual machine interface. A guest that is fully enlightened has no need of an emulator; one that is partially enlightened might need emulation for some types of hardware devices. Windows Server 2008 is an example of a fully enlightened guest and is shown in partition 2 in the figure. (Windows Vista is another possible example of a fully enlightened guest.) The Windows Server 2003 guest OS shown in this partition, however, is only a partially enlightened, or “driver-enlightened,” guest OS.

By contrast, a legacy guest is an operating system that was written to run on a specific type of physical machine and therefore has no knowledge or understanding that it is running within a virtualized environment. To run within a VM hosted by Windows Server virtualization, a legacy guest requires substantial infrastructure, including a system BIOS and a wide variety of emulated devices. This infrastructure is not provided by the hypervisor but by an external monitor that we’ll discuss shortly.
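
The distinction between fully enlightened, partially enlightened, and legacy guests boils down to which path a device request takes. The sketch below models that decision. The names `GuestKind` and `device_path`, and the `has_enlightened_driver` parameter, are hypothetical labels invented for illustration and do not correspond to any real Windows Server virtualization API.

```python
from enum import Enum


class GuestKind(Enum):
    FULLY_ENLIGHTENED = "fully enlightened"
    PARTIALLY_ENLIGHTENED = "partially enlightened"
    LEGACY = "legacy"


def device_path(kind: GuestKind, has_enlightened_driver: bool) -> str:
    """Return 'optimized' or 'emulated' for one device in one guest.

    has_enlightened_driver only matters for partially enlightened guests,
    which use the optimized interface for some devices and fall back to
    emulation for the rest.
    """
    if kind is GuestKind.FULLY_ENLIGHTENED:
        return "optimized"   # no emulator needed at all
    if kind is GuestKind.PARTIALLY_ENLIGHTENED:
        return "optimized" if has_enlightened_driver else "emulated"
    return "emulated"        # legacy guests always see emulated devices


print(device_path(GuestKind.PARTIALLY_ENLIGHTENED, has_enlightened_driver=False))
```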

Running in kernel mode within the enlightened guest OS is the Virtualization Service Client (VSC), a “client” component that runs within a child partition and consumes services. The key thing here is that there is one VSP/VSC pair for each device type. For example, say a user-mode application running in partition 2 (the child partition second from the left) wants to write something to a hard drive, which is server hardware. The process works like this:

- The application calls the appropriate file system driver running in kernel mode in the child partition.
- The file system driver notifies the VSC that it needs access to hardware.
- The VSC passes the request over the VMBus to the corresponding VSP in partition 1 (the parent partition) using shared memory and hypervisor IPC messages. (You can think of the VMBus as a protocol with a supporting library for transferring data between different partitions through a ring buffer. If that’s too confusing, think of it as a pipe. Also, while the diagram makes it look as though traffic goes through all the child partitions, this is not really the case: the VMBus is actually a point-to-point inter-partition bus.)
- The VSP then writes to the hard drive through the storage stack and the appropriate port driver.
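
The steps above can be sketched as a toy model: a storage VSC in the child partition places a write request on a point-to-point channel, and the matching VSP in the parent partition drains the channel and performs the write. Everything here is a simplification under stated assumptions. The class names (`VMBusChannel`, `StorageVSC`, `StorageVSP`) and the message format are hypothetical, a Python `deque` stands in for the shared-memory ring buffer, and a dictionary stands in for the physical disk.

```python
from collections import deque
from typing import Optional


class VMBusChannel:
    """Toy point-to-point channel between one child partition and the parent."""

    def __init__(self, capacity: int = 8):
        self._ring = deque()          # stand-in for the shared-memory ring buffer
        self._capacity = capacity

    def send(self, message: dict) -> None:
        if len(self._ring) >= self._capacity:
            raise BufferError("ring buffer full")
        self._ring.append(message)

    def receive(self) -> Optional[dict]:
        return self._ring.popleft() if self._ring else None


class StorageVSC:
    """Child-partition side: consumes storage services, owns no hardware."""

    def __init__(self, channel: VMBusChannel):
        self._channel = channel

    def write(self, block: int, data: bytes) -> None:
        # Step 3: pass the request over the VMBus to the VSP.
        self._channel.send({"op": "write", "block": block, "data": data})


class StorageVSP:
    """Parent-partition side: owns the (simulated) physical disk."""

    def __init__(self, channel: VMBusChannel):
        self._channel = channel
        self.disk = {}                # block number -> bytes

    def service_pending(self) -> int:
        """Handle every queued request; return how many were handled."""
        handled = 0
        message = self._channel.receive()
        while message is not None:
            if message["op"] == "write":
                # Step 4: the VSP performs the actual write.
                self.disk[message["block"]] = message["data"]
            handled += 1
            message = self._channel.receive()
        return handled


channel = VMBusChannel()
vsc, vsp = StorageVSC(channel), StorageVSP(channel)
vsc.write(0, b"hello")
vsp.service_pending()
print(vsp.disk)
```

Giving each child its own channel object mirrors the point made above that the VMBus is point-to-point rather than a shared bus that all partitions sit on.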

Microsoft plans on providing VSP/VSC pairs for storage, networking, video, and input devices for Windows Server virtualization. Third-party IHVs will likely provide additional VSP/VSC pairs to support additional hardware.

Speaking of writing things to disk, let’s pause a moment before we go on and explain how pass-through disk access works in Windows Server virtualization. Pass-through disk access represents an entire physical disk as a virtual disk within the guest. The data and commands are thus “passed through” to the physical disk via the partition’s native storage stack without any intervening processing by the virtual storage stack. This process contrasts with a virtual disk, where the virtual storage stack relies on its parser component to make the underlying storage (which could be a .vhd or an .iso image) look like a physical disk to the guest. Pass-through disk access is totally independent of the underlying physical connection involved. For example, the disk might be direct-attached storage (IDE disk, USB flash disk, FireWire disk) or it might be on a storage area network (SAN).
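
The contrast between pass-through access and a virtual disk can be illustrated with another toy model. In the sketch below, `PassThroughDisk` forwards block requests unchanged to the whole physical disk, while `ImageBackedDisk` uses a small "parser" step to translate guest block numbers into offsets inside a backing image. All class names are hypothetical, and the 64-byte header is an invented layout for illustration, not the real .vhd format.

```python
BLOCK_SIZE = 512


def _pad(data: bytes) -> bytes:
    """Pad or truncate a payload to exactly one block."""
    return data.ljust(BLOCK_SIZE, b"\0")[:BLOCK_SIZE]


class PhysicalDisk:
    """Stand-in for a whole physical disk (direct-attached or on a SAN)."""

    def __init__(self, num_blocks: int):
        self.blocks = [bytes(BLOCK_SIZE) for _ in range(num_blocks)]

    def write_block(self, index: int, data: bytes) -> None:
        self.blocks[index] = _pad(data)

    def read_block(self, index: int) -> bytes:
        return self.blocks[index]


class PassThroughDisk:
    """Guest sees the entire physical disk; requests are forwarded as-is."""

    def __init__(self, physical: PhysicalDisk):
        self._physical = physical

    def write_block(self, index: int, data: bytes) -> None:
        self._physical.write_block(index, data)   # no translation step

    def read_block(self, index: int) -> bytes:
        return self._physical.read_block(index)


class ImageBackedDisk:
    """Virtual disk: a parser makes a backing image look like a disk."""

    HEADER_SIZE = 64  # hypothetical header length, not the real .vhd layout

    def __init__(self, num_blocks: int):
        self.image = bytearray(self.HEADER_SIZE + num_blocks * BLOCK_SIZE)

    def _offset(self, index: int) -> int:
        # The "parser": map a guest block number to an offset in the image.
        return self.HEADER_SIZE + index * BLOCK_SIZE

    def write_block(self, index: int, data: bytes) -> None:
        start = self._offset(index)
        self.image[start:start + BLOCK_SIZE] = _pad(data)

    def read_block(self, index: int) -> bytes:
        start = self._offset(index)
        return bytes(self.image[start:start + BLOCK_SIZE])
```

Both classes expose the same `read_block`/`write_block` interface, which is the point: the guest sees a disk either way, and only the work done underneath differs.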

Now let’s resume our discussion concerning the architecture of Windows Server virtualization and describe the third and fourth partitions shown in Figure 3-6 above.

Partition 3: Child with Legacy Guest

In the third partition from the left is a legacy guest OS such as MS-DOS. Yes, there are still a few places (such as banks) that run DOS for certain purposes. Hopefully, they’ve thrown out all their 286 PCs though. The thing to understand here is that basically this child partition works like Virtual Server. In other words, it uses emulation to provide DOS with a simulated hardware environment that it can understand. As a result, there is no VSC component here running in kernel mode.

Partition 4: Child with Guest Running Linux

Finally, in the fourth partition on the right is Linux running as a guest OS in a child partition. Microsoft recognizes the importance of interoperability in today’s enterprises. More specifically, Microsoft knows that its customers want to be able to run any OS on top of the hypervisor that Windows Server virtualization provides, and therefore it can’t relegate Linux (or any other OS) to second-class status by forcing it to run on emulated hardware. That’s why Microsoft has decided to partner with XenSource to build VSCs for Linux, which will enable Linux to run as an enlightened guest within a child partition on Windows Server 2008. I knew those FOSS guys would finally see the light one day…
