Speeding Data Exports

| < Day Day Up > |

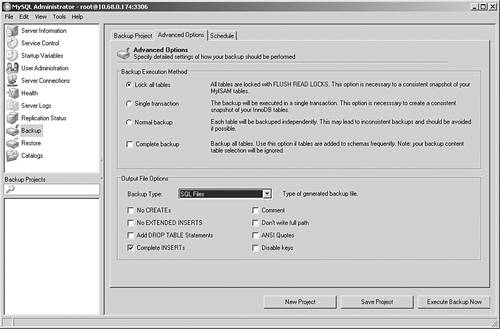

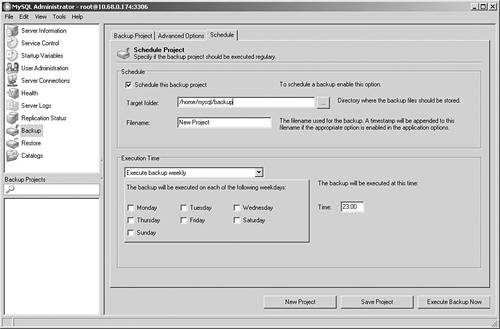

| You have some choices when exporting information from your MySQL server. Your first choice is to pick a binary or text-based method. If you are simply interested in backing up information for a potential restoration at some point in the future, the more rapid binary format is your best choice. On the other hand, if you want the platform independence and interoperability of text-based data, choose this kind of approach, although it will run more slowly than its binary counterpart for large amounts of information. Although mysqlhotcopy is a great tool for performing database backups in binary format, it is currently not available on several key platforms, nor is it particularly designed for data import and export. Consequently, this chapter devotes most of its attention to the powerful and versatile mysqldump utility for text-based usage, especially as invoked from a shell prompt. The MySQL Administrator backup utility also provides a handy graphical user interface for many of the same types of text-based data import and export operations, with the added convenience of schedule control. The MySQL Administrator backup utility lets you define repeatable projects and then gives you fine-grained control over which elements will be affected (see Figure 15.1). Figure 15.1. Choosing a table for backup in the MySQL Administrator. Once a backup project has been defined, you may fine-tune how the operation will proceed (see Figure 15.2). Figure 15.2. Managing details of the backup operation. After defining and configuring your backup, you may specify a schedule for execution (see Figure 15.3). Figure 15.3. Selecting a repeatable execution schedule for a MySQL Administrator backup. Because the primary focus is on performance optimization, this chapter avoids an exhaustive overview of all possible options. Instead, it describes several scenarios, along with the correct invocation to make them run as quickly as possible. It's also important to note that certain mysqldump directives, such as -opt, actually include several other options. However, to keep things simple, any relevant options are specifically cited, regardless of whether they're included "for free" as part of other options. Finally, it's important to decide what you want to do when it comes time to reload this exported information. You can choose between the SQL script generated by mysqldump or the mysqlimport utility (and, optionally, its corresponding LOAD DATA INFILE statement). Your answer will determine the correct data exporting tool. Generally, if you want to back up and then copy or restore an entire database and don't want to use a binary format, first run mysqldump, and then import the information using the generated SQL script. However, you can reap performance benefits from exporting information with SELECT ... INTO OUTFILE and then loading this data with either mysqlimport or LOAD DATA INFILE. If you decide to use these commands for full database backups, you need a little more preparation, primarily in the area of reliably automating the table generation process followed by launching mysqlimport/LOAD DATA INFILE. We ran a battery of tests on a variety of tables to gauge the efficiency differences between the two options. We found that the SELECT ... INTO OUTFILE/LOAD DATA INFILE (mysqlimport) pairing generally ran 25% to 30% faster than the mysqldump alternative. Others have reported much more dramatic performance differences between the two choices. The Importance of Regular ExportsEven though this book's primary mission is to help improve MySQL productivity, it's worth mentioning how important it is to combine the power and control of binary backups (including InnoDB's transaction logs where applicable) with regularly scheduled, ASCII-based exports. Whether you use mysqldump or SELECT ... INTO OUTFILE, the extra safety realized by running these exports (and safeguarding their results) protects you from possible data integrity damage due to circumstances beyond your control. Avoiding Excessive Memory ConsumptionThis book has continually stressed how important it is to take advantage of in-memory processing to speed database access. However, several exceptions to this rule exist. One of these arises when exporting large amounts of data. If you forget to include either the quick or -opt directives when launching mysqldump (especially in older versions), MySQL attempts to retrieve the data export into memory before sending it on to its destination. For a small database, this isn't a problem. However, for a more voluminous database (or smaller amount of memory), you run the risk of crowding this finite resource with export results. Be aware of this possibility when launching your data retrieval process. Fortunately, more recent versions of MySQL include this option as a default. Concurrency and ExportsGenerally, it's developers who focus on concurrency issues when building their applications. Database administrators also consider concurrency because they want their data load operations to have minimal impact on other users. Recall that concurrency and performance were discussed earlier as part of Chapter 9, "Developing High-Speed Applications," review of optimal application design. However, database administrators also need to keep concurrency in mind when running data extraction. In particular, you should decide whether you want to lock your tables (via the --lock-tables attribute) or treat the export as one distinct transaction (via the --single-transaction attribute). The - single-transaction attribute is only meaningful as long as the storage engine is InnoDB. Which should you choose? Much depends on the amount of data to be exported, along with the transactional and concurrency demands on your database. For example, although opting for the --single-transaction tactic runs a slight risk of added undo space requirements, this is more than offset by the increased concurrency supplied by letting you avoid locking your tables. Although mysqldump operates very quickly, if your database is large, it still may take quite some time for this utility to finish its work. If you chose -lock-tables, database users may experience severe concurrency problems until the operation finishes. From the perspective of concurrency alone, --single-transaction represents the better choice in most cases. Retrieving Subsets of InformationThe mysqldump utility offers administrators increasingly fine levels of granularity when deciding what to export. You might elect to export all of your databases, a single database, or even a single table with one database. In fact, you might even create a more selective extract: a set of rows from within a single table. To export a subset of a table, include either the --where or w directives with your mysqldump request. For example, if you want to export only those transactions older than January 1, 2003, request the following: [mysql@DBSERVER1 /tmp/results]$ mysqldump -w=transaction_date<=2003-01-01 high_hat transactions The results include your WHERE directive: ... ... -- -- Dumping data for table `transactions` -- -- WHERE: transaction_date<=2003-01-01 ... ... Be aware that the --where option can be a bit finicky, especially when it comes to quote placement; you might need to experiment to get it just right for your query. If your query criteria don't include any spaces or special characters (such as those that might confuse the operating system into thinking that you are making a request of it), you can even omit quotes. Also remember that you should ideally have the right indexes in place to help the export run more quickly, especially if the export is run regularly. Copying Table Structures Without DataAs described in Chapter 1, "Setting Up an Optimization Test Environment," it's important to replicate your production schema, data, hardware, and configuration environment when performing optimization tests. However, there might be times when you don't want or need all of your data, but do want the correct schema. One quick way to create identical copies of your schema without all associated data and indexes is to specify the --no-data option when running mysqldump: [mysql@DBSERVER1 /tmp/results]$ mysqldump --no-data high_hat -- MySQL dump 8.23 -- -- Host: localhost Database: high_hat --------------------------------------------------------- -- Server version 5.0.2-alpha-max-log -- -- Table structure for table `awards` -- CREATE TABLE awards ( award_id int(10) unsigned NOT NULL auto_increment, customer_id int(10) unsigned NOT NULL default '0', award_date date NOT NULL default '0000-00-00', miles_deducted int(10) unsigned NOT NULL default '0', origin char(3) NOT NULL default '', destination char(3) NOT NULL default '', PRIMARY KEY (award_id) ) ENGINE=MyISAM DEFAULT CHARSET=latin1; ... ... You can even request a single table: [mysql@DBSERVER1 /tmp/results]$ mysqldump --no-data high_hat customer_master This option creates an identical, empty schema (including index definitions) that you can deploy on any MySQL server. The following section looks at how you can speed up index re-creation on the destination system. Delaying Index Re-creation MyISAMWhen copying large tables between systems, it is usually more advantageous to load all data first, and only then rebuild your indexes. Otherwise, you might find that it takes much longer to complete the reloading on the destination system because each row loaded requires index maintenance even though MyISAM offers algorithms to quickly perform this index work for empty tables when using LOAD DATA INFILE. To delay these indexing operations, specify --disable-keys when running mysqldump. Note that this option is only effective for MyISAM tables; if you specify it for InnoDB tables, MySQL still performs the laborious task of updating indexes in real time. Also, in earlier versions of MySQL, it was necessary to run several different commands, including launching myisamchk, to achieve the same results; passing this flag is much less cumbersome. [mysql@DBSERVER1 /tmp/results]$ mysqldump --no-data -disable-keys high_hat customer_master When chosen, this option embeds directives into the output stream. These commands instruct MySQL to rebuild the indexes after all data has been loaded: CREATE TABLE awards ( award_id int(10) unsigned NOT NULL auto_increment, ... ... ) ENGINE=MyISAM DEFAULT CHARSET=latin1; ... ... /*!40000 ALTER TABLE `awards` DISABLE KEYS */; ... ... INSERT INTO awards VALUES (98481,30018,'2003-09-05',50000,'ORD','LGA'); INSERT INTO awards VALUES (98482,26818,'2001-10-25',100000,'PDX','LHR'); INSERT INTO awards VALUES (98483,13293,'2003-03-19',50000,'ALB','PHX'); ... ... /*!40000 ALTER TABLE `awards` ENABLE KEYS */; Delaying Index Re-creation InnoDBDue to architectural differences between MyISAM and InnoDB, delaying index re-creation when loading InnoDB tables won't benefit import performance. However, there are still a number of significant steps that you can take to make imports run faster. For example, one handy InnoDB feature is its disabling of foreign key constraint checks for the data export/import process. With these constraint checks in place, there's a good chance that your reload process might fail if rows are inserted out of sequence. This topic is discussed later in the chapter. Also, if at all possible try to export your information in primary key order; reloading will proceed many times faster by simply following this basic directive. If you are unable to make this happen during export, consider sorting the file prior to reloading. Preparing for Data ReloadYou'll discover the most optimal ways to import information in a moment. However, there are a number of performance-enhancing suggestions you can make while generating your export. These simple suggestions can make a big difference when it comes time to reload your exported data. Multirow INSERTTo begin, you can request that mysqldump generate an export file that inserts multiple rows as part of each INSERT operation. This helps boost the speed of reloading the information later, while keeping the size of the extract file smaller. For example, contrast the following small samples of the traditional and multirow versions of this output: INSERT INTO awards VALUES (1,28565,'2001-08-01',75000,'ORD','SFO'); INSERT INTO awards VALUES (2,6850,'2001-05-25',75000,'MSP','CDG'); INSERT INTO awards VALUES (3,10665,'2001-09-06',50000,'SIN','SEA'); INSERT INTO awards VALUES (4,11347,'2001-03-22',50000,'IAD','RIO'); versus INSERT INTO awards VALUES (1,28565,'2001-08-01',75000,'ORD','SFO'),(2,6850,'2001-05-25' To request a multiple row insert, simply specify --extended-insert when you launch mysqldump: [mysql@DBSERVER1 /tmp/results]$ mysqldump -extended-insert high_hat awards To make these multirow SQL commands as big and efficient as possible, provide a large value for max_allowed_packet when you launch mysqldump. However, be certain that this value doesn't exceed the size of the same variable on the server. INSERT DELAYEDIn Chapter 8, "Advanced SQL Tips," review of optimal SQL tactics you saw how INSERT DELAYED takes advantage of memory buffering to boost multiclient access to a MyISAM-hosted table. If you want to employ delayed inserts when reloading information, specify --delay when you run mysqldump. Remember that this does come with a price additional memory consumption. Generally, you should only request it for those tables that are likely to be accessed (in read/write mode) by other clients at the same time the reload is happening. You will receive a much better return on investment by using multi-row INSERT statements as seen earlier in this chapter. Locking TablesLocking your target tables is one simple way to reduce overhead (and boost performance) when reloading large blocks of data. By including --add-lock with your mysqldump command, MySQL embeds a LOCK TABLES ... WRITE directive after the CREATE TABLE statement, but before the INSERT operations. After the inserts have been completed, MySQL appends UNLOCK TABLES to release the table lock. By delaying key buffer flushes until when the lock is released, MySQL is able to reduce overhead and boost loading speed. You examined the impact of locks on multiuser database concurrency earlier; use this directive wisely when loading information onto heavily accessed database servers. Using SELECT ... INTO OUTFILEAt the beginning of this chapter, you learned how the SELECT ... INTO OUTFILE/LOAD DATA INFILE SQL statement pair can extract and then import information more quickly than the mysqldump/mysqlimport utility pair. If you choose the SQL route, simply generate your SELECT statement as follows: mysql> SELECT * FROM flights WHERE flight_id < 100 INTO OUTFILE '/tmp/flights' Take a look at some of the contents of the resulting file: [mysql@DBSERVER1 /tmp]$ head flights 1 2358 2003-03-09 IAD MSP 2 109 2002-11-12 LGW CDG 3 9893 2001-10-30 PHX KUL 4 642 2004-07-23 ROM FRA 5 8845 2002-08-11 SFO SJO ... ... As you saw earlier, this type of file loads in approximately one-quarter to one-third less time than the collection of SQL statements created by mysqldump. Improving Network PerformanceIf you are planning to extract data from one machine and then insert the same information onto another machine in real time across your network, be certain that your MySQL communication variables are set to handle this type of task as efficiently as possible. These parameters (including net_buffer_length, max_allowed_packet, net_retry_count, and so on) are discussed in Chapters 10, "General Server Performance and Parameters Tuning," and 14, "Operating System, Web Server and Connectivity Tuning." In addition to tuning these parameters, you might also pass --compress to utilities such as mysqldump and mysqlimport. This requests that they make use of compression (if available) to reduce the amount of traffic flowing across your network. When you consider the amount of repetitive information typically found in most ASCII data streams, compression might be able to squeeze significant volume from this communication. From the parameters listed above, --compress is likely to have the most impact. |

| < Day Day Up > |

EAN: 2147483647

Pages: 131