PCI and Future Bus Architectures


There was a time when network servers weren't expected to do much more than provide shared file-and-print services. Today, these same boxes are being asked to host applications (such as databases), act as firewalls, process intensive multimedia data, and support a multitude of peripheral devices.

Network backbones have increased in speed to accommodate all of the requests going to and from servers and all the data that must pass through them, and processor speeds and clock rates have climbed as well. Meanwhile, the servers themselves have had to endure a lot of finger-pointing as the source of traffic bottlenecks.

The I/O capacity and throughput of servers are the keys to smooth operation. But when the bus architecture has trouble shouldering the burden placed on I/O subsystems, the rest of the network notices. Often, even a fast CPU has to slow down to the bus speed when it wants to communicate with an adapter in the system.

A few years ago, Peripheral Component Interconnect (PCI) became widely adopted as the de facto bus for PC systems. Chances are, if you bought a system in the past few years, it came with multiple PCI slots.

But even though PCI has a higher transfer rate and clock speed than the previous EISA, ISA, and Micro Channel Architecture (MCA) buses, today's high-speed networks need something more.

There are three efforts to develop the next step beyond PCI: PCI-X, Future I/O, and Next Generation I/O.

In this tutorial, I'll first look at PCI and what it offers. Then, I'll examine the three proposals that hope to inherit PCI's place at the heart of I/O systems.

PCI Overview

Before the PCI bus architecture became the I/O system of choice, the EISA and ISA bus types were the most common.

For devices in EISA and ISA systems to communicate with the CPU, or host, they may be required to first go through an expansion bridge, the memory bus, the bus cache, and the CPU local bus. As you can imagine, this can lead to quite a bit of latency in processing I/O and data requests.

Although buffering, which stores signals if a bus is busy, solved some of the delay issues surrounding EISA and ISA, other problems remained, including what to do if more than one peripheral device needed to use the CPU local bus at the same time.

Because of EISA and ISA limitations, Intel and other vendors created the PCI specification, which is based on the idea of a local bus. This means that instead of peripheral devices having to jump through so many hoops to communicate with the CPU, each device can access the CPU local bus directly. The PCI architecture contains a bridge that serves as the connecting point between the PCI local bus and the CPU local bus and system memory bus. PCI devices are independent of the CPU, which means a CPU can be replaced or upgraded without affecting the devices or requiring a redesign of the bus.

Current PCI implementations are based on a 32-bit architecture and operate at 33MHz or 66MHz with data transfer rates of under 300Mbytes/sec. But as CPU power grows exponentially, in certain instances the current bus technology can't keep up with demands placed on the I/O system.

I/O systems in use today are mostly based on a shared bus architecture, meaning that memory is shared by the host and the devices. However, this approach does have its drawbacks. For example, if one peripheral device monopolizes the bus, then other devices trying to access the bus at the same time will see lower performance.

So, while most systems will continue to use PCI for communicating between the CPU and peripheral devices, in the next year or two PCI will coexist with a new breed of I/O solutions.

X Marks The Spot

In the fall of 1998, Compaq Computer, Hewlett-Packard, and IBM proposed to extend the current PCI for cases where high-performance I/O is desperately needed. Dubbed PCI-X, this specification basically takes the existing PCI technology and moves it from 32 bits to 64 bits, and increases throughput and clock speed.

PCI-X operates at speeds up to 133MHz with transfer rates going higher than 1Gbyte/sec, a significant increase over the traditional PCI architecture. Additionally, PCI-X features more efficient data transfer and simpler electrical timing requirements, which is important as clock frequencies increase. PCI-X remains backward compatible with the current PCI bus.
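The transfer rates quoted for PCI and PCI-X follow from simple arithmetic: bus width times clock rate. The short Python sketch below works through it, assuming one full-width transfer per clock cycle with no protocol overhead (real buses deliver less) and using the nominal clock figures from the text. The function name is mine, for illustration only.

```python
def peak_mbytes_per_sec(width_bits: int, clock_mhz: float) -> float:
    """Theoretical peak rate, assuming one full-width transfer per clock."""
    return width_bits / 8 * clock_mhz


# (label, bus width in bits, nominal clock in MHz)
configs = [
    ("PCI, 32-bit at 33MHz", 32, 33),
    ("PCI, 32-bit at 66MHz", 32, 66),
    ("PCI-X, 64-bit at 133MHz", 64, 133),
]

for label, width, clock in configs:
    print(f"{label}: {peak_mbytes_per_sec(width, clock):.0f} Mbytes/sec")
# PCI, 32-bit at 33MHz: 132 Mbytes/sec
# PCI, 32-bit at 66MHz: 264 Mbytes/sec
# PCI-X, 64-bit at 133MHz: 1064 Mbytes/sec
```

Under these assumptions, both 32-bit PCI figures stay under 300Mbytes/sec, while 64-bit PCI-X at 133MHz edges past 1Gbyte/sec.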

The 64-bit nature of PCI-X's architecture brings it in line with other 64-bit technologies that are becoming common in the industry. Chip manufacturers such as Intel will start shipping 64-bit processors within a year, and the next versions of both NetWare and Windows NT (Windows 2000) will natively support 64-bit addressing. This means that when a system's bus and OS are both running at 64 bits, system performance naturally increases. However, most companies will have quite a task ahead to revamp drivers and other components to conform to the new addressing scheme.

The companies that developed the PCI-X proposal have submitted it to the PCI Special Interest Group (SIG), the body that oversees the PCI specification and its implementation.

Systems that support PCI-X could be on the market as early as the end of 1999. At first, expect to see PCI-X implemented at the server level, where higher levels of I/O are really needed. After that, this architecture could work its way down to workstations and even high-performance PCs within the next few years.

The Future's So Bright

Interestingly, the same camp that's endorsing PCI-X (Compaq, HP, and IBM) is also throwing its considerable weight behind another post-PCI bus architecture.

What they are all working so hard on is Future I/O, which is based on a point-to-point, switched-fabric interconnect. This is an evolutionary leap ahead of the current PCI architecture, where the bus is shared by all devices. In the switched environment, a direct connection between each card and processor means that as new devices are connected, total throughput actually increases. The plan is to integrate the Future I/O protocol into peripheral devices so they can communicate with the switch in a more effective manner.

The initial throughput from Future I/O connections will be 1Gbyte/sec per link in either direction. Work on Future I/O, which is still in the specification stage, should result in one interconnect that can be used for communication among processors in a cluster, as well as for technologies that need the additional bandwidth, such as Fibre Channel, SCSI, and Gigabit Ethernet.
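To see why a switched fabric scales where a shared bus does not, consider the toy Python model below. It is a sketch under generous assumptions: devices on the shared bus split its bandwidth evenly, and the switch is non-blocking, so every link runs at full rate. The 264Mbytes/sec bus figure is an illustrative value for 32-bit, 66MHz PCI, the 1,000Mbytes/sec link figure comes from the Future I/O target above, and the function names are hypothetical.

```python
def shared_bus_per_device(bus_mbytes: float, active_devices: int) -> float:
    """On a shared bus, active devices divide one fixed pool of bandwidth."""
    return bus_mbytes / active_devices


def switched_fabric_total(link_mbytes: float, links: int) -> float:
    """In an idealized non-blocking fabric, each link adds its own bandwidth."""
    return link_mbytes * links


BUS_MBYTES = 264    # assumed: 32-bit PCI at 66MHz, theoretical peak
LINK_MBYTES = 1000  # from the text: 1Gbyte/sec per Future I/O link

for devices in (1, 2, 4, 8):
    per_device = shared_bus_per_device(BUS_MBYTES, devices)
    total = switched_fabric_total(LINK_MBYTES, devices)
    print(f"{devices} devices: shared bus {per_device:.0f} Mbytes/sec each, "
          f"fabric {total:.0f} Mbytes/sec in total")
```

With a shared bus, each added device shrinks everyone's slice. With the fabric, each added device brings its own link, so aggregate throughput grows.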

Because Future I/O aims to support a variety of connectivity types, three distance models are being designed. The first works for distances less than 10 meters (m) and involves ASIC-to-ASIC, board-to-board, and chassis-to-chassis connections. This model uses a parallel cable between chassis.

The second model supports distances of 10m to 300m and will be used for data-center server-to-server connections. This model will use fiber-optic or serial-copper cable with additional logic. The third model supports distances greater than 300m, which is made possible with additional buffering and logic.
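The three distance models amount to a simple lookup by cable length. The Python sketch below restates them using only the thresholds and media described above. The function name and the wording of the returned descriptions are mine, not part of the Future I/O proposal.

```python
def future_io_distance_model(distance_m: float) -> str:
    """Map a link distance in meters to one of the three proposed models."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m < 10:
        return "model 1: ASIC, board, or chassis link (parallel cable between chassis)"
    if distance_m <= 300:
        return "model 2: data-center link (fiber-optic or serial-copper cable)"
    return "model 3: long-distance link (additional buffering and logic)"


for meters in (2, 10, 150, 300, 1000):
    print(f"{meters}m -> {future_io_distance_model(meters)}")
```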

The Future I/O Alliance plans to continue developing the specification during 1999 and hopes to have a final version by the end of the year. The group expects ratification of Future I/O in early 2000, with prototypes being demonstrated shortly thereafter. Products are expected to start shipping by early 2001.

The Next Generation

Although Intel was instrumental in the creation of PCI, the company, along with partners Dell Computer, Hitachi, NEC, Siemens, and Sun Microsystems, has thrown its support behind a new bus architecture dubbed Next Generation I/O, or NGIO. Much like Future I/O, NGIO is based on a switched-fabric architecture rather than on the shared-bus model that is so common today.

Shared buses do not scale very well, which can limit the number of peripherals that can be supported by a system. Shared buses have other shortcomings, including some difficulty with peripheral and system configuration, difficulty in hot-swapping components, and distance limitations between peripherals and memory controllers. But point-to-point switched-fabric technology should address these drawbacks and provide a more efficient system.

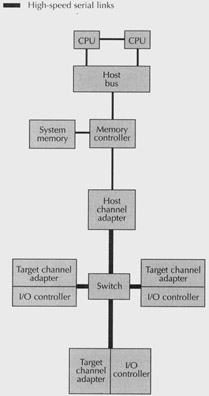

In addition to the switched fabric, another innovation within NGIO is the use of a channel architecture, a more efficient I/O engine that processes requests from peripherals. Previously, this type of architecture was used only on mainframe systems. See the figure for an overview of NGIO's channel architecture.

Figure 1: Generation I/O: By combining a point-to-point switched fabric with a channel architecture, Next Generation I/O offers a more scalable and efficient method of connecting hosts with peripheral devices.

NGIO's channel architecture includes a Host Channel Adapter (HCA), which is an interface to and from the memory controller of a host. It contains DMA engines and has a tight link to the host memory controller. NGIO also includes Target Channel Adapters (TCAs), which connect fabric links to the I/O controller. The I/O controllers can be SCSI, Fibre Channel, or Gigabit Ethernet, for example, which allows a variety of network and storage devices to be mixed within the I/O unit.

HCAs and TCAs can connect either to another channel adapter or to switches. The switches in an NGIO architecture let hosts and devices communicate with many other hosts and devices. Switches transmit information among fabric links, although the switches themselves remain transparent to end stations.
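One way to picture this arrangement is as a small graph in which channel adapters are end stations and switches are interior nodes. The Python sketch below is an illustration only: the node names and the two-host, three-target topology are hypothetical, and nothing here reflects the actual NGIO protocol. It simply lists which end stations a host can reach, with the switch left out of the result to mirror its transparency.

```python
from collections import deque

# Hypothetical fabric: each key is a node, each value lists its link peers.
FABRIC = {
    "hca0": ["switch0"],
    "hca1": ["switch0"],
    "switch0": ["hca0", "hca1", "tca_scsi", "tca_fc", "tca_gige"],
    "tca_scsi": ["switch0"],
    "tca_fc": ["switch0"],
    "tca_gige": ["switch0"],
}


def end_stations(fabric: dict[str, list[str]], start: str) -> list[str]:
    """Breadth-first walk over fabric links; switches are traversed but not reported."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for peer in fabric[node]:
            if peer not in seen:
                seen.add(peer)
                queue.append(peer)
    return sorted(n for n in seen if n != start and not n.startswith("switch"))


print(end_stations(FABRIC, "hca0"))
# ['hca1', 'tca_fc', 'tca_gige', 'tca_scsi']
```

From the host's point of view, a single link into the switch is enough to reach every other host and every I/O controller on the fabric.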

Each link in an NGIO system carries requests and responses in packet form. Each packet consists of many cells, an arrangement that is a bit like ATM formatting. These packets travel at speeds up to 2.5Gbits/sec. In fact, NGIO's Physical layer is very much like Fibre Channel's, which supports speeds of 1.25Gbits/sec or 2.5Gbits/sec.
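The idea of a packet made up of cells can be sketched in a few lines of Python. Everything specific here is an assumption made for illustration: the 256-byte cell payload, the sequence number, and the "last cell" flag are hypothetical and are not taken from the NGIO specification, which the text does not detail.

```python
from typing import NamedTuple

CELL_PAYLOAD_BYTES = 256  # hypothetical cell size, not from the NGIO spec


class Cell(NamedTuple):
    seq: int        # position of this cell within the packet
    last: bool      # True on the final cell of the packet
    payload: bytes


def split_into_cells(packet: bytes, cell_payload: int = CELL_PAYLOAD_BYTES) -> list[Cell]:
    """Cut a packet into fixed-size cells; the final cell may be shorter."""
    chunks = [packet[i:i + cell_payload] for i in range(0, len(packet), cell_payload)]
    return [Cell(seq, seq == len(chunks) - 1, chunk) for seq, chunk in enumerate(chunks)]


def reassemble(cells: list[Cell]) -> bytes:
    """Rebuild the packet by ordering cells on their sequence numbers."""
    return b"".join(cell.payload for cell in sorted(cells, key=lambda c: c.seq))


request = bytes(600)  # a 600-byte request, used only as sample data
cells = split_into_cells(request)
print([len(cell.payload) for cell in cells])  # [256, 256, 88]
print(reassemble(cells) == request)           # True
```

Fixed-size cells let a switch interleave traffic from several senders on one link without waiting for a long packet to finish, which is the same reasoning behind ATM's cell format.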

The companies working on NGIO hope to release products that support the technology starting in 2000.

Which Bus To Take?

All of these development efforts mean that within a year or two there could be a glut of I/O architectures on the market. Chances are good that not all of the technologies outlined here will come into widespread use, and industry analysts speculate that support from software vendors like Microsoft and Novell will be a key to success.

Currently, all three post-PCI architectures have support from big names in the hardware world, which is important since it is these vendors that will eventually develop servers, chipsets, boards, and other components that support one or more of the technologies currently on the table.

In the meantime, don't expect PCI to slip away quietly anytime soon. PCI has been the workhorse of the I/O world for several years, and even after new technologies are introduced to the market, you'll see systems that have PCI coexisting with other architectures.

Resources

For more detailed information on the PCI bus specification, visit the PCI Special Interest Group's Web site at www.pcisig.com.

For an overview of PCI, see www.adaptec.com/technology/whitepapers/pcibus.html.

For a detailed discussion of Intel's Next Generation I/O, see http://developer.intel.com/design/servers/future_server_io/index.htm.

This tutorial, number 130, written by Anita Karvé, was originally published in the May 1999 issue of Network Magazine.
