A Remote File System with Attached Storage


As stated in the previous chapter, NAS devices perform a single directive with a dual function: network file I/O processing and storage. Their role as a remote file system, or network file system, has a colorful legacy, enhanced and developed through multiple projects spanning many academic and commercial computer research environments.

UNIX, having long been the mainstay of scientific, academic, and research computing environments, evolved as an open system with enhancements coming from these communities on a continual, although discretionary, basis. The need to share data in some organized yet distributed fashion became a popular and increasingly important development effort. Projects at AT&T labs and several major universities began to research this need in the late 1970s. UNIX became a viable offering to both the scientific and business community during the distributed systems revolution in the early 1980s, at which time data sharing really became predominant due to the exponential growth of users and open networks.

The problem of data sharing was addressed in two ways. First, AT&T labs developed the RFS file system. Second, and more importantly, a development effort at Sun used the inherent components of UNIX to create the Network File System (NFS), the standard we know today. NFS was based upon the UNIX remote procedure call (RPC), a mechanism by which one system can execute a procedure on another system and have the results returned. Implemented on all open UNIX code bases, this formed the basis for allowing a user on one system to access files on another (see Figure 11-2, which shows how the UNIX RPC function allows a user to execute UNIX commands on a second system).

Figure 11-2: What is an RPC?
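To make the idea concrete, here is a minimal in-process sketch of the RPC pattern. It is not Sun RPC: the real protocol marshals arguments with XDR and crosses a network, whereas this toy uses JSON and a direct function call as the "transport." The names `list_files`, `server_handle`, and `rpc_call`, along with the sample catalog, are hypothetical and exist only for illustration.

```python
import json

# Hypothetical procedure that lives on the "server" side.
def list_files(directory):
    catalog = {"/Eng": ["drawing.dwg", "spec.doc"]}
    return catalog.get(directory, [])

# Table of procedures the server is willing to run for remote callers.
PROCEDURES = {"list_files": list_files}

def server_handle(request_bytes):
    """Server stub: decode the request, run the procedure, encode the reply."""
    request = json.loads(request_bytes.decode("utf-8"))
    procedure = PROCEDURES[request["proc"]]
    result = procedure(*request["args"])
    return json.dumps({"result": result}).encode("utf-8")

def rpc_call(proc, *args, transport=server_handle):
    """Client stub: marshal the call, 'send' it, and unmarshal the reply."""
    request_bytes = json.dumps({"proc": proc, "args": list(args)}).encode("utf-8")
    reply_bytes = transport(request_bytes)  # a real RPC would cross the network here
    return json.loads(reply_bytes.decode("utf-8"))["result"]

# The caller uses rpc_call as if list_files were a local function.
files = rpc_call("list_files", "/Eng")
print(files)
```

The point of the stubs is that the caller never sees the marshaling; swapping `transport` for a function that writes to a socket would leave the calling code unchanged.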

Although many of the academic projects provided alternative ways of using the RPC function, the basis was the same in terms of providing access between UNIX file systems. The implementation differences were characterized by the diverse levels of file systems, functions performed by the file system versus activities on the client, and the sophistication of administrative and housekeeping functions. It's also worth pointing out that a roughly parallel development took place for Windows-based systems, as Novell took a similar path in providing a file server solution with its Novell NetWare network operating system. Although similar to what was happening in the UNIX environments, NetWare went further: it developed a proprietary communications protocol, creating a local network of computers based upon the Windows operating system.

Other functions were also developed around the open characteristics of the UNIX operating system. These were concepts of file and record locking, security, and extensions to the UNIX I/O manager. Unfortunately, these functions were open to compromise given the lack of enforcement of operating system standards, processing activities, and core UNIX functions. Operating within these environments, users were confronted with unexpected file deletions, record modification and corruption, and security violations. In many cases, this stemmed from the perspective of UNIX academics that security was the responsibility of the user.

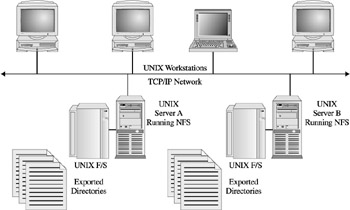

Sun's Network File System became an enabling component for specific vendors looking for ways to provide a specialized solution for file sharing within networked environments. The idea grew out of customer NFS configurations in which general purpose UNIX workstations were used only for storing common data within a network and making it accessible through the server's UNIX file system, which had been set up to export portions of the file system to authorized clients. (Figure 11-3 shows the initial configurations of UNIX workstations and the designation of storage workstations, that is, the server.)

Figure 11-3: An initial NFS configuration

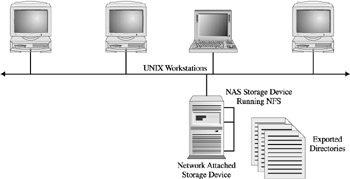

These vendors developed small-footprint servers running a specialized UNIX kernel optimized for I/O and network connectivity. The server's only job was to provide shared access to files within a network, where any client computer attached to the network could store and access a file from this specialized UNIX server. Given that the sole component visible to the network was its storage, the only appropriate name was Network Attached Storage, or NAS. (Figure 11-4 shows the initial NAS configurations with UNIX workstations, with a close-up of an early NAS system.)

Figure 11-4: An early NFS implementation

The NAS Micro-Kernel OS

The NAS legacy relies heavily on UNIX operating system foundations and functionality as it evolved to support distributed computing and networking. The NAS device operates from a micro-kernel operating system, and although most NAS vendors offer their products with UNIX-based micro-kernels, not every vendor does. As we'll discover, the positive aspect of standards with UNIX and Windows plays an important role in the heterogeneous functionality of the NAS devices. For example, the POSIX standard has provided a level of commonality to key operating system components and has become important as NAS devices expand beyond their native UNIX families to support other operating systems, communications, and file protocols.

| Note | POSIX (Portable Operating System Interface) standards were developed by IEEE for the purpose of cross-platform interoperability. |

Core systems functionality within an operating system is contained in the kernel. This consists of a collection of software that performs the basic functions of the computer and interfaces with the lower-level micro-code and system ROM facilities provided by the hardware vendor. Although this core-level software of the operating system will be called different names in different implementations, such as the nucleus in IBM's MVS OS, the term 'kernel' has become synonymous with the core functionality of the operating system used by both UNIX variants and Microsoft Windows.

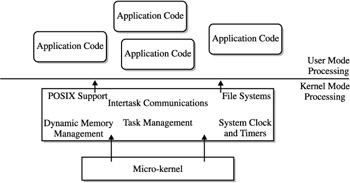

Within the kernel are the routines that manage the various aspects of computer processing, such as process management, scheduling, memory management, and I/O supervision by the I/O manager (refer to Chapter 10 for more information). The kernel also operates in a supervisory manner and services the requests of applications that operate in user-mode, thereby forming the fundamental cycle of processing depicted in Figure 11-5.

Figure 11-5: Overview of the NAS micro-kernel components

An interesting aspect of the UNIX kernel is the open nature of the UNIX operating system and the user's ability to modify the kernel code and rebuild it to suit their particular needs. This created a chaotic systems administration problem. However, outside the bounds of a stable environment, it did provide the basis for enhancements that solved particular problems. This flexibility provided the best platform for those individuals and companies that had to provide a special operating system for embedded or specialized applications.

These applications generally required operating a computer as a transparent entity where the OS supported a singular application such as mechanical automation, monitoring, and process control. This required the OS to be small, reliable, and transparent to the end user. The development of the OS for these particular applications was built from UNIX kernel code that had been modified to suit the specific needs of each application. Given that these applications had to run in real time, controlling equipment and monitoring machines, they became known as Real Time Operating Systems or RTOS.

All NAS devices in today's world are based on some type of RTOS. Given their highly customized ability to facilitate the OS for a single application, the RTOS offerings were highly compartmentalized, allowing vendors that used the OS to customize the components they needed. Most special applications did not require the entire functionality of the UNIX kernel and needed both performance optimization and limited overhead customized for the particular application. This resulted in the more common term of 'micro-kernel.' Even though I have used this term previously and many reading this section may have encountered the term before, the importance of accurately describing the micro-kernel cannot be overlooked given its relation to NAS devices.

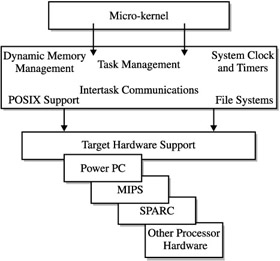

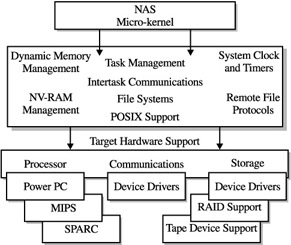

A typical micro-kernel is shown in Figure 11-6. We can see that the selection of components depends on the application; however, there are multiple components to choose from that provide vendors with complete flexibility in how they customize the OS. Important NAS components include the following:

Figure 11-6: A typical micro-kernel

- Task management, multitasking, scheduling, context switching, priority levels
- Intertask communications, message queues, interrupt and exception handling, shared memory
- Dynamic memory management
- The system clock and timing facilities
- A flexible I/O and local file system
- POSIX support
- OS hardware targets: PowerPC, MIPS, SPARC, NEC, 80x86, Pentium, i960

The customization of the micro-kernel in NAS devices centers on effective and efficient storage I/O, its compatibility with remote file systems, and its effective task processing for file-level operations. Given these tasks, the NAS micro-kernel will look like Figure 11-7 and contain the necessary components. On top of the micro-kernel are the embedded applications each vendor supplies in addition to the unique I/O processing through customization of the kernel. Key areas within these applications are the storage and network device drivers, file systems, and buffer and cache management.

Figure 11-7: An example of an NAS micro-kernel

Another key area to introduce is the non-volatile RAM (NV-RAM) used in many NAS devices. Implemented within the physical RAM capacity, it is driven through specific memory management routines that allow these buffer areas to be used for checkpoints in the status of the file system and related I/O operations. This memory is protected through some type of battery backup so that when power is lost, the contents of memory are not lost but remain available when the system is restored. This makes temporary storage less sensitive to system outages and permits recovery to take place quickly using routines built into the micro-kernel proprietary applications.

NV-RAM becomes an important factor when working with both device drivers and RAID software.
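The checkpoint-and-recover role of NV-RAM can be sketched as a small write log. This is an illustrative toy under stated assumptions, not any vendor's implementation: the class `ToyFiler`, its methods, and the use of a Python list and dict to stand in for battery-backed memory and disk are all hypothetical.

```python
class ToyFiler:
    """Toy model: writes are logged to NV-RAM before being acknowledged."""

    def __init__(self, nvram, disk):
        self.nvram = nvram  # stands in for battery-backed RAM; survives power loss
        self.disk = disk    # stands in for persistent disk storage
        self.cache = {}     # ordinary RAM; lost on power failure

    def write(self, name, data):
        self.nvram.append((name, data))  # log the request first
        self.cache[name] = data          # then serve it from fast memory

    def checkpoint(self):
        """Flush cached writes to disk; the log is no longer needed."""
        self.disk.update(self.cache)
        self.cache.clear()
        self.nvram.clear()

    def recover(self):
        """After a restart, replay logged writes that never reached disk."""
        for name, data in self.nvram:
            self.disk[name] = data
        self.nvram.clear()


nvram, disk = [], {}
filer = ToyFiler(nvram, disk)
filer.write("a.txt", b"one")
filer.checkpoint()            # a.txt is now safely on disk
filer.write("b.txt", b"two")  # acknowledged, but only in cache and NV-RAM

# Simulated power failure: the cache vanishes, NV-RAM and disk survive.
filer = ToyFiler(nvram, disk)
filer.recover()
print(sorted(disk))
```

Because only the log needs replaying, recovery time depends on the amount of unflushed work rather than on the size of the file system.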

Not all micro-kernels are based on UNIX. The Windows NT and Windows 2000 I/O managers form the basis for NAS micro-kernels used by an assortment of large and small vendors through the Storage Appliance Kit (SAK) offering. Although POSIX compliance pays off here, with Microsoft providing levels of compatibility, the NT and subsequent Windows 2000 I/O manager derivatives have a different architecture, with the following additional characteristics.

The Microsoft OS model is an object-oriented architecture. This is characterized by its use of layered architectures and I/O synchronicity (for example, the employment of file handles as compared to virtual files used in UNIX). The encapsulation of instructions, data, and system functions used in the object models provides programming power and flexibility; however, it comes with a higher cost of processing and resource overhead.

Micro-kernel communications with device drivers operate with a layered architecture. This allows operating subsystems such as a file system to call services to read a specific amount of data within a file. The operating system service passes this request to the I/O manager, which calls a disk driver. The device driver positions the disk to the right cylinder, track, and cluster, reads the specific data, and transfers control and data back to the I/O manager. The I/O manager then returns the requested data to the file system process that initiated the request on behalf of the application.

The Microsoft model uses mapped I/O in conjunction with its virtual memory and cache manager. This allows access to files in memory and manages their currency through normal paging activities of the virtual memory manager.

Although both UNIX and Microsoft architectures support synchronous and asynchronous I/O processing, the level of processing affinity is consistent within the Microsoft model. The asynchronous model used for many years in mainframe multitasking systems allows applications to continue to process while slower I/O devices complete their operations. This becomes important in processing scalability as more CPUs are added to the processor component.

Given its multilayered architecture and its ability to shield the developer from many of the OS internals, the Microsoft model comes with additional overhead. Considering the increased levels of abstraction of object orientation resulting in multilayered functions (for example, device drivers calling device drivers and file handles as virtual files or representations of the files in memory), it remains difficult to minimize the extra processing inherent with any of its OS RTOS derivatives.

The NAS File System

NAS uses a network file system, NFS. NFS works by using remote procedure calls (RPCs) to manipulate a UNIX file system that exists on a server. The system encapsulates these functions to allow remote users on a network to issue commands to the server's file system. However, in order to understand the internal workings of the system, we must look briefly at UNIX file systems.

The UNIX File System

The file system is one of the major components of an operating system. It is responsible for storing information on disk drives and retrieving and updating this information as directed by the user or application program. Users and application programs access file systems through system calls such as open, create, read, write, link, and unlink. The UNIX system consists of several different types of files: ordinary files, directory files, special files, and FIFO files.

For our discussion, the most important are the Directory files that manage the cataloging of the file system. Directories associate names with groups of files. Ordinary files are generally reflected through directory names created by users and applications. Special files are used to access peripheral devices. FIFO files are used by UNIX functions called PIPES that pass information and data from program to program.

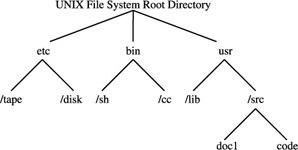

The UNIX system contains a multilevel hierarchical directory structure set up as an inverted tree with a single root node (see Figure 11-8). When the system is booted, the root node is visible to the system; other file systems are attached by mounting them under the root or subsequently under the current directory known to the system. While directories cannot be split across file systems (they're restricted to a single reference of a physical disk or logical disk partition), files can appear in several directories and may be referenced by the same or different names.

Figure 11-8: The UNIX hierarchical directory system

The directory entry for a file consists of the filename and a pointer to a data structure called an inode. The inode describes the disk addresses for the file data segments. The association between a file and a directory is called a link. Consequently a file may be linked to a number of directories. An important feature of UNIX systems is that all links have equal status. Access control and write privileges are a function of the file itself and have no direct connection with the directory. However, a directory has its own access control and the user has to satisfy the directory access control first, followed by the file access control in order to read, write, or execute the file.
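The relationship between directory entries, links, and inodes can be observed directly on a POSIX system. The following sketch assumes a file system that supports hard links (true of typical UNIX and Linux temporary directories); the directory and file names are made up for the example.

```python
import os
import tempfile

with tempfile.TemporaryDirectory() as root:
    # Two separate directories that will both reference the same file.
    dir_a = os.path.join(root, "projects")
    dir_b = os.path.join(root, "archive")
    os.mkdir(dir_a)
    os.mkdir(dir_b)

    original = os.path.join(dir_a, "spec.txt")
    with open(original, "w") as handle:
        handle.write("engineering spec\n")

    # Create a second link to the same inode under a different name.
    alias = os.path.join(dir_b, "spec-copy.txt")
    os.link(original, alias)

    # Both directory entries point at one inode, which counts its links.
    same_inode = os.stat(original).st_ino == os.stat(alias).st_ino
    link_count = os.stat(original).st_nlink

    # All links have equal status: removing the first name leaves the data intact.
    os.unlink(original)
    with open(alias) as handle:
        surviving_text = handle.read()
    link_count_after = os.stat(alias).st_nlink

print(same_inode, link_count, link_count_after)
```

The file's data is released only when the last link is removed, which is why neither name is "the original" from the file system's point of view.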

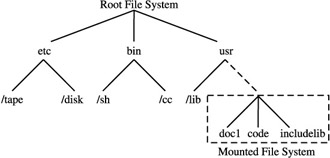

Figure 11-9 shows an example of how the mount command works as users mount file systems for access. A formatted block device may be mounted at any branch directory level of the current hierarchy.

Figure 11-9: Mounting a file system

Since file systems can be mounted, they can also be unmounted. The unmount system call handles all the checking to ensure that it is unmounting the right device number, that it updates the system tables, and that the inode mount flags are cleared.
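As a rough illustration of what mounting and unmounting mean for path lookup, the sketch below keeps a table of mount points and resolves a path to the file system responsible for it by longest-prefix match. The `MountTable` class and the device names are hypothetical, and a real kernel tracks far more state (device numbers, inode mount flags, busy checks) than this toy does.

```python
class MountTable:
    """Toy mount table mapping mount points to device names."""

    def __init__(self):
        self.mounts = {"/": "rootfs"}  # the root is visible from boot

    def mount(self, device, mount_point):
        if mount_point in self.mounts:
            raise ValueError(f"{mount_point} is already a mount point")
        self.mounts[mount_point] = device

    def unmount(self, mount_point):
        if mount_point == "/":
            raise ValueError("the root file system cannot be unmounted")
        if mount_point not in self.mounts:
            raise ValueError(f"nothing is mounted at {mount_point}")
        del self.mounts[mount_point]

    def resolve(self, path):
        """Return (device, path within that device) by longest-prefix match."""
        best = "/"
        for mount_point in self.mounts:
            prefix = mount_point.rstrip("/") + "/"
            if path == mount_point or path.startswith(prefix):
                if len(mount_point) > len(best):
                    best = mount_point
        relative = path[len(best):].lstrip("/")
        return self.mounts[best], "/" + relative


table = MountTable()
table.mount("disk1", "/usr")
mounted_view = table.resolve("/usr/local/bin")
table.unmount("/usr")
unmounted_view = table.resolve("/usr/local/bin")
print(mounted_view, unmounted_view)
```

After the unmount, the same path falls back to the root file system, which is why a mount point is simply an ordinary directory when nothing is attached to it.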

Internal Operations of NFS

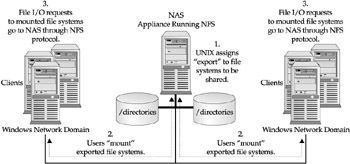

The implementation of NFS is supported on a number of UNIX implementations. These installations support transparent network wide read/write access to files and directories. Workstations or file servers that allow their file systems to be accessible for remote access accomplish this through the export command. The export command allows these file systems to be sharable resources to the network. Workstations, or clients, wishing to use these files import the file systems to access the files.

Let's follow an example of a user requesting access to data within an NFS configuration. User 'A' requires engineering drawings and a specifications document in the /Eng folder that is supposed to be shared by all in his department. Here is what has to happen for him to access this in a transparent mode.

First, as indicated in the following illustration, the system administrator has to set the /Eng directory as exportable to the network. They do this by using the export command, which allows users who have access to the network to share this directory and the files associated with it. Secondly, the user has to initiate a mount command that allows the server side to issue a virtual file system, consisting of directories as indicated in Figure 11-10. Although simple in an external representation, the internal activities provide us with a better indication of the complexities involved in using NFS.

Figure 11-10: Mounting a file directory

The functions associated with this illustration are broken down as activities on the server file system. This is both in the form of local commands to the file system and remote commands via RPC. The server RPC sends out the acknowledgment any time a client wishes to mount the NFS file system (sometimes referred to as mounting the NFS device), given that the UNIX file system refers to a logical disk. Consequently, the client sends initial requests to the server in the form of RPC to initiate the import, linking, and access to the NFS file system. Once this connection is made, the client then communicates via RPCs to gain read/write access to the associated files.
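The export, mount, and read/write sequence described above can be sketched with a toy server. This is only loosely modeled on NFS: the class `ToyNfsServer`, the procedure names passed to `handle_rpc`, and the integer file handles are assumptions for illustration, and the "RPC" here is a plain method call rather than a network exchange.

```python
import itertools


class ToyNfsServer:
    """Toy file server: directories must be exported before clients can mount them."""

    def __init__(self, files):
        self.files = files    # {directory: {filename: bytes}}
        self.exports = set()  # directories the administrator has exported
        self.handles = {}     # handle -> directory, issued at mount time
        self._next_handle = itertools.count(1)

    def export(self, directory):
        """Administrator action: make a directory sharable."""
        if directory not in self.files:
            raise FileNotFoundError(directory)
        self.exports.add(directory)

    def handle_rpc(self, proc, *args):
        """Entry point standing in for the RPC layer."""
        if proc == "MOUNT":
            (directory,) = args
            if directory not in self.exports:
                raise PermissionError(f"{directory} is not exported")
            handle = next(self._next_handle)
            self.handles[handle] = directory
            return handle
        if proc == "READ":
            handle, name = args
            return self.files[self.handles[handle]][name]
        if proc == "WRITE":
            handle, name, data = args
            self.files[self.handles[handle]][name] = data
            return len(data)
        raise ValueError(f"unknown procedure {proc}")


server = ToyNfsServer({"/Eng": {"spec.doc": b"rev A"}, "/HR": {"pay.xls": b"secret"}})
server.export("/Eng")  # step 1: the administrator exports /Eng

eng = server.handle_rpc("MOUNT", "/Eng")          # step 2: the client mounts it
spec = server.handle_rpc("READ", eng, "spec.doc")  # step 3: file access via RPC
server.handle_rpc("WRITE", eng, "drawing.dwg", b"lines")

try:
    server.handle_rpc("MOUNT", "/HR")  # never exported, so the mount is refused
    hr_mounted = True
except PermissionError:
    hr_mounted = False

print(spec, hr_mounted)
```

Every later request carries the handle obtained at mount time, so the server can check each read or write against what was actually exported.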

Although all of this takes place under the covers of UNIX and the application, and is transparent to most users, the configuration and processing sequence provides an affordable storage network using general purpose hardware, operating systems, and networks.

The Storage Software

The third major component is the storage software consisting of device drivers and RAID support. Most prominent for consideration are RAID support and the relative inflexibility outside of pre-configured arrays, as well as the use of different levels such as RAID 4. As discussed in Part II, RAID storage systems are offered in various levels depending on the workload supported and fault resiliency required. This is important when considering NAS storage software options and their relative RAID support because NAS is a bundled solution and the level of RAID support may not be easily changed or upgraded.

Device driver support is also important, as the flexibility to upgrade and support new types of devices is driven from this element of NAS software. As stated previously, NAS supports enhanced disk hardware and is starting to offer support for attached tape drives for backup/recovery and archival operations. Within disk support are drive connectivity options that are now being offered in both SCSI and FC disk arrays. FC support should not be confused with storage area network connectivity or with functionality as a switched fabric. Additional information about NAS-SAN integration can be found in Chapter 20.

Support for RAID is determined by the NAS solution. Entry-level appliance devices come with little to no RAID support (for example, disk mirroring, both protected and unprotected). On the other end, enterprise multiterabyte NAS solutions have a pre-configured RAID selection ranging from level 1, disk mirroring, to level 5, disk striping with protection. Other vendors offer levels of RAID that best support their file system and processing architecture.

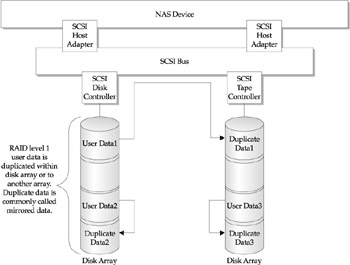

RAID level 1, disk mirroring with protection, is configured to provide a redundant file system. Figure 11-11 illustrates this point as the file system data is effectively duplicated within the storage arrays. The file system processing knows that two copies exist, and each is updated as files are updated and changed. Protection is provided by way of the storage software's ability to synchronize its processing so that in the event of a failure on any of the arrays, the other set of files can continue processing. This poses a problem when it comes to the resynchronization of both file systems after a failure. The functions of synchronization become a feature of the NAS solution. As stated previously, the entry-level NAS solutions provide just straight mirroring with no synchronization. This is plain disk mirroring without the benefit of continuous operations; it should not be confused with RAID 0, which stripes data without any redundancy. The data would be protected, but the system would become inoperative while the duplicate mirror is switched to become the primary file system.

Figure 11-11: NAS using RAID level 1

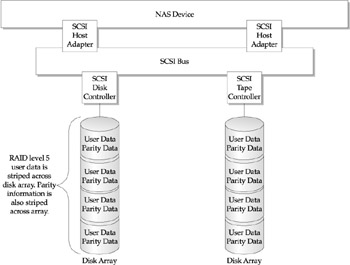

As the NAS solution assumes a larger role in transactional types of processing (for example, multiuser and OLTP-type transaction support), the need for continuous processing becomes more defined. In these cases, the NAS solution offers RAID level 5 support. This provides the file system with the ability to stripe its files throughout a storage array. To provide data and continuous operation protection, parity data is created on each device across the array.

The storage software uses the parity information, which is also striped and distributed across each drive, to recover from disk failures within the array. It does this by combining the parity information with the surviving data to recalculate the lost data and rebuild it within the array. Figure 11-12 illustrates the same file system functioning within a RAID 5 environment.

Figure 11-12: NAS using RAID level 5
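Parity-based recovery is easiest to see with XOR, the operation conventionally used for single-parity RAID. The sketch below computes parity for one stripe and rebuilds a lost block from the survivors. The block contents and the helper name `xor_blocks` are made up for the example, and real arrays work on much larger blocks across many stripes.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, position by position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: three data blocks, each living on a different disk.
data_blocks = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data_blocks)  # stored on a fourth disk

# Simulate losing the disk that held the second block.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt)
```

The same property explains the write cost discussed next: changing any data block means the stripe's parity block must be recalculated and rewritten as well.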

RAID 5 works well in processing models where transactions are small and write operations are limited. As we can conclude, the RAID 5 software and related disk operations in providing parity, file, and data currency across the storage array can be intensive when performing transactional work with heavy updates. However, this should be balanced with the read/write ratio of the workload and the ability to provide NV-RAM for operational efficiencies.

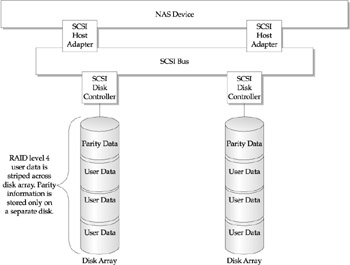

A relatively unknown RAID level you will encounter with NAS is RAID level 4. Similar to level 5, RAID 4 provides data striping across the array; however, it reserves one disk within the array for its parity, as shown in Figure 11-13. This offers a level of efficiency for write-intensive operations, but sacrifices operational protection in the event the parity disk becomes inoperative. Again, this level of RAID should be balanced against the workload and the quality of the NAS solution. Given the reliability of disk drives in today's market, the likelihood of the parity disk becoming inoperative within a multidisk array is statistically very small. However, for the workload that demands a fully redundant 99.999 percent uptime, this may become an issue.

Figure 11-13: NAS using RAID level 4
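The structural difference between levels 4 and 5 is simply where each stripe's parity block lives. The following sketch prints both layouts for a four-disk array. The function names are hypothetical, and the RAID 5 rotation shown is only one of several conventions used in practice.

```python
def parity_disk(stripe, num_disks, level):
    """Return the index of the disk holding parity for a given stripe."""
    if level == 4:
        return num_disks - 1  # one dedicated parity disk
    if level == 5:
        return (num_disks - 1 - stripe) % num_disks  # parity rotates per stripe
    raise ValueError("only RAID levels 4 and 5 are modeled here")


def layout(num_stripes, num_disks, level):
    """Build a grid of 'D' (data) and 'P' (parity) markers, one row per stripe."""
    rows = []
    for stripe in range(num_stripes):
        p = parity_disk(stripe, num_disks, level)
        rows.append(["P" if disk == p else "D" for disk in range(num_disks)])
    return rows


def parity_counts(rows):
    """Count how many parity blocks land on each disk."""
    return [sum(row[disk] == "P" for row in rows) for disk in range(len(rows[0]))]


raid4_counts = parity_counts(layout(4, 4, 4))
raid5_counts = parity_counts(layout(4, 4, 5))

for level in (4, 5):
    print(f"RAID {level}")
    for row in layout(4, 4, level):
        print("  " + " ".join(row))
```

In the RAID 4 grid every parity update targets the same disk, while RAID 5 spreads those updates evenly; which behavior suits a given NAS depends on how its file system schedules writes.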

Regardless of the RAID level, the workload and subsequent service level dictate the type of storage protection required. NAS offers a complement of RAID protection strategies, such as disk mirroring with protection (RAID level 1) and data striping with protection offered through levels 4 and 5. It's important to keep in mind that many of these storage protection offerings are bundled and pre-configured with the NAS solution. Flexibility to change or upgrade to other levels of RAID will be at the discretion of the NAS vendor.
