Bus Operations


Buses operate by requesting the use of paths to transfer data from a source element to a target element. In its simplest form, a controller cache, defined as a source, transfers data to a disk, defined as a target. The controller signals the bus processor that it needs the bus. When the request is granted, control passes to the controller cache operation, which owns the bus for the duration of that operation; no other device connected to the bus can use it. However, as indicated earlier, the bus processor can interrupt the operation in favor of a higher-priority process and give control to that device.

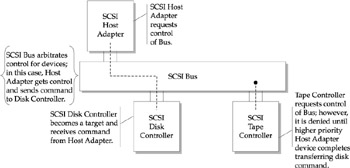

This is called a bus arbitration scheme, as shown in Figure 7-3. The devices connected to the bus arbitrate for bus control based on a predefined set of commands and priorities. The devices can be both source and target for data transfers. This type of internal communication makes up the bus protocol and must be available to all sources and targets, that is, to every connected device that participates in the I/O bus.

Figure 7-3: Bus operations: arbitration for control
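The arbitration behavior described above can be sketched in a few lines of Python. This is only an illustrative model, not the protocol of any particular bus: the `BusArbiter` and `Request` names, the device names, and the convention that a lower number means a higher priority are all assumptions made for the example.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Request:
    # Assumed convention: a lower number means a higher priority.
    priority: int
    seq: int  # arrival order, used only to break priority ties
    device: str = field(compare=False)
    source: str = field(compare=False)
    target: str = field(compare=False)


class BusArbiter:
    """Hypothetical bus processor: one owner at a time, with preemption."""

    def __init__(self):
        self._pending = []  # min-heap of waiting requests
        self._seq = 0
        self.owner = None   # request that currently owns the bus

    def request(self, device, source, target, priority):
        """Ask for the bus; return True if this request now owns it."""
        req = Request(priority, self._seq, device, source, target)
        self._seq += 1
        if self.owner is None:
            self.owner = req
        elif req.priority < self.owner.priority:
            # Interrupt the current operation for a higher-priority one.
            heapq.heappush(self._pending, self.owner)
            self.owner = req
        else:
            heapq.heappush(self._pending, req)
        return self.owner is req

    def release(self):
        """Current owner is done; grant the bus to the best waiting request."""
        self.owner = heapq.heappop(self._pending) if self._pending else None
        return self.owner


arb = BusArbiter()
arb.request("controller_cache", "cache", "disk", priority=5)  # granted
arb.request("tape_drive", "tape", "cache", priority=7)        # must wait
arb.request("host_adapter", "host", "cache", priority=1)      # preempts
```

In this sketch the interrupted controller cache operation is simply queued again and resumes ahead of the tape drive once the higher-priority transfer releases the bus; a real bus protocol defines its own rules for how an interrupted transfer is resumed.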

Parallel vs. Serial

Bus operations perform data transfers using parallel or serial physical connections. The two perform the same task but have distinct architectures, each with its own pros and cons. You are likely to encounter both, as storage systems begin to integrate each for its strengths. Beyond this choice lies a secondary one: the type used within each category, such as point-to-point and differential for parallel SCSI, and the various serial implementations. It's important to understand the limitations, as well as the strengths, when applying these to particular application requirements.

Parallel connections for storage utilize multiple lines to transport data simultaneously. This requires a complex set of wires, making parallel cables quite large and subjecting them to increased ERC (error, recovery, and correction) overhead, length limitations, and sensitivity to external noise. There are two types of parallel connection: the single-ended bus and the differential bus. The single-ended bus is just what its name describes: a connection where devices are chained together with a termination point at the end. A differential bus, on the other hand, is configured more like a circuit in which each signal has a corresponding signal. This allows for increased distances and decreased sensitivity to external noise and to interference among the multiple wires that make up the cable. Of these two parallel connections, differential is the more effective for high-end storage systems; however, it is also more costly, given the increases in function and reliability.
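To see why pairing each signal with a corresponding signal reduces noise sensitivity, consider the following idealized sketch. It assumes that the second wire of a differential pair carries the complement of the first and that external noise couples equally onto both wires; the voltage levels, the threshold, and the function names are hypothetical values chosen for illustration.

```python
def single_ended_receive(bits, noise, threshold=0.5):
    """One wire per signal, read against ground: noise adds directly."""
    return [1 if (float(b) + n) > threshold else 0
            for b, n in zip(bits, noise)]


def differential_receive(bits, noise):
    """Two wires per signal; the receiver reads the difference between them."""
    received = []
    for b, n in zip(bits, noise):
        level = 1.0 if b else 0.0
        positive = level + n          # signal wire plus coupled noise
        negative = (1.0 - level) + n  # complement wire plus the same noise
        # The common noise term cancels in the subtraction.
        received.append(1 if (positive - negative) > 0 else 0)
    return received


sent = [0, 1, 0, 1]
noise = [0.7, -0.8, 0.6, -0.9]  # assumed noise large enough to flip a bit

print(single_ended_receive(sent, noise))  # every bit is misread
print(differential_receive(sent, noise))  # matches what was sent
```

Real cables never couple noise perfectly equally onto both wires, so the cancellation is partial in practice, but the same principle is what allows the longer distances noted above.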

Serial connections are much simpler, consisting basically of two wires. Although they pass data in serial fashion, they can do so more efficiently, over longer distances, and with increased reliability. High-speed network transmission media such as ATM and fiber optics already use serial connections. Wide buses that use parallel connections are prone to cross-talk interference, which causes intermittent signaling problems that ultimately show up as reliability and performance issues for storage operations. The use of just two wires allows substantially improved shielding. Although it may seem counterintuitive, serial connections can provide greater bandwidth than wide parallel connections. The simpler physical makeup of a serial connection allows the clock frequency to be increased 50 to 100 times, improving throughput by a multiple of 200.
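A rough way to see how a narrow link can out-run a wide one is to compare raw signaling rates, taken here as the number of data lines multiplied by the clock rate. The sketch below uses made-up widths and clock rates purely for illustration; it ignores encoding, protocol overhead, and signal skew, and it is not meant to reproduce the figures quoted above.

```python
def raw_throughput_bits_per_s(lanes, clock_hz, bits_per_lane_per_clock=1):
    """Raw signaling rate: data lines x clock rate x bits per line per clock."""
    return lanes * clock_hz * bits_per_lane_per_clock


def breakeven_clock_ratio(parallel_lanes, serial_lanes=1):
    """How many times faster the serial clock must run to match the bus."""
    return parallel_lanes / serial_lanes


# Hypothetical figures: a 16-bit parallel bus clocked at 40 MHz ...
parallel = raw_throughput_bits_per_s(lanes=16, clock_hz=40_000_000)

# ... versus a single serial lane clocked 100 times faster.
serial = raw_throughput_bits_per_s(lanes=1, clock_hz=100 * 40_000_000)

print(parallel)                   # 640000000
print(serial)                     # 4000000000
print(breakeven_clock_ratio(16))  # 16.0
```

Under these assumptions the serial lane only has to run its clock more than 16 times faster than the parallel bus to come out ahead, which is why a large increase in clock frequency more than offsets the loss of width.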
