File System Driver Architecture

File system drivers (FSDs) manage file system formats. Although FSDs run in kernel mode, they differ in a number of ways from standard kernel-mode drivers. Perhaps most significant, they must register as an FSD with the I/O manager and they interact more extensively with the memory manager and the cache manager. Thus, they use a superset of the exported Ntoskrnl functions that standard drivers use. Whereas you need the Windows 2000 DDK in order to build standard kernel-mode drivers, you must have the Windows 2000 Installable File System (IFS) Kit to build file system drivers. (See Chapter 1 for more information on the DDK, and see www.microsoft.com/ddk/ifskit for more information on the IFS Kit.)

Windows 2000 has two different types of file system drivers:

- Local FSDs manage volumes directly connected to the computer.

- Network FSDs allow users to access data volumes connected to remote computers.

Local FSDs

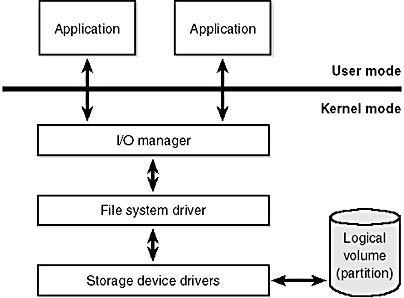

Local FSDs include Ntfs.sys, Fastfat.sys, Udfs.sys, Cdfs.sys, and the Raw FSD (integrated in Ntoskrnl.exe). Figure 12-5 shows a simplified view of how local FSDs interact with the I/O manager and storage device drivers. As we described in the section "Volume Mounting" in Chapter 10, a local FSD is responsible for registering with the I/O manager. Once the FSD is registered, the I/O manager can call on it to perform volume recognition when applications or the system initially access the volumes. Volume recognition involves an examination of a volume's boot sector and often, as a consistency check, the file system metadata.

Figure 12-5 Local FSD

The first sector of every Windows 2000-supported file system format is reserved as the volume's boot sector. A boot sector contains enough information for a local FSD to do two things: identify the volume on which the sector resides as containing a format that the FSD manages, and locate the other metadata it needs in order to find where the file system's structures are stored on the volume.
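As a rough illustration of the boot-sector check described above, the following sketch inspects a few well-known byte ranges in a 512-byte first sector. The function and helper names are hypothetical, and the sketch assumes a 512-byte sector. A real FSD validates many more fields (and Fastfat does not rely on the informational FAT type string), so treat this as a simplified model rather than the recognition logic Windows 2000 uses.

```python
SECTOR_SIZE = 512  # assumption: 512-byte sectors


def identify_boot_sector(sector: bytes) -> str:
    """Guess the on-disk format from a volume's first sector (simplified)."""
    if len(sector) < SECTOR_SIZE:
        raise ValueError("need at least one full sector")
    # NTFS and FAT boot sectors end with the signature bytes 0x55 0xAA.
    if sector[510:512] != b"\x55\xaa":
        return "unknown"
    if sector[3:11] == b"NTFS    ":            # OEM ID field at offset 3
        return "NTFS"
    if sector[82:90] == b"FAT32   ":           # FAT32 type string at offset 82
        return "FAT32"
    if sector[54:59] in (b"FAT12", b"FAT16"):  # FAT12/16 type string at offset 54
        return sector[54:59].decode("ascii")
    return "unknown"


def make_sector(offset: int, tag: bytes) -> bytes:
    """Hypothetical helper: build a blank sector carrying one tag and the signature."""
    buf = bytearray(SECTOR_SIZE)
    buf[offset:offset + len(tag)] = tag
    buf[510:512] = b"\x55\xaa"
    return bytes(buf)
```

For example, `identify_boot_sector(make_sector(3, b"NTFS    "))` reports an NTFS volume, while an all-zero sector is reported as unknown.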

When a local FSD recognizes a volume, it creates a device object that represents the mounted file system format. The I/O manager makes a connection through the volume parameter block (VPB) between the volume's device object (which is created by a storage driver) and the device object that the FSD created. The VPB's connection results in the I/O manager redirecting I/O requests targeted at the volume device object to the FSD device object. (See Chapter 10 for more information on VPBs.)
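The redirection through the VPB can be pictured with a small toy model. The classes and functions below are hypothetical stand-ins, not kernel structures; the only detail borrowed from the real VPB is that it links a volume (real) device object to the file system's device object.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class DeviceObject:
    name: str
    vpb: Optional["Vpb"] = None
    received: list = field(default_factory=list)  # requests delivered here


@dataclass(eq=False)
class Vpb:
    real_device: DeviceObject                      # volume device object
    device_object: Optional[DeviceObject] = None   # FSD device object once mounted


def mount(volume: DeviceObject, fsd_name: str) -> DeviceObject:
    """Model an FSD recognizing the volume and creating its device object."""
    fs_device = DeviceObject(f"{fsd_name} device for {volume.name}")
    volume.vpb.device_object = fs_device
    return fs_device


def send_request(volume: DeviceObject, request: str) -> DeviceObject:
    """Deliver a request aimed at the volume, following the VPB if mounted."""
    vpb = volume.vpb
    target = vpb.device_object if vpb and vpb.device_object else volume
    target.received.append(request)
    return target
```

In this model, a request sent to an unmounted volume lands on the volume device object itself, whereas after `mount` the same call delivers the request to the FSD's device object.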

To improve performance, local FSDs usually use the cache manager to cache file system data, including metadata. They also integrate with the memory manager so that mapped files are implemented correctly. For example, they must query the memory manager whenever an application attempts to truncate a file in order to verify that no processes have mapped the part of the file beyond the truncation point. Windows 2000 doesn't permit file data that is mapped by an application to be deleted either through truncation or file deletion.

Local FSDs also support file system dismount operations, which permit the system to disconnect the FSD from the volume object. A dismount occurs whenever an application requires raw access to the on-disk contents of a volume or the media associated with a volume is changed. The first time an application accesses the media after a dismount, the I/O manager reinitiates a volume mount operation for the media.

Remote FSDs

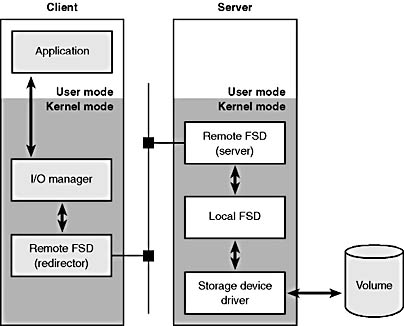

Remote FSDs consist of two components: a client and a server. A client-side remote FSD allows applications to access remote files and directories. The client FSD accepts I/O requests from applications and translates them into network file system protocol commands that the FSD sends across the network to a server-side remote FSD. A server-side FSD listens for commands arriving over a network connection and fulfills them by issuing I/O requests to the local FSD that manages the volume containing the target file or directory. Figure 12-6 shows the relationship between the client and server sides of a remote FSD interaction.

Figure 12-6 Remote FSD operation

Windows 2000 includes a client-side remote FSD named LANMan Redirector (redirector) and a server-side remote FSD named LANMan Server (server). The redirector is implemented as a port/miniport driver combination, where the port driver (\Winnt\System32\Drivers\Rdbss.sys) is implemented as a driver subroutine library and the miniport (\Winnt\System32\Drivers\Mrxsmb.sys) uses services implemented by the port driver. The port/miniport model simplifies redirector development because the port driver, which all remote FSD miniport drivers share, handles many of the mundane details involved with interfacing a client-side remote FSD to the Windows 2000 I/O manager. In addition to the FSD components, both LANMan Redirector and LANMan Server include Win32 services named Workstation and Server, respectively.

Windows 2000 relies on the Common Internet File System (CIFS) protocol to format messages exchanged between the redirector and the server. CIFS is an enhanced version of Microsoft's Server Message Block (SMB) protocol. (For more information on CIFS, go to www.cifs.com.)

Like local FSDs, client-side remote FSDs usually use cache manager services to locally cache file data belonging to remote files and directories. However, client-side remote FSDs must implement a distributed cache coherency protocol, called oplocks (opportunistic locking), so that an application accessing a remote file sees the same data as applications on other computers that are accessing the same file. Although server-side remote FSDs participate in maintaining cache coherency across their clients, they don't cache data from the local FSDs, because local FSDs cache their own data. (Oplocks are described further in the section "Distributed File Caching" in Chapter 13.)

NOTE

A filter driver that layers over a file system driver is called a file-system filter driver. The ability to see all file system requests and optionally modify or complete them enables a range of applications, including on-access virus scanners and remote file replication services. Filemon, on the companion CD as \Sysint\Filemon, is an example of a file-system filter driver that is a pass-through filter. Filemon displays file system activity in real time without modifying the requests it sees.

EXPERIMENT

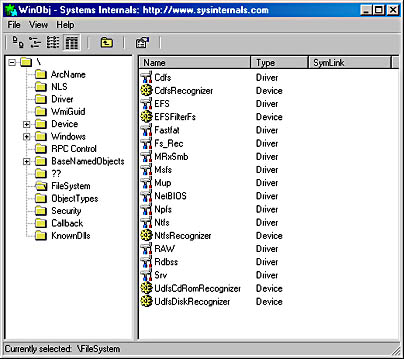

Viewing the List of Registered File Systems

When the I/O manager loads a device driver into memory, it typically names the driver object it creates to represent the driver so that it's placed in the \Driver object manager directory. The driver object for any driver that the I/O manager loads with a Type attribute value of SERVICE_FILE_SYSTEM_DRIVER (2) is instead placed in the \FileSystem directory. Thus, using a tool like Winobj (on the companion CD in \Sysint\Winobj.exe), you can see the file systems that have registered on a system, as shown in the following screen shot. (Note that some file system drivers also place device objects in the \FileSystem directory.)
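The Type test described above amounts to a simple comparison. The sketch below applies it to a hand-written table of service Type values; the table is illustrative sample data rather than output captured from a system, and the function name is hypothetical.

```python
SERVICE_KERNEL_DRIVER = 0x1
SERVICE_FILE_SYSTEM_DRIVER = 0x2


def file_system_drivers(service_types: dict) -> list:
    """Return the names whose Type marks them as file system drivers."""
    return sorted(
        name
        for name, type_value in service_types.items()
        if type_value == SERVICE_FILE_SYSTEM_DRIVER
    )


# Illustrative sample data: service name -> Type value.
sample = {
    "Ntfs": SERVICE_FILE_SYSTEM_DRIVER,
    "Fastfat": SERVICE_FILE_SYSTEM_DRIVER,
    "Disk": SERVICE_KERNEL_DRIVER,
    "Atapi": SERVICE_KERNEL_DRIVER,
}
```

With this sample, only Fastfat and Ntfs would have their driver objects placed in \FileSystem.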

File System Operation

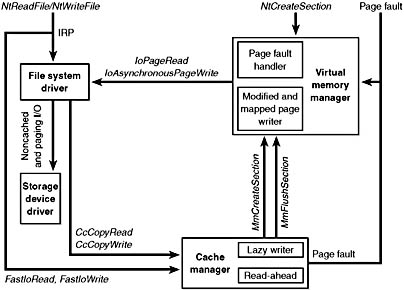

Applications and the system access files in two ways: directly, via file I/O functions (such as ReadFile and WriteFile), and indirectly, by reading or writing a portion of their address space that represents a mapped file section. (See Chapter 7 for more information on mapped files.) Figure 12-7 is a simplified diagram that shows the components involved in these file system operations and the ways in which they interact. As you can see, an FSD can be invoked through several paths:

- From a user or system thread performing explicit file I/O

- From the memory manager's modified page writer

- Indirectly from the cache manager's lazy writer

- Indirectly from the cache manager's read-ahead thread

- From the memory manager's page fault handler

Figure 12-7 Components involved in file system I/O

The following sections describe the circumstances surrounding each of these scenarios and the steps FSDs typically take in response to each one. You'll see how much FSDs rely on the memory manager and the cache manager.

Explicit File I/O

The most obvious way an application accesses files is by calling Win32 I/O functions such as CreateFile, ReadFile, and WriteFile. An application opens a file with CreateFile and then reads, writes, or deletes the file by passing the handle returned from CreateFile to other Win32 functions. The CreateFile function, which is implemented in the Kernel32.dll Win32 client-side DLL, forms a complete root-relative pathname for the path that the application passed to it (processing "." and ".." symbols in the pathname), prepends the path with "\??" (for example, \??\C:\Susan\Todo.txt), and then invokes the native function NtCreateFile.
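The pathname preparation can be approximated in a few lines. This sketch uses Python's `ntpath` module to collapse "." and ".." components and then prepends the \??\ prefix. It is only an approximation of what Kernel32.dll does: the function name is hypothetical, and it deliberately handles nothing but drive-absolute paths (no relative paths, UNC names, or device names).

```python
import ntpath

NT_PREFIX = "\\??\\"


def to_nt_path(win32_path: str) -> str:
    """Approximate the Win32-to-native name conversion for C:\\... style paths."""
    drive, _ = ntpath.splitdrive(win32_path)
    is_drive_letter = len(drive) == 2 and drive[1] == ":"
    if not is_drive_letter or not ntpath.isabs(win32_path):
        raise ValueError("this sketch only handles drive-absolute paths")
    # normpath resolves "." and ".." and normalizes separators to backslashes.
    return NT_PREFIX + ntpath.normpath(win32_path)
```

For instance, `to_nt_path(r"C:\Susan\.\Docs\..\Todo.txt")` yields `\??\C:\Susan\Todo.txt`, matching the example name in the text.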

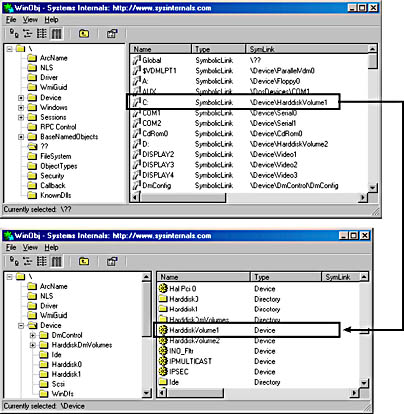

The NtCreateFile system service uses ObOpenObjectByName to open the file, which parses the name starting with the object manager root directory and the first component of the path name ("??"). \?? is a subdirectory that contains symbolic links representing volumes that are assigned drive letters (and symbolic links to serial ports and other device objects that Win32 applications access directly), so the "C:" component of the name resolves to the \??\C: symbolic link. The symbolic link points to a volume device object under \Device, so when the object manager encounters the volume object, the object manager hands the rest of the pathname to the parse function that the I/O manager has registered for device objects, IopParseDevice. (In volumes on dynamic disks, a symbolic link points to an intermediary symbolic link, which points to a volume device object.) Figure 12-8 shows how volume objects are accessed through the object manager namespace. The figure shows how the \??\C: symbolic link points to the \Device\HarddiskVolume1 volume device object.
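The name resolution just described can be modeled as a lookup that substitutes symbolic links and stops at the first device object, handing the remainder of the name to that object's parse routine. The two tables and the `resolve` function are hypothetical; only the \??\C: link and the \Device\HarddiskVolume1 target come from the text.

```python
# Toy namespace: symbolic links and device objects, keyed by full name.
SYMLINKS = {r"\??\C:": r"\Device\HarddiskVolume1"}
DEVICES = {r"\Device\HarddiskVolume1"}


def resolve(path: str, max_links: int = 8):
    """Return (device object name, remaining path for its parse routine)."""
    for _ in range(max_links):
        parts = path.split("\\")          # e.g. ['', '??', 'C:', 'Susan', 'Todo.txt']
        for i in range(2, len(parts) + 1):
            prefix = "\\".join(parts[:i])
            rest = "\\".join(parts[i:])
            remainder = "\\" + rest if rest else ""
            if prefix in SYMLINKS:
                # Substitute the link target and restart the parse.
                path = SYMLINKS[prefix] + remainder
                break
            if prefix in DEVICES:
                # A device object ends the walk; its parse routine
                # (IopParseDevice in the text) receives the remainder.
                return prefix, remainder
        else:
            raise LookupError(f"name not found: {path}")
    raise LookupError("too many symbolic links")
```

Resolving `\??\C:\Susan\Todo.txt` in this model returns `\Device\HarddiskVolume1` together with the remaining path `\Susan\Todo.txt`. Chained links, such as the intermediary link mentioned for dynamic disks, are followed by the outer loop.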

Figure 12-8 Drive-letter name resolution

After locking the caller's security context and obtaining security information from the caller's token, IopParseDevice creates an I/O request packet (IRP) of type IRP_MJ_CREATE, creates a file object that stores the name of the file being opened, follows the VPB of the volume device object to find the volume's mounted file system device object, and uses IoCallDriver to pass the IRP to the file system driver that owns the file system device object.

When an FSD receives an IRP_MJ_CREATE IRP, it looks up the specified file, performs security validation, and if the file exists and the user has permission to access the file in the way requested, returns a success code. The object manager creates a handle for the file object in the process's handle table and the handle propagates back through the calling chain, finally reaching the application as a return parameter from CreateFile. If the file system fails the create, the I/O manager deletes the file object it created for it.

We've skipped over the details of how the FSD locates the file being opened on the volume, but a ReadFile call involves many of the same FSD interactions with the cache manager and storage driver. The path into the kernel taken as the result of a call to ReadFile is the same as for a call to CreateFile, but the NtReadFile system service doesn't need to perform a name lookup; it calls on the object manager to translate the handle passed from ReadFile into a file object pointer. If the handle indicates that the caller obtained permission to read the file when the file was opened, NtReadFile proceeds to create an IRP of type IRP_MJ_READ and sends it to the FSD that manages the volume on which the file resides. NtReadFile obtains the FSD's device object, which is stored in the file object, and calls IoCallDriver, and the I/O manager locates the FSD from the device object and gives the IRP to the FSD.

If the file being read can be cached (the FILE_FLAG_NO_BUFFERING flag wasn't passed to CreateFile when the file was opened), the FSD checks to see whether caching has already been initiated for the file object. The PrivateCacheMap field in a file object points to a private cache map data structure (which we described in Chapter 11), if caching is initiated for a file object. If the FSD hasn't initialized caching for the file object (which it does the first time a file object is read from or written to), the PrivateCacheMap field will be null. The FSD calls the cache manager CcInitializeCacheMap function to initialize caching, which involves the cache manager creating a private cache map and, if another file object referring to the same file hasn't initiated caching, a shared cache map and a section object.
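The lazy initialization described above can be sketched with plain dictionaries. Everything here is a toy stand-in: the class names, the `file_id` key, and the dictionary layouts are assumptions made for illustration, and the real CcInitializeCacheMap takes different parameters.

```python
class FileObject:
    def __init__(self, file_id: str):
        self.file_id = file_id          # identifies the underlying file
        self.private_cache_map = None   # null until caching is initiated


class ToyCacheManager:
    def __init__(self):
        self.shared_cache_maps = {}     # one shared cache map per file

    def initialize_cache_map(self, file_object: FileObject) -> None:
        """Create the private map, plus the shared map if this is the first opener."""
        shared = self.shared_cache_maps.setdefault(
            file_object.file_id, {"vacbs": {}, "openers": 0}
        )
        shared["openers"] += 1
        file_object.private_cache_map = {"shared": shared, "read_history": []}


def ensure_caching(cc: ToyCacheManager, file_object: FileObject) -> None:
    """What the FSD does on the first cached read or write of a file object."""
    if file_object.private_cache_map is None:
        cc.initialize_cache_map(file_object)
```

Two file objects opened on the same file end up with distinct private cache maps that both refer to a single shared cache map, and calling `ensure_caching` again on an initialized file object does nothing.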

After it has verified that caching is enabled for the file, the FSD copies the requested file data from the cache manager's virtual memory to the buffer that the thread passed to ReadFile. The file system performs the copy within a try/except block so that it catches any faults that are the result of an invalid application buffer. The function the file system uses to perform the copy is the cache manager's CcCopyRead function. CcCopyRead takes as parameters a file object, file offset, and length.

When the cache manager executes CcCopyRead, it retrieves a pointer to a shared cache map, which is stored in the file object. Recall from Chapter 11 that a shared cache map stores pointers to virtual address control blocks (VACBs), with one VACB entry per 256-KB block of the file. If the VACB pointer for a portion of a file being read is null, CcCopyRead allocates a VACB, reserving a 256-KB view in the cache manager's virtual address space, and maps (using MmCreateSection and MmMapViewOfSection) the specified portion of the file into the view. Then CcCopyRead simply copies the file data from the mapped view to the buffer it was passed (the buffer originally passed to ReadFile). If the file data isn't in physical memory, the copy operation generates page faults, which are serviced by MmAccessFault.
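Because each VACB covers one 256-KB block of the file, finding the VACB for a given file offset is simple integer arithmetic. The helper below is a hypothetical illustration of that arithmetic, not cache manager code; it lists which 256-KB views a read touches and how many bytes fall in each.

```python
VACB_MAPPING_GRANULARITY = 256 * 1024  # one VACB per 256-KB block of the file


def vacb_slots(file_offset: int, length: int):
    """Yield (vacb_index, offset_in_view, byte_count) for each view a read touches."""
    end = file_offset + length
    while file_offset < end:
        index, offset_in_view = divmod(file_offset, VACB_MAPPING_GRANULARITY)
        # Copy up to the end of this view or the end of the request.
        count = min(VACB_MAPPING_GRANULARITY - offset_in_view, end - file_offset)
        yield index, offset_in_view, count
        file_offset += count
```

A 4-KB read at file offset 300 KB falls entirely within the second view (index 1) at offset 44 KB into that view, whereas a read that straddles the 256-KB boundary is split across two views.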

When a page fault occurs, MmAccessFault examines the virtual address that caused the fault and locates the virtual address descriptor (VAD) in the VAD tree of the process that caused the fault. (See Chapter 7 for more information on VAD trees.) In this scenario, the VAD describes the cache manager's mapped view of the file being read, so MmAccessFault calls MiDispatchFault to handle a page fault on a valid virtual memory address. MiDispatchFault locates the control area (which the VAD points to) and through the control area finds a file object representing the open file. (If the file has been opened more than once, there might be a list of file objects linked through pointers in their private cache maps.)

With the file object in hand, MiDispatchFault calls the I/O manager function IoPageRead to build an IRP (of type IRP_MJ_READ) and sends the IRP to the FSD that owns the device object the file object points to. Thus, the file system is reentered to read the data that it requested via CcCopyRead, but this time the IRP is marked as noncached and paging I/O. These flags signal the FSD that it should retrieve file data directly from disk, and it does so by determining which clusters on disk contain the requested data and sending IRPs to the volume manager that owns the volume device object on which the file resides. The volume parameter block (VPB) field in the FSD's device object points to the volume device object.

The virtual memory manager waits for the FSD to complete the read IRP and then returns control to the cache manager, which continues the copy operation that was interrupted by a page fault. When CcCopyRead completes, the FSD returns control to the thread that called NtReadFile, having copied the requested file data to the thread's buffer with the aid of the cache manager and the virtual memory manager.
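The reentrancy in this read path (a cached read that faults, which causes a second, noncached paging read into the same FSD) is easier to follow in a toy model. The class below is hypothetical and collapses the cache manager, memory manager, and storage stack into a few lines; the two flag constants mirror the IRP flag names from the driver headers, and a 4-KB page size is assumed.

```python
IRP_NOCACHE = 0x1
IRP_PAGING_IO = 0x2
PAGE_SIZE = 4096  # assumption: 4-KB pages


class ToyFsd:
    def __init__(self, disk: bytes):
        self.disk = disk    # stands in for the file's clusters on disk
        self.cache = {}     # page number -> bytes resident in the cache
        self.log = []       # records which path each read took

    def read(self, offset: int, length: int, flags: int = 0) -> bytes:
        """Toy IRP_MJ_READ dispatch routine."""
        if flags & (IRP_NOCACHE | IRP_PAGING_IO):
            self.log.append(("disk", offset, length))
            return self.disk[offset:offset + length]
        self.log.append(("cached", offset, length))
        return self._copy_read(offset, length)

    def _copy_read(self, offset: int, length: int) -> bytes:
        """Copy from cached pages, faulting missing pages in from disk."""
        out = bytearray()
        end = min(offset + length, len(self.disk))
        while offset < end:
            page, start = divmod(offset, PAGE_SIZE)
            if page not in self.cache:
                # "Page fault": the FSD is reentered with a noncached paging read.
                self.cache[page] = self.read(
                    page * PAGE_SIZE, PAGE_SIZE, IRP_NOCACHE | IRP_PAGING_IO
                )
            chunk = self.cache[page][start:start + (end - offset)]
            out += chunk
            offset += len(chunk)
        return bytes(out)
```

The first read of a range logs one cached request followed by one disk request per missing page. Repeating the same read logs only the cached request, which corresponds to the case where the data is already resident and no page faults occur.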

The path for WriteFile is similar except that the NtWriteFile system service generates an IRP of type IRP_MJ_WRITE and the FSD calls CcCopyWrite instead of CcCopyRead. CcCopyWrite, like CcCopyRead, ensures that the portions of the file being written are mapped into the cache and then copies to the cache the buffer passed to WriteFile.

There are several variants on the scenario we've just described. If a file's data is already stored in the cache (that is, it's resident in the system's working set), CcCopyRead doesn't incur page faults. Also, under certain conditions, NtReadFile and NtWriteFile call an FSD's fast I/O entry point instead of immediately building and sending an IRP to the FSD. Some of these conditions follow: the portion of the file being read must reside in the first 4 GB of the file, the file can have no locks, and the portion of the file being read or written must fall within the file's currently allocated size.

The fast I/O read and write entry points for most FSDs call the cache manager's CcFastCopyRead and CcFastCopyWrite functions. These variants on the standard copy routines ensure that the file's data is mapped in the file system cache before performing a copy operation. If this condition isn't met, CcFastCopyRead and CcFastCopyWrite indicate that fast I/O isn't possible. When fast I/O isn't possible, NtReadFile and NtWriteFile fall back on creating an IRP.
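The decision between fast I/O and the IRP path can be summarized as a predicate followed by a fallback. The sketch below encodes only the conditions named in the text (the text notes these are some of the conditions, not all of them), and the `ToyFile` type and function names are hypothetical.

```python
from dataclasses import dataclass

FOUR_GB = 4 * 1024**3


@dataclass
class ToyFile:
    allocation_size: int
    has_locks: bool = False
    mapped_in_cache: bool = False  # whether the data is mapped in the file system cache


def fast_io_allowed(f: ToyFile, offset: int, length: int) -> bool:
    """Check the subset of fast I/O conditions listed in the text."""
    end = offset + length
    return end <= FOUR_GB and not f.has_locks and end <= f.allocation_size


def read_path(f: ToyFile, offset: int, length: int) -> str:
    """Return which path a read takes in this model."""
    if fast_io_allowed(f, offset, length) and f.mapped_in_cache:
        return "fast I/O"
    # Fast I/O isn't possible, so fall back on building an IRP.
    return "IRP"
```

A read of a lock-free, cached file within its allocated size takes the fast I/O path in this model. Any failed condition, including data that isn't yet mapped in the cache, sends the request down the IRP path.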

Memory Manager's Modified and Mapped Page Writer

The memory manager's modified and mapped page writer threads wake up periodically to flush modified pages. The threads call IoAsynchronousPageWrite to create IRPs of type IRP_MJ_WRITE and write pages to either a paging file or a file that was modified after being mapped. Like the IRPs that MiDispatchFault creates, these IRPs are flagged as noncached and paging I/O. Thus, an FSD bypasses the file system cache and issues IRPs directly to a storage driver to write the memory to disk.

Cache Manager's Lazy Writer

The cache manager's lazy writer thread also plays a role in writing modified pages because it periodically flushes views of file sections mapped in the cache that it knows are dirty. The flush operation, which the cache manager performs by calling MmFlushSection, triggers the memory manager to write any modified pages in the portion of the section being flushed to disk. Like the modified and mapped page writers, MmFlushSection uses IoAsynchronousPageWrite to send the data to the FSD.

Cache Manager's Read-Ahead Thread

The cache manager includes a thread that is responsible for attempting to read data from files before an application, a driver, or a system thread explicitly requests it. The read-ahead thread uses the history of read operations that were performed on a file, which are stored in a file object's private cache map, to determine how much data to read. When the thread performs a read-ahead, it simply maps the portion of the file it wants to read into the cache (allocating VACBs as necessary) and touches the mapped data. The page faults caused by the memory accesses invoke the page fault handler, which reads the pages into the system's working set.

Memory Manager's Page Fault Handler

We described how the page fault handler is used in the context of explicit file I/O and cache manager read-ahead, but it is also invoked whenever any application accesses virtual memory that is a view of a mapped file and encounters pages that represent portions of a file that aren't part of the application's working set. The memory manager's MmAccessFault handler follows the same steps it does when the cache manager generates a page fault from CcCopyRead or CcCopyWrite, sending IRPs via IoPageRead to the file system on which the file is stored.
